What sort of power supply do you actually need for an RTX 5090?

We dive deep into the reality of power demands for Nvidia's flagship consumer GPU.

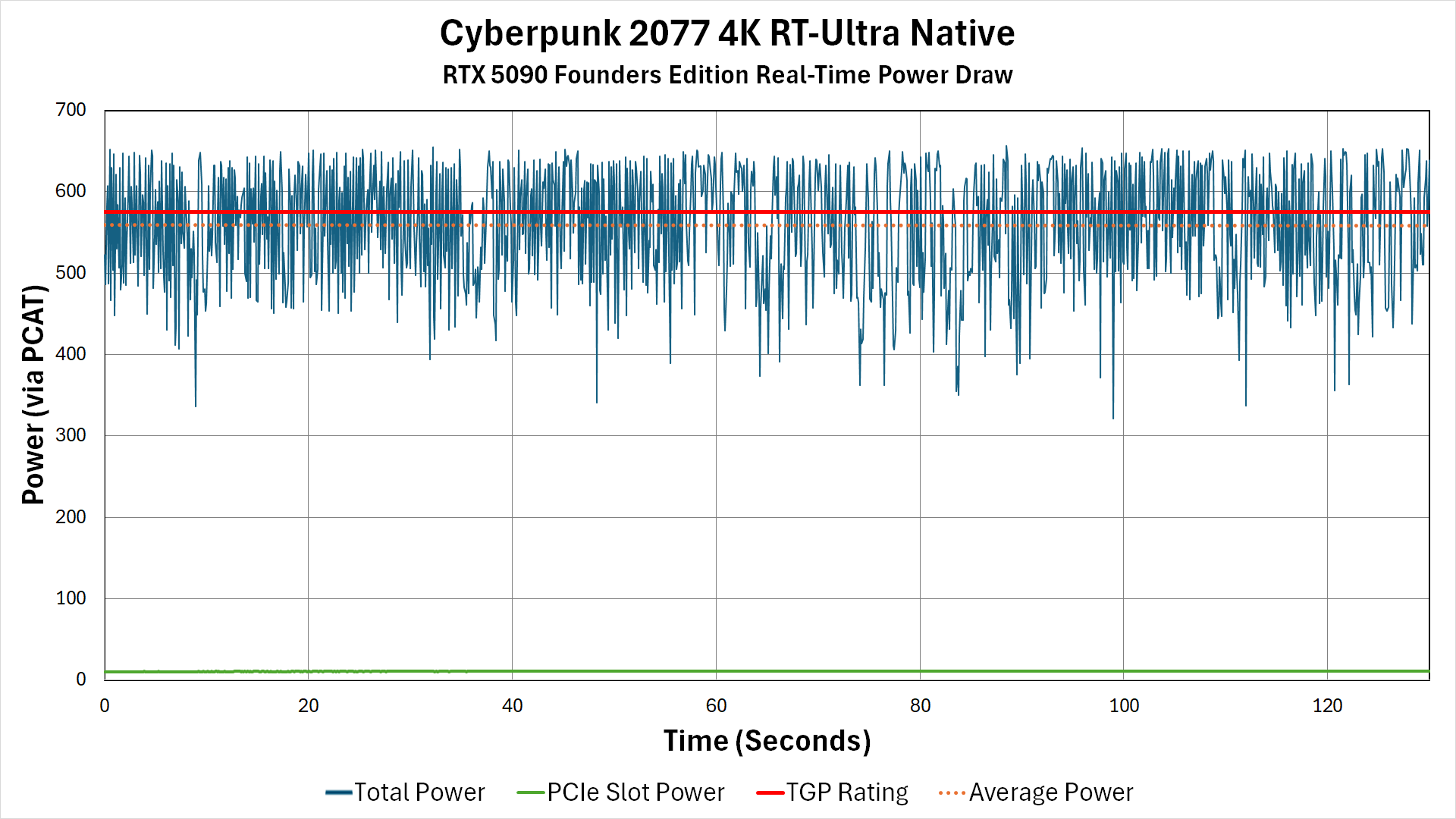

The Nvidia GeForce RTX 5090 now reigns as the fastest graphics card on the planet — for gaming and consumer use, at least — but it has encountered plenty of criticism due to its 16-pin power connectors, which seem to have a proclivity for melting. It also has a voracious power appetite and can generate tremendous short-lived spikes in power consumption that range up to nearly 700W, necessitating a clean and steady supply of power for optimal operation.

The tremendous power consumption is the byproduct of the 5090's tremendous performance; it's easily the fastest gaming GPU on the market. The RTX 5090 isn't going to dethrone data center GPUs for AI training or inference, but that's not the point. It will push more pixels than anything else, and it will likely remain the fastest gaming solution until Nvidia releases either its next-generation RTX 60-series (around 2027) or, perhaps in a year or so, an RTX 5090 Ti, RTX 5090 Super, or possibly even a Titan Blackwell. It's the best graphics card if you're most interested in performance, price be damned.

If you have an RTX 5090, what sort of power supply do you need to run it?

Officially, Nvidia recommends at least a 1000W power supply. It's a perfectly reasonable suggestion, since the RTX 5090 commands a base TGP (Total Graphics Power) rating of 575W. That means even a 1000W PSU would already be hitting 58% load just from the graphics card. Toss in a CPU, motherboard, memory, and storage, and you could easily pull upward of 800W from a power supply, especially if you’re using a high-end power-hungry CPU like the Intel Core i9-14900K.

The other thing to note is that transients — short-lived spikes in power draw — on the RTX 5090 can be quite a bit higher than the official 575W TGP. Looking through our test data, Cyberpunk 2077 was one of the worst offenders, and it saw spikes as high as 659W.

That's the real-time in-line power draw, lasting for 0.1 seconds or more. Combine that figure with a CPU like the Core i9-14900K, which demands up to 253W of power, and your PSU needs to be prepared for power spikes in the 900–1000W range.
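The arithmetic behind that estimate can be sketched in a few lines of Python. The 659W transient and the 253W CPU figure come from the text above; the `other_w` allowance for the motherboard, memory, storage, and fans is a hypothetical placeholder rather than a measured value.

```python
# Rough worst-case budget: GPU transient plus CPU maximum plus everything else.
GPU_TRANSIENT_W = 659   # highest spike seen in Cyberpunk 2077 (from the text)
CPU_MAX_W = 253         # Core i9-14900K maximum (from the text)


def peak_system_draw(gpu_w: float, cpu_w: float, other_w: float = 0.0) -> float:
    """Sum the component peaks to estimate the worst-case DC load in watts."""
    return gpu_w + cpu_w + other_w


def load_fraction(load_w: float, psu_rating_w: float) -> float:
    """Fraction of the PSU's rated output that a given load represents."""
    return load_w / psu_rating_w


if __name__ == "__main__":
    gpu_plus_cpu = peak_system_draw(GPU_TRANSIENT_W, CPU_MAX_W)
    # other_w=75 is an assumed allowance for the rest of the system.
    whole_system = peak_system_draw(GPU_TRANSIENT_W, CPU_MAX_W, other_w=75)
    print(f"GPU + CPU peak: {gpu_plus_cpu:.0f}W")
    print(f"With an assumed 75W for other parts: {whole_system:.0f}W")
    print(f"Share of a 1000W PSU: {load_fraction(whole_system, 1000):.0%}")
```

The GPU and CPU alone already land just above 900W, which is why the remaining components push the total toward the top of the 900–1000W range.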

That's definitely the upper limit of how far we'd recommend pushing even a top-quality 1000W PSU. With an 80 Plus Gold power supply, 80% load will mean the PSU draws closer to 900W from the outlet, and transients could hit 1050W or more. The ATX PSU spec covers transients, but having some extra headroom is never a bad idea on an extreme PC build.

Beyond power draw, the PSU has to dissipate the heat generated by its own inefficiencies, so its internal fan will have to work harder, which creates more noise. A PSU under that kind of load will inevitably run hotter and louder than if it's only delivering 500W.

There are some things you can do to improve the situation, and other factors to consider. So, what are your options for an RTX 5090 build?

| Input voltage, load (minimum efficiency in %) | 80 Plus Bronze | 80 Plus Gold | 80 Plus Platinum | 80 Plus Titanium |
|---|---|---|---|---|
| 115V 10% | - | - | - | 90 |
| 115V 20% | 82 | 87 | 90 | 92 |
| 115V 50% | 85 | 90 | 92 | 94 |
| 115V 100% | 82 | 87 | 89 | 90 |
| 230V 10% | - | - | - | 90 |
| 230V 20% | 85 | 90 | 92 | 94 |
| 230V 50% | 88 | 92 | 94 | 96 |
| 230V 100% | 85 | 89 | 90 | 94 |

Buy a higher power and higher quality PSU

80 Plus Gold is the minimum we'd recommend for any modern PC. For U.S. residents and those with 115V electrical sockets, 80 Plus Gold certification requires the PSU to achieve at least 87%, 90%, and 87% efficiency at loads of 20%, 50%, and 100%, respectively. What about the in-between loads, like 75%? There's no specific requirement, other than that it would fall between the 50% and 100% efficiency ratings.
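To get a feel for those in-between loads, here is a minimal sketch that draws a straight line between the certified points. The linear interpolation is our assumption for illustration only; the certification sets minimums at 20%, 50%, and 100% load and says nothing about the shape of the curve in between, and real PSUs will differ.

```python
# 80 Plus Gold minimum efficiency at 115V, as (load %, efficiency %) points.
GOLD_115V = [(20, 87.0), (50, 90.0), (100, 87.0)]


def estimate_efficiency(load_pct: float, points: list[tuple[int, float]]) -> float:
    """Linearly interpolate efficiency between the certified load points.

    Straight-line interpolation is an assumption, not part of the 80 Plus spec.
    """
    pts = sorted(points)
    if not pts[0][0] <= load_pct <= pts[-1][0]:
        raise ValueError("load is outside the certified range")
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= load_pct <= x1:
            return y0 + (y1 - y0) * (load_pct - x0) / (x1 - x0)
    raise ValueError("unreachable for a non-empty, sorted point list")


if __name__ == "__main__":
    for load in (20, 50, 75, 100):
        print(f"{load}% load: about {estimate_efficiency(load, GOLD_115V):.1f}%")
```

Under that assumption, a Gold unit at 75% load sits roughly halfway between its 50% and 100% ratings.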

If you purchase an 80 Plus Platinum PSU, the efficiencies increase to 90%, 92%, and 89%. That's not a huge difference: if your PC is pulling 800W from the PSU, 89% efficiency means drawing 899W from the outlet, compared to 920W at 87% efficiency. It's only a 21W difference. Still, that extra power ends up as heat within your PSU that needs to be dealt with.

What about 80 Plus Titanium, the current top-rated standard? That pushes the efficiency curve to 92%, 94%, and 90%. This offers at least 90% efficiency even at 10% load. Note that the low to middle loads see a bigger increase than the high load, so pulling 800W out of the PSU could still mean the PSU is drawing 889W from the outlet — only a 10W difference from Platinum.

But the efficiency estimates so far assume we're sticking with a 1000W PSU. With a higher wattage PSU, like a 1500W or 1600W model, an 800W load sits close to the 50% point where efficiency peaks. Gold, Platinum, and Titanium rated PSUs are 90%, 92%, and 94% efficient, respectively, at 50% load.

As a more extreme example, going from an 80 Plus Gold 1000W PSU to an 80 Plus Titanium 1600W PSU would improve the efficiency of an 800W load from around 87% to 94% — from 920W to 851W.
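The outlet figures quoted in this section all come from dividing the load by the efficiency. A short sketch of that calculation follows, using the same simplification as the text: the 1000W units are treated as running at their 100% load rating, and the 1600W unit at its 50% load rating. The function and scenario names are ours.

```python
def wall_draw(dc_load_w: float, efficiency: float) -> float:
    """AC power pulled from the outlet for a DC load and an efficiency in (0, 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be greater than 0 and at most 1")
    return dc_load_w / efficiency


def waste_heat(dc_load_w: float, efficiency: float) -> float:
    """Power lost as heat inside the PSU: outlet draw minus delivered load."""
    return wall_draw(dc_load_w, efficiency) - dc_load_w


# Efficiency figures taken from the 80 Plus 115V minimums discussed above.
SCENARIOS = {
    "1000W Gold": 0.87,
    "1000W Platinum": 0.89,
    "1000W Titanium": 0.90,
    "1600W Titanium": 0.94,
}

if __name__ == "__main__":
    load_w = 800
    for name, eff in SCENARIOS.items():
        print(f"{name}: {wall_draw(load_w, eff):.0f}W from the outlet, "
              f"{waste_heat(load_w, eff):.0f}W lost as heat")
```

The heat column is the more practical number, since that is what the PSU fan has to remove.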

That's a pretty significant difference. Is it worth the additional cost? For a graphics card that can cost $3,000 or more? Sure, if you have the funds for one, why not put some additional money into the other?

You're not likely to make up the difference in power savings over the lifetime of either the GPU or PSU, but at least you can be reasonably certain that your power supply won't be a limiting factor.
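For anyone who wants to check the payback math against their own situation, here is a rough sketch. The 69W saving is the difference between the 920W and 851W figures above; the hours per day, electricity price, and PSU price premium are hypothetical placeholders that you should replace with your own numbers.

```python
def annual_savings(watts_saved: float, hours_per_day: float,
                   price_per_kwh: float) -> float:
    """Yearly electricity cost avoided, in the same currency as price_per_kwh."""
    kwh_per_year = watts_saved / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


def payback_years(extra_cost: float, savings_per_year: float) -> float:
    """Years of use needed for the savings to cover the extra PSU cost."""
    if savings_per_year <= 0:
        raise ValueError("savings_per_year must be positive")
    return extra_cost / savings_per_year


if __name__ == "__main__":
    # All three inputs below are assumed values for illustration only.
    hours_per_day = 4        # time spent at an 800W load
    price_per_kwh = 0.15     # electricity price
    extra_cost = 150.0       # price premium of the larger Titanium PSU
    yearly = annual_savings(69, hours_per_day, price_per_kwh)
    print(f"Saved per year: {yearly:.2f}")
    print(f"Years to break even: {payback_years(extra_cost, yearly):.1f}")
```

Because few PCs spend hours every day at an 800W load, the real savings are usually smaller than this kind of estimate suggests.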

But efficiency ratings aren’t the only thing you need to consider. There are varying levels of quality among the Gold, Platinum, and Titanium PSUs. In theory, the component requirements to reach Platinum or Titanium level should guarantee a higher quality power supply; however, that is occasionally not the case.

The best thing to do would be to search for power supply reviews that cover the nitty-gritty details. Doing your research beats taking a chance on an unknown Gold unit from a brand you might never have heard of.

Get an ATX 3.1 (or later) PSU

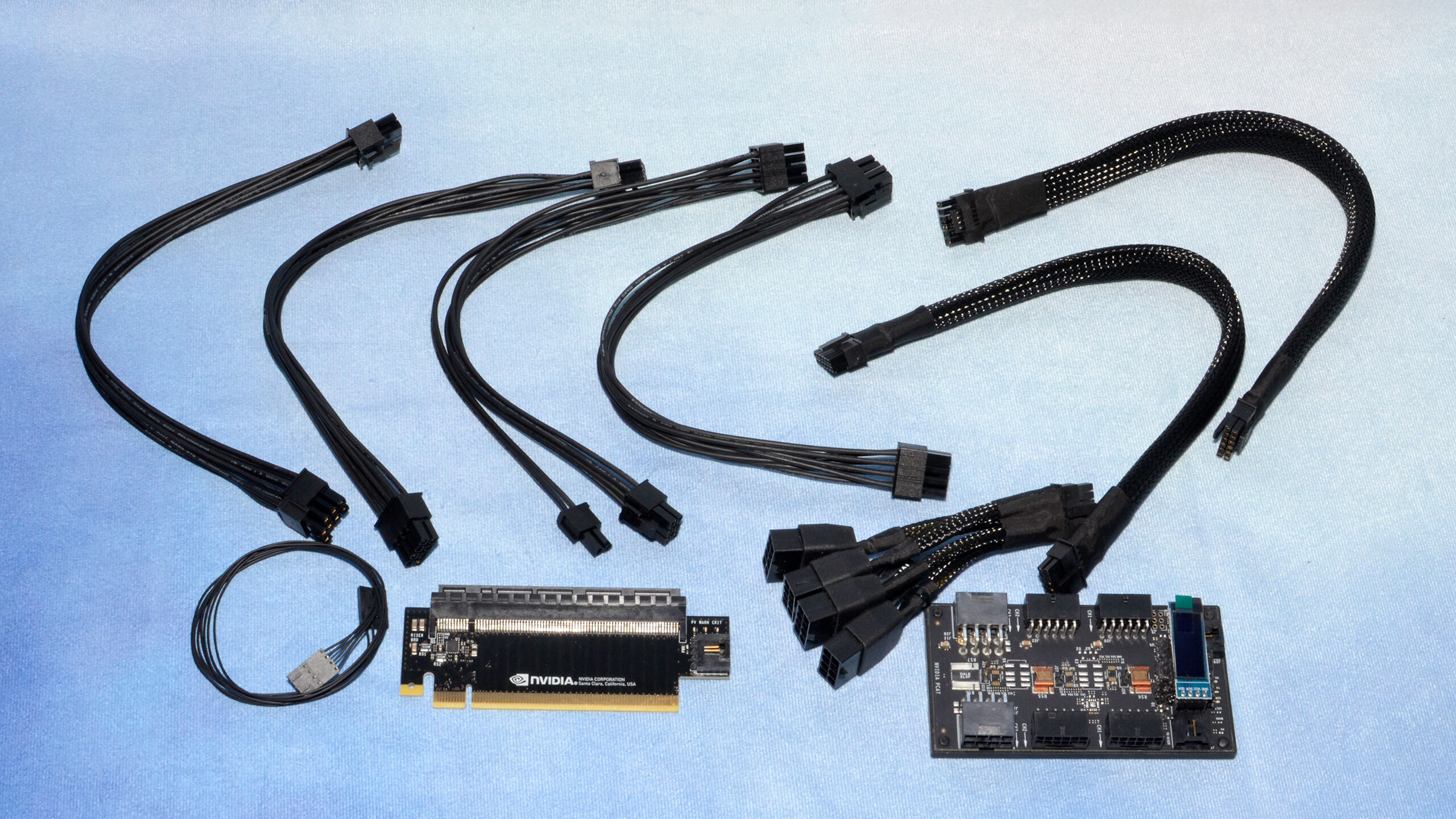

An addendum to the above is perhaps obvious to some, but in the interest of covering our bases, you really should be using a power supply that follows the ATX 3.1 or later standards. ATX 3.0 introduced the now-replaced 12VHPWR 16-pin connector for PCIe 5.0 devices like graphics cards. And then we had Nvidia's RTX 4090 meltdown problems.

ATX 3.1 was introduced to rectify the issues with the initial specification. For one, it uses the new and improved 12V-2x6 standard, which has longer terminals on the 12V and ground pins, combined with shorter sense pins. The shorter sense pins allow the GPU to detect if the connector is loose or incorrectly inserted and shut itself down, with the intention of avoiding arcing, melting, and potential component failure.

Besides 12V-2x6, ATX 3.1 also specifies a shorter hold-up time of 12ms (versus 17ms on ATX 3.0), which improves efficiency. So even if you can get a new 12V-2x6 connector for an ATX 3.0 PSU, it's still not necessarily going to be the same as an ATX 3.1 PSU.

But really, the most important aspect of the 16-pin connector is that it uses the new 12V-2x6 rather than the outdated 12VHPWR — especially with the 5090 pulling up to 575W or more, compared to 'only' 450W on the 4090 cards that were already suffering meltdowns.

This new connector has seen its own fair share of complaints from the enthusiast community about melting issues, but the anecdotal evidence does appear to suggest that this occurs less frequently than with the older standard. It's best to use an ATX 3.1 power supply for the RTX 5090 to reduce the chance of damage, but be aware that the connector could still be problematic even if you closely follow all of the standard best practices for connecting it.

Should you use a direct 16-pin connection or the Nvidia 4-way 8-pin to 16-pin adapter?

Assuming you've got all your other ducks in a row, with an ATX 3.1 PSU rated at 1200W or more, you still have to decide how you're actually going to wire up the RTX 5090. Every model will come with an Nvidia 4-way 8-pin to single 16-pin adapter. But should you use it?

In general, the answer is no. It's a bad idea to use adapters and extension cables with your power supply. Every one of those is a potential point of failure, never mind that there's no guarantee of quality if you're using alternative third-party adapters. The best solution will be to use a direct 16-pin connection from the PSU to the graphics card.

Unless you're like me, and I mean that very specifically.

I test GPUs for my job, often swapping graphics cards in my test rig every day, sometimes with multiple swaps per day (like when a new game comes out and I test a dozen different GPUs or more). That puts a lot more wear and tear on all the connectors, and it's also potential wear and tear on the graphics card power sockets, whether 8-pin or 16-pin.

Put simply, the 16-pin connector feels less robust overall. It's about the same size as an 8-pin connector but with twice as many pins. I've had the small plastic clip on one graphics card (an RTX 4070 Ti) break off after only two 16-pin mating cycles. Conversely, I have cards with 8-pin connectors that I've swapped in and out of my test PCs literally hundreds of times. As you can imagine, replacing a 16-pin (or 8-pin) connector on a graphics card isn't a simple process.

So in my case, where I'm swapping GPUs so frequently, the use of adapter cables is worth the risk, as they make it less likely I'll wear out the power socket on a graphics card. If an adapter cable starts feeling loose or too easy to insert, I can replace it with a new cable pretty easily. But if you're not swapping GPUs all the time, I'd stick to a direct 16-pin 12V-2x6 cable and leave it alone.

Can you use an 850W power supply with an RTX 5090?

In theory, yes, provided your PC stays below 850W of total power use. But running a PSU flat out at 100% load, even for a few hours per day, would almost certainly reduce its lifespan. I know people who did cryptocurrency mining back in the day, where they had 800W loads running on 1000W 80 Plus Gold PSUs, and most of those PSUs would fail after two or three years.

Again, quality plays a role, and determining whether you have a great PSU or one that merely ticks the right boxes is complex. Check our best power supplies guide for specific recommendations that we've tested. As noted already, not all 1000W Gold PSUs are created equal, and the same goes for 850W Platinum PSUs. Some would probably manage just fine with a 5090; others might create instability.

The age of your power supply is also a big factor. A brand new 850W Platinum PSU will behave differently than a five-year-old 850W Platinum PSU (and a five-year-old PSU wouldn't be ATX 3.0 compliant, never mind ATX 3.1). Your PSU might have come with a 10-year warranty, but if it fails and takes another component or two with it, getting warranty service will be a headache that’s best avoided.

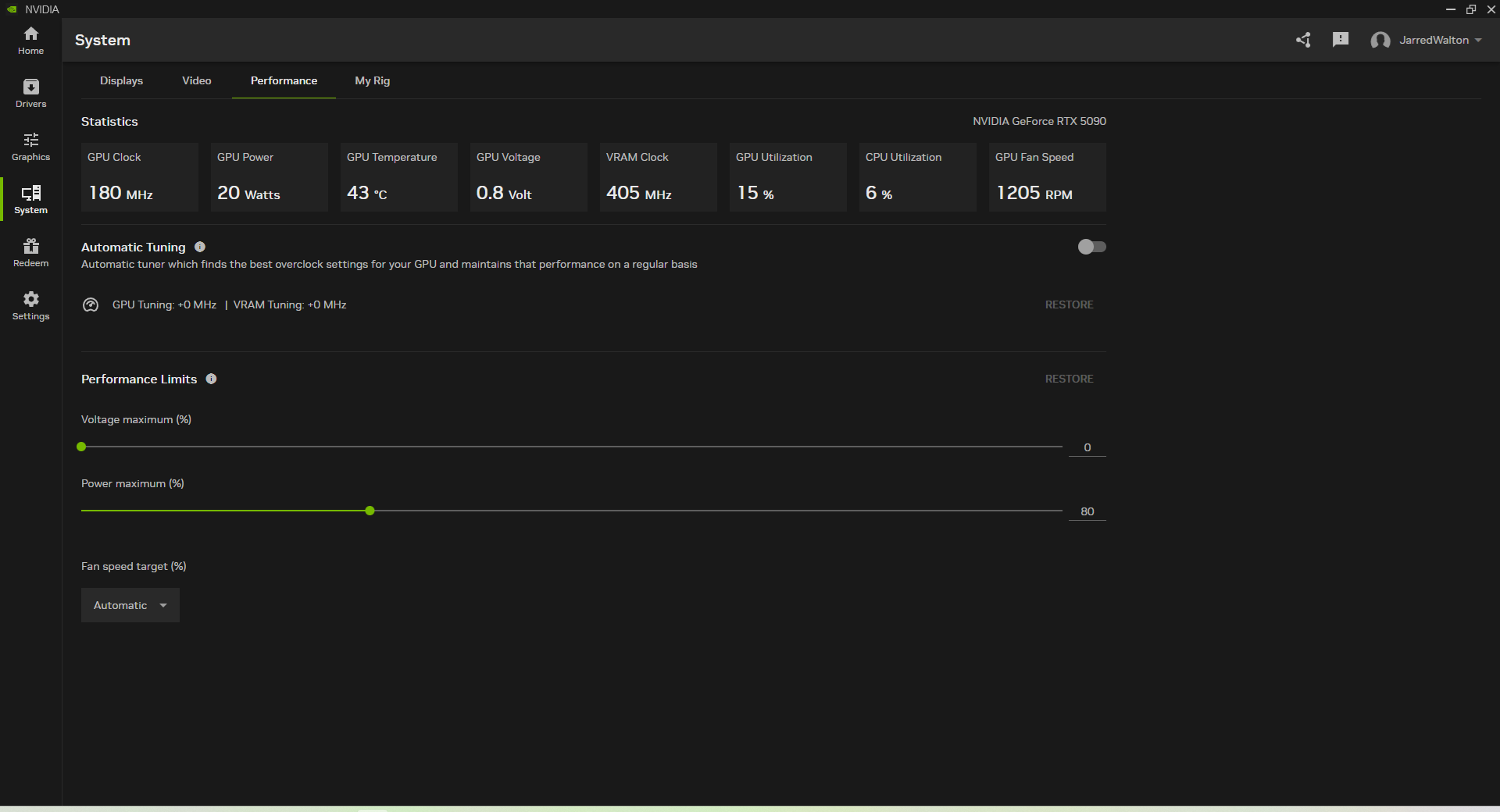

Undervolting and underclocking to reduce power consumption

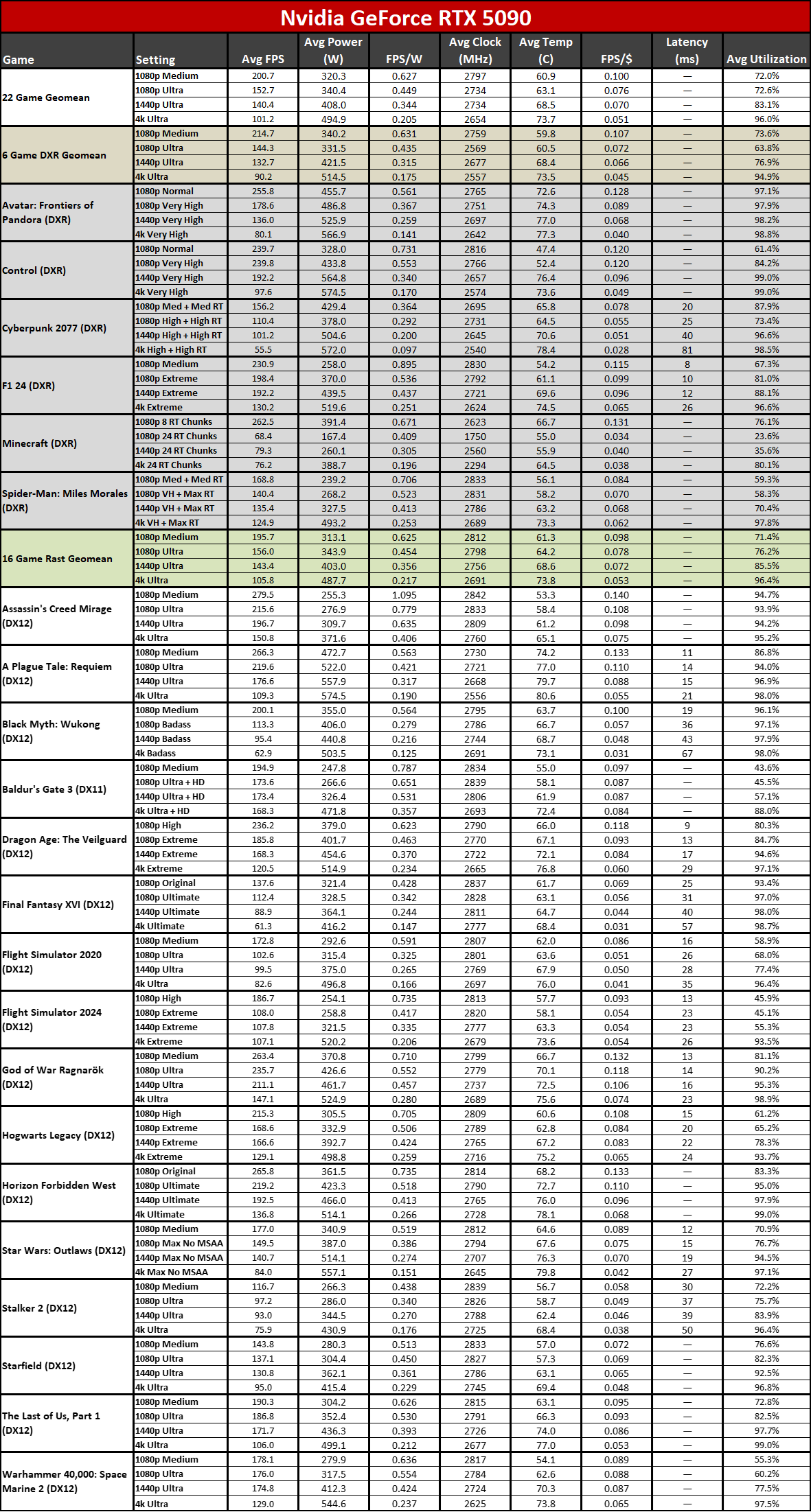

If you don't want to pick up a new $200–$400 PSU to run your $2,000 RTX 5090 graphics card (which might have cost $2,500 or more), there's an alternative approach that might work. In our testing, the RTX 5090 rarely needed to use its full 575W TGP. Of the 22 games that we used for testing, about half used more than 500W of power at 4K ultra, and only five broke the 550W mark. If we limit the testing to 1440p ultra, just five used more than 500W, and only two went past 550W.

That means you could manually limit the power to an RTX 5090 to around 500W (85–90%) and get close to the base level of performance, depending on the games you play. Games that are already hitting the 575W limit might have a 5–10% performance drop, while games that aren't breaking 500W should still run as normal. If you're willing to drop to 80% on the power limit, that would cap the 5090 at around 460W, which should be low enough that an 800–850W PSU would be fine.
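The relationship between the power limit slider and the resulting cap is simple percentage math, sketched below. The 575W TGP comes from the text; the function names are ours, and actual boards (factory overclocked models in particular) may use a different base power.

```python
RTX_5090_TGP_W = 575  # base Total Graphics Power from the text


def capped_power(tgp_w: float, limit_pct: float) -> float:
    """Watts the card is allowed to draw at a given power limit percentage."""
    return tgp_w * limit_pct / 100


def limit_for_target(tgp_w: float, target_w: float) -> float:
    """Power limit percentage needed to cap the card at target_w watts."""
    return 100 * target_w / tgp_w


if __name__ == "__main__":
    print(f"80% limit: {capped_power(RTX_5090_TGP_W, 80):.0f}W")
    print(f"Limit for a 500W cap: {limit_for_target(RTX_5090_TGP_W, 500):.0f}%")
```

Note that a power limit caps sustained draw; it does not guarantee that short transients stay under the same figure.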

The problem is that spending $2,000 or more on the fastest GPU available, only to then run it at less than maximum performance, strikes us as a bit odd. There's already a somewhat limited advantage for the 5090 compared to the 4090, with about 25% higher performance on average at 4K ultra. With a power limit of 80%, you'd probably cut that potential advantage down to 10–15%.

So, what should you choose?

The bottom line is that the RTX 5090 can be quite the power guzzler under load. If you want to make full use of the card, particularly if it's a factory overclocked model, we would recommend going above and beyond Nvidia's baseline 1000W recommendation. A reasonable target would be a 1500W 80 Plus Platinum PSU, which has a decent amount of headroom for efficiency. Those start at around $299 normally, though sometimes a sale can drop the price as low as $199.

If you want to step down a bit, 1200W should also be fine. One of our GPU testbeds has a be quiet! 1500W power supply, but the be quiet! Straight Power 12 1200W should do nicely, and it'll be a fraction of the cost of your RTX 5090.

If you do manage to get your hands on an RTX 5090, and you choose to run it with a lower wattage PSU, it might work fine. But at the first sign of instability? You should check the power draw and strongly consider one of the above options. If you don’t, you risk pushing beyond the boundaries of what your power supply can safely deliver.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.