What Is a Monitor's Gamma? A Basic Definition

Gamma Explained

Your monitor’s gamma tells you its pixels’ luminance at every brightness level, from 0 to 100%. Lower gamma makes shadows look brighter and can result in a flatter, washed-out image in which bright highlights are harder to distinguish. Higher gamma darkens shadows and can make the details in them harder to see. Some monitors offer different gamma modes, allowing you to tweak image quality to your preference. The standard for the sRGB color space, however, is a value of approximately 2.2.
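As a rough illustration of that relationship, the sketch below models gamma as a pure power law, where relative luminance equals the input signal raised to the gamma value. This is a simplification: real displays and the sRGB standard do not follow a pure power curve exactly, and the function name `relative_luminance` is made up for this example.

```python
def relative_luminance(signal, gamma=2.2):
    """Map a normalized input signal (0.0 to 1.0) to relative luminance.

    Assumes a pure power-law response: luminance = signal ** gamma.
    Real monitors only approximate this curve.
    """
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    return signal ** gamma


# Compare how three gamma values render the same input levels.
levels = (0.10, 0.25, 0.50, 0.75, 1.00)
for gamma in (1.8, 2.2, 2.6):
    row = ", ".join(f"{relative_luminance(s, gamma):.3f}" for s in levels)
    print(f"gamma {gamma}: {row}")
```

For any input below 100%, the lower gamma produces a higher luminance, which is why low gamma lifts shadows and high gamma darkens them.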

Gamma is important because it affects the appearance of dark areas, such as blacks, shadows and midtones, as well as highlights. Monitors with poor gamma can either crush detail at various points or wash it out, making the entire picture appear flat and dull. Proper gamma leads to more depth and realism and a more three-dimensional image.

The image gallery below (courtesy of BenQ) shows an image with the 2.2 gamma standard compared to that same image with low gamma and with high gamma.

Testing Gamma

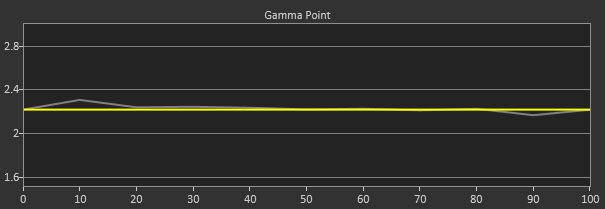

Typically, if you are running Windows, the most accurate color is achieved with a gamma value of 2.2. (Older versions of Mac OS used 1.8, but macOS has also defaulted to 2.2 since Mac OS X 10.6 Snow Leopard.) So when testing monitors, we look for a gamma value of 2.2. A monitor’s range of gamma values indicates how far its lowest and highest measured values stray from the 2.2 standard (the smaller the difference, the better).

In our monitor reviews, we’ll show you gamma charts like the one above, with the x-axis representing different brightness levels. The yellow line represents the 2.2 gamma value. The closer the gray line conforms to the yellow line, the better.
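To make the idea of a gamma range concrete, here is a minimal sketch of how gamma could be estimated from luminance readings at several brightness levels. It is not the procedure used in our reviews: it assumes a pure power-law response, ignores the display's black level, and the function names and sample readings are invented for illustration.

```python
import math


def point_gamma(signal, luminance, white_luminance):
    """Estimate gamma at one brightness level.

    signal: normalized input level, strictly between 0.0 and 1.0
    luminance: measured luminance at that level (e.g. in nits)
    white_luminance: measured luminance at 100% signal
    """
    if not 0.0 < signal < 1.0:
        raise ValueError("signal must be strictly between 0.0 and 1.0")
    if luminance <= 0 or white_luminance <= 0:
        raise ValueError("luminance values must be positive")
    # Solve luminance / white_luminance = signal ** gamma for gamma.
    return math.log(luminance / white_luminance) / math.log(signal)


def gamma_range(readings, white_luminance):
    """Return the (lowest, highest) point gamma across all readings."""
    gammas = [point_gamma(s, y, white_luminance) for s, y in readings]
    return min(gammas), max(gammas)


# Hypothetical readings as (signal level, luminance in nits), not real test data.
sample_readings = [(0.2, 6.1), (0.4, 27.0), (0.6, 64.5), (0.8, 123.0)]
lowest, highest = gamma_range(sample_readings, white_luminance=200.0)
worst_deviation = max(abs(lowest - 2.2), abs(highest - 2.2))
print(f"gamma range: {lowest:.2f} to {highest:.2f}")
print(f"largest deviation from 2.2: {worst_deviation:.2f}")
```

A narrow range centered on 2.2 corresponds to a measured line that hugs the reference line in a gamma chart.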

This article is part of the Tom's Hardware Glossary.

Further reading:

- Display Testing Explained: How We Test Monitors and TVs: Grayscale Tracking, Gamma Response and Color Gamut

- How to Choose a PC Monitor

- Best Gaming Monitors

- Best Budget 4K Monitors

Scharon Harding has over a decade of experience reporting on technology with a special affinity for gaming peripherals (especially monitors), laptops, and virtual reality. Previously, she covered business technology, including hardware, software, cyber security, cloud, and other IT happenings, at Channelnomics, with bylines at CRN UK.