Intel Core i7 (Nehalem): Architecture By AMD?

Nehalem: An Overview

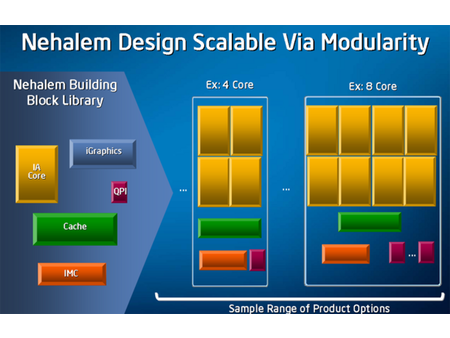

It’s hard to give an overview of an architecture like Nehalem, which is fundamentally modular by design. Intel’s engineers wanted a set of building blocks that could be assembled like Lego bricks to create the various versions of the architecture.

It is possible, though, to look at the flagship of the new architecture: the very high-end version that will be used in servers and high-performance workstations. At first glance, its specs will likely remind you of AMD’s Barcelona (K10) architecture. It is natively quad-core and has three levels of cache, an integrated memory controller, and a high-performance point-to-point interconnect for communicating with peripherals and with other CPUs in multiprocessor configurations. This proves that AMD’s technological choices weren’t bad; the problem was simply its implementation, which hasn’t scaled well enough in its current design.

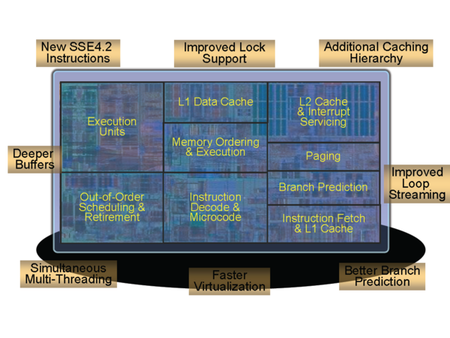

But Intel has done more than just revise its architecture by taking inspiration from its competitor’s innovations. With a budget of more than 700 million transistors (731 million, to be exact), the engineers were able to greatly improve certain characteristics of the execution core while adding new functionality. For example, simultaneous multithreading (SMT), which had already appeared on the Pentium 4 "Northwood" under the name Hyper-Threading, has made a comeback. Combined with four physical cores, it will allow certain versions of Nehalem that incorporate two dies in a single package to execute up to 16 threads simultaneously (two dies × four cores × two threads per core). While this change appears simple at first glance, as we’ll see later on, it has a wide impact at several levels of the pipeline: many buffers need to be resized so that this mode of operation doesn’t hurt performance. As has been the case with each new architecture for several years now, Intel has also added new SSE instructions to Nehalem. The architecture supports SSE 4.2, components of which appear to be borrowed from AMD’s K10 microarchitecture.

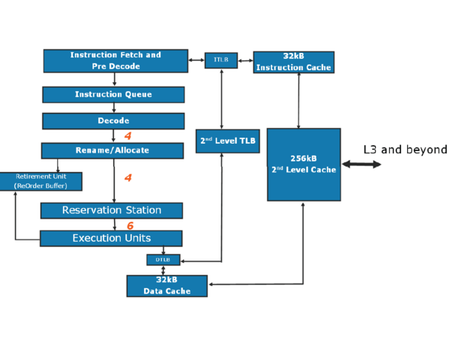

Now that you know the broad outlines of the new architecture, it’s time to take a more detailed look, starting with the front end of the pipeline: the part that’s in charge of reading instructions from memory and preparing them for execution.

cl_spdhax1: Good write-up. Can't wait for the new architecture, plus the "older" chips are going to become cheaper and more affordable. Big plus.

neiroatopelcc: No explanation as to why you can't use performance modules with higher voltage, though.

neiroatopelcc, quoting AuDioFreaK39: "TomsHardware is just now getting around to posting this? Not to mention it being almost a direct copy/paste from other articles I've seen written about Nehalem architecture." I regard being late as a quality seal, really. There's no point being first if your info is only as credible as stuff on the Inquirer. Better to be last, but be sure what you write is correct.

cangelini, quoting AuDioFreaK39: "TomsHardware is just now getting around to posting this? Not to mention it being almost a direct copy/paste from other articles I've seen written about Nehalem architecture." Perhaps, if you count being translated from French.

randomizer: Yeah, 13 pages is quite a lot to translate. You could always use Google Translate if you want it done fast.

Duncan NZ: Speaking of French... That link on page 3 goes to a French article that I found fascinating... It would be even better if there were an English version, though, because then I could actually read it. Any chance of that?

Nice article, good depth, well written.

neiroatopelcc, quoting randomizer: "Yeah, 13 pages is quite a lot to translate. You could always use Google Translate if you want it done fast." I don't know French, so I have no idea if it actually works. But I've tried it from English to German and Danish, and vice versa. I've also tried Danish to German, and the result is always the same: it's incomplete, and anything even slightly technical in nature won't be translated properly. In short, if you want it done right, do it yourself.

neiroatopelcc: I don't think cangelini meant to say that no other English articles on the subject exist.

You claimed the article on Tom's was a copy/paste from another article. He merely stated that the article here was based on a French version.

enewmen: Good article.

I actually read the whole thing.

I just don't get TLP when RAM is cheap and Nehalem/Vista can address 128 gigs. Anyway, things have changed a lot since running Win NT with 16 megs of RAM and constant memory swapping.

cangelini: I can't speak for the author, but I imagine neiro's guess is fairly accurate. Written in French, translated to English, and then edited: I'm fairly confident they're different stories ;)