Intel Core i7 (Nehalem): Architecture By AMD?

Memory Subsystem

An Integrated Memory Controller

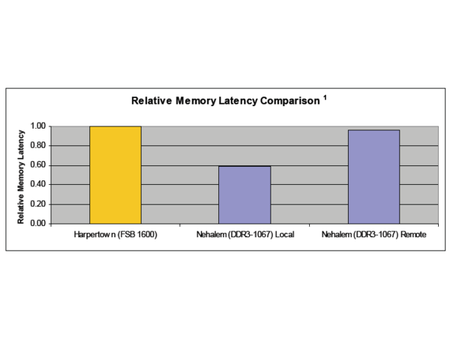

Intel has taken its time catching up to AMD on this point. But as is often the case, when the giant finally moves, it takes a giant step. Where Barcelona had two 64-bit memory controllers supporting DDR2, Intel’s top-of-the-line configuration will include three DDR3 memory controllers. Hooked up to DDR3-1333, which Nehalem will also support, that adds up to a peak bandwidth of 32 GB/s in certain configurations. But the advantage of an integrated memory controller isn’t just a matter of bandwidth. It also substantially lowers memory access latency, which is just as important, considering that each access costs several hundred cycles. Though the latency reduction achieved by an integrated memory controller will be appreciable in desktop use, it is multi-socket server configurations that will get the full benefit of the more scalable architecture. Previously, total memory bandwidth stayed constant as CPUs were added; now each additional CPU increases it, since each processor has its own local memory space.
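As a rough check on the 32 GB/s figure, peak theoretical bandwidth can be worked out from the channel count and the transfer rate. The sketch below assumes each DDR3 channel is 64 bits wide (the article only states that width for Barcelona's controllers) and that DDR3-1333 performs 1333 million transfers per second. The function and variable names are illustrative, and the multi-socket line simply assumes that bandwidth adds up linearly per socket, as described above.

```python
def peak_bandwidth_gb_s(channels: int,
                        transfers_per_s: float,
                        bus_width_bits: int = 64) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumption: every channel moves bus_width_bits per transfer.
    """
    bytes_per_transfer = bus_width_bits / 8
    return channels * transfers_per_s * bytes_per_transfer / 1e9


DDR3_1333 = 1333e6  # transfers per second (assumed nominal rate)

# Three DDR3-1333 channels on one socket.
per_socket = peak_bandwidth_gb_s(channels=3, transfers_per_s=DDR3_1333)
print(f"one socket:  {per_socket:.1f} GB/s")

# Assumption: each socket contributes its own local bandwidth,
# so the aggregate grows linearly with the socket count.
sockets = 2
print(f"two sockets: {sockets * per_socket:.1f} GB/s aggregate")
```

These are upper bounds only; sustained bandwidth in real workloads will be lower.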

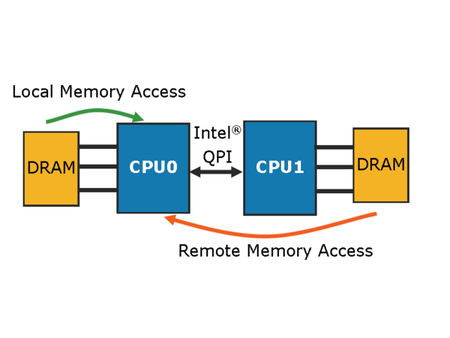

This is not a miracle solution, however. It is a Non-Uniform Memory Access (NUMA) configuration, which means that memory accesses can be more or less costly depending on where the data resides. An access to local memory has the lowest latency and the highest bandwidth; conversely, an access to remote memory has to transit via the QPI link, which reduces performance.

The impact on performance is difficult to predict, since it depends on the application and the operating system. Intel says that remote access carries a latency penalty of around 70%, and that bandwidth can be reduced by half compared to local access. According to Intel, even with remote access via the QPI link, latency will still be lower than on earlier processors, where the memory controller was on the northbridge. However, those considerations apply only to server applications, which have long been designed with the specifics of NUMA configurations in mind.
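To get a feel for what those figures imply, here is a toy model, not Intel's methodology. It assumes that a fraction of accesses goes to remote memory, that average latency is a simple weighted mean of local and remote latency, and that transfers are serialized so effective bandwidth is a time-weighted combination. The 70% penalty and the halved bandwidth are Intel's quoted figures; everything else, including the function names, is a simplifying assumption.

```python
def relative_latency(remote_fraction: float,
                     remote_penalty: float = 0.70) -> float:
    """Average latency relative to an all-local workload (1.0 = local).

    Assumption: latency is a weighted mean of local and remote accesses.
    """
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return 1.0 + remote_penalty * remote_fraction


def relative_bandwidth(remote_fraction: float,
                       remote_ratio: float = 0.5) -> float:
    """Effective bandwidth relative to an all-local workload.

    Assumption: local and remote transfers do not overlap, so the total
    transfer time is the sum of the time spent on each kind of access.
    """
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    time_per_unit = (1.0 - remote_fraction) + remote_fraction / remote_ratio
    return 1.0 / time_per_unit


for f in (0.0, 0.25, 0.5, 1.0):
    print(f"remote={f:.2f}  latency x{relative_latency(f):.2f}  "
          f"bandwidth x{relative_bandwidth(f):.2f}")
```

Even this crude model shows why NUMA-aware placement matters: the cost grows with the share of remote accesses, which is exactly what the operating system and application control.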


cl_spdhax1 good write-up, cant wait for the new architecture, plus the "older" chips are going to become cheaper/affordable. big plus.

neiroatopelcc No explanation as to why you can't use performance modules with higher voltage though.

neiroatopelcc (quoting AuDioFreaK39: "TomsHardware is just now getting around to posting this? Not to mention it being almost a direct copy/paste from other articles I've seen written about Nehalem architecture.") I regard being late as a quality seal really. No point being first, if your info is only as credible as stuff on inquirer. Better be last, but be sure what you write is correct.

cangelini (quoting AuDioFreaK39: "TomsHardware is just now getting around to posting this? Not to mention it being almost a direct copy/paste from other articles I've seen written about Nehalem architecture.") Perhaps, if you count being translated from French.

randomizer Yea, 13 pages is quite a lot to translate. You could always use google translation if you want it done fast :kaola:

Duncan NZ Speaking of french... That link on page 3 goes to a French article that I found fascinating... Would be even better if there was an English version though, cause then I could actually read it. Any chance of that? Nice article, good depth, well written

neiroatopelcc (quoting randomizer: "Yea, 13 pages is quite a lot to translate. You could always use google translation if you want it done fast") I don't know french, so no idea if it actually works. But I've tried from english to german and danish, and vice versa. Also tried from danish to german, and the result is always the same - it's incomplete, and anything that is slightly technical in nature won't be translated properly. In short - want it done right, do it yourself.

neiroatopelcc I don't think cangelini meant to say, that no other english articles on the subject exist. You claimed the article on toms was a copy paste from another article. He merely stated that the article here was based on a french version.

enewmen Good article. I actually read the whole thing. I just don't get TLP when RAM is cheap and the Nehalem/Vista can address 128gigs. Anyway, things have changed a lot since running Win NT with 16megs RAM and constant memory swapping.

cangelini I can't speak for the author, but I imagine neiro's guess is fairly accurate. Written in French, translated to English, and then edited--I'm fairly confident they're different stories ;)