AMD Ryzen 5 2600 CPU Review: Efficient And Affordable


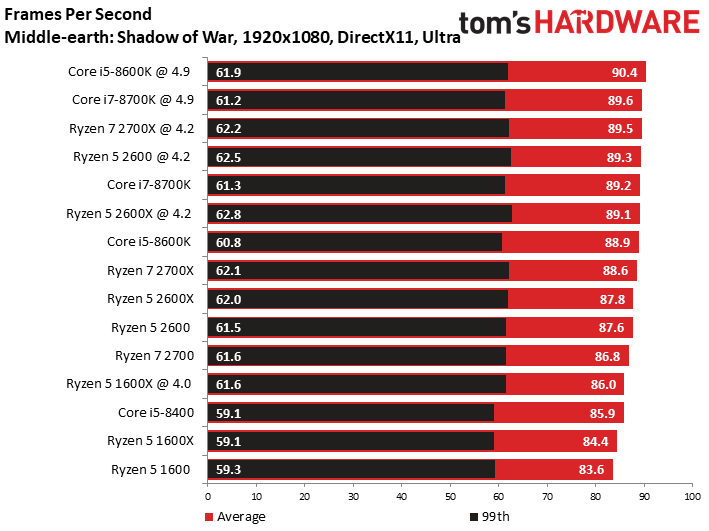

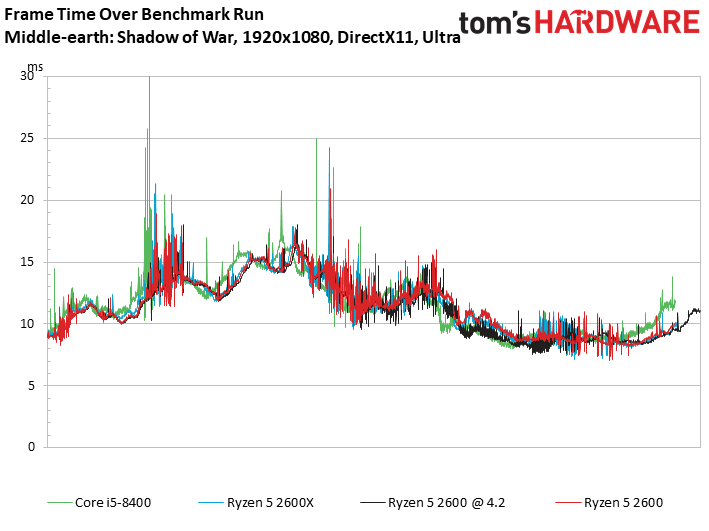

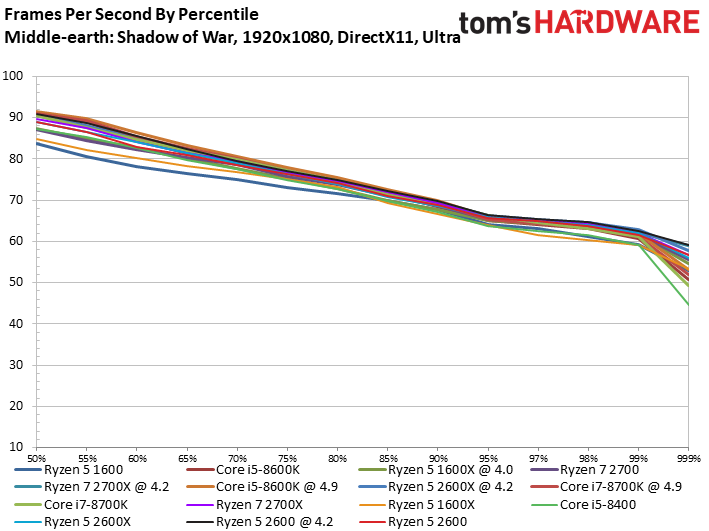

Middle-earth: Shadow Of War

Middle-earth: Shadow of War doesn't scale as well as some of our other benchmarks, and it certainly isn't as sensitive to IPC throughput and clock rate as Shadow of Mordor. This serves as a reminder that most games respond better to faster graphics cards; CPUs don't play as large a role in determining game performance.

As we can see, though, slower processors can bottleneck graphics cards if the combination isn't balanced well. Ryzen 5 1600 found a spot at the bottom of our results, while AMD's newer Ryzen 5 2600 demonstrated a nice little step up in the benchmark results.
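The balance argument above can be sketched with a deliberately simple model in which the delivered frame rate is capped by whichever component is slower. This is only an illustration: the function names and every number below are hypothetical, not measurements from this review, and real games do not behave this cleanly.

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """Simplified bottleneck model: the slower stage sets the frame rate."""
    return min(cpu_fps_limit, gpu_fps_limit)


def limiting_component(cpu_fps_limit: float, gpu_fps_limit: float) -> str:
    """Name the stage that caps the frame rate (a tie is reported as GPU)."""
    return "CPU" if cpu_fps_limit < gpu_fps_limit else "GPU"


# Hypothetical limits in frames per second, chosen only for illustration.
scenarios = {
    "slower CPU, fast GPU": (90.0, 140.0),
    "faster CPU, fast GPU": (120.0, 140.0),
    "faster CPU, modest GPU": (120.0, 70.0),
}

for name, (cpu_limit, gpu_limit) in scenarios.items():
    fps = delivered_fps(cpu_limit, gpu_limit)
    cap = limiting_component(cpu_limit, gpu_limit)
    print(f"{name}: {fps:.0f} FPS, limited by the {cap}")
```

In the third scenario the CPU upgrade changes nothing, which is the sense in which most games respond better to a faster graphics card once the CPU is fast enough.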

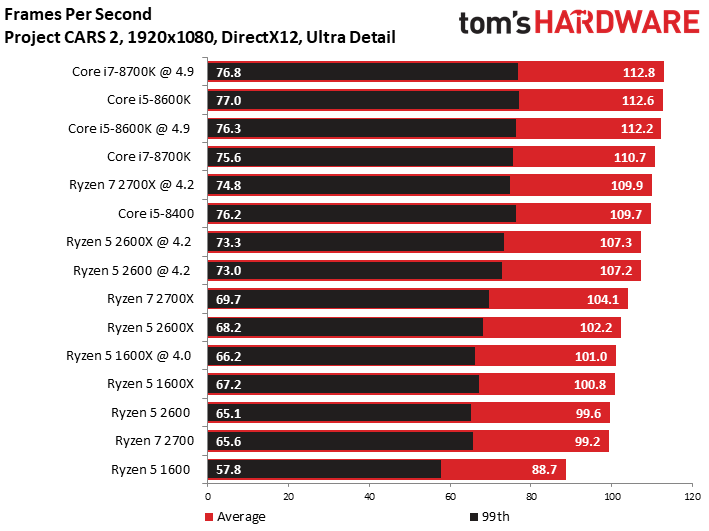

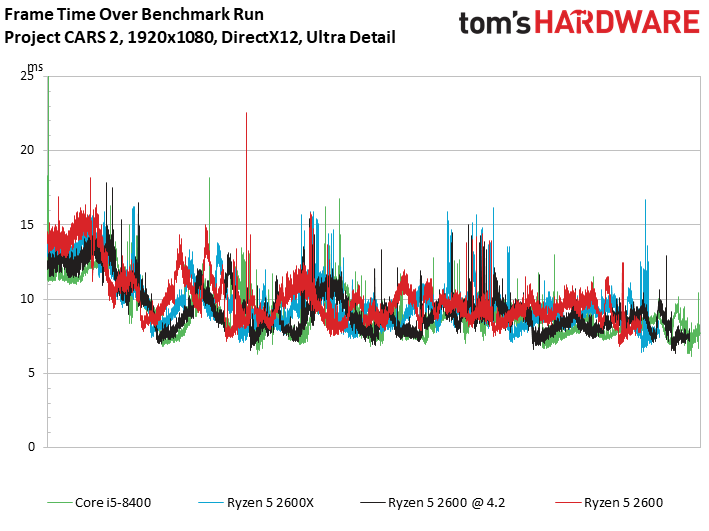

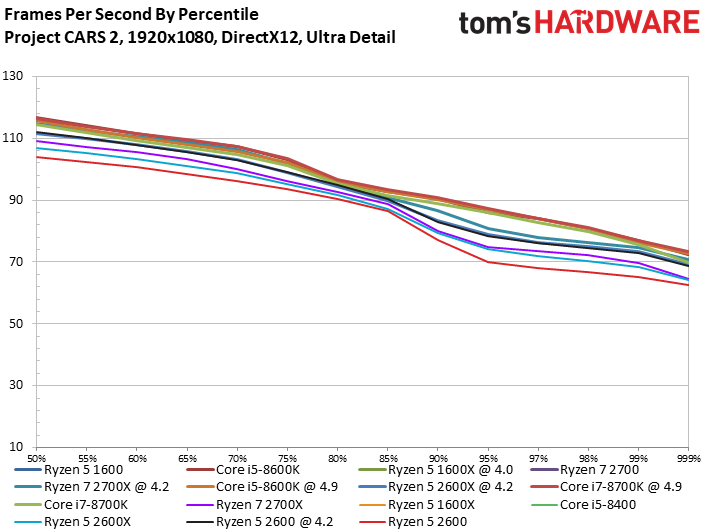

Project CARS 2

Ryzen 5 2600 offers impressive performance, given its $200 price point. But it couldn't quite catch Intel's Core i5-8400 in Project CARS 2, even after overclocking.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


joeblowsmynose: Really grasping at straws to come up with some cons, eh? "needs better than stock cooler for serious overclocking", "slower than a faster, more expensive CPU", lol. Those cons apply to every CPU ever made.

Gillerer: Now I know you want to use 1080p in order to get differences between CPUs and not be GPU limited. The problem is, those differences are therefore artificially inflated compared to some enthusiast gamers' hardware.

It'd be nice to have one middle-of-the-road (in terms of GPU/CPU-boundness) game benchmarked in 1440p and 4k, too, as a sort of sanity check. If the differences diminish to rounding error territory, people looking to game in those resolutions with good settings might be better off getting a "good enough" CPU and putting all extra money towards the GPU.

Not only that, if there actually *was* a distinct advantage to getting the best IPC Intel mainstream processor for high-res, high-settings gaming, finding that out would be interesting.

Blytz: I chucked a basic Corsair liquid cooler on my 1600X and it runs all cores @ 4.0. I hope the clock-for-clock improvement is worth the upgrade for those making that step.

1_rick: I did the same thing (except I used an NZXT AIO) with the same results. It seems like a 4.2 OC means the 2600 isn't a worthwhile upgrade from the 1600X, but that's what I expected.

Giroro: Right now the 2600X is $229 and the 2600 is $189, so a $40 difference.

theyeti87: I would love to know whether or not the Indium (I) Iodide 99.999% anhydrous beads that I package for Global Foundries at work are used for the solder in these chips.

Dugimodo: I'm not sure if it does but I think the 8400 needs to include a basic cooler in the cost analysis after seeing several reviews that show it thermal throttles on stock cooling and loses up to 20% performance depending on load and case cooling etc. These charts make it look better than the 2600 when in reality the difference may be almost nothing. Not that I'm buying either :) too much of a performance junkie for that.

derekullo, quoting joeblowsmynose: Really grasping at straws to come up with some cons, eh? "needs better than stock cooler for serious overclocking", "slower than a faster, more expensive CPU", lol. Those cons apply to every CPU ever made.

On the flipside the last con "Only $20 cheaper than 95W Ryzen 5 2600X" does make a lot of sense.

For only $20 you get noticeably better performance with (you may even say because of) a much better cooler.

Even if I was building a computer for my grandmother who only wanted to use it to do Facebook and free casino games, I'd still go with the 2600X.


derekullo, quoting post 21011262: I think for Granny I'd go the 2400G and save on a graphics card myself.

True, but between the 2600s I'd choose the X.