Workstation Graphics: 14 FirePro And Quadro Cards

We put 14 professional and seven gaming graphics cards from two generations through a number of workstation, general-purpose computing, and synthetic applications. By the end of our nearly 70 charts, you should know which board is right for your workload.

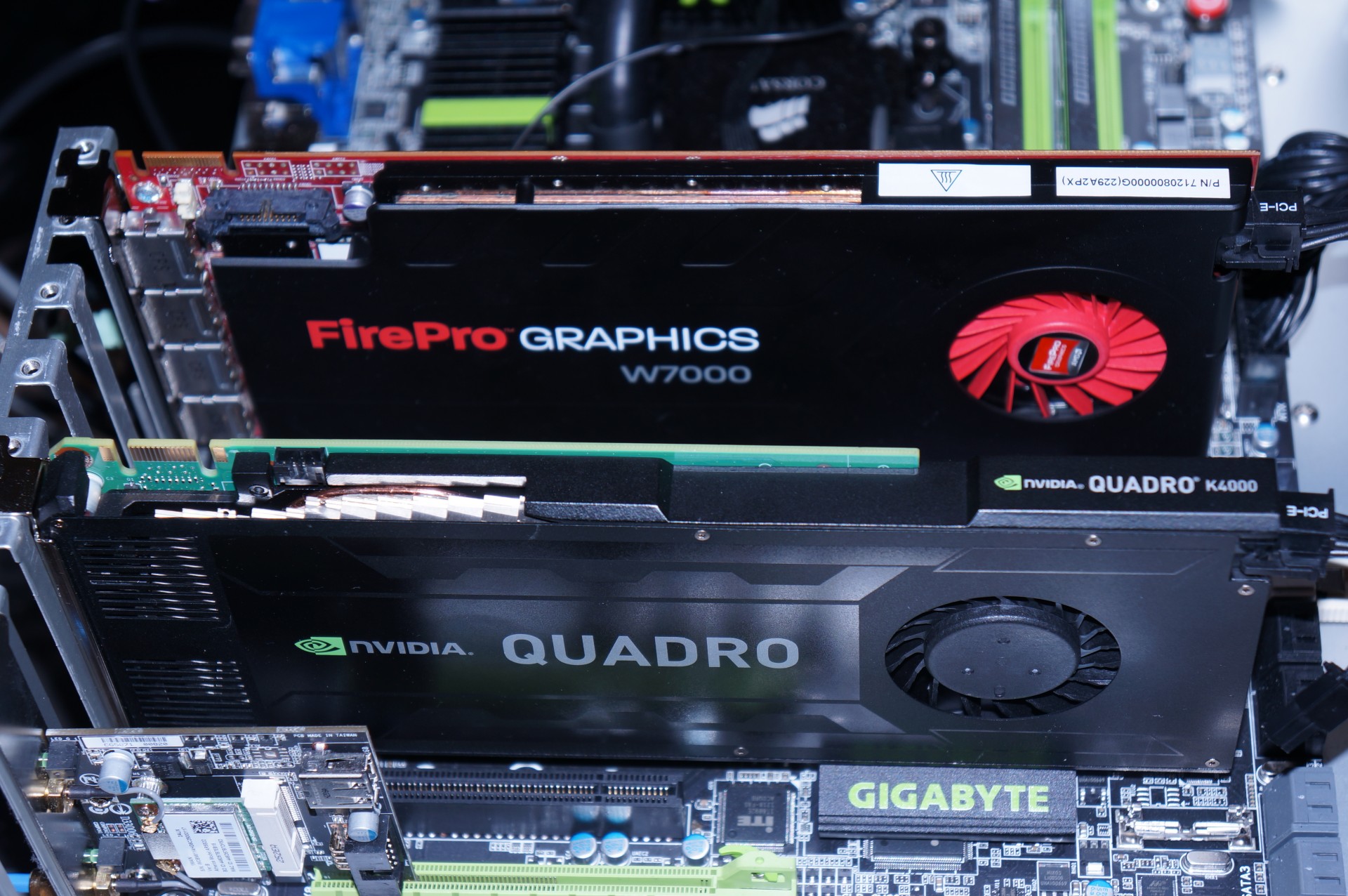

Nvidia's Quadro K4000 And AMD's FirePro W7000 Get Recommendations

Conclusion

Even though the results differ massively from one benchmark to the next for both AMD and Nvidia graphics cards, there is something of a common thread. We'll cover our first impressions for each company separately, and then reveal the two cards we liked best.

Nvidia: Performance Steady or Slightly Improved, Efficiency Much Better

You have to give it to Nvidia: the company is squeezing a lot of performance out of its GPU hardware. The Quadro K5000 versus the Quadro 5000 and the Quadro K4000 versus the Quadro 4000 are two good examples. The K5000 even comes close to the Quadro 6000's performance on occasion, while using a little more than half as much power in the process.

However, the Quadro K5000 and K4000 share some of the limitations of Nvidia’s gaming graphics cards based on GK104. Simply put, that GPU wasn't designed for compute. Nvidia’s drivers are still top-notch, and its cards are going to be your first choice in an application the company is optimizing for.

AMD: Performance Vastly Improved, Efficiency Steady or Slightly Improved

AMD deserves respect for its GCN-based FirePro cards. They offer vastly better performance than their predecessors. AMD caught up in many areas where it previously trailed Nvidia in the professional space. And if a workload overwhelms one of these boards, it's a driver issue, not a limitation of the hardware.


The company is also in a better place with its drivers for many applications. Of course, there is still room for improvement. The GCN-based cards naturally do well in compute-heavy applications via OpenCL support. There's a real alternative to Nvidia’s Quadro cards, particularly when you take price into account. Again, this is as long as AMD's driver is optimized for the workload in question. In titles that haven't received much attention yet, performance is less compelling.

Recommended Workhorses: Nvidia's Quadro K4000 and AMD's FirePro W7000

Nvidia's Quadro K4000 and AMD's FirePro W7000 simply offer the most bang for the buck. The pricier cards in both companies' higher-performing tiers are typically too expensive for the average professional, and often aren't the right choice anyway since they emphasize compute-intensive workloads. Pay particular attention to the types of software you plan to run on your workstation, and pick a professional graphics card accordingly. If your vendor of choice hasn't put much effort into optimizing for it, then there's a good chance you're going to be disappointed.

If the two cards we're most excited about are too expensive, then AMD's FirePro W5000 is a good alternative, delivering decent performance from a cut-back version of the Pitcairn GPU. Nvidia also sent along one of its Quadro K2000 cards after this story was completed, and we updated our Workstation Graphics 2013 Charts to reflect that board's performance, too.

Bottom Line

For the most part, gaming graphics cards don't work for professional applications, and increasingly, ISVs are requiring workstation-class hardware. The only real exceptions are DirectX-based titles like AutoCAD 2013 and Inventor 2013, where the additional optimizations to a pro card and its drivers aren't necessary. Desktop-oriented cards also perform well in certain compute-heavy applications, so long as you can live without features like ECC memory. But if one messed-up byte could throw your result off, sending Wall Street into a tailspin, a workstation graphics card designed for the job is a smart choice.

When we look at the market as a whole, AMD is much more competitive now than ever before, while Nvidia continues to optimize and polish its existing products. The race hasn’t been this exciting in a long time. It remains to be seen if AMD can get its drivers certified for more applications. After all, the tremendous architecture that works so well for the company in the gaming space has a ton of potential in the workstation segment, too. It makes us wonder whether an excellent software bundle might do wonders for its workstation line-up.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


FloKid So what are the workstation cards for anyways? Seems like the gaming cards beat them pretty bad at most tests.

Cryio Man. If the GeForce 8 made a killing back in the day, the HD 7000 series show no sign of stopping, whether it's gaming or workstation.

bambiboom Gentlemen?,

A valiant effort, but in my view, a very important aspect of the comparisons has been neglected, namely, image quality.

It is useful to make quantitative comparisons of workstation cards performing the same tasks, but when gaming / consumer cards are also compared only in terms of speed, the results are not necessarily reflective of these cards' use in content creation. Yes, speed is critical in navigating 3D models- shifting polygons, but the end result of those models is likely to be renderings or animations in which the final quality- refinement of detail and subtlety is more critical than in games.

A fundamental aspect that reflects on the results in this comparison is that the test platform using an i7-3770K is not indicative of a workstation platform for which the workstation cards were designed and the drivers optimized. There are a number of very good reasons for Xeons and Opterons and especially, for the existence of dual CPUs with lots of threads. There are other aspects of these components that bear on results, e.g., the memory bandwidth of the i7-3770K is only about half of a Xeon E5-1660. Note too, that there are good reasons why Xeons have locked multipliers and can not be overclocked- speed is not their measure of success in priority to precision and extreme stability. Also important in this comparison is the presence of ECC RAM which is present in both the system and workstation GPU memory, which was treated a bit lightly, but that is essential for precision, especially in simulations and tasks like financial analysis. Also, ECC affects system speed in its error correcting duties and parity checks and therefore runs slower than non-ECC. Again, to be truly indicative of workstation cards, it would be more useful to use a workstation to make the comparisons.

An aspect of this report that was not sufficiently clarified, is that the rendering based applications are entirely reflective of CPU performance. Rendering is one of the few tasks that can use all the available system threads and anyone who renders images from 3D models and especially doing animations will today have a dual CPU six or eight core Xeon. These comparisons involving rendering applications were made on a four-core machine, under conditions in which the number of cores / threads matters significantly. I believe that some of the dramatic differences in Maya performance in these tests may have been related to the platform used. I have a previous-generation dual four-core system yielding eight cores and sixteen threads at 3.16GHz (Xeon X5460) and during rendering, all eight cores go from 58C to 93C and the RAM (DDR2-667 ECC) from 68C to 85C in about ten minutes.

Also, it's possible that the significant variation in rendering performance then may be due to system throttling and the GPU drivers that are finishing every frame under error-correcting RAM. In this task, the image quality is dependent on precision polygon calculation and, e.g., particle placement, such that there are no artifacts, that shadows and color gradients are accurate and refined. Gaming cards emphasize frame rates and are optimized to finish frames more "casually" to achieve higher frame rates. This is why a GTX can't be used for Solidworks modeling either, as tasks like structural, thermal, and gas flow simulations must have error correcting memory and Solidworks can produce as much as 128X anti-aliasing where a GTX will produce 16X. When a GTX is pushed in this way, especially on a consumer platform, it performs poorly. Again, the image precision and quality aspect was lost in favor of a comparison of speed only.

The introduction of tests involving single and double precision and comments regarding the fundamental differences of priority in the drivers were useful and in my view might have been more extensive as this gets more to the heart of the differences between consumer and workstation cards.

Making quantitative comparisons of image quality is contradictory by definition, but in my view, quality is fundamental to an understanding of these graphics cards. As well, this would assist in explaining to content consumers the most important reason content creators are willing to spend $3,500 on a Quadro 6000 when an $800 GTX will make some things faster. Yes, AutoCAD 2D is purposely made to run on almost any system- but when the going gets tough***, the tough get a dual Xeon, a pile of ECC, and a Quadro / FirePro!

***(Everything else!)

Cheers, BambiBoom


falchard Actually most of these tests don't hit on why you get a Workstation card. In a CAD environment the goal is to get the most amount of polies on screen in real time. SPECview is the benchmark suite to test this and you can see the difference the card makes.

In the tests I found the CUDA numbers disappointing, but you would get a Tesla card for CUDA not a workstation card.

On the OpenCL numbers it paints a different picture where there is almost no difference between the consumer card and the workstation card. I was actually expecting the workstation cards to perform better, but once again I think that's an avenue of FireStream and Tesla cards.

rmpumper FloKid said: "So what are the workstation cards for anyways? Seems like the gaming cards beat them pretty bad at most tests."

People buy workstation cards for better viewport performance and better image quality and, as you can see from the SPECviewperf numbers, gaming GPUs are completely useless for that.

catmull-rom I don't really get why pro cards are recommended so easily? I know this site wants manufacturers to keep sending them cards but the data just doesn't support a simple end conclusion.

I totally get that in some work-areas you want ecc, you want certified drivers, you want as much stability and security and / or extra performance in specific areas. Compared to the work the hardware cost is of little importance, so I totally agree, get a pro workstation with a pro card. You want to be on the safest side while doing big engineering projects, parts for planes, scientific and / or financial calculations etc.

But that being said, and especially for the content creation / entertainment / media sector you really need to think about whether a pro card is useful and worth it. Most 3D apps work great on game cards, and as you can see as far as rendering is concerned game cards are your best choice for speed if you can live with the limitations. Also for a lot of CAD work you can get away fine with a game card.

So it's not just Autocad or Inventor which don't need a pro card. Most people will be just fine with them on 3ds max or alike, rhino and solidworks.

I don't get why there are no test scores with Solidworks and game cards in this article? Game cards work fine mostly and pro cards offer little extra featurewise in this app. The driver issue really seems like a bad excuse not to have some game scores in there.

Also, I have never really looked at Specview. It seems to heavily favor pro cards while it doesn't tell you most apps will work fine with game cards.

vhjmd Wonderful article, you should make an update with Intel HD 3000 and HD 4000 because at least Siemens NX now officially supports those cards, since they have the performance for OpenGL.

mapesdhs With the pro cards at last not hindered by slower-clocked workstation CPUs, we can finally see these cards show their true potential. You're getting results that more closely match my own this time, confirming what I suspected, that workstation CPUs' low clock rates hold back the Viewperf 11 tests significantly in some cases. Many of them seem very sensitive to absolute clock rate, especially ProE.

And interesting to compare btw given that your test system has a 4.5GHz 3770K. Mine has a 5GHz 2700K; for the Lightwave test with a Quadro 4000, I get 93.21, some 10% faster than with the 3770K. I'm intrigued that you get such a high score for the Maya test though, mine is much lower (54.13); driver differences perhaps? By contrast, my tcvis/snx scores are almost identical.

I mentioned ProE (I get 16.63 for a Quadro 4K + 2700K/5.0); Igor, can you confirm whether or not the ProE test is single-threaded? Someone told me ProE is single-threaded, but I've not checked yet.

FloKid, I don't know how you could miss the numbers but in some cases the gamer cards are an order of magnitude slower than the pro cards, especially in the Viewperf tests. As rmpumper says, pro cards often give massively better viewport performance.

bambiboom, although you're right about image quality, you're wrong about performance with workstation CPUs - many pro apps benefit much more from absolute higher speed of a single CPU with fewer threads, rather than just lots of threads. I have a dual-X5570 Dell T7500 and it's often smoked for pro apps by my 5GHz 2700K (even more so by my 3930K); compare to my Viewperf results as linked above. Mind you, as I'm sure you'd be the first to point out, this doesn't take into account real-world situations where one might also be dealing with large data sets, lots of I/O and other preprocessing in a pro app such as proprietary database traversal, etc., in which case yes indeed a lots-of-threads workstation matters, as might ECC RAM and other issues. It varies. You're definitely right though about image precision, RAM reliability, etc.

falchard, the problem with Tesla cards is cost. I know someone who'd love to put three Teslas in his system, but he can't afford to. Thus, in the meantime, three GTX 580s is a good compromise (his primary card is a Quadro 4K).

catmull-rom, if I can quote, you said, "... if you can live with the limitations.", but therein lies the issue: the limitation is with problems such as rendering artifacts which are normally deemed unacceptable (potentially disastrous for some types of task such as medical imaging, financial transaction processing and GIS). Also, to understand Viewperf and other pro apps, you need to understand viewport performance, and the big differences in driver support that exist between gamer and pro cards. Pro & gamer cards are optimised for different types of 3D primitive/function, eg. pro apps often use a lot of antialiased lines (games don't), while gamer cards use a lot of 2-sided textures (pro apps don't). This is reflected in the drivers, which is why (for example) a line test in Maya can be 10X faster on a pro card, while a game test like 3DMark06 can be 10X faster on a gamer card.

Also, as Teddy Gage pointed out on the creativecow site recently, pro cards have more reliable drivers (very important indeed), greater viewport accuracy, better binned chips (better fault testing), run cooler, are smaller, use less power and come with better customer support.

For comparing the two types of card, speed is just one of a great many factors to consider, and in many cases is not the most important factor. Saving several hundred $ by buying a gamer card is pointless if the app crashes because of a memory error during a 12-hour render. The time lost could be catastrophic if it means one misses a submission deadline; that's just not viable for the pro users I know.

Ian.