Overclocking: Can Sandy Bridge-E Be Made More Efficient?

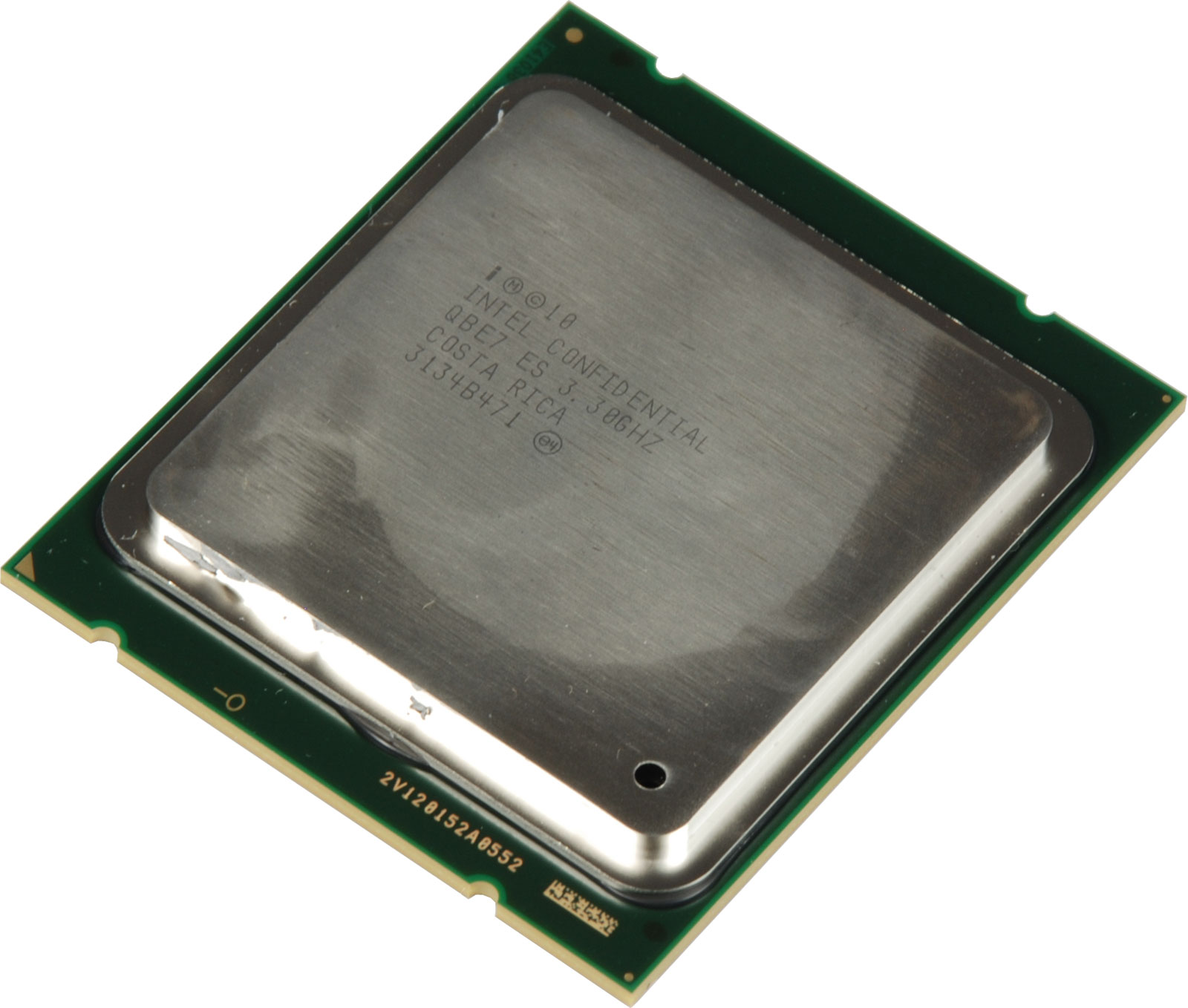

Intel's six-core processors are fast, but enthusiasts almost always want to push unlocked multipliers harder. Core i7-3960X can easily exceed 4 GHz, but what happens to power efficiency when clock rates go up? Sandy Bridge-E demonstrates weaknesses there.

Sandy Bridge-E: Does The E Stand For Efficiency?

Intel sells the fastest desktop processors you can buy; this much is known. Although some of the company's offerings are disappointingly neutered by locked ratio multipliers, the ones that aren't regularly turn out new speed records thanks to ambitious overclockers. Topping 4 GHz is no problem, even with six cores and 15 MB of shared L3 cache pushing the complexity of Intel's chips into the billions of transistors. But what happens to efficiency when such a large piece of silicon is pushed to its limits?

It's a good question. As we showed in Core i7-2600K Overclocked: Speed Meets Efficiency, you can actually get better efficiency from this architecture if you overclock it sensibly. Now, we want to see whether those results can be duplicated with Sandy Bridge-E, the design that offers six cores today and, once Xeon E5 emerges, eight.

Overclocking: For Sport Or Necessity?

Gone are the days when you'd search high and low for that one processor model able to overclock like a beast at a price that was too good to be true. There are so many models now, and so much feature-level differentiation, that it makes more sense to find an affordable CPU that can do what you need it to, and then push from there. For most of us, there's nothing a Core i5-2500K can't do that a Core i7-2600K can for a much higher price. Of course, it doesn't help that most mainstream hardware leads the software industry. Little of what we run on our desktops requires a 4.5 or 5 GHz version of what we already have running at 3 or 4 GHz.

That isn't stopping AMD and Intel from becoming more overclocking-friendly, though (or perhaps it'd be more accurate to say that they're getting more savvy about using overclocking as a differentiator worth a price premium). AMD boasts unlocked ratios up and down its FX stack, for example. Meanwhile, Intel just announced that it will offer, for a small fee, CPU insurance that covers processor replacement in the event of overclocking damage.

Furthermore, Intel finds itself without a competitor in the high-end segment. AMD is currently selling more value-oriented processors, but its best effort currently competes (in terms of absolute performance) with models in the middle of Intel's mainstream portfolio. It can't compete where more affluent enthusiasts are spending money. In terms of manufacturing, Intel is currently about 18 months ahead, which is why AMD's 32 nm-based CPUs and APUs are relatively new, as Intel readies its 22 nm Ivy Bridge-based line-up.

This competitive advantage gives Intel considerable headroom in product planning and efficiency: processors that operate below their design ceiling naturally use less power, which leaves us plenty of room to measure the effect of tuning for even more speed.


Finding The Optimal Clock Rate

Every processor has an ideal clock rate (or at least an optimal range) at which the chip provides the best possible performance per watt. Find that point for your platform, and you get the most work done for the power you use. We're using a Core i7-3960X to find the ideal combination of low energy consumption at idle and the highest possible clock rate that still keeps energy consumption within reasonable limits.
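To make the idea of an efficiency sweet spot concrete, here is a minimal Python sketch that picks the setting with the best performance per watt from a list of measurements. The `Sample` type, the function names, and every number below are hypothetical placeholders chosen for illustration; they are not results from our test bench.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One benchmark run at a fixed clock rate (hypothetical structure)."""
    clock_ghz: float
    score: float      # benchmark result, higher is better
    avg_watts: float  # average system power draw during the run


def perf_per_watt(sample: Sample) -> float:
    """Performance per watt: benchmark score divided by average power."""
    return sample.score / sample.avg_watts


def most_efficient(samples: list[Sample]) -> Sample:
    """Return the sample with the highest performance per watt."""
    if not samples:
        raise ValueError("need at least one sample")
    return max(samples, key=perf_per_watt)


# Placeholder values only; NOT measurements from this article.
samples = [
    Sample(clock_ghz=3.3, score=100.0, avg_watts=200.0),
    Sample(clock_ghz=4.0, score=120.0, avg_watts=220.0),
    Sample(clock_ghz=4.7, score=130.0, avg_watts=320.0),
]

best = most_efficient(samples)
print(f"Best efficiency at {best.clock_ghz} GHz: "
      f"{perf_per_watt(best):.3f} points per watt")
```

With these made-up inputs, the middle setting wins: the highest clock rate adds a little performance but a lot of power draw, so its score per watt falls. Whether a real chip behaves this way depends entirely on the measured data you feed in.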


Patrick Schmid was the editor-in-chief for Tom's Hardware from 2005 to 2006. He wrote numerous articles on a wide range of hardware topics, including storage, CPUs, and system builds.


Yargnit What about trying to under-volt it at slight under-clocks to slight-overclocks. How much room is there to reduce it's stock voltage to gain better efficiency?

billj214 Was there an efficiency chart made for the Core i7 2600k or 2700k?

Nice to know Intel doesn't just set the stock clock speed for just performance!

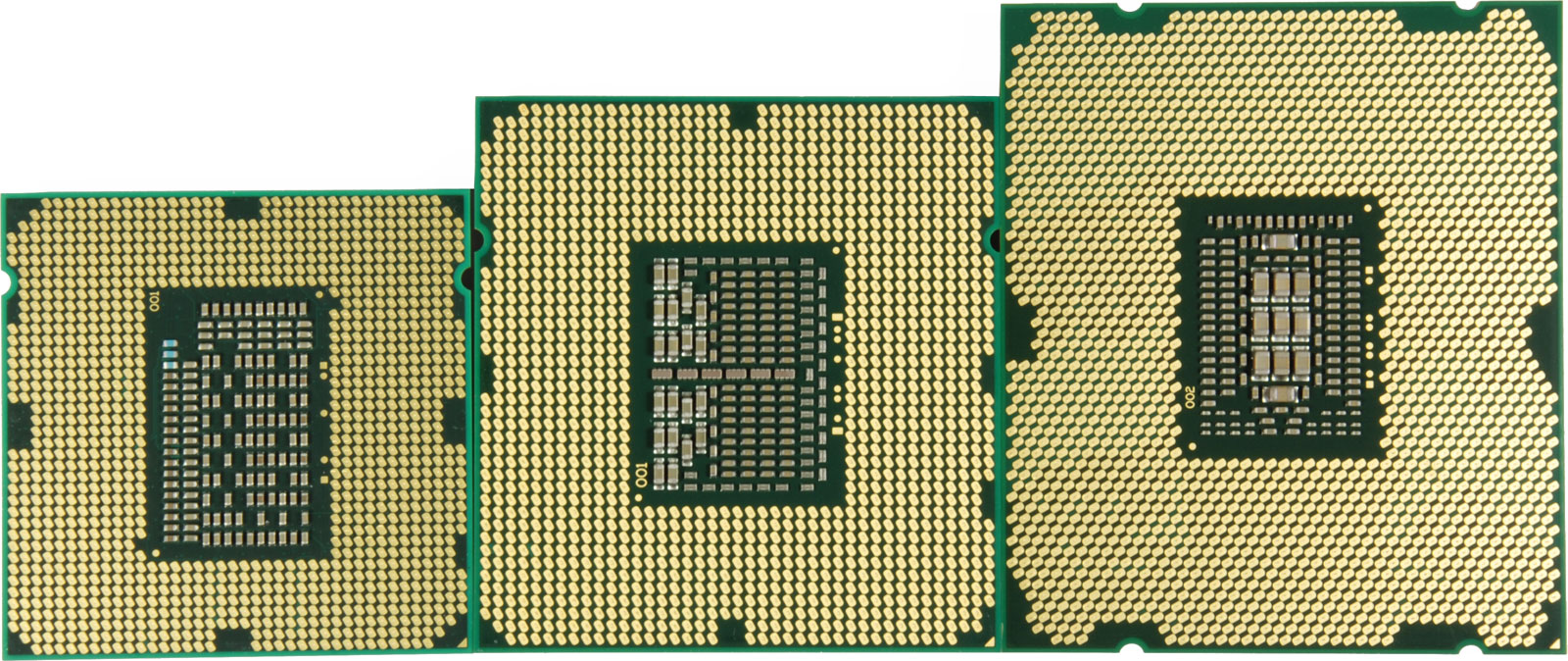

Marcus52 "And then there's the Core i7-3820, which only sports four cores, but operates at a base clock rate of 3.6 GHz. Although this less-complex chip could probably hit higher Turbo Boost frequencies, Intel limits it to 3.9 GHz to keep it from outshining the top-end Core i7-3960X in single-threaded tasks."

Did someone at Intel tell you that was the reason for a lower Turbo Boost limit, or did you just assume it?

I think we should be careful of this kind of guess at another person's, or company's, reasoning. There could be some other cause for the limit - for example, they will obviously sell it for a lower price, so wouldn't a possible reason be they have looser binning specs to allow for chips that wouldn't make it under more strenuous tests through? (Remember, Intel, or any CPU manufacturer, doesn't warrant the product based on what it can be pushed to, and is generally going to provide it at a clock rate they feel is safe over time to guarantee.)

I'm certainly not saying it is a bad assumption, what you said makes sense to me, but I do think there are enough other reasonable possibilities that I wouldn't have stated it as a fact unless I knew it to be.

;)

Marcus52 Thanks for the analysis!

I do think articles like this are very important; those of us who overclock, especially when we turn off all the power-saving features in hopes of reaching that max stable a CPU can do, should be aware of how much money we are spending if we keep said OC. It's more than just the high end cooling solution.

The people that bash higher capacity PSUs could also stand to learn a thing or two, here. An overclocked CPU can require a huge amount of peak power over and above what a stock CPU needs (349W measured here). An overclocked Sandy Bridge-E and an overclocked GTX 580 could require a peak power of 650W just considering those 2 components!

A Kill A Watt or similar device is a great way to measure how much you actually spend a month operating your computer. You might be surprised.

;)

giovanni86 Just a thought, so at 4.7Ghz the performance increase was only 16%? For being such a High overclock i was hoping for more then that. You guys literally upped the bar from stock clock to the OC clock by 1.4ghz, seems like a small increase in performance if you look at the amount of watts it takes.. Well at least its good 2 know my future billing of electricity will sure be expensive.. =P

cangelini Marcus52 wrote: "Did someone at Intel tell you that was the reason for a lower Turbo Boost limit, or did you just assume it? I think we should be careful of this kind of guess at another person's, or company's, reasoning. There could be some other cause for the limit - for example, they will obviously sell it for a lower price, so wouldn't a possible reason be they have looser binning specs to allow for chips that wouldn't make it under more strenuous tests through? (Remember, Intel, or any CPU manufacturer, doesn't warrant the product based on what it can be pushed to, and is generally going to provide it at a clock rate they feel is safe over time to guarantee.) I'm certainly not saying it is a bad assumption, what you said makes sense to me, but I do think there are enough other reasonable possibilities that I wouldn't have stated it as a fact unless I knew it to be."

Hence the "probably." Of course, we don't know for sure, nor would Intel ever admit as such, but it's an educated guess nonetheless. =)