Stereo Shoot-Out: Nvidia's New 3D Vision 2 Vs. AMD's HD3D

Nvidia just updated its stereoscopic 3D ecosystem with 3D Vision 2. We show you what makes this initiative different and how it compares to the competition. Then, we benchmark GeForce and Radeon graphics cards in a no-holds-barred stereo showdown!

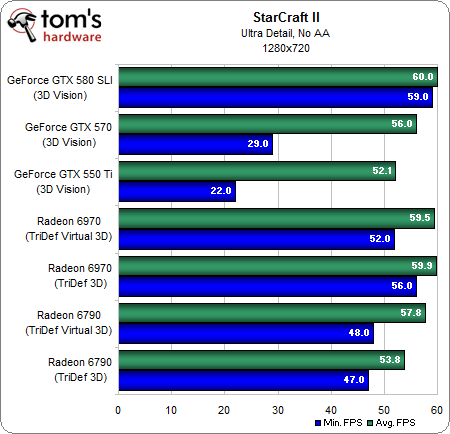

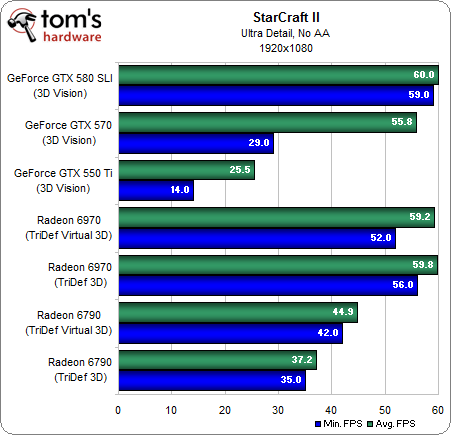

Benchmark Results: StarCraft II

An extremely popular Blizzard title, StarCraft II is a great place to start our performance comparison. This is generally a CPU-limited game, so the differences we see in frame rates should be mostly attributable to the overhead required by stereoscopic 3D rendering:

Without anti-aliasing enabled, the Radeons perform very well compared to Nvidia's GeForce cards. Only the GeForce GTX 550 Ti is unplayable at 1920x1080. Nevertheless, the GeForce GTX 580 SLI configuration delivers the best overall performance by a small margin.

How can this be? Two GeForce GTX 580s are significantly more powerful than a single Radeon HD 6970, right? Absolutely. The thing to remember is that running in stereo mode limits performance to a 60 FPS maximum (the display output has to be synchronized to the active shutter glasses, which give you 60 frames per second in each eye). Thus, in a processor-bound game, you'll find that the most expensive GPUs may serve up more performance than these technologies can exploit.
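The 60 FPS ceiling comes down to simple arithmetic. The sketch below assumes a 120 Hz active-shutter display that alternates left- and right-eye images, so each eye sees at most half the refresh rate. `render_fps` is a hypothetical figure for how many complete stereo frames (one image per eye) a system could produce without the cap. The function names are illustrative only, and the code models the clamp, not either vendor's driver.

```python
def per_eye_ceiling(refresh_hz: float = 120.0, eyes: int = 2) -> float:
    """Maximum frames per second each eye can see on a frame-sequential display."""
    # The shutter glasses alternate eyes on every refresh, so the
    # refresh rate is split evenly between the two eyes.
    return refresh_hz / eyes


def displayed_stereo_fps(render_fps: float, refresh_hz: float = 120.0) -> float:
    """Stereo frame rate a benchmark would report once output is synced to the glasses.

    render_fps is a hypothetical, uncapped rate of complete stereo frames
    (one image per eye) that the CPU and GPU could otherwise deliver.
    """
    return min(render_fps, per_eye_ceiling(refresh_hz))


if __name__ == "__main__":
    # Hypothetical uncapped rates for a fast dual-GPU setup and a slower card:
    # only the slower one is limited by its own rendering speed.
    for label, uncapped in (("fast rig", 95.0), ("slower card", 42.0)):
        print(label, displayed_stereo_fps(uncapped))
```

Once two configurations both exceed the ceiling, they report the same 60 FPS, so any extra GPU horsepower goes unused.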

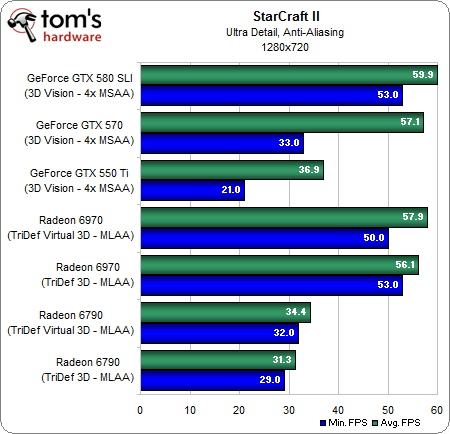

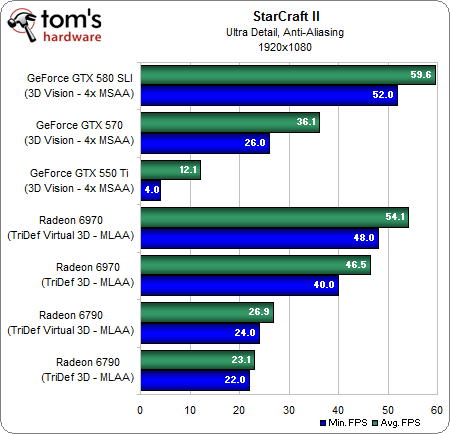

Now let’s add anti-aliasing to the mix. There’s no in-game anti-aliasing available in StarCraft II, so we have to force it through the driver. Multi-sampling does not work with the TriDef driver in this game, so AMD's Radeon cards have to be tested with morphological anti-aliasing (MLAA), a post-processing filter that hunts down jagged edges. Unfortunately, this doesn’t result in an apples-to-apples comparison. It's still relevant, though, because it demonstrates the realistic options available to each solution if you want to game with AA turned on.

With 4x anti-aliasing enabled, the GeForce GTX 580 SLI configuration still isn't hamstrung, suggesting those two cards have performance to spare. AMD's Radeon HD 6970 finishes second, coming closer to its limit. It even manages to beat the GeForce GTX 570 in normal 3D mode. The Radeon HD 6790 fails to achieve 30 FPS at 1080p.

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

-

airborne11b I didn't see any mention of crosstalk (3D ghosting) in this article.

Does Nvidia's new 3D Vision 2 really reduce crosstalk? Hell, it's even listed in the product's promotional material:

http://media.bestofmicro.com/7/X/311325/original/Third%20Generation%203D%20Monitors.JPG

Yet I saw no mention of it in this article. Any word on how well it handles crosstalk / 3D ghosting would be appreciated. -

de5_Roy The glasses look kinda dorky. I'm still waiting for glasses-free 3D. I'd rather use a 120 Hz monitor (without 3D) instead of eye-hurting 60 Hz ones. -

bystander It would appear that rendering a single image and extrapolating the other eye's image in virtual 3D mode takes a lot less power than rendering two images independently in normal mode. This appears to be the only reason it can compete without CrossFire support. This is both good and bad: it works in almost all situations, but never at great visual quality.

I'd also like to point out that the lack of AA is not a big deal in 3D. I don't notice the same issues without AA in 3D. When the mind fuses two images together, it's not as bothered by aliasing. -

airborne11b Quoting greghome: "IMO, 3D is still not as appealing and not as cheap as Eyefinity or 2D Vision Tri-Screen Gaming."

I'm a fan of both 3x monitor setups and 3D, but 3D is a lot cooler.

The problem with 3x monitors is the fish-eye effect, which is very disturbing (and not fixable) in landscape mode. The best you can do is use expensive 1920x1200 IPS monitors in portrait mode, but in this setup the bezels usually cut right through game HUDs and hotkey bars and sit far too close to your center of view.

Furthermore, this kind of Eyefinity/Nvidia Surround monitor setup costs about $1,200-$1,500 (or you can try to get rid of the bezels with projector setups, which require a ton of space and cost as much or more).

Now consider Nvidia 3D. It adds amazing depth and realism to games compared to 2D, has no negative fish-eye effect and no bezels to deal with, has the same (or lower) GPU power requirements as 3x monitors, doesn't interrupt game HUDs or hotbars, and costs only about $600-$700 for the most expensive 27" screen + glasses combos (even less with smaller monitors).

The clear choice is 3D, IMO.

But 3x monitors are still much better than a single 2D monitor. I rocked 5760x1080 in BFBC2, Aion, and L4D for a long time :P

3D is cooler though. -

billcat479 It seems people don't follow the news in this area very much. It's not sounding all that great.

I guess most people haven't read that people using 3D TVs have been getting headaches, and not just a few but a lot of people.

They should have left it in the theaters.

I wouldn't be surprised if, when someone finally does a good long-term study, people using them for gaming turn out to have long-term medical problems. I have read enough to stay away from this 3D glasses hardware. At best, I'd only use it rarely and for short periods.

They really do need to do medical testing on this, because people are being affected by prolonged use of 3D glasses with TVs. Add all-day video gaming, and I think there is some possibility, small or large, of long-term or permanent damage. They dumped this on the market pretty fast without doing any studies that I know of, but with the number of people reporting headaches, I think it is getting more attention, and they should damn well start checking out the possibility of eye damage or worse.

Eyesight is pretty useful.

If they ever get a holographic display then I'd be into it.

-

amk-aka-Phantom Dirt 3, first benchmark: the 6790 and 6970 should switch places! Right now the 6790 is performing five times as well as the 6970 :D Fix that, please. -

CaedenV @billcat

So shutter tech, which has been around for some 15 years, is dangerous, but holographic tech, which isn't really available yet, would be good? I would think you would want to exercise caution with any new optical tech. Personally, I am allergic to the laser-to-eye theory of hologram tech.

As for the article, it was a great review! Looks like the tech is still too high-end for my budget, but I am sure they will iron out all the kinks by the time I am ready to replace my monitor (which won't be soon, as I love the thing). I am really curious about how the next-gen graphics cards will improve in this area! Can't wait for those reviews! -

airborne11b Quoting billcat479's comment above:

This is the kind of uninformed, ignorant post that irritates me: Interweb wannabe docs who don't know how the human body works. Allow me to educate you.

People used to say that "reading a book in the dark" or sitting "too close" to a TV or PC monitor would "damage your eyes." In fact, we know today that eyesight degeneration has a few causes, none of which is normal strain.

The most common cause of eyesight degeneration is presbyopia (part of the normal aging process, in which the lens progressively loses its capacity to increase its power for near vision).

Also, UV rays degenerate tissue, so it's recommended that you wear UV-protective sunglasses when outside in daylight. UV rays can cause your eyesight to weaken over time.

Also, refractive error (common in people of ALL ages): a condition that occurs either because the eye is too short or too long, or because the cornea or lens does not have the required refractive power. There are three types of refractive error: myopia (nearsightedness), hypermetropia (farsightedness), and astigmatism, a condition where the eye does not focus light evenly, usually because the cornea is more curved in one direction than the other. Astigmatism may occur on its own or together with myopia or hypermetropia.

The very worst negative health effect 3D Vision can have is EXACTLY the same as reading too much, i.e., a short-term headache. To cure the headache, TAKE A BREAK.

Also, as you build tolerance to 3D Vision (as I did after just a week or two of consistent use), the headaches go away. Nvidia's 3D settings also let you adjust the depth of the effect: less depth means less strain, and you can progressively increase it as you build tolerance to 3D monitors.

In closing, don't post nonsense about what you don't understand. It makes you look stupid. -

oneseraph I normally don't chime in; however, in this case I just have to say, "Who cares?" Every time I see an article about 3D graphics and 3D display tech, there are always excuses for the technology not being ready. Did I misunderstand, or did this article point out that neither Nvidia nor AMD has a ready-for-prime-time product? So why are they releasing this crap to us consumers and calling it a feature, when the truth is that it's tech that still belongs in the lab? Come on, if you bought a blender that would blend strawberries but would not work if you put bananas in it, you would not only return the item, but in all likelihood there would be a class action suit against the manufacturer. In short, for right now, 3D is just not ready. The marketing departments of both Nvidia and AMD are being more than a little dishonest about their respective 3D features. There are lots of good reasons to buy a new graphics card. Just don't be fooled into thinking that 3D is one of them.