Overclocking GeForce GTX 1080 Ti To 2.1 GHz Using Water

Our launch coverage showed that Nvidia's GeForce GTX 1080 Ti Founders Edition is held back by its stock cooler. So, we replaced the vapor chamber and centrifugal fan with a custom water-cooling loop. Was it worth it? Absolutely!

Overclocking, Efficiency, Heat, And Conclusion

Overclocking and Stability

Surprisingly, our water-cooled Founders Edition card made it all the way to 2.1 GHz stably. It's possible that Nvidia sent us a golden sample, so there's no way to know if our result is representative of the cards available to everyone. GPU Boost takes the clock rates down by one notch as soon as the sensor hits 45°C. Consequently, we configured our fans and pump to keep the card under 45°C at all times.

To start, we looked at how high the GeForce GTX 1080 Ti Founders Edition would jump at its stock settings. Then, we slowly increased its base frequency, eventually leveling off at a GPU Boost clock rate of 2.1 GHz at 30°C. Ideally, we'd hit the same frequency at 40-44°C using more conservative settings, so long as nothing else throttles the GPU.

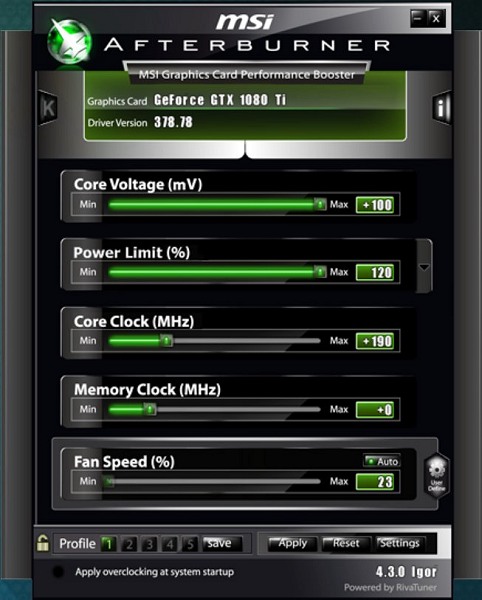

We're fortunate enough to have a version of MSI’s Afterburner utility unlocked especially for us. If you want access to similar settings before a new version of Afterburner is released, you can manually add your 1080 Ti to the third-party database using the VDDC_Generic_Detection entry under the VEN_10DE&DEV_1B06&SUBSYS_120F10DE&REV_?? key. A quick search online should turn up plenty of in-depth instructions on how to do this.

Despite setting the power limit to 120%, it didn't take long for us to run into Nvidia’s built-in limiter. Overclocking is pointless if it doesn't result in a stable and consistent experience. That's why we use an hour-long combination of Metro: Last Light at 4K, The Witcher 3 at QHD, and Ghost Recon Wildlands at Ultra HD. We further pushed stability by running rendering tasks using 3ds Max, Nvidia Iray, LuxRender, and FurMark’s one-hour stress test.

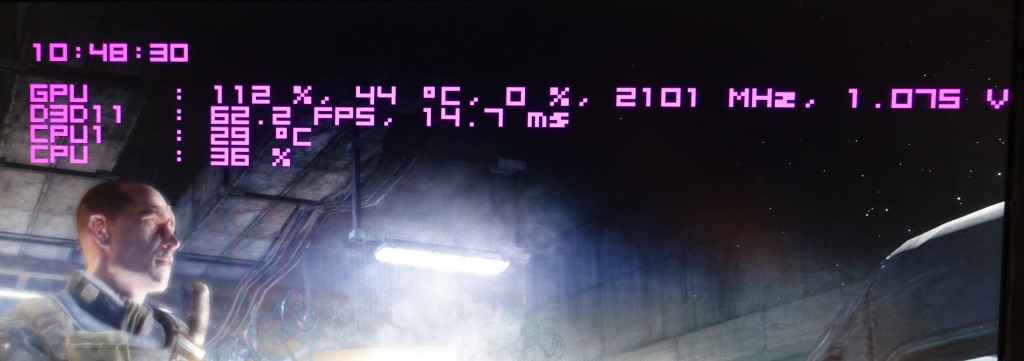

A quick glance at the power target display reveals 112%. This poses some questions that need to be answered, since neither the GPU frequency nor the power consumption was stable. Usually, the overlays applied by diagnostic utilities (like the one pictured above) are only refreshed once per second. That's too long of an interval; as we know, a lot can happen inside of one second.

Realized Clock Frequency and Power Consumption

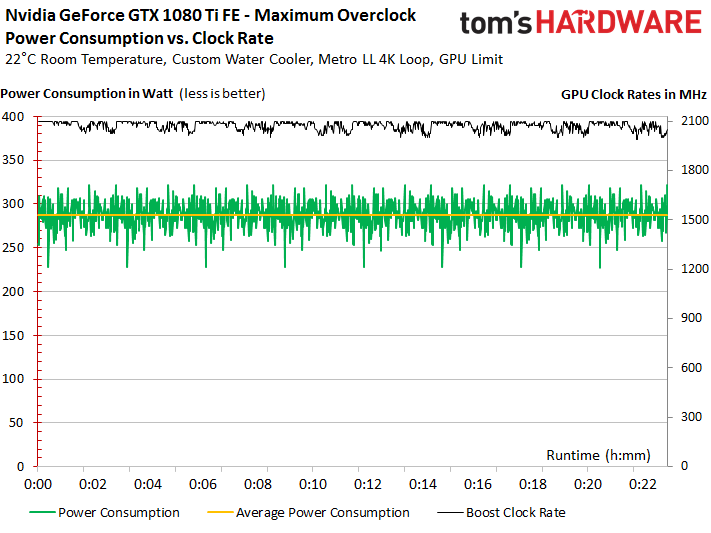

As an example, we're using Metro: Last Light at Ultra HD because it presents a fairly consistent load profile. The Witcher 3 gave us some peaks that exceeded the ones we saw under Metro: Last Light, but its average load during longer gaming sessions was lower. Furthermore, Metro's scripted benchmark heats up graphics cards more than most other sequences.

Our GeForce GTX 1080 Ti does hit 2101 MHz during the Metro: Last Light and The Witcher 3 tests. However, GPU Boost clock rates fluctuate depending on load, dropping to 2088 MHz fairly often. The frequency even falls to 2 GHz on occasion, only to quickly recover.


The recorded power limit curves tell us that our 120% setting is just a maximum. It's never actually hit. There are brief excursions up to 119% that last for fractions of a second, but the limit immediately retreats back to 110-112%. The average hovers right around 114 to 115%.
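To put those percentages into absolute terms, here is a minimal sketch that converts a power target into watts. It assumes the power target is relative to a rated board power of 250 W; that figure is not stated in this article and is treated here as an assumption, and the function name is purely illustrative.

```python
# Assumption: 100% power target corresponds to a rated board power of 250 W.
RATED_BOARD_POWER_W = 250.0


def power_target_to_watts(percent, rated_w=RATED_BOARD_POWER_W):
    """Convert a power target percentage into an absolute wattage."""
    return rated_w * percent / 100.0


# Percentages taken from the power limit curves described above.
readings = [
    ("configured maximum", 120),
    ("brief excursions", 119),
    ("average (low end)", 114),
    ("average (high end)", 115),
]

for label, pct in readings:
    print(f"{label:>20}: {pct}% -> {power_target_to_watts(pct):.1f} W")
```

Under that assumption, the 120% setting works out to a 300 W ceiling, and the 114 to 115% average lands in the mid-280 W range.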

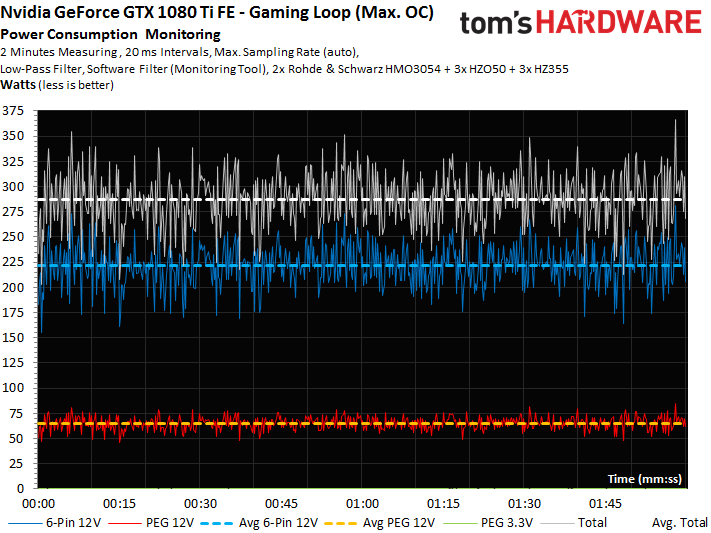

This result corresponds with our average power consumption result, which lands at 287W across all eight test runs. We used an intelligent low-pass filter and a special form of resampling to keep the amount of data generated by our measurements manageable (as many as 6000 values per second would have quickly overwhelmed Excel).

A higher-resolution graph of one test loop shows just how frantically GPU Boost tries to intervene and limit power consumption. The simplified summary in the graph gives us an average of 287.1W, while the more detailed one below, without resampling, yields 286.9W. The two results are practically identical; the 0.2W gap is less than 0.1% of the reading, which indicates that our filtering and resampling don't distort the average.
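The reason a reduced data set can still report nearly the same average is easy to illustrate. The sketch below uses plain block averaging, which is only one simple way to low-pass filter and downsample in a single step; it is not the filter used for the measurements above, and the trace is synthetic stand-in data rather than a real recording.

```python
import random
from statistics import mean


def block_average(samples, factor):
    """Low-pass and downsample in one step: replace each run of `factor`
    consecutive samples with its mean. A trailing partial block is dropped."""
    usable = len(samples) - len(samples) % factor
    return [mean(samples[i:i + factor]) for i in range(0, usable, factor)]


# Synthetic stand-in for one second of readings at 6000 samples per second.
# These are NOT measured values; mean and spread are chosen for illustration.
random.seed(1)
raw = [287.0 + random.gauss(0.0, 15.0) for _ in range(6000)]

reduced = block_average(raw, 60)  # 6000 values -> 100 values

print("samples:", len(raw), "->", len(reduced))
print("mean   :", round(mean(raw), 3), "vs", round(mean(reduced), 3))
print("peak   :", round(max(raw), 1), "vs", round(max(reduced), 1))
```

With equal-sized blocks the overall mean is preserved, while short peaks are flattened. That is why the summary graph is suited to averages and the unfiltered one to spotting brief spikes.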

Some plausible peaks do break through the 300W barrier, but the average gaming power consumption lands just shy of 290W. Sustained averages in excess of 300W on a card that hasn't been modified are quite simply false. Nvidia's protections make sure of this.


Efficiency at Different Clock Frequencies

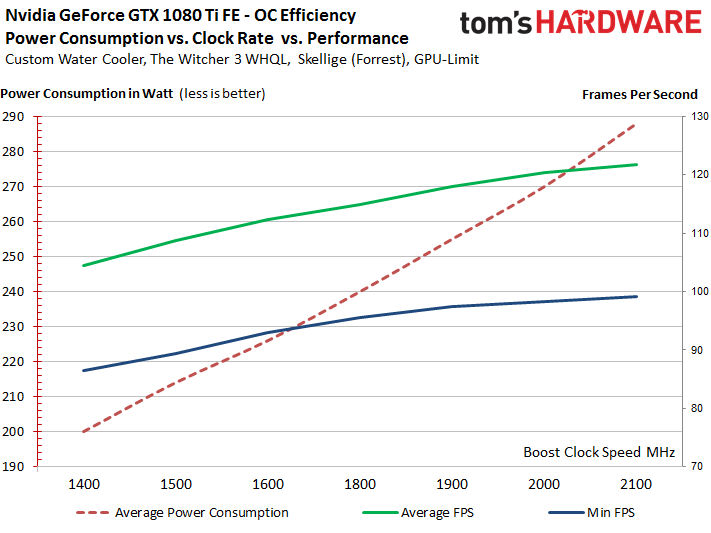

Let’s take a look at The Witcher 3. Several readers have asked us to use a game other than Metro: Last Light, so here we go. After a bit of experimentation, we found that a higher frame rate at 2560x1440 produces a more consistent load than hammering the card at 3840x2160 in this title, so that's what we're using.

To evaluate efficiency, we increased the clock rate in 100 MHz increments along the achievable GPU Boost frequency curve. Since GPUs fall into different quality categories, we didn’t manually undervolt, but rather set a plausible base frequency and then lowered the power target until we hit a sweet spot for consistent operation.

We completed five runs for each frequency step, and discarded the best and worst outcomes. Averaging data from the remaining three runs gave us a final result for that clock rate. Naturally, the power consumption curve gets steeper with increasing frequency, whereas the curves for the average and minimum FPS show the opposite pattern. Interestingly, this trend is more pronounced for the minimum than for the average frame rate.
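The run-averaging scheme described above (five runs, drop the best and worst, average the remaining three) can be sketched as follows. The function name and the sample values are hypothetical; the FPS numbers are placeholders, not results from our testing.

```python
def trimmed_result(runs):
    """Drop the single lowest and single highest value, then average the rest."""
    if len(runs) < 3:
        raise ValueError("need at least three runs to drop best and worst")
    kept = sorted(runs)[1:-1]
    return sum(kept) / len(kept)


# Hypothetical average-FPS values from five runs at one clock step.
example_runs = [118.2, 121.4, 120.9, 119.8, 125.0]

print(f"result for this step: {trimmed_result(example_runs):.1f} FPS")
```

Discarding both extremes keeps a single outlier run, for example one disturbed by a background task, from shifting the result for that frequency step.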

Impressive speed-ups are available almost all the way to GP102's maximum clock rate, even if performance doesn’t scale perfectly. In other words, more consistent GPU Boost frequencies would translate to even higher real-world FPS. Seeing that this would require a shunt mod (like the one we've seen applied to Nvidia's Titan X), gunning for even more performance isn't a trivial matter. Our German lab only has one GeForce GTX 1080 Ti right now, so we're not risking it at this point.

Temperatures and Infrared Measurements

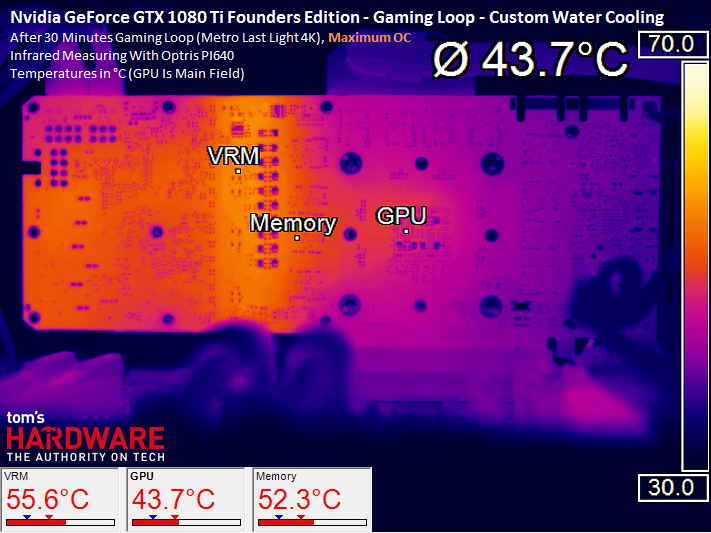

High GPU Boost frequencies like the ones we're seeing require GPU temperatures in the 40°C range. The only way to achieve that is by using an open-loop water-cooling setup. Our solution produced a delta of just 7°C between the temperature of the GPU and the water exiting its block. Consequently, we skipped Aquacomputer’s active backplate. The measurements were taken after a 30-minute warm-up phase in our closed bench table.

The results are great: even with Nvidia's GeForce GTX 1080 Ti Founders Edition running at its peak overclock, the GPU's temperature never exceeds 44°C.

| Peak Temperatures | Reading (Source) |
|---|---|
| GPU Diode | 44°C (GPU-Z) |
| GPU Package | 43.7°C (Infrared Camera) |
| Water (In) | 28.2°C (Sensor in Fitting) |
| Water (Out) | 36.7°C (Sensor in Fitting) |
| VRM Hot-Spot | 55.6°C (Infrared Camera) |
| Memory Block | 52.3°C (Infrared Camera, Area with Highest Temperature) |
| Ambient Temperature | 22.1°C (Infrared Camera, Reference Measurement Area) |
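The deltas implied by the peak temperature table can be checked with a few lines of arithmetic. This is just a sketch using the table's values; the variable and function names are illustrative, and it assumes the peak readings can be compared with one another directly.

```python
# Peak readings copied from the temperature table (degrees Celsius).
gpu_diode = 44.0
water_in = 28.2
water_out = 36.7
ambient = 22.1


def delta(warmer, cooler):
    """Temperature difference in kelvin, rounded to one decimal place."""
    return round(warmer - cooler, 1)


gpu_over_water_out = delta(gpu_diode, water_out)   # GPU vs. water leaving the block
water_rise = delta(water_out, water_in)            # warming of the water between sensors
water_in_over_ambient = delta(water_in, ambient)   # inlet water vs. room temperature

print("GPU over outlet water :", gpu_over_water_out, "K")
print("Water rise (in to out):", water_rise, "K")
print("Inlet over ambient    :", water_in_over_ambient, "K")
```

The first figure is the roughly 7°C delta between the GPU and the water exiting its block mentioned earlier.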

Our thermal readings, which come from Optris' PI640 infrared camera, document the solid copper block's enormous cooling performance.

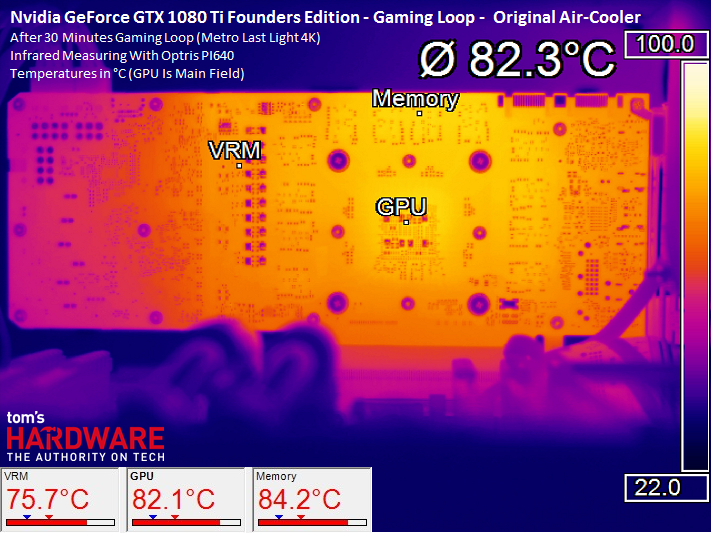

A comparison to the stock air cooler is just sad.

Summary and Conclusion

The GeForce GTX 1080 Ti Founders Edition, just like Nvidia's Titan X (Pascal) before it, is a monster of a graphics card when you augment its cooling. On air, the board doesn't really make it past 1.9 GHz consistently, and even that requires you pay a price in extremely high noise levels. Enthusiasts spending $700 for high-end graphics might want to consider investing in water-cooling as well to realize the card's full potential.

A water block does cost upwards of $100 per card, but the rest of the loop can last for many years if it’s maintained well. Complete kits are available for as little as $300 (without the graphics card block), which makes sense as a one-time expense. If you have the spare cash, we think you'll enjoy the results. Otherwise you'll probably want to buy a good set of non-vented headphones instead.


sparkyman215 Mm I love watercooling. I may have missed it, but what are the fps differences with oc?

FormatC Please take a look at page Two. You find the different FPS, 100 MHz wise (Efficiency) ;)

AlexanderVFD You mention using "digital sensors". Now, I'm only an industrial maintenance technician that maintains a 7000T drop forge, so I could be mistaken-- Last I checked, switches are digital (on/off), and sensors provide an analogue signal, via 4-20mA, 2-8VDC, or 0-10VDC. Can you please elaborate on this digital sensor?

Rookie_MIB, quoting AlexanderVFD: "You mention using "digital sensors". Now, I'm only an industrial maintenance technician that maintains a 7000T drop forge, so I could be mistaken-- Last I checked, switches are digital (on/off), and sensors provide an analogue signal, via 4-20mA, 2-8VDC, or 0-10VDC. Can you please elaborate on this digital sensor?"

You're confusing digital with binary. A switch is binary (on/off). Digital means (in this case) - not analog. It might use somewhat analog material properties in determining the temperature - as in reading a thermistor built into the electronic IC circuit - but it returns a full digital result in degrees C (normally) and has all the electronics necessary to report a result.

An analog temp sensor equivalent would be the actual thermistor itself. But, you would need the other parts necessary to determine the temp. Something to measure the resistance of the thermistor, then the conversion tables for the materials to convert the resistance readings into actual temperatures.

A digital circuit isolates the end user from all that and just returns a result calculated from its digital circuitry.

Sayasith I'm looking to get the same watercooling build. Can anyone tell me what type of tubing that is? Those look way more simple than regular tubing.

Terry Perry And in another year or so all new cards will be out beating this. Look how many Ti's there have been in 6 years. This card is still not ready for full 4K. VERY CLOSE

Stubbies Sparkyman215, I think I get what you are aiming for, and it is a rather interesting oversight that they didn't graph a stock one next to these results. Thankfully, since the 1080 Ti FE was also tested on The Witcher 3 at the same resolution, we can pull numbers from that one for a rough comparison.

The look at the FE on The Witcher 3 produced 96 FPS min and 114.8 FPS average and that *should* be somewhere in the stated 1480-1580 GPU boost range quoted. That does match up decently at the close to 1600 level so adding in those extra 500 MHz looks like it hit 99 FPS min and 121 or 122 Average. Roughly 3 extra FPS on the min and 6 or 7 FPS for the average.

Achaios Don't think it is worth it to spend $699 and an additional $399 for a Custom Water Loop, not to say anything about work required to set this up properly (abt 2 days), on a card that will be completely outclassed in every possible way by the next NVIDIA architecture to come around Dec 2017.

JackNaylorPE, quoting FormatC: "Please take a look at page Two. You find the different FPS, 100 MHz wise (Efficiency)"

I interpreted his message as asking for a comparison of the results with the stock card (w/ air cooler) versus the same card with water block ... perhaps because I was also looking for the same thing (w/o having to flip back and forth between the two articles).

Quoting Achaios: "Don't think it is worth it to spend $699 and an additional $399 for a Custom Water Loop, not to say anything about work required to set this up properly (abt 2 days), on a card that will be completely outclassed in every possible way by the next NVIDIA architecture to come around Dec 2017."

That's not really a fair comparison.

1. One analogy I find appropriate is when folks say, well, it's not worth it to spend $120 instead of $100, a 20% increase in cost, for faster RAM when average gains in gaming fps are 2-3% (actual 0% say for Metro - 11% for F1). However, that's a false equivalency because the entire system is going faster, not just the RAM, so the increase in cost on a $1,500 system for boosting the RAM speed is just 1.33%.

2. That water block is not just bringing a performance increase, it's drastically reducing noise, temperatures and improving aesthetics. It's also bringing home more stable CPU OCs.

3. The loop was already there. If desired, you can get a prefilled block or even a card with prefilled water block installed, if time is a concern reducing your additional install time to seconds.

https://www.youtube.com/watch?v=Sq4iNbCD844

4. Two days ? Unless you are doing a build w/ custom bends and rigid acrylic tubing, it won't take two days. Adding a water block to a Swiftech AIO for example:

Attach block = 20 minutes.

Install 2nd radiator = 10 minutes

Install (4) G-1/4 compression fittings = 5 minutes

Cut and install tubing = 15 minutes

Drain, mix and add coolant = 20 minutes

Then you are going to let it run for an hour, come back and bleed it a bit ... rinse and repeat at 4 hours and 24 hours, but if it takes more than 90 - 120 minutes total of actual time investment, that would be unusual.

Installing the Swiftech at the same time would add 20 minutes tops.

5. Yes the next architecture will top it ... but so will the next one so that kinda kills the relevance. Typically, folks keep GFX cards anywhere from 2 - 6 years except for the minority of "must have latest new thing" folks. You already built the box, you already bought the Ti, so the real additional cost is the $125 block, $80 2nd radiator (if 1st one wasn't big enough), the (4) fittings ($50 for fancy smancy ones), coolant ($12) and tubing ($8).

6. Personally, with no performance increase, I'd have no issue forking over $300 for the silence alone.

But ultimately, purchase decisions are a cost / benefit analysis where the value gained from the purchase is weighed against the value spent. As each individual's goal's are different, and each person's disposable income / financial obligations are different, a sound decision for one is a ridiculous decision for another. As far as water cooling a GFX card goes, the only decision I would argue against is doing it on a FE card as the value gained from the investment is going to be somewhat gimped by the limitations of the reference PCB itself.

Of course, Boost 3 is already nerfing performance on the 10xx series, and as yet no one has cracked the BIOS and developed a BIOS Editor which might allow one to push back these limits. So it remains to be seen just how much this will be a factor in how far WC can take things. In the last two generations, nVidia has nerfed what AIB partners can accomplish (both legally and physically) and rendered the extreme cards (Lightning, Matrix, Classified) somewhat irrelevant given their substantial additional cost. But last generation, the "what we could do part" increased a bit w/ each jump towards the top tier. We'll have to wait till the AIB cards are tested to see what's going to be possible. It's great to see just what could be accomplished with the new Ti, but still, I wouldn't invest $250 or more in adding GFX water cooling w/o spending the extra $20 on an AIB card.