GeForce GTX 480 And 470: From Fermi And GF100 To Actual Cards!

Power Consumption And Heat

Here’s where things get dicey. I knew going into this story that the GeForce GTX 480 and 470 would be hot, power-hungry boards—Nvidia told me as much back in January. But measuring the extent of those values is an unscientific practice, at best.

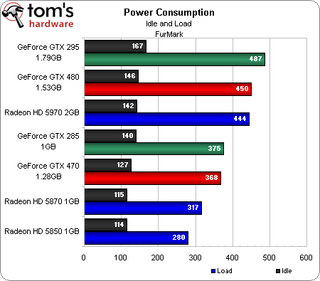

FurMark is generally frowned upon as an unrealistic representation of peak power (a power virus, as AMD’s Dave Baumann puts it). However, it does serve as a theoretical worst-case scenario. Indeed, while the GeForce GTX 480 doesn’t use as much power as the dual-GPU GeForce GTX 295, it does out-consume the dual-GPU Radeon HD 5970 (no small feat, at 450W system power draw). Also, the GeForce GTX 470 uses significantly more power than the Radeon HD 5870.

Notably missing from the chart is ATI’s Radeon HD 4870 X2, which we know from past reviews to chew up about as much power as Nvidia’s GeForce GTX 295. However, neither of the X2s in the lab seems to respond to FurMark at all anymore, running at a constant 13 frames per second or so and drawing only slightly more than idle power. Maybe ATI “cured” that virus with forced lower frequencies in FurMark (our other cards were doing 40 or 50 FPS). But that doesn’t prevent the X2 from jumping into the 400+ watt system power range in actual games.

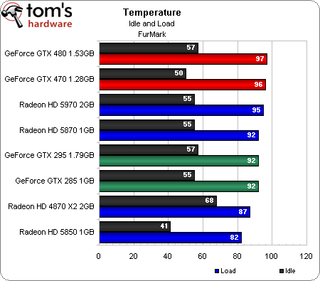

Heat jumps in FurMark as well, though it’s worth noting that none of these boards encountered heat-related stability issues. Keeping up with the thermals does mean the GeForce GTX 480’s fan ramps up fairly aggressively and does generate quite a bit of noise. However, we were unable to replicate that behavior in any real-world gaming load.

We’re actually a bit surprised about the idle power numbers as they were measured. AMD impressed us with the Radeon HD 5870’s 27W idle board rating, achieved in part by clocking its GPU down to 157 MHz and its GDDR5 memory to 300 MHz. Nvidia goes even further, dropping clocks to 50 MHz core, 67 MHz memory (270 MT/s data rate), and 100 MHz for the shaders. Nvidia doesn’t cite its idle board power, but an educated guess would still put the GeForce GTX 480 around 60W at those frequencies.

Just how do the GeForce GTX 480 and 470 stack up under a real-world load? Great question.

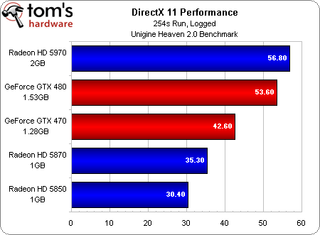

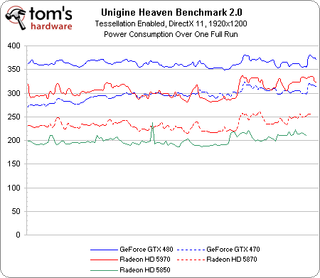

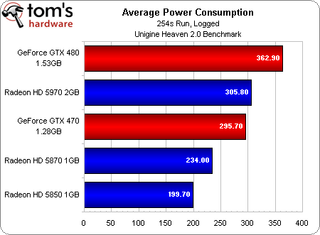

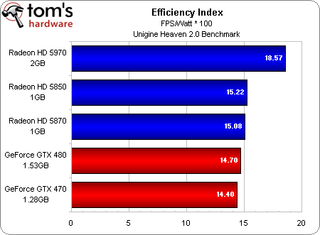

I ran all of the DirectX 11 cards in our story through the Unigine Heaven v2.0 benchmark, measuring average performance in frames per second. During the run, I had each configuration hooked up to a logger that polled system power consumption every two seconds, yielding an average over the run. Dividing average performance by average power use gives us an index (frames per second per watt) that should give efficiency advocates something to think about.
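For readers who want to reproduce the index from their own logs, here is a minimal sketch of the arithmetic described above. The function name and the sample readings are hypothetical and are not our measured data; the sketch assumes the logger produces a plain list of system power readings in watts taken at a fixed interval.

```python
from statistics import mean


def efficiency_index(avg_fps, power_samples_watts):
    """Return average frames per second per watt of average system power.

    avg_fps: average frame rate reported by the benchmark run.
    power_samples_watts: system power readings logged during the same run
    (for example, one reading every two seconds).
    """
    if not power_samples_watts:
        raise ValueError("need at least one power sample")
    avg_power = mean(power_samples_watts)
    if avg_power <= 0:
        raise ValueError("average power must be positive")
    return avg_fps / avg_power


# Hypothetical readings for illustration only, not results from this review.
samples = [300.0, 320.0, 340.0]
index = efficiency_index(32.0, samples)
print(f"{index:.3f} FPS per watt")
```

A higher index means more performance for each watt drawn at the wall. Because the readings are whole-system power, the index is only comparable between cards tested on the same platform.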


Despite its aggressive power use, the Radeon HD 5970’s performance is enough to make it the most efficient board in the lineup, followed by the Radeon HD 5850 and Radeon HD 5870. Nvidia’s GeForce GTX 480 and GTX 470 bring up the rear. What I'm wondering is this: Nvidia rates the GTX 480 with a 250W maximum board power. AMD cites 294W for the 5970. Why do we keep seeing Nvidia's card using more system power?

Admittedly, these results are easily skewed; we can drive them one way or the other by hand-picking certain settings to cater to one architecture’s strengths or another's. For example, turning off tessellation would give the Radeons a sizable advantage, since they take a more substantial hit when the feature is enabled. In fact, a couple of days before the launch, Unigine released v2.0 of the Heaven test, which puts even more emphasis on tessellation than the first revision we used to generate our first batch of results. Thus, our numbers represent a best-case scenario for Nvidia; easing up on the tessellation load shifts the efficiency index even further in favor of the Radeon HD 5800-series cards, and we have charts demonstrating that, too.


restatement3dofted I have been waiting for this review since freaking January. Tom's Hardware, I love you.

With official reviews available, the GTX 480 certainly doesn't seem like the rampaging ATI-killer they boasted it would be, especially six months after ATI started rolling out 5xxx cards. Now I suppose I'll just cross my fingers that this causes prices for the 5xxx cards to shift a bit (a guy can dream, can't he?), and wait to see what ATI rolls out next. Unless something drastic happens, I don't see myself choosing a GF100 card over an ATI alternative, at least not for this generation of GPUs.

tipoo Completely unimpressed. 6 months late. Too expensive. Power hog. Performance not particularly impressive. The Radeon 5k series has been delivering a near identical experience for 6 months now, at a lower price.

tpi2007 hmmm.. so this is a paper launch... six months after and they do a paper launch on a friday evening, after the stock exchange has closed.. smart move by Nvidia, that way people will cool off during the weekend, but I think their stocks won't perform that brilliantly on monday...

Godhatesusall high power consumption, high prices along with a (small, all things considered) performance edge over ATI is all there is. Are 100$ more for a gtx 480 really worth 5-10% increase in performance?

Though the big downside of fermi are temps. 97 is a very large(and totally unacceptable) temperature level. IMO fermi cards will start dying from thermal death some months from now.

I just wanted competition,so that prices would be lower and we(the consumers) could get more bang for our buck. Surely fermi doesnt help alot in that direction(a modest 30$ cut for 5870 and 5850 from ATI and fermi wont stand a chance). It seems AMD/ATI clearly won this round

Pei-chen Wow, it seems Nvidia actually went ahead and designed a DX11 card and found out how difficult it is to design. ATI/AMD just slapped a DX11 sticker on their DX10 card and sells it as DX11. In half a year HD 5000 will be so outdated that all it can play is DX10 games.

outlw6669 Kinda impressed :/

The minimum frame rates are quite nice at least...

Lets talk again when a version with the full 512 SP is released.
