GeForce GTX 480 And 470: From Fermi And GF100 To Actual Cards!

Dual-Card Scaling: GeForce GTX 480 In SLI

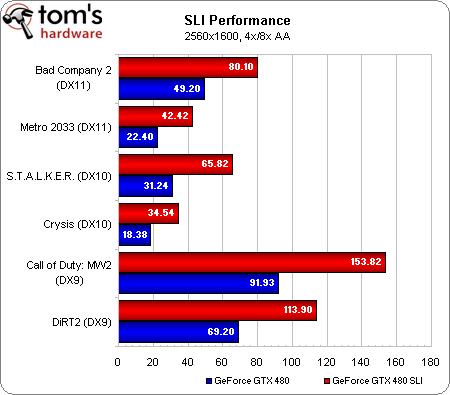

We were able to get our hands on a second GeForce GTX 480 late in the game for some multi-GPU testing. And although we weren’t able to run the configuration through the complete game suite, we did run Metro 2033, S.T.A.L.K.E.R.: CoP, Crysis, Call of Duty: Modern Warfare 2, and DiRT 2 at 2560x1600 with 4x MSAA and Battlefield: Bad Company 2 at the same resolution with 8x MSAA.

Naturally, slinging a pair of high-end graphics cards makes the most sense for gamers running high-resolution screens. Almost across the board (Crysis is debatable), 2560x1600 with anti-aliasing enabled becomes a very real option. And once 3D Vision Surround becomes testable, you'll almost certainly want the fastest cards you can get your hands on in order to achieve playable frame rates at, say, 5760x1200 in stereoscopic mode. Last-generation cards might be supported, but we're almost positive they'll be too slow for the quality settings to which enthusiasts have grown accustomed.

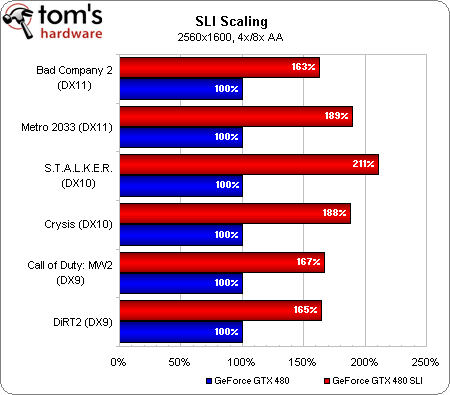

The second chart maps out scaling from one card to two. In the worst-case scenario, SLI yields a 63% speed-up, which is not bad for a brand-new game like Bad Company 2. In S.T.A.L.K.E.R.: CoP, performance more than doubles. Yes, we re-ran those numbers; they’re consistent. Despite the horsepower wielded by the latest generation of GPUs, it’s still easy to slam into graphics bottlenecks. A pair of GeForce GTX 480s in SLI shows that, at the highest resolutions, a second card can come close to doubling performance. These are the fastest frame rates you’re likely to see for a while, since power constraints make a dual-GPU card unlikely anytime soon.
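A percentage speed-up like the 63% figure above is typically computed as the two-card frame rate divided by the single-card frame rate, minus one. The minimal Python sketch below assumes average frame rates as inputs; the function name and the sample frame rates are hypothetical, chosen only to illustrate the arithmetic, and are not measurements from our charts.

```python
def sli_scaling_percent(single_fps: float, dual_fps: float) -> float:
    """Percentage speed-up from adding a second card.

    0% means no gain; 100% means the second card exactly doubled
    the single-card frame rate.
    """
    if single_fps <= 0:
        raise ValueError("single-card frame rate must be positive")
    return (dual_fps / single_fps - 1.0) * 100.0


# Hypothetical frame rates for illustration only (not from this review).
examples = {
    "worst case": (40.0, 65.2),        # works out to roughly a 63% speed-up
    "more than double": (30.0, 61.5),  # scaling above 100%
}

for name, (one_card, two_cards) in examples.items():
    print(f"{name}: {sli_scaling_percent(one_card, two_cards):.1f}% faster with SLI")
```

A result above 100% is what "more than double" means in this notation; perfect two-way scaling would land at exactly 100%.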

Do bear in mind that there are some system-builder considerations here. The Gigabyte X58A-UD5 motherboard used for testing left two expansion slots between our cards. On boards with three slots available, Nvidia recommends using the first and third, leaving room between the cards. In short, installing GTX 480s back to back is a bad idea. A beefy power supply is a given, though Nvidia hasn’t yet recommended a specific wattage for a dual-GPU setup.


restatement3dofted I have been waiting for this review since freaking January. Tom's Hardware, I love you.

With official reviews available, the GTX 480 certainly doesn't seem like the rampaging ATI-killer they boasted it would be, especially six months after ATI started rolling out 5xxx cards. Now I suppose I'll just cross my fingers that this causes prices for the 5xxx cards to shift a bit (a guy can dream, can't he?), and wait to see what ATI rolls out next. Unless something drastic happens, I don't see myself choosing a GF100 card over an ATI alternative, at least not for this generation of GPUs.

tipoo Completely unimpressed. 6 months late. Too expensive. Power hog. Performance not particularly impressive. The Radeon 5k series has been delivering a near identical experience for 6 months now, at a lower price.


tpi2007 Hmmm... so this is a paper launch. Six months later, they do a paper launch on a Friday evening, after the stock exchange has closed. Smart move by Nvidia: that way people will cool off over the weekend, but I think their stock won't perform that brilliantly on Monday...

Godhatesusall High power consumption and high prices, along with a (small, all things considered) performance edge over ATI, is all there is. Is $100 more for a GTX 480 really worth a 5-10% increase in performance?

The big downside of Fermi, though, is temperature. 97°C is a very high (and totally unacceptable) temperature. IMO, Fermi cards will start dying from thermal death some months from now.

I just wanted competition, so that prices would be lower and we (the consumers) could get more bang for our buck. Fermi surely doesn't help a lot in that direction (a modest $30 cut for the 5870 and 5850 from ATI and Fermi won't stand a chance). It seems AMD/ATI clearly won this round.

Pei-chen Wow, it seems Nvidia actually went ahead and designed a DX11 card and found out how difficult it is to design. ATI/AMD just slapped a DX11 sticker on their DX10 card and sold it as DX11. In half a year, the HD 5000 series will be so outdated that all it can play is DX10 games.

outlw6669 Kinda impressed :/

The minimum frame rates are quite nice at least...

Let's talk again when a version with the full 512 SPs is released.