GeForce GTX 480 And 470: From Fermi And GF100 To Actual Cards!

Tessellation And Anti-Aliasing

Two of the most notable areas Nvidia chose to zero in on with its Fermi architecture were geometry and anti-aliasing performance. Each is affected in a different way, but the concept is similar for both: enable more realism and improve image quality.

Tessellation: Standardized In DirectX 11

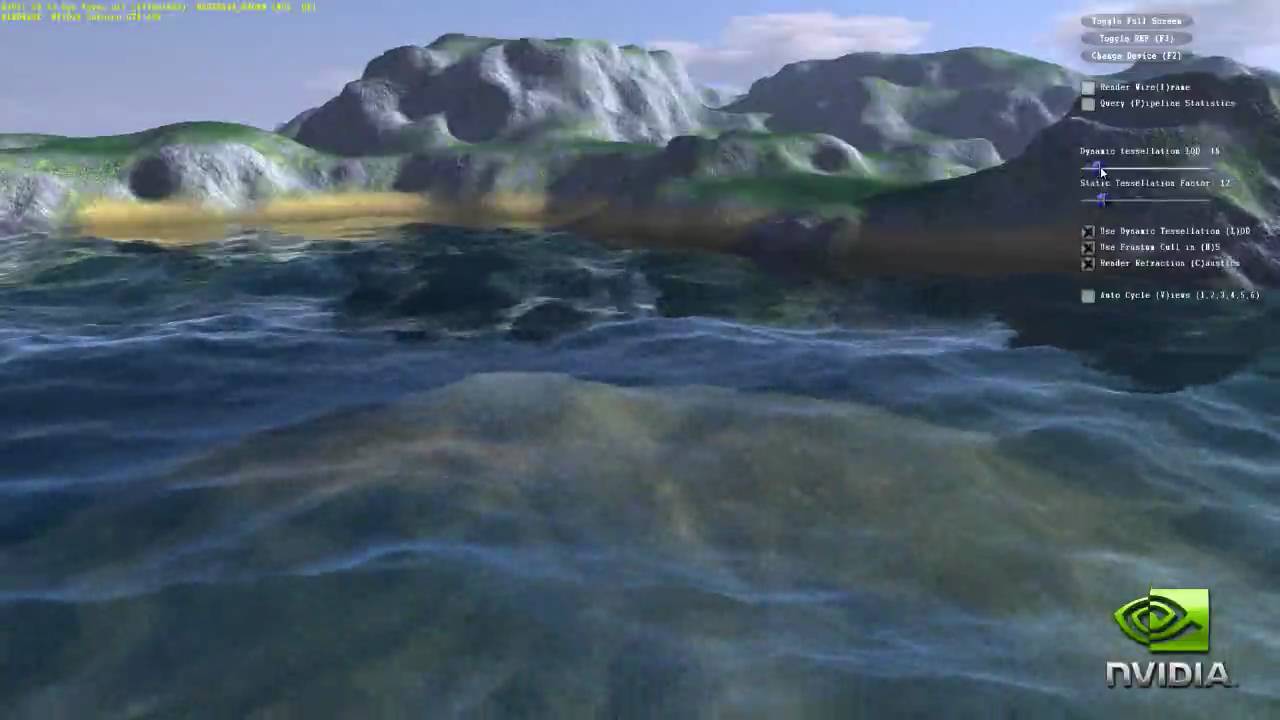

Tessellation is nothing new, but it’s suddenly the buzzword du jour now that it’s a feature of DirectX 11 (and not just limited to one vendor’s implementation). In the six months or so since Microsoft’s latest API made its debut, we’ve seen a couple of games with tessellation support, but they’ve frankly not lived up to what we’d been amped up for by AMD. We’ve since seen some impressive demos from Nvidia, and the promise of what well-deployed tessellation can do certainly looks good.

Each of the GeForce GTX 480’s 15 Streaming Multiprocessors (SMs) and each of the GTX 470’s 14 SMs includes its own PolyMorph engine, which works with the rest of the SM to fetch vertices, tessellate, perform viewport transformation, attribute setup, and output to memory. From our earlier look at the GF100 GPU:

In between each stage, the SM handles vertex/hull shading and domain/geometry shading. From each PolyMorph engine, primitives are sent to the raster engines, each capable of eight pixels per clock (totaling 32 pixels per clock across the chip).

Now, why was it necessary to get more granular about the way geometry was being handled when a monolithic fixed-function front-end has worked so well in the past? After all, hasn’t ATI enabled tessellation units in something like six generations of its GPUs (as far back as TruForm in 2001)? Ah, yes. But how many games actually took advantage of tessellation between then and now? That’s the point.

Ever since the days of Nvidia’s GeForce 2 architecture, we’ve been hearing about programmable pixel and then vertex shading. Now we’re getting some very impressive shaders able to add tremendous detail to the latest DirectX 9 and 10 games (Nvidia claims a 150x increase in shading performance from the GeForce FX 5800-series to GT200). But I know we’ve all seen some of the terri-bad geometry that totally ruins the guise of realism in our favorite games. Purportedly, the next frontier in augmenting graphics realism involves cranking the dial on geometry.


…in order to facilitate the performance needed to make tessellation feasible, Nvidia had to shift away from that monolithic front-end and toward a more parallel design. Hence, the four raster engines and the 15 or 14 PolyMorph engines, depending on the card. The company naturally has its own demos that show how much more efficient GF100 is versus the Cypress architecture, which employs the “bottlenecked” monolithic design. However, we’ll want to compare the performance of a title like Aliens Vs. Predator from Rebellion Developments with tessellation on and off to evaluate a more balanced application. Up front, though, Nvidia claims that GF100 enables up to 8x better performance in geometry-bound environments than GT200.
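To make the layout concrete, here is a minimal sketch of the counts quoted above. The class and field names are our own invention for illustration, not anything from Nvidia; only the engine counts and the eight-pixels-per-clock figure come from the text.

```python
from dataclasses import dataclass

# Per-raster-engine throughput quoted in the text above.
PIXELS_PER_CLOCK_PER_RASTER_ENGINE = 8


@dataclass(frozen=True)
class Gf100Config:
    """Hypothetical container for the front-end counts discussed here."""
    name: str
    polymorph_engines: int  # one per SM
    raster_engines: int

    def peak_raster_pixels_per_clock(self) -> int:
        # Peak figure only: every raster engine busy on every clock.
        return self.raster_engines * PIXELS_PER_CLOCK_PER_RASTER_ENGINE


gtx_480 = Gf100Config("GeForce GTX 480", polymorph_engines=15, raster_engines=4)
gtx_470 = Gf100Config("GeForce GTX 470", polymorph_engines=14, raster_engines=4)

for card in (gtx_480, gtx_470):
    print(card.name, card.polymorph_engines, card.peak_raster_pixels_per_clock())
```

Both cards land on the same 32 pixels per clock of raster throughput. What differs between them on the geometry side is the PolyMorph engine count, which follows the number of enabled SMs.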

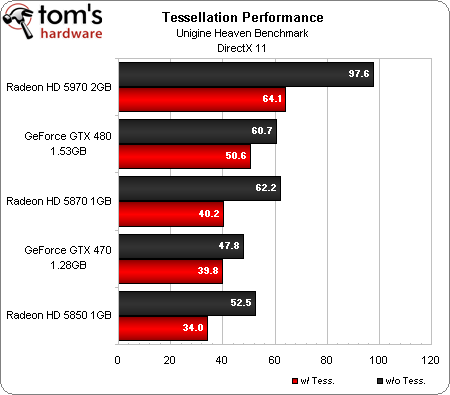

It turns out that AvP isn’t the best game for measuring tessellation performance. The technology is only applied to alien models, and it’s hardly a “geometry-bound environment.” Thus, we ran the Unigine Heaven 1.0 benchmark on all of our DirectX 11-capable cards to better gauge the efficiency of each architecture when tessellation is applied:

The way these cards fall is fairly expected, but it’s interesting to note the drop-off in performance when turning tessellation on. The Radeon HD 5970, 5870, and 5850 retain 66%, 65%, and 65% of their original performance, respectively. The GeForce GTX 480 and 470 both retain 83% of theirs.
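For anyone who wants to run the same comparison on their own results, the retention figure is simply the tessellation-on frame rate divided by the tessellation-off frame rate. The sketch below assumes that definition. The function name is ours, and the two frame rates in the last lines are made-up placeholders, not numbers from our charts. The percentages in the dictionary are the ones quoted above.

```python
def retained_fraction(fps_tess_on: float, fps_tess_off: float) -> float:
    """Share of the tessellation-off frame rate that survives with tessellation on."""
    if fps_tess_off <= 0:
        raise ValueError("baseline frame rate must be positive")
    return fps_tess_on / fps_tess_off


# Retention percentages quoted in the text for Unigine Heaven 1.0.
retained_pct = {
    "Radeon HD 5970": 66,
    "Radeon HD 5870": 65,
    "Radeon HD 5850": 65,
    "GeForce GTX 480": 83,
    "GeForce GTX 470": 83,
}

for card, pct in retained_pct.items():
    print(f"{card}: retains {pct}%, gives up {100 - pct}%")

# Placeholder frame rates, purely to show the call (not measured data).
example = retained_fraction(fps_tess_on=41.5, fps_tess_off=50.0)
print(f"{example:.0%}")
```

Read the other way around, the Radeons give up 34% to 35% of their frame rate in this test, while the two GeForce cards give up 17%.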

It’s fair to say that the Fermi architecture certainly gives up less performance than AMD’s design. But remember this is one component of next-generation games, and it’s not yet being used as intensively as the latest demos might suggest. For that, we’ll need mid-range cards enabling the technology in a broader market. AMD has them, but Nvidia still doesn’t. As DirectX 11 hardware sees greater adoption, game developers will dedicate more attention to enhancing their titles with it.

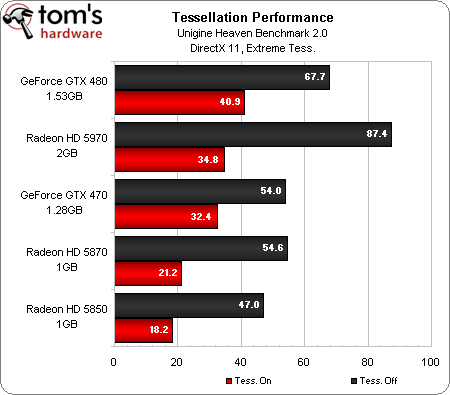

Update: If you want to get even more theoretical about the way GF100's distributed PolyMorph design handles tessellation scaling, check out the following chart:

This is the Unigine Heaven 2.0 benchmark cranked all the way up to Extreme. Though it's a fairly unrealistic implementation of tessellation (there are diminishing returns on the geometry created here), it conveys how well GF100 handles an increased tessellation load versus Cypress. GeForce GTX 480 is retaining 60% of its performance, while Radeon HD 5870 is down to 39% of its baseline frame rate.

Anti-Aliasing: Now with More Vitamin CSAA

The concept of burning through horsepower came up when we started talking about the Radeon HD 5800-series cards, and it’s relevant here, too. AMD and Nvidia both have all of this potential performance at their disposal. AMD is using features like Eyefinity to expand beyond 30” LCDs to render across larger surfaces, making the case for higher-end GPUs. Nvidia is using 3D Vision Surround to do the same thing, plus throwing more complex PhysX effects at the graphics processor.

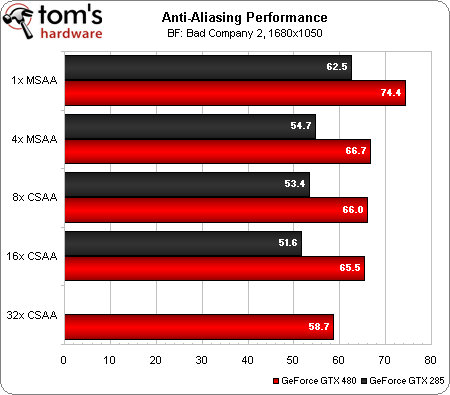

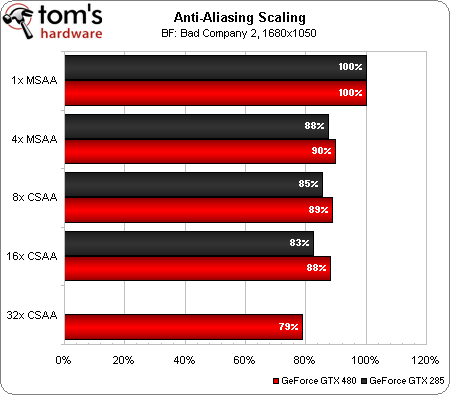

In the same vein, GeForce GTX 480 and 470 also support 32x coverage sampling anti-aliasing, which decouples coverage from color/z/stencil data, and thus reduces the bandwidth and storage costs of applying AA versus MSAA. Using a little Battlefield: Bad Company 2 at 1680x1050, we tested Nvidia’s various anti-aliasing techniques.
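A rough back-of-the-envelope sketch shows why decoupling coverage from color/z/stencil saves storage. Everything numeric here is an assumption for illustration: 4 bytes of color and 4 bytes of depth/stencil per stored sample, a hypothetical 4 bits per coverage-only sample, no frame buffer compression, and a 32x mode split into 8 stored samples plus 24 coverage-only samples. That split reflects our understanding of how Nvidia describes the mode; it is not a figure from this article.

```python
import math


def msaa_bytes_per_pixel(samples: int,
                         color_bytes: int = 4,
                         depth_stencil_bytes: int = 4) -> int:
    """MSAA: every sample carries its own color and z/stencil value."""
    return samples * (color_bytes + depth_stencil_bytes)


def csaa_bytes_per_pixel(stored_samples: int,
                         coverage_samples: int,
                         color_bytes: int = 4,
                         depth_stencil_bytes: int = 4,
                         coverage_bits_per_sample: int = 4) -> int:
    """CSAA-style layout: a few full samples plus cheap coverage-only samples.

    coverage_bits_per_sample is a hypothetical cost, not a documented value.
    """
    stored = stored_samples * (color_bytes + depth_stencil_bytes)
    coverage = math.ceil(coverage_samples * coverage_bits_per_sample / 8)
    return stored + coverage


print(msaa_bytes_per_pixel(8))       # 64
print(csaa_bytes_per_pixel(8, 24))   # 76
print(msaa_bytes_per_pixel(32))      # 256, a hypothetical full 32-sample MSAA
```

Under these assumptions, the extra coverage samples add only a small amount on top of 8x MSAA, far less than storing 32 complete samples would.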

Naturally, Nvidia’s GeForce GTX 285 is slower than the GTX 480, so look instead to the scaling chart, which shows the benefit of GF100’s enhanced ROP performance. As the AA is slowly cranked up, the GeForce GTX 480 loses less of its frame rate than the GTX 285. And at the 32x CSAA setting, it’s impressive to see the GeForce GTX 480 running at nearly 80% of its baseline speed sans AA. Clearly, if you have the performance to spare, turning on anti-aliasing is a good way to improve image quality here.


restatement3dofted I have been waiting for this review since freaking January. Tom's Hardware, I love you.

With official reviews available, the GTX 480 certainly doesn't seem like the rampaging ATI-killer they boasted it would be, especially six months after ATI started rolling out 5xxx cards. Now I suppose I'll just cross my fingers that this causes prices for the 5xxx cards to shift a bit (a guy can dream, can't he?), and wait to see what ATI rolls out next. Unless something drastic happens, I don't see myself choosing a GF100 card over an ATI alternative, at least not for this generation of GPUs.

-

tipoo Completely unimpressed. 6 months late. Too expensive. Power hog. Performance not particularly impressive. The Radeon 5k series has been delivering a near identical experience for 6 months now, at a lower price.

-

tpi2007 hmmm.. so this is a paper launch... six months after and they do a paper launch on a friday evening, after the stock exchange has closed.. smart move by Nvidia, that way people will cool off during the weekend, but I think their stocks won't perform that brilliantly on monday...

-

Godhatesusall high power consumption, high prices along with a (small, all things considered) performance edge over ATI is all there is. Are 100$ more for a gtx 480 really worth 5-10% increase in performance?

Though the big downside of fermi are temps. 97 is a very large(and totally unacceptable) temperature level. IMO fermi cards will start dying from thermal death some months from now.

I just wanted competition,so that prices would be lower and we(the consumers) could get more bang for our buck. Surely fermi doesnt help alot in that direction(a modest 30$ cut for 5870 and 5850 from ATI and fermi wont stand a chance). It seems AMD/ATI clearly won this round

-

Pei-chen Wow, it seems Nvidia actually went ahead and designed a DX11 card and found out how difficult it is to design. ATI/AMD just slapped a DX11 sticker on their DX10 card and sells it as DX11. In half a year HD 5000 will be so outdated that all it can play is DX10 games.

-

outlw6669 Kinda impressed :/

The minimum frame rates are quite nice at least...

Lets talk again when a version with the full 512 SP is released.