GeForce GTX 680 2 GB Review: Kepler Sends Tahiti On Vacation

Enthusiasts want to know about Nvidia's next-generation architecture so badly that they broke into our content management system and took the data to be used for today's launch. Now we can really answer how Kepler fares against AMD's GCN architecture.

GPU Boost: Graphics Afterburners

GPU Boost: When 1 GHz Isn’t Enough

Beyond its use of very fast GDDR5 memory, GeForce GTX 680 also enjoys a pretty significant graphics clock advantage compared to any of Nvidia’s prior designs: the GPU operates at 1006 MHz by default. But Nvidia says the engine can actually run faster—even within its 195 W thermal design power.

The problem with setting a higher frequency is that applications tax the processor in different ways, some more rigorously than others. Nvidia guarantees that GeForce GTX 680 will duck under its power ceiling at 1006 MHz, even in demanding real-world titles. Less power-hungry games end up leaving performance on the table, though. Enter GPU Boost.

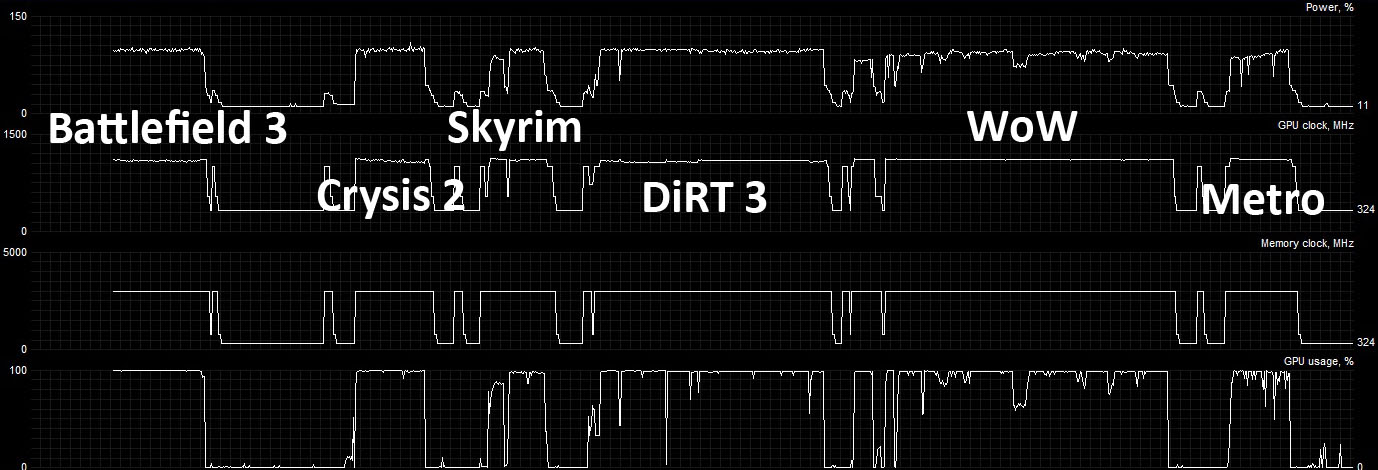

When headroom exists in the card’s thermal budget, GPU Boost dynamically increases the chip’s clock rate and voltage to improve performance. The mechanism is actually somewhat similar to Intel’s Turbo Boost technology in that a number of variables are monitored (power use, temperature, utilization, and more—Nvidia won’t divulge the full list) by on-board hardware and software. Data is fed through a software algorithm, which then alters the GPU’s clock within roughly 100 ms.
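Nvidia hasn't published the algorithm, so the following is only a toy sketch of the feedback loop described above, not the real thing. The 13 MHz step size, the single power input, and the function names are assumptions made for illustration; the base clock, TDP, and the 1110 MHz ceiling (the highest clock our sample reached) come from this review. Each power sample stands in for one evaluation interval of roughly 100 ms.

```python
BASE_CLOCK_MHZ = 1006   # guaranteed base clock
MAX_CLOCK_MHZ = 1110    # highest clock observed on our review board
POWER_LIMIT_W = 195     # thermal design power
STEP_MHZ = 13           # assumed step size, for illustration only


def next_clock(current_mhz: int, board_power_w: float) -> int:
    """Step the clock up when there is power headroom, down when there isn't."""
    if board_power_w < POWER_LIMIT_W:
        candidate = current_mhz + STEP_MHZ
    else:
        candidate = current_mhz - STEP_MHZ
    # Never drop below the base clock or exceed the board's ceiling.
    return max(BASE_CLOCK_MHZ, min(MAX_CLOCK_MHZ, candidate))


def simulate(power_samples_w, start_mhz: int = BASE_CLOCK_MHZ):
    """Return the clock chosen after each sample (one sample per ~100 ms)."""
    clocks = []
    clock = start_mhz
    for power_w in power_samples_w:
        clock = next_clock(clock, power_w)
        clocks.append(clock)
    return clocks


if __name__ == "__main__":
    # A light load with steady headroom climbs to the ceiling and stays there.
    print(simulate([150.0] * 10))
    # A load already at the power limit never leaves the base clock.
    print(simulate([200.0] * 3))
```

The real hardware also weighs temperature, utilization, and other inputs Nvidia won't name, and it raises voltage along with frequency, so treat this strictly as a mental model.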

As you might imagine, for every variable that GeForce GTX 680 measures, a handful of other factors come into play. Are you using the card in Antarctica or in Death Valley? Was your GK104 cut from the outside of the wafer or the center? The point is that no two GeForce GTX 680s will behave identically. And there’s no way to turn GPU Boost off, so we can’t isolate its effect. Consequently, Nvidia’s board partners are going to cite two figures on their packaging. First, you’ll get the base clock, equivalent to the classic core speed. Second, vendors will need to report a boost clock, representing the average frequency achieved under load in a game that doesn’t hit this card’s TDP. For GeForce GTX 680, that number should be 1058 MHz. Quite the mouthful, right? Let’s try illustrating.

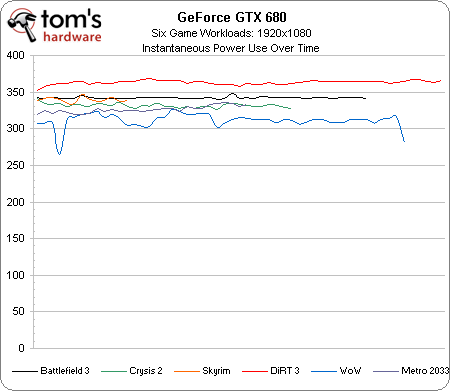

I ripped the chart above from an upcoming page where we measure performance per watt. What it shows is power consumption over time during six different game benchmarks, run at 1920x1080. And as you can see, system power use ranges from about 260 to somewhere around 370 W. On average, there might be a 50 W difference between a largely processor-bound game like World of Warcraft and a shader-intensive title like DiRT 3.

Given these power numbers, you might expect that the most power-hungry titles hit the 680’s ceiling, while others get nice, fat clock rate infusions.


In all actuality, though, even a game like DiRT 3 gets sped up. The first chart from the top shows board power as a percentage of the maximum, and it’s pretty clear that World of Warcraft bounces around quite a bit. Given plenty of observable headroom, it gets our board’s maximum clock rate of 1110 MHz. Metro 2033 is another fairly low-power game, which also gets pegged at 1110 MHz. Crysis 2, DiRT 3, and even Battlefield 3 receive less consistent boosts. Still, though, they run a bit faster, too.
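To put those clocks in perspective, here is a quick bit of arithmetic. The three frequencies come from this page; the sample trace is made up purely to show how an "average frequency under load" figure like the advertised boost clock could be derived from logged clocks, and is not data from our testing.

```python
from statistics import mean

BASE_CLOCK_MHZ = 1006     # base clock
RATED_BOOST_MHZ = 1058    # boost clock vendors will advertise
OBSERVED_MAX_MHZ = 1110   # highest clock our sample reached


def uplift_percent(clock_mhz: float, base_mhz: float = BASE_CLOCK_MHZ) -> float:
    """Percentage increase of clock_mhz over the base clock."""
    return (clock_mhz / base_mhz - 1.0) * 100.0


# Hypothetical logged clocks from one benchmark run (illustration only).
sample_trace_mhz = [1110, 1097, 1084, 1097, 1110, 1071]
average_mhz = mean(sample_trace_mhz)

print(f"Rated boost:  +{uplift_percent(RATED_BOOST_MHZ):.1f}%")   # +5.2%
print(f"Observed max: +{uplift_percent(OBSERVED_MAX_MHZ):.1f}%")  # +10.3%
print(f"Trace average: {average_mhz:.0f} MHz")
```

So the advertised boost clock works out to roughly a 5% bump over base, while the best case on our sample is closer to 10%.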

How does GPU Boost differ from AMD’s PowerTune technology, which we first covered in our Radeon HD 6970 and 6950 review? In essence, PowerTune adds granularity between a GPU’s highest p-state and the intermediate state it’d hit if TDP were exceeded. From my Cayman coverage:

“…rather than designing the Radeon HD 6000s with worst-case applications in mind, AMD is able to dial in a higher core clock at the factory (880 MHz in the case of the 6970) and rely on PowerTune to modulate performance down in the applications that would have previously forced the company to ship at, say, 750 MHz.”

In other words, a PowerTune-equipped graphics card might ship at a faster frequency than a board designed with a worst-case thermal event in mind—and that’s good. But it does not accelerate to exploit available power headroom. AMD considers this a good thing, touting consistent performance as a virtue.
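The contrast between the two approaches can be sketched with a deliberately crude model. It assumes board power scales linearly with clock rate (it doesn't, since voltage changes too), and both function names and the wattage inputs in the usage lines are hypothetical. Only the 880 MHz, 1006 MHz, 1110 MHz, and 195 W figures come from this review and our Cayman coverage.

```python
def powertune_clock(power_at_shipped_w: float, tdp_w: float,
                    shipped_mhz: float = 880.0) -> float:
    """PowerTune-style: start at the shipped clock, only ever scale down."""
    if power_at_shipped_w <= tdp_w:
        return shipped_mhz                          # headroom goes unused
    return shipped_mhz * tdp_w / power_at_shipped_w  # throttle to fit the budget


def gpu_boost_clock(power_at_base_w: float, tdp_w: float = 195.0,
                    base_mhz: float = 1006.0, max_mhz: float = 1110.0) -> float:
    """GPU Boost-style: start at the base clock, scale up into headroom."""
    if power_at_base_w >= tdp_w:
        return base_mhz                              # no headroom, stay at base
    return min(max_mhz, base_mhz * tdp_w / power_at_base_w)


if __name__ == "__main__":
    # Hypothetical light workload: PowerTune stays put, GPU Boost speeds up.
    print(powertune_clock(power_at_shipped_w=200.0, tdp_w=250.0))
    print(gpu_boost_clock(power_at_base_w=150.0))
    # Hypothetical heavy workload: PowerTune throttles, GPU Boost holds base.
    print(powertune_clock(power_at_shipped_w=300.0, tdp_w=250.0))
    print(gpu_boost_clock(power_at_base_w=195.0))
```

The asymmetry is the point: one design treats its rated clock as a ceiling, the other treats it as a floor.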

Everyone’s take is going to be different, of course. We’re not particularly enthused about the impact of variability in our numbers and inconsistent scaling from one game to another as GPU Boost tinkers around in the background. At the same time, we appreciate the ingenuity of a technology that dynamically strives to maximize performance at a given power budget when it can, and then throttles down when it’s not needed. I think that our concerns have to be outweighed by the real-world potential benefit to gamers in this case. We would like to have an option to turn GPU Boost off, though, if only to measure a baseline performance number in some sort of development mode.


outlw6669: Nice results, this is how the transition to 28nm should be. Now we just need prices to start dropping, although significant drops will probably not come until the GK110 is released :/

Scotty99: Its a midrange card, anyone who disagrees is plain wrong. Thats not to say its a bad card, what happened here is nvidia is so far ahead of AMD in tech that the mid range card purposed to fill the 560ti in the lineup actually competed with AMD's flagship. If you dont believe me that is fine, you will see in a couple months when the actual flagship comes out, the ones with the 384 bit interface.

Chainzsaw: Wow not too bad. Looks like the 680 is actually cheaper than the 7970 right now, about 50$, and generally beats the 7970, but obviously not at everything. Good going Nvidia...

rantoc: 2x of thoose ordered and will be delivered tomorrow, will be a nice geeky weekend for sure =)