Nvidia GeForce GTX Titan 6 GB: GK110 On A Gaming Card

After almost one year of speculation about a flagship gaming card based on something bigger and more complex than GK104, Nvidia is just about ready with its GeForce GTX Titan, based on GK110. Does this monster make sense, or is it simply too expensive?

Compute Performance And Striking A Balance

As I was testing Nvidia’s GeForce GTX Titan, but before the company was able to talk in depth about the card’s features, I noticed that double-precision performance was dismally low in diagnostic tools like SiSoftware Sandra. Although it should have been 1/3 the FP32 rate, my results looked more like the 1/24 expected from GeForce GTX 680.

It turns out that, in order to maximize the card’s clock rate and minimize its thermal output, Nvidia purposely forces GK110’s FP64 units to run at 1/8 of the chip’s clock rate by default. Multiply that by the 1:3 ratio of double- to single-precision CUDA cores (1/3 × 1/8 = 1/24), and the numbers I saw initially turn out to be correct.
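
As a quick check on that arithmetic, here is a minimal Python sketch that multiplies the two factors Nvidia described. The function name `effective_fp64_ratio` is hypothetical, and the 1/8 clock factor comes from Nvidia's explanation rather than from a measurement.

```python
from fractions import Fraction

def effective_fp64_ratio(unit_ratio: Fraction, clock_fraction: Fraction) -> Fraction:
    """Return FP64 throughput as a fraction of FP32 throughput (hypothetical helper).

    unit_ratio: FP64 units per FP32 CUDA core (1/3 on GK110).
    clock_fraction: fraction of the core clock the FP64 units run at
    (1/8 by default on GeForce GTX Titan, per Nvidia; 1 when full-speed
    double precision is enabled in the driver).
    """
    return unit_ratio * clock_fraction

default = effective_fp64_ratio(Fraction(1, 3), Fraction(1, 8))
unlocked = effective_fp64_ratio(Fraction(1, 3), Fraction(1, 1))
print(f"default:  {default}")   # 1/24, the same ratio as GeForce GTX 680
print(f"unlocked: {unlocked}")  # 1/3
```

Using `Fraction` keeps the ratios exact, so the default case comes out as exactly 1/24 rather than a rounded decimal.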

But Nvidia claims this card is the real deal, capable of 4.5 TFLOPS single- and 1.5 TFLOPS double-precision throughput. So, what gives?
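
Peak figures like these are typically derived as units × clock × 2, counting each fused multiply-add as two floating-point operations. The sketch below assumes GeForce GTX Titan's published configuration of 14 enabled SMX units (2,688 CUDA cores and 896 FP64 units) and an 837 MHz base clock. Those numbers do not appear on this page, and the results are theoretical peaks, not benchmark data.

```python
def peak_tflops(units: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    flops_per_clock=2 assumes each unit retires one fused multiply-add
    (counted as two floating-point operations) per clock.
    """
    return units * flops_per_clock * clock_mhz * 1e6 / 1e12

# Assumed GeForce GTX Titan configuration (not stated on this page):
FP32_CORES = 14 * 192   # 14 SMX units x 192 CUDA cores = 2,688
FP64_UNITS = 14 * 64    # 14 SMX units x 64 FP64 units = 896 (the 1:3 ratio)
BASE_CLOCK_MHZ = 837    # assumed base clock; real clocks vary with load

fp32 = peak_tflops(FP32_CORES, BASE_CLOCK_MHZ)
fp64 = peak_tflops(FP64_UNITS, BASE_CLOCK_MHZ)
print(f"FP32: {fp32:.2f} TFLOPS")  # 4.50
print(f"FP64: {fp64:.2f} TFLOPS")  # 1.50
```

The FP64 figure only applies with full-speed double precision enabled. In the default mode, the effective 1/24 ratio cuts it to a fraction of that.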

It’s improbable that Tesla customers are going to cheap out on gaming cards that lack ECC memory protection, the bundled GPU management/monitoring software, support for GPUDirect, or support for Hyper-Q (Update, 3/5/2013: Nvidia just let us know that Titan supports Dynamic Parallelism and Hyper-Q for CUDA streams, and does not support ECC, the RDMA feature of GPU Direct, or Hyper-Q for MPI connections). However, developers can still get their hands on Titan cards to further promulgate GPU-accelerated apps (without spending close to eight grand on a Tesla K20X), so Nvidia does want to enable GK110’s full compute potential.

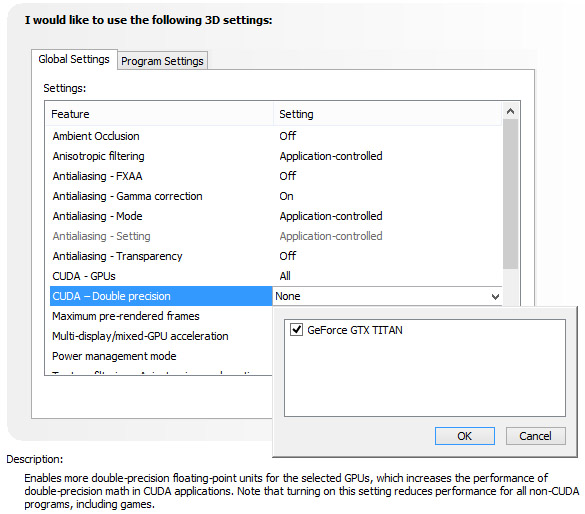

Tapping into the full-speed FP64 CUDA cores requires opening the driver control panel, clicking the Manage 3D Settings link, scrolling down to the CUDA – Double precision line item, and selecting your GeForce GTX Titan card. This effectively disables GPU Boost, so you’d only want to toggle it on if you specifically needed to spin up the FP64 cores.

We can confirm the option unlocks GK110’s compute potential, but we cannot yet share our benchmark results. So, you’ll need to look out for those in a couple of days.

jaquith Hmm...$1K yeah there will be lines. I'm sure it's sweet.

Better idea, lower all of the prices on the current GTX 600 series by 20%+ and I'd be a happy camper! ;)

Crysis 3 broke my SLI GTX 560s and I need new GPUs...

Trull Dat price... I don't know what they were thinking, tbh.

AMD really has a chance now to come strong in 1 month. We'll see.

tlg The high price OBVIOUSLY is related to low yields, if they could get thousands of those on the market at once then they would price it near the GTX 680. This is more like a "nVidia collector's edition" model. Also gives nVidia the chance to claim "fastest single gpu on the planet" for some time.

tlg AMD already said in (a leaked?) teleconference that they will not respond to the TITAN with any card. It's not worth the small market at £1000...

wavebossa "Twelve 2 Gb packages on the front of the card and 12 on the back add up to 6 GB of GDDR5 memory. The .33 ns Samsung parts are rated for up to 6,000 Mb/s, and Nvidia operates them at 1,502 MHz. On a 384-bit aggregate bus, that’s 288.4 GB/s of bandwidth."

12x2 + 12x2 = 6? ...

"That card bears a 300 W TDP and consequently requires two eight-pin power leads."

Shows a picture of a 6pin and an 8pin...

I haven't even gotten past the first page but mistakes like this bug me


wavebossa Nevermind, the 2nd mistake wasn't a mistake. That was my own fail reading.

ilysaml "The Titan isn’t worth $600 more than a Radeon HD 7970 GHz Edition. Two of AMD’s cards are going to be faster and cost less."

My understanding from this is that Titan is just 40-50% faster than the HD 7970 GHz Ed, which doesn't justify the extra $1K.

battlecrymoderngearsolid Can't it match GTX 670s in SLI? If yes, then I am sold on this card.

What? Electricity is not cheap in the Philippines.