
Intel Core i7-10700K Overclocking Settings, Power and Thermals

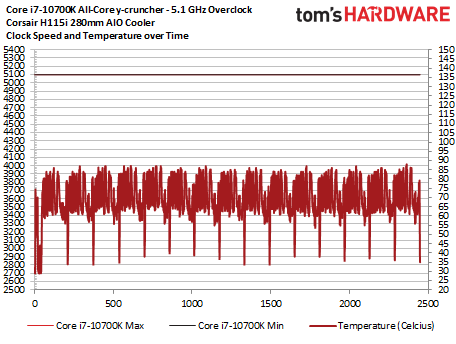

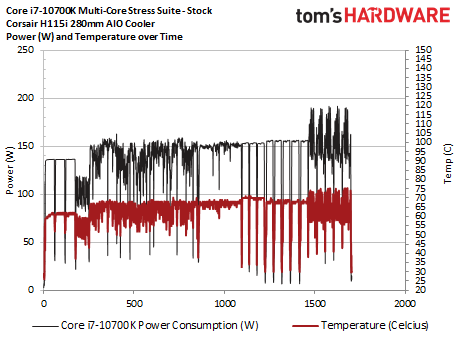

We dialed in a 5.1 GHz overclock with a 1.36V vCore and left load-line calibration (LLC) on auto, which gave us a steady 1.38V under heavy load. As we did with the Core i9-10900K, we set the ring bus multiplier to 48 to improve stability and performance, but that's an optional tweak. We also ran our overclocked configuration with memory at DDR4-3600 with 18-18-18-36 timings.
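For readers who want to sanity-check these settings, the short Python sketch below converts multipliers to clock speeds and a CAS timing to nanoseconds. It assumes the default 100 MHz base clock (BCLK), which would imply a core multiplier of 51 for 5.1 GHz; the review states only the ring multiplier, and the function names are ours, purely for illustration.

```python
# Assumption: the platform runs at the default 100 MHz base clock (BCLK).
BCLK_MHZ = 100.0


def clock_ghz(multiplier: int, bclk_mhz: float = BCLK_MHZ) -> float:
    """Clock speed in GHz for a given multiplier and base clock."""
    return multiplier * bclk_mhz / 1000.0


def cas_latency_ns(transfer_rate_mts: float, cas_cycles: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so one clock cycle lasts
    2000 / (transfer rate in MT/s) nanoseconds.
    """
    return cas_cycles * 2000.0 / transfer_rate_mts


core_ghz = clock_ghz(51)             # assumed core multiplier for 5.1 GHz
ring_ghz = clock_ghz(48)             # ring multiplier quoted in the text
cl_ns = cas_latency_ns(3600, 18)     # DDR4-3600 at CL18

print(f"core {core_ghz:.1f} GHz, ring {ring_ghz:.1f} GHz, CAS {cl_ns:.1f} ns")
```

Under those assumptions, the ring bus runs 300 MHz below the cores, and DDR4-3600 at CL18 works out to a 10 ns CAS latency.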

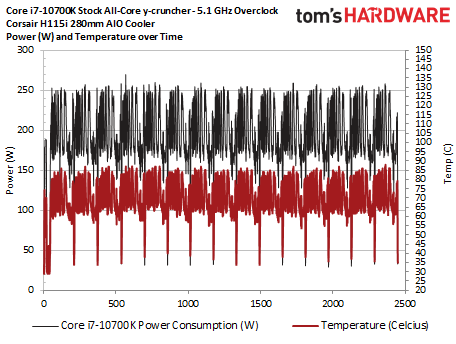

The Core i7-10700K proved to be fairly easy to cool with a Corsair H115i 280mm AIO cooler. The chip peaked at 85C during a string of y-cruncher multi-threaded tests, and power peaked at slightly over 250W. That's a new high for Core i7, but you could get away with bulky air coolers if you rein in your maximum frequency targets.

Silicon Lottery, a boutique vendor that pre-bins chips, reports that 20% of its samples reached 5.1 GHz on all cores, and more than half can hit 5.0 GHz. However, Silicon Lottery pairs those multi-core boosts with higher four-core boosts of 5.2 and 5.1 GHz, respectively. That means a bit more tuning would likely extract higher clocks with our sample, which we bought at retail.

There really isn't too much to talk about with our overclocking efforts: The Core i7-10700K is an easy overclocker, and we have little doubt that a bit more tuning could unlock more performance.

Intel Core i7-10700K Turbo Boost and Thermals

As usual for our stock-settings testing, we disabled Multi-Core Enhancement (MCE), a feature that amounts to automatic overclocking (Gigabyte calls it Enhanced Multi-Core Performance).

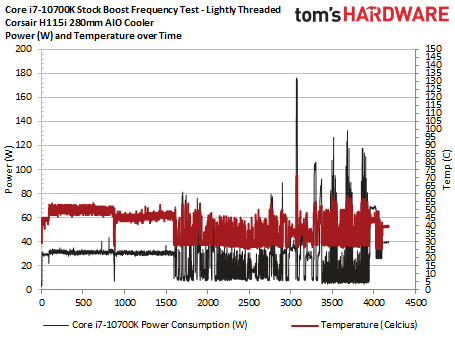

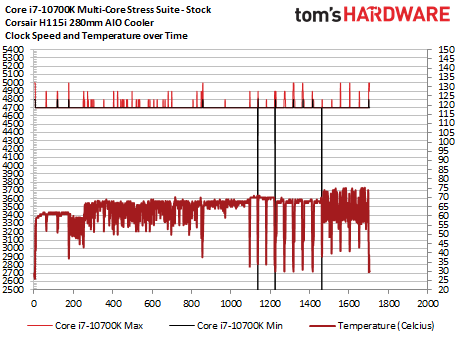

We ran our standard frequency test for lightly-threaded workloads (methodology here). Here we see frequent boost activity to 5.1 GHz during the PCMark 10 tests, and power consumption and thermals weren't really an issue, though we did record a spike to 178W.

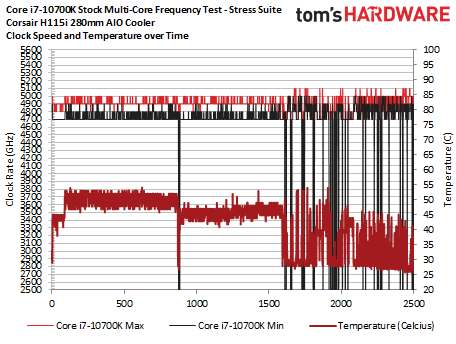

As you can see above, thermals were good with the Corsair H115i, albeit with the fans cranking at full speed, during a string of real-world stress tests that includes multiple instances of the Corona ray-tracing benchmark, x265 HandBrake rendering tests, POV-Ray multi-threaded benchmarks, Cinebench R20 runs, and finally five iterations of the AVX-intensive threaded y-cruncher.


The Intel Core i7-10700K ran at 4.7 GHz during heavy all-core loads, and temperatures spiked to 76C with the Corsair H115i cooler. Power consumption peaked at 190W, which is well under the PL2 rating of 229W.

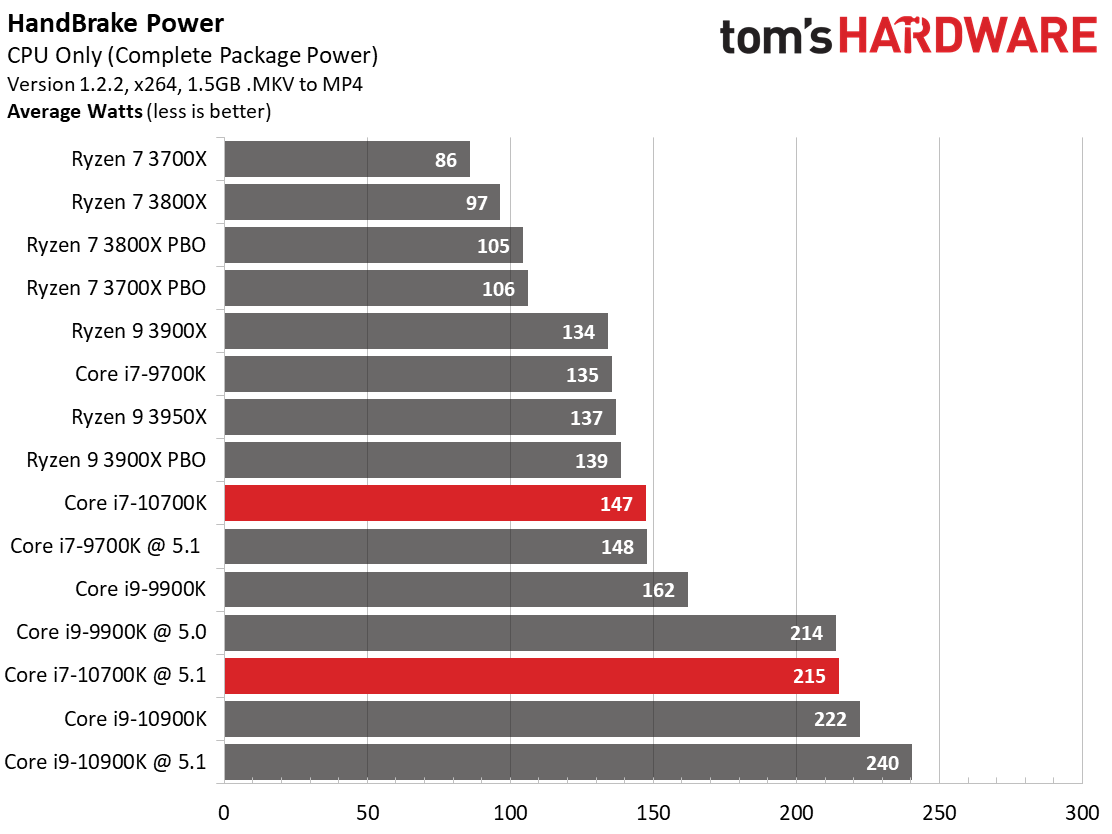

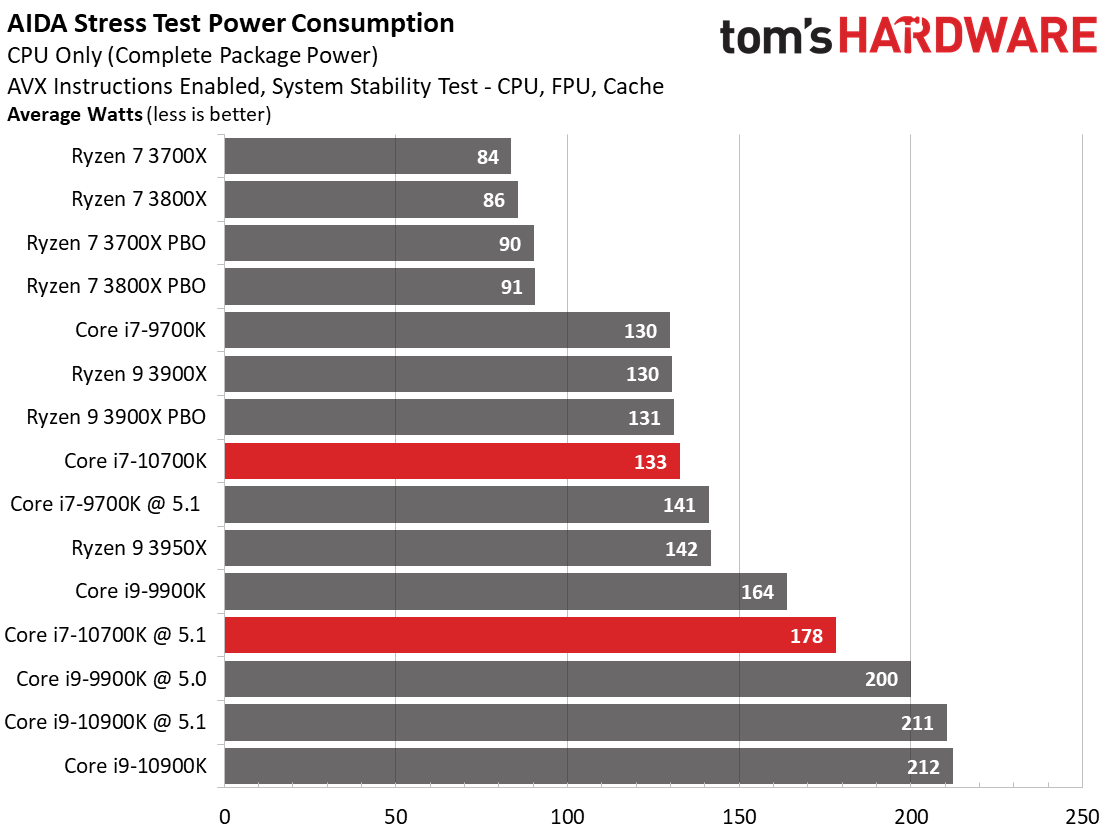

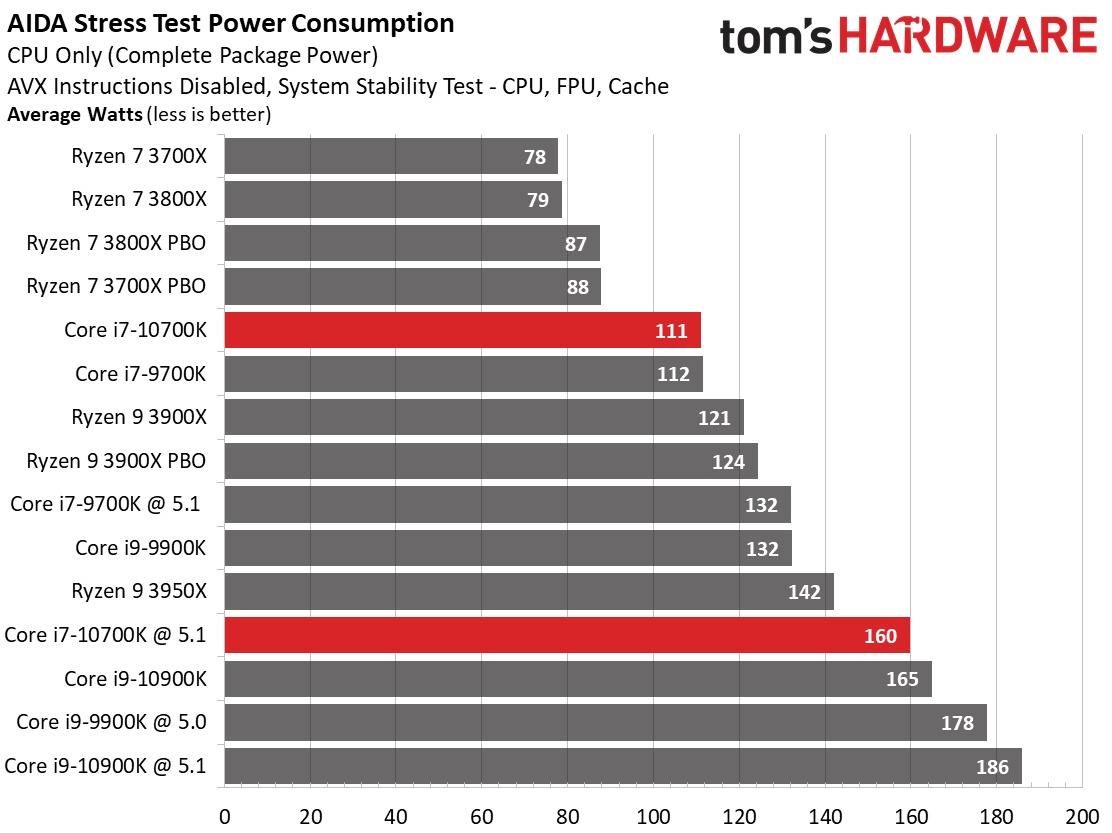

Intel Core i7-10700K Power Consumption and Efficiency

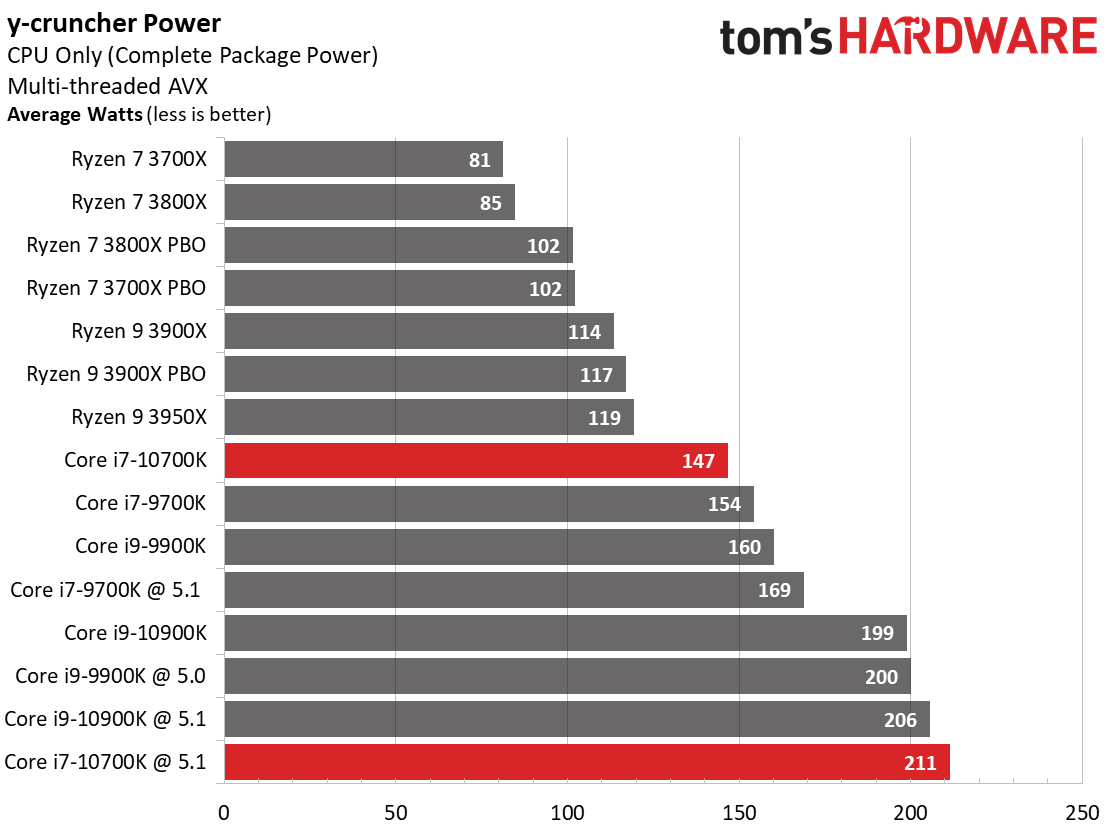

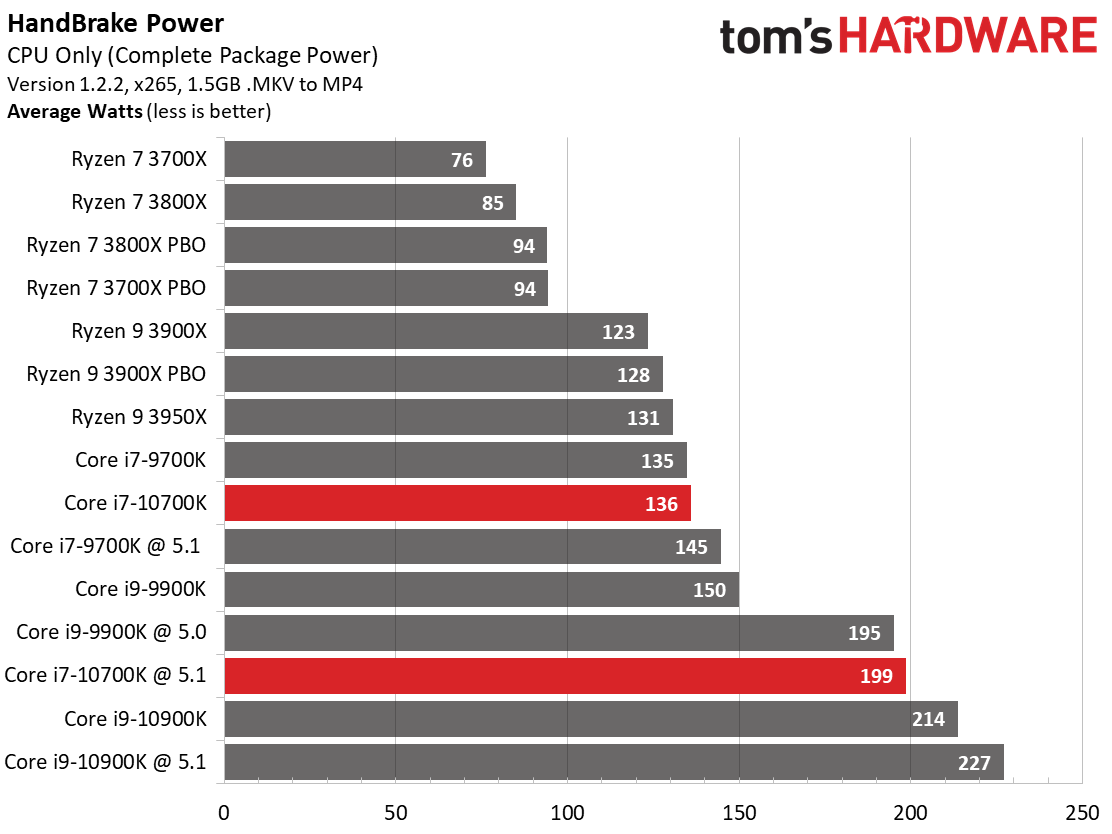

At stock settings, we logged an average of 147W of power consumption for the Core i7-10700K during the AVX-intensive multi-threaded y-cruncher workload, which is 9% less power than the similarly-equipped Core i9-9900K. That's impressive given that both chips run at 4.7 GHz on all cores during the test. As you'll see in the application testing later, the 10700K delivers faster performance, meaning that, compared to its spiritual predecessor, it yields a comparatively solid increase in power efficiency in SIMD workloads. The Core i7-10700K also draws less power than the 9700K, which has the same core count but doesn't come with Hyper-Threading.
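The 9% figure also lets us back out a rough number for the Core i9-9900K. The sketch below is simple arithmetic on the rounded values quoted above, so treat the results as approximate; the helper names are hypothetical, and the equal-performance case is a placeholder assumption rather than a measured result.

```python
def baseline_from_reduction(measured_w: float, reduction_pct: float) -> float:
    """Power of the baseline chip, given a measurement that is
    `reduction_pct` percent lower than that baseline."""
    return measured_w / (1.0 - reduction_pct / 100.0)


def perf_per_watt_ratio(relative_perf: float, relative_power: float) -> float:
    """Efficiency of the new chip relative to the old one.

    Both arguments are ratios of new to old (1.0 means unchanged).
    """
    return relative_perf / relative_power


# 147W average for the 10700K, quoted as 9% below the 9900K.
implied_9900k_w = baseline_from_reduction(147.0, 9.0)

# Placeholder assumption: identical performance (relative_perf = 1.0).
# Any measured speedup would scale this ratio up further.
efficiency_floor = perf_per_watt_ratio(1.0, 147.0 / implied_9900k_w)

print(f"implied 9900K average: {implied_9900k_w:.1f} W")
print(f"perf-per-watt ratio at equal performance: {efficiency_floor:.3f}")
```

Even at identical performance, a 9% power reduction corresponds to roughly a 10% gain in performance per watt, so any speedup on top of that widens the gap.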

The two chips also drew similar power when overclocked: the 10700K's fixed 5.1 GHz clock rate yielded a maximum of 211W under load, compared to 206W for the 9900K's 5.0 GHz overclock. As always, take power readings during overclocking with a grain of salt, as fine-tuning and motherboard firmware have a huge impact here.

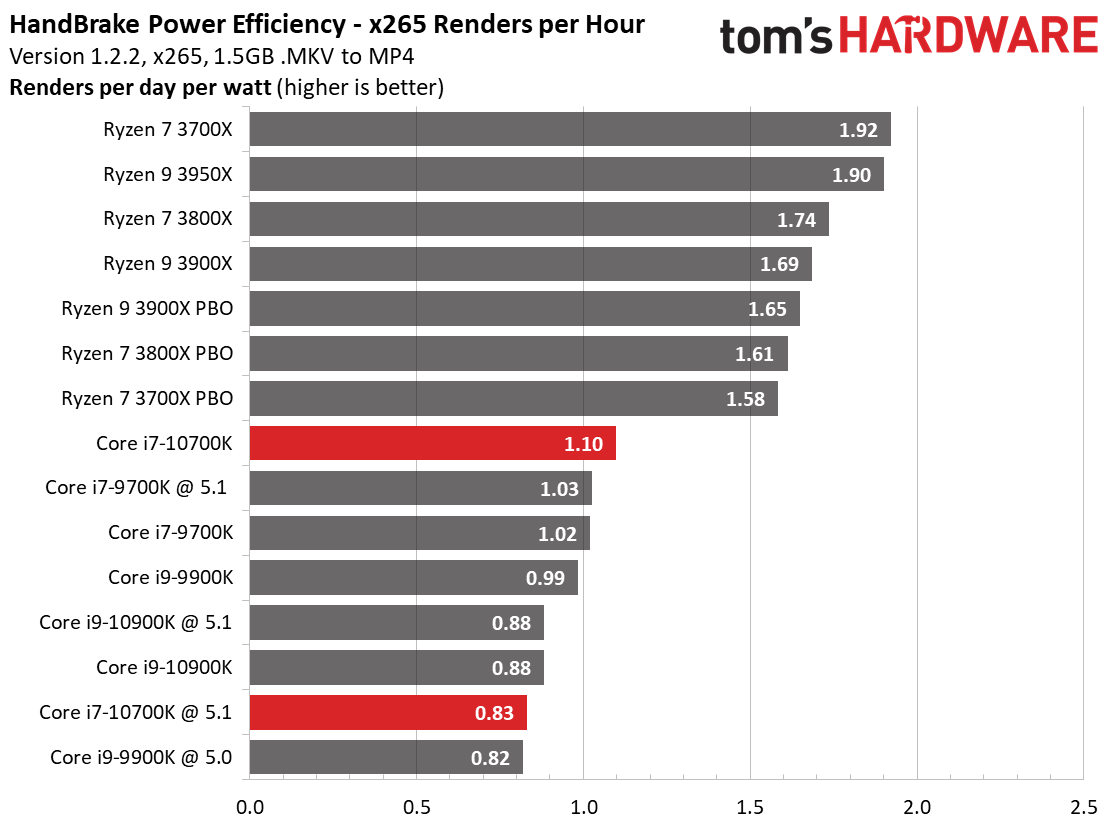

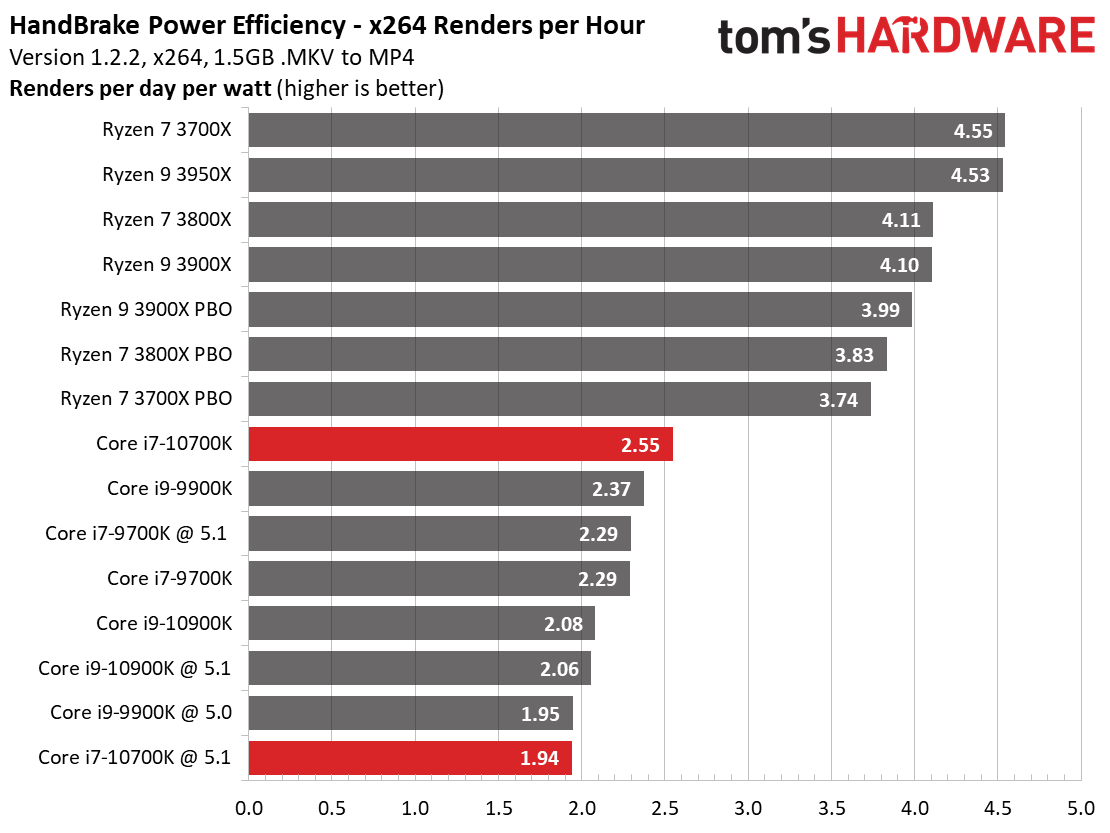

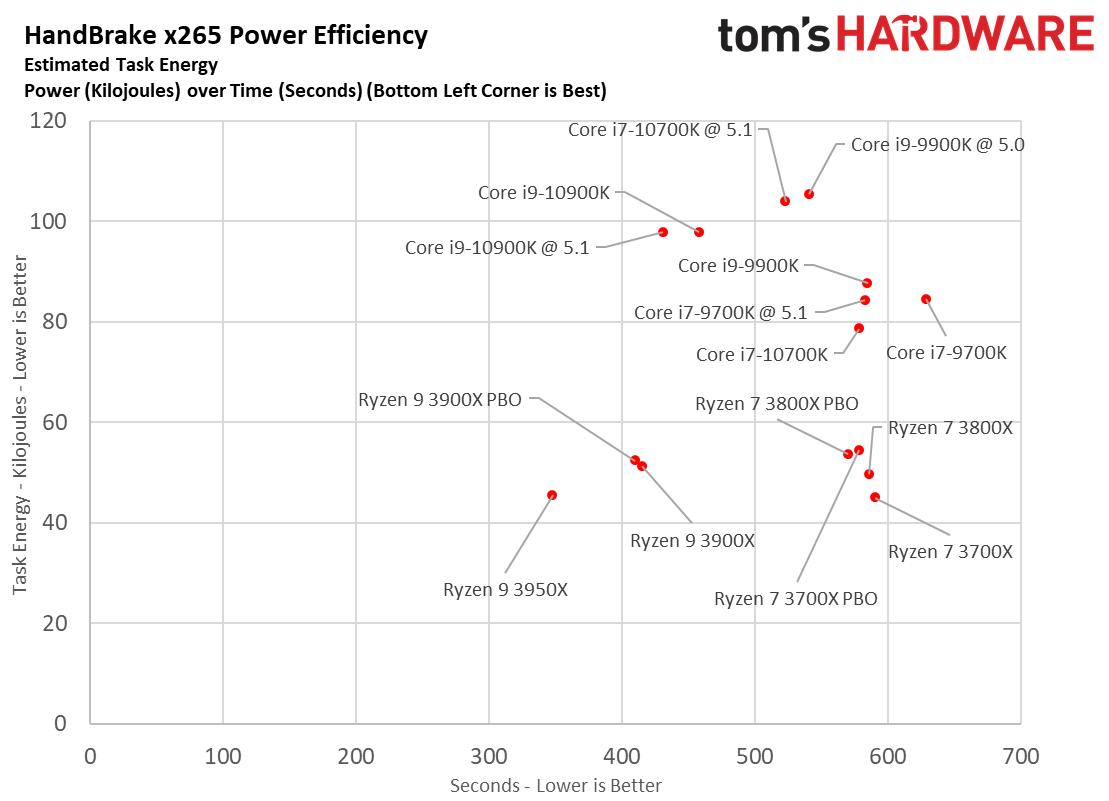

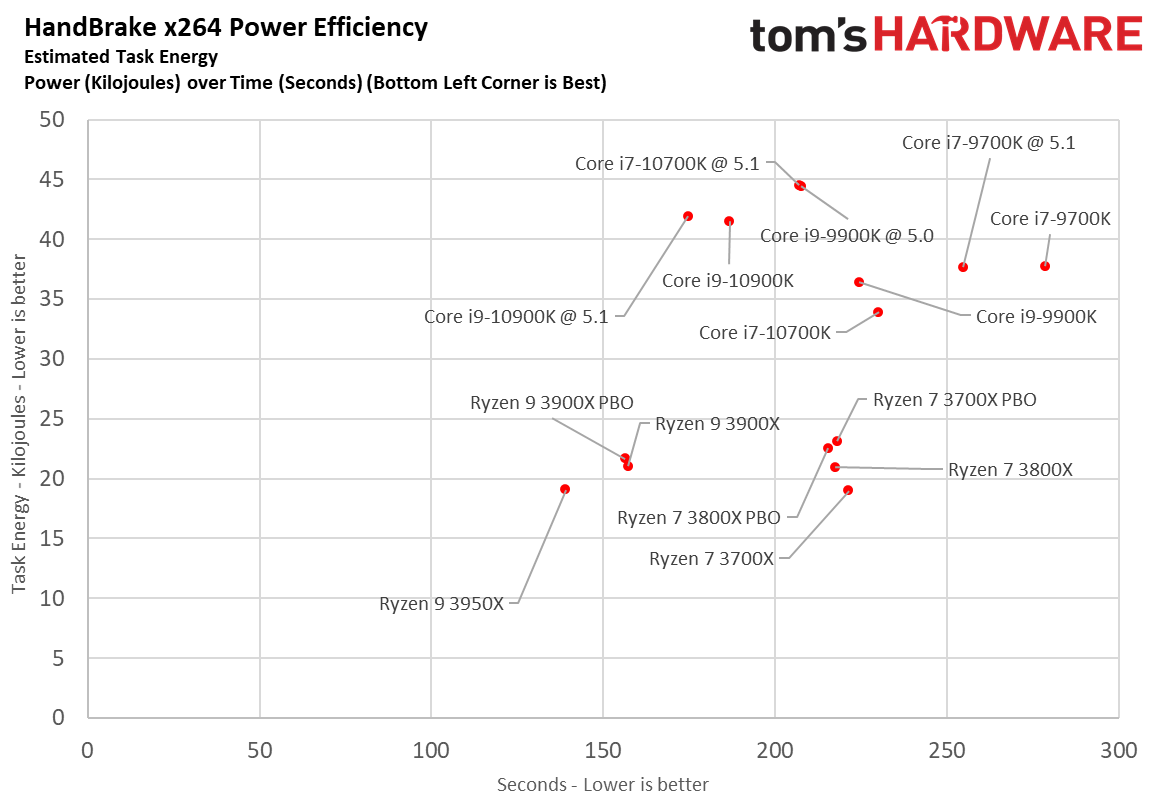

As expected, the Core i7-10700K consumes much more power than the Ryzen 3000 chips, but that isn't surprising given their denser and more power-efficient 7nm node. That advantage really shines through when we take a look at power consumption during our HandBrake x264 and x265 workloads. Still, basic power consumption measurements never tell the full story – the Ryzen processors also dominate the renders-per-day efficiency metrics.
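For context, a renders-per-day efficiency figure can be sketched as below. The exact formula behind the charts isn't given in the text, so this assumes the metric is the number of renders completed in 24 hours divided by average power draw; the function names and the sample inputs are hypothetical, not measured results.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def renders_per_day(render_time_s: float) -> float:
    """How many back-to-back renders fit into 24 hours."""
    return SECONDS_PER_DAY / render_time_s


def renders_per_day_per_watt(render_time_s: float, avg_power_w: float) -> float:
    """Assumed efficiency metric: daily renders per watt of average power."""
    return renders_per_day(render_time_s) / avg_power_w


# Hypothetical inputs for illustration only: a 10-minute render at 144 W.
example = renders_per_day_per_watt(render_time_s=600.0, avg_power_w=144.0)
print(f"{example:.2f} renders per day per watt")
```

A metric of this shape rewards both shorter render times and lower power draw, which is why a chip can lose on raw power readings and still win on efficiency, or vice versa.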

Here, we take a slightly different look at power consumption by calculating the cumulative amount of energy required to perform an x264 and x265 HandBrake workload, respectively. We plot this 'task energy' value in Kilojoules on the left side of the chart.

These workloads consist of a fixed amount of work, so we can plot the task energy against the time required to finish the job (bottom axis), thus generating a really useful power chart. Bear in mind that faster compute times and lower task energy requirements are ideal. That means processors that fall the closest to the bottom left corner of the chart are best.
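As a rough illustration of how a task energy value can be derived, the sketch below integrates logged power samples over the run using the trapezoidal rule. The logging format and the function name are assumptions for the example, and the sample data is made up; the text does not describe the exact tooling used.

```python
from typing import Sequence


def task_energy_kj(timestamps_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Cumulative energy in kilojoules for one run.

    Integrates power (watts) over time (seconds) with the trapezoidal
    rule; 1 watt sustained for 1 second is 1 joule.
    """
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must be the same length")
    energy_j = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        energy_j += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy_j / 1000.0


# Made-up samples: power ramps up, holds, then drops as the encode finishes.
times = [0.0, 10.0, 20.0, 30.0, 40.0]
watts = [60.0, 140.0, 150.0, 145.0, 65.0]

energy = task_energy_kj(times, watts)
duration = times[-1] - times[0]
print(f"{energy:.2f} kJ over {duration:.0f} s")
```

Each run then becomes a single point on the chart, with the run's duration on the bottom axis and its task energy on the left axis.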

Intel's hyper-optimized 14nm++ process can't beat the Ryzen processors in these measurements of power consumed during AVX-accelerated encoding tasks. The 10700K represents a decent step forward from the 9900K, but it's clear there's still a large disparity between the AMD and Intel camps.

| Platform | Hardware |
|---|---|
| Intel Socket 1200 (Z490) | Core i7-10700K, Core i9-10900K |
| | Gigabyte Aorus Z490 Master |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-2933, OC: DDR4-3600 |
| AMD Socket AM4 (X570) | AMD Ryzen 9 3950X, 3900X, Ryzen 7 3800X, 3700X |
| | MSI MEG X570 Godlike |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-3200, OC: DDR4-3600 |
| Intel LGA 1151 (Z390) | Intel Core i9-9900K, i7-9700K |
| | MSI MEG Z390 Godlike |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-2666, OC: DDR4-3600 |
| All Systems | Nvidia GeForce RTX 2080 Ti |
| | 2TB Intel DC4510 SSD |
| | EVGA Supernova 1600 T2, 1600W |
| | Open Benchtable |
| | Windows 10 Pro (1903 - All Updates) |
| | Workstation Tests - 4x 16GB Corsair Dominator - Corsair Force MP600 |
| Cooling | Corsair H115i, Custom Loop, Noctua NH-D15S |


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

jgraham11 Wow that's high power consumption! Good thing you are running liquid cooling! Compare that to the AMD 3700X at half the power!

Look at the shorter tests, the Intel systems seem to do better until their turbo runs out. AMD seems to do better with anything that takes more time.

I realize that both AMD and Intel systems are overclocked but the AMD systems seem to do better with memory tuning, maybe instead of just using PBO (a one click solution), take the same time you use for the Intel processors and put that into the memory. AMD would show even better in games and anything that was memory intensive.

1440p gaming would be more relevant! I do realize it doesn't make for good looking graphs but 1080p is not the typical use case for this CPU and comparable GPU.

Good review overall. Pretty fair way of presenting the information. Good memory choices. Efficiency focus was great to see.

Way better than the typical casting AMD CPUs in bad light, for example where Toms put the 3400G ($159) against the Intel 9700k($379.00). -

RodroX Good review, too bad at this price point you only show 1920x1080 game results.

I understand that's the only way to keep the GPU from being a "bottleneck", but if I was to spend this much money I would aim for playing at 1440p High details.

I still think that no matter what you want to play, or the resolution and/or refresh rate, at this point in time the best bang for the buck for ultrafast FPS is the Core i5 10600K + high speed memory and, if you feel like it, some quick brainless overclocking.

Other than that just pick the Ryzen your wallet can buy and be happy. -

DrDrLc Look... Can we actually get gaming benchmarks with modern games and at higher resolutions?

Intel keeps on saying that they are better for gaming because of single-threaded 1080p benchmarks, but we don't game like that with 2080ti cards. We don't build $2000 machines with one of the most expensive gaming cards to run 1080p ffs.

Are you going to give us next gen graphics card benchmarks only at 1080p?

If not, can we actually see what this new CPU will look like in comparison to others at resolutions that we use? We've been watching all the review sites doing this for three or four years now... It's Intel's marketing strategy, and you are letting them do it, and playing along! It's a problem, because we don't have any reviews that actually show us what to buy for the way that we actually play games. These reviews are actually useless for gamers. -

barryv88 I find it downright shocking to see that popular hardware review sites such as Tom's are still harping so much on HD gaming results.

Yes, game results at HD are less GPU bound, but it only paints Intel in a good picture while hiding a rather shocking truth - that AMD is incredibly gaming competitive at the world's sweet spot for gaming resolutions: QHD (typically 2560x1440). Tom's explicitly stated in the past that QHD is the best area for gaming given that screens today with G/Fsync/144hz+ options cost less than $500 and are very affordable in general, today. 4K is still too high for most GPU's to handle, meaning that QHD fills a good middle ground at the end of the day, beating HD at higher image quality.

Really Tom's, get your act straight. It's time to nudge your audience towards higher gaming echelons.

Start publishing QHD CPU results in addition, as this will entice people to make the switch towards higher res gaming, but also this - AMD Ryzen CPU's in general are less than 5% slower than Intel chips at QHD or 4K FPS. Intel is therefore NOT the indisputable king as no god given person can tell such a small difference in high res FPS. Enough with the gaming myths! Ryzen CPU's tend to pack more cores, which actually makes them far more future-proof. If most of those cores sit idle in today's gaming, who cares? Wouldn't you rather have enough cores in reserve for more demanding future games and enjoy streaming/recording quality with extra muscle under the hood to enjoy less stutter? Call me stupid, but to me this actually makes Ryzen a better gaming choice.

Your article adds to this whole HD stagnation thing that's been going on for over 10 years. Over 90% of steam players still game at HD. It's time to give em reason to make the next push - QHD and then 4K.

Make a start. I challenge Paul Alcorn to do a "world sweet spot QHD CPU FPS" article. Get around 10 people or so to game on both Intel and AMD machines. Gather the results and lets see if Intel still holds the crown. -

Drunk Canuck The only downside is how hot the 10700K gets. When I got mine, I ran a stress test at stock and it hit 74 degrees with a Fractal Celsius S24 AIO with my case open (Define R6) for maximum airflow and all fans running. It gets into the mid 80's when the case is closed. I'd assume I'd get those temperatures with the case open when overclocking and that's a bit much.

I will say this though, my new system runs Doom Eternal really well. Basically everything cranked to high or ultra at 2K resolution with a GTX 1070. -

milleron This year, many will be building new computers mostly dedicated to Microsoft Flight Simulator 2020. In the past, flight sims were more likely to be CPU-bound than GPU-bound, but it's not clear that that's any longer the case. Is there any way to predict before final release of this much-anticipated game whether it will benefit more from single-core clock speed or multi-threading? Would anyone hazard a guess on whether to plan an Intel or Ryzen build? -

RodroX

milleron said: This year, many will be building new computers mostly dedicated to Microsoft Flight Simulator 2020. In the past, flight sims were more likely to be CPU-bound than GPU-bound, but it's not clear that that's any longer the case. Is there any way to predict before final release of this much-anticipated game whether it will benefit more from single-core clock speed or multi-threading? Would anyone hazard a guess on whether to plan an Intel or Ryzen build?

You should wait for reviews when its launched.

Other than that this is the best you can check right now: https://www.pcgamer.com/microsoft-flight-simulator-system-requirements/

I'm guessing any Ryzen 5/7 3xxx will play nice, probably the Ryzen 7 3700X could be a cheaper, yet very good option vs the i7 10700K.

And if rumors become true the new Ryzen 4xxx could be a new jewel for gaming. But that won't be out till at least the end of September. -

st379 There are still 1080p 240hz monitors that are being sold.

1080p ultra is very realistic, Paul did not test it at 1080p low he tested it on max settings.

It is not like some review sites that test at 720p low or even worse at 480p.

This is a cpu review not a gpu.

In gpu review I would expect it to be tested in 1080p up to 4k, maybe even 8k. -

RodroX

st379 said: There are still 1080p 240hz monitors that are being sold.

1080p ultra is very realistic, Paul did not test it at 1080p low he tested it on max settings.

It is not like some review sites that test at 720p low or even worse at 480p.

This is a cpu review not a gpu.

In gpu review I would expect it to be tested in 1080p up to 4k, maybe even 8k.

True, but it does not change the fact that there are also more and more 1440p monitors sold everyday. And when you spend this amount of money for a CPU many people will also consider a high end GPU with a 1440p monitor for gaming, thus it will be very nice to have those results. -

barryv88

milleron said: This year, many will be building new computers mostly dedicated to Microsoft Flight Simulator 2020. In the past, flight sims were more likely to be CPU-bound than GPU-bound, but it's not clear that that's any longer the case. Is there any way to predict before final release of this much-anticipated game whether it will benefit more from single-core clock speed or multi-threading? Would anyone hazard a guess on whether to plan an Intel or Ryzen build?

DX12 should remove a lot of the CPU bottleneck and hopefully we'll see great core scaling if all pans out for the engine. I for one would like to see how the engine handles scenery draw and how smoothly everything will translate to FPS.