Larrabee: Intel's New GPU

x86: The Right Choice?

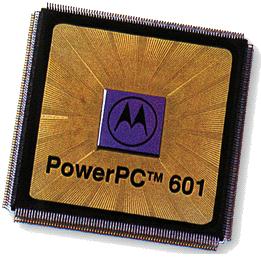

It’s easy to forget this additional cost of several hundred thousand transistors today, when modern processors have several hundred million of them. But go back 15 years, to when the Pentium and the PowerPC 601 were rivals: Intel’s processor used approximately 15% more transistors than IBM’s did. A difference of 500,000 transistors is far from negligible when the entire processor has approximately three million. So did economic factors run counter to good technological sense? Not really.

First of all, Intel has the benefit of 30 years of expertise with its x86 architecture. Although it also has experience with VLIW (IA64) and even RISC (i860, i960) architectures, its x86 experience is a genuine advantage. That experience covers hardware as well as software, since Intel can re-use its years of research on x86 compilers.

A second point is that the choice of CISC has advantages as well as disadvantages. While RISC instructions are all the same size and built in the same way to make decoding easier, CISC instructions vary in size. So although decoding is more complicated, x86 code is traditionally more compact than the equivalent RISC code. Here again, you might think that factor is negligible, but these are processors with very small caches, where every kilobyte counts.
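To make the code-density argument concrete, here is a minimal back-of-the-envelope sketch. The cache size and the average x86 instruction length are assumptions chosen for illustration, not figures from Intel, and the sketch also assumes both instruction sets need the same number of instructions for a given task, which is a simplification.

```python
def instructions_that_fit(cache_bytes: int, avg_instr_bytes: float) -> int:
    """Return how many instructions of a given average size fit in a cache."""
    return int(cache_bytes // avg_instr_bytes)


# Hypothetical values, for illustration only.
CACHE_BYTES = 32 * 1024   # assumed instruction cache size
RISC_INSTR_BYTES = 4.0    # fixed-width 32-bit encoding, as in classic RISC designs
X86_INSTR_BYTES = 3.0     # assumed average length of variable-size x86 instructions

risc_capacity = instructions_that_fit(CACHE_BYTES, RISC_INSTR_BYTES)
x86_capacity = instructions_that_fit(CACHE_BYTES, X86_INSTR_BYTES)

print(f"RISC instructions held: {risc_capacity}")
print(f"x86 instructions held:  {x86_capacity}")
print(f"Density advantage:      {x86_capacity / risc_capacity:.2f}x")
```

Under these assumed numbers, the same cache holds roughly a third more x86 instructions. The real gain depends on the actual instruction mix and on how many instructions each architecture needs for the same work.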

The choice of x86 has one more advantage: it’s an opportunity for Intel to re-use one of its older designs, which is exactly what the engineers did. Intel’s last in-order processor was the Pentium MMX (P55C), but the improvements it made over the classic Pentium (the P54C) weren’t really worthwhile in the context of the Larrabee project. It made more sense to use the original. But here again a question arises: Wouldn’t it have made more sense to start from scratch and design a processor that was perfectly suited to Larrabee?

There’s a tenacious myth in the public’s mind that starting with a clean slate in processor design is synonymous with quality. Most people see it as an opportunity to get rid of all the encumbrances of the past. But in practice, it’s extremely rare for engineers to start from scratch. First of all, there’s no point in it: many building blocks are perfectly reusable and don’t need to be created all over again. Also, it would be too expensive in terms of time and money.

While Intel undeniably knows how to design and produce x86 processors, we tend to forget the amount of time it takes to completely debug a design. Famous bugs like the Pentium floating-point division bug or the Phenom Translation Lookaside Buffer (TLB) bug come to mind because they made headlines. But in fact, all processors have bugs of varying significance. All you need to do is read the manufacturers’ data sheets, which list the known bugs for a given processor model. The advantage of modifying an existing design like the Pentium is that it has already been extensively tested and debugged, which is a great head start. Insider rumors say that Intel has re-used the Pentium design that the Pentagon carefully modified when it was used for military purposes. That’s not very credible, but it adds to the Larrabee mystique, and Intel will likely not deny it.


thepinkpanther very interesting, i know nvidia cant settle for being the second best. As always its good for the consumer.

IzzyCraft Yes interesting, but intel already makes like 50% of every gpu i rather not see them take more market share and push nvidia and amd out although i doubt it unless they can make a real performer, which i have no doubt on paper they can but with drivers etc i doubt it.

Alien_959 Very interesting, finally some more information about Intel upcoming "GPU".

But as I sad before here if the drivers aren't good, even the best hardware design is for nothing. I hope Intel invests more on to the software side of things and will be nice to have a third player.

crisisavatar cool ill wait for windows 7 for my next build and hope to see some directx 11 and openGL3 support by then.

Stardude82 Maybe there is more than a little commonality with the Atom CPUs: in-order execution, hyper threading, low power/small foot print.

Does the duo-core NV330 have the same sort of ring architecture?

"Simultaneous Multithreading (SMT). This technology has just made a comeback in Intel architectures with the Core i7, and is built into the Larrabee processors."

just thought i'd point out that with the current amd vs intel fight..if intel takes away the x86 licence amd will take its multithreading and ht tech back leaving intel without a cpu and a useless gpu

liemfukliang Driver. If Intel made driver as bad as Intel Extreme than event if Intel can make faster and cheaper GPU it will be useless.

phantom93 Damn, hoped there would be some pictures :(. Looks interesting, I didn't read the full article but I hope it is cheaper so some of my friends with reg desktps can join in some Orginal Hardcore PC Gaming XD.