Intel’s 24-Core, 14-Drive Modular Server Reviewed

Management Module

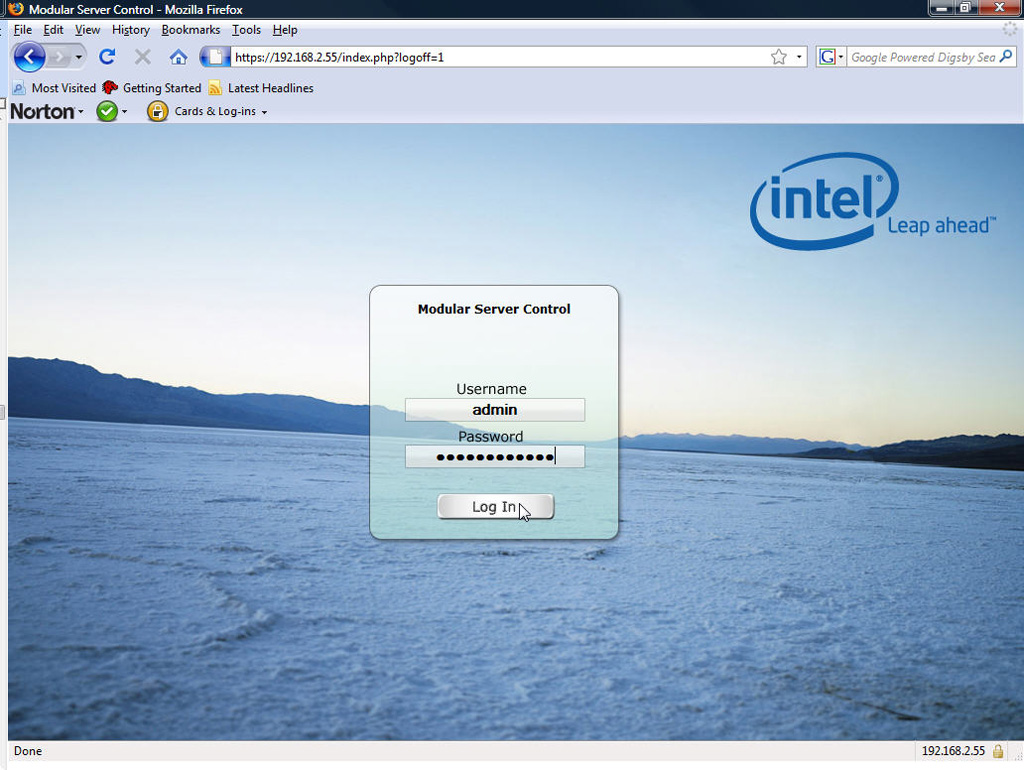

Remote administration of the MFSYS25 is handled by the management module, which sits in the center of the chassis’ rear panel. Through its single network port, the module serves a Web-based user interface for configuring, managing, and monitoring the hardware inside the modular server, and it also provides remote functionality for the compute modules.

The management module runs a built-in Linux-based operating system. On the first try, I connected to the modular server control application using Firefox, and I was then able to use PuTTY to telnet to the Linux CLI, although the CLI gave me only limited functionality. I couldn’t find any instructions on Intel's Website for using the CLI properly, so I relied on the Web interface instead.

Along with the network port, there’s a reset button to reboot the management module. A nine-pin serial port is also built into the module, although an MFSYS25 FAQ on Intel's Website states that the serial port is only used for manufacturing and engineering. However, I then found an Intel document (Solution ID: CS-029107) that explains how to connect to the management module through the serial port using a terminal program. This connection serves as a backup in case you lose network connectivity or need to reset the module to its factory defaults.

Unlike the other hot-swappable modules in the MFSYS25, the management module is not redundant: there is no space in the back for a second module, nor is there a second network port on the single management module that comes with the MFSYS25. The assumption is that as long as the compute modules are running, you can survive without a management module until it’s replaced. RDP, telnet, or SSH could be used for remote connections in the meantime, but local administration of each running server would require the video and USB ports on the compute modules. One good thing about replacing a management module is that even if the module is completely lost, its configuration is backed up on a flash card located on the chassis midplane. The data on that card is restored once the management module is replaced.
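
If the management module is down, a quick way to see which of those remote-access routes still answer on a given compute module is a plain TCP connection test. The following is a minimal sketch, not an Intel tool: the function names are hypothetical, and it assumes the services listen on their usual default ports (22 for SSH, 23 for telnet, 3389 for RDP), which may not match a particular installation.

```python
import socket

# Assumption: the services run on their conventional default TCP ports.
REMOTE_ACCESS_PORTS = {"ssh": 22, "telnet": 23, "rdp": 3389}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def probe_remote_access(host, ports=None, timeout: float = 2.0):
    """Map each service name to whether its port accepts TCP connections."""
    ports = REMOTE_ACCESS_PORTS if ports is None else ports
    return {name: is_port_open(host, port, timeout) for name, port in ports.items()}


# Example call (the address is a placeholder, not a real compute module):
# probe_remote_access("192.0.2.10")
```

An open port only shows that something is listening there; it does not confirm that a login will succeed or that the service behind it is the expected one.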


kevikom: This is not a new concept. HP & IBM already have blade servers. HP has one that is 6U and is modular. You can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

sepuko: Do the blades in IBM's and HP's solutions have to carry hard drives to operate? Or are you talking about a certain model? What are you talking about, anyway? I'm lost in your general comparison: "They are not new 'cause those guys have had something similar/the concept is old."

Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.

nukemaster: So, when are you gonna start folding on it? :p

Did you contact Intel about that network thing? Their network cards are normally top end. That has to be a bug.

You should have tried to render 3D images on it. It should be able to flex some muscles there.

MonsterCookie: Now frankly, this is NOT a computational server, and I would bet 30% of the price of this thing that the product will be way overpriced and that one could build the same thing from normal 1U servers, like the Supermicro 1U Twin.

The nodes themselves are fine, because the CPUs are fast. The problem is the built-in Gigabit LAN, which is just too slow (neither the throughput nor the latency of Gigabit LAN was meant for these purposes).

In a real computational server the CPUs should be directly interconnected with something like HyperTransport, or the separate nodes should communicate through built-in InfiniBand cards. The MINIMUM nowadays for a computational cluster would be built-in 10G LAN, plus some software tool that can reduce the TCP/IP overhead and decrease the latency.

Unless it's a typo, they benchmarked older AMD Opterons. The AMD Opteron 200s are based on the 939 socket (I think), which is DDR1 ECC, so no way would it stack up to the Intel.

The server could be used as an Oracle RAC cluster. But as noted, you really want better interconnects than 1Gb Ethernet. And I suspect from the setup that it makes a fair VM engine.