Intel’s 24-Core, 14-Drive Modular Server Reviewed

MFSYS25 Modular Server Chassis

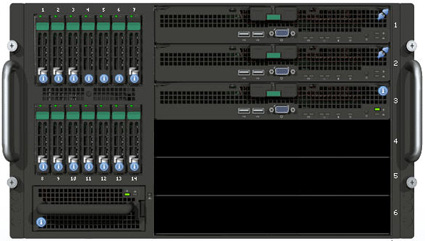

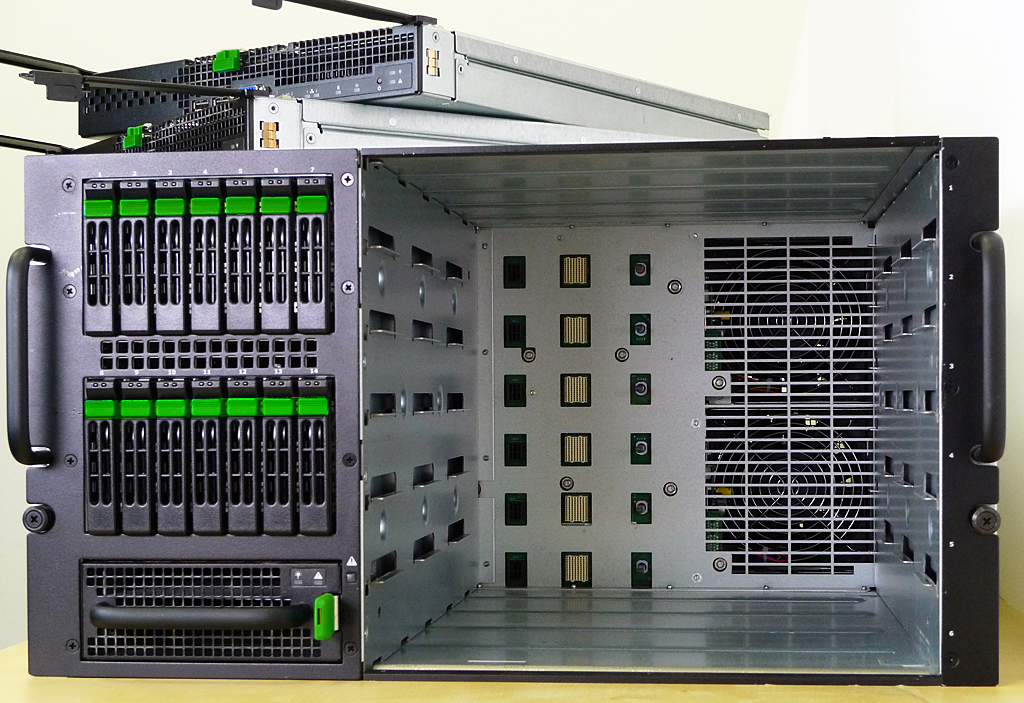

The core piece of hardware in the modular server is the 90 lb., 6U MFSYS25 chassis. It’s designed to hold six compute modules, 14 SAS drives, four power supply modules, two main cooling modules, two Ethernet switch modules, two storage controller modules, a single I/O cooling module, and the main management module. Intel says a fully loaded MFSYS25 weighs just under 200 lbs. Compared to a stack of six 1U Dell PowerEdge 1950 servers, the MFSYS25 is slightly lighter, thanks to its all-in-one modular design. Still, installing a fully loaded MFSYS25 is not a one-person job; it takes more than two people to mount it.

The front of the chassis offers easy access to the compute modules, the I/O cooling module, and the SAN drives. The remaining real estate around the front bezel leaves little or no room for additional components. The sole indicator light built into the frame is the system fault LED, which turns amber if one of the MFSYS25’s rear-mounted components (such as a main cooling module) has a problem.

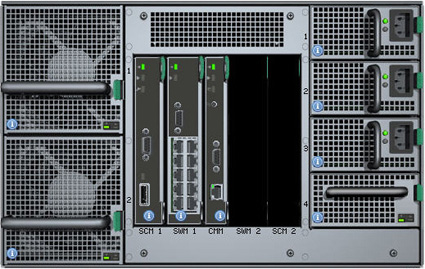

On the back of the MFSYS25, you have a number of bays for additional cooling, power, management, networking, and storage modules. For the most part, Intel provides optional redundancy for everything but the management module. Depending on where and how the machine is set up, having a single management module may not be an immediate problem, as long as the compute modules get their power and remain accessible via their own VGA and USB ports. Still, redundancy for remote management of the chassis should have been an option.

The chassis design is impressive: air flows efficiently from the front intakes, through the chassis, and out of the system’s rear assembly. That said, if the server is going into a rack, I’d recommend using perforated doors. Just remember to keep the cabling very sparse. There’s plenty of air moving through this machine once it’s powered on, and blocking the air going in or out of the chassis will heat things up. On the subject of airflow out of the back of the chassis, one of my pet peeves with some cable-management systems is the way bundled cables and mechanical arms block the exhaust from a server’s cooling fans. Unfortunately, the demo unit we received didn’t come with any rack mounts or rails, so we weren’t able to see what racking configuration Intel has in mind for the system. Intel’s user guide also makes it clear that, for proper airflow, none of the hot-swappable device bays should be left empty: each must hold either the appropriate module or one of the specially designed covers. The documentation also warns that anything left out of place for more than two minutes could degrade performance.

Regarding system-status monitoring, indicator lights play an important role in server management. They provide a quick-and-easy way to see if there’s a problem with a server. As mentioned already, the chassis has one built-in status light on the front panel.

Most of the information on the system’s health and configuration is accessible only through the modular server management UI, called “Modular Server Control.” This setup assumes that most folks will always have the luxury of connecting to the MFSYS25 over a network. Consider the scenario where you happen to be near the modular server and just want to take a quick glance at the chassis temperature reading, or look up the IP address assigned to the chassis or one of its servers. You would have to go find a local PC and log in to Modular Server Control. Instead, wouldn’t it be nice to just walk up to the server and press a button to get a quick answer? In a production environment, servers should come with some kind of alphanumeric LED-based module mounted on the faceplate that displays basic information at the press of a button. This is especially convenient when the server needs to be physically identified or inspected during a quick spot check.


kevikom: This is not a new concept. HP and IBM already have blade servers. HP has one that is 6U and modular, and you can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

sepuko: Do the blades in IBM's and HP's solutions have to carry hard drives to operate? Or are you talking about a certain model? I'm lost in your general comparison: "They are not new because those guys have had something similar; the concept is old."

Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.


nukemaster: So, when are you gonna start folding on it? :p

Did you contact Intel about that network thing? Their network cards are normally top end. That has to be a bug.

You should have tried rendering 3D images on it. It should be able to flex some muscles there.

MonsterCookie: Now frankly, this is NOT a computational server, and I would bet 30% of the price of this thing that the product will be way overpriced, and that one could build the same thing from normal 1U servers, like the Supermicro 1U Twin.

The nodes themselves are fine, because the CPUs are fast. The problem is the built-in Gigabit LAN, which is just too slow (neither the throughput nor the latency of Gigabit LAN was meant for these purposes).

In a real computational server, the CPUs should be directly interconnected with something like HyperTransport, or the separate nodes should communicate through built-in InfiniBand cards. The MINIMUM nowadays for a computational cluster would be built-in 10G LAN, plus some software tool that can reduce the TCP/IP overhead and decrease the latency.

Unless it's a typo, they benchmarked older AMD Opterons. The AMD Opteron 200s are based on the 939 socket (I think), which uses DDR1 ECC, so there's no way they would stack up to the Intel.


The server could be used as an Oracle RAC cluster, but as noted, you really want better interconnects than 1Gb Ethernet. And I suspect from the setup that it makes a fair VM engine.