Intel’s 24-Core, 14-Drive Modular Server Reviewed

MFS5000SI Compute Modules

Each Intel MFS5000SI compute module slides into one of the six horizontal bays on the front-right of the MFSYS25 chassis, much like a blade in a typical blade server. Simply put, a compute module looks like a 1U server without internal drives or a power supply. Once mounted, a module can be powered up via the modular server control application or with the small power button on its front panel.

The compute modules included in the evaluation unit were configured as follows:

Server 1:

- 2 Intel Xeon E5440 CPUs @ 2.83 GHz

- 8 GB of Kingston FB DDR2-667 RAM

Servers 2 and 3:

- 2 Intel Xeon E5410 CPUs @ 2.33 GHz

- 16 GB of Kingston FB DDR2-667 RAM

All Servers:

- Intel 5000P chipset

- Intel 5000P memory controller hub

- Intel 6321ESB I/O controller hub

- Network interface controller

- LSI 1064e controller, SAS 3000 Series

- AMD ES1000 16 MB graphics accelerator

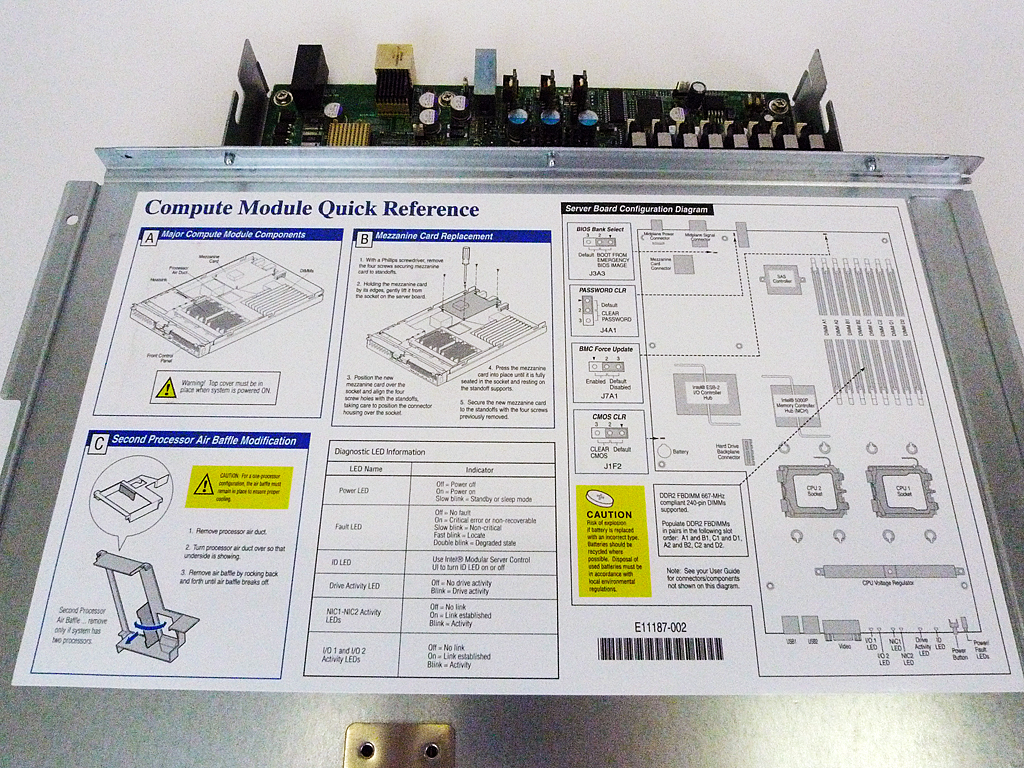

One of the two core components built into the compute modules’ system board is the Intel 5000P memory controller hub. The 5000P serves as the system bus interface for the processor subsystem, offers fully buffered DIMM (FBD) thermal management, and supports memory RASUM features such as memory scrubbing, self-testing, and mirroring. With the memory controller hub built into the board, peak memory transfer rates range from 6.4 to 8 GB/s, while transfers across the 1,333 MHz data bus can reach up to 10.66 GB/s.
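To see where numbers like these can come from, here is a rough back-of-the-envelope sketch. The 10.66 GB/s figure follows from 1,333 million transfers per second over a 64-bit (8-byte) bus. For the memory figures, the sketch assumes (this is not stated in the review) that they are per-channel FBD peaks, with reads at the full DDR2 rate and simultaneous writes at half that rate. The function names are made up for illustration.

```python
def peak_bandwidth_gbs(mega_transfers_per_sec, bus_width_bytes=8):
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes) for a simple parallel bus."""
    return mega_transfers_per_sec * 1e6 * bus_width_bytes / 1e9


def fbd_channel_gbs(ddr2_rate):
    """Assumed FBD channel peak: full-rate reads plus half-rate writes."""
    read = peak_bandwidth_gbs(ddr2_rate)
    write = read / 2  # assumption: write path carries half the read bandwidth
    return read + write


fsb = peak_bandwidth_gbs(1333)   # 1,333 MT/s x 8 bytes
fbd_533 = fbd_channel_gbs(533)   # DDR2-533 FB-DIMMs
fbd_667 = fbd_channel_gbs(667)   # DDR2-667 FB-DIMMs

print(f"Bus:          {fsb:.2f} GB/s")
print(f"FBD DDR2-533: {fbd_533:.1f} GB/s")
print(f"FBD DDR2-667: {fbd_667:.1f} GB/s")
```

Under those assumptions the results line up with the quoted 10.66, 6.4, and 8 GB/s figures. These are theoretical peaks, not measured throughput.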

The MFS5000SI compute modules support up to two Intel Xeon 5100/5200 dual-core or 5300/5400 quad-core processors. Older Xeon processors are not supported. The eight DIMM slots on each compute module hold up to 32 GB of paired memory in non-mirrored mode. The board does not support unbuffered DIMMs; only fully buffered DDR2-533 and DDR2-667 DIMMs are supported, although the former are not validated for the MFS5000SI. The eight DIMM slots are split among four separate channels mastered by the memory controller hub, which, according to Intel, can theoretically sustain the peak bandwidths mentioned above: 6.4 GB/s for DDR2-533 and 8 GB/s for DDR2-667. As for supported memory configurations, DIMMs must be installed in pairs, except in a single-DIMM system, where the DIMM must sit in the first slot (A1).
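The population rules above can be captured in a few lines. This is only a sketch of the rules as described in this review, not Intel's validation logic: "paired" is approximated as an even DIMM count, the function name is hypothetical, and every slot label other than A1 is a made-up placeholder.

```python
MAX_SLOTS = 8        # eight DIMM slots per compute module
MAX_TOTAL_GB = 32    # maximum memory in non-mirrored mode
FIRST_SLOT = "A1"    # the only slot name given in the review


def check_dimm_population(population):
    """Return a list of rule violations for a {slot_name: size_gb} mapping."""
    problems = []
    count = len(population)
    if count == 0:
        problems.append("no DIMMs installed")
    if count > MAX_SLOTS:
        problems.append(f"more than {MAX_SLOTS} DIMMs")
    if sum(population.values()) > MAX_TOTAL_GB:
        problems.append(f"more than {MAX_TOTAL_GB} GB in total")
    if count == 1 and FIRST_SLOT not in population:
        problems.append(f"a single DIMM must sit in slot {FIRST_SLOT}")
    if count > 1 and count % 2 != 0:
        # Assumption: pairing is modeled only as an even number of DIMMs.
        problems.append("DIMMs must be installed in pairs")
    return problems


# Slot names other than A1 are hypothetical.
print(check_dimm_population({"A1": 4}))                      # single DIMM in A1
print(check_dimm_population({"B1": 4}))                      # single DIMM, wrong slot
print(check_dimm_population({"A1": 4, "B1": 4, "C1": 4}))    # odd count
```

A real check would also need the actual slot-to-channel pairing and any matching requirements within a pair, which the review does not spell out.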


The second core component is the Intel 6321ESB I/O controller hub. Besides serving as an I/O controller, this onboard device supports the other I/O devices on the compute module board, including the PCI-X bridge, the Gigabit Ethernet controller, and baseboard management. I/O devices that rely on the controller hub's gateway services include the dual Gigabit Ethernet connections, the USB connections, and the LSI 1064e SAS controller.

Network connectivity from the MFS5000SI to the chassis is provided by the dual Gigabit Ethernet media access control features built into the 6321ESB I/O controller hub. Since networking runs across the chassis’ midplane, the compute module needs no physical network ports. Instead, data is routed through a Gigabit Ethernet controller to the Ethernet switch module at the rear of the chassis. Intel I/O Acceleration Technology also streamlines network performance: it is designed to minimize system overhead as data moves through the Xeon processors.

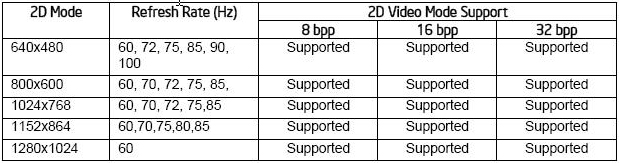

The 16 MB AMD ES1000 graphics accelerator serves as the graphics engine, which can be accessed via the front VGA port of the MFS5000SI. The modes supported by the accelerator are as follows:

There are additional ports for local administration on the front panel of each compute module. These are especially handy for those times when you can’t access the compute modules over the network. You get one VGA port to run your video and two USB 2.0 ports for input devices or external drives.

Not far from the video port is a small power button on the front panel. It's great to have a button for starting the server manually, but this one is too tiny to press with a finger. You’ll need a pen or paper clip to apply enough pressure to trigger the power-on process. My concern is that I’ve seen similar-sized buttons broken because operators and administrators tend to use too much force when pressing them with a pen.

For quick status checks, LEDs for NIC 1 and NIC 2 activity and status, drive activity, power, and I/O sit on the lower edge of the panel, while the remaining real estate on the front panel is perforated for air intake.


- kevikom: This is not a new concept. HP & IBM already have blade servers. HP has one that is 6U and is modular. You can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

- sepuko: Do the blades in IBM's and HP's solutions have to carry hard drives to operate? Or are you talking about a certain model? What are you talking about, anyway? I'm lost in your general comparison: "They are not new because those guys have had something similar; the concept is old."

- Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.

- nukemaster: So, when are you gonna start folding on it? :p

  Did you contact Intel about that network thing? Their network cards are normally top end. That has to be a bug.

  You should have tried to render 3D images on it. It should be able to flex some muscles there.

- MonsterCookie: Now frankly, this is NOT a computational server, and I would bet 30% of the price of this thing that the product will be way overpriced and that one could build the same thing from normal 1U servers, like the Supermicro 1U Twin.

  The nodes themselves are fine, because the CPUs are fast. The problem is the built-in Gigabit LAN, which is just too slow (neither the throughput nor the latency of Gigabit LAN was meant for these purposes).

  In a real computational server the CPUs should be directly interconnected with something like HyperTransport, or the separate nodes should communicate through built-in InfiniBand cards. The MINIMUM nowadays for a computational cluster would be built-in 10G LAN and some software tool that can reduce the TCP/IP overhead and decrease the latency.

- Unless it's a typo, they benchmarked older AMD Opterons. The AMD Opteron 200s are based on the 939 socket (I think), which is DDR1 ECC, so there is no way they would stack up to the Intel.

- The server could be used as an Oracle RAC cluster. But as noted, you really want better interconnects than 1 Gb Ethernet. And I suspect from the setup it makes a fair VM engine.