Hands-On With Nvidia's Titan X (Pascal) In SLI

We test VR, SLI, Nvidia's new high-bandwidth bridges, and the concern that HBM2 might have been a better choice for Titan X.

SLI And VR

Without going into depth on how SLI works (you'll find more detail in Nvidia's SLI Technology In 2015: What You Need To Know), it relies primarily on the alternate frame rendering (AFR) mode mentioned on the previous page, which imposes a two-frame delay (or queue) in order to scale properly. And while you won't mind those extra milliseconds on a typical desktop display, an additional 17 ms of motion-to-photon lag in VR will affect your experience.
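To put a frame queue in perspective, here is a minimal Python sketch of the arithmetic. The function names are hypothetical, and it assumes each queued frame costs exactly one refresh interval, which is a simplification. The text above doesn't say how the 17 ms figure was derived; one frame interval at 60 Hz (about 16.7 ms) is close, but treat that connection as an assumption.

```python
def frame_interval_ms(refresh_hz: float) -> float:
    """Duration of one refresh interval in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh_hz must be positive")
    return 1000.0 / refresh_hz


def queued_latency_ms(frames_queued: int, refresh_hz: float) -> float:
    """Extra latency from holding `frames_queued` frames in a queue.

    Assumption (not from the article): every queued frame adds exactly
    one refresh interval of delay.
    """
    return frames_queued * frame_interval_ms(refresh_hz)


if __name__ == "__main__":
    # Compare a 60 Hz desktop display with a 90 Hz VR headset.
    for hz in (60.0, 90.0):
        for frames in (1, 2):
            latency = queued_latency_ms(frames, hz)
            print(f"{frames} frame(s) at {hz:.0f} Hz: {latency:.1f} ms")
```

Under that assumption, each queued frame at 90 Hz costs roughly 11 ms, so a queue of even one or two frames eats most or all of a 20 ms motion-to-photon budget.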

The workaround for VR is to assign each GPU to a specific eye, which accelerates stereo rendering. This naturally requires optimization on the developer's end, and as a result, most VR titles just don't support SLI as of mid-2016.

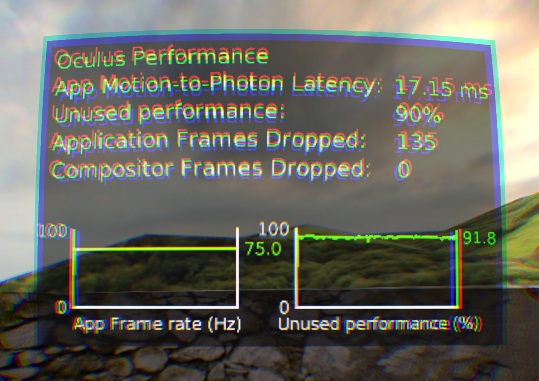

Consequently, we tested the Oculus Rift using Elite: Dangerous, the only title we know of that punishes a GeForce GTX 980. We're manually reporting performance based on the in-HMD debug tool's display. Unfortunately, Oculus has not responded to our requests to enable logging that tool's data to disk, so we can't chart the experience quantitatively.

SLI is disabled in these runs. You can leave the technology turned on in Nvidia's control panel, but the application only exploits one GPU no matter what.

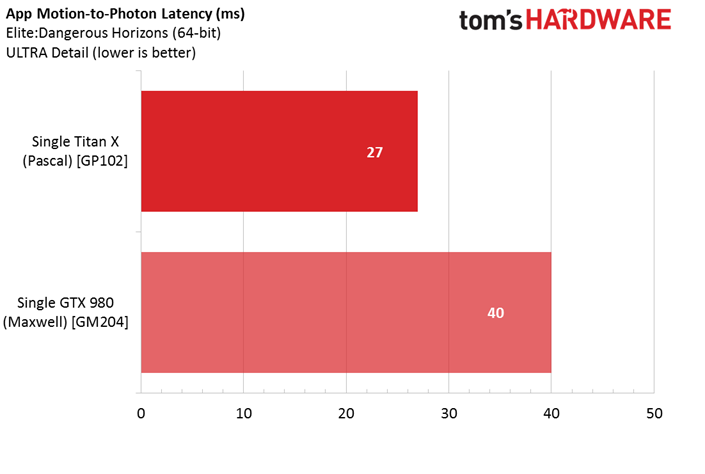

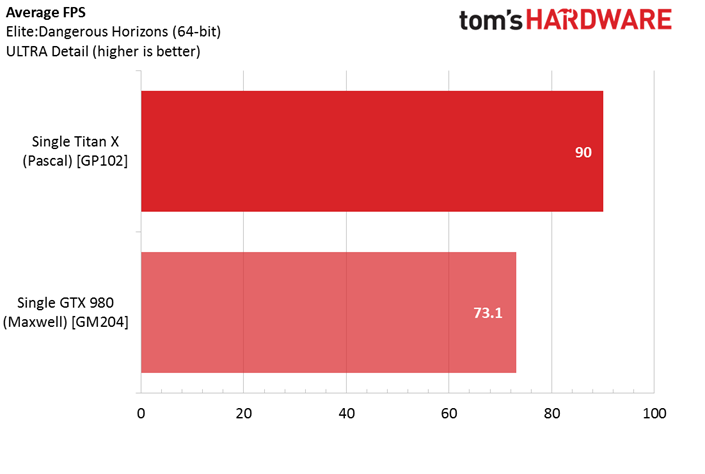

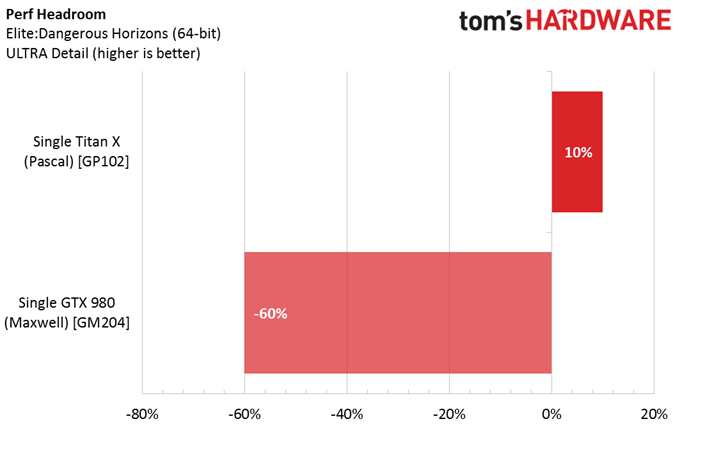

Notice that a single Titan X (Pascal) barely keeps up using the Ultra detail preset, with performance headroom of only ~10%. By contrast, a GTX 980 just isn't fast enough to facilitate a smooth experience, averaging 73.1 FPS (below the 90 FPS target) while almost doubling motion-to-photon latency. Not even the Titan X manages to stay below the 20 ms that John Carmack of Oculus describes as the "sweet spot" of VR. For now, you'll have to drop the quality level for an optimal VR experience in Elite: Dangerous.
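As a rough illustration of how far the GTX 980 falls short, the sketch below converts the two frame rates quoted above into per-frame times and a relative shortfall. The helper names are hypothetical, and the calculation treats the reported 73.1 FPS as a steady average, which real frame pacing rarely is.

```python
RIFT_TARGET_FPS = 90.0   # target frame rate quoted in the text
GTX_980_AVG_FPS = 73.1   # GTX 980 average quoted in the text


def frame_time_ms(fps: float) -> float:
    """Average time spent per frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def margin_vs_target(fps: float, target_fps: float) -> float:
    """Fractional margin relative to the target rate.

    Positive means the card exceeds the target; negative means it falls short.
    """
    return fps / target_fps - 1.0


if __name__ == "__main__":
    budget = frame_time_ms(RIFT_TARGET_FPS)
    actual = frame_time_ms(GTX_980_AVG_FPS)
    margin = margin_vs_target(GTX_980_AVG_FPS, RIFT_TARGET_FPS)
    print(f"Frame budget at 90 FPS: {budget:.2f} ms")   # 11.11 ms
    print(f"GTX 980 frame time:     {actual:.2f} ms")   # 13.68 ms
    print(f"Margin vs. target:      {margin:+.1%}")     # -18.8%
```

In other words, the GTX 980 needs about 13.7 ms per frame on average against an 11.1 ms budget, which is why it cannot sustain 90 FPS at this preset.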


- ryguybuddy: I would like to see another follow-up with Titan Xs in a water-cooling loop and a 6950X or something to see how much of an improvement that would make.

- clonazepam: I vaguely remember reading a different article where the two types of SLI bridges were x-rayed and examined much more closely. IIRC, there's very little difference. The newer one has the traces all set to equal lengths (the click of a button in design software), and one pin, I think, is changed to ground, which allows the driver to detect its presence. I think they're physically equal otherwise, besides trace length, LED, and the change to ground.

  Anyway, I've done the multiple card setups for many generations, but game development is going in a direction where that's no longer a worthwhile endeavor as support dwindles more and more.

  Thanks for the read.

- Realist9: This is an interesting experiment 'for science'. But that's all. SLI support in games is so spotty, unreliable, and sometimes non-beneficial, that it is now, IMO, irrelevant.

- Compuser10165: I think going X99 with such a system is a requirement in order to reduce the CPU limit factor. Despite the 10-core 6950X, an OCed 5960X (to about 4.4-4.5 GHz) should be enough for such a setup. That is because Haswell-E is easier to OC at higher frequencies versus Broadwell-E.

- ledhead11: Thanks for the review. It's nice seeing these SLI reviews, especially since there are so few for either the Titan X or 1080s.

  When I got my 1080s I tried the EVGA bridge and had problems with getting full contact on my cards. Some boots would show the cards, some didn't, so for a time I used dual ribbons until I got the Nvidia HB a week or two later. The Nvidia worked for me no problem. The main difference I noticed was a few more FPS here and there, but really a more stable, consistent frame rate. I read the same article about the x-ray comparisons as well before purchasing and have to say all this info is getting pretty consistent.

  I can tell you that 1080 SLI has very similar performance behavior to the reviews of Titan X SLI I've seen. Both SLI setups seem to really shine at 4k/60hz or 1440p/144hz. When I tried DSR 5k on my 4k display the frames quickly dropped to around 40fps.

  I'm not really seeing the CPU bottleneck you mention except for the Firestrike tests. Whether 4k/60hz or 1440p/144hz, my 4930k @ 4.10ghz rarely goes above 40%.

  I completely agree with you about what to use the Titans for: 4k/5k all the way. 1080 SLI just starts to hit a ceiling at around 60-80fps in 4k and averages 100-150fps in 1440p depending on the game.

- ledhead11: Oh and I forgot to mention, the most noticeable FPS increases with the HB bridge also happened at 4k for me.

- niz: I'm very pleased with my (P) Titan X. I upgraded from a GTX 980 mostly for VR (Vive) and to be able to play Elite Dangerous at VR Ultra with supersampling set to 2.0, which it does fine now and is so rock solid I just leave SS set to 2.0 for everything in VR. With E:D in VR there's an occasional chugging, but it seems to only be during loading screens (i.e. as a result of file I/O), so it seems that there's a real chipset issue where I/O can steal damaging amounts of bandwidth from something (PCIe bus?) rather than the CPU or GPU being maxed out.

  I have an Acer X34 monitor, so 3440 x 1440, and my PC is running an i7-6700k at stock speed. I keep thinking about blowing another $1200 just to go SLI just because "moar is moar" and just the thought of Titans in SLI gives me a nerd boner, but it honestly seems like I'd see no noticeable benefit.

- RedJaron: Compuser10165 said: "I think going X99 with such a system is a requirement in order to reduce the CPU limit factor. Despite the 10-core 6950X, an OCed 5960X (to about 4.4-4.5 GHz) should be enough for such a setup. That is because Haswell-E is easier to OC at higher frequencies versus Broadwell-E."

  Just because a CPU can be clocked higher doesn't mean it performs better. Improvements in IPC and efficiency more often than not make up for the lower clock speeds.

- somebodyspecial: Skipped the article after looking at contents. Still waiting on PRO benchmarks for this card to see how it does vs. other top cards in stuff like Adobe (CUDA vs. AMD's OpenCL or whatever works best for them), 3ds Max, Maya, Blender etc. These cards are not even aimed at gamers, so wake me when you test what they were designed to do (pro stuff on the cheap), so content creators have some idea of the true value of these as cheap alternatives to $5000 NV cards (P6000, etc).

- rcald2000: + Filippo L. Scognamiglio Pasini

  Since you noticed two Titan XPs achieving only 50% utilization when paired with the i7-6700k, which overclocked CPU would diminish or eliminate that condition? The i7-5820k (the CPU I own) or an i7-5960X? Any other recommendations would be appreciated. Thank you for the great article.