The Oculus Rift Review

The Oculus Rift, the first high-end consumer VR head-mounted display, is now available, and it's poised to usher in a future of immersive experiences.


The Complexity of Benchmarking VR

As you might expect, the tools for quantifying a virtual reality experience are still immature. Tests we were running on pre-production hardware no longer work on the retail Rift. Other benchmarks that were supposed to be ready for launch are still in the final stages of validation. And when it comes to guidance from Oculus itself, we’re told that much of the evaluation process involves simply using the HMD.

Well, sure, we’re doing plenty of that too. But passing judgment on such a highly anticipated piece of hardware with little more than “the experience feels smooth enough” feels so…incomplete.

Consulting with AMD and Nvidia turned up conflicting guidance: one company conceded that Fraps is the best we have for measuring performance, while the other expressed apprehension about trusting any metric at this early point. After all, what good is a result if it can’t be knowledgeably explained?

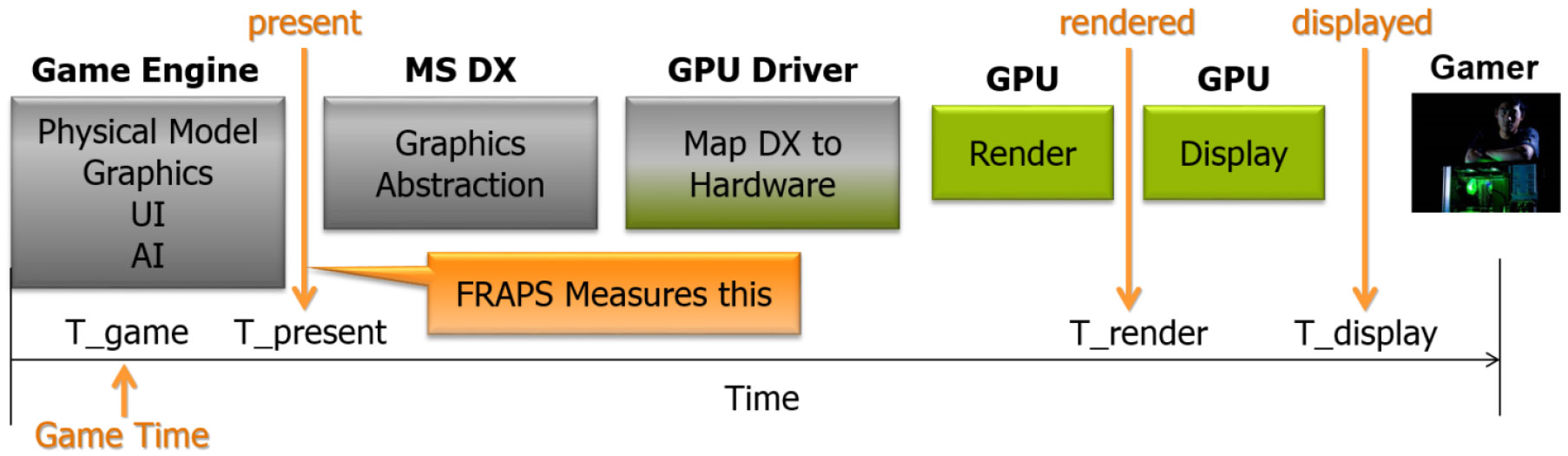

Indeed, this is not the first time Fraps’ utility has been called into question. Years ago, long before we were even thinking about a retail HMD, AMD was on trial for inconsistent frame delivery, which manifested as stuttering. To make a long story short, the company helped explain how Fraps collects data and where its results can be misconstrued. Because the software does its work between the game and Windows, intercepting Present calls before they reach Direct3D, several stages in the display pipeline don’t factor into a Fraps result.
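Because Fraps sits between the game and Windows, everything it reports is derived from the moments the game calls Present; whatever happens later in the pipeline is invisible to it. A minimal sketch of that derivation, assuming a Fraps-style log of cumulative Present timestamps in milliseconds (the function names and sample data are hypothetical):

```python
from typing import List


def frame_times_from_timestamps(timestamps_ms: List[float]) -> List[float]:
    """Convert cumulative Present() timestamps (ms) into per-frame intervals (ms).

    Assumes each entry is the time, measured from the start of the recording,
    at which the game called Present(). Nothing after that call is captured.
    """
    # A frame time is just the gap between two consecutive Present() calls.
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def average_fps(timestamps_ms: List[float]) -> float:
    """Average frames per second over the whole recording."""
    elapsed_ms = timestamps_ms[-1] - timestamps_ms[0]
    frames = len(timestamps_ms) - 1
    return frames * 1000.0 / elapsed_ms


if __name__ == "__main__":
    # Hypothetical log: four Present() calls, the last one arriving late.
    log = [0.0, 11.0, 22.0, 40.0]
    print(frame_times_from_timestamps(log))
    print(round(average_fps(log), 1))
```

Note that the 18ms interval in this sample says nothing about what the headset actually displayed during that time; it only records when the game handed off its next frame.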

There’s even more complexity in VR. Specific to the Rift, each eye’s frame passes through Asynchronous Timewarp (ATW) prior to display. In the event that a frame misses its 11.11ms deadline (corresponding to a 90Hz refresh), ATW reuses the previous frame with updated headset position information, preventing the judder that would have occurred otherwise.
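The 11.11ms figure is simply 1000ms divided by 90 refreshes per second. The sketch below uses a deliberately simplified model, not Oculus’ actual runtime logic, to show how a frame-time log can be read against that budget: frames that overrun it count as misses, and each extra refresh interval a slow frame spans is assumed to be covered by ATW re-projecting the previous frame with fresh head-tracking data.

```python
import math
from typing import List

REFRESH_HZ = 90
BUDGET_MS = 1000.0 / REFRESH_HZ  # ~11.11 ms per frame at 90 Hz


def missed_deadlines(frame_times_ms: List[float]) -> int:
    """Count frames that took longer than one refresh interval."""
    return sum(1 for t in frame_times_ms if t > BUDGET_MS)


def refreshes_filled_by_timewarp(frame_times_ms: List[float]) -> int:
    """Estimate how many refreshes would re-show an older, re-projected frame.

    Simplified model: a frame taking t ms spans ceil(t / budget) refresh
    intervals; every interval after the first is assumed to be filled by
    timewarping the previous frame. Real runtime scheduling is ignored.
    """
    return sum(max(math.ceil(t / BUDGET_MS) - 1, 0) for t in frame_times_ms)


if __name__ == "__main__":
    sample = [11.0, 11.0, 18.0, 25.0]  # hypothetical frame times in ms
    print(f"budget: {BUDGET_MS:.2f} ms")
    print("missed deadlines:", missed_deadlines(sample))
    print("refreshes covered by timewarp:", refreshes_filled_by_timewarp(sample))
```

With this sample, two frames miss the deadline and the model has ATW filling three refreshes. Fraps would show those two long frames as spikes, but not whether the runtime smoothed them over in the headset.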

Now, we’re very short on confirmed fact at this point—Oculus is being elusive, while representatives at AMD and Nvidia don’t appear to know for sure—but we suspect that a missed frame would show up on Fraps, while Oculus’ runtime would mask its effect in real-world game play. And after spending plenty of time benchmarking and playing on the Rift, that’s what seems to be happening.

Thus, in a world where Fraps is our only insight into performance, we have to kick off our benchmarks with a colossal caveat. There is some correlation between our Fraps results and playing on the Rift, but read our accompanying analysis before drawing conclusions. In many cases, problematic-looking charts really aren’t as troublesome as they seem.


Our comparisons are further complicated by dynamic quality adjustments that games will increasingly use to maintain that critical 11.11ms cadence. You see, in order to correct for the spatial distortion imposed by an HMD’s lenses, more pixels need to be rendered than the actual resolution of the display; a common target is 1.4x. But if a game engine determines it’s going to miss its frame rate goal, 1.4x might slip to 1.3x or 1.2x, sacrificing some quality (we’re told this can be almost imperceptible) in the name of maintaining an immersive experience. Fraps should pick up the performance side of that, but a difference in quality adds an unknown to our analysis…at least until someone creates a tool for collecting that information. Beyond these adaptive changes to the viewport, Valve Software’s senior graphics programmer Alex Vlachos says that anti-aliasing can also be adjusted on the fly, or radial density masking can be applied to maintain performance.
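To put those render-scale numbers in perspective, here is the arithmetic for the Rift’s 1080x1200-per-eye panel, assuming the multiplier applies to each axis (some engines define it by pixel count instead). It's paired with a hypothetical controller that steps between the quality levels; its thresholds are illustrative assumptions, not values from any shipping engine or from Vlachos’ talk.

```python
from dataclasses import dataclass
from typing import Tuple

# Rift panel resolution for one eye.
PANEL_W, PANEL_H = 1080, 1200
# Quality steps mentioned in the text, highest first.
SCALES = (1.4, 1.3, 1.2)


def render_target(scale: float) -> Tuple[int, int]:
    """Per-eye render target size, assuming the scale multiplies each axis."""
    return round(PANEL_W * scale), round(PANEL_H * scale)


@dataclass
class AdaptiveScaler:
    """Hypothetical controller: lower the scale when GPU time nears the budget,
    raise it again once there is comfortable headroom."""
    budget_ms: float = 1000.0 / 90  # 90 Hz refresh
    drop_above: float = 0.9         # assumed: step down above 90% of budget
    raise_below: float = 0.7        # assumed: step up below 70% of budget
    level: int = 0                  # index into SCALES (0 = highest quality)

    def update(self, gpu_ms: float) -> float:
        """Feed in the last frame's GPU time; return the scale for the next frame."""
        if gpu_ms > self.drop_above * self.budget_ms and self.level < len(SCALES) - 1:
            self.level += 1
        elif gpu_ms < self.raise_below * self.budget_ms and self.level > 0:
            self.level -= 1
        return SCALES[self.level]


if __name__ == "__main__":
    hi_w, hi_h = render_target(1.4)
    lo_w, lo_h = render_target(1.2)
    print(hi_w, hi_h, lo_w, lo_h)
    print(f"pixel reduction: {1 - (lo_w * lo_h) / (hi_w * hi_h):.0%}")
```

At 1.4x each eye renders 1512x1680 pixels; at 1.2x it renders 1296x1440, roughly 27% fewer pixels, which is where the frame-time headroom comes from. The gap between the two thresholds is a simple form of hysteresis that keeps the scale from flip-flopping every frame.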

Understand also that frame rates and more granular frame times do not reflect all aspects of the HMD’s performance, either. Latency and persistence play a significant role in the comfort and enjoyment of a VR experience, and we simply cannot get those measurements from the Rift with the tools at our disposal today.

What we can do, then, is present the Fraps-based numbers we generated and do our best to correlate them to our real-world experience gaming with the Rift. In the days to come, we expect a press preview build of VRMark that measures draw-call-to-photon latency, which will effectively capture more of the pipeline. Basemark’s VRScore is also imminent. That one claims to offer some of the same measurements as VRMark, plus simultaneous left- and right-eye latency measurements, dropped frame detection and duplicate frame detection. When final, approved versions of those early tools become available, we’ll report the results. So without further ado, let’s look at the hardware involved.
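VRScore’s detection methods haven’t been published in detail, so this is only an illustration of the general idea. It assumes a capture tool could report which application frame ID reached the display at each 90Hz refresh, and that the application numbers its frames sequentially. Both the input format and the function are hypothetical.

```python
from typing import List, Tuple


def classify_refreshes(shown_ids: List[int]) -> Tuple[int, int]:
    """Return (duplicates, drops) from the frame ID shown at each refresh.

    duplicate: a refresh that repeats the previous refresh's frame ID.
    drop:      a frame ID skipped entirely (rendered but never displayed).
    """
    duplicates = drops = 0
    for prev, cur in zip(shown_ids, shown_ids[1:]):
        if cur == prev:
            duplicates += 1
        elif cur > prev + 1:
            # Every ID between prev and cur never made it to the panel.
            drops += cur - prev - 1
    return duplicates, drops


if __name__ == "__main__":
    # Hypothetical capture: frame 2 shown twice, frame 4 never shown.
    print(classify_refreshes([1, 2, 2, 3, 5, 6]))
```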

How We Tested Oculus Rift

Oculus shipped us a VR-ready PC for our evaluation with decidedly mainstream specifications. And while we certainly used that for much of our testing, we put one of our quickest lab machines to work for the performance measurements. This consists of a Core i7-5960X host processor, 16GB of G.Skill DDR4 memory, MSI’s X99S Xpower AC motherboard and a 500GB Crucial MX200 SSD.

Given the computational requirements of VR, there are only a few graphics cards relevant to the discussion. We wanted to test Oculus’ recommended Radeon R9 290 and GeForce GTX 970, a handful of cards above that specification and at least one board below it. As it has so many times in the past, MSI stepped in with a number of boards to help complete our line-up, including:

Those cards complement the Radeon R9 Fury X, GeForce GTX 980 Ti and GeForce GTX 970 we already had in the lab.

It’s also worth mentioning that MSI sent two of its R9 390X and GTX 980 cards so that we could generate results using CrossFire and SLI. We were later told, however, that none of Oculus’ launch titles support multi-GPU rendering. When applications start utilizing AMD’s Affinity Multi-GPU and Nvidia’s VR SLI technologies, we’ll use those cards to evaluate the speed-up (in his 2016 GDC presentation, “Advanced VR Rendering Performance,” Valve’s Vlachos said to expect a 30-35% increase).
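To translate Vlachos’ 30-35% figure into frame times, assume it describes a throughput gain that applies evenly to every frame. Under that assumption, a card needing 14ms per frame on its own would drop to roughly 10.4 to 10.8ms with a second GPU, back inside the 11.11ms budget. A trivial sketch of that arithmetic:

```python
BUDGET_MS = 1000.0 / 90  # 90 Hz refresh -> ~11.11 ms per frame


def projected_frame_time(single_gpu_ms: float, speedup: float) -> float:
    """Frame time after a multi-GPU throughput gain.

    `speedup` is a fraction: 0.30 means 30% more frames per second,
    so each frame takes 1 / 1.30 of its single-GPU time.
    """
    return single_gpu_ms / (1.0 + speedup)


if __name__ == "__main__":
    for gain in (0.30, 0.35):
        t = projected_frame_time(14.0, gain)
        print(f"{gain:.0%} faster: {t:.2f} ms, within budget: {t <= BUDGET_MS}")
```

Real-world scaling will depend on how each title splits work between GPUs, so this is a best-case illustration rather than a prediction.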

We chose four applications from Oculus’ list of launch titles to benchmark. All of them offer a limited number of quality options, which we maxed out in three cases. And they’re all imperfect in that it’s incredibly difficult to repeat the same sequence in successive runs.

In the middle of our testing, Nvidia sent word of a new driver, 364.64, which we installed and started over with. We’re told that this isn’t the exact driver that will launch alongside the Rift; that later version should include a few extra bug fixes. However, Nvidia tells us that 364.64 is representative of the performance and image quality available at launch.

Separately, AMD informed us of a new Radeon Software Crimson Edition 16.3.2 driver that will support Oculus’ SDK 1.3. That news came several days after we wrapped up benchmarking and sent the Rift off for experiential testing at another Tom’s Hardware lab. As such, our results come from version 16.3.1, published on 3/16/16. We asked AMD for an accounting of the changes made to 16.3.2, but have not received a response.

| Benchmarks | Settings, test sequence and capture method |
|---|---|
| EVE: Valkyrie | Highest detail settings, basic training run-through, Fraps-based recording |
| Chronos | Epic graphics options (Rendering Quality, Shadow Quality, Rendering Resolution), Tale of the Scouring sequence, Fraps-based recording |
| Radial-G | Highest detail settings, Dead Zone Alpha race, Fraps-based recording |
| Lucky's Tale | Medium detail settings, first level run-through to first door, Fraps-based recording |



Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

ingtar33 My main concern about the Rift isn't addressed in this article. And that's whether it will become Orphanware. You see, only a fraction of the gaming public has a computer able to play games on a Rift, and an even smaller number of those people will spend $600 to buy a Rift.

This leaves the game manufacturers in a tough spot. They want to make and market games that will play on as many PCs as possible. So my fear is that in 6 to 18 months we'll see the end of titles that will play on the Rift, with nothing new in the pipeline, as those titles will prove to be financial duds for the industry, thanks to the tiny install base.

And then the Rift will become Orphanware, a product without a market or market support. -

Realist9 I stopped reading at the "setting up the Rift" section when I saw:

1. "you're asked to set up an account..."

2. "you're prompted to configure a payment method..."

What am I setting up an account for, why do I have to 'sign in', and what am I setting up a payment for?? -

kcarbotte (replying to ingtar33):

Time will be the ultimate test for the Rift, but I don't see that fate coming.

The companies working in the VR industry are incredibly excited about the prospect of this new medium. When the biggest companies in the world are pushing to bring something to market in the same way, it should be telling of the potential these companies see.

Facebook and Oculus have not been shy about saying that adoption will be slow. If developers haven't been paying attention to that, then they will surely fail. Most understand that the market will be small, but in the early days of VR the market share will be large, as most people buying in this early will have a hunger to try out as much of the content as possible.

I've also spoken with several VR developers in person about this issue. Most, if not all of them, are prepared for low-volume sales. This is precisely the reason why you won't see many AAA titles exclusively for VR for a while. The games will be shorter and inexpensive to produce.

VR games are mostly being developed by indie shops that have little overhead, and few employees to pay. They are also mostly working for minimum wage, hoping to see bonuses at the end of the year from better than expected sales.

I don't think there's any chance of seeing the Rift, or the Vive, fall into the realm of abandonware. Tools are getting easier to use (VR editors) and cheaper to access (Unity, Unreal Engine and CryEngine are all free for individuals). The resources for people to build for this medium are vast, and there's no better time to be an indie dev than now. VR is a new market, and anyone has as much chance as the next person to make the next big killer app. It resets the industry and makes it easier to jump into.

VR will not be dominated by the likes of EA and Activision for some time. It opens the door for anyone with a good idea to become the next powerhouse. That will be a very compelling prospect for many independent and small development firms.

-

Clerk Max No mention of VR motion sickness or kinetosis in this conclusion? This is a major showstopper, preventing more than a few minutes of immersion for most people. -

kcarbotte (replying to Realist9):

If you kept reading you'd know the answer.

You are signing up for Oculus Home, the only way to access content for the Rift.

As soon as you put the headset on, the sensor inside it initializes Oculus Home. Without an account, you can't access any of the content. It's exactly like accessing content on Steam. You need to sign in.

That's the same reason you need a payment method. There are free games, so you can skip it, but you can't access any of the paid content without an Oculus Home account.

If SteamVR ends up supporting the final Rift, then you may be able to play other games on it, but at this time, you need Oculus Home for everything on the Rift, including existing games like Project Cars. -

Joe Black I get the sense that it is where 3D gaming was right after Windows 95 and DirectX launched.

That's cool. Believe it or not, you actually did need a relatively beefy PC for that back in the day. It was not for everybody yet. -

bobpies What am I setting up an account for,

to download and play the games

why do I have to 'sign in'

to access your games

and what am I setting up a payment for??

to buy the games. -

kcarbotte (replying to Clerk Max):

Read page 8 if you want to know about my motion sickness experience. Only two games gave me any kind of trouble, and both of them were first-person experiences. It's well established that moving around in first person is not comfortable for many people. The motion messes with your brain because you aren't actually physically moving. Sitting still while your character moves around isn't comfortable at all for me. Some people don't have trouble, but the comfort levels are there for a reason. Both of those games are listed as intense experiences, so even Oculus acknowledges that some folks will get sick.

All of the third-person games that I tried, and the games with cockpits (Radial-G, Project Cars, EVE: Valkyrie), are very comfortable.

We'll talk more about motion sickness and the effects of being in VR for extended periods over the coming weeks. So far, with the limited time we've had with it, I've used it for less than 10 hours total. There are plenty of games that we've not even fired up yet, so a full discussion about motion sickness seems premature.

-

kcarbotte (replying to Joe Black):

That's a good comparison.

I like to use the first Atari console as a comparison. This is the dawn of a new medium that people don't yet understand. It will be expensive, and not for everyone in the early days, but look at where video games are today. If everyone had felt about Atari back then the way many people seem to feel about VR now, the video game industry as we know it today wouldn't exist.

VR will be similar. It will take a long time to get mass adoption, and the road there will have plenty of changes and advancements, but it's definitely going to happen. VR is far too compelling and has far too much potential for it not to. -

Realist9 The 'conclusion' page is really spot on. Specifically, the parts about AAA titles and casual audience.

So, does the release version of the Rift still require graphics settings in Project Cars to be turned down, with pop-in and jaggies? (I read this in an Anand article from 16 Mar.)