PCI Express And SLI Scaling: How Many Lanes Do You Need?

Features

By Thomas Soderstrom

Are the most elaborate platforms really required to host the fastest GPUs, or can you get away with P55's lane-splitting scheme? As Nvidia’s latest graphics processors push 3D performance to new heights, we examine the interfaces needed to support them.


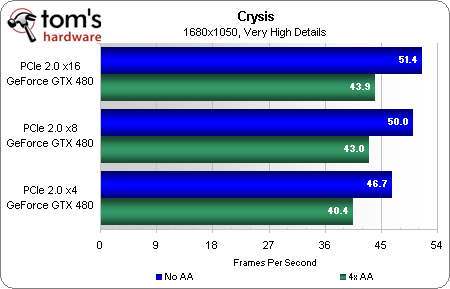

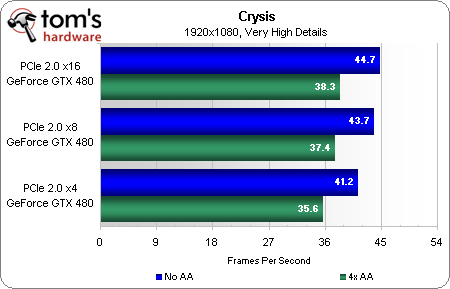

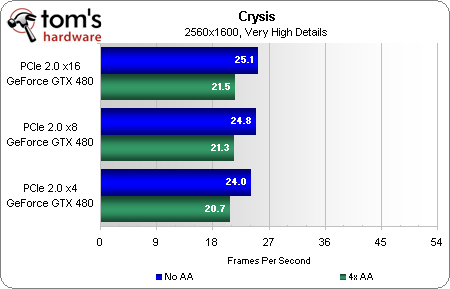

PCIe Scaling: Crysis

Experience tells us that Crysis is usually GPU-limited, and it appears that bandwidth limits are far less of a problem as resolution is increased.

The x4 slot suffers a 9% performance handicap at 1680x1050, while the x8 slot allows the GPU to reach 98% of its performance potential. That is to say, the mid-sized slot looks like an acceptable option for Crysis.
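To put those percentages in context, the following is a minimal sketch comparing each slot's theoretical bandwidth with the relative performance quoted above. It assumes PCIe 2.0 signaling (5 GT/s per lane with 8b/10b encoding), which this section does not state explicitly, and it treats the x16 result as the 100% baseline. The function and variable names are illustrative only.

```python
# Theoretical one-direction PCIe bandwidth per link width, compared with
# the relative Crysis results quoted in the text.
# ASSUMPTION: PCIe 2.0 signaling (5 GT/s per lane, 8b/10b encoding).

TRANSFERS_PER_SECOND = 5.0e9      # per lane, assumed PCIe 2.0 rate
ENCODING_EFFICIENCY = 8 / 10      # 8b/10b: 8 data bits per 10 bits on the wire

# Relative performance at 1680x1050 from the text, with x16 taken as 100%.
# The x4 figure is derived from the quoted 9% handicap.
MEASURED_PERCENT = {16: 100, 8: 98, 4: 91}


def link_bandwidth_gb_s(lanes: int) -> float:
    """Return theoretical one-direction bandwidth in GB/s (decimal units)."""
    bits_per_second = TRANSFERS_PER_SECOND * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


def summarize():
    """Return (lanes, GB/s, % of x16 bandwidth, % of x16 performance) rows."""
    full = link_bandwidth_gb_s(16)
    rows = []
    for lanes in (16, 8, 4):
        bandwidth = link_bandwidth_gb_s(lanes)
        rows.append((lanes, bandwidth, 100 * bandwidth / full, MEASURED_PERCENT[lanes]))
    return rows


if __name__ == "__main__":
    for lanes, bandwidth, bw_pct, perf_pct in summarize():
        print(f"x{lanes:<2} {bandwidth:4.1f} GB/s  "
              f"{bw_pct:5.1f}% of x16 bandwidth  {perf_pct}% of x16 performance")
```

Under these assumptions, the x4 slot offers a quarter of the x16 slot's bandwidth yet keeps roughly nine-tenths of its performance, which is consistent with Crysis being GPU-limited rather than bus-limited.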


Thomas Soderstrom is a Senior Staff Editor at Tom's Hardware US. He tests and reviews cases, cooling, memory and motherboards.

80 Comments

Comment from the forums

- amk09 I love how people always bash on x8 x8 and how it sucks, when in reality x16 x16 is only 4% better.

  You spend unnecessary $$$ on a x58 platform while I save money that I can put towards a GPU upgrade with my p55 platform :)

- carlhenry i'm curious how other games are "dependent" on bandwidth while others are not... does that mean that the games that aren't dependent on bandwidth isn't using the full potential given the size advantage of x16 over the x8? i wish every game would utilize every inch of your hardware in the future.

- sambadagio For all your fps hunters, I bet you only have a screen at home with 50 or 60Hz. So just for your information, everything above 50 or 60fps is just useless... In this aspect, a PCIx 4x is actually enough... ;-)

- luke904 so a 4850 crossfire setup will hardly be bottlenecked by an 8 lane motherboard.

  anyone know if 4850's are going to be unavailable any time soon? You could get the 3000 series for quite awhile after the 4000's released so I'm crossing my fingers until i can afford a cpu upgrade and another 4850

  cpu is currently a 7750BE and so im pretty sure it would bottleneck the 4850's. I think it does with just one actually.

- jgv115 @ carlhenry

  It's not the game's fault. The GPU can only go as fast as it was made to go. So in simple terms you could say that GPUs these days aren't "fast" enough to use all the bandwidth PCI Express offers.

- outlw6669 Very nice review but I have to ask, why did you not test with 5970's?

  On a card for card basis they are still quite a bit more powerful than the GTX 480 and should require the most bandwidth of any current card for maximum performance.

- barmaley This review tells me that if you already have an i7 and at least 2xPCIe 16x lanes on your motherboard then in order to play modern games, all you are going to be upgrading for the next several years is your graphics.

- Aionism Even though I'm not interested in SLI I am glad to finally see a benchmark comparing PCI-E x16 and x4. My motherboard only allows me to use my video card in my x4 slot for some reason. I've been wondering how much performance I've been losing over that.