What Do High-End Graphics Cards Cost In Terms Of Electricity?

Many reviews analyze the minimum and maximum power consumption of a given graphics card. But just how much power does a high-end graphics card really need during the course of standard operation? This long-term test sheds some light on that question.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Explanation Of The Calculation Method

The Fundamentals of Our Consumption Calculation

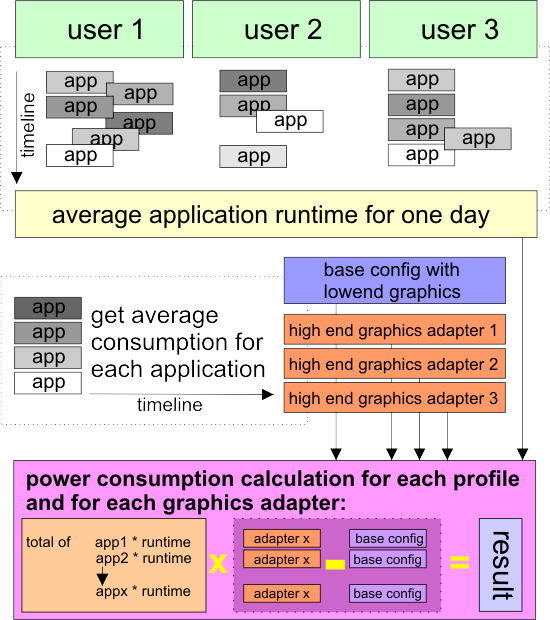

It might seem complicated at first, but it is actually quite simple. We follow a specific person over a long period of time and track the run time of every program, along with the total power consumption, including idle periods. The result is a statistically representative profile of an average day. The details of the computer configuration used do not matter at this stage, since for now we are only after the user's average daily application usage.

For our next step, we take each of the relevant applications and measure the energy consumption of our test system while it is equipped with a very low-end graphics card. The result is assigned to the respective application as its base value. Then we measure the average consumption of each application again, this time with different, more powerful high-end graphics cards.

The test duration varies by application. Games, for example, are tested for at least 15 minutes (much longer in most cases), depending on how graphics-heavy the game is. For hardware-accelerated video, we limited ourselves to five minutes.

As the last step for each profile, we determine the power consumption of every graphics card configuration, including the base configuration. We multiply the time spent in each program per day by the specific consumption of that graphics card setup for the respective application, then add up the results across all applications.
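The per-profile calculation described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the application names, the hours per day, and the wattages are made-up placeholder values, not measurements from this test, and the function name `daily_energy_kwh` is hypothetical.

```python
# Hypothetical usage profile: hours per day spent in each application.
# These numbers are placeholders, not values from the article.
usage_hours = {"idle": 4.0, "video": 1.0, "game": 2.0}

# Hypothetical average system power draw in watts, per application,
# for a base configuration and one high-end card configuration.
power_watts = {
    "baseline": {"idle": 100.0, "video": 110.0, "game": 150.0},
    "high_end": {"idle": 130.0, "video": 150.0, "game": 400.0},
}


def daily_energy_kwh(hours_by_app, watts_by_app):
    """Sum (hours * watts) over all applications and convert Wh to kWh."""
    total_wh = sum(hours * watts_by_app[app] for app, hours in hours_by_app.items())
    return total_wh / 1000.0


# Daily energy use for every configuration, including the base configuration.
daily = {name: daily_energy_kwh(usage_hours, watts) for name, watts in power_watts.items()}

for name, kwh in daily.items():
    print(f"{name}: {kwh:.2f} kWh per day")
```

Keeping the usage profile separate from the per-card measurements mirrors the method: the same profile is reused for every graphics card configuration.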

Results

In the end, we get the average total power consumption for each system and graphics card used. Subtracting the base configuration's value from each result gives the additional consumption relative to the base configuration. Multiplying that difference by the current cost of electricity gives the operating costs, which can then be considered alongside the initial purchase cost to arrive at the total cost. Multiplying the daily values by the number of days the computer is used per year yields a yearly result.
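As a rough sketch of this final step, the following Python snippet subtracts the base configuration, applies an electricity price, and scales to a year. All inputs are assumptions chosen for illustration: the daily kWh figures, the price per kWh, and the number of days of use per year are placeholders, and `yearly_extra_cost` is a hypothetical helper name.

```python
def yearly_extra_cost(card_kwh_per_day, baseline_kwh_per_day, price_per_kwh, days_per_year):
    """Yearly electricity cost of a card relative to the base configuration."""
    # Additional consumption compared to the base configuration.
    extra_kwh_per_day = card_kwh_per_day - baseline_kwh_per_day
    # Daily extra energy times price gives the daily cost; scale to a year.
    return extra_kwh_per_day * price_per_kwh * days_per_year


# Placeholder inputs (assumptions, not measured values from the article).
cost = yearly_extra_cost(
    card_kwh_per_day=1.47,
    baseline_kwh_per_day=0.81,
    price_per_kwh=0.13,
    days_per_year=365,
)
print(f"Extra cost per year: {cost:.2f}")
```

The result is expressed in whatever currency the price per kWh uses, so the same function works for different electricity tariffs.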

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

damric: I don't get it. Are they saying that a GTX 480 will cost a hard core gamer $90/year in electricity? Seems like a drop in the bucket considering my power bills are over $90/month in the winter and over $250/month in the summer. Just think of all the money the hard core gamer saves from not having a girlfriend :D

scook9: They are also neglecting the positive side effects like not needing a space heater in the winter... you recoup a lot of energy right there :D

porksmuggler: ^Tell me about it, warmest room in the house right here. Turn the thermostat down, and boot the rig up.

Typo on the enthusiast graph. Calculations are correct, but it should be 13ct/kWh, not 22ct/kWh.

aznshinobi: The fact that you mentioned a Porsche. No matter what the context. I love that you mentioned it :D

AMW1011: So at worst, my GTX 480 is costing me $90 a year? Sorry if I'm not alarmed...

Also I can't imagine having 8 hours of gaming time every day. 5 hours even seems extreme. Sometimes, you just can't game AT ALL in a day, or a week.

Some people do have lives...

nebun (quoting alikum: "Nvidia cards consume power like crazy"): who cares... if you have the money to buy them you can pay for the electricity... it's just like SUVs, you have the money to buy them you can keep them running

nebun (quoting AMW1011: "So at worst, my GTX 480 is costing me $90 a year? Sorry if I'm not alarmed... Also I can't imagine having 8 hours of gaming time every day. 5 hours even seems extreme. Sometimes, you just can't game AT ALL in a day, or a week. Some people do have lives..."): i run my 480 sli rig to fold almost 24/7... do i care about my bill... HELL NO