Dell Precision T5600: Two Eight-Core CPUs In A Workstation

Results: NewTek LightWave 3D 11.5, E-on Vue 11, And Blender

NewTek LightWave 3D 11.5

If you've followed our revamped workstation coverage, you know that our 3D application testing started with LightWave. This is based purely on my familiarity with that product.

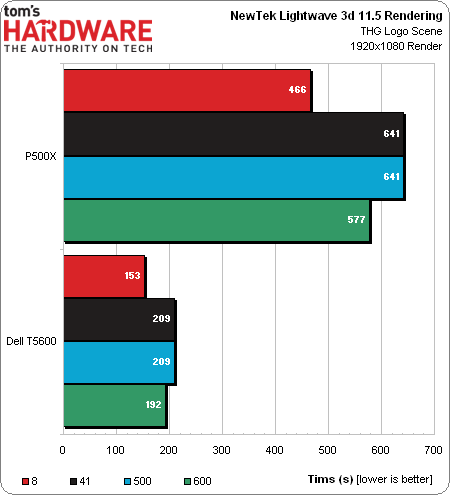

LightWave 3D Rendering

This scene originated in LightWave and is probably best optimized for it. It has numerous n-gons (polygons with more than four points) that keep geometry manageable, but they necessarily get tripled before being exported to other applications. In all four frames, the difference between the two machines is close to a factor of three.

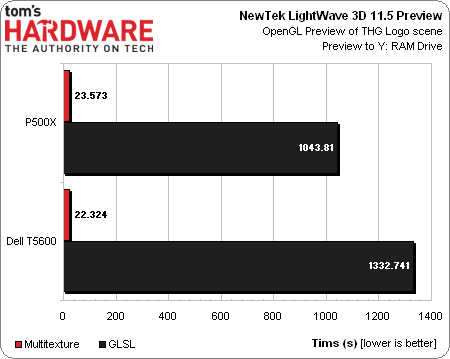

LightWave 3D OpenGL Preview

The OpenGL preview in LightWave serves the same purpose as the animation preview in 3ds Max and the playblast in Maya and works similarly. We run the preview test in the older multitextureshaders and the newer GLSL modes. The multitextureshaders are designed to work with more antiquated OpenGL systems and are incredibly fast for generating previews, while the GLSL technique uses shaders that evaluate much more of the surface options, including bump mapping and procedurals. Naturally, the GLSL mode takes a lot longer than the older mode, and the single-threaded nature of the preview means that the baseline machine comes out faster in GLSL. Thanks for that, higher clock rates and Intel's Ivy Bridge architecture.

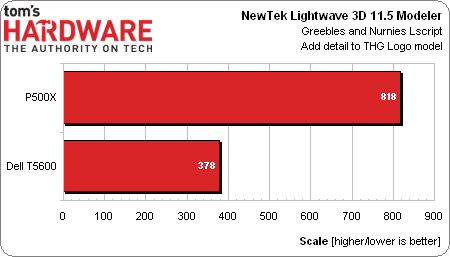

LightWave 3D Modeler

This test uses a script within LightWave to clone polygonal geometry across the surface of our Tom's Hardware logo object. That, in a few separate stages, is how the detailing for the logo was created. The metric also reports how much time it takes to complete this operation. Even though Modeler is largely single-threaded, the test responds extremely well to the T5600's additional memory bandwidth, wrapping up in less than half the time of our baseline system.


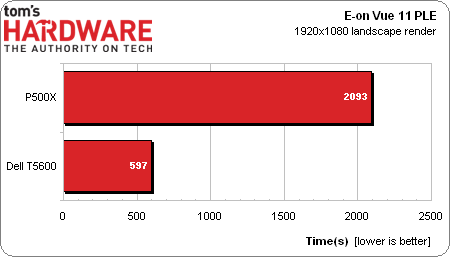

E-on Vue 11 PLE

E-on’s Vue is a piece of 3D software designed for rendering landscapes. Our scene was originally created in Vue 8, and we load it into the current Personal Learning Edition for rendering. Vue makes good use of the Xeon E5-2687W's extra cores and memory bandwidth, yielding a 3.5x-faster result than the baseline.

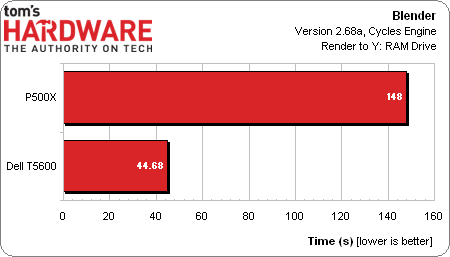

Blender

Blender’s new Cycles renderer is more modern and efficient than the previous Tiles renderer. It supports newer features, including GPU-based rendering. But in this test, we’re performing a purely CPU-based benchmark. As we’ve seen with several other render tests, the Precision T5600 comes out slightly more than three times faster than the iBuyPower box.


kennai Would it be possible for you guys to test this in gaming applications? I was really curious how well these CPUs would do in gaming with high-end gaming GPUs, since it's pretty much my dream CPU setup >.>.

Also, good job on the review as always.

-

Am I reading this right, in the SPECviewperf 11 bench graph: the ($480-ish) PNY Quadro 2000 (P500X) beat the ($1800-ish) PNY Quadro K5000 by significant margins in the SW-02 test, as well as in some other ones. This sure makes me think twice about wanting to upgrade my 2000 to a K4000.

-

blackjackedy It says this right beneath the graph:

The tests seem evenly split between single- and multi-threaded workloads, and some of them incur little or no hit from AA, which points to something other than the GPU bottlenecking performance. In fact, SolidWorks performs better with AA on. How odd is that?

-

Correct me if I am wrong, but as far as I know the basic S*#tWorks is not optimized for multi-threading (hence I am only running an i7 3820, and anything higher would not benefit the performance). Now SW Simulations and PhotoView360 are a different story.

I just might run SpecviewPerf 11 on my system to see how it performs. To others it might matter, but in my design, I could care less about AA; I am just happy when SolidWorks does not crash.

-

Draven35 Yes, in several of the tests the P500X's higher CPU speed makes a huge difference. Also, ViewPerf uses Solidworks 2010 code, AFAIK.

Photoview 360's renderer is written by the guys at Luxology, based on the renderer from their 3d application Modo, and is very well multithreaded.

Tuffjuff: I asked myself the same question about the RAM. The machine would have performed vastly better in the AE tests with 32 GB, because I could have used all of the physical CPU cores.

-

bambiboom Gentlemen?,

A very good and welcome review. The systems compared were, however, not at the same level relative to their categories. More would have been revealed if the P500X used something like a GTX 680 (in other words, about 2nd from the top of their respective lines) rather than a Quadro 2000, which is two generations past and, in effect, just a much lower line ancestor of the K5000. I imagine these tests are complex and time-consuming, but it would have provided perspective if at least one direct competitor from HP and/or Lenovo appeared.

A couple of comments on the T5600 design.

1. I can understand the trends toward more compact cases, and even the need to pander to styling and branding, but the TX600 series is inexcusably short on drive bays. My mother's 2010 dual-core Athlon X2 in a $39 case, "Grandma's TurboKitten 3000", has more expansion bays. Still, the T5600 situation is better than the impending Mac Dustbin Pro.

2. The brutalist architecture may have convenient handles, but to me it is a clunker, both visually and in features. I don't know anyone in architecture, industrial design, graphic design, animation, or video editing who doesn't keep their workstation vertical, who doesn't also hate vertical optical drives, and who doesn't often have two optical drives plus a card reader. Also, as Jon Carroll mentions, this is short on front USB 3.0 ports. I would question a workstation at this level without at least three USB 3.0 ports on the front. There are never enough USB ports on a workstation. The Precision T5400 has two front, six rear, and two on the back of the (SK-8135) keyboard! Those are all USB 2.0 ports, and I still have to add a four-port hub on one of the back ports.

Oh, and Jon, the indentation on the top of the T5600 is not for car keys. That's where you would set your short-cabled USB external drive(s) and flash drives, if there were enough USB 3.0 ports. My Precision T5400, I think, is wearing in an indentation in that exact location from a WD Passport.

3. As tuffjuff also comments, 16GB of RAM is not nearly enough for this kind of machine. Dual CPU systems divide the RAM equally between the processors; these motherboards have separate slots and special sequences of symmetrical positioning. This means that the test system had, in effect, only 8GB of RAM per CPU or, as I like to express it, 1GB per core. There's a reason the T5600 supports 128GB and the T7600 can use 512GB of RAM: Windows, programs and files are big, and in these systems a lot of programs are running at once. I use a formula of 3GB for the OS, 2GB for each simultaneous application and 3GB for open files. As my workstations often use five or six applications plus a constant Intertubes and Windows Exploder, sorry, Explorer, my new four-core HP z420 has 24GB of RAM (6GB/core). If I had a dual E5-2687w system, given there are so many more cores to feed, I would therefore consider 64GB a reasonable level: 32GB per CPU (4GB/core).

4. The most worrying comments in the review concern the noise. Of course, a system with two 150W CPUs and a school bus-sized GPU needs good airflow, but this one devotes so much of the facade to the grille that the optical drive has to be in the stupid vertical position, and apparently this openness that lets the air in also lets the noise out. In my view, noise from a workstation is close to being a deal-breaker. This is another reason why the vertical drive is so silly: few put their workstation horizontally on the desktop right in front of them because of the noise.
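The RAM arithmetic in point 3 can be sketched in a few lines of Python. This is only an illustration of the commenter's rule of thumb, not a sizing tool: the function names are hypothetical, and the even per-socket split assumes the DIMMs are populated symmetrically across both CPUs.

```python
def estimate_ram_gb(simultaneous_apps, os_gb=3, per_app_gb=2, open_files_gb=3):
    """Rule-of-thumb estimate: OS allowance + per-application allowance + open files."""
    return os_gb + per_app_gb * simultaneous_apps + open_files_gb


def ram_split(total_gb, cpus, cores_per_cpu):
    """Return (GB per CPU, GB per core), assuming RAM is spread evenly across sockets."""
    per_cpu = total_gb / cpus
    return per_cpu, per_cpu / cores_per_cpu


if __name__ == "__main__":
    # Review configuration: 16GB across two eight-core CPUs
    print(ram_split(16, cpus=2, cores_per_cpu=8))   # (8.0, 1.0)
    # Suggested configuration: 64GB across the same two CPUs
    print(ram_split(64, cpus=2, cores_per_cpu=8))   # (32.0, 4.0)
    # Six applications plus a browser and Explorer counted as eight programs
    print(estimate_ram_gb(8))                       # 22
```

With eight programs open the estimate comes to 22GB, which is consistent with rounding up to the 24GB fitted in the four-core z420.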

Dell apparently wants to ease out of the declining PC business, and these kinds of design decisions might help that process. I think, though, that Dell, plus Autodesk and Adobe, which want to force eternal cloud computing subscription fees, are going to find many, many workstation users who will object, buy AutoCad 2014 and CS6, run them on Precision T7500s, and preserve the DVDs in hermetically sealed containers. I, for one, will never, ever be sending my industrial design files into the ether and onto other firms' servers.

This assessment is a good demonstration of the way in which workstations and creation applications continue to evolve each other. However, as many workstation applications have become far more capable, especially in 3D modeling and simulation, there is still a vast under-utilization of multiple cores in those applications. It's not accidental that the T5600 review emphasized rendering, as it's an example where the core applications have adapted to the availability of multiple cores and can also take advantage of GPU co-processing. It's an odd thing and a puzzle: make a model in Maya and run simulations in Solidworks or Inventor essentially on a single core, but make a rendering of that model using fourteen cores. I make Sketchup Pro models that, when they go over about 20MB, become almost unusable without navigating in monochrome and clever, careful, and constant fussing with layers. Rendering is very calculation intensive, but so are thermal, gas flow, atmospheric, molecular biological, and structural modeling and simulations.

The T5600 review, as it concentrates on applications that reveal the whole capabilities of the $4,000 of CPUs and $1,800 of CUDA cores, also reveals this fundamental engineering hollow in workstation applications, and indeed in another important realm. I'm not a gamer, but on this site I can feel gamers wondering the same thing as workstation wonks: Software companies, there are billions of CPU cores waiting for something to do! Why the hell aren't there more multi-core applications?

Cheers,

BambiBoom

PS>

1. Dell Precision T5400 (2009)> 2X Xeon X5460 quad core @3.16GHz > 16 GB ECC 667> Quadro FX 4800 (1.5GB) > WD RE4 / Segt Brcda 500GB > Windows 7 Ultimate 64-bit > HP 2711x 27" 1920 x 1080 > AutoCad, Revit, Solidworks, Sketchup Pro, Corel Technical Designer, Adobe CS MC, WordP Office, MS Office > architecture, industrial design, graphic design, rendering, writing

2. HP z420 (2013)> Xeon E5-1620 quad core @ 3.6 / 3.8GHz > 24GB ECC 1600 > Firepro V4900 (Soon Quadro K4000) > Samsung 840 SSD 250GB / Seagate Barracuda 500GB > Windows 7 Professional 64 > to be loaded > AutoCad, Revit, Inventor, Maya (2011), Solidworks 2010, Adobe CS4, Corel Technical Design X-5, Sketchup Pro, WordP Office X-5, MS Office

-

Shankovich My school updated our lab with these. We run CFD or FEA on them mostly, and it's godly.