Six SSD DC S3500 Drives And Intel's RST: Performance In RAID, Tested

Intel lent us six SSD DC S3500 drives with its home-brewed 6 Gb/s SATA controller inside. We match them up to the Z87/C226 chipset's six corresponding ports, a handful of software-based RAID modes, and two operating systems to test their performance.

Results: RAID 5 Performance

RAID 5 can sustain the failure of one drive. RAID 6 (which Intel's integrated controller does not support) will keep an array up even after two failures. Of course, that protection isn't free: a RAID 5 volume costs you one drive's worth of capacity. Using a trio of 480 GB SSD DC S3500s, losing a third of the configuration hurts. Giving up one drive out of six is less painful, since the percentage of capacity lost to parity goes down as you add drives to the array.
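To put numbers on that trade-off, here is a minimal sketch of the capacity arithmetic. The function names are ours, purely for illustration, and the figures use the nominal 480 GB drive size rather than formatted capacity.

```python
def raid5_usable_gb(drives: int, drive_gb: float) -> float:
    """Usable capacity of a RAID 5 volume: one drive's worth goes to parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drives - 1) * drive_gb


def parity_overhead(drives: int) -> float:
    """Fraction of raw capacity consumed by parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return 1 / drives


# Nominal 480 GB drives, three through six members.
for n in (3, 4, 5, 6):
    usable = raid5_usable_gb(n, 480)
    lost = parity_overhead(n)
    print(f"{n} x 480 GB: {usable:.0f} GB usable, {lost:.1%} lost to parity")
```

With three drives, a third of the raw capacity goes to parity; with six, it is one sixth.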

Typically, writing the extra parity data also means that performance drops below single-drive levels, particularly without DRAM-based caching. Intel's Rapid Storage Technology relies on host processing power and not a discrete RAID controller, but it can help speed up writes substantially (particularly sequential ones), depending on how caching is configured. With that said, enabling caching is far more helpful on arrays of mechanical disks. Why? Random writes are literally hit or miss. If data is in the array's cache, it's serviced at DRAM speeds. If not, latency shoots up as the I/O is serviced elsewhere. That penalty barely registers on most hard drive arrays, but it slows down SSDs more dramatically.
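For readers who want to see where the extra write work comes from, here is a rough sketch of the XOR parity scheme that RAID 5 implementations conventionally use. It is not Intel's RST code; the helper name `xor_blocks` and the four-byte blocks are assumptions chosen for illustration.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Bytewise XOR of equally sized blocks (hypothetical helper)."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("need at least one block, all the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a three-drive array: two data blocks plus one parity block.
d0 = b"\x01\x02\x03\x04"
d1 = b"\x10\x20\x30\x40"
parity = xor_blocks(d0, d1)

# If the drive holding d0 fails, its block is rebuilt from the survivors.
rebuilt_d0 = xor_blocks(d1, parity)

# A small write to d0 also has to update parity. Without a cache, that means
# reading the old data and old parity before writing the new data and parity.
new_d0 = b"\xff\x00\xff\x00"
new_parity = xor_blocks(parity, d0, new_d0)
```

The last step is the read-modify-write cycle that makes small random writes expensive, and it is the part a write cache can hide.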

It's somewhat of an issue, then, that read and write caching cannot be fully disengaged with Intel's RST in RAID 5. To gain speed, hard drives read data near a requested sector (on the way to the needed data) and toss that information in a buffer with the hope that, should it be called upon, it'd be ready more quickly. That's plausible in a sequential operation, but a lot less so when it comes to random accesses. As it happens, this same principle applies to RAID arrays. Write caching can be disabled for data security reasons, but RST always has some form of read-ahead enabled, passing data along to a RAM buffer on the host for use later. A hard drive's buffer typically holds this information, but a RAID setup passes the data along to the controller.
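The difference between sequential and random access is easy to demonstrate with a toy model. The sketch below is not how RST implements read-ahead; the eight-block read-ahead window, the unbounded cache, and the one-million-block volume are all assumptions picked to keep the example small.

```python
import random


def hit_rate(requests, readahead=8):
    """Share of requests answered from a simple read-ahead buffer.

    On a miss, the requested block and the next `readahead` blocks are
    placed in the buffer. The buffer never evicts, which flatters the
    random case if anything.
    """
    if not requests:
        raise ValueError("need at least one request")
    cached = set()
    hits = 0
    for block in requests:
        if block in cached:
            hits += 1
        else:
            cached.update(range(block, block + readahead + 1))
    return hits / len(requests)


total_blocks = 1_000_000
sequential = list(range(10_000))

rng = random.Random(0)  # fixed seed so the run is repeatable
scattered = [rng.randrange(total_blocks) for _ in range(10_000)]

print(f"sequential hit rate: {hit_rate(sequential):.1%}")
print(f"random hit rate:     {hit_rate(scattered):.1%}")
```

Sequential requests land in the buffer most of the time, while random requests almost never do, so the speculative reads mostly go to waste.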

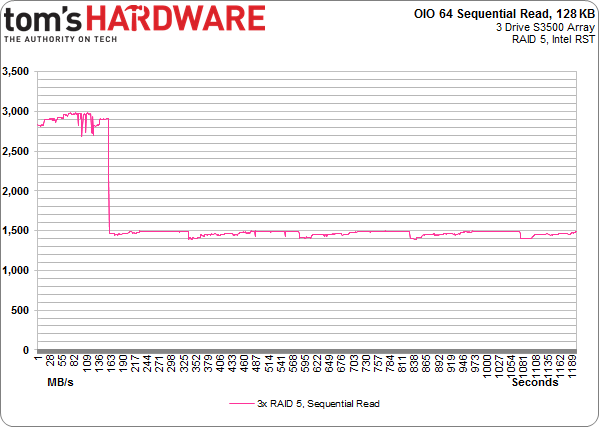

What does that end up looking like? Over the last two pages, I've tried to drive home that you have up to about 1.6 GB/s of usable throughput with Intel's PCH. Now, consider this chart:

With the previously discussed read-ahead behavior passing unrequested, adjacent data along to the host system's memory, sequential reads enjoy a significant boost, but only while the DRAM cache lasts. As you can see, read speeds from a three-drive RAID 5 array reach a stratospheric 3 GB/s. The data isn't coming from the SSDs, but rather our DDR3. The caveat is that getting such a notable boost requires significant drive utilization. It's a lot easier to stack commands on a hard drive than it is to do so in the real world on an SSD, since SSDs service requests so much faster.


SteelCity1981 "we settled on Windows 7 though. As of right now, I/O performance doesn't look as good in the latest builds of Windows."

Ha. Good ol Windows 7...

vertexx In your follow-up, it would really be interesting to see Linux Software RAID vs. On-Board vs. RAID controller.

tripleX Wow, some of those graphs are unintelligible. Did anyone even read this article? Surely more would complain if they did.

utomo There is Huge market on Tablet. to Use SSD in near future. the SSD must be cheap to catch this huge market.

klimax "You also have more efficient I/O schedulers (and more options for configuring them)." Unproven assertion. (BTW: Comparison should have been against Server edition - different configuration for schedulers and some other parameters are different too)

As for 8.1, you should have by now full release. (Or you don't have TechNet or other access?)

rwinches " The RAID 5 option facilitates data protection as well, but makes more efficient use of capacity by reserving one drive for parity information."

RAID 5 has distributed parity across all member drives. Doh!