Six SSD DC S3500 Drives And Intel's RST: Performance In RAID, Tested

Intel lent us six SSD DC S3500 drives with its home-brewed 6 Gb/s SATA controller inside. We match them up to the Z87/C226 chipset's six corresponding ports, a handful of software-based RAID modes, and two operating systems to test their performance.

Results: RAID 0 Performance

RAID 0 offers scintillating speed if you're willing to compromise on reliability. Striping also yields the best capacity. In our case, that's almost 3 TB of solid-state storage presented to the operating system as a single logical volume. Just because we get 100 percent of each SSD's capacity doesn't mean we can harness 100 percent of its performance, though.

That's especially true of Intel's implementation, since all of the RAID calculations are performed on host resources. On a dedicated RAID card, you have a discrete controller offloading the storage load. I'm simply hoping that a modern Haswell-based quad-core CPU won't have any trouble feeding six 6 Gb/s SSDs.

The test methodology differs from what we did on the previous page. Since RAID 0 requires at least two drives, we start with a two-drive array, resulting in one logical volume. Then we alter the workload. For JBOD testing, we used one thread to load each drive with I/O. This time, we use two workers on the logical drive, scaling from a queue depth of one to 64. Two threads each issuing one command at a time give us a total of two outstanding I/Os. Or, if we push a queue depth of eight through each thread, we get an aggregate queue depth of 16. One thread tends to bog down under more intense workloads, so multiple threads are used to extract peak performance. But because we want the comparison to be as fair as possible, we're using two threads for each config.
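The queue depth arithmetic described above can be sketched in a few lines of Python. This is only an illustration: the function name and the power-of-two sweep are assumptions, not the exact settings of the benchmark tool.

```python
def aggregate_queue_depth(workers: int, depth_per_worker: int) -> int:
    """Total outstanding I/Os when every worker keeps depth_per_worker commands in flight."""
    return workers * depth_per_worker

WORKERS = 2  # two workers per logical drive, as in the RAID tests

# Assumed sweep: per-worker queue depths in powers of two from 1 to 64
DEPTHS = [1, 2, 4, 8, 16, 32, 64]

for depth in DEPTHS:
    total = aggregate_queue_depth(WORKERS, depth)
    print(f"QD {depth:>2} per worker -> {total:>3} outstanding commands")
```

With two workers, a per-worker queue depth of one gives two outstanding commands, and a per-worker depth of eight gives 16, matching the figures in the text.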

Sequential Performance

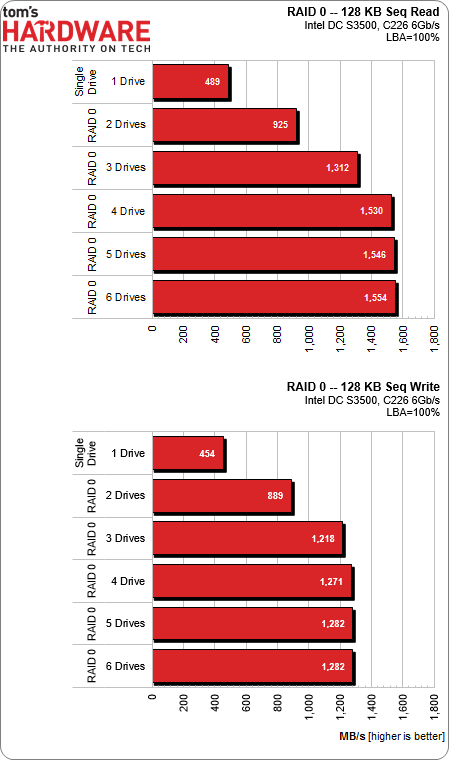

Without question, sequential speed is the most obvious victim of a controller that doesn't have enough bandwidth to do its job. Compare the single-drive performance to many drives in our read and write tests for a bit of perspective. Switching over to this chart format makes it easier to illustrate the throughput ceiling, so bear with the variety in graphics for a moment.

Starting with reads, one SSD tops out at 489 MB/s. Two striped drives yield 925 MB/s, and three deliver up to 1300 MB/s. After that, striping four or more SSD DC S3500s on the PCH's native ports is mostly pointless. Instead, you'd want a system with processor-based PCI Express and a more powerful RAID controller to extract better performance.

The same goes for writes, which top out at 1282 MB/s. With this test setup, one SSD DC S3500 writes sequentially at an impressive 92% of its read speed. That drops to 82% with four or more drives in RAID 0.
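To put the read figures in perspective, here is a rough sketch that expresses each result as a fraction of ideal linear scaling. The throughput values are the ones quoted above; the function and variable names are made up for illustration.

```python
# Sequential read throughput (MB/s) quoted in the text, keyed by drive count
MEASURED_READ_MBPS = {1: 489.0, 2: 925.0, 3: 1300.0}

def scaling_efficiency(drives: int, throughput_mbps: float, single_drive_mbps: float) -> float:
    """Fraction of ideal linear scaling achieved by an array of `drives` members."""
    return throughput_mbps / (drives * single_drive_mbps)

single = MEASURED_READ_MBPS[1]
for drives, mbps in sorted(MEASURED_READ_MBPS.items()):
    eff = scaling_efficiency(drives, mbps, single)
    print(f"{drives} drive(s): {mbps:.0f} MB/s, {eff:.1%} of linear scaling")
```

By this measure, two drives reach roughly 95 percent of linear scaling and three reach roughly 89 percent, before the platform's throughput ceiling flattens the curve.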

Random Performance

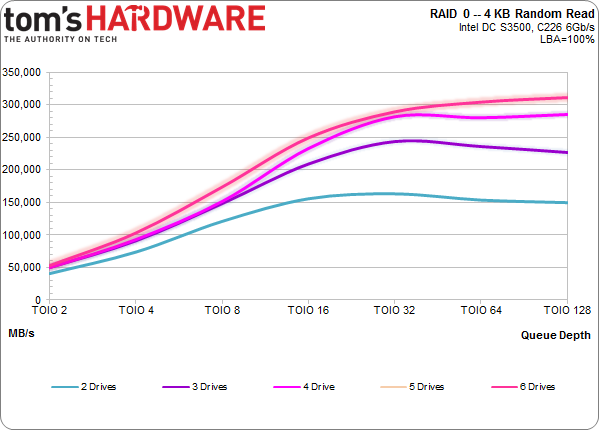

So, if total throughput is limited, diminishing the fantastic sequential performance of these drives, switching to small random accesses should help us get our speed fix, right? Yes and no. As I already mentioned, 100,000 4 KB IOPS is just under 410 MB/s. Theoretically, we should be able to push huge numbers under that vicious bandwidth ceiling. Except, small accesses really tax the host processor. Even achieving such an aggressive load can be difficult, since each I/O creates overhead.


On the previous page, we used one workload generator on each drive. For everything else, we need to even the playing field as much as possible, which takes us to places where choosing two threads to test each RAID configuration yields sub-optimal results. We could use trial and error to figure out which setup maximizes performance with each combination of drives. However, that'd cause our test with four SSDs in RAID 5 to differ from the same number of drives in RAID 0, which isn't what we want to do. So, we need to compromise by finding a setup that works well across the board and stick to it.

Sure enough, we fall short of the potential we saw during our JBOD-based tests, which is what we expected. Two SSDs in RAID 0 deliver more than 150,000 IOPS. From there, scaling narrows quite a bit, and the four-, five-, and six-drive arrays don't improve upon each other at all. But that's not their fault. When we do the math, 300,000 4 KB IOPS converts to more than 1.2 GB/s. Factor in our workload setup and platform limitations, and we're getting about as much performance from these drives as we can hope for.
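The IOPS-to-bandwidth math is easy to check. The sketch below assumes that "4 KB" means 4,096 bytes and that a megabyte is 1,000,000 bytes; the helper name is hypothetical.

```python
BLOCK_BYTES = 4096        # assumption: "4 KB" means 4,096 bytes
BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes

def iops_to_mb_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Convert an I/O rate at a fixed block size into throughput in MB/s."""
    return iops * block_bytes / BYTES_PER_MB

# The two IOPS figures quoted in this section
for iops in (100_000, 300_000):
    print(f"{iops:,} IOPS at 4 KB = {iops_to_mb_per_s(iops):.1f} MB/s")
```

Under those assumptions, 100,000 IOPS works out to 409.6 MB/s and 300,000 IOPS to 1228.8 MB/s, which lines up with the "just under 410 MB/s" and "more than 1.2 GB/s" figures in the text.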

On the left side of the chart, all of the tested configurations are pretty similar. Increasing the number of drives in the array doesn't do a ton. Though, even with two drives, 50,000 I/Os with two outstanding commands isn't a bad result. Perhaps the best part is that, with two drives, you can get almost 100,000 IOPS with four commands outstanding. Compare that to one high-performance desktop-oriented drive that only sees those numbers with a queue depth of 32. The RAID solution just keeps on scaling as the workload gets more intense.

Just like in the graphics world, multiplying hardware doesn't guarantee perfect scaling. JBOD testing helps show that one drive's performance doesn't affect another's. In RAID, however, the slowest member drive is the chain's weak link. If we were to remove an SSD DC S3500 and add a previous-gen product, the end result would be six drives that behave like the older one.

What does that mean when our drives are working together in RAID? Well, not every drive is equally fast all of the time. If the workload is striped across them, one drive may be slightly slower than the rest at any given moment. Latency measurements tell the tale: during a small slice of time, the fastest 1% of I/Os might complete in 20 µs, while at the other end of the spectrum a round-trip operation can take 20,000 µs. Most of the time, average latency is fairly low, so most RAID arrays employing identical SSDs are well-balanced.

This is one reason why consistency matters. If a service depends on the storage subsystem to deliver low-latency performance, big spikes in the time it takes to service I/O can really affect certain applications. Some SSDs are better than others in that regard, which is one reason the SSD DC S3700 reviewed so well. In contrast, the S3500 was created for environments where higher endurance and tight latencies take a backseat to value.
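The weak-link effect can be modeled very simply. The sketch below assumes a request that touches every member of the stripe, so it completes only when the slowest drive does. The per-drive latencies are hypothetical values, apart from the 20,000 µs outlier, which echoes the worst case mentioned above.

```python
def stripe_latency_us(member_latencies_us: list[int]) -> int:
    """A full-stripe request finishes only when its slowest member finishes."""
    return max(member_latencies_us)

# Hypothetical per-drive service times (µs) for one striped request
typical = [60, 62, 58, 61, 59, 60]
with_outlier = [60, 62, 58, 20_000, 59, 60]  # one drive stalls for 20,000 µs

print("typical request:     ", stripe_latency_us(typical), "µs")
print("request with outlier:", stripe_latency_us(with_outlier), "µs")
```

In this simplified model, a single slow drive sets the latency for the whole request, no matter how quickly the other five respond. Smaller requests that land on fewer members would be less exposed.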

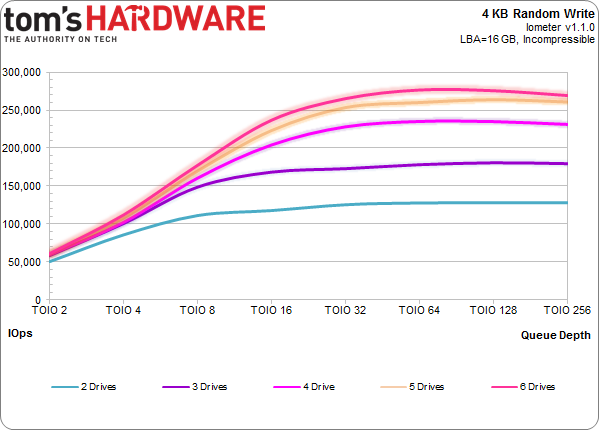

The story is the same for writes. Given six SSDs, you can get up to 276,000 4 KB IOPS. Otherwise, scaling slows way down as you stack on additional drives. As with reads, the performance at lower queue depths is great, and all configurations build momentum until a queue depth of 32, where they all seem to level out. Remember that a SATA drive with NCQ support only has 32 positions in which to store commands.


SteelCity1981 "we settled on Windows 7 though. As of right now, I/O performance doesn't look as good in the latest builds of Windows."

Ha. Good ol Windows 7...

vertexx In your follow-up, it would really be interesting to see Linux Software RAID vs. On-Board vs. RAID controller.

tripleX Wow, some of those graphs are unintelligible. Did anyone even read this article? Surely more would complain if they did.

utomo There is Huge market on Tablet. to Use SSD in near future. the SSD must be cheap to catch this huge market.

klimax "You also have more efficient I/O schedulers (and more options for configuring them)." Unproven assertion. (BTW: Comparison should have been against Server edition - different configuration for schedulers and some other parameters are different too)

As for 8.1, you should have by now full release. (Or you don't have TechNet or other access?)

rwinches " The RAID 5 option facilitates data protection as well, but makes more efficient use of capacity by reserving one drive for parity information."

RAID 5 has distributed parity across all member drives. Doh!