Six SSD DC S3500 Drives And Intel's RST: Performance In RAID, Tested

Intel lent us six SSD DC S3500 drives with its home-brewed 6 Gb/s SATA controller inside. We match them up to the Z87/C226 chipset's six corresponding ports, a handful of software-based RAID modes, and two operating systems to test their performance.

Results: A Second Look At RAID 5

Sequential Performance

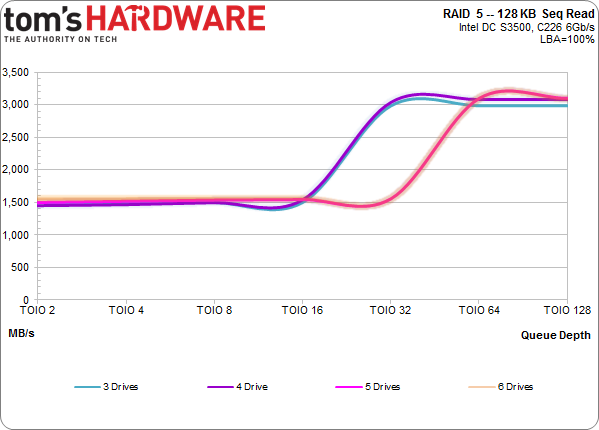

Now that we've illustrated the read-ahead tendencies of Intel's RST, it's easier to put RAID 5's sequential scaling in context. The journey begins at 1.5 GB/s, which is right where we'd expect given the chipset's DMI link. But as the outstanding command count increases, read caching kicks into gear, pushing each array up to 3 GB/s. The size of that boost likely depends on how long each queue depth is measured; a longer benchmark run would probably average down the caching effect somewhat.

It's also interesting that the three- and four-drive arrays start caching and peak earlier, while the five- and six-drive setups hit their ceilings later.
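To put the 1.5 GB/s starting point in rough numerical terms, the sketch below caps the combined throughput of the member drives at a link ceiling. The 1,500 MB/s ceiling is the figure quoted above; the 500 MB/s per-drive rate, the function name, and the assumption that reads scale linearly across all members are illustrative simplifications, not measurements from our testing.

```python
def link_limited_read_mbps(drives, per_drive_mbps=500, link_ceiling_mbps=1500):
    """Estimate uncached sequential read throughput for an array.

    per_drive_mbps is a hypothetical per-SSD rate; link_ceiling_mbps is
    the roughly 1.5 GB/s starting point seen in the chart.
    """
    # The drives can collectively offer drives * per_drive_mbps,
    # but nothing faster than the link ceiling reaches the host.
    return min(drives * per_drive_mbps, link_ceiling_mbps)


for n in (3, 4, 5, 6):
    print(n, "drives:", link_limited_read_mbps(n), "MB/s")
```

Under these assumptions, three drives are already enough to reach the ceiling, so extra drives cannot raise the uncached figure. Anything above it, like the 3 GB/s peaks, would have to come from data that never crosses the link, which is consistent with read caching.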

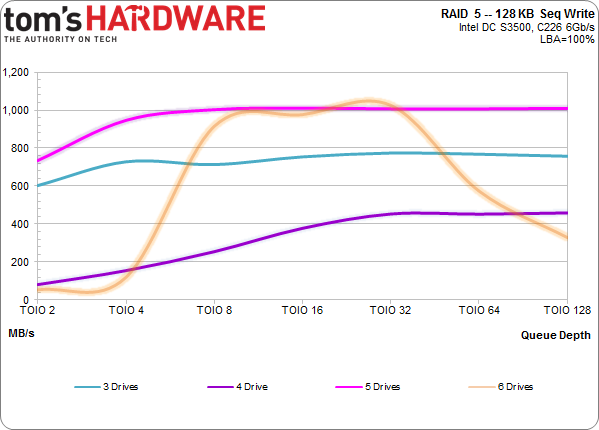

Write performance is traded off for RAID 5's data protection. Nevertheless, Intel's built-in SATA controller can achieve decent results, even if they're not always consistent.

The four- and six-drive setups deliver the strangest results in this benchmark. Notice how much faster three SSDs are than four. Stranger still, the six-drive array sneaks up on five SSDs, though it falls short at low and high command counts.

Random Performance

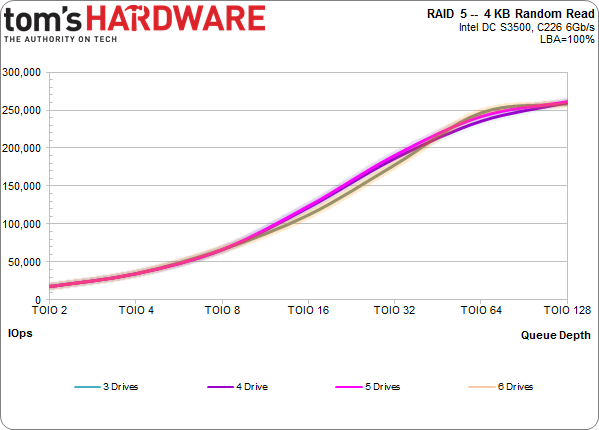

This is what random read performance looks like in a RAID 5 configuration, scaling from an outstanding I/O (OIO) count of 2 through 128.

Nothing like the first two charts, right? With three, four, five, and six drives in RAID 5, every run looks the same. Adding more drives doesn't affect read IOPS in this setup. We could probably experiment with synthetic workloads to amplify any small difference between the arrays, but that's a significant challenge. Since we aren't seeing any scaling, it's possible that we're simply dealing with a bottleneck not necessarily related to throughput or host performance.

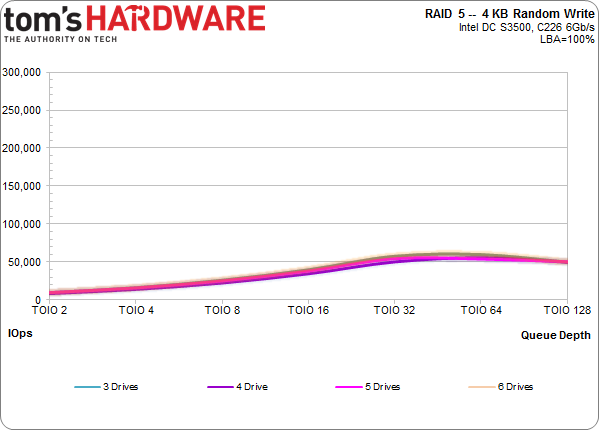

The same situation surfaces with 4 KB writes. From a total outstanding I/O count of 2 to 128, performance starts far lower than a single drive and ends up there, too. Ten thousand IOPS is approximately one-third of an SSD DC S3500's specification at a queue depth of one. Random writes will always present a challenge in RAID 5, though. It's just hard to write small chunks of data to random addresses while managing parity data.
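To show why small random writes are costly with parity in play, here is a minimal sketch of the textbook RAID 5 read-modify-write parity update. It illustrates the general technique only; we don't know how Intel's RST handles this internally, and the helper names (`xor_blocks`, `small_write_parity`) and the tiny four-byte chunks are made up for the example.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR any number of equal-length byte blocks together."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def small_write_parity(old_data: bytes, new_data: bytes, old_parity: bytes) -> bytes:
    """Return the new parity after overwriting one data chunk.

    new_parity = old_parity XOR old_data XOR new_data
    """
    return xor_blocks(old_parity, old_data, new_data)


# A three-drive stripe: two data chunks plus one parity chunk.
d0 = bytes([0x0F] * 4)
d1 = bytes([0xF0] * 4)
parity = xor_blocks(d0, d1)

# Overwriting d0 means reading old d0 and old parity first,
# then writing new d0 and the recomputed parity.
new_d0 = bytes([0xAA] * 4)
new_parity = small_write_parity(d0, new_d0, parity)

# The shortcut matches recomputing parity from the full stripe.
assert new_parity == xor_blocks(new_d0, d1)
```

In this scheme, one small host write turns into two reads and two writes at the drive level, which is a big part of why random write IOPS can land below what a single SSD manages on its own.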

SteelCity1981 "we settled on Windows 7 though. As of right now, I/O performance doesn't look as good in the latest builds of Windows."

Ha. Good ol' Windows 7...

vertexx In your follow-up, it would really be interesting to see Linux Software RAID vs. On-Board vs. RAID controller.

tripleX Wow, some of those graphs are unintelligible. Did anyone even read this article? Surely more would complain if they did.

utomo There is Huge market on Tablet. to Use SSD in near future. the SSD must be cheap to catch this huge market.

klimax "You also have more efficient I/O schedulers (and more options for configuring them)." Unproven assertion. (BTW: Comparison should have been against Server edition - different configuration for schedulers and some other parameters are different too)

As for 8.1, you should have by now full release. (Or you don't have TechNet or other access?)

rwinches "The RAID 5 option facilitates data protection as well, but makes more efficient use of capacity by reserving one drive for parity information."

RAID 5 has distributed parity across all member drives. Doh!