What Is Nvidia DLSS? A Basic Definition

Nvidia's DLSS graphics explained

DLSS stands for deep learning super sampling. It's a type of video rendering technique that looks to boost framerates by rendering frames at a lower resolution than displayed and using deep learning, a type of AI, to upscale the frames so that they look as sharp as expected at the native resolution. For example, with DLSS, a game's frames could be rendered at 1080p resolution, making higher framerates more attainable, then upscaled and output at 4K resolution, bringing sharper image quality over 1080p.

DLSS is an alternative to other rendering techniques, such as temporal anti-aliasing (TAA), a post-processing algorithm. It requires an RTX graphics card and game support (see the DLSS Games section below). Games that run at lower frame rates or higher resolutions benefit the most from DLSS.

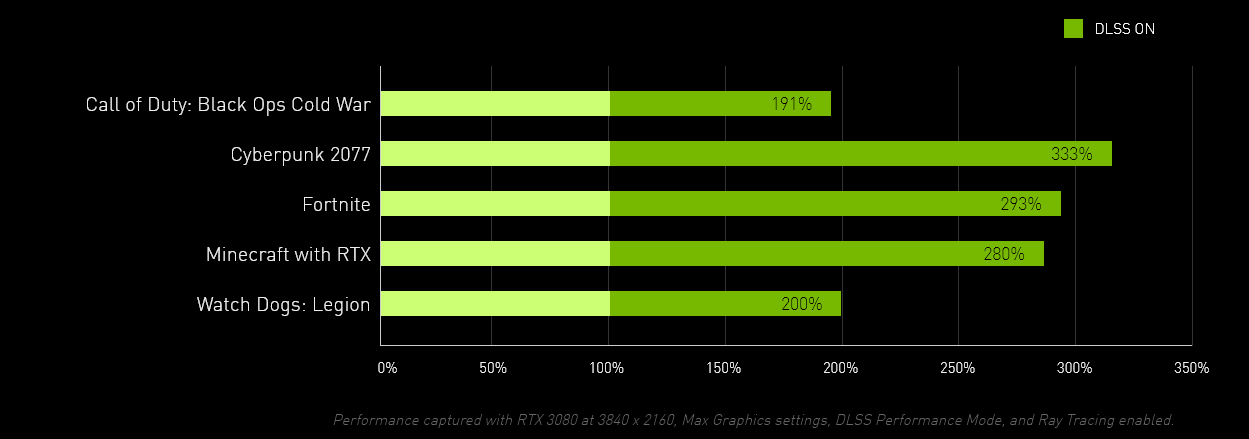

According to Nvidia, DLSS 2.0, the most common version, can boost framerates by 200-300% (see the DLSS 2.0 section below for more). The original DLSS is in far fewer games and we've found it to be less effective, but Nvidia says it can boost framerates "by over 70%." DLSS can really come in handy, even with the best graphics cards, when gaming at a high resolution or with ray tracing, both of which can cause framerates to drop substantially compared to 1080p.

In our experience, it's difficult to spot the difference between a game rendered at native 4K and one rendered in 1080p and upscaled to 4K via DLSS 2.0 (that's the 'performance' mode with 4x upscaling). In motion, it's almost impossible to tell the difference between native 4K and DLSS 2.0 in quality mode (i.e., 1440p upscaled to 4K), though the performance gains aren't as great.

For a comparison of how DLSS impacts game performance with ray tracing, see our article AMD vs Nvidia: Which GPUs Are Best for Ray Tracing? In that testing, we only used DLSS 2.0 in quality mode (2x upscaling), and the gains are still quite large in the more demanding games.

When DLSS was first released, Nvidia claimed it showed more temporal stability and image clarity than TAA. While that might be technically true, it varies depending on the game, and we much prefer DLSS 2.0 over DLSS 1.0. An Nvidia rep confirmed to us that because DLSS requires a fixed amount of GPU time per frame to run the deep learning neural network, games running at high framerates or low resolutions may not have seen a performance boost with DLSS 1.0.
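The fixed per-frame cost is why the benefit shrinks as framerates rise. Here is a rough sketch of that trade-off, assuming render time scales linearly with pixel count (real games only approximate this). The millisecond figures are made up for illustration and do not come from Nvidia.

```python
def native_fps(native_frame_ms):
    """Framerate when rendering every pixel at the output resolution."""
    return 1000.0 / native_frame_ms


def fps_with_upscaling(native_frame_ms, pixel_fraction, upscale_cost_ms):
    """Estimate fps when rendering fewer pixels and paying a fixed upscale cost.

    Assumption: render time is proportional to the number of pixels drawn.
    """
    frame_ms = native_frame_ms * pixel_fraction + upscale_cost_ms
    return 1000.0 / frame_ms


# Hypothetical numbers: render half the pixels, pay 3 ms per frame to upscale.
slow_game = fps_with_upscaling(native_frame_ms=40.0, pixel_fraction=0.5, upscale_cost_ms=3.0)
fast_game = fps_with_upscaling(native_frame_ms=5.0, pixel_fraction=0.5, upscale_cost_ms=3.0)

print(f"slow game: {native_fps(40.0):.0f} fps native, {slow_game:.0f} fps upscaled")
print(f"fast game: {native_fps(5.0):.0f} fps native, {fast_game:.0f} fps upscaled")
```

With these invented numbers, the slow game gains a lot while the already-fast game ends up slower. The fixed cost becomes larger than the rendering time it saves.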

Below is a video from Nvidia (so take it with a grain of salt), comparing Cyberpunk 2077 gameplay at both 1440p resolution and 4K with DLSS 2.0 on versus DLSS 2.0 off.

DLSS is only available with RTX graphics cards, but AMD today released its own version of the technology, AMD FidelityFX Super Resolution (AMD FSR). FSR is GPU agnostic, meaning it will work on Nvidia and even Intel GPUs that have the necessary hardware features. At least 10 game studios are expected to adopt FSR in their games and engines this year. FSR is also available on the PlayStation 5 and will be available on the Xbox Series X and S.

DLSS Games

To use DLSS, you need an RTX graphics card and a game or other application that supports the feature. You can find a full list of games announced to have DLSS as of the end of July via Nvidia and below.

- Amid Evil

- Anthem

- Aron's Adventure

- Battlefield V

- Bright Memory

- Call of Duty: Black Ops Cold War

- Call of Duty: Modern Warfare

- Call of Duty: Warzone

- Chernobylite

- Control

- CRSED: F.O.A.D. (Formerly Cuisine Royale)

- Crysis Remastered

- Cyberpunk 2077

- Death Stranding

- Deliver Us the Moon

- Doom Eternal

- Dying: 1983

- Edge of Eternity

- Enlisted

- Everspace 2

- F1 2020

- Final Fantasy XV

- Fortnite

- Ghostrunner

- Gu Jian Qi Tan Online

- Icarus

- Into the Radius VR

- Iron Conflict

- Justice

- LEGO Builder's Journey

- Marvel's Avengers

- MechWarrior 5: Mercenaries

- Metro Exodus

- Metro Exodus PC Enhanced Edition

- Minecraft With RTX For Windows 10

- Monster Hunter: World

- Moonlight Blade

- Mortal Shell

- Mount & Blade II: Bannerlord

- Necromunda: Hired Gun

- Nine to Five

- Naraka: Bladepoint

- No Man's Sky

- Nioh 2 - The Complete Edition

- Outriders

- Pumpkin Jack

- Rainbow Six Siege

- Ready or Not

- Red Dead Redemption 2

- Redout: Space Assault

- Rust

- Scavengers

- Shadow of the Tomb Raider

- Supraland

- System Shock

- The Ascent

- The Fabled Woods

- The Medium

- The Persistence

- War Thunder

- Watch Dogs: Legion

- Wolfenstein: Youngblood

- Wrench

- Xuan-Yuan Sword VII

Note that Unreal Engine and Unity Engine both have support for DLSS 2.0, meaning games using those engines should be able to easily implement DLSS. Nvidia also announced that Vulkan-based Linux games will be able to support DLSS on June 23, thanks to a Linux graphics driver adding support for games using Proton.

There are also other types of apps besides games, such as SheenCity Mars, an architectural visualization, that use DLSS.

DLSS 2.0 and DLSS 2.1

In March 2020, Nvidia announced DLSS 2.0, an updated version of DLSS that uses a new deep learning neural network that's supposed to be up to 2 times faster than DLSS 1.0 because it leverages RTX cards' AI processors, called Tensor Cores, more efficiently. This faster network also allows the company to remove any restrictions on supported GPUs, settings and resolutions.

DLSS 2.0 is also supposed to offer better image quality while promising up to 2-3 times the framerate (in 4K Performance Mode) compared to the predecessor's up to around 70% fps boost. Using DLSS 2.0's 4K Performance Mode, Nvidia claims an RTX 2060 graphics card can run games at max settings at a playable framerate. Again, a game has to support DLSS 2.0, and you need an RTX graphics card to reap the benefits.

The original DLSS was apparently limited to about 2x upscaling (Nvidia hasn't confirmed this directly), and many games limited how it could be used. For example, in Battlefield V, if you have an RTX 2080 Ti or faster GPU, you can only enable DLSS at 4K — not at 1080p or 1440p. That's because the overhead of DLSS 1.0 often outweighed any potential benefit at lower resolutions and high framerates.

In September 2020, Nvidia released DLSS 2.1, which added an Ultra Performance Mode for super high-res gaming (9x upscaling), support for VR games, and dynamic resolution. Dynamic resolution, an Nvidia rep told Tom's Hardware, means that, "The input buffer can change dimensions from frame to frame while the output size remains fixed. If the rendering engine supports dynamic resolution, DLSS can be used to perform the required upscale to the display resolution." Note that you'll often hear people referring to both the original DLSS 2.0 and the 2.1 update as "DLSS 2.0."
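To make the dynamic resolution idea concrete, here is a minimal sketch of an engine choosing a new input buffer size each frame while the output size stays fixed. The controller logic, step size, limits and frame times are all hypothetical. They only illustrate the kind of varying input DLSS 2.1 is described as accepting, not how any particular engine works.

```python
OUTPUT_SIZE = (3840, 2160)  # fixed display resolution


def next_render_scale(scale, last_frame_ms, target_ms, lo=0.5, hi=1.0, step=0.05):
    """Nudge the per-axis render scale toward the frame-time target."""
    if last_frame_ms > target_ms:
        scale -= step  # too slow: render fewer pixels next frame
    elif last_frame_ms < 0.9 * target_ms:
        scale += step  # comfortable headroom: render more pixels
    return min(hi, max(lo, scale))


def input_buffer_size(scale):
    """Dimensions of the low-resolution frame handed to the upscaler."""
    return round(OUTPUT_SIZE[0] * scale), round(OUTPUT_SIZE[1] * scale)


scale = 0.75
for frame_ms in [18.0, 19.5, 17.0, 14.0, 16.0]:  # hypothetical measurements
    scale = next_render_scale(scale, frame_ms, target_ms=16.7)
    print(input_buffer_size(scale), "->", OUTPUT_SIZE)
```

The input dimensions change from line to line, while the right-hand side never does.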

DLSS 2.0 Selectable Modes

One of the most notable changes between the original DLSS and the fancy DLSS 2.0 version is the introduction of selectable image quality modes: Quality, Balanced, or Performance — and Ultra Performance with 2.1. This affects the game's rendering resolution, with improved performance but lower image quality as you go through that list.

With 2.0, Performance mode offered the biggest jump, upscaling games from 1080p to 4K. That's 4x upscaling (2x width and 2x height). Balanced mode uses 3x upscaling, and Quality mode uses 2x upscaling. The Ultra Performance mode introduced with DLSS 2.1 uses 9x upscaling and is mostly intended for gaming at 8K resolution (7680 x 4320) with the RTX 3090. While it can technically be used at lower target resolutions, the upscaling artifacts are very noticeable, even at 4K (720p upscaled). Basically, DLSS looks better as it gets more pixels to work with, so while 720p to 1080p looks good, rendering at 1080p or higher resolutions will achieve a better end result.
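The relationship between mode and render resolution can be worked out with a little arithmetic. This sketch uses the pixel-count factors quoted above, which are rounded. The Quality result therefore will not land exactly on the 1440p figure mentioned earlier for 4K output, and real games may pick slightly different internal resolutions.

```python
import math

# Pixel-count upscaling factors as quoted in the text (approximate).
UPSCALE_FACTOR = {
    "Quality": 2,
    "Balanced": 3,
    "Performance": 4,
    "Ultra Performance": 9,
}


def render_resolution(output_width, output_height, mode):
    """Return the (width, height) the game renders at before upscaling."""
    # The factor applies to total pixels, so each axis shrinks by its square root.
    per_axis = math.sqrt(UPSCALE_FACTOR[mode])
    return round(output_width / per_axis), round(output_height / per_axis)


print(render_resolution(3840, 2160, "Performance"))        # (1920, 1080)
print(render_resolution(7680, 4320, "Ultra Performance"))  # (2560, 1440)
print(render_resolution(3840, 2160, "Ultra Performance"))  # (1280, 720)
```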

How does all of that affect performance and quality compared to the original DLSS? For an idea, we can turn to Control, which originally had DLSS 1.0 and then received DLSS 2.0 support when the new version was released. (Remember, the following image comes from Nvidia, so it'd be wise to take it with a grain of salt too.)

One of the improvements DLSS 2.0 is supposed to bring is strong image quality in areas with moving objects. The updated rendering in the above fan image looks far better than the image using DLSS 1.0, which actually looked noticeably worse than having DLSS off.

DLSS 2.0 is also supposed to provide an improvement over the original DLSS in areas of the image where details are more subtle.

Nvidia promised that DLSS 2.0 would result in greater game adoption. That's because the original DLSS required training the AI network for every new game that needed DLSS support. DLSS 2.0 uses a generalized network, meaning it works across all games and is trained by using "non-game-specific content," as per Nvidia.

For a game to support the original DLSS, the developer had to implement it, and then the AI network had to be trained specifically for that game. With DLSS 2.0, that latter step is eliminated. The game developer still has to implement DLSS 2.0, but it should take a lot less work, since it's a general AI network. It also means updates to the DLSS engine (in the drivers) can improve quality for existing games. Unreal Engine 4 and Unreal Engine 5 have DLSS 2.0 support, and Unity will add it via its 2021.2 update this year. That makes it trivial for games based on those engines to enable the feature.

How Does DLSS Work?

Both the original DLSS and DLSS 2.0 work with Nvidia's NGX supercomputer for training of their respective AI networks, as well as RTX cards' Tensor Cores, which are used for AI-based rendering.

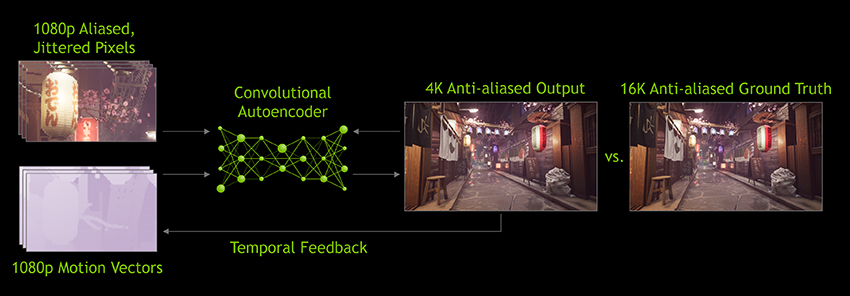

For a game to get DLSS 1.0 support, Nvidia first had to use NGX to train the DLSS neural network, a type of AI network called a convolutional autoencoder. It started by showing the network thousands of screen captures from the game, each with 64x supersample anti-aliasing. Nvidia also showed the neural network images that didn't use anti-aliasing. The network then compared the shots to learn how to "approximate the quality" of the 64x supersample anti-aliased image using lower quality source frames. The goal was higher image quality without hurting the framerate too much.

The AI network would then repeat this process, tweaking its algorithms along the way so that it could eventually come close to matching the 64x quality with the base quality images via inference. The end result was "anti-aliasing approaching the quality of [64x Super Sampled], whilst avoiding the issues associated with TAA, such as screen-wide blurring, motion-based blur, ghosting and artifacting on transparencies," Nvidia explained in 2018.

DLSS also uses what Nvidia calls "temporal feedback techniques" to ensure sharp detail in the game's images and "improved stability from frame to frame." Temporal feedback is the process of applying motion vectors, which describe the directions objects in the image are moving in across frames, to the native/higher resolution output, so the appearance of the next frame can be estimated in advance.

DLSS 2.0 gets its speed boost through its updated AI network that uses Tensor Cores more efficiently, allowing for better framerates and the elimination of limitations on GPUs, settings and resolutions. Team Green also says DLSS 2.0 renders just 25-50% of the pixels (and only 11% of the pixels for DLSS 2.1 Ultra Performance mode), and uses new temporal feedback techniques for even sharper details and better stability over the original DLSS.

Nvidia's NGX supercomputer still has to train the DLSS 2.0 network, which is also a convolutional autoencoder. Two things go into it, as per Nvidia: "low resolution, aliased images rendered by the game engine" and "low resolution, motion vectors from the same images — also generated by the game engine."

DLSS 2.0 uses those motion vectors for temporal feedback, which the convolutional autoencoder (or DLSS 2.0 network) performs by taking "the low resolution current frame and the high resolution previous frame to determine on a pixel-by-pixel basis how to generate a higher quality current frame," as Nvidia puts it.
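The general idea of combining a low-resolution current frame with a motion-compensated previous output can be sketched without any AI at all. The code below is a hand-written temporal blend, not Nvidia's network. A fixed `history_weight` stands in for the learned per-pixel decision, and for simplicity the motion vectors are assumed to be given at output resolution in whole pixels. All function names and data are hypothetical.

```python
def upsample_nearest(low, factor):
    """Nearest-neighbour upsample of a 2D grid (list of rows)."""
    return [[low[y // factor][x // factor]
             for x in range(len(low[0]) * factor)]
            for y in range(len(low) * factor)]


def reproject(prev_high, motion):
    """Warp the previous output so each pixel lines up with the current frame.

    motion[y][x] is (dx, dy): how far the content now at (x, y) moved since
    the previous frame. Lookups that fall off-screen return None.
    """
    height, width = len(prev_high), len(prev_high[0])
    warped = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = motion[y][x]
            sx, sy = x - dx, y - dy  # where this content was last frame
            inside = 0 <= sx < width and 0 <= sy < height
            row.append(prev_high[sy][sx] if inside else None)
        warped.append(row)
    return warped


def temporal_blend(current_low, prev_high, motion, factor=2, history_weight=0.8):
    """Blend upsampled current frame with reprojected history."""
    current = upsample_nearest(current_low, factor)
    history = reproject(prev_high, motion)
    return [[cur if old is None else history_weight * old + (1 - history_weight) * cur
             for cur, old in zip(cur_row, old_row)]
            for cur_row, old_row in zip(current, history)]


# Static 2x2 scene upscaled to 4x4 with zero motion everywhere (made-up data).
low = [[0.0, 1.0], [0.0, 1.0]]
previous = upsample_nearest(low, 2)
still = [[(0, 0)] * 4 for _ in range(4)]
result = temporal_blend(low, previous, still)
```

Where history is unavailable (content that just came on screen), the sketch falls back to the current frame alone, which is one reason temporal methods can look softer on newly revealed areas.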

The training process for the DLSS 2.0 network also includes comparing the image output to an "ultra-high-quality" reference image rendered offline in 16K resolution (15360 x 8640). Differences between the images are sent to the AI network for learning and improvements. Nvidia's supercomputer repeatedly runs this process, on potentially tens of thousands or even millions of reference images over time, yielding a trained AI network that can reliably produce images with satisfactory quality and resolution.
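The loop described above (produce an output, compare it with a reference, feed the difference back) is ordinary supervised training. As a toy illustration only, the sketch below fits a two-weight linear "upscaler" that predicts an in-between pixel from its two neighbours. It is a stand-in for the real convolutional autoencoder, which is far larger and trained on images. The data, learning rate and function name are all assumptions made for the example.

```python
def train_toy_upscaler(samples, epochs=500, learning_rate=0.1):
    """Fit weights (w_left, w_right) that predict an in-between pixel.

    samples: list of ((left, right), reference), where `reference` plays the
    role of the value taken from a higher-quality image.
    """
    w_left, w_right = 0.0, 0.0
    for _ in range(epochs):
        for (left, right), reference in samples:
            output = w_left * left + w_right * right  # the model's guess
            error = output - reference                # difference vs. reference
            # Gradient of the squared error, fed back to adjust the weights.
            w_left -= learning_rate * 2 * error * left
            w_right -= learning_rate * 2 * error * right
    return w_left, w_right


# Hypothetical training pairs: the reference is the true midpoint value.
pairs = [(0.0, 1.0), (1.0, 0.0), (0.2, 0.8), (0.9, 0.4), (0.5, 0.5), (0.3, 0.7)]
samples = [((a, b), (a + b) / 2) for a, b in pairs]

w_left, w_right = train_toy_upscaler(samples)
print(round(w_left, 3), round(w_right, 3))
```

After enough passes the weights settle near an even average of the two neighbours, which is what the reference data implies. The real network learns a far richer mapping in the same compare-and-adjust fashion.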

With both the original DLSS and DLSS 2.0, after the AI network's training for the new game is complete, the NGX supercomputer sends the AI models to the Nvidia RTX graphics card through GeForce Game Ready drivers. From there, your GPU can use its Tensor Cores' AI power to run DLSS in real time alongside the supported game.

Because DLSS 2.0 is a general approach rather than being trained on a single game, the quality of the DLSS 2.0 algorithm can improve over time without a game needing to include updates from Nvidia. The updates reside in the drivers and can impact all games that utilize DLSS 2.0.

This article is part of the Tom's Hardware Glossary.

Scharon Harding has over a decade of experience reporting on technology with a special affinity for gaming peripherals (especially monitors), laptops, and virtual reality. Previously, she covered business technology, including hardware, software, cyber security, cloud, and other IT happenings, at Channelnomics, with bylines at CRN UK.

-Fran-

Out of all post-processing techniques out there, I think this one is the most overengineered yet, haha.

I'm not saying that as a bad thing either. For all the crap I give to DLSS, I do find it quite cool technologically speaking. Even if I don't really like all the buzzword marketing spiel around it!

Still, I am on camp "just lower the resolution and sliders to the right". Or just do subsampling (if the engine allows it) with regular MSAA. Plenty of engines out there of less known games implement it and it does improve things quite decently. I believe there's also some interesting techniques used by VR games now that I can't quite remember, but are similar to those.

Cheers!

escksu

> Yuka said: Out of all post-processing techniques out there, I think this one is the most overengineered yet, haha.
>
> I'm not saying that as a bad thing either. For all the crap I give to DLSS, I do find it quite cool technologically speaking. Even if I don't really like all the buzzword marketing spiel around it!
>
> Still, I am on camp "just lower the resolution and sliders to the right". Or just do subsampling (if the engine allows it) with regular MSAA. Plenty of engines out there of less known games implement it and it does improve things quite decently. I believe there's also some interesting techniques used by VR games now that I can't quite remember, but are similar to those.
>
> Cheers!

Running 1080p with MSAA will not give you the same image quality compared to 4K with DLSS and the performance will be still worse, esp. with ray tracing.

hotaru.hino

> Yuka said: Out of all post-processing techniques out there, I think this one is the most overengineered yet, haha.
>
> I'm not saying that as a bad thing either. For all the crap I give to DLSS, I do find it quite cool technologically speaking. Even if I don't really like all the buzzword marketing spiel around it!

The thing that DLSS does that makes it stand out is that it reconstructs what the image would be at the desired output resolution. This is why in comparison screenshots DLSS rendered at 1080p then upscaled to say 4K can look as good as 4K itself. Non AI based upscaling algorithms can't recreate the same level of detail, especially when they're working with 50% of the pixels. I think Digital Foundry in their DLSS 2.0 video shows how much DLSS can reconstruct the image vs simple upscaling.

> Yuka said: Still, I am on camp "just lower the resolution and sliders to the right". Or just do subsampling (if the engine allows it) with regular MSAA. Plenty of engines out there of less known games implement it and it does improve things quite decently. I believe there's also some interesting techniques used by VR games now that I can't quite remember, but are similar to those.

The downside to this is a huge number of games in the past 10-15 years used deferred shading, which doesn't work well with MSAA. I'm pretty sure even forcing it in the driver control panel doesn't actually do anything if the game uses deferred shading. Hence why there was a push for things like FXAA and MLAA since they work on the final output, on top of being cheaper than MSAA and "just as good". Also as mentioned, MSAA is a pretty major performance hit still, so at some point you may as well have rendered at the native resolution.

For VR you might be thinking of a combination of foveated rendering and variable rate shading (VRS). Foveated rendering doesn't really work outside of VR and VRS has been implemented in some games, but VRS can be hit or miss depending on which tier is used and it may not even provide that much of a performance benefit.

coolitic

> escksu said: Running 1080p with MSAA will not give you the same image quality compared to 4k with DLSS and the performance will be still worse, esp. with ray tracing.

DLSS looks worse than no AA imo. No matter how you slice it, you just can't improve image quality reliably w/o adding more samples, as w/ MSAA and SSAA. Despite being meant to improve over it, DLSS must inevitably suffer the same shortfalls of TXAA as a simple fact of it being engineered that way.

hotaru.hino

> coolitic said: DLSS looks worse than no AA imo. No matter how you slice it, you just can't improve image quality reliably w/o adding more samples, as w/ MSAA and SSAA. Despite being meant to improve over it, DLSS must inevitably suffer the same shortfalls of TXAA as a simple fact of it being engineered that way.

If we're talking about a single image in time, then sure, DLSS won't get the same results reliably across every possible scenario. But I'd argue that instantaneous image quality isn't really as important as image quality over time. Human vision revolves around taking as many samples per second, though the kicker is we can see "high resolution" because the receptors in our eyes wiggle slightly which gives us super-resolution imaging.

I'd argue the aim NVIDIA has for DLSS is to mimic this, but using AI to better reconstruct the details rather than having a fixed algorithm that will more likely than not fail to reconstruct the right thing.

EDIT: Also I think developers misused what TXAA was supposed to resolve. It isn't so much a spatial anti-aliasing method, so it's not exclusively about removing jaggies. It's meant to combat shimmering effects because the thing covering a pixel sample point keeps moving in and out of it. If the image is perfectly still, TXAA does absolutely nothing.

10tacle

I first started using DLSS 1.0 when Project Cars 1 came out back in 2015. It was a heavy hitter on GPU frame rates with ultra quality bars maxed out, especially at 1440p and up resolutions. Tied in with some in-game AA settings, I ran DLSS through the Nvidia control app and found a sweet spot between better graphics and high frame rates. I to this day do not understand why it was/is still admonished by many.

coolitic

> hotaru.hino said: If we're talking about a single image in time, then sure, DLSS won't get the same results reliably across every possible scenario. But I'd argue that instantaneous image quality isn't really as important as image quality over time. Human vision revolves around taking as many samples per second, though the kicker is we can see "high resolution" because the receptors in our eyes wiggle slightly which gives us super-resolution imaging.
>
> I'd argue the aim NVIDIA has for DLSS is to mimic this, but using AI to better reconstruct the details rather than having a fixed algorithm that will more likely than not fail to reconstruct the right thing.
>
> EDIT: Also I think developers misused what TXAA was supposed to resolve. It isn't so much a spatial anti-aliasing method, so it's not exclusively about removing jaggies. It's meant to combat shimmering effects because the thing covering a pixel sample point keeps moving in and out of it. If the image is perfectly still, TXAA does absolutely nothing.

No, I was specifically talking about over time. TXAA may "solve" shimmering, but it adds unfixable blur that DLSS tries to reduce, but ultimately can never fix as it is inherent to the technology. So, in other words, TXAA has the same problems and supposed benefits that non MSAA/SSAA techniques have in the spatial dimension, but also in the temporal dimension.

husker

Here is my question: In a racing game my opponent is so far behind me (okay, usually in front of me) he is just 2 pixels in size when viewed in 4K. Now I drop to 1080 and the car no longer appears at all because lowering the resolution means lowering (i.e. losing) information about the visual environment. So now we turn on DLSS 2.0 and is that "lost" information somehow restored and that 2 pixels somehow appears on my screen? The article seems to suggest that that is possible but I'm not so sure. This may seem like a silly question, because who cares about a 2 pixel detail; but it was just an example for clarity.

-Fran-

> husker said: Here is my question: In a racing game my opponent is so far behind me (okay, usually in front of me) he is just 2 pixels in size when viewed in 4K. Now I drop to 1080 and the car no longer appears at all because lowering the resolution means lowering (i.e. losing) information about the visual environment. So now we turn on DLSS 2.0 and is that "lost" information somehow restored and that 2 pixels somehow appears on my screen? The article seems to suggest that that is possible but I'm not so sure. This may seem like a silly question, because who cares about a 2 pixel detail; but it was just an example for clarity.

At the end of the day, DLSS is just upsampling, so it will work with the lower resolution and then, the algorithm that makes it tick, will make "inference" (which is the marketing side of "deep learning") based on multiple frames to try and reconstruct a new, bigger, frame.

So, if in the source and using your example, the car is never in the rearview mirror, then DLSS won't have any information to extrapolate from in order to recreate the new frame with the car in it.

EDIT: This also brings an interesting question for border-case scenarios. So if a few frames have the car and others don't? Will it draw the car in the resulting frame or not? Will it be accurate to the real equivalent of the native resolution at all? For high paced action this may be important, but it may happen so fast that it could be a non-issue, but interesting to think anyway.

Regards.

hotaru.hino

> Yuka said: EDIT: This also brings an interesting question for border-case scenarios. So if a few frames have the car and others don't? Will it draw the car in the resulting frame or not? Will it be accurate to the real equivalent of the native resolution at all? For high paced action this may be important, but it may happen so fast that it could be a non-issue, but interesting to think anyway.

I would say it depends on how much history of previous frames the system keeps. If it's say a 5-frame history and the car goes in and out every 5 frames, then it'd probably pop in and out. But if it's popping in and out every other frame or it's missing in one frame in a random frame, then it'll probably keep it.

However I would argue that humans work better with more information over time than with a single unit of information in a fraction of a second. I don't think anyone, even at the top tier level, is going to notice or care about something appearing for just a frame when they're already running at 240 FPS or whatever. It also depends on the situation as well. For racing, split second decisions are made when the cars are right next to each other, not when the car is a pixel on the rear view mirror.