Intel Xeon E5-2600 V3 Review: Haswell-EP Redefines Fast

How We Tested

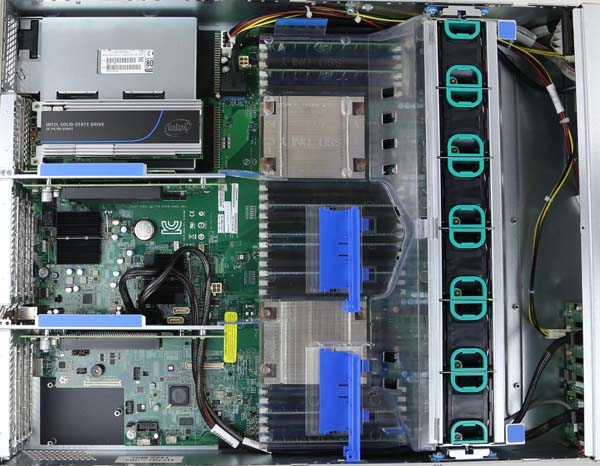

Today's tests involve typical 1U and 2U server platforms. Supermicro sent along a new 1U SuperServer configured with two Intel Xeon E5-2690 v3 processors and 16 x 8 GB DDR4-2133 DIMMs from Samsung. We had a similar 1U Supermicro platform and pairs of Xeon E5-2690 v1 and v2 processors for a direct comparison. The Xeon E5-2690s are generally considered the high end of what ultimately becomes mainstream. Companies like Amazon, for example, use the E5-2670 v1 and v2 extensively in their AWS EC2 compute platforms. The -2690 generally offers the same core count as the -2670, just at a higher clock rate.

Intel also sent along a 2U "Wildcat Pass" server platform configured with two Xeon E5-2699 v3 samples, 8 x 16 GB registered DDR4 modules (one DIMM per channel), and two SSD DC S3500 drives. The E5-2699 v3 is a massive processor. It wields a full 18 cores capable of running 36 threads through Hyper-Threading. Its 45 MB of shared L3 cache works out to 2.5 MB per core, and the whole package fits within a 145 W TDP.
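The per-core and per-server figures above follow from simple arithmetic. A minimal Python sketch, using only the specifications quoted for the E5-2699 v3 and assuming Hyper-Threading exposes two logical threads per core:

```python
# Per-socket figures quoted for the Xeon E5-2699 v3.
CORES = 18
THREADS_PER_CORE = 2   # assumption: Hyper-Threading exposes two logical threads per core
L3_CACHE_MB = 45
SOCKETS = 2            # the Wildcat Pass system carries two processors


def per_core_l3(cache_mb: float, cores: int) -> float:
    """Shared L3 capacity divided evenly across the physical cores."""
    return cache_mb / cores


threads_per_socket = CORES * THREADS_PER_CORE

print(f"L3 per core: {per_core_l3(L3_CACHE_MB, CORES)} MB")              # 2.5 MB
print(f"Threads per socket: {threads_per_socket}")                       # 36
print(f"Logical CPUs in the server: {threads_per_socket * SOCKETS}")     # 72
```

The L3 is shared, so "2.5 MB per core" is an average share rather than a private allocation.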

Naturally, this is going to represent a lower-volume, high-dollar server. But it's going to illustrate the full potential of Haswell-EP, too. We're using the Wildcat Pass server as our control for Intel's newest architecture.

Meanwhile, a Lenovo RD640 2U server serves as our control for Sandy Bridge-EP and Ivy Bridge-EP. It uses 8 x 16 GB of registered DDR3 memory, totaling 128 GB. We dropped the same pair of SSD DC S3500s in there, too.

As we make our comparisons, keep a few points in mind. First, at the time of testing, DDR4 RDIMM pricing was absolutely obscene, with street prices several times higher per gigabyte than DDR3. This will come down over time as manufacturing ramps up, but the prohibitive expense kept us from configuring the servers with more than 128 GB.
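All three test servers land at the same 128 GB, but they populate their memory channels differently. The sketch below checks totals and DIMMs per channel, assuming four memory channels per socket on every platform (consistent with the one-DIMM-per-channel note for the eight-DIMM Wildcat Pass system). The `MemoryConfig` class and `CHANNELS_PER_SOCKET` constant are illustrative only, not part of any vendor tool.

```python
from dataclasses import dataclass

CHANNELS_PER_SOCKET = 4  # assumption: quad-channel memory controllers on all three platforms
SOCKETS = 2


@dataclass
class MemoryConfig:
    name: str
    dimm_count: int
    dimm_gb: int

    @property
    def total_gb(self) -> int:
        return self.dimm_count * self.dimm_gb

    @property
    def dimms_per_channel(self) -> float:
        # DIMMs spread evenly across every channel on both sockets.
        return self.dimm_count / (SOCKETS * CHANNELS_PER_SOCKET)


CONFIGS = [
    MemoryConfig("Supermicro 1U (E5-2690 v3), DDR4-2133", 16, 8),
    MemoryConfig("Intel Wildcat Pass 2U (E5-2699 v3), DDR4 RDIMM", 8, 16),
    MemoryConfig("Lenovo RD640 2U (Sandy/Ivy Bridge-EP), DDR3 RDIMM", 8, 16),
]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.total_gb} GB, {cfg.dimms_per_channel:g} DIMM(s) per channel")
```

Under this assumption, the Supermicro system runs two DIMMs per channel while the other two run one.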

We are focusing today's review on processor performance and power consumption. As a result, we are using the two 240 GB SSD DC S3500s in a RAID 1 array. We did have a stack of trusty SanDisk Lightning 400 GB SLC SSDs available, but neither of our test platforms came with SAS connectivity. Although plenty of add-in controllers would have done the job, the market is clearly shifting away from such configurations. Sticking with SATA-based SSDs kept the storage subsystem's power consumption relatively low, while also mirroring a fairly common arrangement in servers that rely on shared network storage.

Bear in mind, too, that we're using 1U and 2U enclosures, each with a single server inside. The Xeon E5 series is often found in high-density configurations with multiple nodes per 1U, 2U, or 4U chassis. For instance, the venerable Dell C6100, based on Nehalem-EP and Westmere-EP, was extremely popular with large Web 2.0 outfits like Facebook and Twitter. Many of those platforms have since been replaced by Open Compute designs, but we expect non-traditional designs to be popular with the E5-2600 v3 generation as well, especially given its power characteristics.


CaptainTom: Wonder how long it will be until 18-core CPUs are utilized well in games... Maybe 2018 or 2020?

dovah-chan (replying to CaptainTom):

Actually, we should be trying to move away from traditional serial-style processing and toward parallel processing. Each core can handle only one task at a time and can only use its own resources.

This is unlike a GPU, where many processors share the same resources and perform multiple tasks at the same time. The problem is that CPUs don't support this type of architecture at all, and Nvidia is looking for people to learn to program for parallel architectures.

But this lineup of CPUs is clearly a marvel of engineering and hard work. Glad to see the server industry will truly start to benefit from the low power and finely tuned abilities of Haswell, along with the recently introduced DDR4, which is optimized for low power usage as well. This, combined with flash-based storage (aka SSDs), which also draws less power than the average HDD, will slash server power bills and save companies literally billions of dollars. Technology is amazing, isn't it?

2Be_or_Not2Be: There is still a lot in games that doesn't translate well into parallel processing. A lot of gaming action only happens as a direct result of the user's input, and it usually triggers items that depend on the results of another item. So parallel processing doesn't help much there; single-threaded performance helps more.

However, with multiple cores, we can now have better AI and other "off-screen" items that don't always depend on the user's direct input. There's still a lot of work to be done there, though.
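The point about dependent game logic is usually formalized as Amdahl's law: if only a fraction p of the work can run in parallel, n cores speed it up by at most 1 / ((1 - p) + p / n). A minimal Python sketch; the p values below are illustrative assumptions, not measurements of any game.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical parallel fractions for a game's per-frame work.
for p in (0.5, 0.75, 0.9, 0.95):
    print(f"p = {p:.2f}: 4 cores -> {amdahl_speedup(p, 4):.2f}x, "
          f"18 cores -> {amdahl_speedup(p, 18):.2f}x")
```

However many cores are added, the speedup can never exceed 1 / (1 - p), which is why the serial, input-dependent part of a game dominates.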

2Be_or_Not2Be: The new Haswell-EP Xeons are definitely going to help with virtualization. However, I see the high price and current scarcity of DDR4 as a bit of a handicap to fast adoption, especially since memory is one of the major limiting factors in how many servers you can virtualize.

I think all of the major server vendors are going to suck up all of the major memory manufacturers' DDR4 capacity for a while before prices go down.

balister (replying to 2Be_or_Not2Be):

Whether it helps or hinders will ultimately depend on the VM admin. What most VM admins don't realize is that HT can actually degrade performance in virtual environments unless the admin takes specific steps to use it properly (and most do not). A lot of companies will tell you to turn off HT to increase performance because they've dealt with many VM admins who don't set things up properly. Many admins over-allocate, which is part of the reason HT can degrade performance, but there are other settings in the hypervisor that must also be configured so the guest VMs get the resources they need.
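The over-allocation point can be made concrete with a quick ratio check. This is a hypothetical sketch, not a hypervisor feature: the `Host` class, `allocation_report` function, and VM sizes are made up for illustration, and the check only compares allocated vCPUs against physical cores and logical threads. It does not model the other hypervisor settings mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Host:
    sockets: int
    cores_per_socket: int
    threads_per_core: int = 2  # assumption: Hyper-Threading enabled

    @property
    def physical_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def logical_cpus(self) -> int:
        return self.physical_cores * self.threads_per_core


def allocation_report(host: Host, vcpus_per_vm: list[int]) -> dict:
    """Hypothetical helper: compare total allocated vCPUs with host capacity."""
    total = sum(vcpus_per_vm)
    return {
        "allocated_vcpus": total,
        "vcpu_per_physical_core": total / host.physical_cores,
        "vcpu_per_logical_cpu": total / host.logical_cpus,
        # If every vCPU is busy at once, some must share a physical core via HT.
        "relies_on_ht_siblings": total > host.physical_cores,
        # More vCPUs than logical threads: overcommitted even counting HT.
        "overcommitted": total > host.logical_cpus,
    }


# Hypothetical example: a dual E5-2699 v3 host with made-up VM sizes.
host = Host(sockets=2, cores_per_socket=18)
print(allocation_report(host, [8, 8, 16, 16, 4, 4]))
```

In this made-up example, 56 vCPUs fit within the host's 72 logical threads but exceed its 36 physical cores, so the VMs can only all run at full speed if HT sibling threads are doing real work.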

InvalidError (replying to dovah-chan):

This is easier said than done, since tons of everyday algorithms, such as text/code parsing, are fundamentally incompatible with threading. If you want to build a list or tree using threads, you usually need to split the operation so that each thread works on an isolated part of the list/tree. Otherwise, the threads trip over each other and waste most of their time waiting on mutexes. At the end of the build, you need a merge step to bring everything back together, and that step is usually not very thread-friendly if you want it to be efficient.

In many cases, trying to convert algorithms to threads is simply more trouble than it is worth.
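The split-then-merge pattern InvalidError describes can be sketched in Python: each worker builds a sorted list from its own isolated slice (no shared state, so no mutexes), and a single-threaded merge stitches the pieces together at the end. This is an illustrative sketch, not anyone's production code. Note that CPython's global interpreter lock prevents these threads from sorting in parallel, so `ProcessPoolExecutor` would be the swap for a real speedup. The structure is the same either way.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def build_sorted_chunk(chunk):
    """Each worker builds its own sorted list from an isolated slice: no shared state, no locks."""
    return sorted(chunk)


def parallel_build_then_merge(items, workers=4):
    # Split the input so each worker touches only its own part of the data.
    size = max(1, -(-len(items) // workers))  # ceiling division
    chunks = [items[i:i + size] for i in range(0, len(items), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_lists = list(pool.map(build_sorted_chunk, chunks))
    # The merge runs on a single thread: this is the serial step that limits scaling.
    return list(heapq.merge(*partial_lists))


print(parallel_build_then_merge([5, 3, 9, 1, 7, 2, 8], workers=3))  # [1, 2, 3, 5, 7, 8, 9]
```

Adding workers shrinks the per-chunk sorting time but not the merge, which still has to touch every element once.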

Rob Burns: Great to see these processors out, and overall a good article. I only wish you had used the same benchmark suite as for the Haswell-E processors: 3ds Max, Adobe Premiere, After Effects, Photoshop. I'd also love to see V-Ray added to the mix. There isn't much useful benchmark data in here for 3D professionals, though there's some good detail on the processors themselves.

Drejeck (replying to CaptainTom):

Simply never.

A game is made of sound, logic, and graphics. You may dedicate these three processes to a number of cores, but they remain three. As you split the load, some of the logic must keep track of who did what and where. Logic deals mainly with the FPU, while graphics deals with integers. GPUs are great integer number crunchers. They have to be fed by the CPU, so an extra core manages data across different memories, and this is where we start failing. Keeping everything in one spot, with the same resources, reduces the need to transfer data. A whole processor with a GPU, FPU, x86 cores, and a sound processor all in one package with on-board memory would make for the ultimate gaming processor. As long as we render scenes with triangles, we will keep using the legacy stuff. When the time comes to render scenes by pixel, we will need a fraction of today's performance, half of the texture memory (just scale the highest-quality textures), and half of the model memory. Epic is already working on that.


pjkenned (replying to Rob Burns):

Great points. One minor complication is that the NVIDIA GeForce Titan used in the Haswell-E review would not have fit in the 1U servers (let alone been cooled well by them). The onboard Matrox G200eW graphics are too much of a bottleneck for the standard test suite.

On the other hand, this platform is going to be used primarily in servers. Although some really nice workstation options are coming, we did not get access to them in time for testing.

One plus is that you can run the tests directly on your own machine by booting an Ubuntu 14.04 LTS live CD and issuing three commands, which should give you a rough idea of how your system's performance compares to the test systems. A video and the three simple commands are available here: http://linux-bench.com/howto.html

Hopefully we will get some workstation-appropriate platforms in the near future so we can run the standard set of TH tests. Thanks for your feedback; it is certainly on the radar.