Part 1: DirectX 11 Gaming And Multi-Core CPU Scaling

We test five theoretical Intel CPUs in 10 different DirectX 11-based games to determine what impact core count has on performance.

Interview with 4A Games & Metro: Last Light Redux

Introduced back in 2013, Metro: Last Light is an older title that we continue to use as a benchmark because of its ability to brutalize graphics hardware. 4A Games’ 4A engine is no longer shiny and new, but it is threaded. What does that mean? Dmitry Lymar, director at 4A Games, answered a few of our questions regarding Metro and its underlying engine.

Tom’s Hardware: We understand that one of the motivators for the 4A Engine was X-Ray’s (GSC Game World’s foundation for the S.T.A.L.K.E.R. series) lack of parallelization. Can you go into more depth about how 4A uses threading and what benefits that confers?

Dmitry: Right from the design stage of the 4A Engine we made correct decisions regarding parallelization, and I am still happy with the system. However, we've had to go through several iterations of adapting/optimizing the engine for target hardware.

Conceptually our model is really simple. We have a few control threads responsible mostly for spawning tasks (or work-items). Other threads are just workers; they "steal" those tasks from the queue and execute them out of order. Many of those tasks spawn other tasks if they need to. The control threads become workers as well when they do their "serial" processing and spawn a lot of tasks.

Usually there are almost no synchronization points, apart from the one at the start of a frame. That is why the scalability is really good.

There are some auxiliary threads as well, but they are mostly I/O threads with little to no compute on them.

As for variations we've used in the past:

Xbox 360 = Two control threads, four workers + auxes

PlayStation 3 = Two control, 12 workers (actually they were "fibers" or user-mode threads, and 12 of them were required to hide offloading latency to SPUs for our code) + SPUs + auxes

Xbox One/PlayStation 4 = Three control, three workers + one latency-tolerant worker + auxes

PC (for Redux games): One dedicated D3D API submission thread, two or three control (depends on hardware thread count), all other available cores/threads become workers + some aux + some GPU driver threads + a lot of random threads from other processes

PC is slightly more complicated because of oversubscription risks (which result in visible stutter for us), as we do not control what already is running on user's PC.
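To make the control/worker model Dmitry describes more concrete, here is a minimal Python sketch of a shared task queue in which workers pull tasks, tasks may spawn further tasks, and the only synchronization point is the frame boundary. It is an illustration under our own assumptions, not 4A Games' code: `run_frame`, `spawn`, `fan_out`, and `leaf` are hypothetical names, and the calling thread stands in for a control thread. CPython threads also do not run compute in parallel, so this shows the structure only, not the scaling.

```python
import queue
import threading


def run_frame(root_tasks, worker_count):
    """Run one frame's tasks to completion on a pool of worker threads.

    root_tasks is a list of (function, args) pairs seeded by the caller,
    which plays the role of a control thread in this sketch.
    """
    tasks = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def spawn(fn, *args):
        # Any task may call spawn() to enqueue more work.
        tasks.put((fn, args))

    def worker():
        while True:
            item = tasks.get()
            if item is None:  # sentinel: the frame is finished
                tasks.task_done()
                return
            fn, args = item
            try:
                out = fn(spawn, *args)
                if out is not None:
                    with results_lock:
                        results.append(out)
            finally:
                # Children are enqueued before this call, so the queue's
                # unfinished count never reaches zero too early.
                tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for t in threads:
        t.start()
    for fn, args in root_tasks:
        spawn(fn, *args)

    tasks.join()  # the single sync point: wait for the frame's work to drain
    for _ in threads:
        tasks.put(None)
    for t in threads:
        t.join()
    return results


def leaf(spawn, n):
    # A small unit of work that returns a result.
    return n * n


def fan_out(spawn, count):
    # A "serial" task whose main job is to spawn many smaller tasks.
    for i in range(count):
        spawn(leaf, i)


if __name__ == "__main__":
    # Completion order is not deterministic, so sort before printing.
    print(sorted(run_frame([(fan_out, (4,))], worker_count=3)))
```

Because workers take whatever is next in the queue, results arrive out of order, which is why the usage example sorts them.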

Tom’s Hardware: Is there an upper bound to the number of host processing cores the engine can schedule to?

Dmitry: No, there is no upper bound. However, there is a lower bound. We can run on a dual-thread CPU, but in this case there is already internal oversubscription, which causes lower framerate and stutter. Realistically, a quad-threaded CPU is the minimum for us, with eight-thread CPUs vastly preferred.
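The oversubscription Dmitry mentions can be illustrated with some simple thread budgeting. The sketch below loosely follows the PC layout he describes (one D3D submission thread, two or three control threads, everything else as workers). The function name `plan_threads` and the eight-thread cutoff for choosing three control threads are our assumptions; the interview only says the count depends on hardware thread count. Auxiliary, driver, and other processes' threads are ignored here.

```python
def plan_threads(hardware_threads):
    """Hypothetical thread budget for a given hardware thread count."""
    submission = 1
    # Assumption: the real rule for picking two versus three control
    # threads is not stated; eight hardware threads is our guess.
    control = 3 if hardware_threads >= 8 else 2
    # Whatever is left over becomes worker threads (never negative).
    workers = max(0, hardware_threads - submission - control)
    dedicated = submission + control + workers
    return {
        "submission": submission,
        "control": control,
        "workers": workers,
        # More engine threads than hardware threads means they must
        # time-share, which is the "internal oversubscription" case.
        "oversubscribed": dedicated > hardware_threads,
    }


if __name__ == "__main__":
    for n in (2, 4, 8, 20):
        print(n, plan_threads(n))
```

Under these assumptions, a dual-thread CPU already needs three engine threads before any worker exists, while a quad-thread CPU is the first configuration where every engine thread can have its own hardware thread.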

Tom’s Hardware: In the two images attached (we shared some of our graphs with Dmitry), it’s easy to see some scaling up to ~six cores at 1920x1080, though even on a GeForce GTX 1080, graphics becomes the bottleneck at 2560x1440 (this is one reason Tom’s benchmarks with Metro). Given the predominantly graphics-bound nature of many games, what makes a threaded engine so important?

Dmitry: Regarding the graphs. As we mostly optimize CPU performance on the current-gen consoles (as they are slower), for you to see our real engine scalability you will have to underclock CPUs to approximately consoles’ performance.

Obviously on such powerful CPUs we will be easily GPU-bound, especially as graphics gets special treatment on the PC from us (read: we try to load more powerful GPUs with better graphics), but that is not the case for consoles. The CPU can be quite a real bottleneck on XB1/PS4.

Tom’s Hardware: As an influencer, and understanding that companies like Intel and AMD work within power budgets, would you advise gamers to prioritize more cores (as in the Haswell-E/Broadwell-E platform) or higher clock rates/more IPC throughput (as in Skylake) if forced to choose?

Dmitry: For us, a quad-core/eight-thread i7 is probably the sweet spot on PC. Mostly because we can sometimes be bound by the D3D submission thread, so more threads barely help. As a side note - measuring only throughput/framerate is not the right thing to do for gaming. Framerate stability/smoothness is of equal priority. For example, a higher-clocked i5 can give a higher average framerate, but a lower-clocked i7 can deliver a more even framerate, depending on the machine config of course.
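Dmitry's point about average frame rate versus smoothness can be shown with a little arithmetic. The sketch below summarizes a list of per-frame times in milliseconds by average frame rate, a simple 99th-percentile frame time, and the standard deviation. The two traces are synthetic numbers we made up for illustration, not measurements from this article, and `summarize` is a hypothetical helper.

```python
import statistics


def summarize(frame_times_ms):
    """Return average FPS plus two simple smoothness indicators."""
    total_seconds = sum(frame_times_ms) / 1000.0
    ordered = sorted(frame_times_ms)
    # Simple percentile pick; real tools may interpolate differently.
    index = min(len(ordered) - 1, int(0.99 * len(ordered)))
    return {
        "avg_fps": len(frame_times_ms) / total_seconds,
        "p99_ms": ordered[index],
        "stdev_ms": statistics.pstdev(frame_times_ms),
    }


# Synthetic traces (assumed values): one is faster on average but has
# occasional long frames, the other is slower but perfectly even.
spiky = [10.0] * 95 + [40.0] * 5
steady = [12.0] * 100

if __name__ == "__main__":
    print("spiky ", summarize(spiky))
    print("steady", summarize(steady))
```

In this made-up case the spiky trace wins on average frame rate yet loses on 99th-percentile frame time and variance, which is the pattern an average-only chart would hide.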

Metro: Last Light Redux

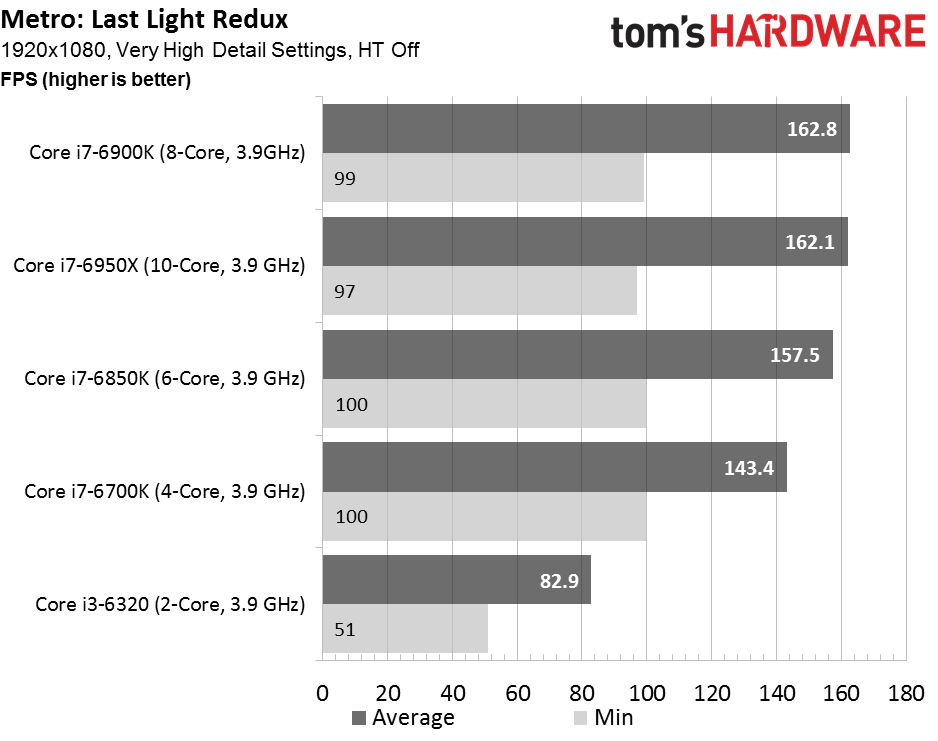

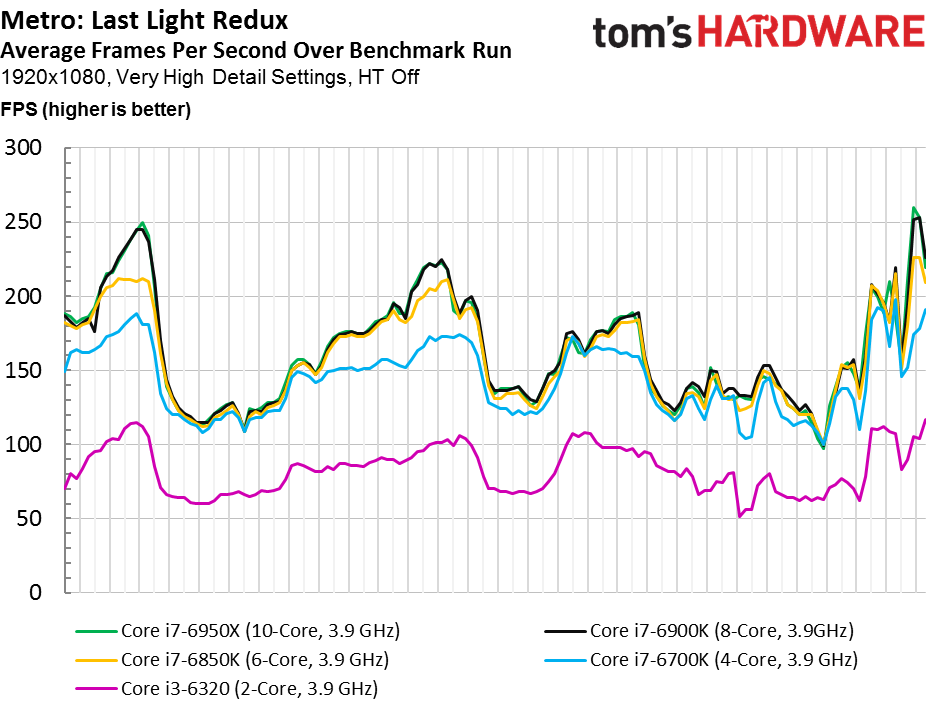

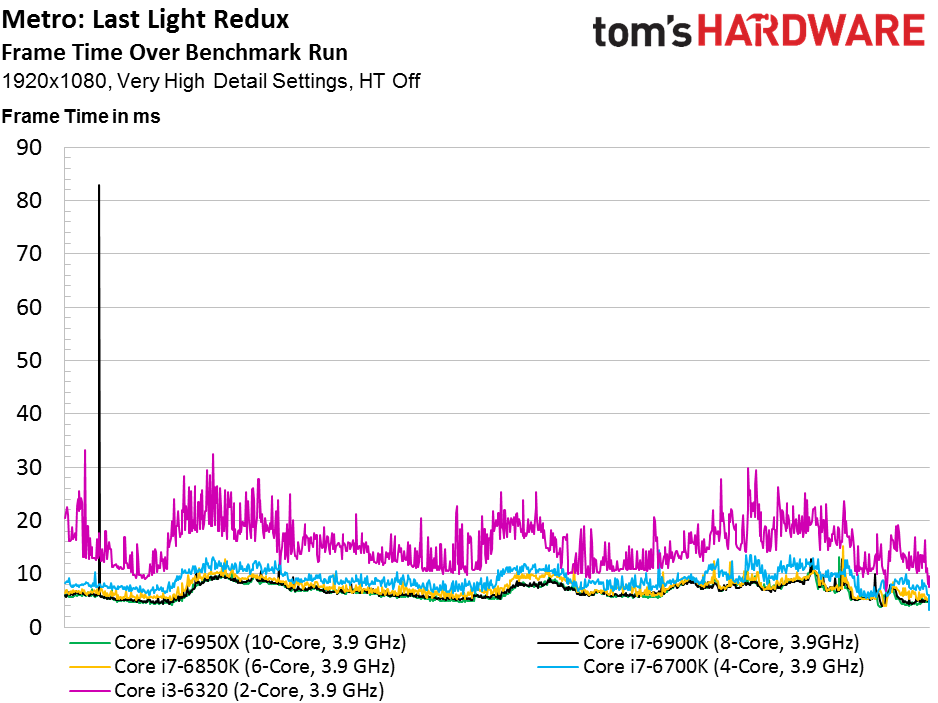

Although it doesn’t seem to matter whether you use an eight- or 10-core CPU, both do demonstrate an advantage over our six-core configuration at 1920x1080. In turn, that processor is notably faster than a four-core Skylake-based design.

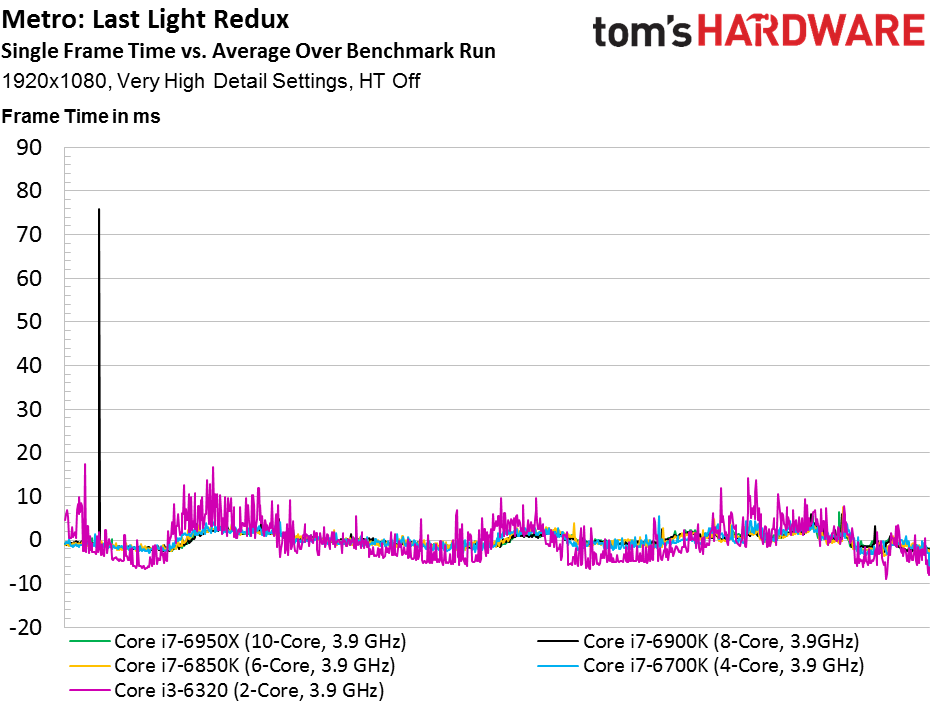

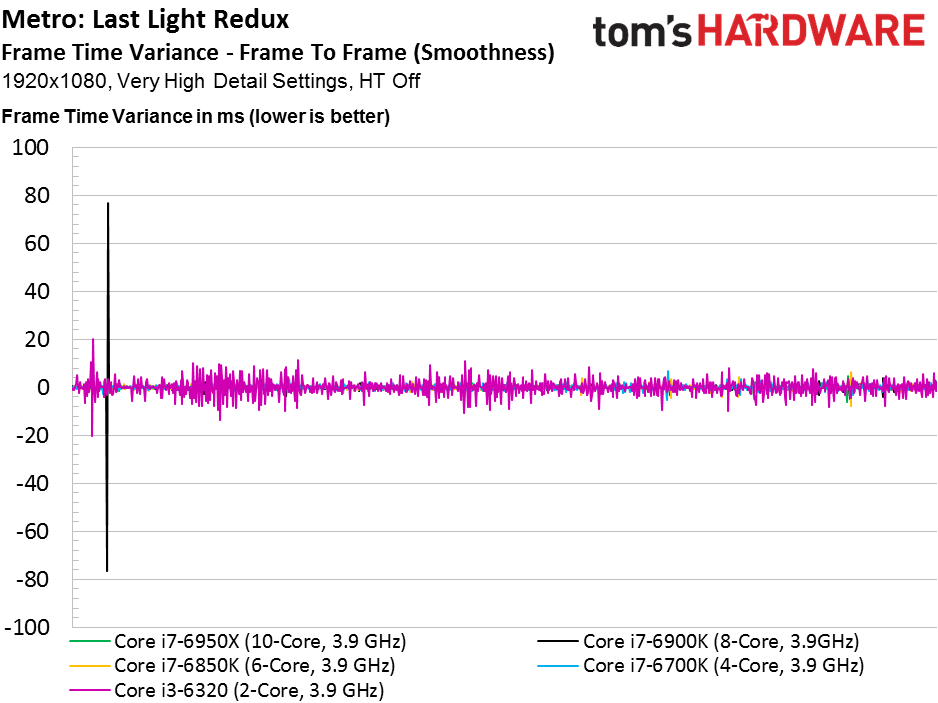

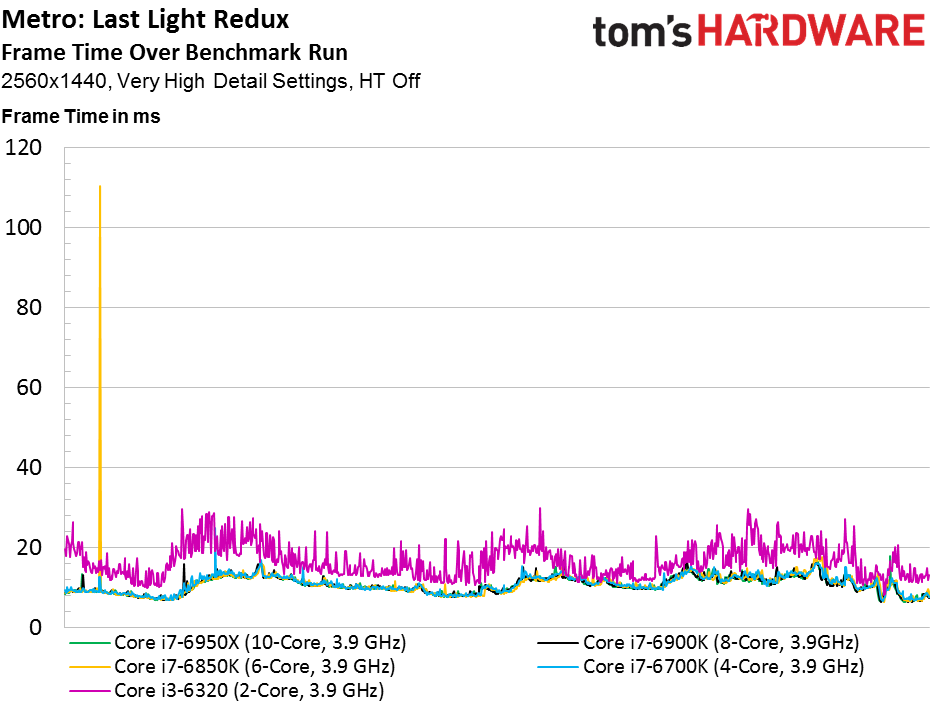

The dual-core CPU technically satisfies 4A Games’ minimum requirement, but it also severely handicaps our GeForce GTX 1080 (as evidenced by an average frame rate lower than the other four systems’ minimums). It’s not as though two cores incur huge frame time spikes in this metric, but true to Dmitry’s comments, frame rates are lower and you clearly see a less-smooth experience on-screen.

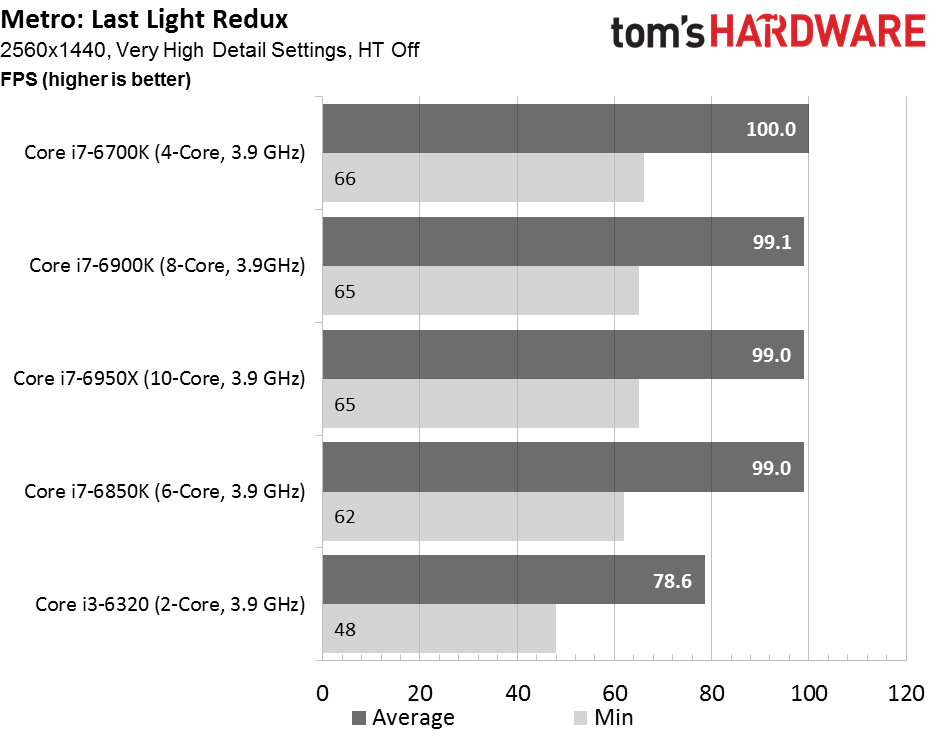

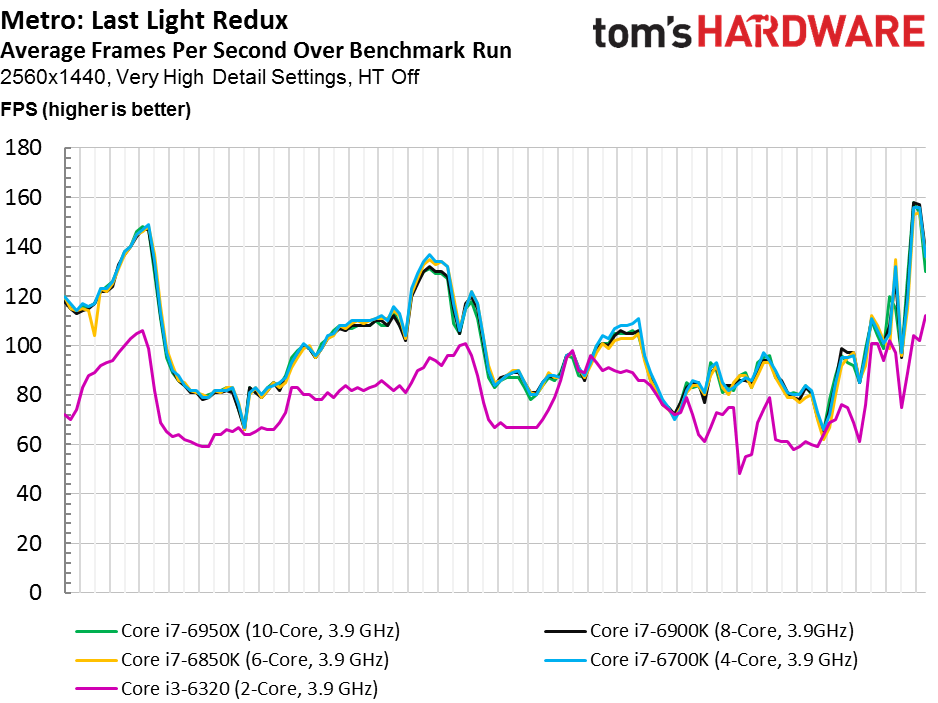

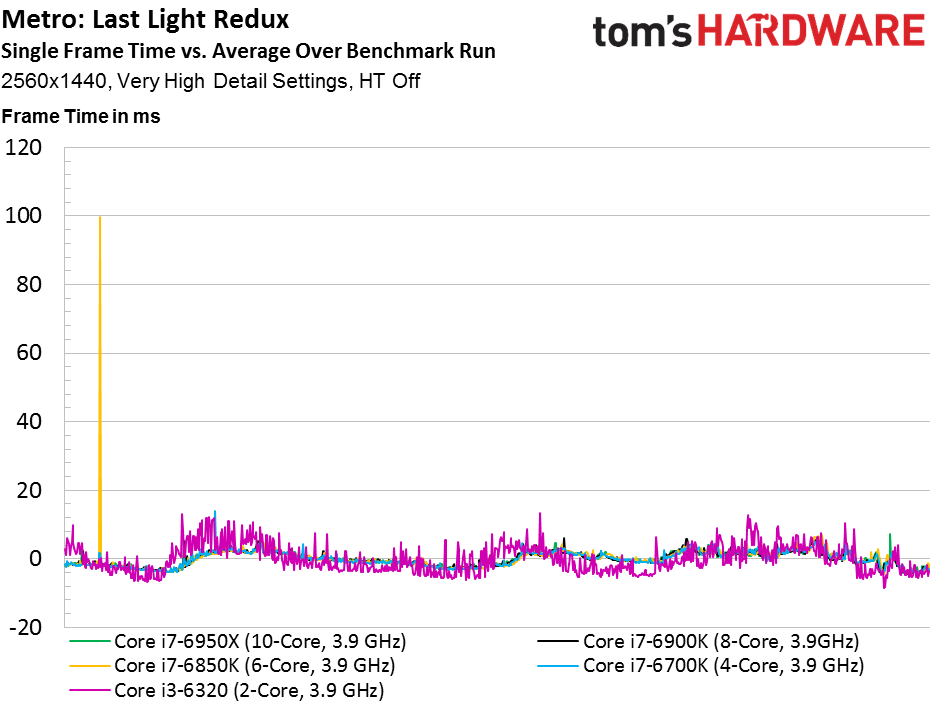

A bump up to 2560x1440 has such an impact on graphics performance that more than four cores no longer matter. In fact, even Broadwell-E’s big L3 caches can’t keep the Skylake architecture’s IPC advantage from shining alongside GeForce GTX 1080.

That’s not to say two Skylake cores can catch up. They can’t. At least not yet. But now we’re seeing 4A Games’ emphasis on higher-quality graphics shrink the gap between host processors.

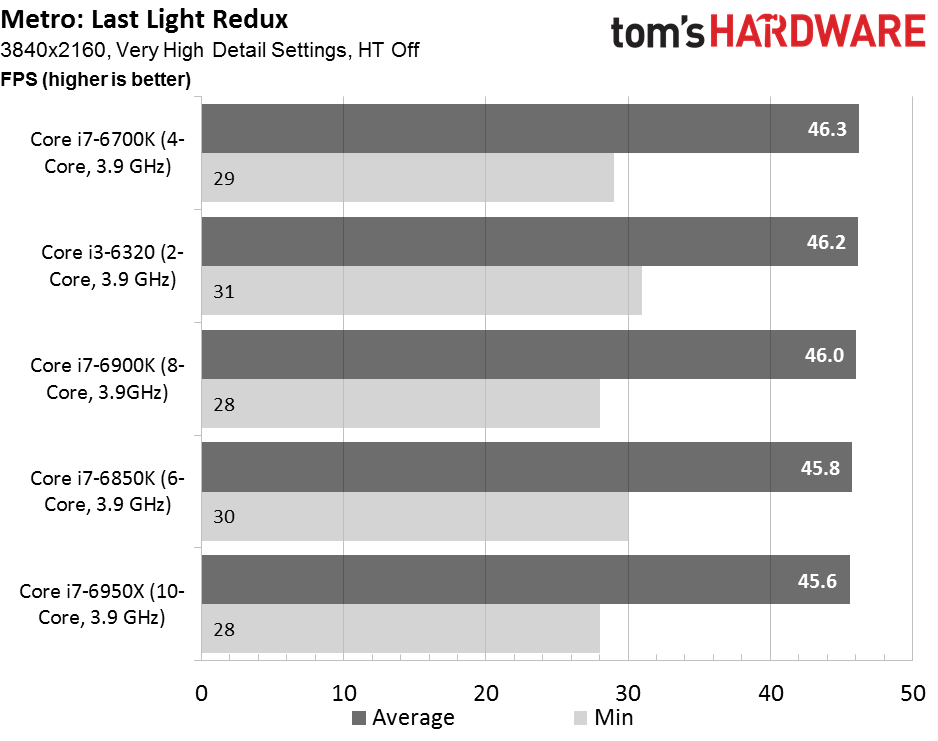

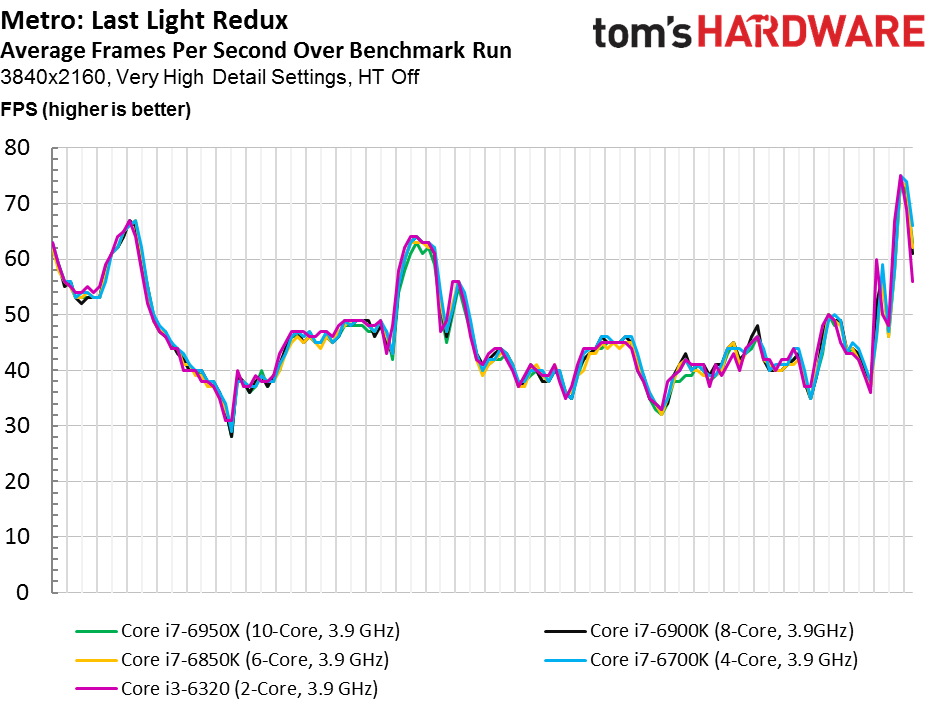

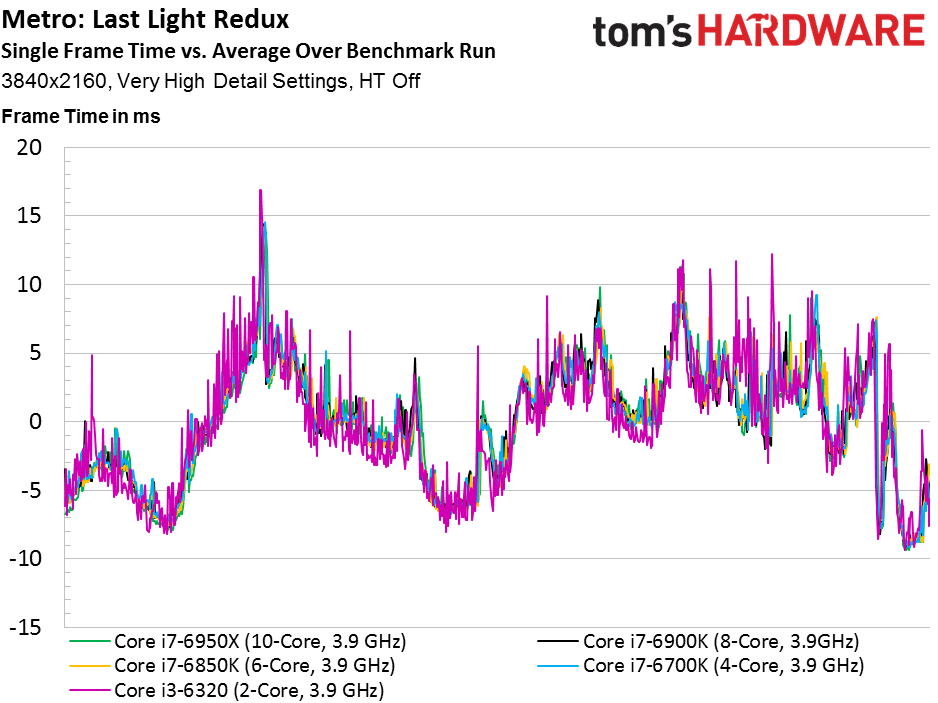

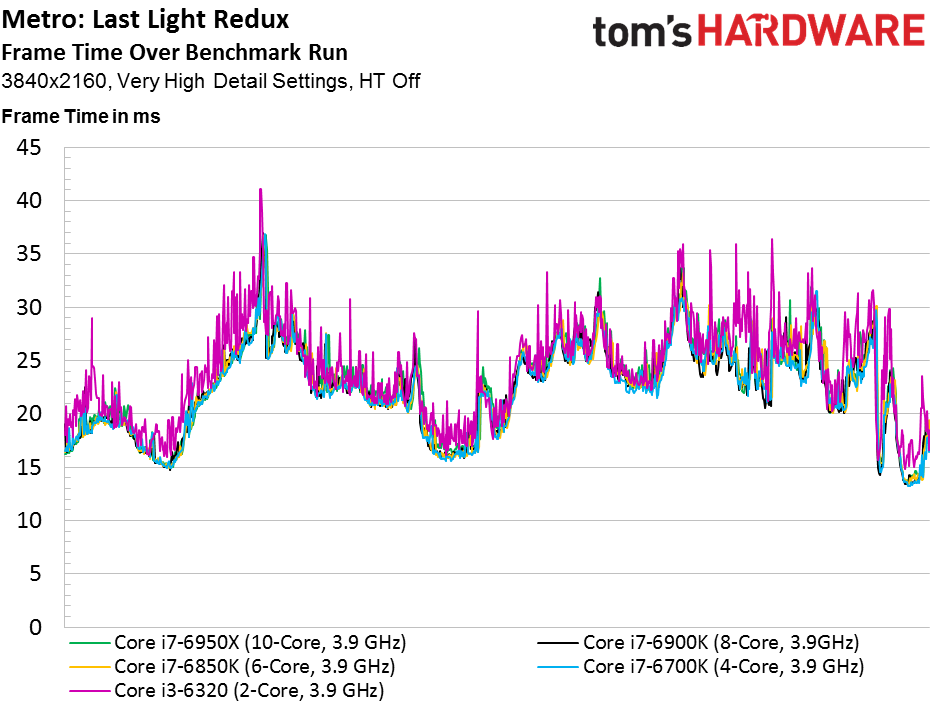

Incredibly, the graphics load at 3840x2160 is such that core count matters little. It’s the Skylake architecture’s ability to get more done per clock cycle that gives GeForce GTX 1080 a touch extra headroom. The result is that we see our four-core sample land in first place, followed by the two-core config. The Broadwell-E chips file in after.

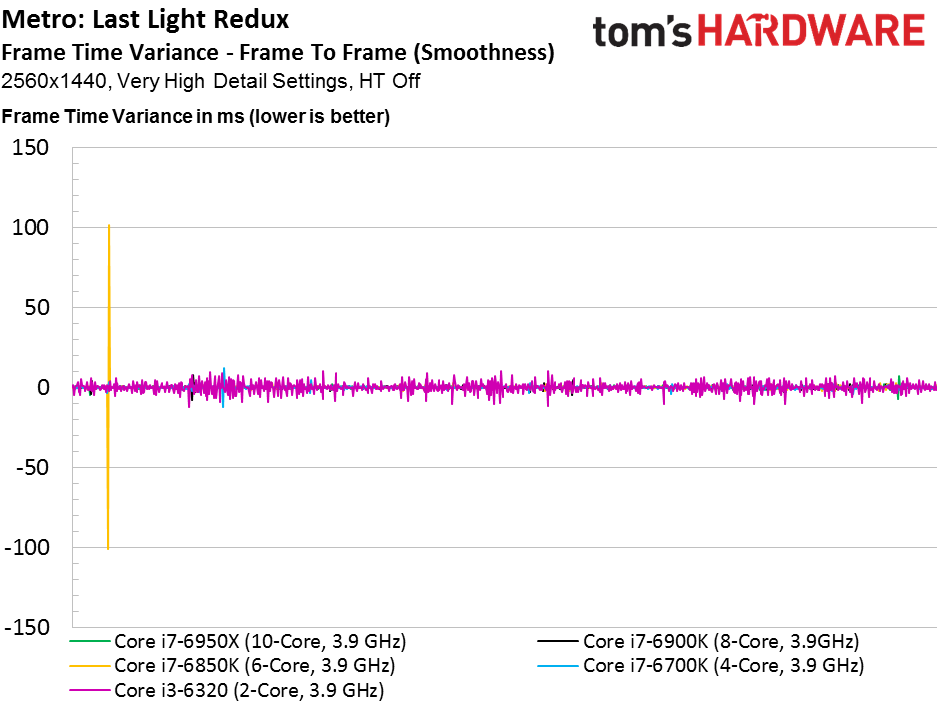

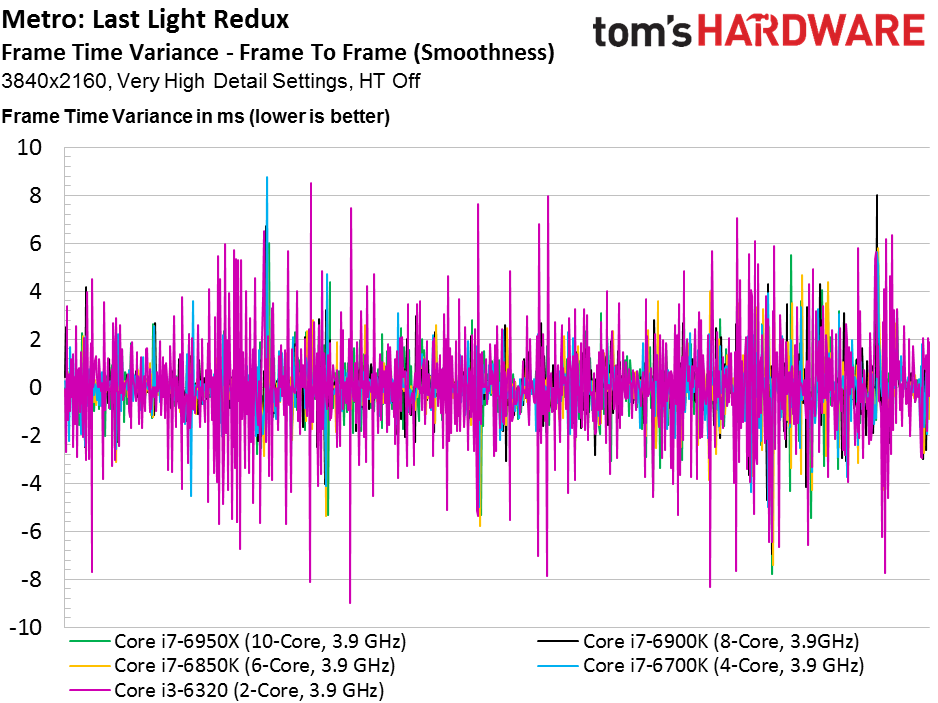

These platforms are essentially tied in the average frame rate and frame rate over time graphs. But the dual-core arrangement does exhibit greater frame time variance just as Dmitry promised, making it a great illustration of his point.

ledhead11 Awesome article! Looking forward to the rest.

Any chance you can do a run through with 1080SLI or even Titan SLi. There was another article recently on Titan SLI that mentioned 100% CPU bottleneck on the 6700k with 50% load on the Titans @ 4k/60hz.

Wouldn't it have been a more representative benchmark if you just used the same CPU and limited how many cores the games can use?

Traciatim Looks like even years later the prevailing wisdom of "Buy an overclockable i5 with the best video card you can afford" still holds true for pretty much any gaming scenario. I wonder how long it will be until that changes.

nopking Your GTA V is currently listing at $3,982.00, which is slightly more than I paid for it when it first came out (about 66x)

TechyInAZ 18759076 said: Looks like even years later the prevailing wisdom of "Buy an overclockable i5 with the best video card you can afford" still holds true for pretty much any gaming scenario. I wonder how long it will be until that changes.

Once DX12 goes mainstream, we'll probably see a balanced of "OCed Core i5 with most expensive GPU" For fps shooters. But for CPU the more CPU demanding games it will probably be "Core i7 with most expensive GPU you can afford" (or Zen CPU).

avatar_raq Great article, Chris. Looking forward for part 2 and I second ledhead11's wish to see a part 3 and 4 examining SLI configurations.

problematiq I would like to see an article comparing 1st 2nd and 3rd gen I series to the current generation as far as "Should you upgrade?". still cruising on my 3770k though.

Brian_R170 Isn't it possible use the i7-6950X for all of 2-, 4-, 6-, 8-, and 10-core tests by just disabling cores in the OS? That eliminates the other differences between the various CPUs and show only the benefit of more cores.

TechyInAZ 18759510 said: Isn't it possible use the i7-6950X for all of 2-, 4-, 6-, 8-, and 10-core tests by just disabling cores in the OS? That eliminates the other differences between the various CPUs and show only the benefit of more cores.

Possibly. But it would be a bit unrealistic because of all the extra cache the CPU would have on hand. No quad core has the amount of L2 and L3 cache that the 6950X has.

filippi I would like to see both i3 w/ HT off and i3 w/ HT on. That article would be the perfect spot to show that.