Network Interface Cards (NICs) 101

Today, we're going over the basics of Network Interface Cards (NICs).

Anatomy

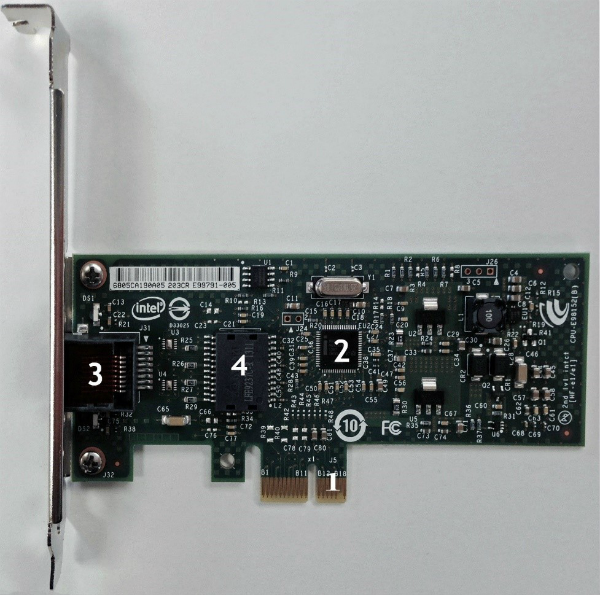

The main function of a network interface card is to encapsulate data and translate it into electrical signals that are sent over the Ethernet cable to their destination. The data it transmits originates on the motherboard, which transfers it to the card through an expansion slot. As discussed, the current standard is PCIe, and most NICs drop into x1 or x4 slots. PCI-based NICs are still sold for backward compatibility, and standard PCI slots can still be found on some modern motherboards, allowing legacy cards to work in newly upgraded computers.
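This rough back-of-the-envelope sketch shows why x1 and x4 slots are usually enough. It assumes the per-lane signalling rates and line codes of PCIe 1.x/2.0 (8b/10b) and 3.0 (128b/130b). It deliberately ignores packet (TLP) and flow-control overhead, so real cards need some headroom beyond these figures. The helper names are illustrative only.

```python
import math

# Per-lane signalling rate (GT/s) and line-code efficiency per PCIe generation.
PCIE_LANE = {
    "1.x": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}
COMMON_WIDTHS = (1, 2, 4, 8, 16)  # common slot widths, in lanes


def lane_gbps(gen):
    """Data rate of one lane in one direction, after line coding but before TLP overhead."""
    gts, efficiency = PCIE_LANE[gen]
    return gts * efficiency


def min_slot_width(link_gbps, gen):
    """Smallest common slot width whose raw lane bandwidth covers the network link rate."""
    lanes = math.ceil(link_gbps / lane_gbps(gen))
    return next(w for w in COMMON_WIDTHS if w >= lanes)
```

Under these assumptions, a single gigabit port fits comfortably in one lane of any generation. A 10 Gb port on a PCIe 2.0 card, however, already calls for an x4 slot.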

Once the data is on the card, it is directed to the controller chip, which is similar in concept to a low-end CPU. Outgoing data is sent as an Ethernet frame addressed to the destination's hardware (MAC) address; the controller appends a CRC checksum and puts the frame out on the line. When data is received from the Ethernet cable, the controller checks and strips that Ethernet framing before handing the data off to the computer. The higher layers (IP, TCP, and so on) are normally left to the operating system, apart from offloads such as checksum verification. The card's physical-layer circuitry generates and receives the electrical signals, and the RJ45 jack is where they are put onto and taken off the network cable.
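The framing described above can be sketched with Python's standard library. This is a simplified model, not how any particular controller is programmed. It builds an Ethernet II frame from a destination MAC, a source MAC, an EtherType, and a payload padded to the 46-byte minimum, then appends the CRC-32 frame check sequence (FCS). It then reverses the process on receipt. In real hardware, the preamble and start-of-frame delimiter are also added on the wire, and the header is usually written by the driver rather than the controller. The MAC addresses and function names here are hypothetical.

```python
import struct
import zlib

ETHERTYPE_IPV4 = 0x0800
MIN_PAYLOAD = 46  # pad shorter payloads so the frame (with FCS) is at least 64 bytes


def mac_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def fcs(body):
    """Ethernet FCS: IEEE CRC-32 of header + payload, sent least-significant byte first."""
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)


def build_frame(dst, src, ethertype, payload):
    """Wrap a payload in an Ethernet II header and append the FCS."""
    if len(payload) < MIN_PAYLOAD:
        payload = payload + bytes(MIN_PAYLOAD - len(payload))
    body = mac_bytes(dst) + mac_bytes(src) + struct.pack("!H", ethertype) + payload
    return body + fcs(body)


def strip_frame(frame):
    """Verify the FCS, then remove the Ethernet header and trailer.

    Returns (dst, src, ethertype, payload). Any padding stays in the payload;
    the upper layer (e.g. IP's total-length field) says how much is real data.
    """
    body, trailer = frame[:-4], frame[-4:]
    if fcs(body) != trailer:
        raise ValueError("bad FCS: frame would be dropped")
    (ethertype,) = struct.unpack("!H", body[12:14])
    return body[0:6], body[6:12], ethertype, body[14:]
```

A 5-byte payload comes out as a 64-byte frame. Flipping any bit in transit makes `strip_frame` reject it, which is the receive-side check the controller performs before anything reaches the operating system.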

The firmware is stored on a nonvolatile memory chip, typically an EEPROM (electrically erasable programmable read-only memory) or flash. It can be updated to add functionality and fix bugs.

freeskier93 I'm honestly not sure what the point of this article is. I mean, 99.99% of users are on less than a 100 meg connection, and they will never need to think about a separate NIC; it's really a niche need outside of business. If you guys want to start doing more network articles, you should start at the heart of the network: the router. A good NIC is going to be a waste of money if you're still rocking a "gigabit" consumer router.

Personally, we have a gigabit fiber connection and a Ubiquiti EdgeRouter. The Intel NIC on my Asus motherboard will pretty much hit gigabit speeds too. I've hit 950 Mbps up/down with Speed Test, and in the real world I've hit 800 Mbps with Steam. By comparison, our old consumer-grade router was choking our speeds to 400 Mbps.
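The 950 Mbps figure is about as close to "gigabit" as TCP can get. This rough sketch assumes a standard 1500-byte MTU and IPv4 and TCP headers without options. It also counts the 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap that every Ethernet frame costs on the wire. The function name is illustrative.

```python
ETH_WIRE_OVERHEAD = 7 + 1 + 12  # preamble + start-of-frame delimiter + inter-frame gap (bytes)
ETH_HEADER_FCS = 14 + 4         # Ethernet II header + frame check sequence (bytes)
IPV4_HEADER = 20                # IPv4 header without options
TCP_HEADER = 20                 # TCP header without options


def tcp_goodput_mbps(link_mbps, mtu=1500, tcp_options=0):
    """Best-case TCP payload rate on a saturated Ethernet link."""
    mss = mtu - IPV4_HEADER - TCP_HEADER - tcp_options  # payload bytes per segment
    wire_bytes = mtu + ETH_HEADER_FCS + ETH_WIRE_OVERHEAD  # bytes each frame occupies on the wire
    return link_mbps * mss / wire_bytes
```

This gives roughly 949 Mbps of payload on a saturated gigabit link. It drops to roughly 941 Mbps if TCP timestamps (12 bytes of options per segment) are in use. A result around 950 Mbps therefore suggests the path is essentially running at line rate.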

photonboy Is the USB2 an issue at 50Mbps or 50MBps?

You said "50Mb", which is just over 6 MB/s. That seems pretty low to me, since the USB2 controller can manage up to 60MBps, so I assumed you meant 50MBps.

mitch074 (replying to photonboy) USB2 has a 480 Megabit/second maximum theoretical throughput (60 Megabytes/second). Once protocol overhead is counted, the theoretical limit is around 50 Megabytes/second. In practice, it's closer to 40 Megabytes/s.
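The bits-versus-bytes confusion in this exchange comes down to a division by eight. The gap between 60 and roughly 50 Megabytes/second is protocol overhead. This small sketch covers both calculations. It assumes the commonly cited USB 2.0 high-speed bulk-transfer limit of 13 packets of 512 bytes per 125-microsecond microframe, and no other devices sharing the bus. The function names are illustrative.

```python
def bits_to_bytes(megabits_per_s):
    """Megabits per second to megabytes per second (8 bits per byte)."""
    return megabits_per_s / 8


# USB 2.0 high speed: 8,000 microframes per second, with at most
# 13 bulk packets of 512 bytes in each microframe.
MICROFRAMES_PER_S = 8000
MAX_BULK_PACKETS_PER_MICROFRAME = 13
BULK_PACKET_BYTES = 512


def usb2_bulk_limit_mb_per_s():
    """Theoretical bulk-transfer ceiling in megabytes per second."""
    return MICROFRAMES_PER_S * MAX_BULK_PACKETS_PER_MICROFRAME * BULK_PACKET_BYTES / 1e6
```

This gives 6.25 MB/s for 50 Mb/s and 60 MB/s for USB2's raw 480 Mb/s. The bulk-transfer ceiling comes out at about 53 MB/s, which sits above the 40 MB/s or so seen in practice once real devices and drivers are involved.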

Gigabit Ethernet at home is easily justified when you have a media server. Streaming HD content over 100 Mb Ethernet (RJ45) allows one stream at a time, and the rest of the network (web browsing, etc.) slows down a lot. Switching to Gigabit allows a couple of streams while normal traffic carries on as usual. Since a TV recorder is essentially a media server, it counts too.

ubercake I liked the article. It would have been interesting to see different common controllers on a chart comparing characteristics such as latency (e.g., PCI NIC vs. PCIe NIC vs. on-board NIC vs. wireless NIC), just to quantify exactly what differences we are dealing with.

g00ey Not a very informative article at all. There are plenty of things to mention. To give a few examples:

- One could discuss the different cable categories (Cat ratings) and how that spec affects the cabling and other hardware in detail.
- Shielded vs. unshielded gear.
- The features found on different NICs, and in more technical detail, what differentiates a server-grade NIC from a desktop-grade one.
- How different brands of NICs handle more complex issues such as QoS or TSO, and which controllers are better than others in different workloads.
- What differentiates their signal strengths, how good an impedance match one can get from the ports of different brands of hardware, and how well this is resolved.

How are packet collisions and losses handled? What latencies can we get? How well do these different NICs really offload the CPU?

There are many different features out there, such as iSCSI, PXE, VDEV, SerDes, IPMI, Cable Diagnostics, Crossover detection, pair swap/polarity/skew correction, NDIS5 Checksum Offload (IP, TCP, UDP) and large send offload support, IEEE 802.1Q VLAN tagging, Transmit/Receive FIFO (8K/64K) support, ... There are concepts such as HMAC+, RMCP, RAKP, SOL Payload, Kg, SIK, BMC, MSI Writes, SKP Ordered Set resets and Training Sequences (TS), RDTR and RADV timer mechanisms, ICR reads, Bit Banging, ...

There are many different features and concepts, and differences in how different manufacturers handle them. Things could be measured and analysed with spectrum analyzers and dedicated benchmark hardware, for both performance and CPU utilization. But none of it is discussed in this article, which is unfortunately quite sub-par.

wtfxxxgp, quoting: "And I'm here, with 12 megabits down, 1 megabit up. On ADSL."

+1

Sometimes I don't even get that.

Kewlx25 LSO/TSO/GSO are no longer needed for modern NICs.

A bigger issue is the quality of interrupt coalescing. A 100Mb network can receive up to roughly 148,800 packets per second, and cheap network cards issue an interrupt for every packet. Normal CPU time slices are on the order of milliseconds, and your CPU may be handling something like 10,000 context switches per second. When your cheap 100Mb NIC attempts to issue 148,800 interrupts per second, your CPU will crumble. 1Gb is 10x worse.

Most people doing file transfers will be sending large 1500-byte packets, while the maximum packets-per-second figure applies to 64-byte packets. Most home users don't even notice the difference, other than only getting 800Mb/s. That could very well just be their mechanical hard drive being the bottleneck, but if they see something like 20% CPU usage while copying, interrupts are the reason.
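The packets-per-second ceiling follows from the frame size plus 20 bytes of wire overhead: each Ethernet frame also carries a preamble, a start-of-frame delimiter, and an inter-frame gap. In this minimal sketch, frame sizes include the 14-byte header and 4-byte FCS. The minimum frame is therefore 64 bytes, and a full 1500-byte payload makes a 1518-byte frame. The function name is illustrative.

```python
WIRE_OVERHEAD_BYTES = 7 + 1 + 12  # preamble, start-of-frame delimiter, inter-frame gap


def max_frames_per_second(link_bps, frame_bytes):
    """Line-rate frame count; frame_bytes includes the Ethernet header and FCS."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)
```

For minimum-size frames, this yields about 148,809 frames per second at 100 Mb/s and about 1,488,095 at 1 Gb/s. Full-size frames at 1 Gb/s come to only about 81,274 per second. That is why a bulk file copy produces far fewer per-packet interrupts than the worst case.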

High-end NICs have large onboard buffers and will interrupt the CPU on the first packet, but delay the interrupts for subsequent packets. Don't worry about the delay being very long; it's still less than a millisecond. Coupled with that, modern NICs support what is called MSI-X, which has been part of the PCI specification since 3.0 and is supported by PCI Express. Don't worry, they back-ported this 3.0 feature to something like 2.1.
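The "interrupt on the first packet, delay the rest" behaviour can be modelled with a simple holdoff timer. This is a hypothetical simplification. Real NICs combine timers and packet-count thresholds whose values the driver sets; on Linux these are exposed through `ethtool -C` (e.g. `rx-usecs`). The function below only counts interrupts and does not drive any hardware.

```python
def count_interrupts(arrivals_us, holdoff_us):
    """Count interrupts for packet arrival times (in microseconds) under a holdoff timer.

    Hypothetical model: a packet on a quiet link interrupts immediately; packets
    that arrive within `holdoff_us` of the last interrupt are batched and
    reported by one deferred interrupt when the holdoff window ends.
    """
    interrupts = 0
    next_allowed = float("-inf")  # earliest time the next interrupt may fire
    pending = False               # packets waiting for a deferred interrupt
    for t in sorted(arrivals_us):
        if pending and t >= next_allowed:
            # The window expired before this packet arrived, so the deferred
            # interrupt for the batched packets fired at the window's end.
            interrupts += 1
            next_allowed += holdoff_us
            pending = False
        if t >= next_allowed:
            # Quiet link: interrupt immediately for this packet.
            interrupts += 1
            next_allowed = t + holdoff_us
        else:
            # Inside a holdoff window: batch it for the deferred interrupt.
            pending = True
    if pending:
        interrupts += 1  # flush the final batch
    return interrupts
```

Take packets arriving every microsecond. With a holdoff of 0, there is one interrupt per packet: 1,000 for 1,000 packets. A 100 microsecond holdoff cuts that to 11, at the cost of delaying some packets by up to about 100 microseconds.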

This sweet feature allows for "soft interrupts". Once the first interrupt happens and the driver starts processing the packets, the driver can disable the interrupt. This way, new packets that come in neither interrupt the CPU nor have to wait for the next interrupt. Instead, the NIC flags a location in memory when new packets come in. Once the driver is done processing the current batch of packets, it checks whether there were any "soft" interrupts during that time, and if there were, it processes those packets.

At some point the driver will catch up and process all of the packets. Once this happens, the driver will re-enable the interrupt and switch back to what it was doing before the original interrupt. This dramatically reduces CPU usage while still maintaining low latency and high throughput. The best of both worlds!
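The mask-poll-unmask cycle described here is essentially what the Linux kernel calls NAPI. There, the driver's poll routine runs in a softirq with a per-poll packet budget. The toy simulation below only illustrates that idea and is not driver code. `simulate` and its tick-based timing are hypothetical, and a real NIC would drop packets once its ring filled rather than queue them indefinitely.

```python
from collections import deque


def simulate(arrivals_per_tick, budget):
    """Toy NAPI-style receive path; returns (interrupts, packets_processed).

    arrivals_per_tick: number of packets the NIC receives in each time tick.
    budget: maximum packets the driver processes per poll (one poll per tick).
    """
    ring = deque()      # the NIC's receive ring
    irq_enabled = True  # is the receive interrupt unmasked?
    interrupts = 0
    processed = 0
    for count in arrivals_per_tick:
        for seq in range(count):
            ring.append(seq)
            if irq_enabled:
                # First packet while unmasked: raise one interrupt; the
                # handler masks further interrupts and switches to polling.
                interrupts += 1
                irq_enabled = False
        if not irq_enabled:
            # Polling mode: drain up to `budget` packets with no interrupts.
            for _ in range(min(budget, len(ring))):
                ring.popleft()
                processed += 1
            if not ring:
                # Caught up: unmask the interrupt and go back to waiting.
                irq_enabled = True
    return interrupts, processed
```

A steady trickle of 10 packets per tick still costs one interrupt per tick, because the driver catches up every time. A 200-packet burst, by contrast, is handled with a single interrupt spread over four polls of up to 64 packets each.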

Don't pay for a premium Killer NIC. You can get a high-end Intel i210 server NIC with all of these awesome features for $60, and it will work very well, with great drivers for all operating systems. If you need something cheaper, there are plenty of Intel NICs in the $15-$30 range that may not have all of these features but will still be great for any home user.

Someone Somewhere, quoting: "The major benefit to these cards is that they handle the buffer storage, encoding and decoding of data through the seven network layers."

Um. Network cards don't usually handle offload for anything above TCP (OSI layer 4)... and everything below that is either always done in hardware, or provides negligible overhead.

No one in their right mind is going to offload something like SSH to the NIC.
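The layer 3 and 4 work that NICs typically do offload is mostly checksumming, as in the "Checksum Offload (IP, TCP, UDP)" feature listed in an earlier comment. For reference, here is a minimal sketch of the RFC 1071 Internet checksum that such hardware computes. It is checked against a sample IPv4 header; the header bytes are just an example.

```python
def internet_checksum(data):
    """RFC 1071 ones'-complement checksum, as used by IPv4, TCP, and UDP."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add 16-bit big-endian words
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF
```

With the checksum field zeroed, the sample header `450000730000400040110000c0a80001c0a800c7` yields `0xB861`. Running the function over the header with that value filled in returns 0, which is how the receiving side verifies it.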

evenstephen85 I would have expected and/or liked to see some recommendations in this article. We come to Tom's to see results, and there were not even any charts or data to show how or why this would be important. How many times did the article say "we all know this"? It's true, we do know this, so give us the details we've come to expect from your articles, such as how much of a latency difference we can expect between two cards. Disappointing read.