Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two dozen of them (about $50,000 worth) and create a massive RAID 0 array? Come along as we play around in storage heaven.
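RAID 0 adds no redundancy, so the array's capacity is simply the sum of its members, and consecutive stripe units rotate round-robin across the drives. The sketch below shows both ideas for 24 drives of 800 GB each. The 128 KiB stripe size and the helper names `raid0_capacity` and `locate` are hypothetical, chosen only for illustration; real controllers let you pick the stripe size. It also shows why the same array can be described as "almost 20 TB" in decimal units or roughly 17.5 TiB in binary units.

```python
# Minimal RAID 0 sketch: capacity is the sum of the members, and stripe
# units are dealt out round-robin across the drives.

DRIVES = 24                  # two dozen SSD DC S3700s, per the article
DRIVE_BYTES = 800 * 10**9    # 800 GB model, decimal gigabytes
STRIPE_BYTES = 128 * 1024    # hypothetical stripe unit, not stated in the article


def raid0_capacity(drives: int, drive_bytes: int) -> int:
    """RAID 0 stores no parity or mirror, so every member byte is usable."""
    return drives * drive_bytes


def locate(logical_offset: int, drives: int, stripe_bytes: int) -> tuple[int, int]:
    """Map a logical byte offset to (member drive index, byte offset on that drive)."""
    stripe_index, within = divmod(logical_offset, stripe_bytes)
    drive = stripe_index % drives
    # Each full pass across all drives advances every member by one stripe unit.
    row = stripe_index // drives
    return drive, row * stripe_bytes + within


if __name__ == "__main__":
    total = raid0_capacity(DRIVES, DRIVE_BYTES)
    print(f"{total / 10**12:.1f} TB decimal, {total / 2**40:.2f} TiB binary")
    print(locate(0, DRIVES, STRIPE_BYTES))                      # (0, 0)
    print(locate(STRIPE_BYTES * 25 + 10, DRIVES, STRIPE_BYTES))  # (1, 131082)
```

Stripe unit 25 wraps past the 24th drive, so it lands on drive 1 in the second row, which is exactly the round-robin behavior that lets a large sequential transfer keep every member busy.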

The Platform: Built For Storage

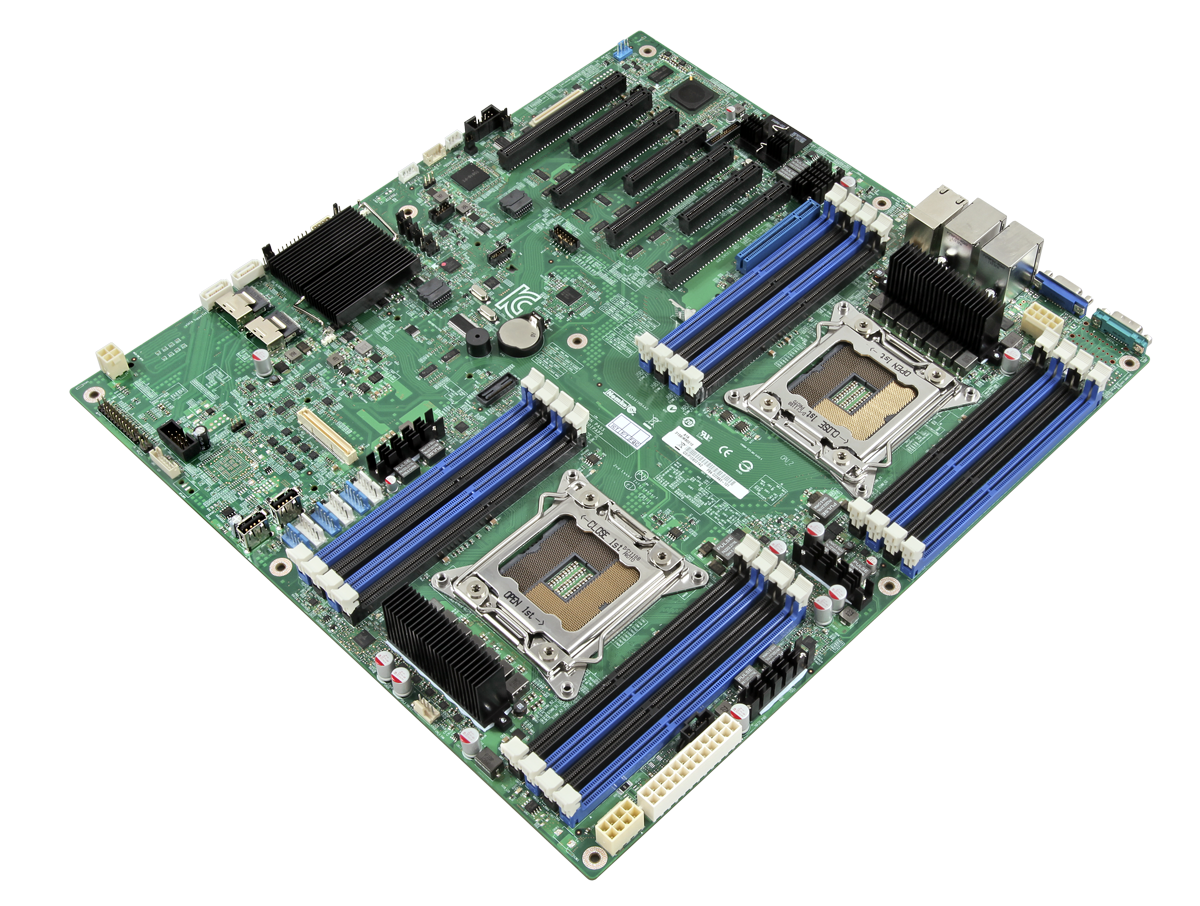

Our testing platform centers on Intel’s S2600IP motherboard. The IP represents the board’s pre-release code name, Iron Pass, while the 2600 refers to the Sandy Bridge-EP-based Xeon E5-2600 processors that drop into the platform's two LGA 2011 sockets. With 16 DDR3 DIMM slots and tons of PCI Express connectivity, there's plenty of room to scale this server up.

If you want, you can start with just one LGA 2011 socket populated. However, we want both bristling with eight-core chips. Our server sports twin Xeon E5-2665s running at 2.4 GHz, but able to hit 3.1 GHz in lightly threaded applications.

The S2600IP motherboard employs Intel's C602 platform controller hub, known during development as Patsburg. As mentioned, PCIe connectivity is excellent, as it should be, given two CPUs armed with 40 lanes each. The board exposes four x16 slots, three x8 slots, and a single x8 slot limited to second-generation x4 signaling. Intel also implements a proprietary eight-lane mezzanine slot that accepts some of the company's optional storage controller add-ons.
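To put "40 lanes each" in perspective, here is a back-of-the-envelope budget. It assumes every processor lane runs at PCIe 3.0 rates (8 GT/s with 128b/130b encoding), which is how Xeon E5-2600 lanes operate; the article itself only gives the lane count. The figure is raw link bandwidth after line encoding, so packet headers and flow control will shave off more in practice.

```python
# Rough PCIe budget for the dual-socket S2600IP (a sketch, not a spec sheet).
SOCKETS = 2
LANES_PER_CPU = 40            # per the article
GEN3_TRANSFERS_PER_SEC = 8e9  # assumption: all CPU lanes run at PCIe 3.0 (8 GT/s)
GEN3_ENCODING = 128 / 130     # PCIe 3.0 uses 128b/130b line encoding


def lane_bytes_per_sec() -> float:
    """One-direction bandwidth of a single PCIe 3.0 lane after line encoding.

    Protocol overhead (packet headers, flow control) lowers this further.
    """
    return GEN3_TRANSFERS_PER_SEC * GEN3_ENCODING / 8


total_lanes = SOCKETS * LANES_PER_CPU
per_lane = lane_bytes_per_sec()

print(total_lanes)                                # 80
print(f"{per_lane / 1e6:.0f} MB/s per lane")      # 985 MB/s per lane
print(f"{total_lanes * per_lane / 1e9:.1f} GB/s across all lanes, each direction")
```

Roughly 1 GB/s per lane is why an eight-lane storage adapter can comfortably feed several SATA 6 Gb/s SSDs at once.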

Otherwise, calling this board's storage merely "on-board" undersells it. The Iron Pass platform retains the two 6 Gb/s SATA ports you'd expect to find on Intel's 7-series desktop boards. The C602's integrated Storage Controller Unit then adds eight SAS ports, which can be driven by either Intel's RSTe software or LSI's MegaSR driver. Very handy, but we won’t be using that subsystem today; we're merely booting from one of the integrated SATA ports.

Of course, a server like this needs plenty of memory to keep each processor fed with data. Kingston helped with our experiment by sending 64 GB of its 1.35 V DDR3-1333 ECC-capable RAM. Each 8 GB KVR13LR9D4/8HC module is part of the company's Server Premier family. The line-up spans many memory types and is characterized by a locked bill of materials. That matters to builders because they won't have to re-qualify memory products built from different components down the road. It'd be a nice guarantee to have on the desktop side, too. Several times we've stumbled on a kit we really like for overclocking, only to find that a second kit with the same model name uses different ICs and behaves differently.
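Each Xeon E5-2600 has a quad-channel DDR3 controller, so the 64 GB kit works out neatly across both sockets. The sketch below assumes the eight modules were installed one per channel for balanced bandwidth; the article doesn't say how they were placed, and the "more of the same modules" figure is plain arithmetic rather than a validated configuration.

```python
# Sketch: how 64 GB of 8 GB modules maps onto the S2600IP's memory channels.
TOTAL_GB = 64                # per the article
MODULE_GB = 8                # KVR13LR9D4/8HC capacity, per the article
SLOTS = 16                   # DIMM slots on the board, per the article
SOCKETS = 2
CHANNELS_PER_SOCKET = 4      # Xeon E5-2600 integrates a quad-channel DDR3 controller

modules = TOTAL_GB // MODULE_GB                          # 8 modules
channels = SOCKETS * CHANNELS_PER_SOCKET                 # 8 channels in total
dimms_per_channel, leftover = divmod(modules, channels)  # assumes even population
slots_per_channel = SLOTS // channels                    # 2 slots behind each channel
free_slots = SLOTS - modules
max_gb_same_modules = SLOTS * MODULE_GB                  # arithmetic only

print(f"{modules} modules -> {dimms_per_channel} per channel, {leftover} left over")
print(f"{free_slots} slots free; {max_gb_same_modules} GB if every slot held an 8 GB module")
```

One module per channel leaves a second slot free behind every channel, which is the headroom the article alludes to when it says there's plenty of room to scale up.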

The chassis itself plays host to a few storage-specific features. Twenty-four 2.5” drive carriers and backplane slots grace the front of the 2U enclosure. These are tailor-made for the RES2CV360 expander, whose nine ports would let us connect all twenty-four drives to a single HBA or RAID adapter. Unfortunately, the LSI-controlled expander adds too much latency and simply chokes the life out of so many SSDs. Were you to swap in a full complement of 2.5” mechanical disks, this setup would be far more desirable. So, we’re bypassing the expander altogether, instead cabling the backplane directly to the storage controller. Ideally, we wouldn't have anything in between, but the backplane can't be avoided here. In fact, it turns out to be a lifesaver with so many drives.
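The port count explains why the expander is attractive on paper. The sketch assumes each of the nine ports is a four-lane SFF-8087 mini-SAS connector (the article gives only the port count) and that each drive uses one lane. It only counts connectors and shared host lanes; it does not model the extra latency the article blames for choking the SSDs.

```python
# Port arithmetic for the RES2CV360 expander (a sketch, not a topology guide).
EXPANDER_PORTS = 9    # per the article
LANES_PER_PORT = 4    # assumption: each port is a four-lane SFF-8087 connector
DRIVES = 24           # per the article; one SAS/SATA lane per drive


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floating point."""
    return -(-a // b)


total_lanes = EXPANDER_PORTS * LANES_PER_PORT    # lanes the expander exposes
drive_ports = ceil_div(DRIVES, LANES_PER_PORT)   # ports cabled to the backplane
uplink_ports = EXPANDER_PORTS - drive_ports      # ports left for HBA/RAID uplinks
# With a single four-lane uplink, each host lane is shared by this many drives:
drives_per_host_lane = DRIVES / LANES_PER_PORT

print(total_lanes, drive_ports, uplink_ports, drives_per_host_lane)  # 36 6 3 6.0
```

Fanning 24 drives into one adapter is convenient for mechanical disks, but it means several SSDs share every host lane behind an extra hop, whereas cabling the backplane straight to the controllers gives each drive its own path.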


sodaant: Those graphs should be labeled IOPS; there's no way you are getting a terabyte per second of throughput.
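The commenter's objection rests on a simple identity: throughput equals IOPS multiplied by the bytes moved per operation. The sketch below uses a hypothetical 4 KiB transfer size and round illustrative numbers, not results from this review, to show how far apart the two units are.

```python
# Throughput = IOPS x transfer size. Figures below are illustrative only.

def throughput_bytes_per_sec(iops: float, transfer_bytes: int) -> float:
    """Bytes per second delivered at a given IOPS rate and transfer size."""
    return iops * transfer_bytes


def iops_needed(target_bytes_per_sec: float, transfer_bytes: int) -> float:
    """IOPS required to reach a target throughput at a given transfer size."""
    return target_bytes_per_sec / transfer_bytes


FOUR_KIB = 4096  # hypothetical random-I/O transfer size

print(throughput_bytes_per_sec(1_000_000, FOUR_KIB) / 1e9)  # 4.096 (GB/s)
print(iops_needed(1e12, FOUR_KIB))                          # 244140625.0
```

A million 4 KiB operations per second is about 4 GB/s, while a terabyte per second at that size would take roughly 244 million IOPS, so a value in the hundreds of thousands only makes sense as an IOPS figure.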

cryan, quoting mayankleoboy1: "IIRC, Intel has enabled TRIM for RAID 0 setups. Doesn't that work here too?"

Intel has implemented TRIM in RAID, but you need TRIM-enabled SSDs attached to its 7-series motherboards. Then, you have to be using Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black-market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan

cryan, quoting DarkSable: "Idbuaha. I want."

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them, since 24 800 GB S3700s are basically the entire GDP of Canada.

techcurious: "I like the 3D graphs."

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan


utroz: That sucks about your backplanes holding you back. And yes, trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you made special holding racks for the drives and had multiple power supply units to get enough SATA power connectors (unless you used the dreaded Y-connectors that are known to be iffy and aren't commercial grade). I still would have been interested in seeing someone crazy enough to try it, just for testing purposes, to see how much the backplanes are holding performance back... But thanks for all the hard work; this type of benchmarking is by no means easy. I remember building my first RAID with an Iwill two-port ATA-66 RAID controller and four 30 GB 7,200 RPM drives, and it hit the limits of PCI at 133 MB/s. I tried RAID 0, 1, and 0+1. You had to have exactly the same drives or it would be slower than single drives. The thing took forever to build the arrays, and if you shut off the computer wrong, it would cause huge issues in RAID 0... Fun times...