Grok

Latest about Grok

Elon Musk says Grok 3.5 will provide answers that aren't from internet sources

By Ash Hill published

The Grok 3.5 beta is set to release next week for SuperGrok subscribers, with claims that it's a reasoning model that can provide unique answers that aren't on the internet.

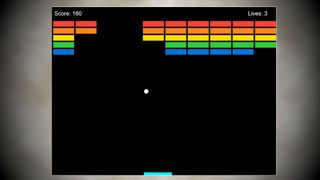

Grok 3 used to clone Breakout game

By Mark Tyson published

Windows development legend Dave Plummer has shared the prompt and code for a Breakout clone he created in Grok 3.

Musk announces xAI gaming studio to develop 'AI games' with photo-realistic graphics

By Sayem Ahmed published

Following the release of Grok 3, Musk's xAI is branching out into game development, with lofty ambitions.

Elon Musk's Grok 3 is now available, beats ChatGPT in some benchmarks

By Jowi Morales published

Elon Musk just launched Grok 3, which he claims is the most powerful model available right now.

Musk claims Grok 3 is 'outperforming' rivals, full release imminent

By Jowi Morales published

Elon Musk said at the World Government Summit in Dubai that xAI's Grok 3 will arrive in two to three weeks and will be more powerful than any other LLM currently out there.

Elon Musk confirms that Grok 3 is coming soon

By Anton Shilov published

Grok 3 took 10 times more compute power to train than Grok 2.

Elon Musk's xAI raises $6 billion to build more powerful AI supercomputers

By Anton Shilov published

xAI, which is set to build a supercomputer with 200,000 Nvidia GPUs, raises $6 billion.

Elon Musk plans to scale the xAI supercomputer to a million GPUs — currently at over 100,000 H100 GPUs and counting

By Anton Shilov published

xAI to scale the Colossus supercomputer to one million processors, which could create the most powerful machine in the world.
