Adaptec MaxIQ: Flash SSDs Boost RAID Performance

Adaptec Storage Manager And MaxIQ

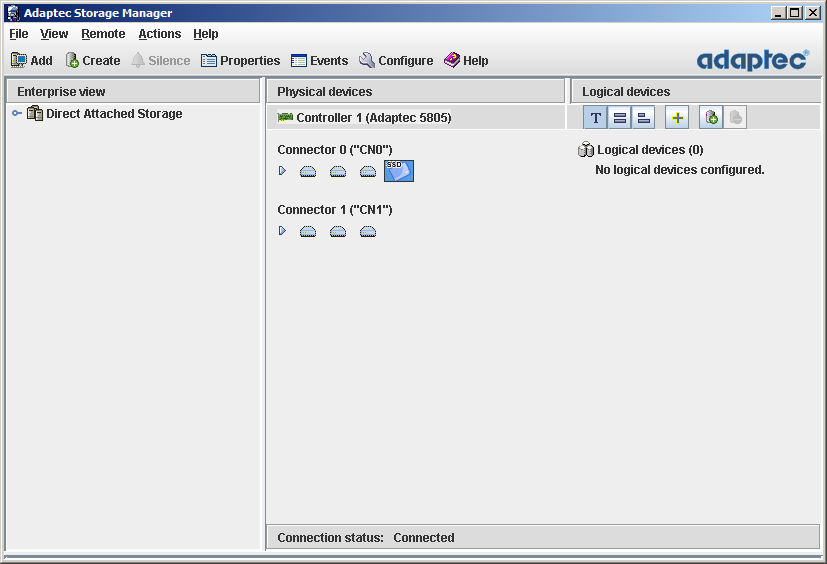

Let’s look at the configuration process of a storage system equipped with Adaptec's MaxIQ. We grabbed an eight-port Adaptec RAID 5805 card (firmware 5.2.0.17544) and created a RAID 0 array using three Fujitsu MBA3147 15,000 RPM SAS hard drives. This represents an entry-level, high-performance, hard drive-based RAID solution. Although we used RAID 0, its read performance is nearly comparable to that of a four-drive RAID 5 configuration.
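One rough way to see why a three-drive RAID 0 array reads about like a four-drive RAID 5 array is to count how many data chunks each layout holds per full stripe. The sketch below is a simplified model, not a benchmark: the function name `data_chunks_per_stripe` is hypothetical, and it ignores controller overhead, caching, and the way RAID 5 rotates parity across drives.

```python
def data_chunks_per_stripe(level: str, drives: int) -> int:
    """Idealized count of data chunks in one full stripe.

    Assumption: RAID 0 stores data on every drive, while RAID 5 gives up
    one chunk per stripe to parity. Real controllers behave less neatly.
    """
    if drives < 1:
        raise ValueError("an array needs at least one drive")
    if level == "raid0":
        return drives
    if level == "raid5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return drives - 1
    raise ValueError(f"unsupported RAID level: {level}")


# The two configurations compared in the text.
raid0_three = data_chunks_per_stripe("raid0", 3)
raid5_four = data_chunks_per_stripe("raid5", 4)
print(raid0_three, raid5_four)
```

Under this model both arrays hold the same number of data chunks per stripe, which is consistent with calling them "nearly comparable" for reads rather than identical.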

Once the updated firmware is installed, you’ll also need an updated version of Adaptec Storage Manager, the management suite for Adaptec storage products. We found a copy on the MaxIQ CD, but it’s always best to get the latest version from the vendor.

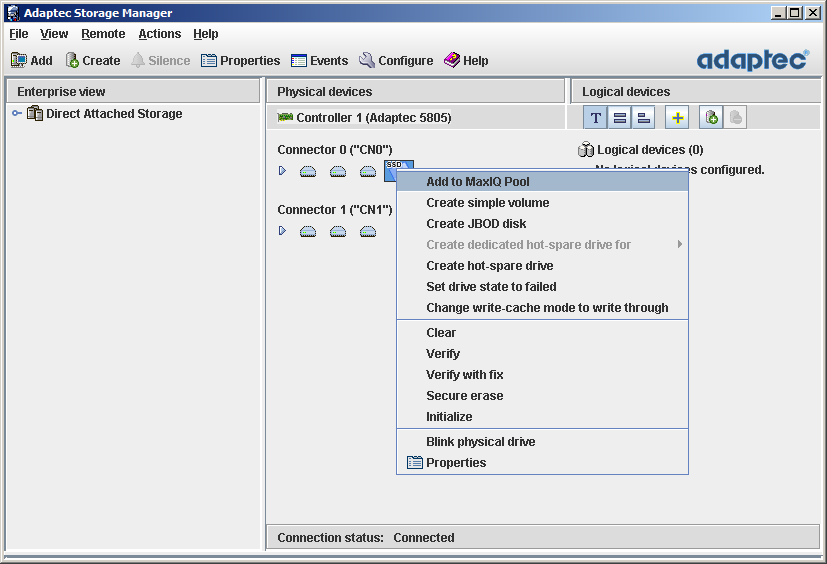

The latest version of ASM will distinguish hard drives from SSDs, which is necessary if you want to convert the X25-E SSD into a caching device. Simply right-click on the SSD and add it to the MaxIQ pool. You can run two or more SSDs if you want caching capacities larger than this SSD’s 32GB, but you’ll have to purchase more drives. Adaptec aims to offer support for other SSDs, but today there remains no alternative to Intel's X25-E.

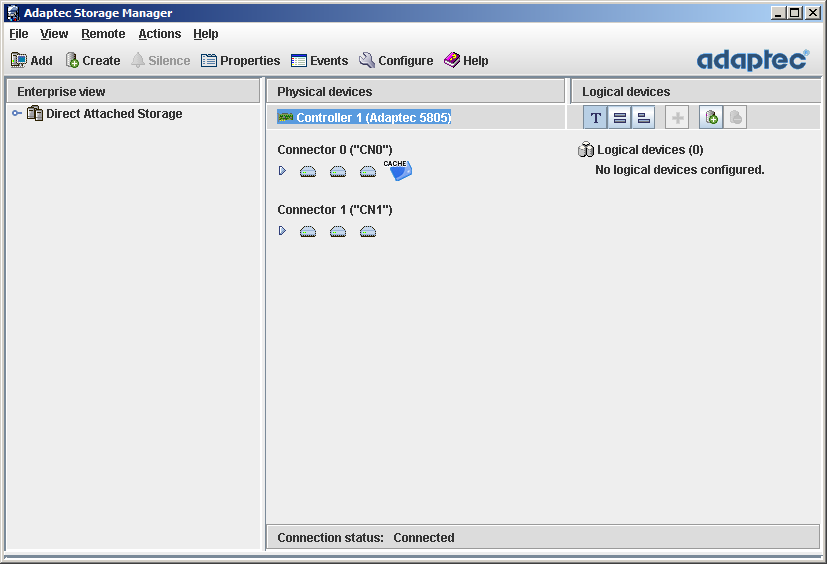

An SSD used as a MaxIQ caching device is marked as such.

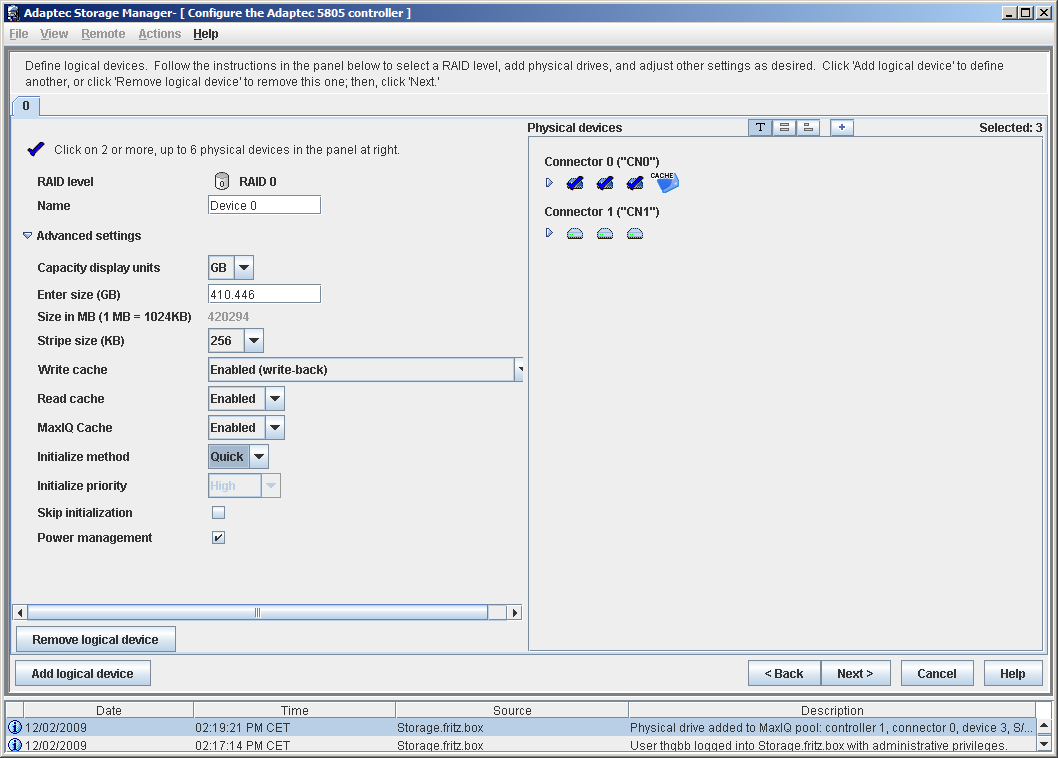

All that remains is to enable MaxIQ caching on the overview page of the desired RAID array. You can activate the cache feature for multiple arrays should you have a more complex storage arrangement. That’s about it. MaxIQ commences operation automatically and is fully transparent.
