Nvidia GeForce GTX 1080 Pascal Review

Meet GP104

It's almost time for Computex (or launch season, as we've come to know it), and Nvidia is first out of the gate with an implementation of its Pascal architecture for gamers. The GP104 processor powers the company's GeForce GTX 1080 and 1070 cards. Today, we're reviewing the former, but the latter is expected in early June, and it'll undoubtedly be followed by a complete portfolio of Pascal-based derivatives through the end of 2016.

Sure, Pascal promises to be faster and more efficient, with a greater number of resources in its graphics pipeline, packed more densely onto a smaller die and with faster memory on a reworked controller. Sure, it promises to be better for VR, for 4K gaming and more. But as with any launch accompanied by a fresh architecture, there's a lot more going on under the hood.

As always, we're here to explore those promises and put them to the test. So let's get started.

Can GeForce GTX 1080 Change The High-End Game?

Nvidia's GeForce GTX 1080 is the faster of the two gaming graphics cards announced earlier this month. Both employ the company's GP104 processor, which, incidentally, is already its second GPU built on the Pascal architecture (the first being GP100, which surfaced at GTC in April). Nvidia CEO Jen-Hsun Huang titillated enthusiasts during his public unveiling when he claimed that GeForce GTX 1080 would outperform two 980s in SLI.

He also mentioned that GTX 1080 improves on the 900 series' ability to get more work done while using less power, doubling the performance and tripling the efficiency of the once-flagship GeForce GTX Titan X. Read the accompanying chart carefully, though, and you'll see that figure is specific to certain VR workloads. But if it comes close to being true, we're at a very exciting point in high-end PC gaming.

VR is only just getting off the ground, though steep graphics processing requirements create a substantial barrier to entry. Moreover, most of the games currently available aren't written to take advantage of multi-GPU rendering yet. That means you're generally limited to the fastest single-GPU card you can find. A GTX 1080 able to outmaneuver two 980s should have no trouble in any of today's VR titles, kicking the need for multi-GPU down the road a ways.

The 4K ecosystem is evolving as well. Display interfaces with more available bandwidth, like HDMI 2.0b and DisplayPort 1.3/1.4, are expected to enable 4K monitors with 120Hz panels and dynamic refresh rates by the end of this year. Although the previous generation of top-end GPUs from AMD and Nvidia was positioned as 4K stunners, those cards necessitated quality compromises in order to maintain playable performance. Nvidia’s GeForce GTX 1080 could be the first card to facilitate fast-enough frame rates at 3840x2160 with detail settings cranked all the way up.
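As a rough illustration of why link bandwidth gates 4K at 120Hz, the sketch below estimates the uncompressed pixel payload of a 3840x2160 panel. It assumes 8-bit RGB (24 bits per pixel), ignores blanking intervals, and compares against the effective four-lane DisplayPort 1.2 (17.28 Gb/s) and 1.3 (25.92 Gb/s) data rates; treat the results as a lower bound rather than a real link-budget calculation.

```python
# Uncompressed pixel payload for a 4K panel. Assumes 24 bits per pixel
# (8-bit RGB) and ignores blanking intervals, so real link requirements
# are somewhat higher than these figures.
def pixel_payload_gbps(width: int, height: int, refresh_hz: int,
                       bits_per_pixel: int = 24) -> float:
    """Return the raw pixel data rate in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Effective four-lane DisplayPort data rates after 8b/10b encoding, in Gb/s.
DP_EFFECTIVE_GBPS = {
    "DisplayPort 1.2 (HBR2)": 17.28,
    "DisplayPort 1.3 (HBR3)": 25.92,
}

for hz in (60, 120):
    need = pixel_payload_gbps(3840, 2160, hz)
    # Keep only the interfaces whose effective rate covers the payload.
    fits = [name for name, cap in DP_EFFECTIVE_GBPS.items() if need <= cap]
    print(f"3840x2160 @ {hz}Hz: ~{need:.1f} Gb/s, fits {', '.join(fits)}")
```

At 120Hz the payload lands near 23.9 Gb/s, beyond DisplayPort 1.2 but within DisplayPort 1.3.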


How about multi-monitor configurations? Many gamers settle for three 1920x1080 screens, if only to avoid the crushing impact of trying to shade more than half a billion pixels per second at 7680x1440. And yet, there are enthusiasts eager to skip over QHD monitors altogether and set up three 4K displays in an 11,520x2160 array.
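The "half a billion pixels per second" figure is easy to sanity-check. This back-of-the-envelope sketch assumes a 60Hz refresh rate and counts each pixel once per frame; real games shade many pixels more than once (overdraw), so actual work is higher.

```python
# Back-of-the-envelope pixel throughput for the multi-monitor setups above.
# Assumption: each pixel is drawn once per frame at 60Hz (no overdraw).
def pixels_per_second(width: int, height: int, refresh_hz: int = 60) -> int:
    """Return the number of pixels drawn per second at a given resolution."""
    return width * height * refresh_hz


SURROUND_SETUPS = {
    "3x 1920x1080 (5760x1080)": (5760, 1080),
    "3x 2560x1440 (7680x1440)": (7680, 1440),
    "3x 3840x2160 (11,520x2160)": (11520, 2160),
}

for name, (w, h) in SURROUND_SETUPS.items():
    print(f"{name}: {pixels_per_second(w, h) / 1e6:,.1f} million pixels/s")
```

At 7680x1440 this works out to roughly 664 million pixels per second, while triple 4K pushes the count to nearly 1.5 billion.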

Alright, so that might be a little exotic for even the latest gaming flagship. But Nvidia does have technology baked into its GP104 processor that promises to improve your experience in the workloads most in need of this card: 4K and Surround. Before we break into those extras, though, let’s take a closer look at GP104 and its underlying Pascal architecture.

What Goes Into A GP104?

AMD and Nvidia have relied on 28nm process technology since early 2012. At first, both companies capitalized on the node and took big leaps with the Radeon HD 7970 and GeForce GTX 680. Over the subsequent four years, though, they had to get creative in their quests to deliver higher performance. What the Radeon R9 Fury X and GeForce GTX 980 Ti achieve is nothing short of amazing given their complexity. GK104—Nvidia’s first on 28nm—was a 3.5-billion-transistor chip. GM200, which is the heart of GeForce GTX 980 Ti and Titan X, comprises eight billion transistors.

The switch to TSMC’s 16nm FinFET Plus technology means Nvidia’s engineers can stop holding their breath. According to the foundry, 16FF+ is up to 65% faster, twice as dense, or 70% lower-power than 28HPM, and Nvidia is undoubtedly leveraging some optimized combination of those attributes to build its GPUs. TSMC also says 16FF+ reuses the metal back-end of its existing 20nm process, pairing it with FinFETs rather than planar transistors. Though the company says this helps improve yield and process maturity, it’s telling that there was no high-performance 20nm process (and again, the graphics world is more than four years into 28nm).

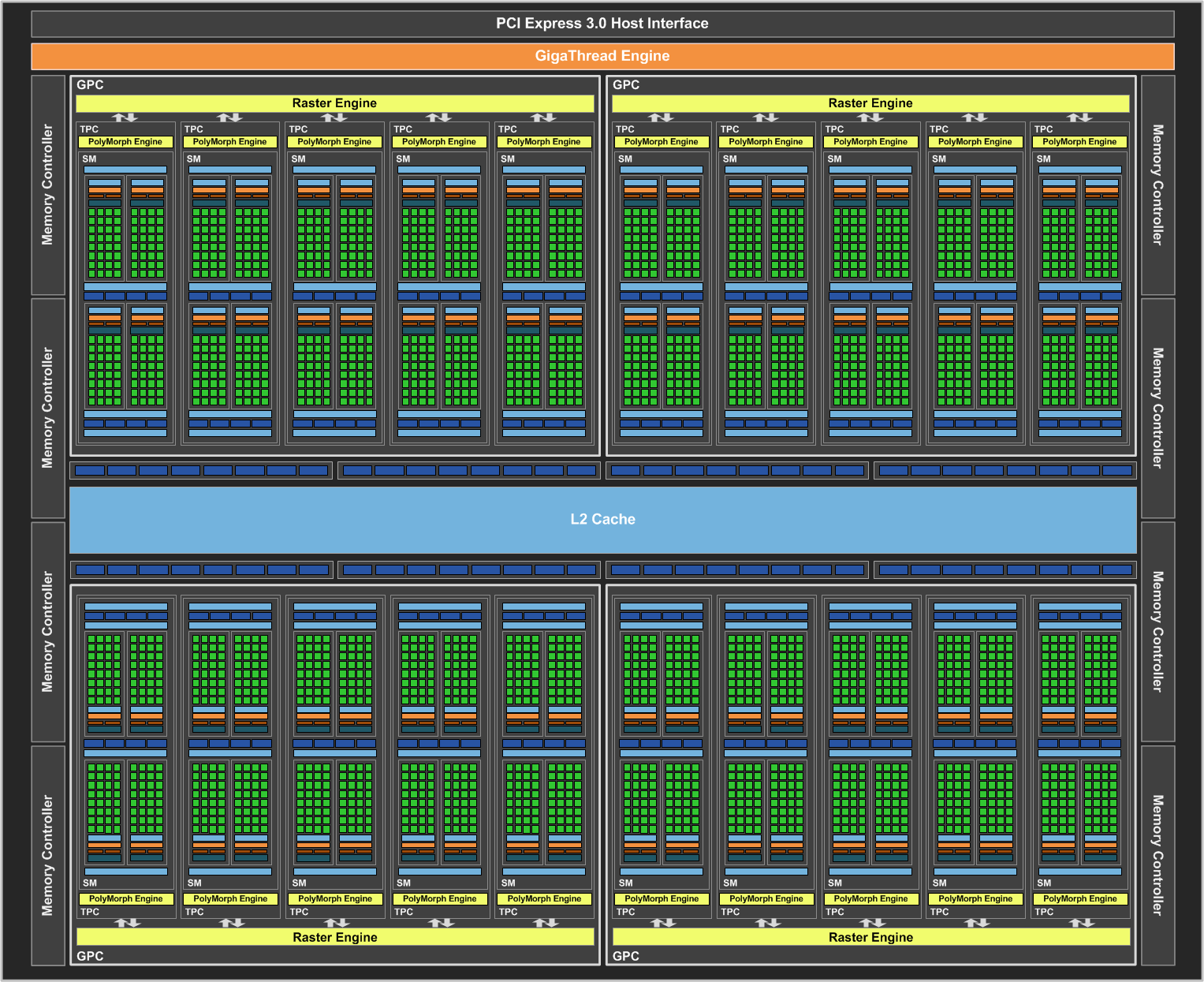

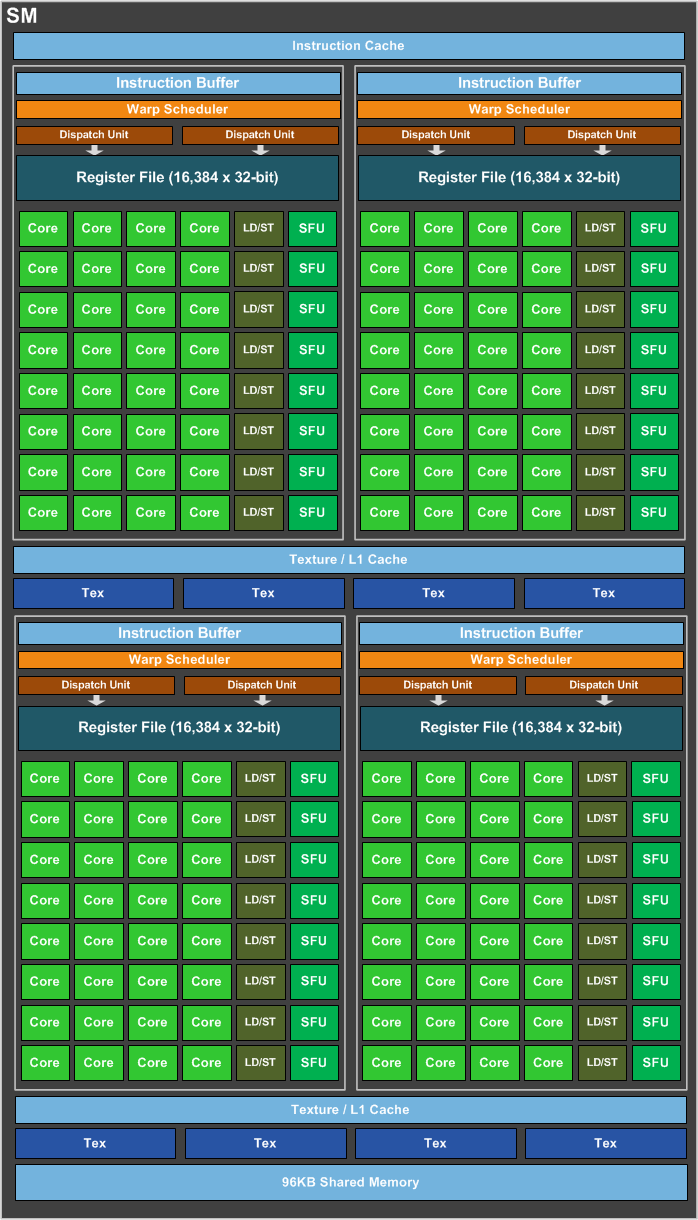

Consequently, GM204’s spiritual successor is composed of 7.2 billion transistors fit into an area of 314mm². Compare that to GM204’s 5.2 billion transistors on a 398mm² die. At its highest level, one GP104 GPU includes four Graphics Processing Clusters. Each GPC includes five Thread/Texture Processing Clusters and a raster engine. Broken down further, a TPC combines one Streaming Multiprocessor and a PolyMorph engine. The SM combines 128 single-precision CUDA cores, 256KB of register file capacity, 96KB of shared memory, 48KB of L1/texture cache and eight texture units. Meanwhile, the fourth-generation PolyMorph engine includes a new block of logic that sits at the end of the geometry pipeline and ahead of the raster unit for handling Nvidia’s Simultaneous Multi-Projection feature (more on this shortly). Add all of that up and you get 20 SMs, totaling 2560 CUDA cores and 160 texture units.
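The roll-up from GPCs down to CUDA cores is simple multiplication. Here's a minimal sketch; the `GpuLayout` class and its field names are hypothetical, chosen only to mirror the hierarchy described above.

```python
from dataclasses import dataclass


# Hypothetical model of GP104's shader hierarchy:
# GPCs -> TPCs -> SMs -> CUDA cores / texture units.
@dataclass(frozen=True)
class GpuLayout:
    gpcs: int              # Graphics Processing Clusters
    tpcs_per_gpc: int      # Thread/Texture Processing Clusters per GPC
    sms_per_tpc: int       # Streaming Multiprocessors per TPC
    cores_per_sm: int      # single-precision CUDA cores per SM
    tex_units_per_sm: int  # texture units per SM

    @property
    def sms(self) -> int:
        return self.gpcs * self.tpcs_per_gpc * self.sms_per_tpc

    @property
    def cuda_cores(self) -> int:
        return self.sms * self.cores_per_sm

    @property
    def texture_units(self) -> int:
        return self.sms * self.tex_units_per_sm


gp104 = GpuLayout(gpcs=4, tpcs_per_gpc=5, sms_per_tpc=1,
                  cores_per_sm=128, tex_units_per_sm=8)
print(gp104.sms, gp104.cuda_cores, gp104.texture_units)  # 20 2560 160
```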

The GPU’s back-end features eight 32-bit memory controllers, yielding an aggregate 256-bit path, with eight ROPs and 256KB of L2 cache bound to each. Again, do the math and you get 64 ROPs and 2MB of shared L2. Although Nvidia’s GM204 block diagrams showed four 64-bit controllers and as many 16-ROP partitions, these were grouped together and functionally equivalent.
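The same multiplication applies to the back-end. In this sketch, `backend_totals` is a hypothetical helper; the GM204 call assumes its 2MB of L2 is split evenly (512KB) across the four partitions Nvidia's diagrams showed.

```python
from typing import Tuple


def backend_totals(controllers: int, bus_bits_each: int,
                   rops_each: int, l2_kb_each: int) -> Tuple[int, int, float]:
    """Return (bus width in bits, total ROPs, total L2 in MB)."""
    return (controllers * bus_bits_each,
            controllers * rops_each,
            controllers * l2_kb_each / 1024)


# GP104 as described: eight 32-bit controllers, 8 ROPs and 256KB of L2 each.
print(backend_totals(8, 32, 8, 256))   # (256, 64, 2.0)

# GM204 as drawn: four 64-bit controllers with 16 ROPs each.
# Assumption: 512KB of L2 per partition.
print(backend_totals(4, 64, 16, 512))  # (256, 64, 2.0)
```

Both groupings multiply out to the same 256-bit bus, 64 ROPs and 2MB of L2, which is why the difference is only one of presentation.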

On that note, a few of GP104’s structural specifications sound similar to parts of GM204, and indeed this new GPU is constructed on a foundation of its predecessor’s building blocks. That’s not a bad thing. If you remember, the Maxwell architecture put a big emphasis on efficiency without shaking up Kepler’s strong points too much. The same applies here.

Adding four SMs might not seem like a recipe for dramatically higher performance. However, GP104 has a few secret weapons up its sleeve. One is substantially higher clock rates. GeForce GTX 1080’s base GPU frequency is 1607MHz; GM204, in comparison, was spec’d for 1126MHz on GeForce GTX 980. The GPU Boost rate is 1733MHz, and we’ve taken our sample up as high as 2100MHz using a beta build of EVGA’s PrecisionX utility. What’d it take to make that headroom possible? According to Jonah Alben, senior vice president of GPU engineering, his team knew that TSMC’s 16FF+ would change the processor’s design, so they put an emphasis on optimizing timings in the chip to clean up paths that’d impede higher frequencies. As a result, GP104’s single-precision compute performance reaches 8228 GFLOPS at the base clock, compared to GeForce GTX 980’s 4612 GFLOPS. And texel fill rate jumps from the 980’s 155.6 GT/s to 277.3 GT/s (both at their GPU Boost frequencies).
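These headline numbers fall out of core counts and clocks. The sketch below assumes the standard convention that each CUDA core retires one fused multiply-add (two FLOPs) per clock and each texture unit delivers one filtered texel per clock; the function names are illustrative.

```python
def peak_fp32_gflops(cuda_cores: int, clock_mhz: float) -> float:
    # One fused multiply-add (2 FLOPs) per CUDA core per clock.
    return cuda_cores * 2 * clock_mhz / 1000


def texel_fill_rate_gts(texture_units: int, clock_mhz: float) -> float:
    # Rule of thumb: one filtered texel per texture unit per clock.
    return texture_units * clock_mhz / 1000


print(f"GTX 1080: {peak_fp32_gflops(2560, 1607):.0f} GFLOPS (base), "
      f"{texel_fill_rate_gts(160, 1733):.1f} GT/s (boost)")
print(f"GTX 980:  {peak_fp32_gflops(2048, 1126):.0f} GFLOPS (base), "
      f"{texel_fill_rate_gts(128, 1216):.1f} GT/s (boost)")
```

Plugging GTX 980's 1126MHz base clock into the fill-rate formula instead gives 144.1 GT/s, so base- and boost-clock figures shouldn't be compared directly.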

| GPU | GeForce GTX 1080 (GP104) | GeForce GTX 980 (GM204) |
|---|---|---|
| SMs | 20 | 16 |
| CUDA Cores | 2560 | 2048 |
| Base Clock | 1607MHz | 1126MHz |
| GPU Boost Clock | 1733MHz | 1216MHz |
| GFLOPS (Base Clock) | 8228 | 4612 |
| Texture Units | 160 | 128 |
| Texel Fill Rate (GPU Boost Clock) | 277.3 GT/s | 155.6 GT/s |
| Memory Data Rate | 10 Gb/s | 7 Gb/s |
| Memory Bandwidth | 320 GB/s | 224 GB/s |
| ROPs | 64 | 64 |
| L2 Cache | 2MB | 2MB |
| TDP | 180W | 165W |
| Transistors | 7.2 billion | 5.2 billion |
| Die Size | 314mm² | 398mm² |
| Process Node | 16nm | 28nm |

Similarly, although we’re still talking about a back-end with 64 ROPs and a 256-bit memory path, Nvidia incorporates GDDR5X memory to augment available bandwidth. The company put a lot of effort into spinning this as positively as possible, given that multiple AMD cards employ HBM and Nvidia’s own Tesla P100 sports HBM2. But it seems as though there’s not enough HBM2 to go around, and the company isn’t willing to accept the limitations of first-generation HBM (mainly, a 4GB ceiling with four 1GB stacks, or the challenges associated with eight 1GB stacks). Thus, we have GDDR5X, which must also be in short supply, given that the GeForce GTX 1070 uses GDDR5. Let’s not marginalize the significance of what we’re getting, though. GDDR5 enabled data rates of 7 Gb/s on the GeForce GTX 980. Over a 256-bit bus, that yielded up to 224 GB/s of throughput. GDDR5X begins life at 10 Gb/s, pushing bandwidth to 320 GB/s (a ~43% increase). Nvidia says it does this without increasing power consumption, thanks to a redesigned I/O circuit.
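The bandwidth figures follow from per-pin data rate times bus width. A minimal sketch of that arithmetic (the function name is illustrative):

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    # Per-pin data rate (Gb/s) x number of data pins / 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


gtx980 = memory_bandwidth_gbs(7, 256)    # GDDR5 at 7 Gb/s
gtx1080 = memory_bandwidth_gbs(10, 256)  # GDDR5X at 10 Gb/s
gain = (gtx1080 / gtx980 - 1) * 100
print(f"{gtx980:.0f} GB/s -> {gtx1080:.0f} GB/s (+{gain:.0f}%)")
```

Because the bus width stays at 256 bits, the entire ~43% gain comes from the higher per-pin data rate.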

Just as the Maxwell architecture made more effective use of bandwidth through optimized caches and compression algorithms, so too does Pascal implement new lossless techniques to extract savings in several places along the memory subsystem. GP104’s delta color compression tries to achieve 2:1 savings, and this mode is purportedly enhanced so it can be used more often. There’s also a new 4:1 mode that covers cases where per-pixel differences are very small and compressible into even less space. Finally, Pascal has a new 8:1 mode that combines 4:1 constant compression of 2x2 blocks with 2:1 compression of the differences between them.
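To see why small per-pixel differences compress well, consider a toy delta scheme. This is not Nvidia's algorithm, whose details aren't public; it assumes a 16-pixel block of single 8-bit values, stores the first pixel at full precision, and stores every other pixel as a fixed-width signed difference from it. A block "fits" a ratio if the packed result is no larger than that fraction of the raw size; the toy's 8:1 result only means a constant block fits in an eighth of its raw size, not Pascal's two-level 8:1 mode.

```python
from typing import Sequence


def signed_width(value: int) -> int:
    """Bits needed to store value in two's complement (zero needs none here)."""
    if value == 0:
        return 0
    magnitude = value if value > 0 else ~value
    return magnitude.bit_length() + 1


def packed_bits(block: Sequence[int], bits_per_pixel: int = 8) -> int:
    """Anchor pixel at full precision plus fixed-width deltas for the rest."""
    anchor = block[0]
    deltas = [p - anchor for p in block[1:]]
    delta_width = max((signed_width(d) for d in deltas), default=0)
    return bits_per_pixel + delta_width * len(deltas)


def best_ratio(block: Sequence[int], bits_per_pixel: int = 8) -> int:
    """Largest fixed ratio (8, 4 or 2) whose budget the block fits; else 1."""
    raw_bits = bits_per_pixel * len(block)
    needed = packed_bits(block, bits_per_pixel)
    for ratio in (8, 4, 2):
        if needed <= raw_bits // ratio:
            return ratio
    return 1  # store uncompressed


flat = [200] * 16                               # constant block
tiny_noise = [50 - (i % 2) for i in range(16)]  # deltas of 0 or -1
gradient = [100 + (i % 4) for i in range(16)]   # deltas of 0..3
busy = list(range(0, 256, 16))                  # large deltas
for name, block in [("flat", flat), ("tiny_noise", tiny_noise),
                    ("gradient", gradient), ("busy", busy)]:
    print(name, best_ratio(block))  # 8, 4, 2 and 1 respectively
```

The smaller the spread of differences, the fewer bits each delta needs, which is the intuition behind the 4:1 mode targeting blocks with very small per-pixel differences.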

Illustrated more simply, the first image above shows an uncompressed screen capture from Project CARS. The next shot shows elements that Maxwell could compress, replaced with magenta. Last, we see that Pascal compresses even more of the scene. According to Nvidia, this translates to a roughly 20% reduction in bytes that need to be fetched from memory per frame.
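One way to put that 20% in perspective is to ask how much uncompressed bandwidth would move the same data. This back-of-the-envelope sketch assumes the saving applies uniformly to all memory traffic, which Nvidia's single-scene figure doesn't guarantee.

```python
def effective_bandwidth_gbs(raw_gbs: float, byte_reduction: float) -> float:
    """Uncompressed bandwidth needed to move the same data in the same time."""
    # If compression trims the bytes fetched by `byte_reduction`, each byte
    # on the bus stands in for 1 / (1 - byte_reduction) bytes of frame data.
    return raw_gbs / (1 - byte_reduction)


print(f"{effective_bandwidth_gbs(320, 0.20):.0f} GB/s")
```

Under that assumption, GTX 1080's 320 GB/s behaves like roughly 400 GB/s of uncompressed bandwidth.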

