Ryzen 9 3900X vs. Core i9-9900K: Gaming Performance Scaling

Ryzen 9 3900X vs. Core i9-9900K: The great CPU scaling comparison, updated with additional DDR4 testing.

If we're talking pure performance, it's easy enough to point to the best graphics card for gaming and the best CPU for gaming. We've done extensive benchmarking and testing of every major GPU and CPU for decades. Today (which as I noted recently is a bad time to buy a new GPU), the fastest graphics card is the RTX 2080 Ti, and the fastest CPU for gaming is the Core i9-10900K. Our GPU test system still uses a Core i9-9900K (the newer stepping D, revision R0, which can improve performance 3-5% depending on the game), but we wanted to provide a complete look at how two of the top CPUs stack up, across a full suite of games and resolutions, with a much wider selection of graphics cards.

Update: We've added Intel with DDR4-3600 and AMD with DDR4-3200 results for the RTX 2080 Ti, to show how other factors can contribute to performance.

That sounds like a pretty simple idea, but what followed was quite a boatload of testing and retesting. The final results are in, and that's what we're going to show today. We started with our Core i9-9900K results, then retested with the latest Nvidia drivers. These tests all use the 451.48 drivers from late June, except for the 2080 Ti which was retested with 451.67 on both platforms just to get the most up-to-date numbers (it didn't change much). We did the same for AMD GPUs, running Adrenalin 2020 20.5.1 drivers on all of the cards, except the RX 5700 XT which was retested with the latest 20.7.1 drivers that arrived last week.

Our two test platforms are similarly equipped, though obviously the motherboards aren't the same. You can see the full set of test hardware for both systems to the right. The GPUs, OS, and drivers are the same versions (Windows 10 May 2020 update, version 2004). The motherboard and SSD are different, with the latter not really having any impact on performance.

Perhaps more importantly, we equipped the AMD system with DDR4-3600 memory, which has a slight impact on performance (but generally doesn't matter for the Intel test system). We used half of a 4x16GB kit of Corsair Dominator Platinum RGB memory, which for the record is a $620 kit on sale.

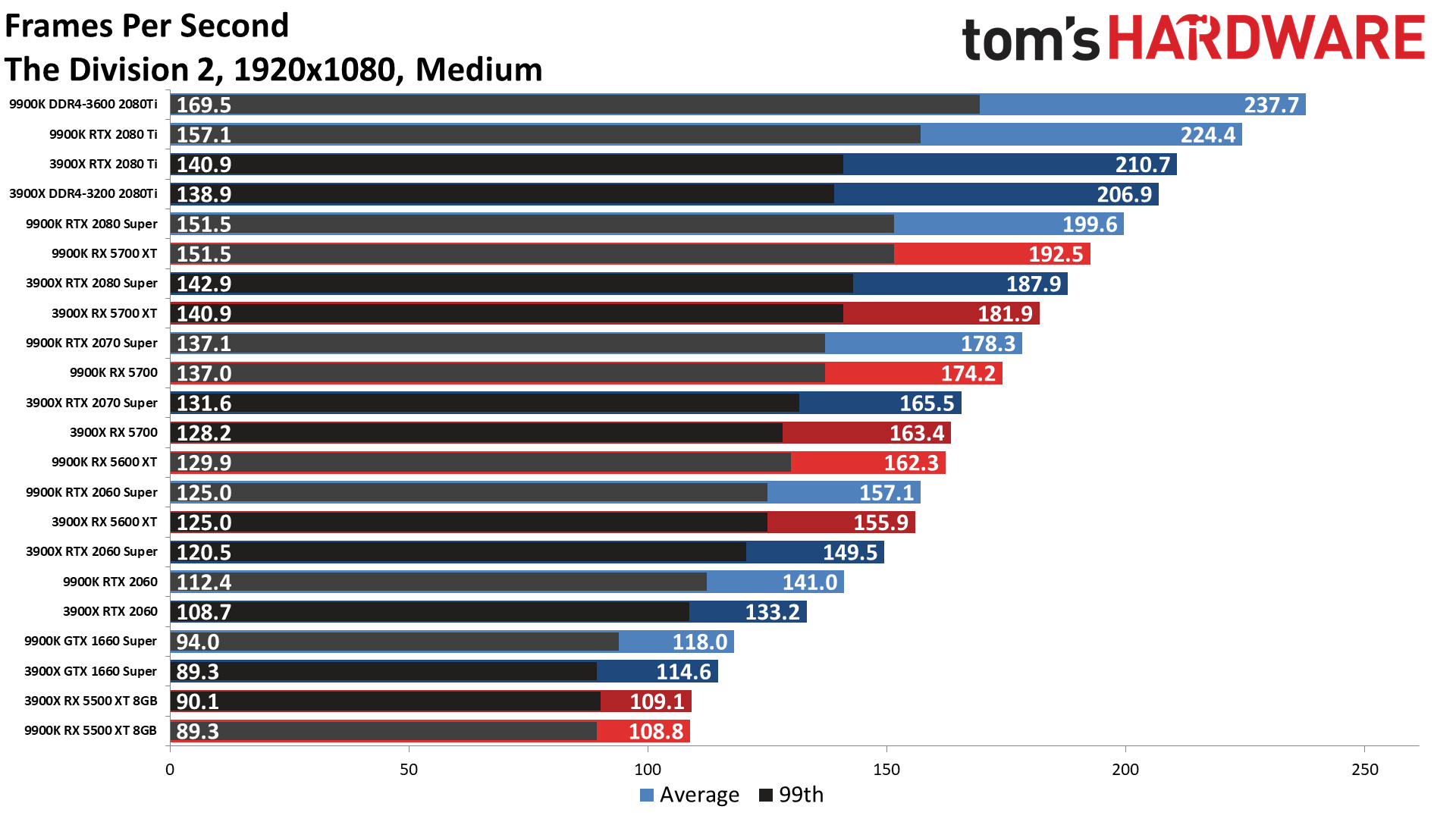

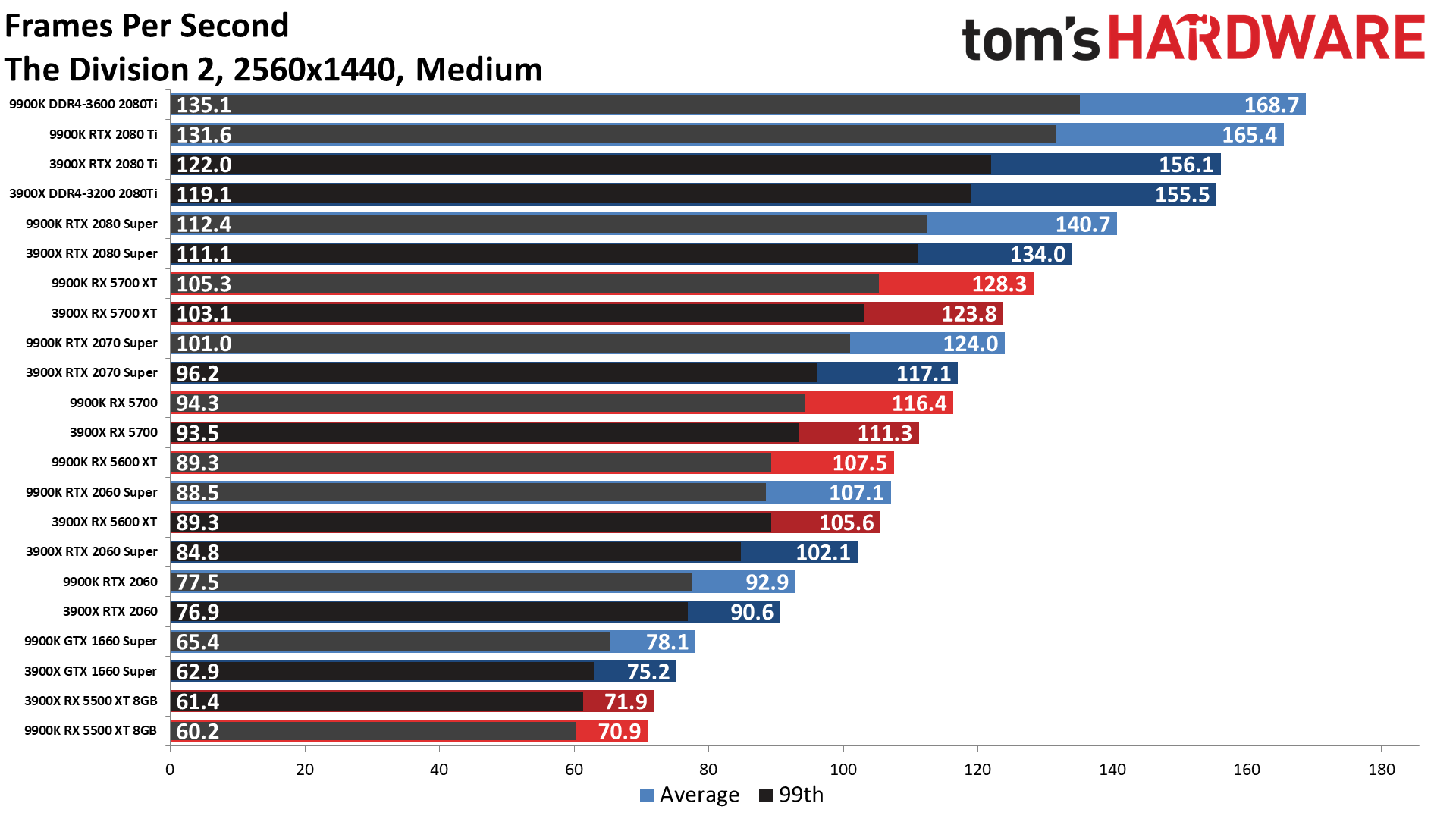

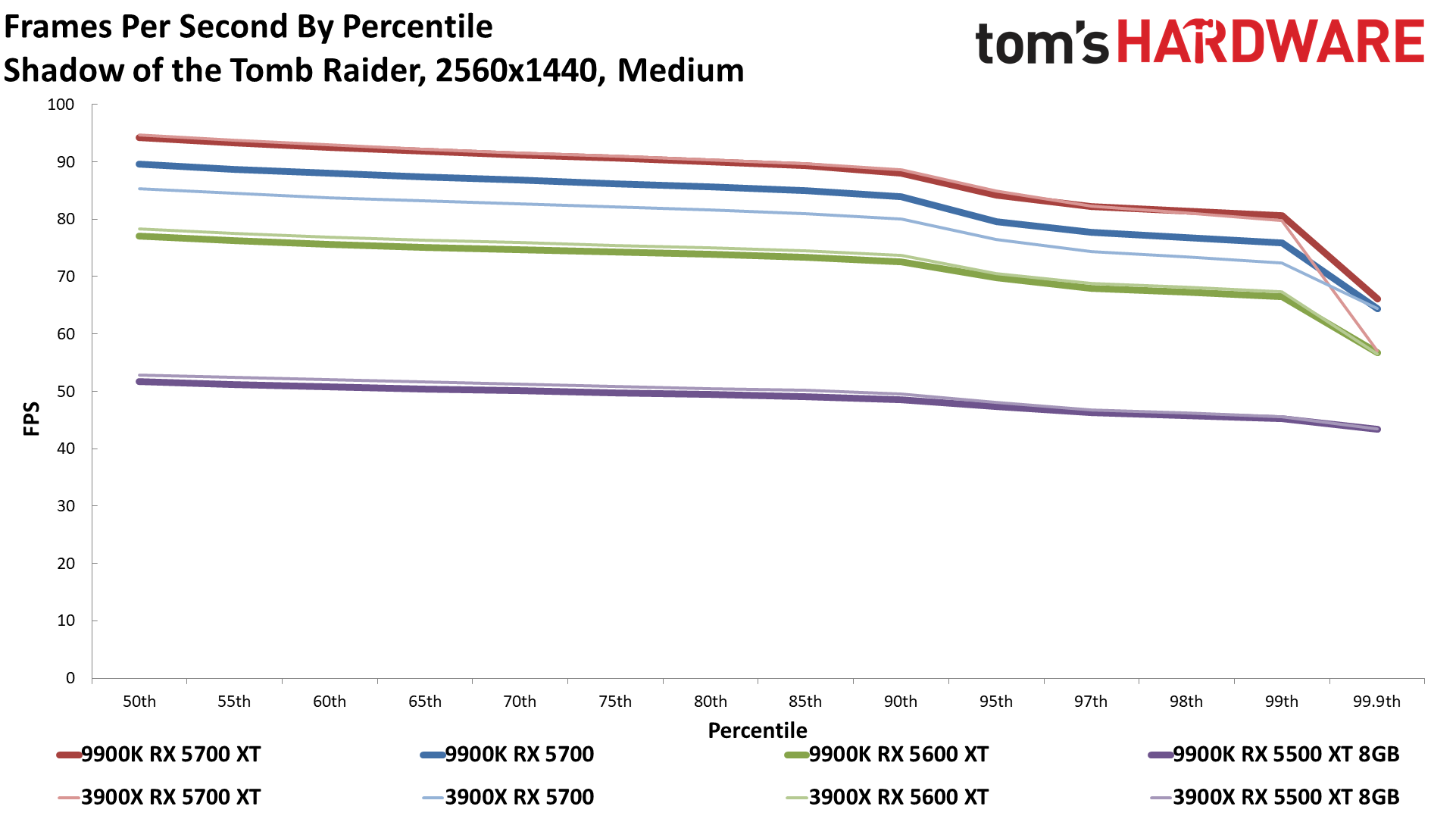

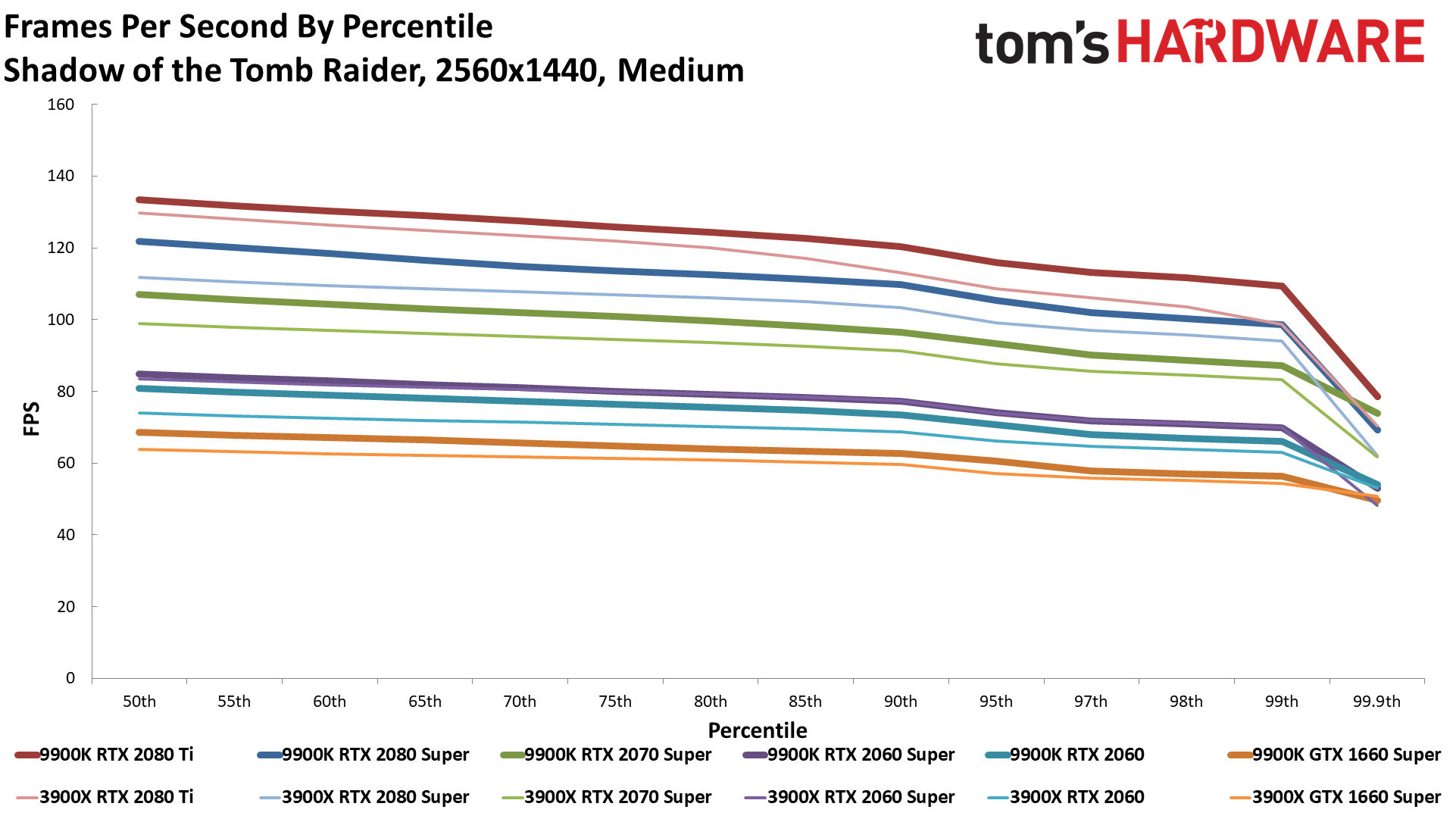

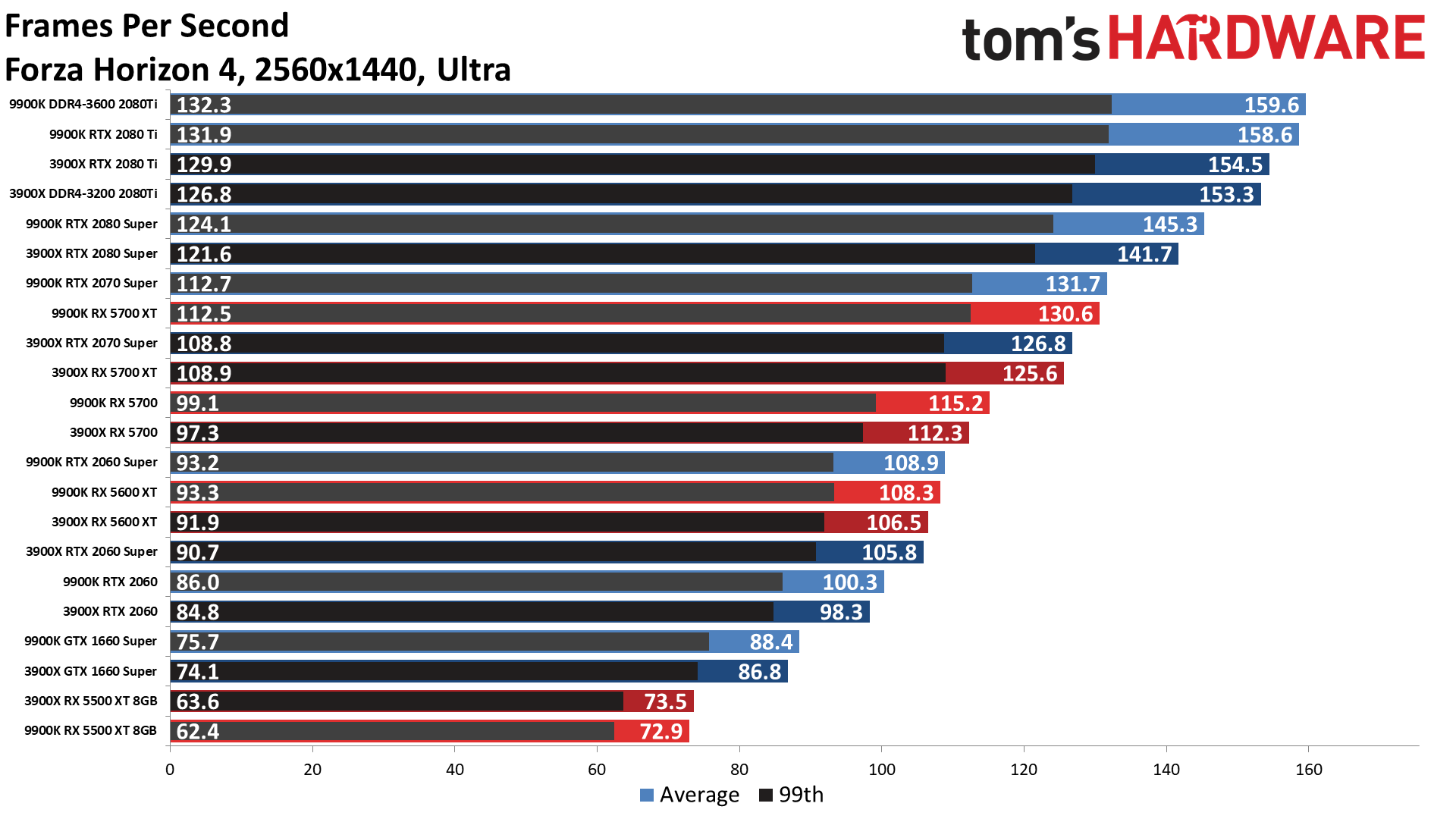

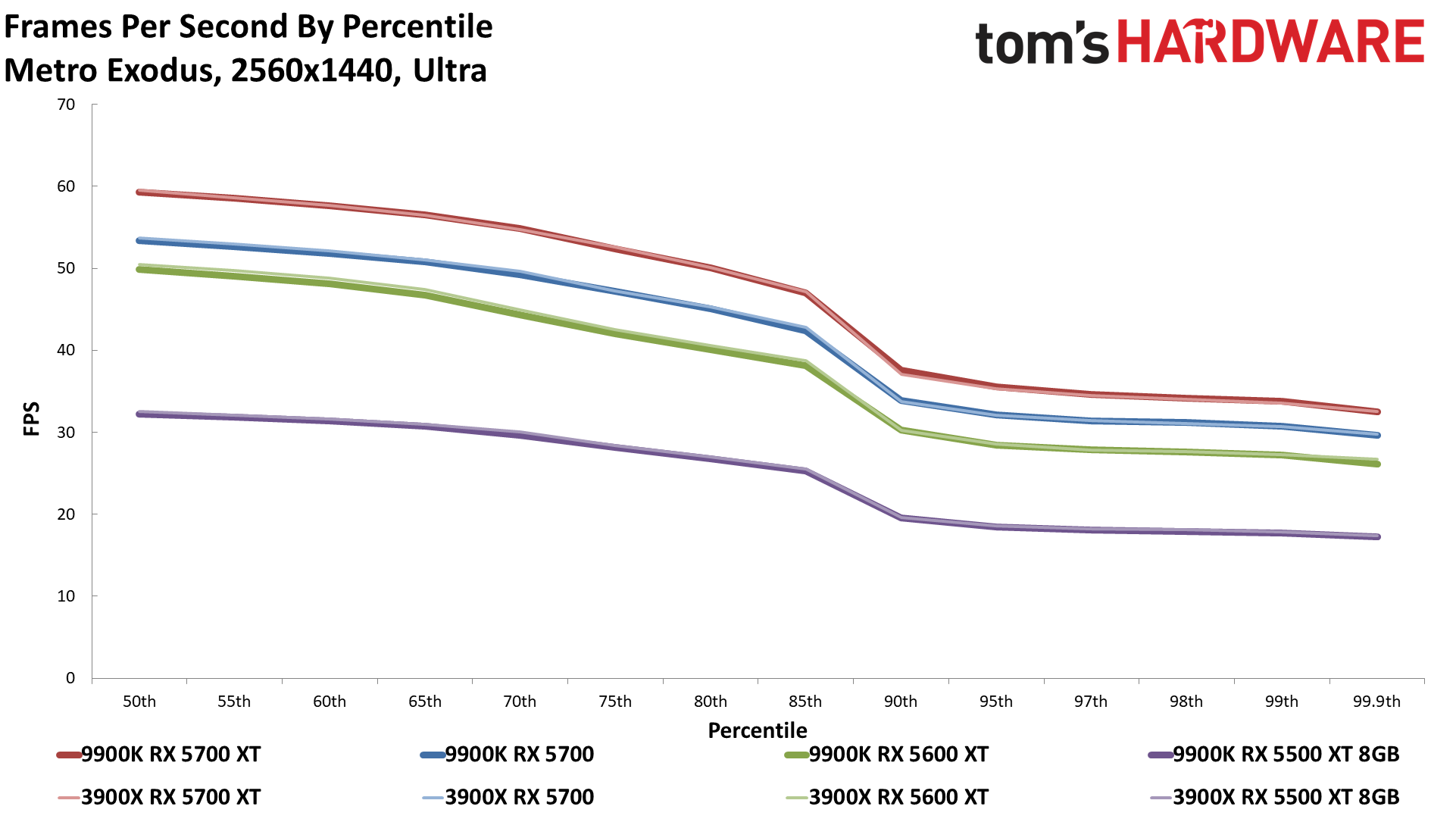

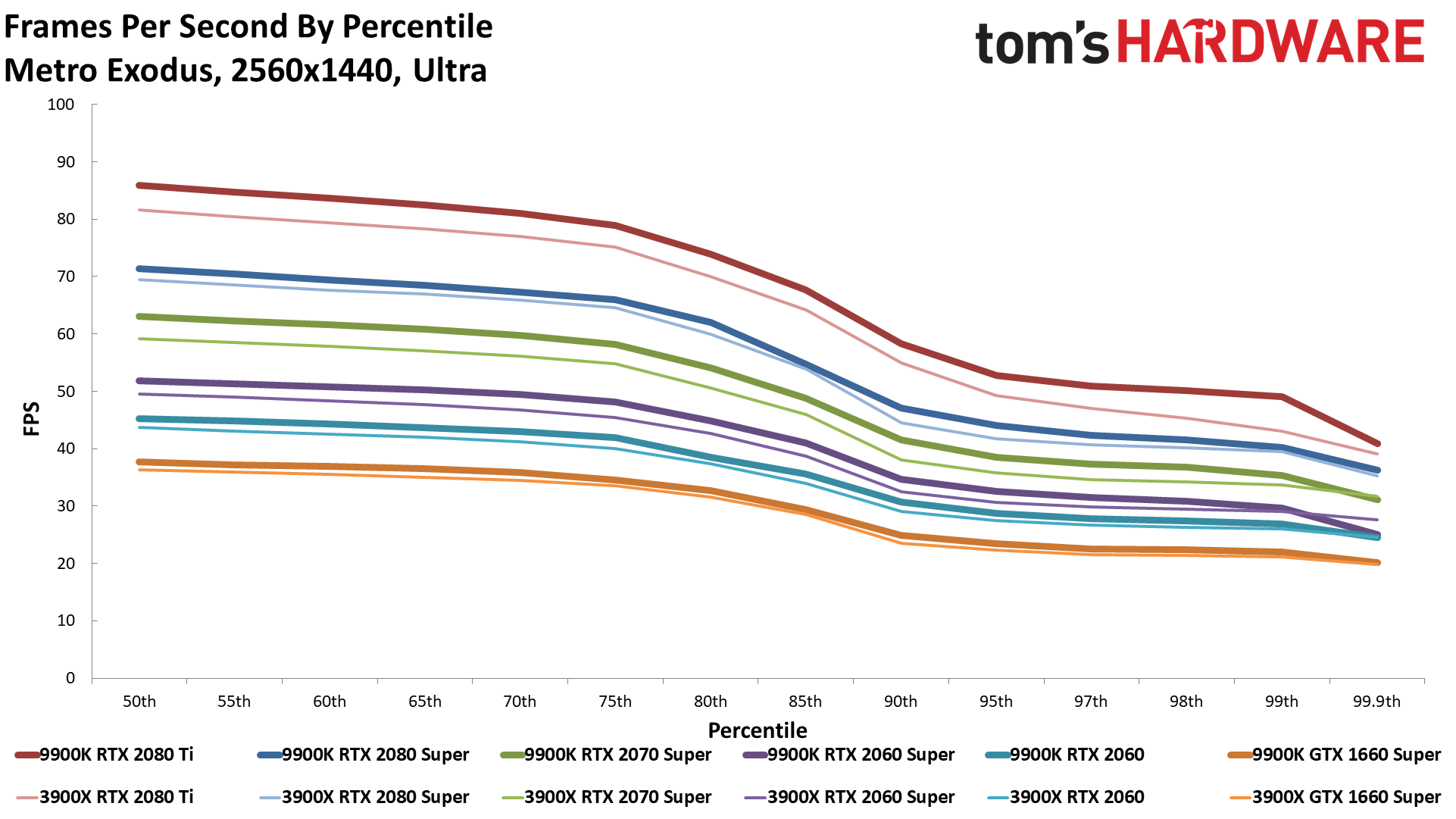

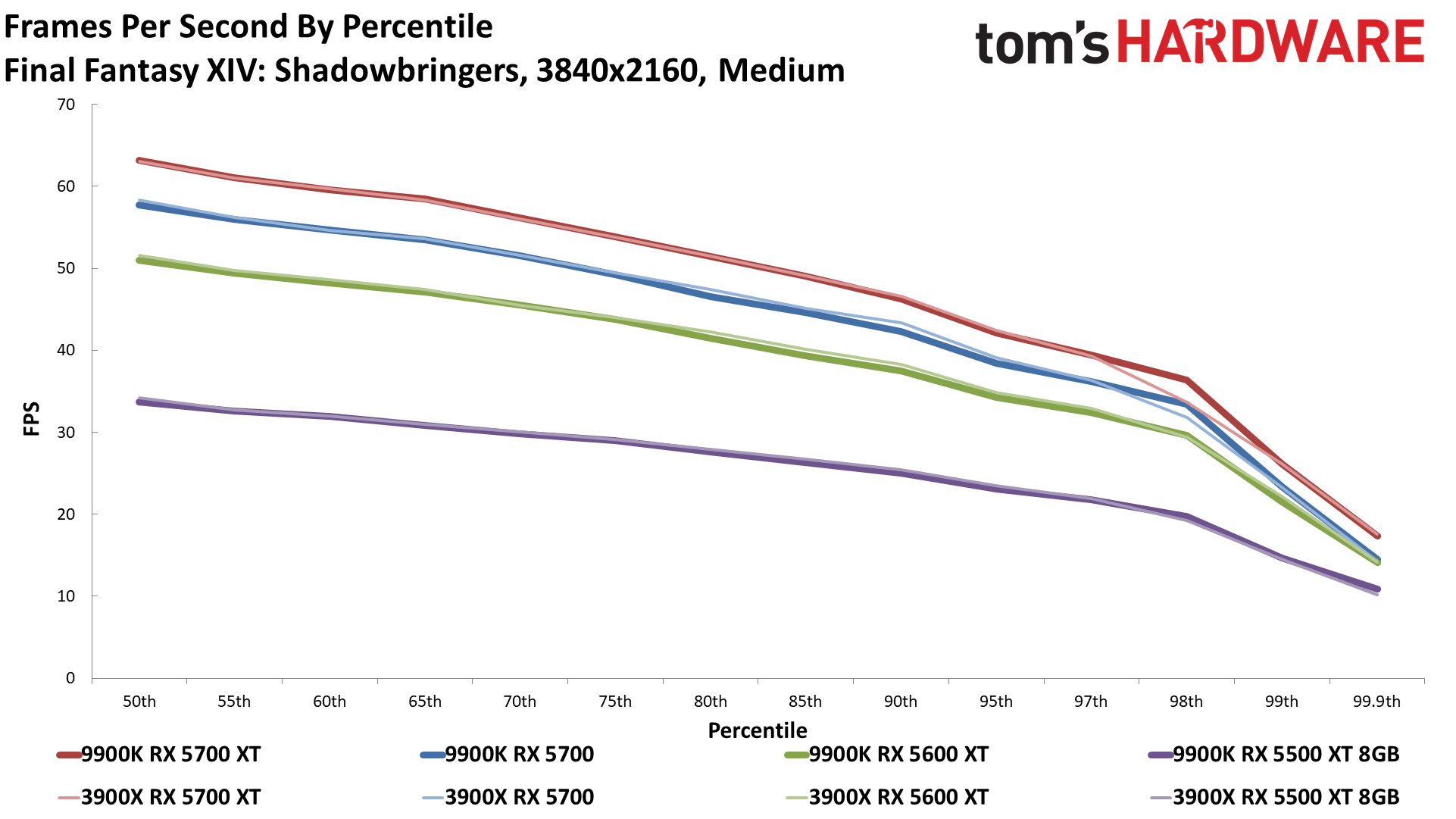

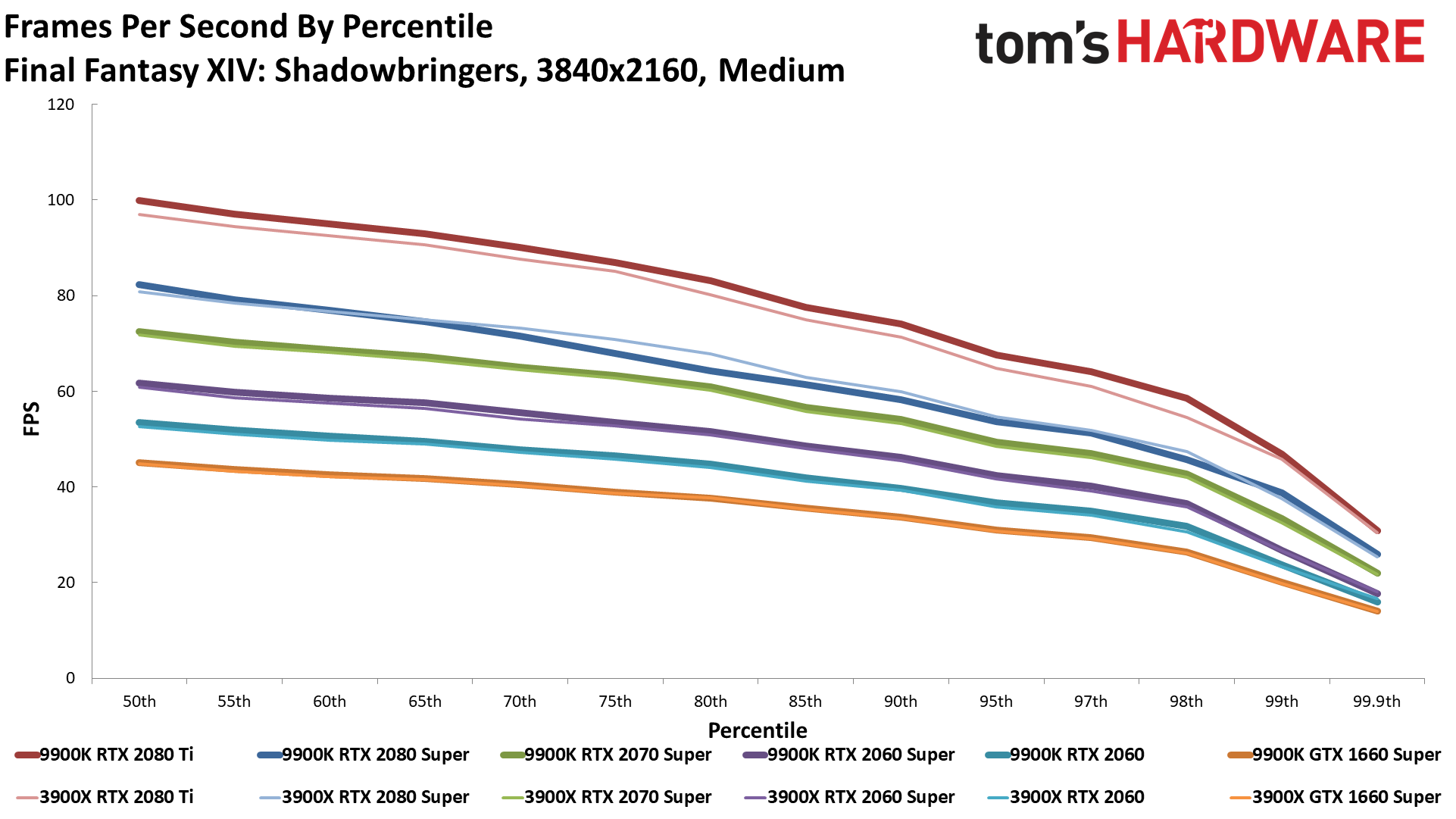

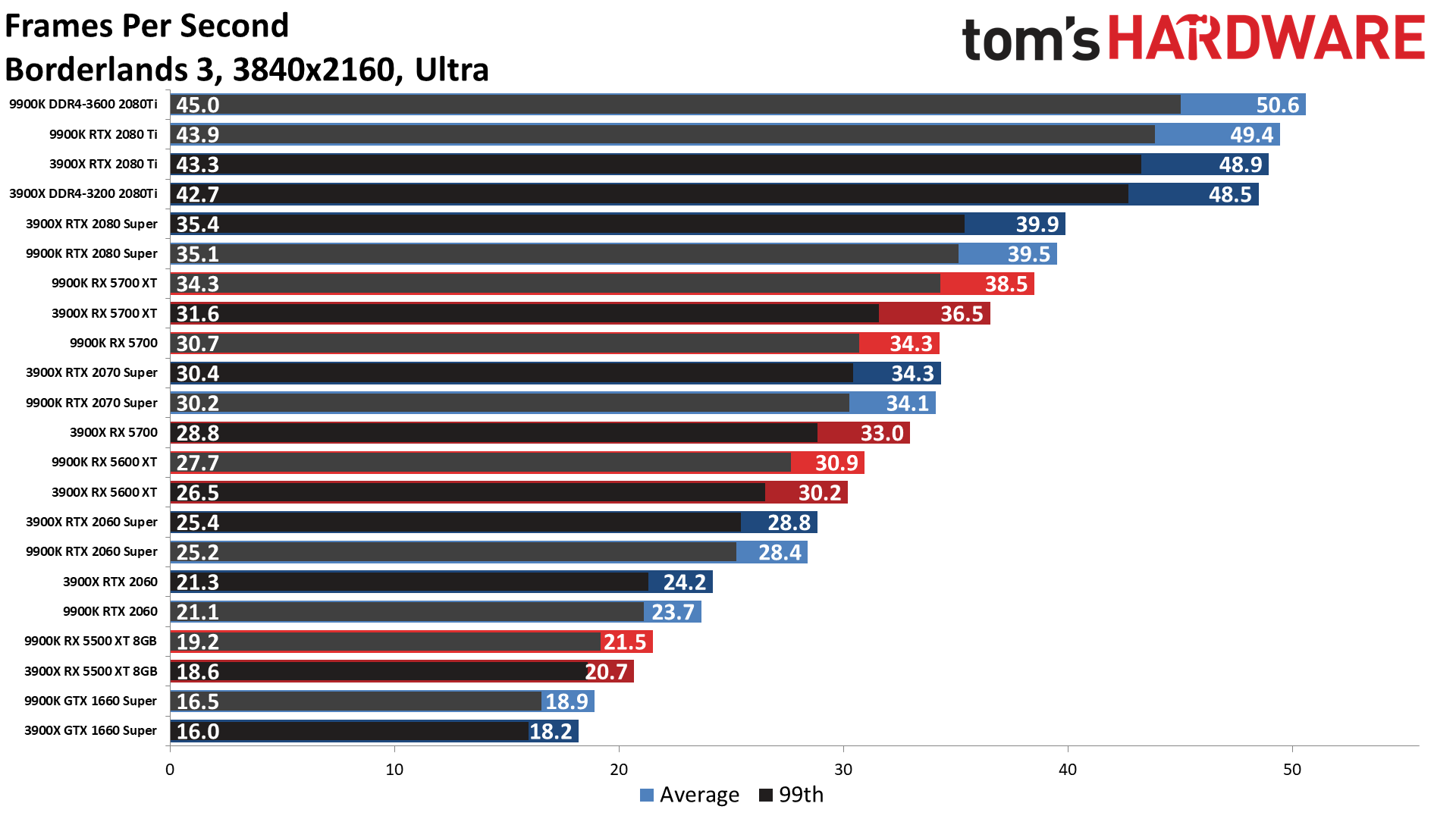

We've done additional testing of the RTX 2080 Ti on the AMD PC with the same set of DDR4-3200 CL16 memory used in the Intel system, and also run tests with the Intel PC using the DDR4-3600 CL16 kit, and have added both of those results to the bar charts. No surprise: the faster RAM helps AMD more than it helps Intel, though overall it's not a massive boost to either CPU. (Note that the Intel with DDR4-3600 and AMD with DDR4-3200 results are not in the line charts.)

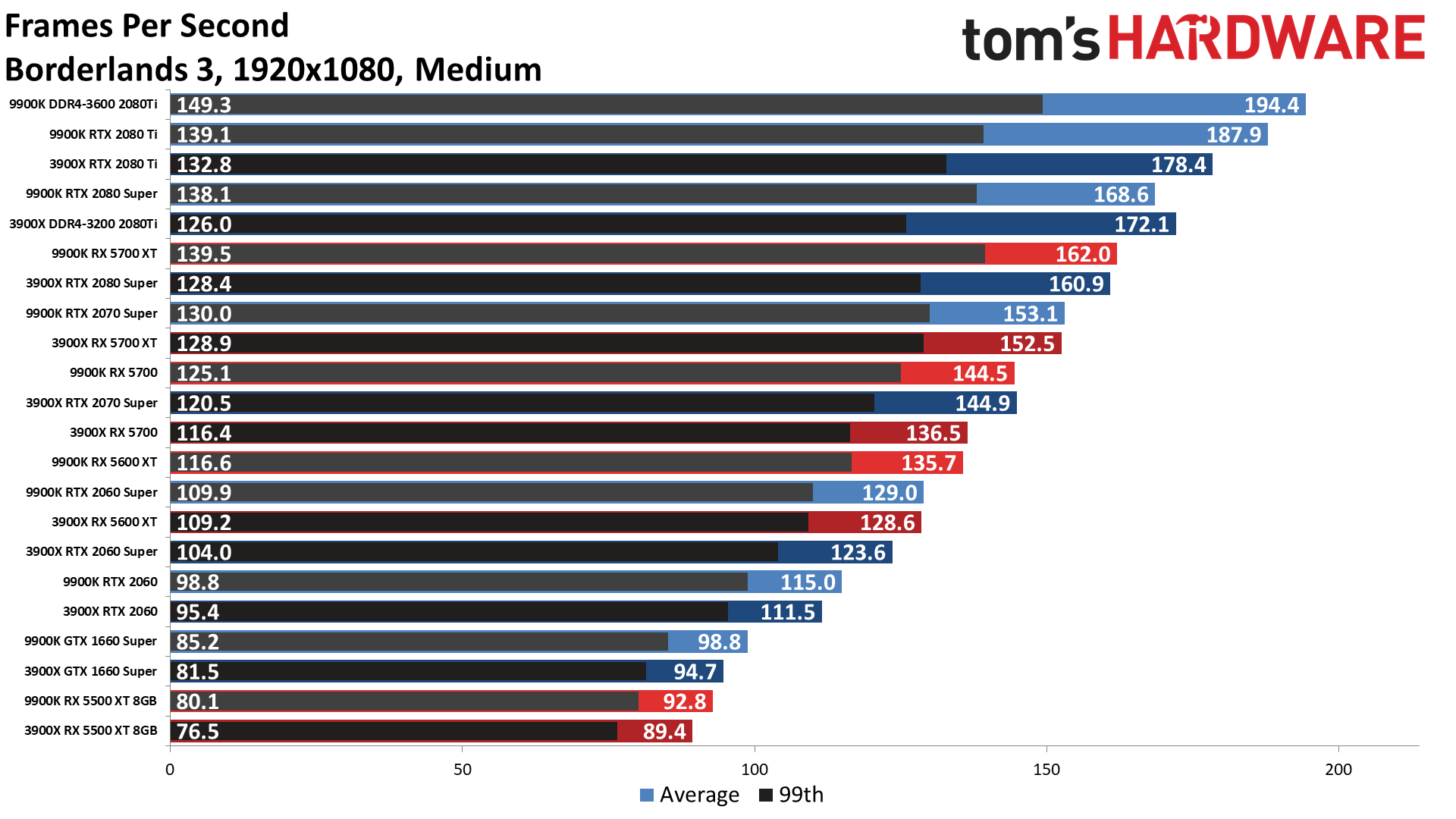

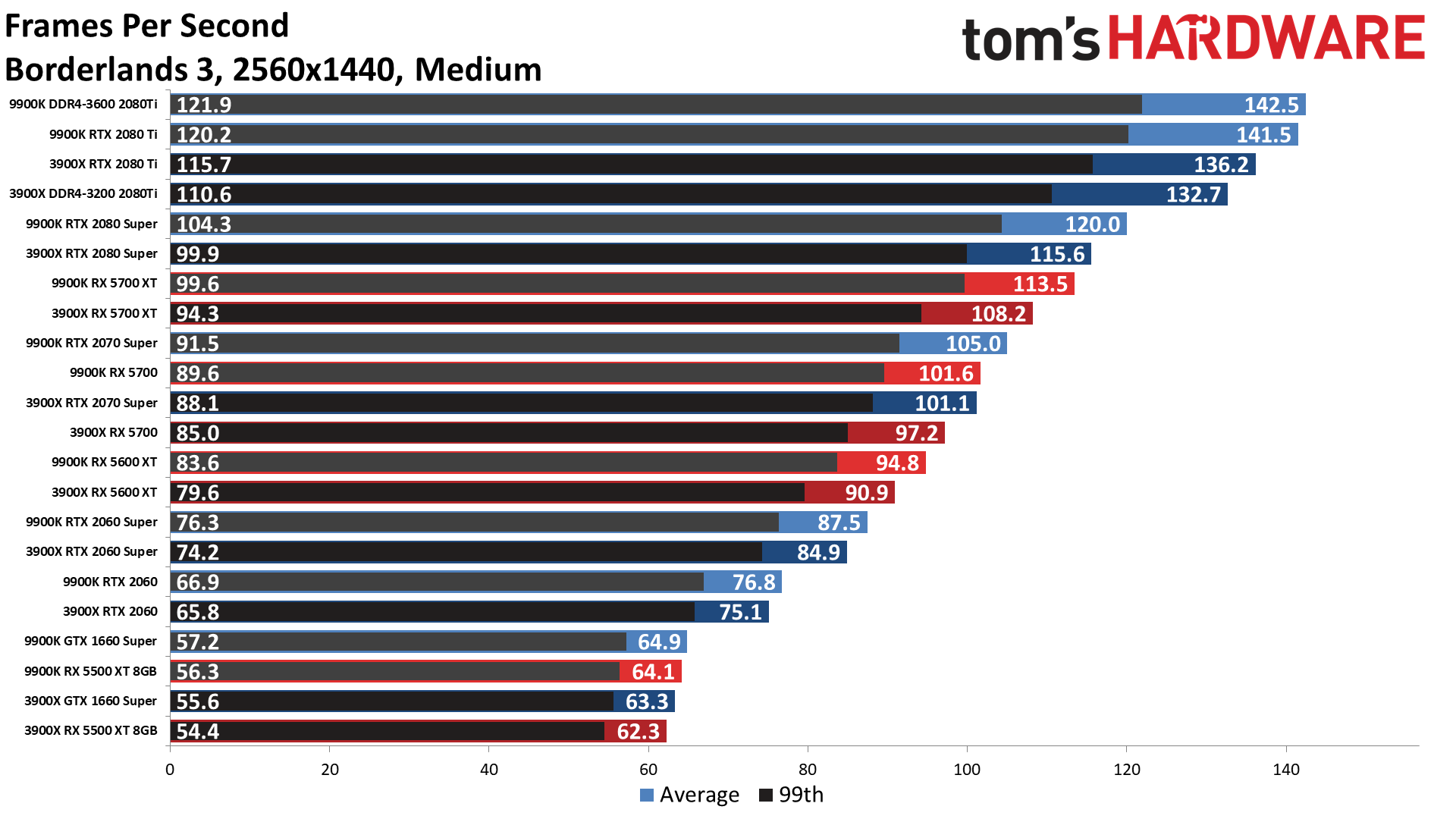

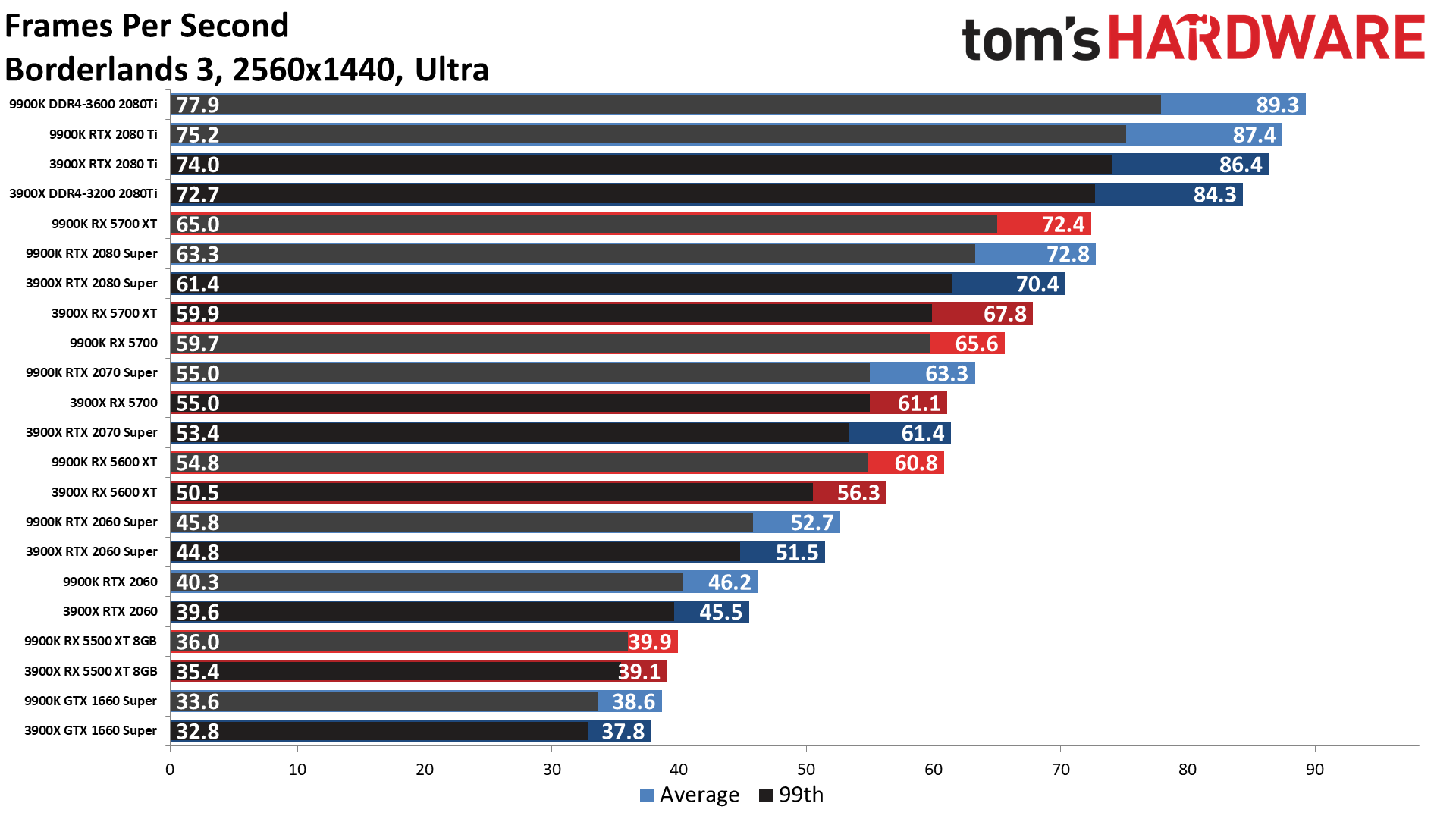

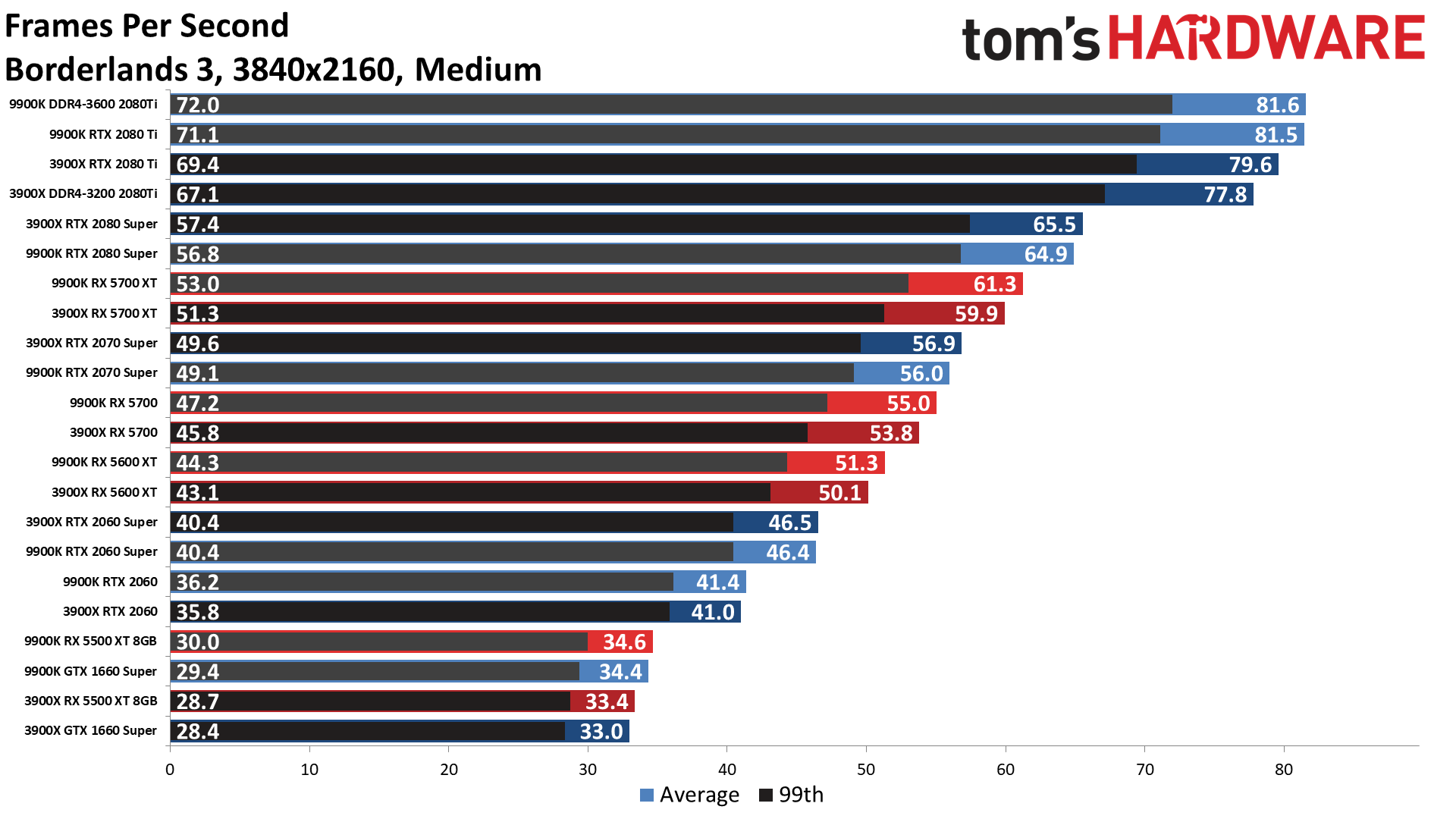

Each test on each setting was run at least three times, and the first run at each setting was discarded (because the GPU is often 'warming up'). We then selected the higher of the two remaining results. After that we checked for any major discrepancies and potentially ran additional tests — for example, Borderlands 3 came out with an update during the past two weeks that delivered substantially higher performance at the ultra preset, necessitating a bunch of retesting of that particular game.
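The run-selection rule described above can be sketched in a few lines of Python. This is only an illustration, not the actual test harness: the function name `select_result` and the sample fps values are hypothetical, and taking the maximum when there are more than three runs is an assumed generalization of "the higher of the two remaining results."

```python
def select_result(runs):
    """Pick the reported fps from repeated benchmark runs.

    Follows the procedure described in the text: require at least
    three runs, discard the first (warm-up) run, and report the
    highest of the remaining results.
    """
    if len(runs) < 3:
        raise ValueError("need at least three runs per setting")
    # runs[0] is the warm-up pass and is ignored.
    return max(runs[1:])


# Hypothetical fps values for one game at one setting.
runs = [141.2, 148.9, 149.6]
print(select_result(runs))  # 149.6
```

Reporting the better of the remaining runs, rather than their mean, favors the result least affected by background activity, at the cost of slightly optimistic numbers.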


Conventional wisdom is that Intel CPUs are faster for gaming, and it can make up to a 10-15% difference at 1080p ultra when using an RTX 2080 Ti. Run at higher resolutions like 1440p or 4K, or use a slower GPU — or both — and the CPU is going to have far less of an impact. Let’s see just how correct 'conventional wisdom' is, whether you’d even notice the difference in most games, and whether PCIe Gen4 helps AMD’s GPUs make up any ground.

| Graphics Card | GPU | Power | Price |
|---|---|---|---|
| Nvidia GeForce RTX 2080 Ti | TU102 | 260W | $1,197.99 |
| Nvidia GeForce RTX 2080 Super | TU104 | 250W | $719.99 |
| Nvidia GeForce RTX 2070 Super | TU104 | 215W | $549.99 |
| AMD Radeon RX 5700 XT | Navi 10 | 225W | $379.99 |
| AMD Radeon RX 5700 | Navi 10 | 185W | $349.99 |
| Nvidia GeForce RTX 2060 Super | TU106 | 175W | $399.99 |
| AMD Radeon RX 5600 XT | Navi 10 | 150W | $259.99 |
| Nvidia GeForce RTX 2060 | TU106 | 160W | $314.99 |
| Nvidia GeForce GTX 1660 Super | TU116 | 125W | $229.99 |
| AMD Radeon RX 5500 XT 8GB | Navi 14 | 130W | $199.99 |

We have 180 charts, which we’re grouping into galleries of 30 charts for each of the resolution and setting combinations. We have overall averages plus nine games, with two separate percentile scaling charts (for AMD and Nvidia GPUs on each platform). We’ll start at 1080p medium and move up to 4K ultra with limited discussion of each set of results.

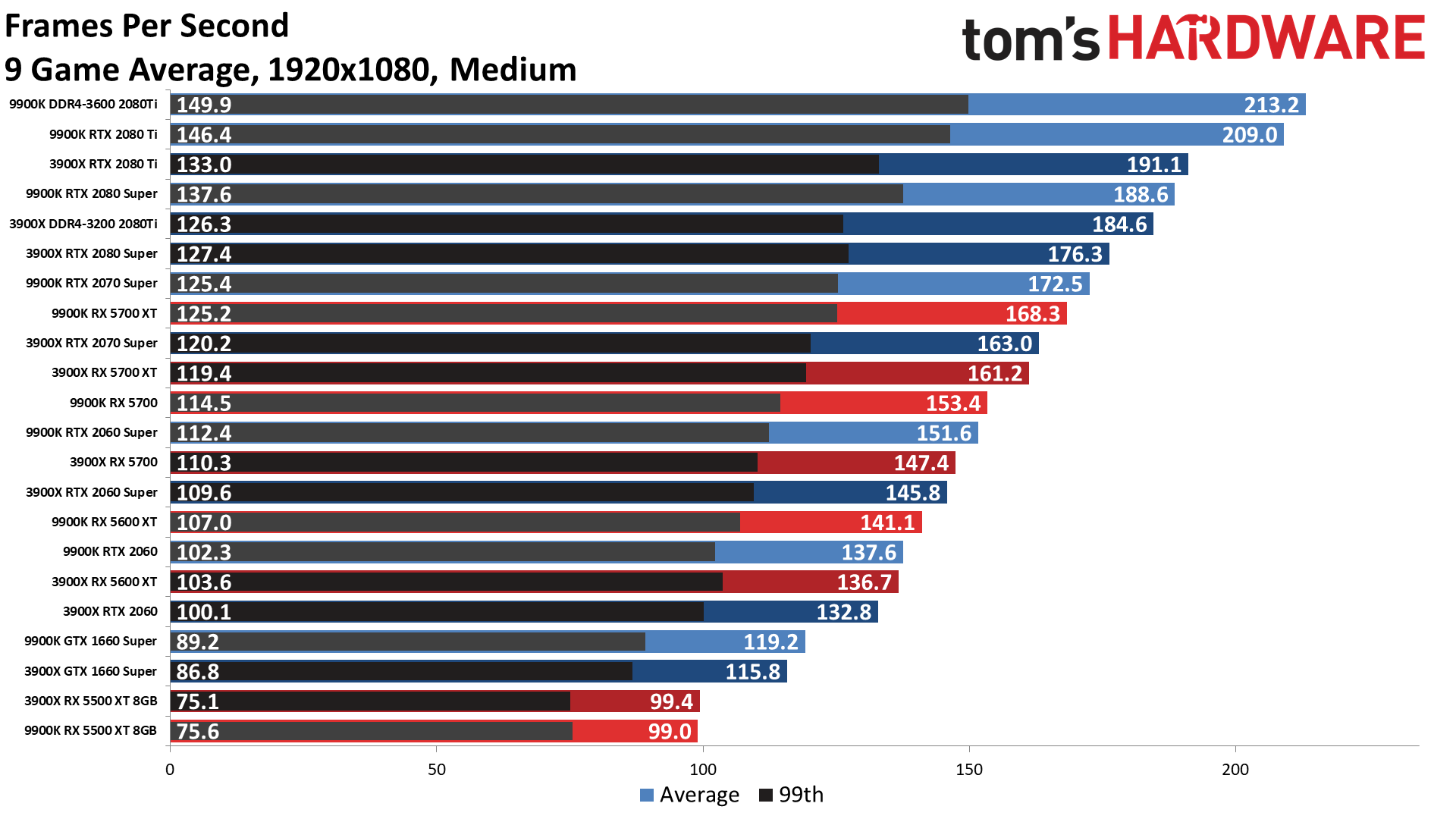

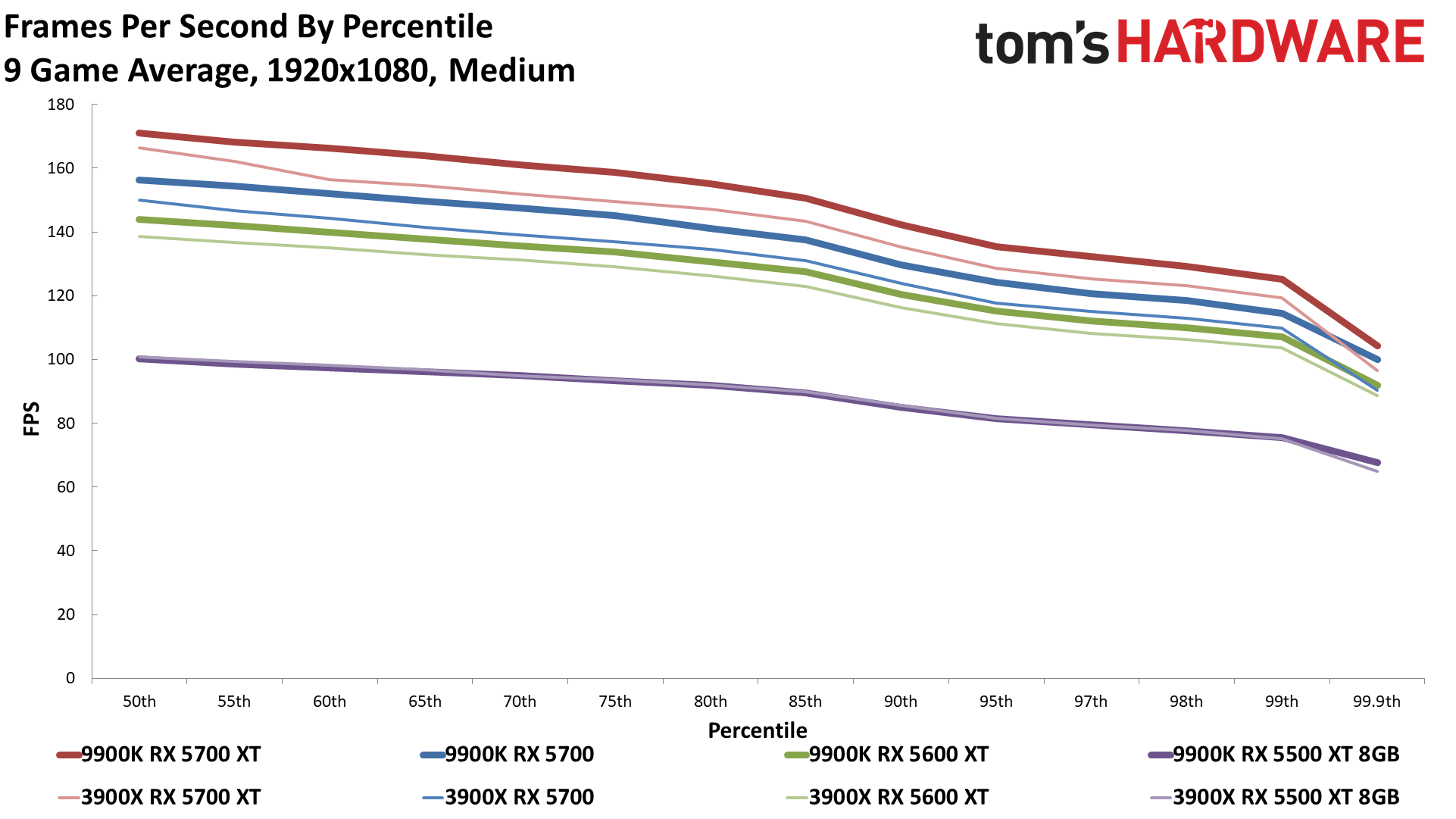

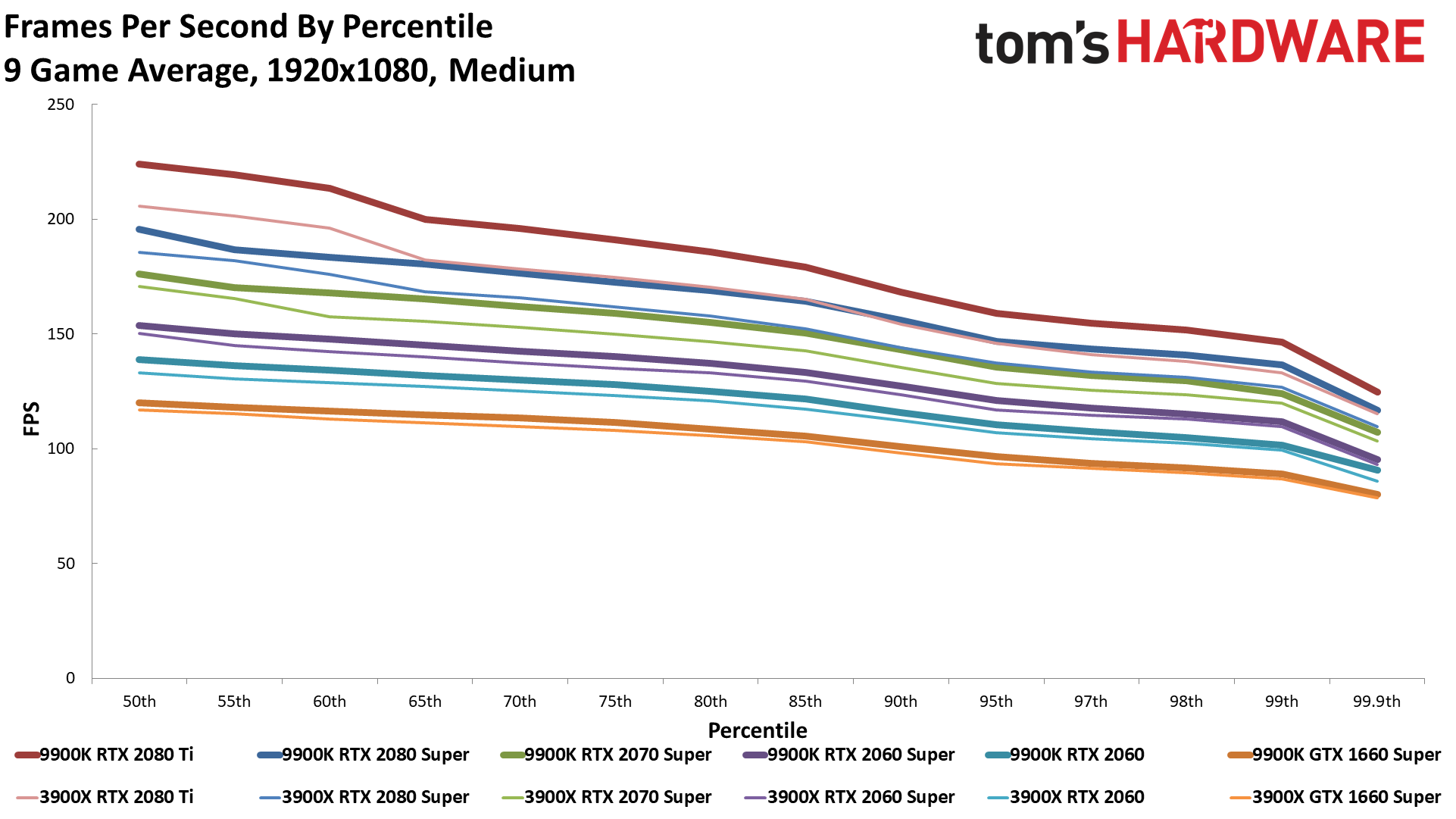

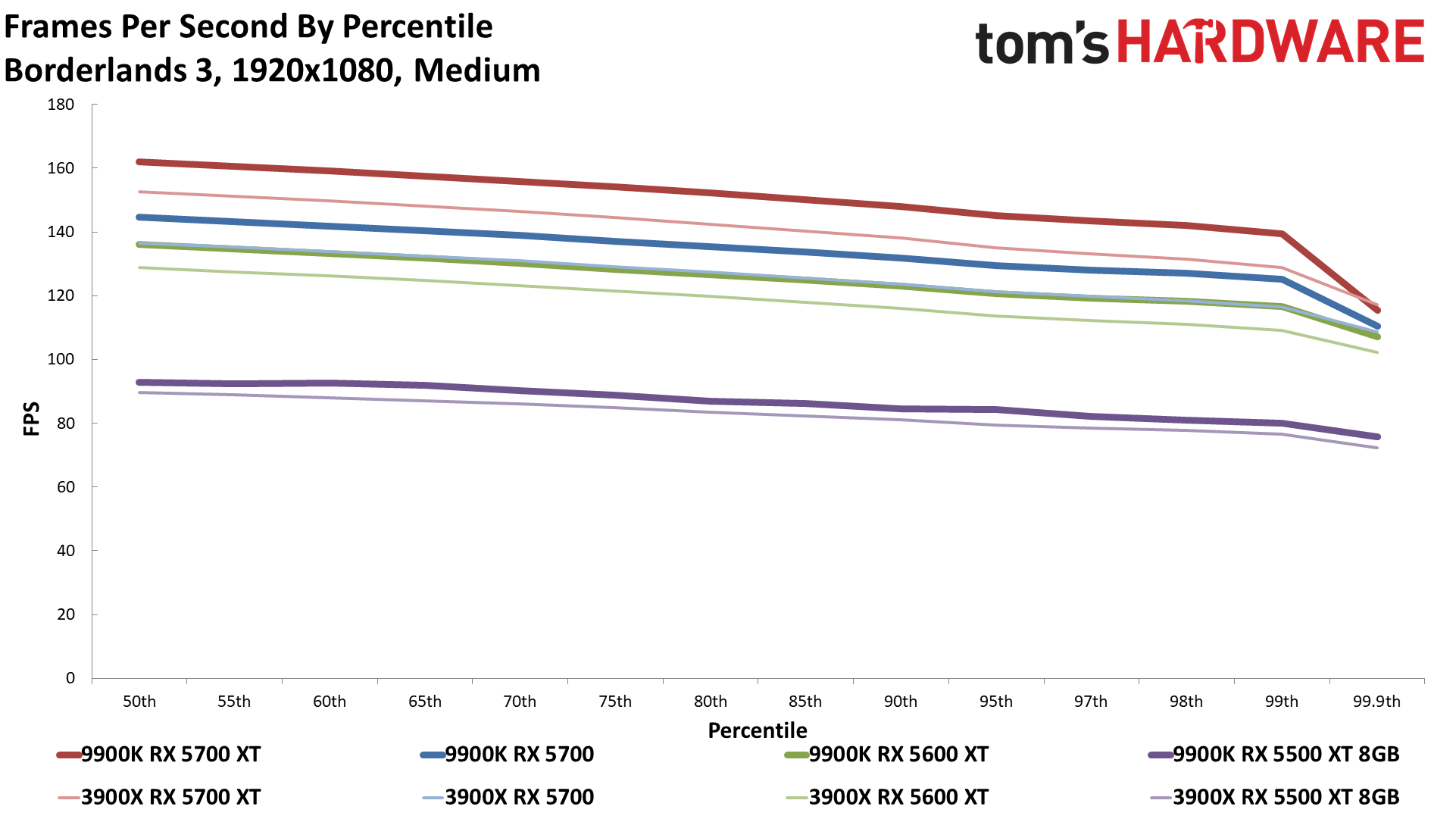

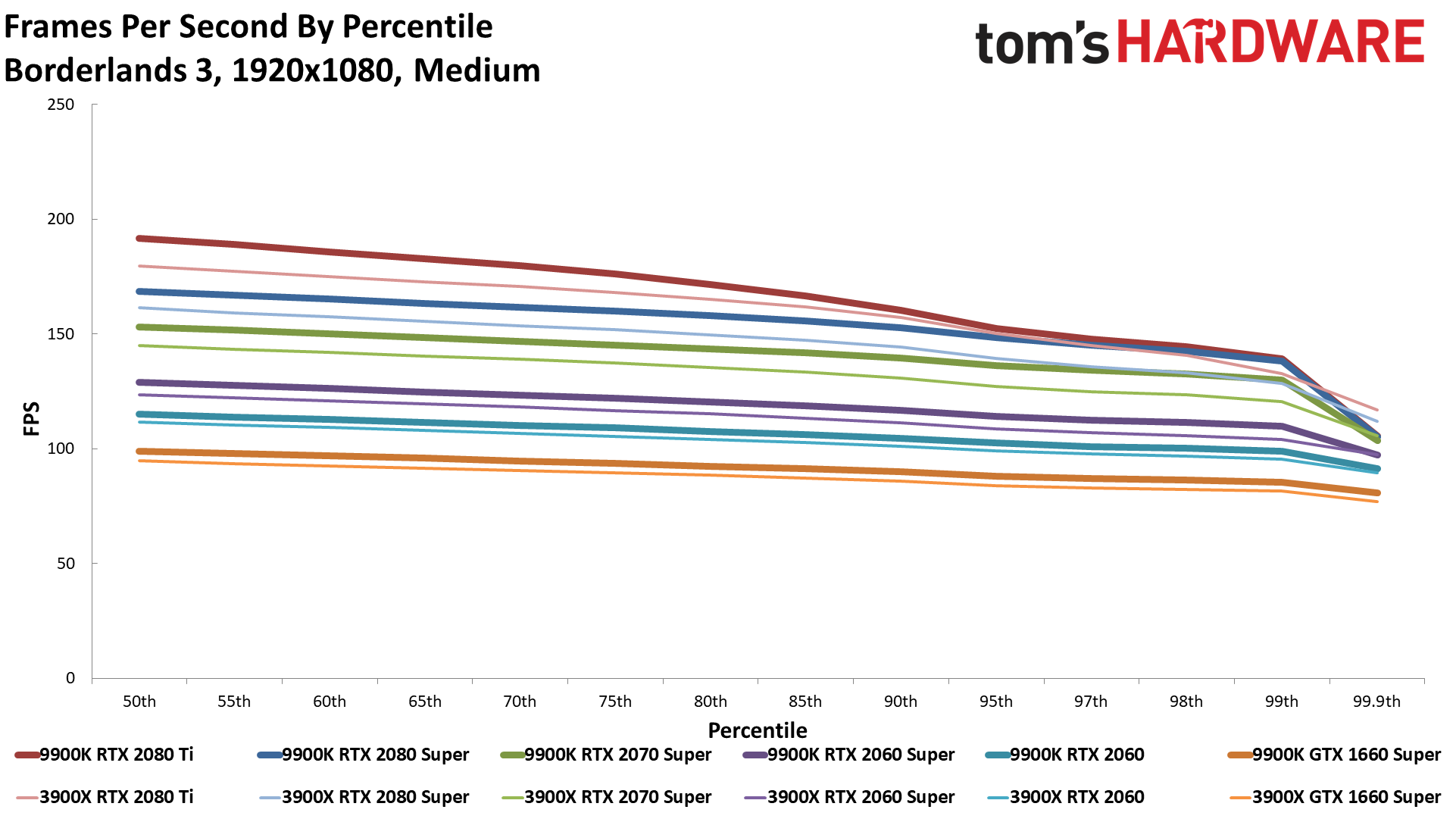

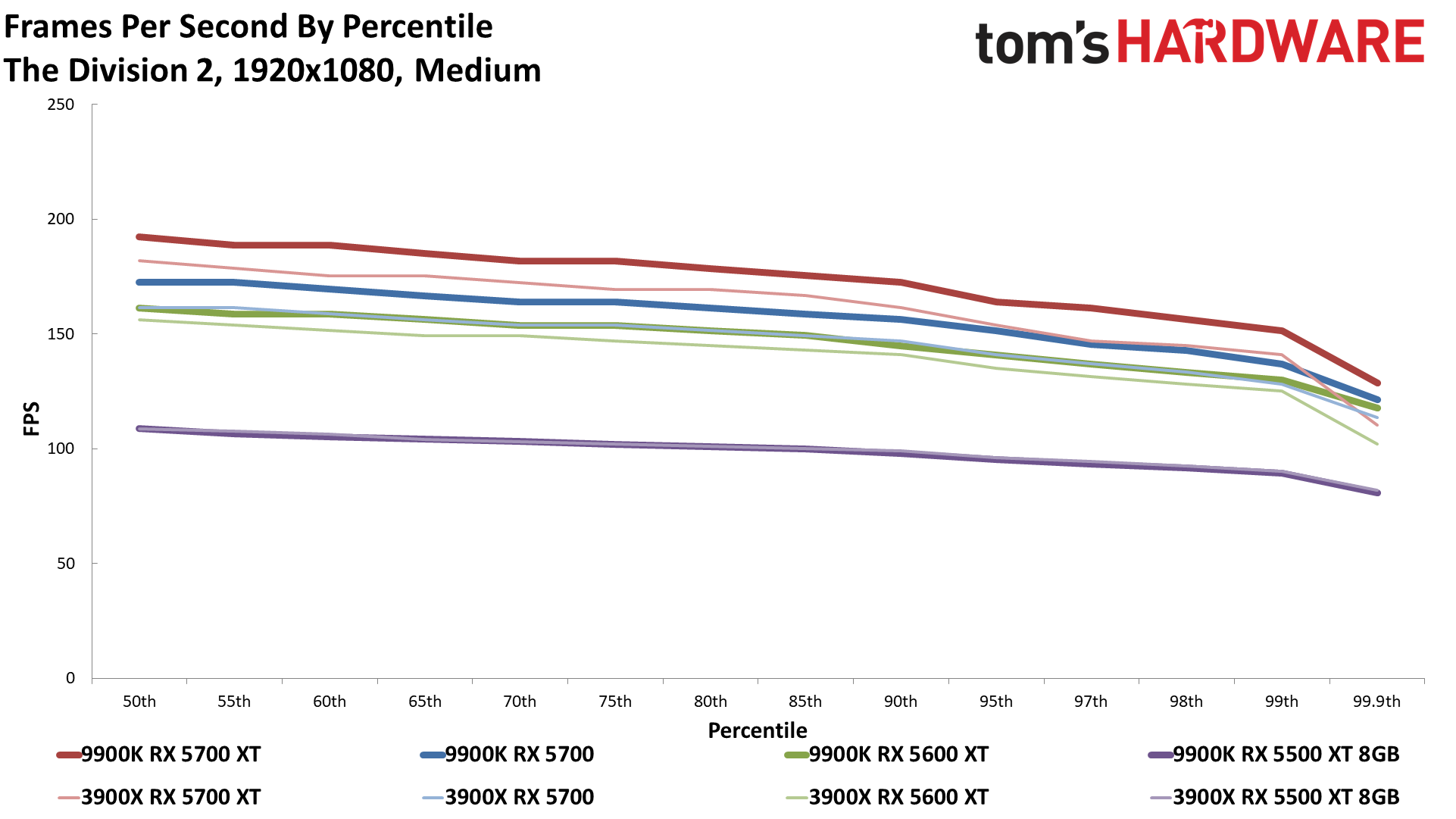

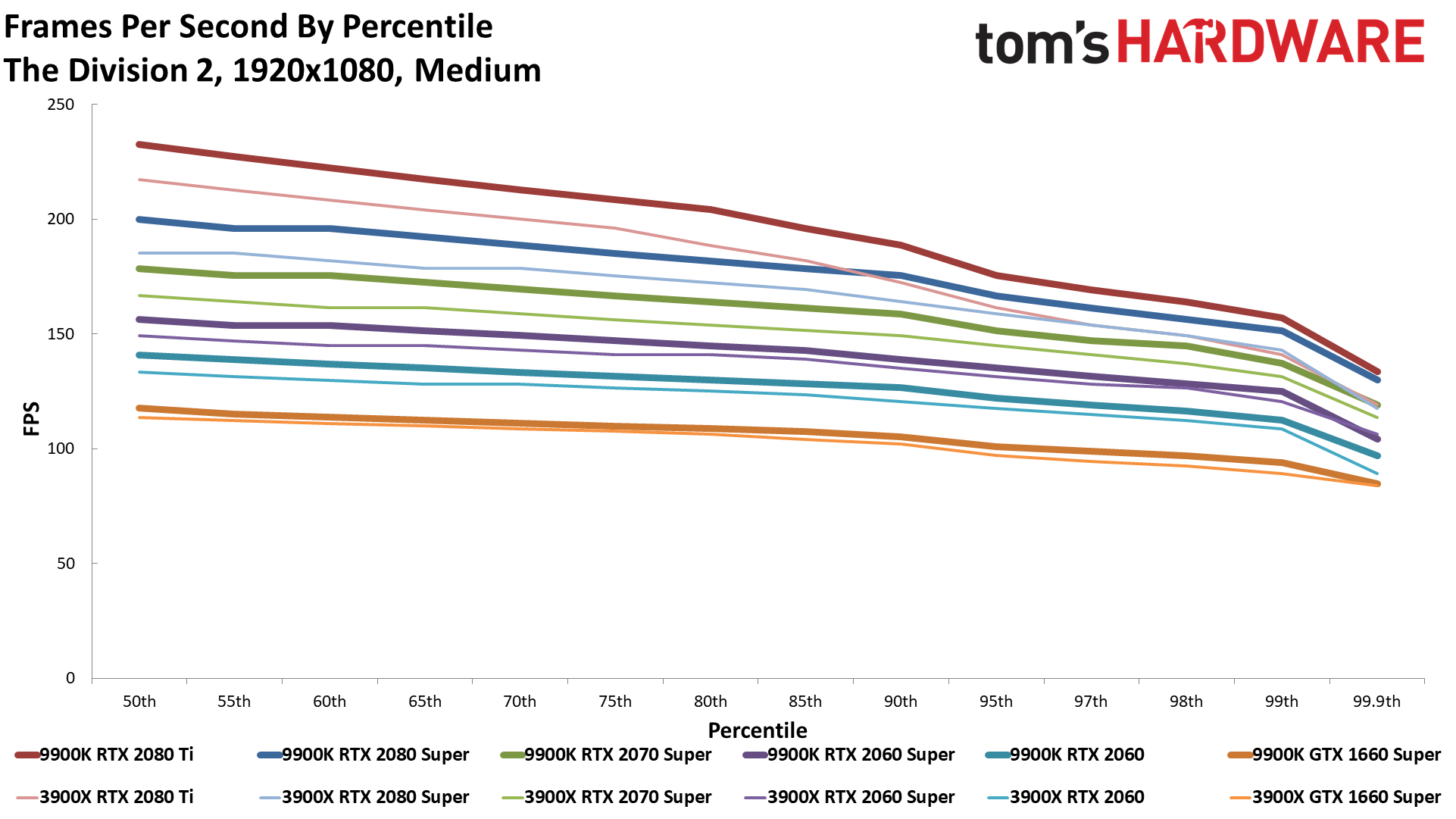

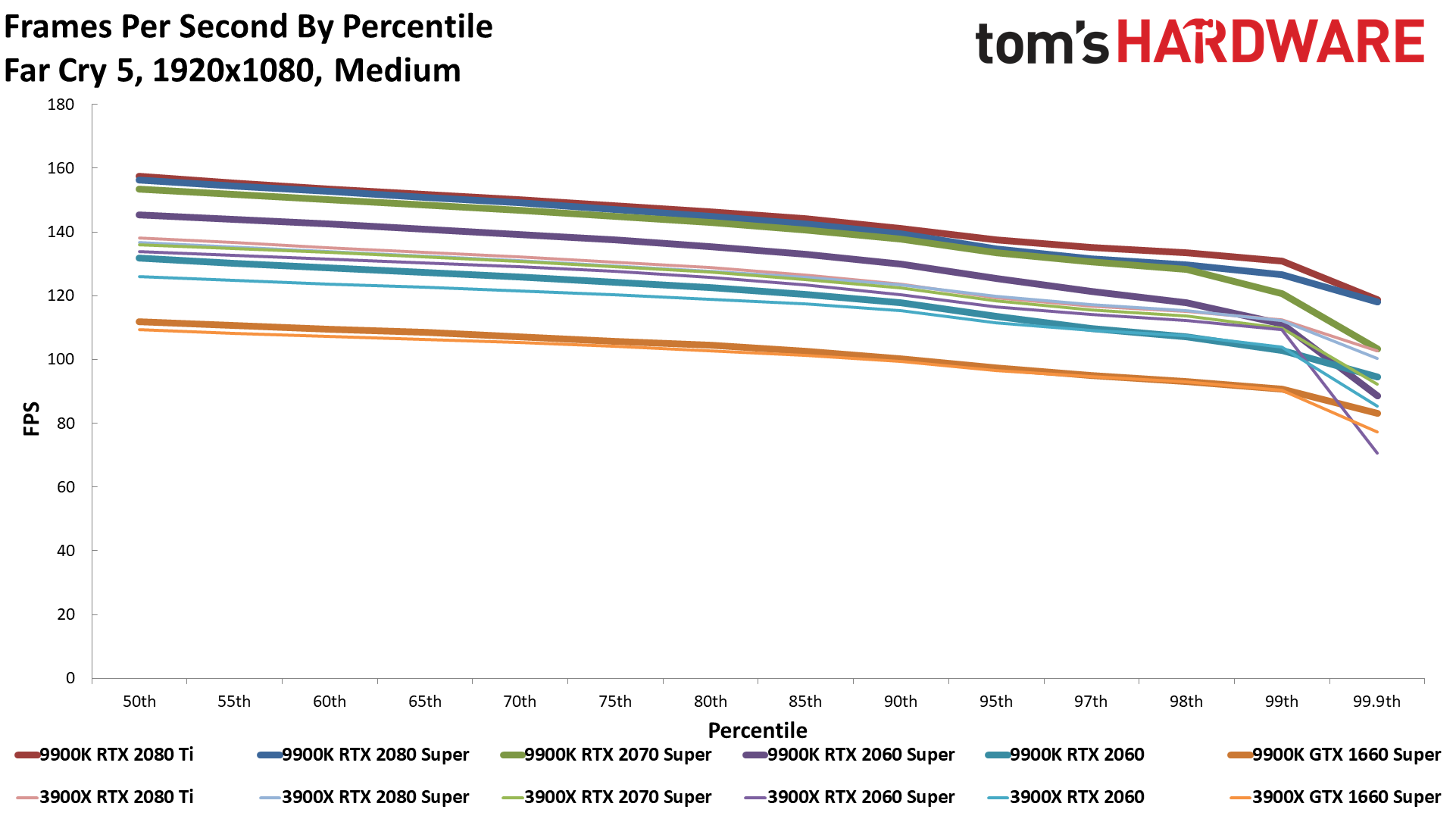

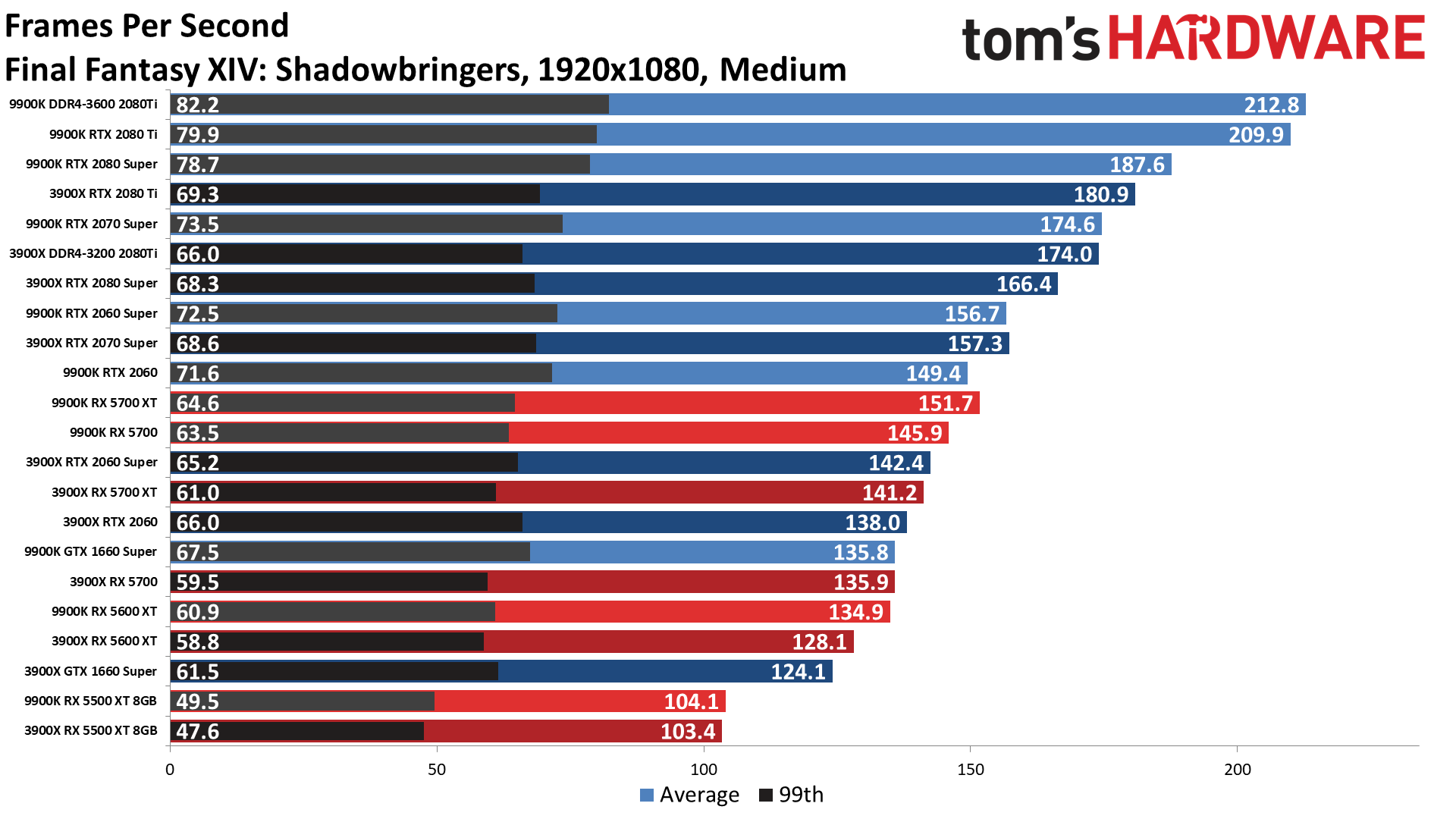

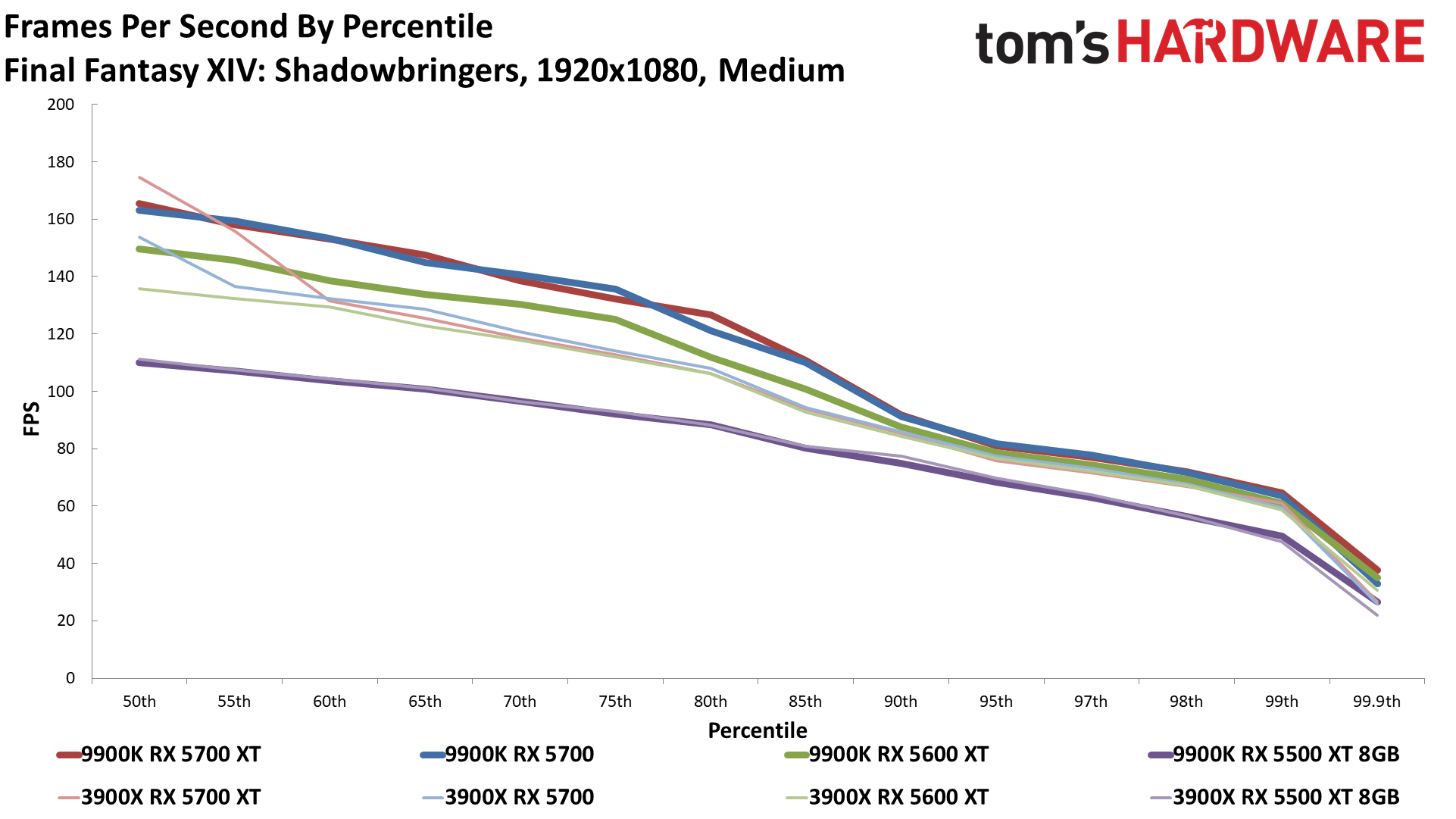

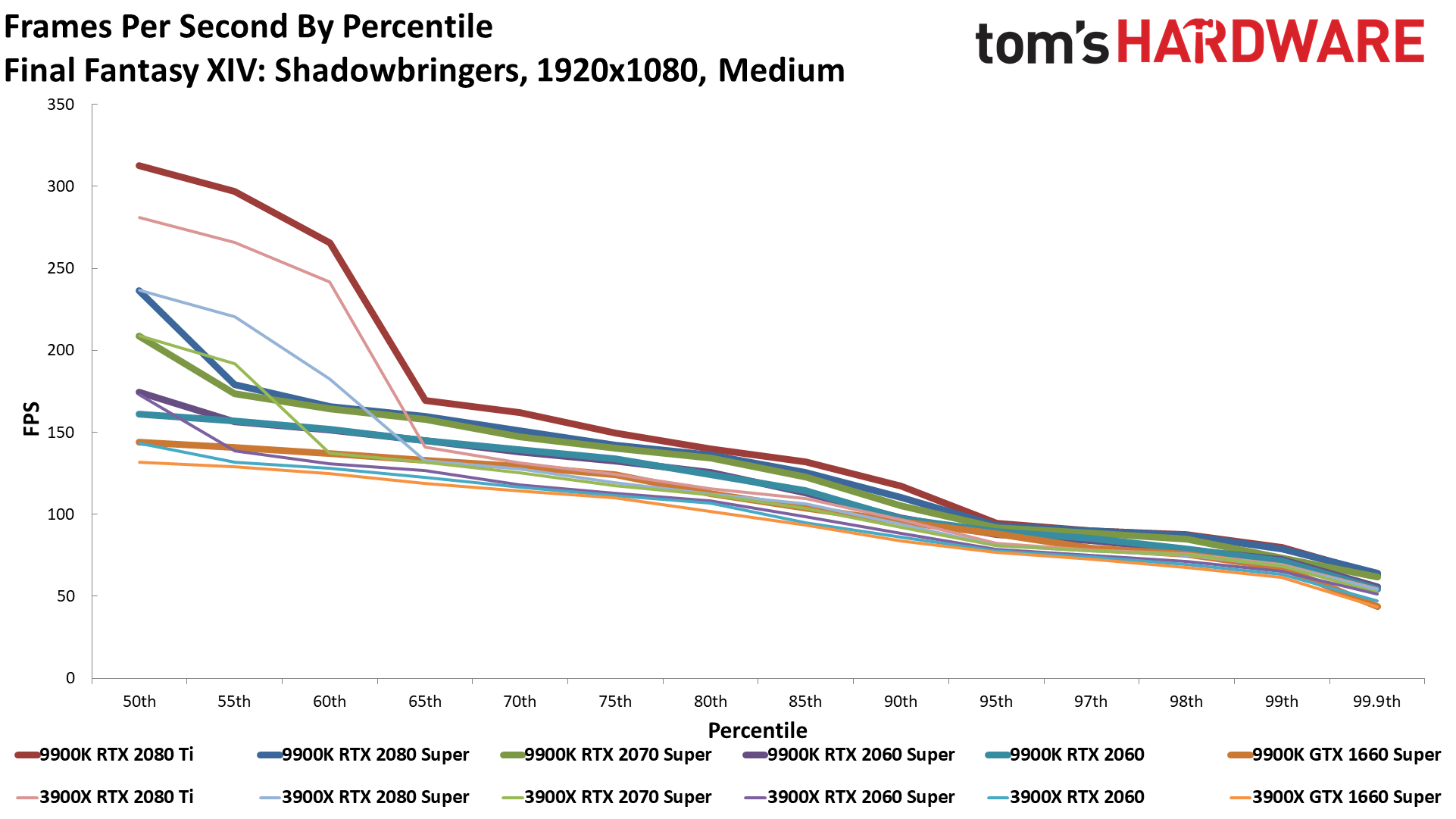

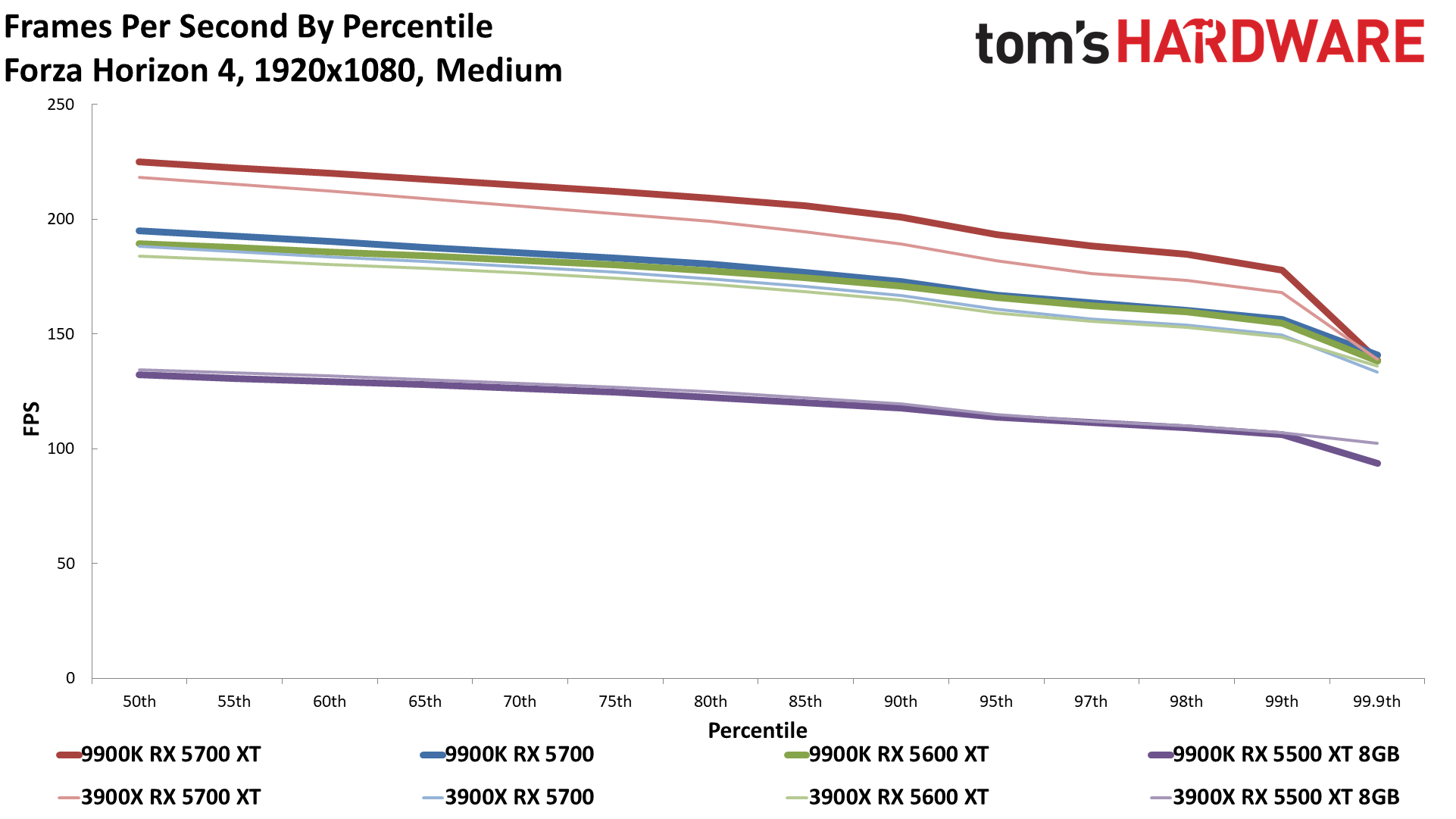

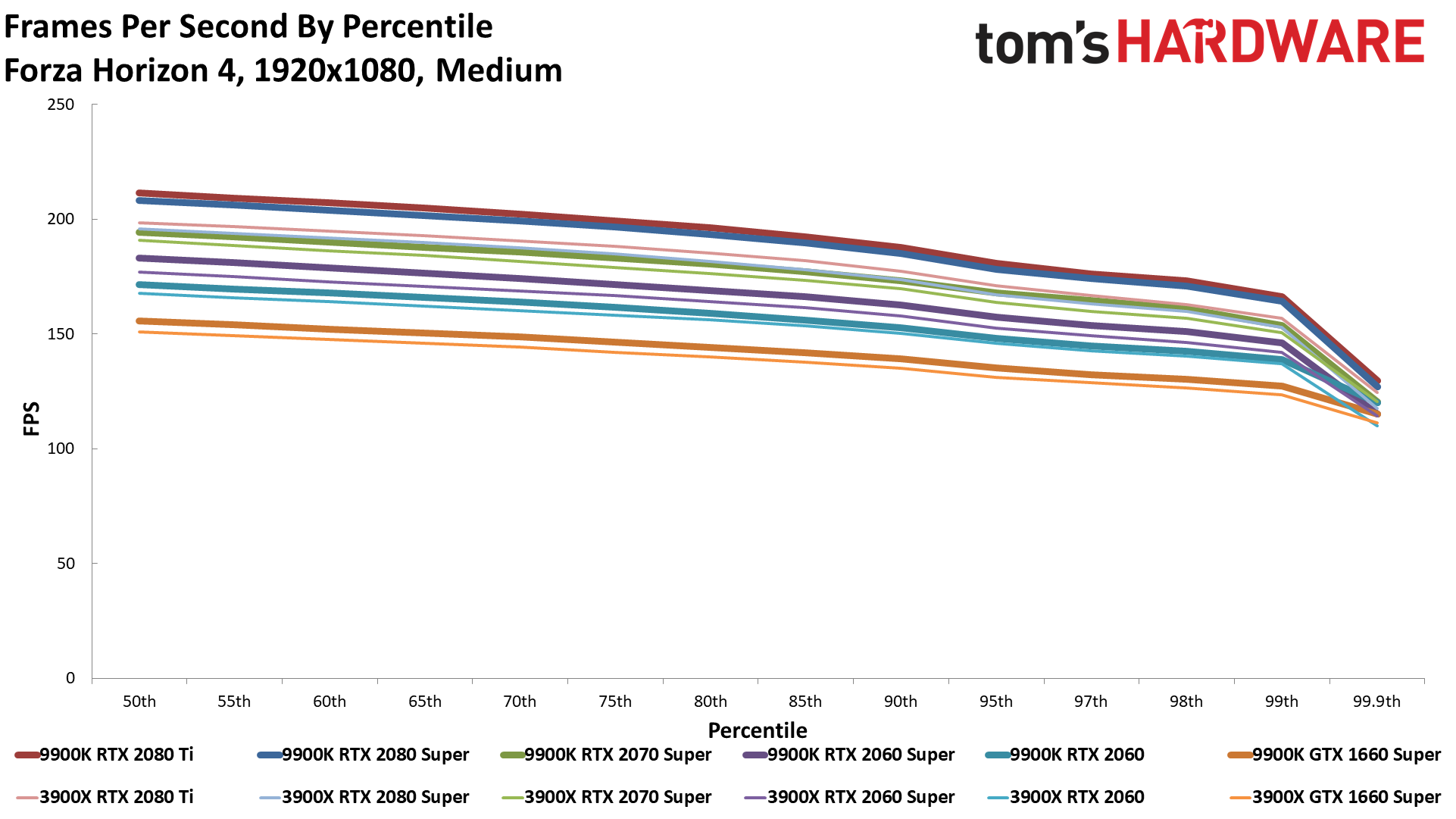

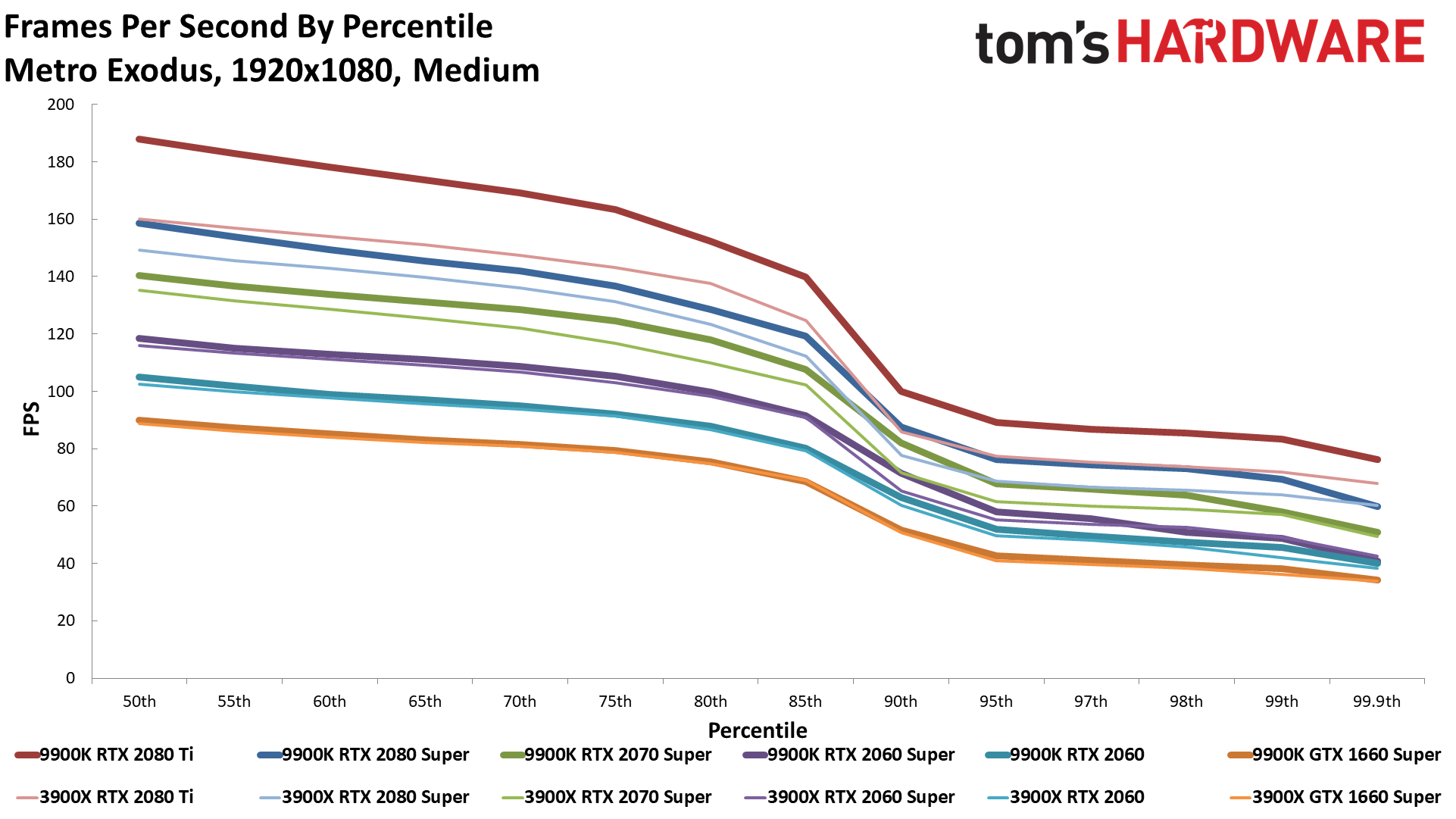

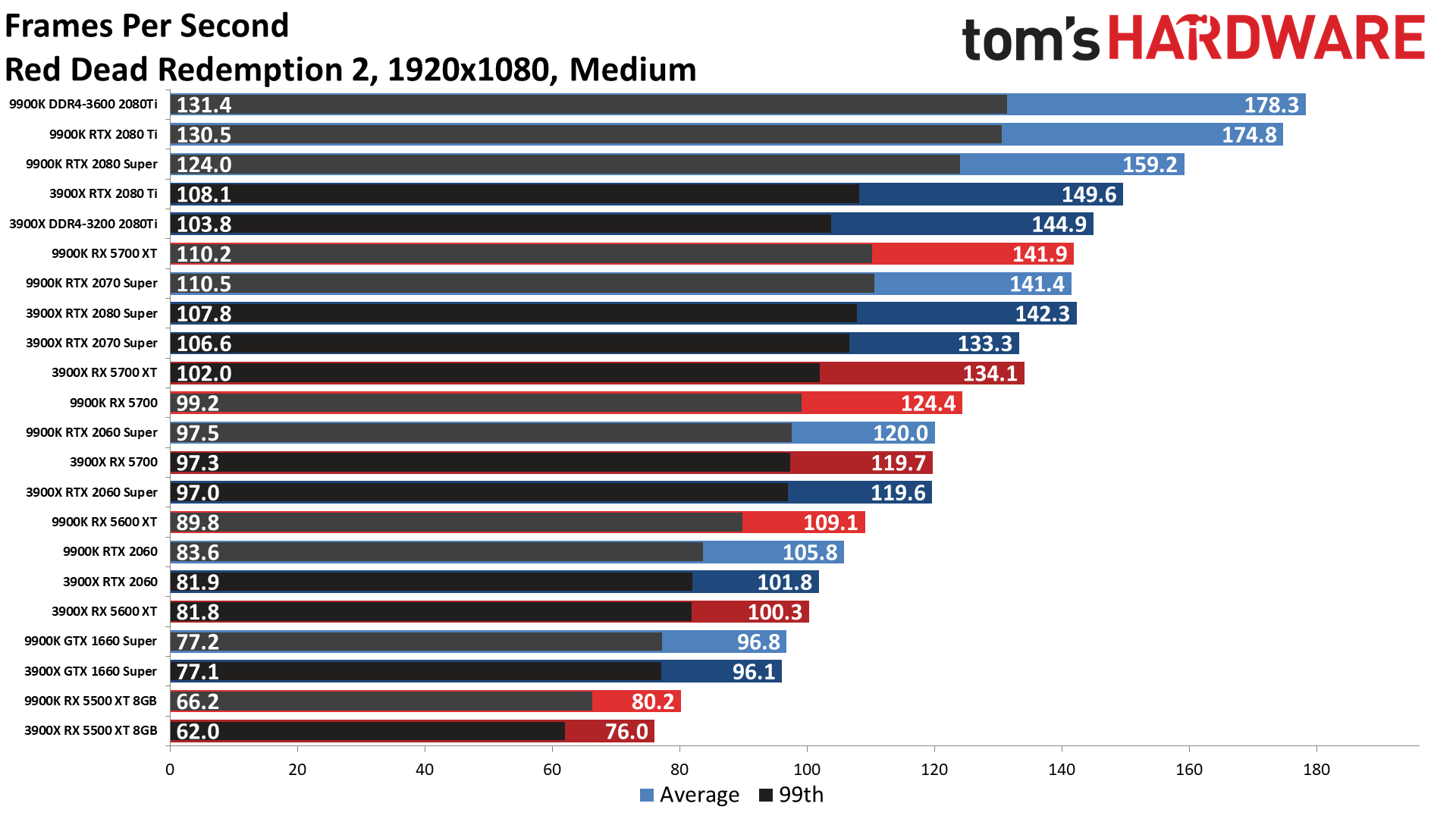

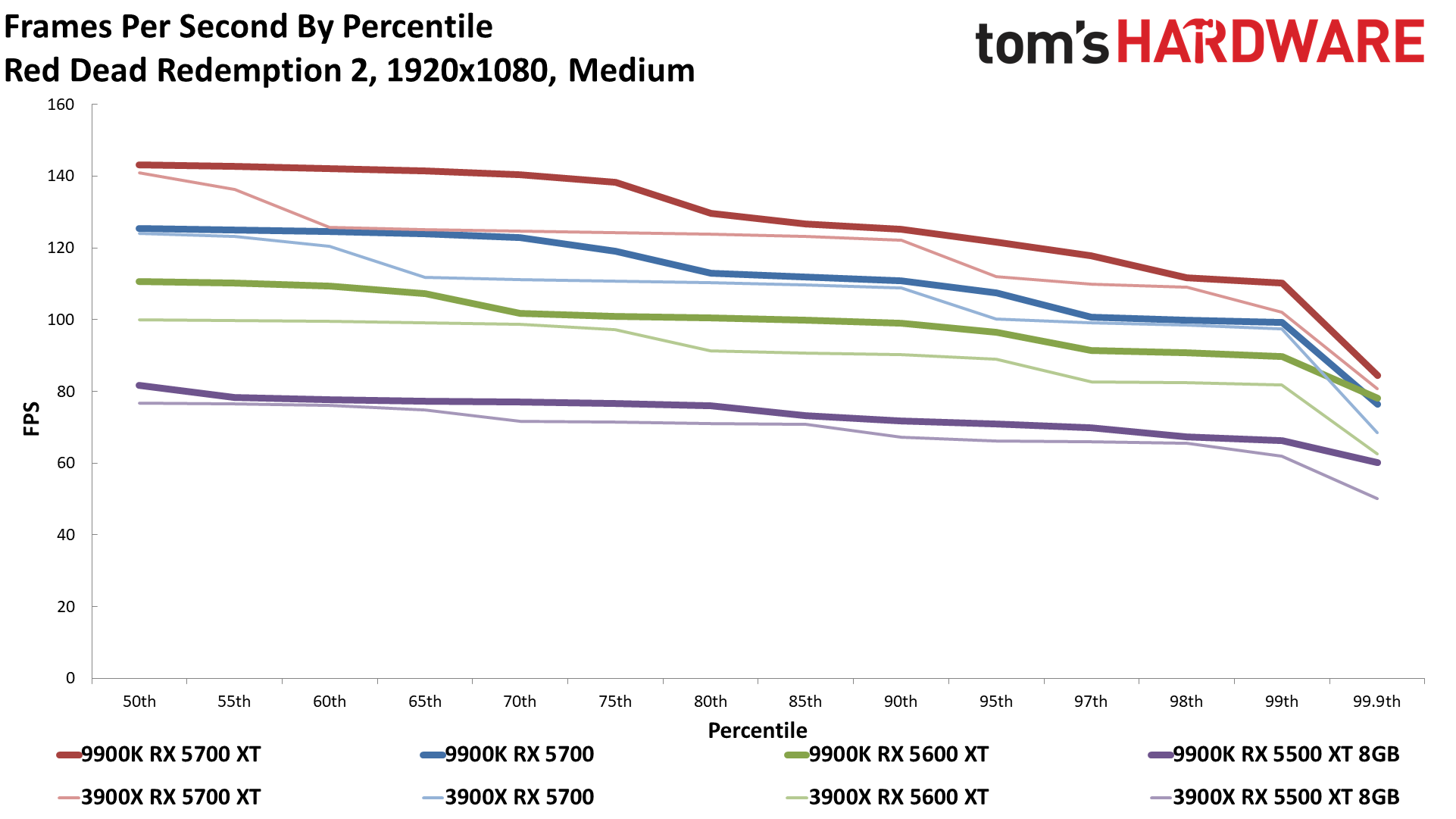

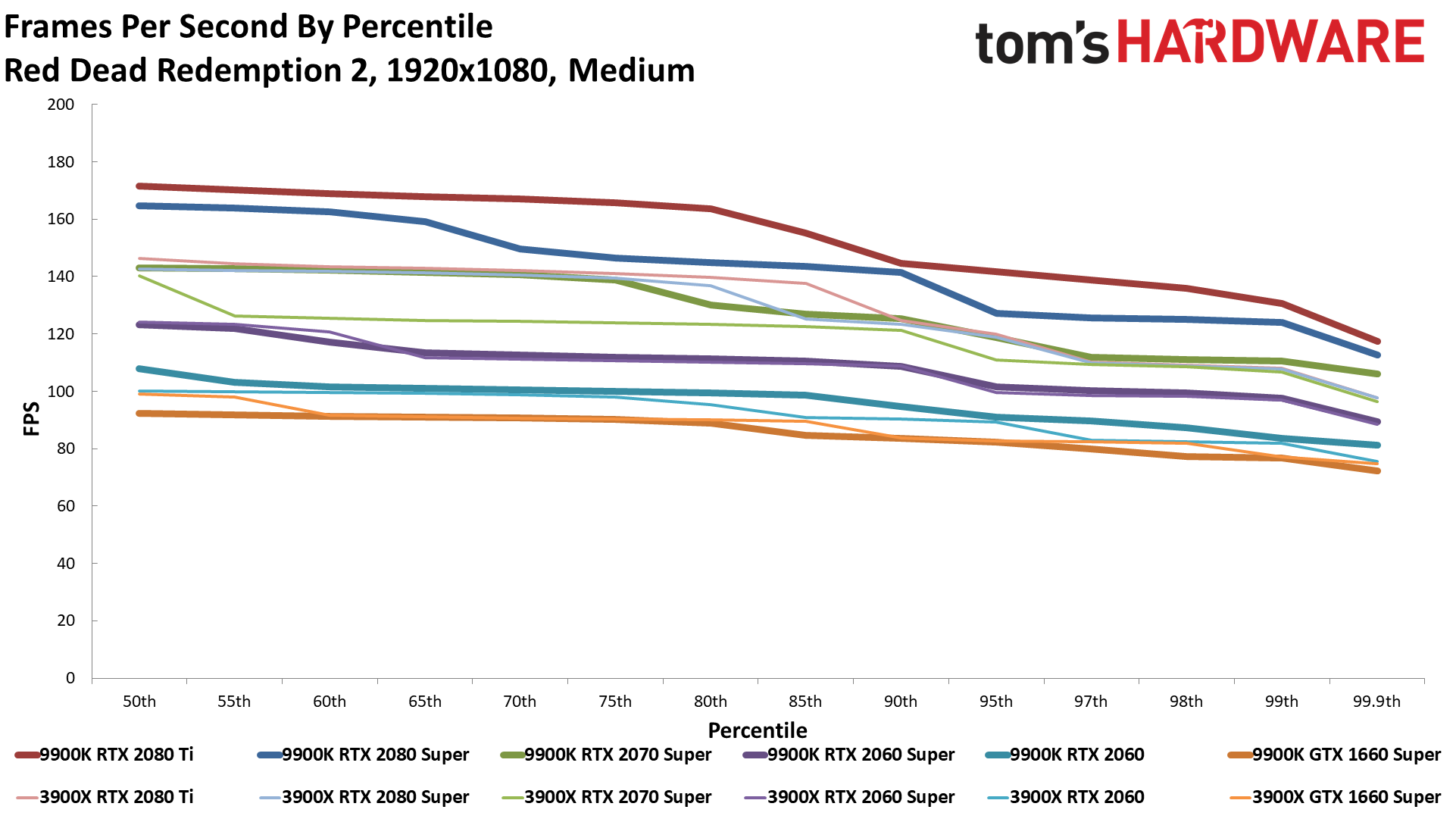

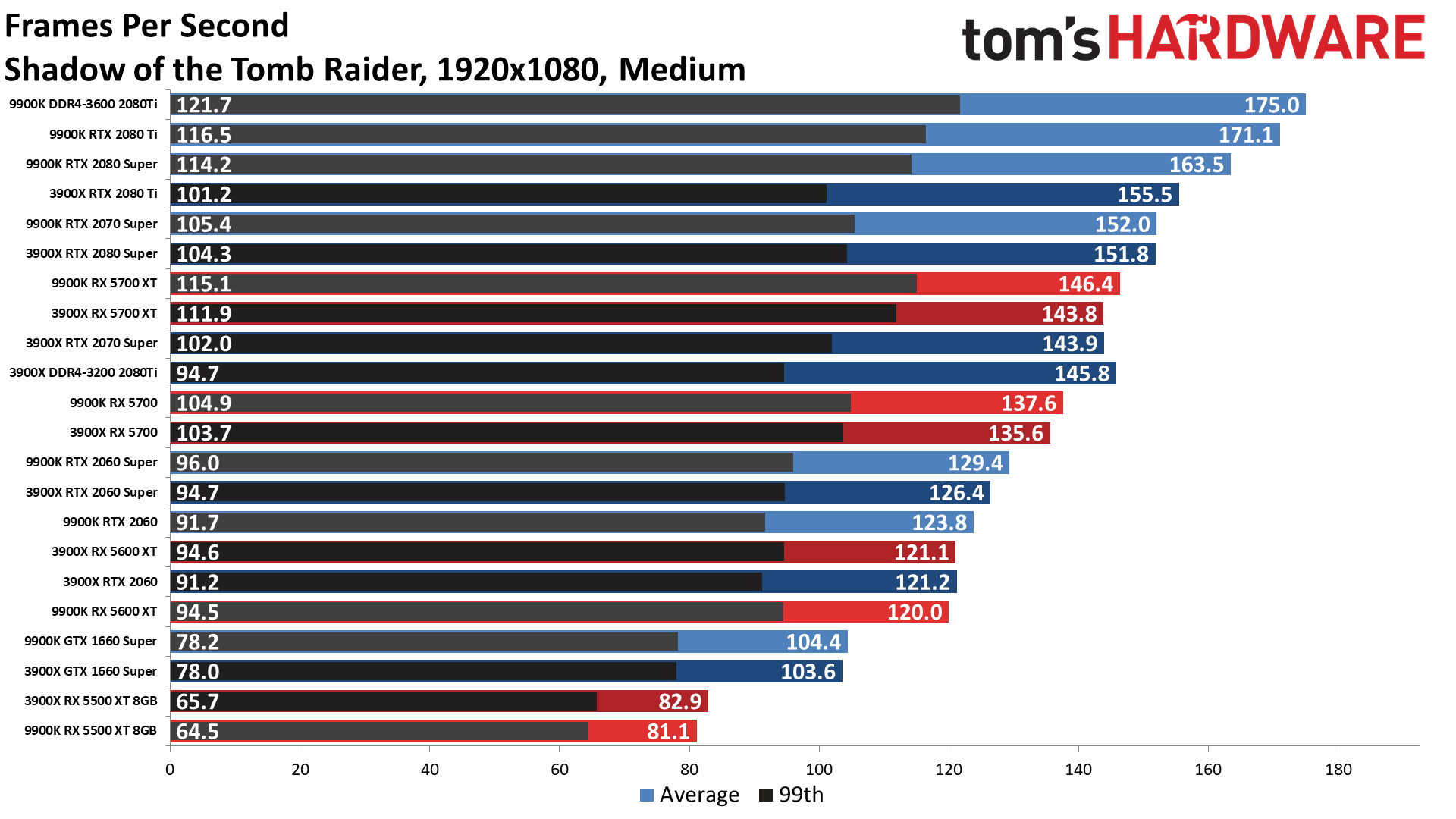

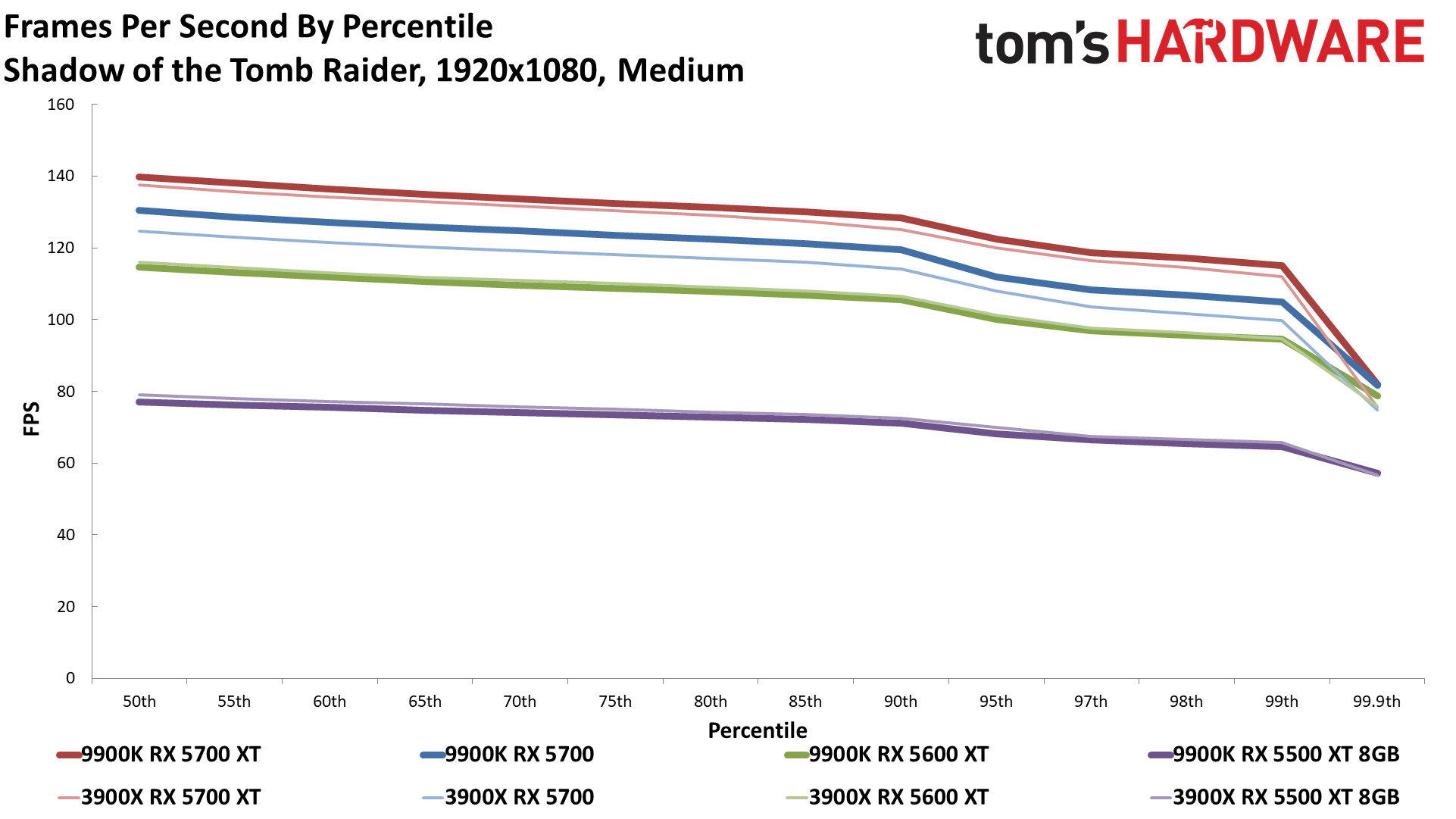

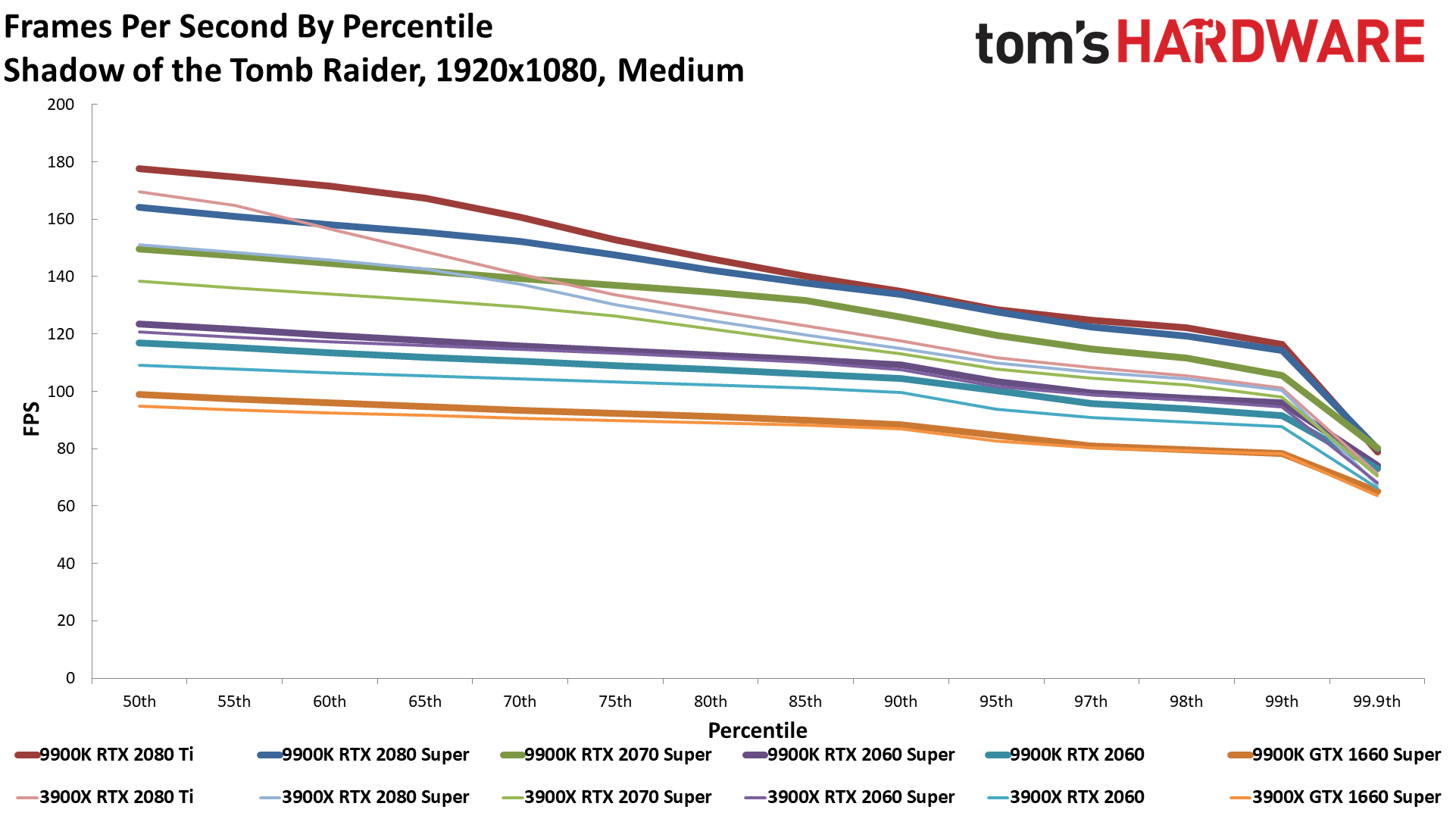

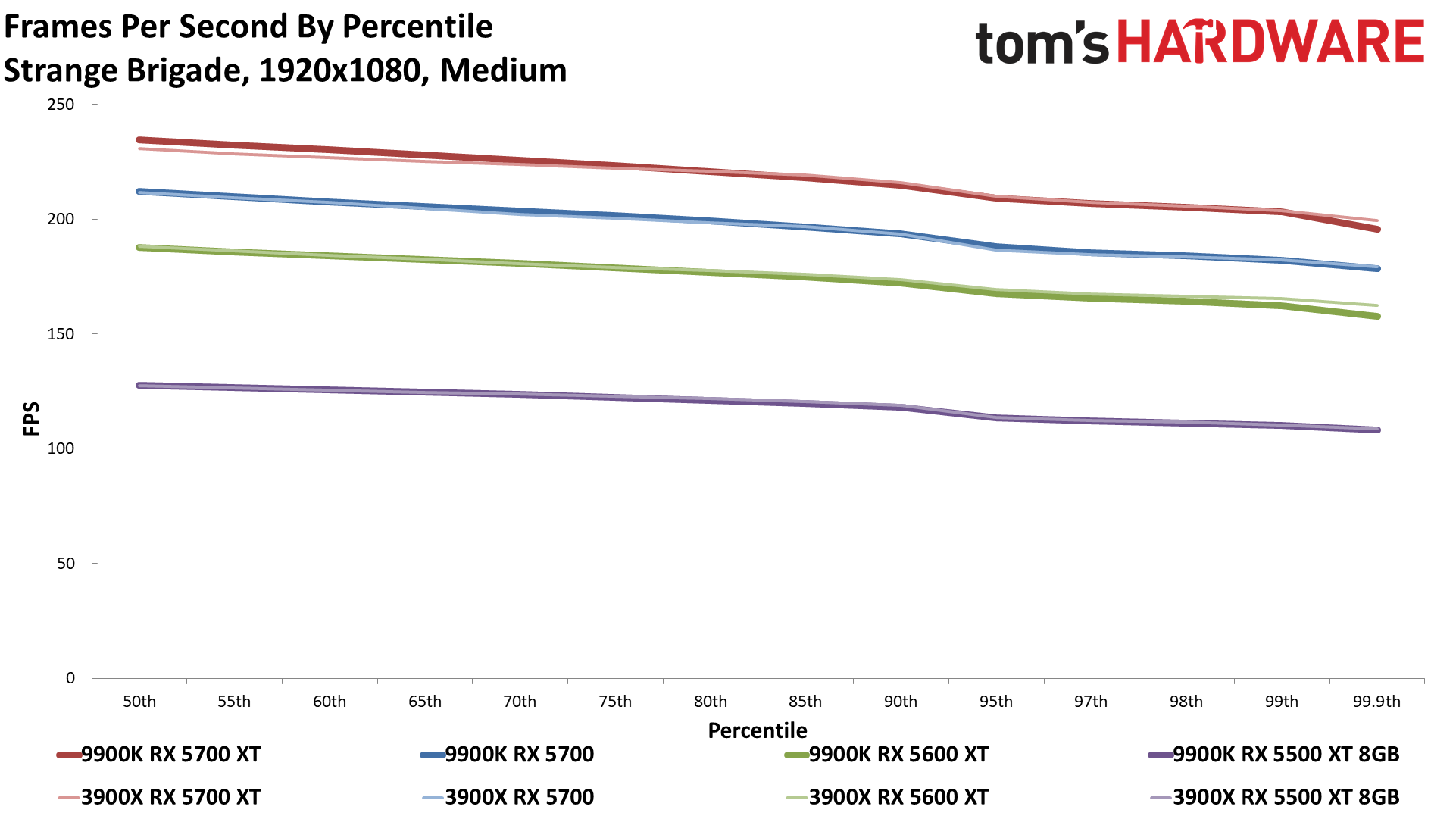

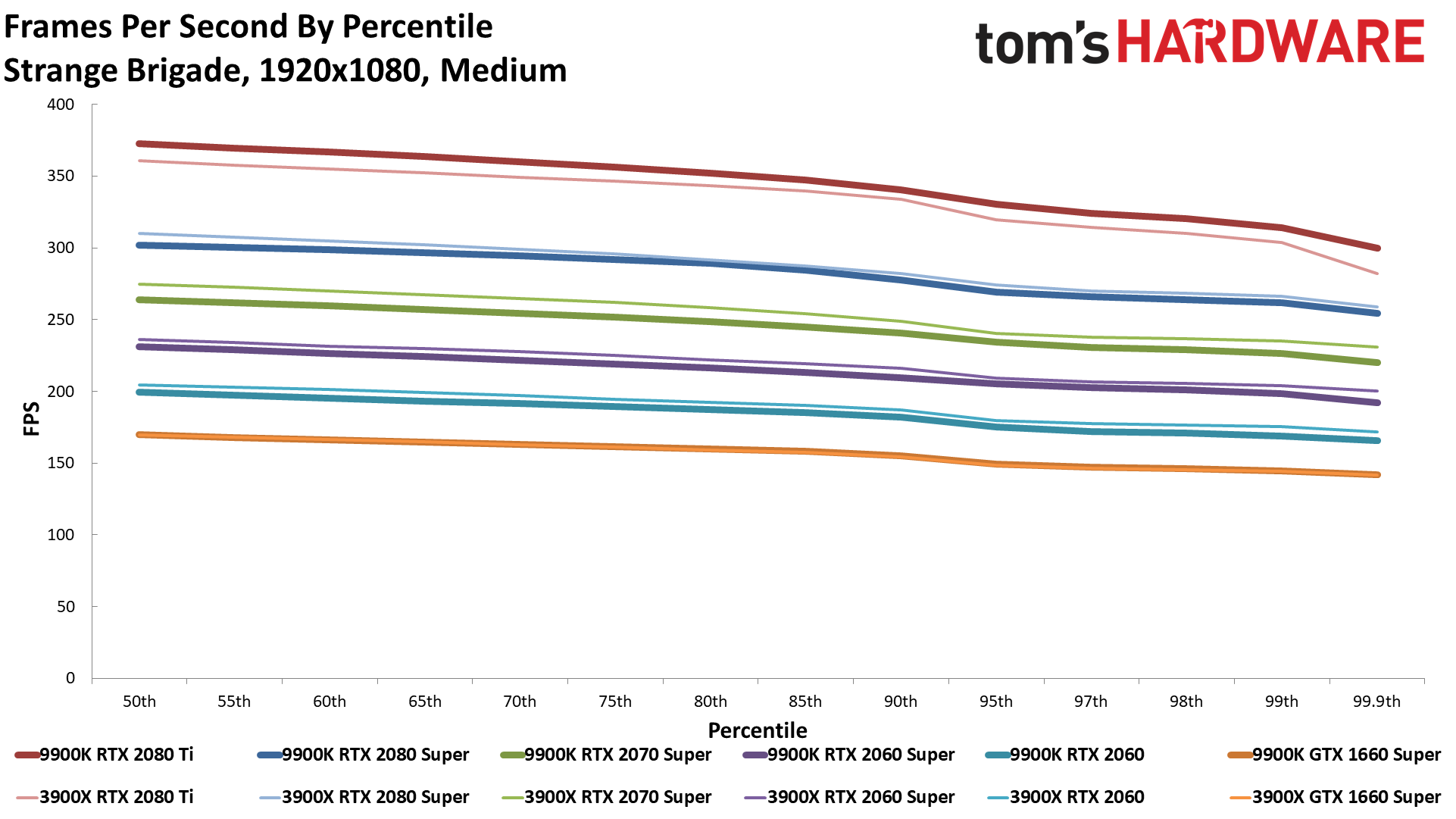

This is the baseline for our gaming performance tests. Most games still look fine at medium quality — it’s about what you get on an Xbox One or PlayStation 4 (but not the newer Xbox One X or PS4 Pro). Outside of esports where players might benefit from 240 fps or more, most people wouldn’t pair high-end CPUs and GPUs with such an easy target resolution and settings. However, this will give us the maximum fps difference you’d experience between the two CPUs.

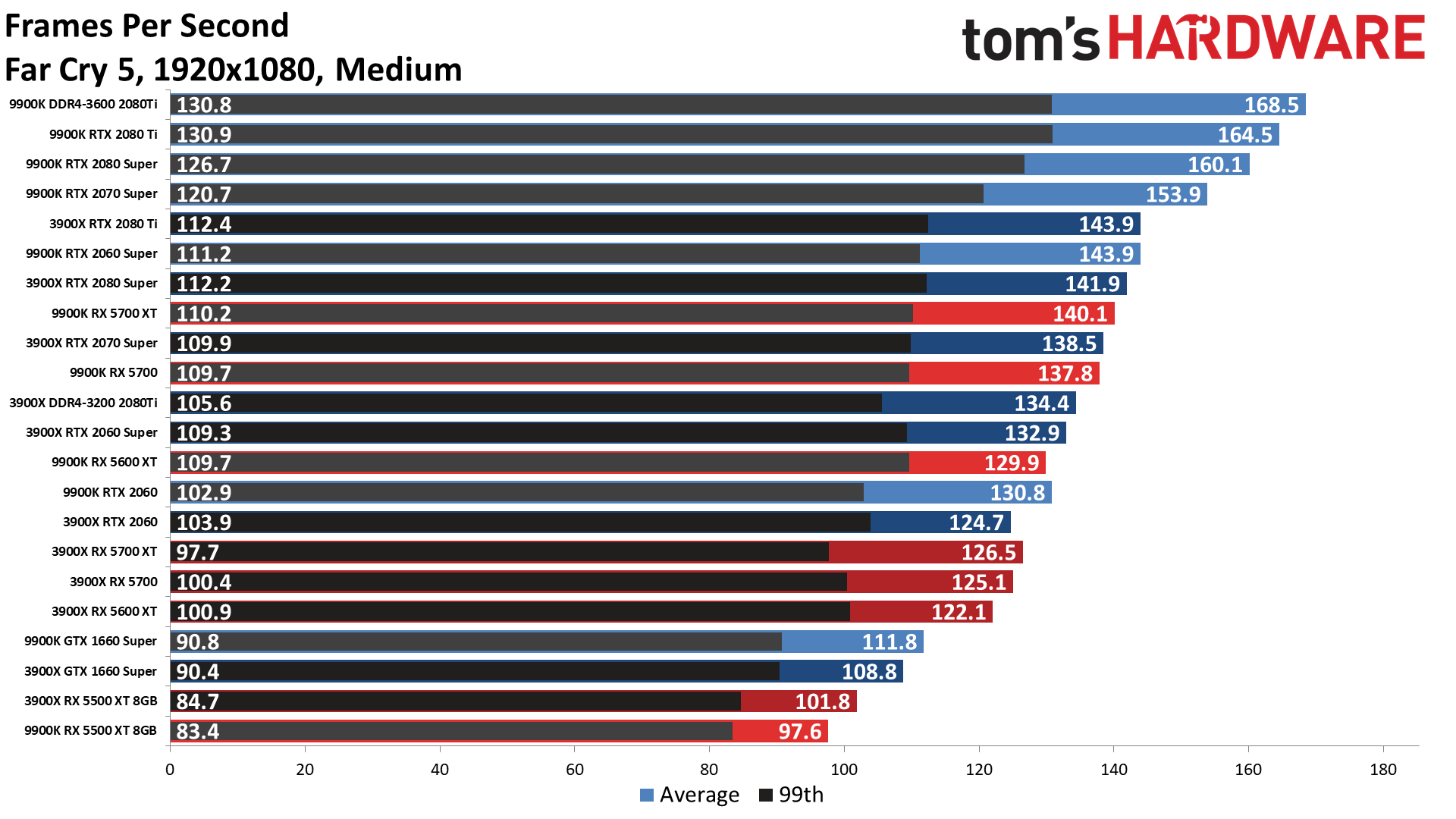

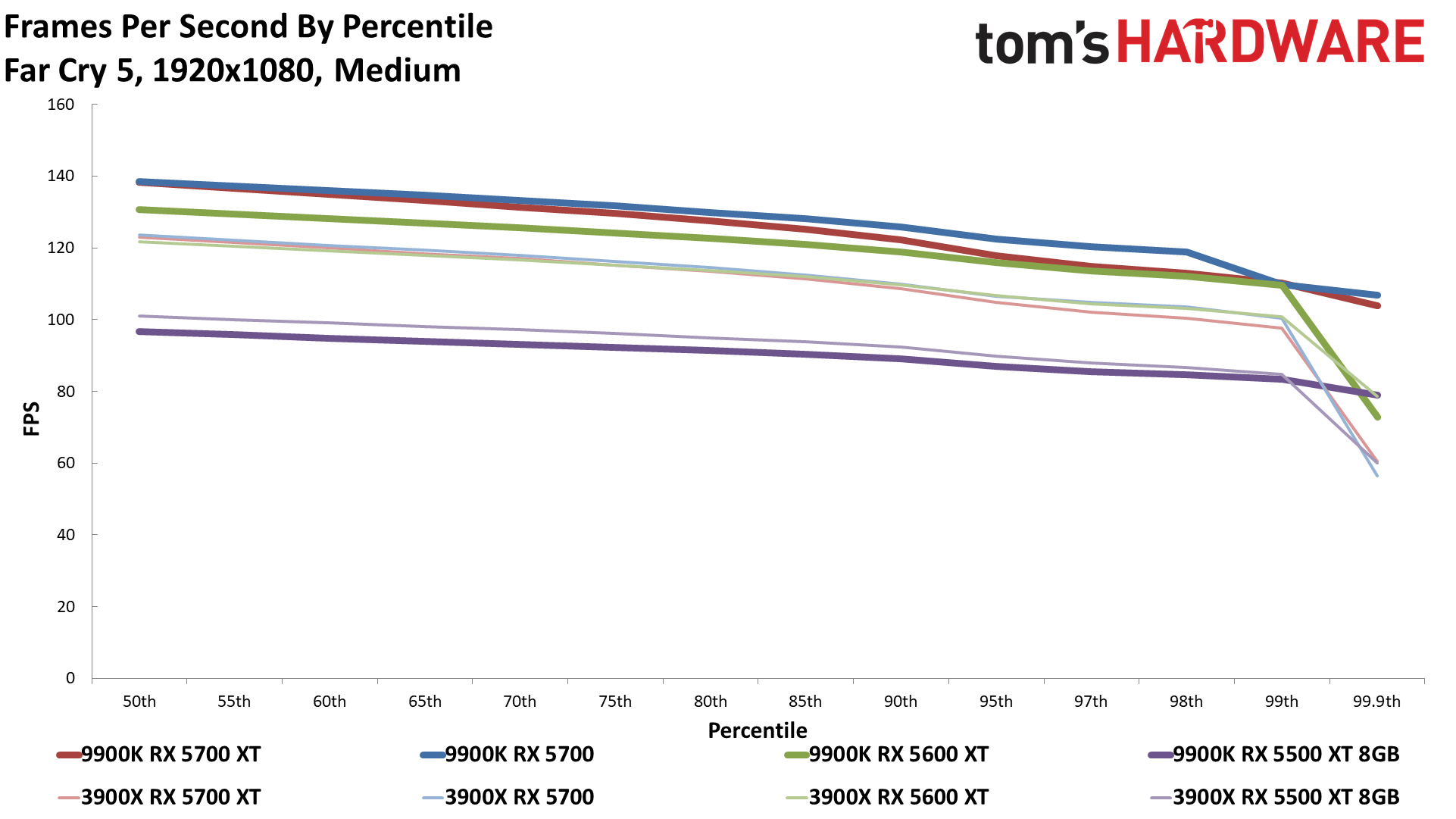

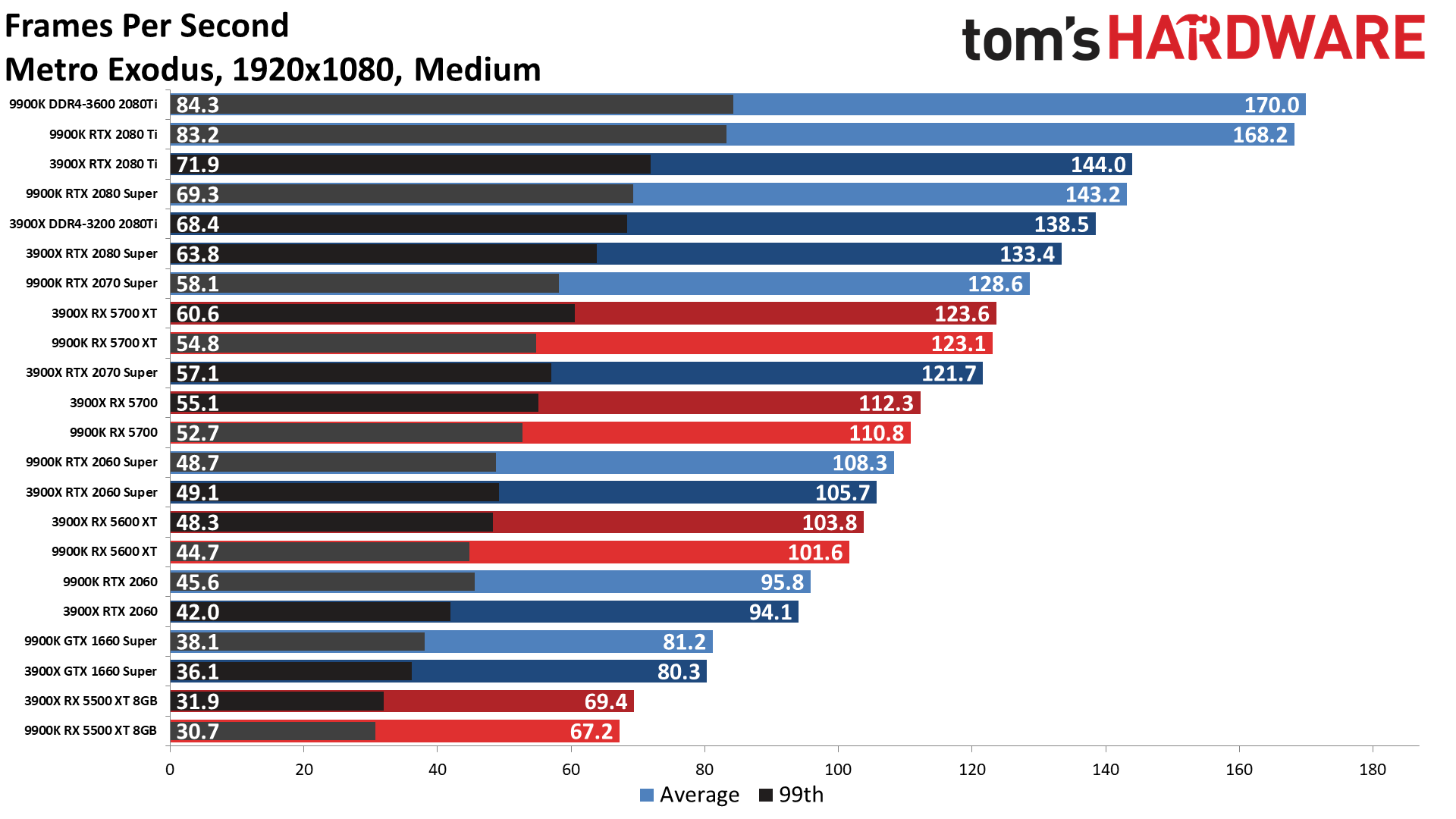

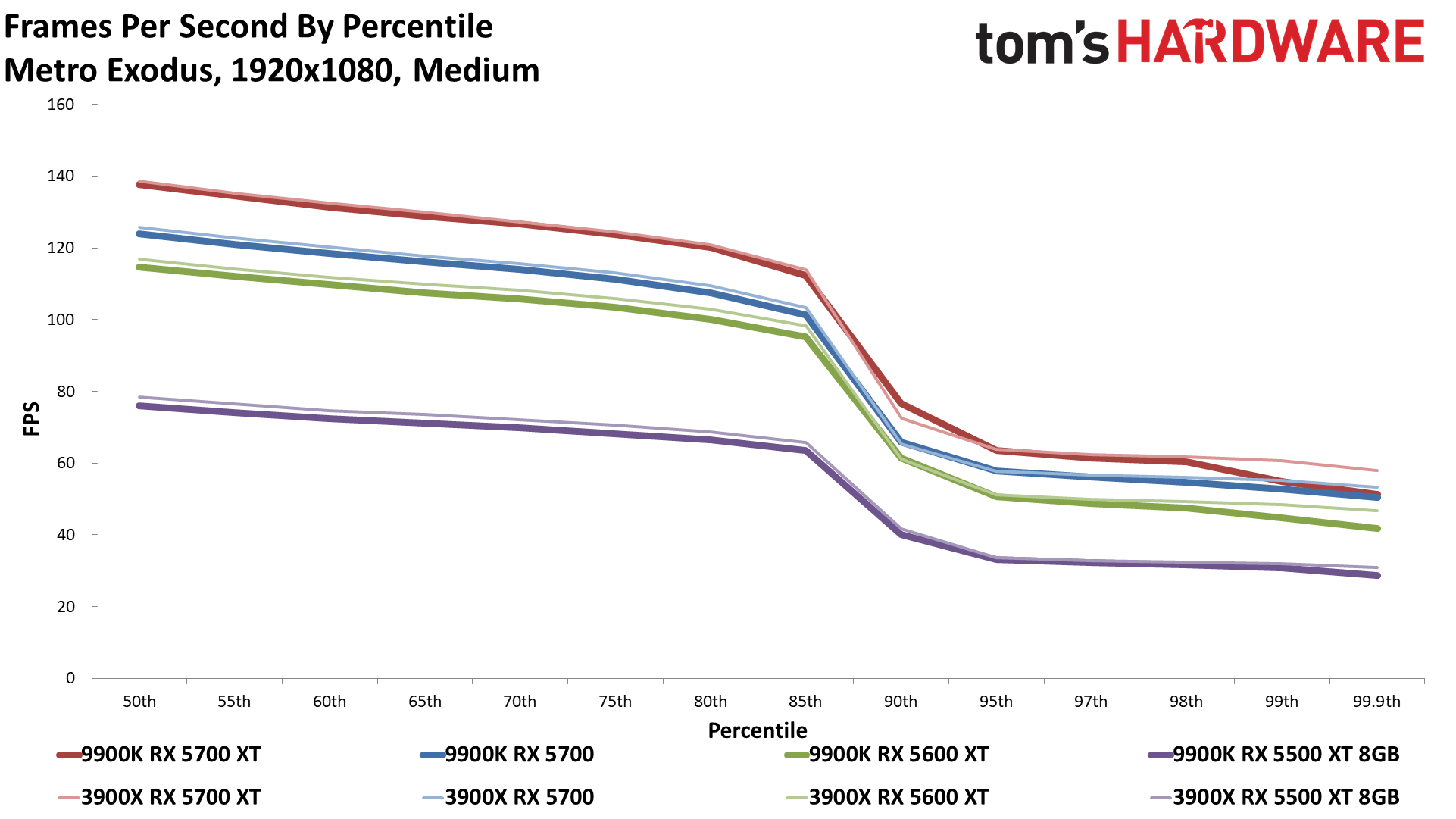

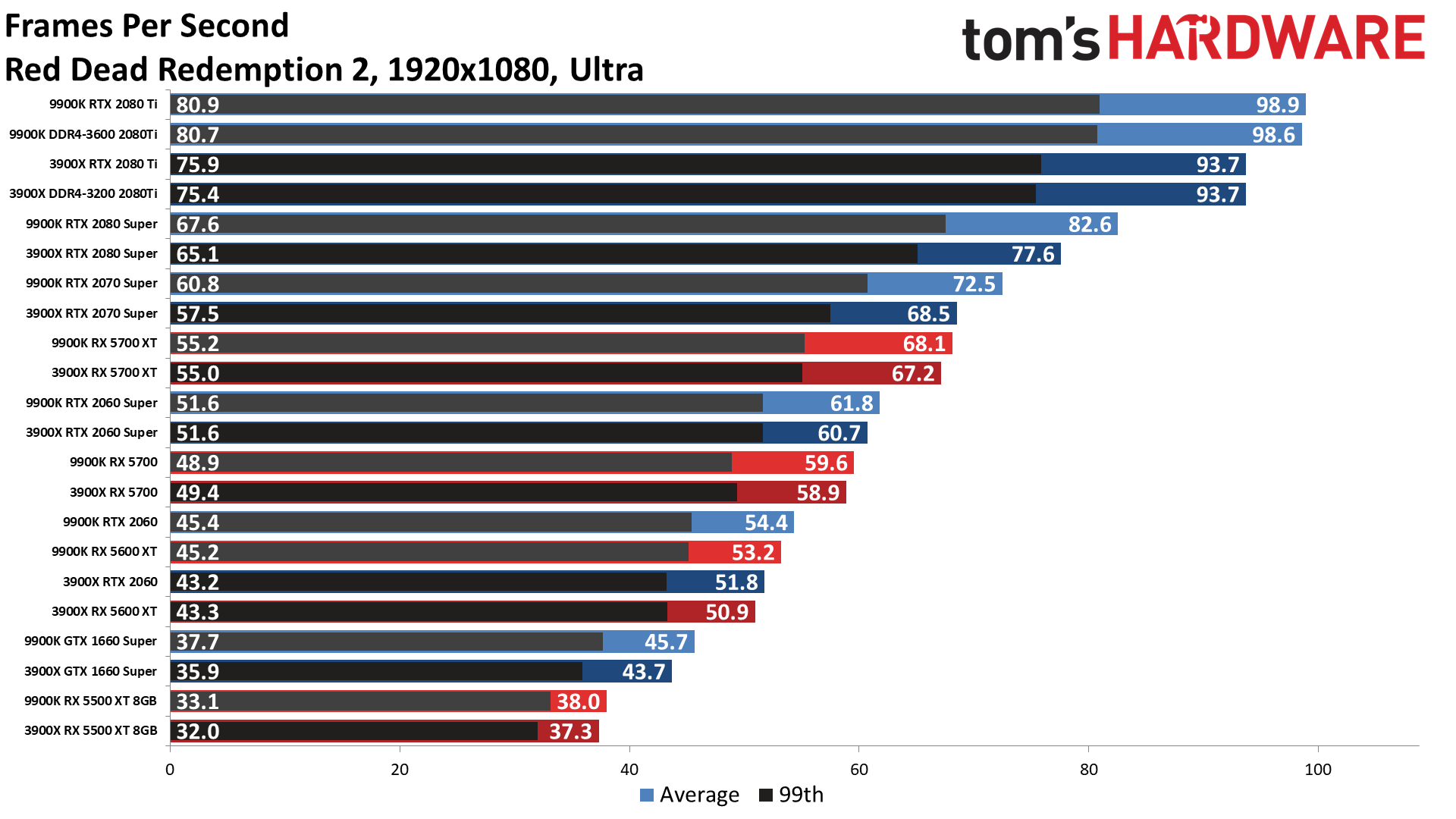

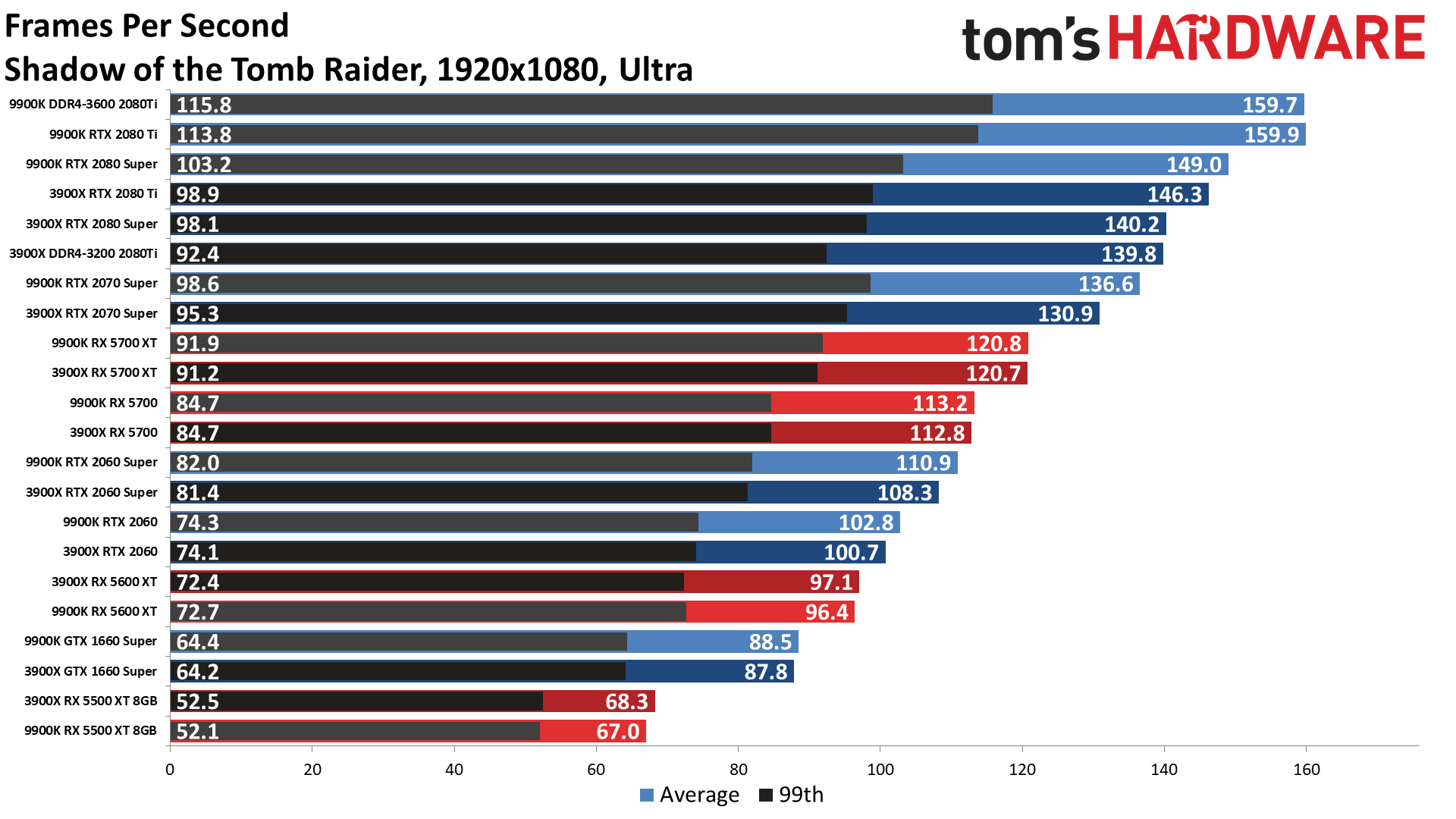

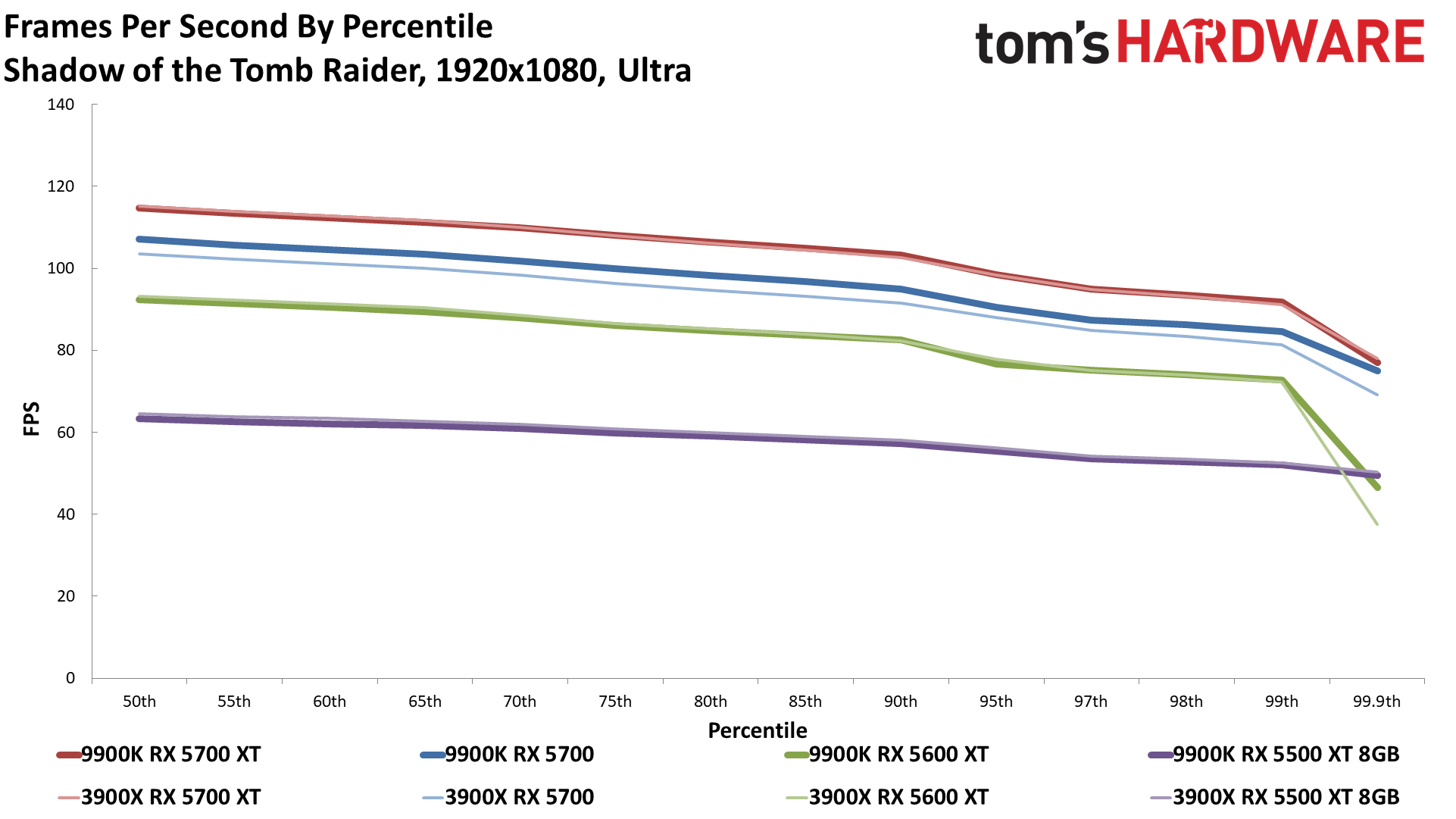

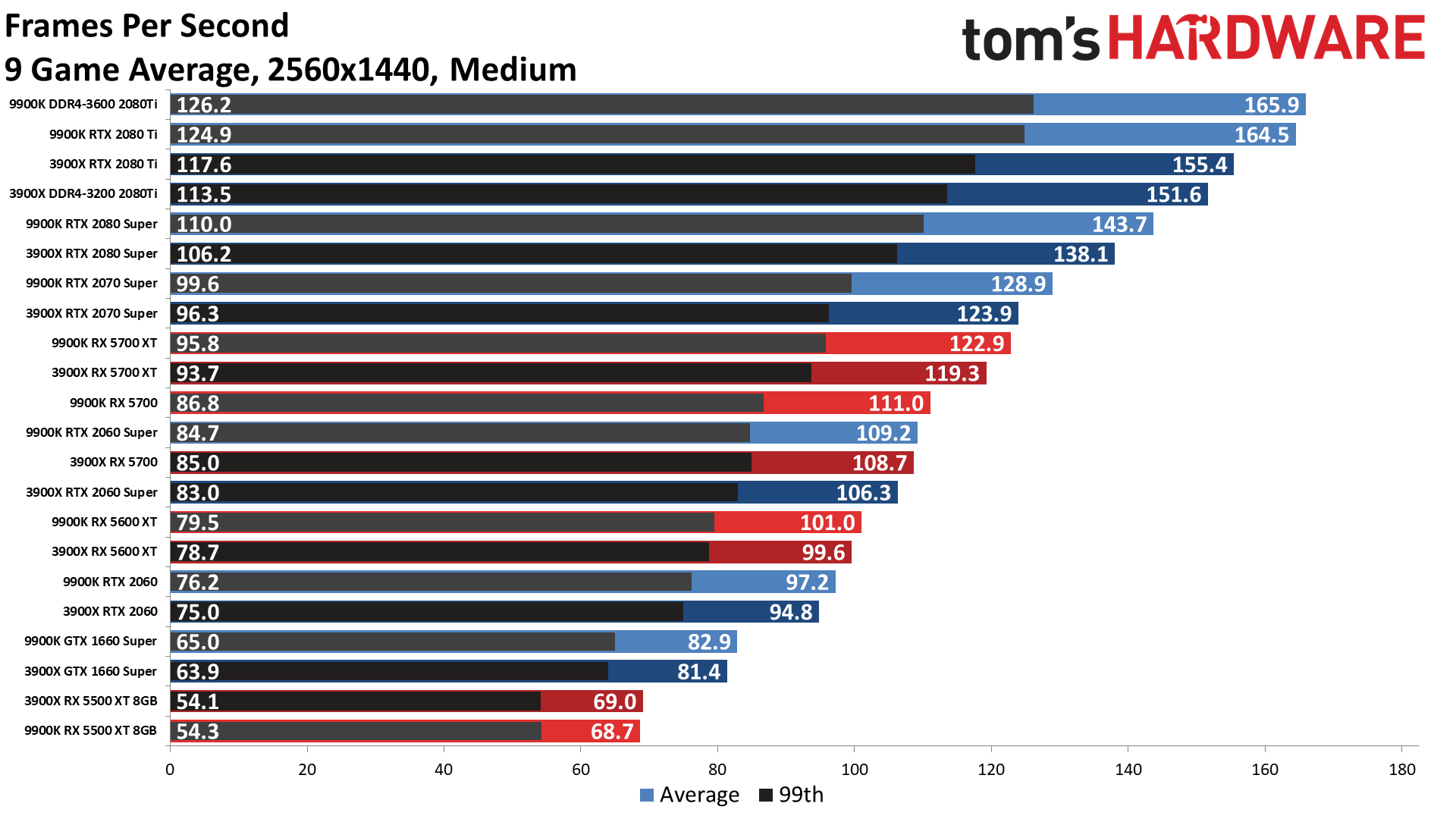

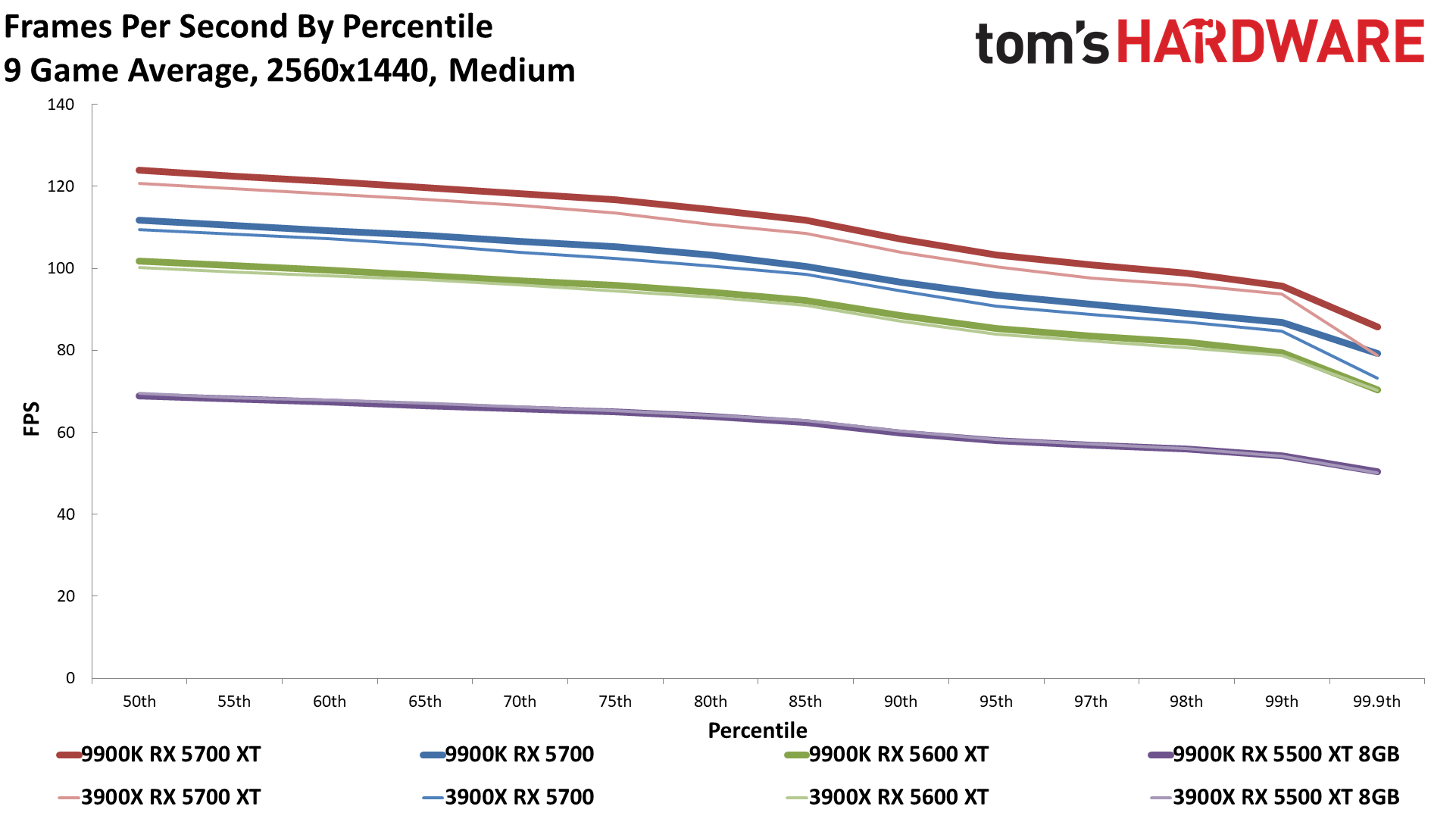

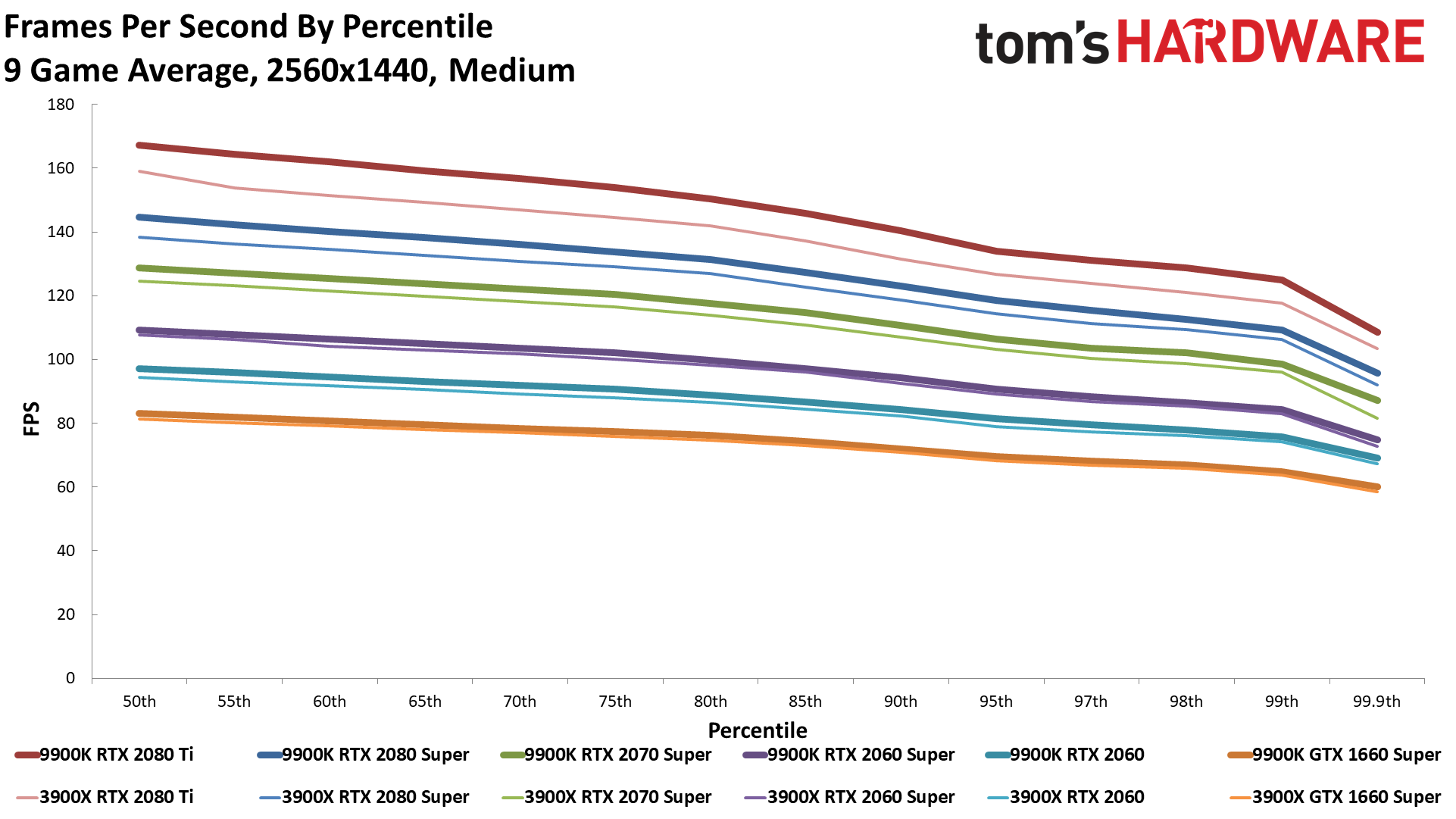

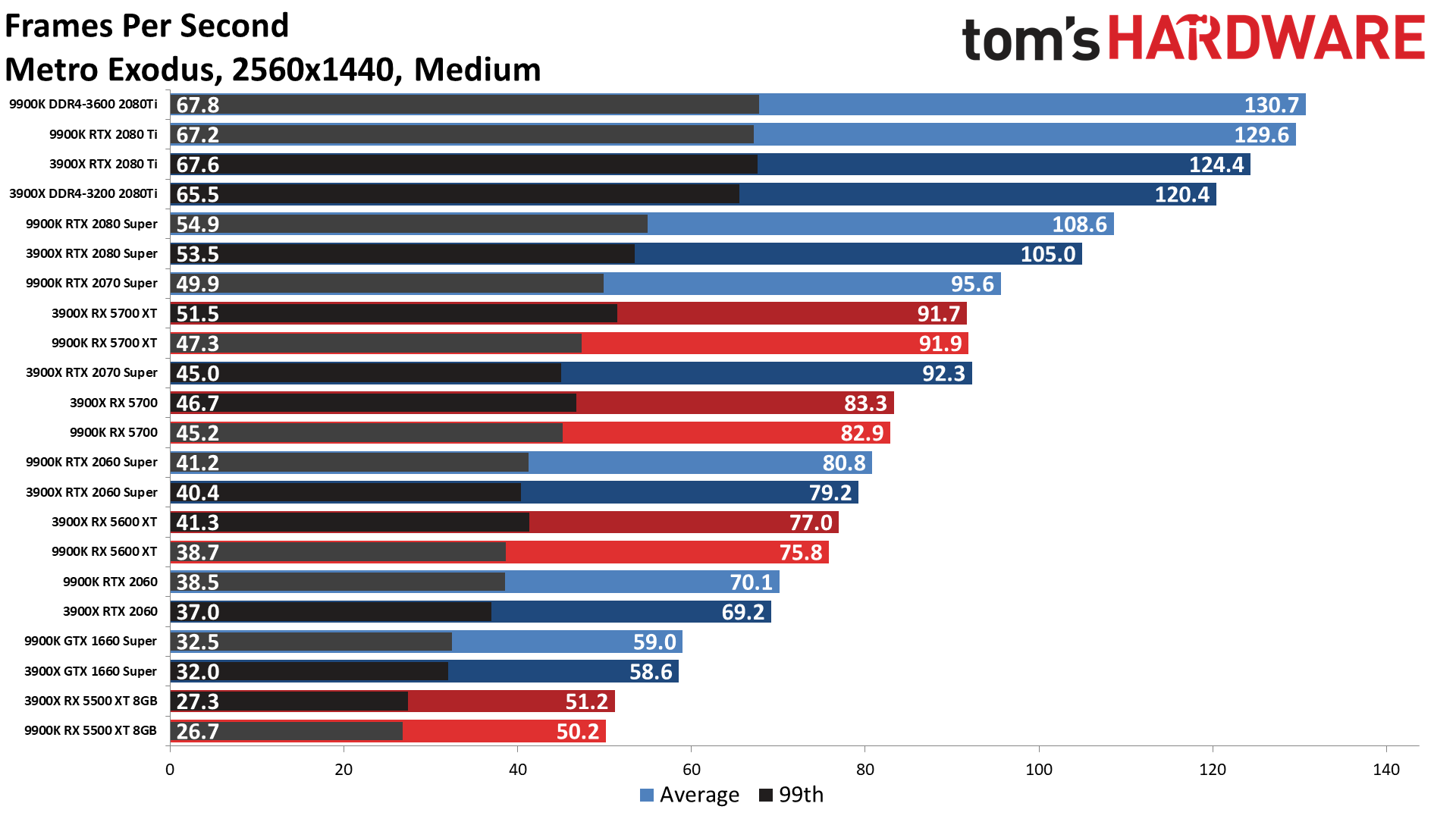

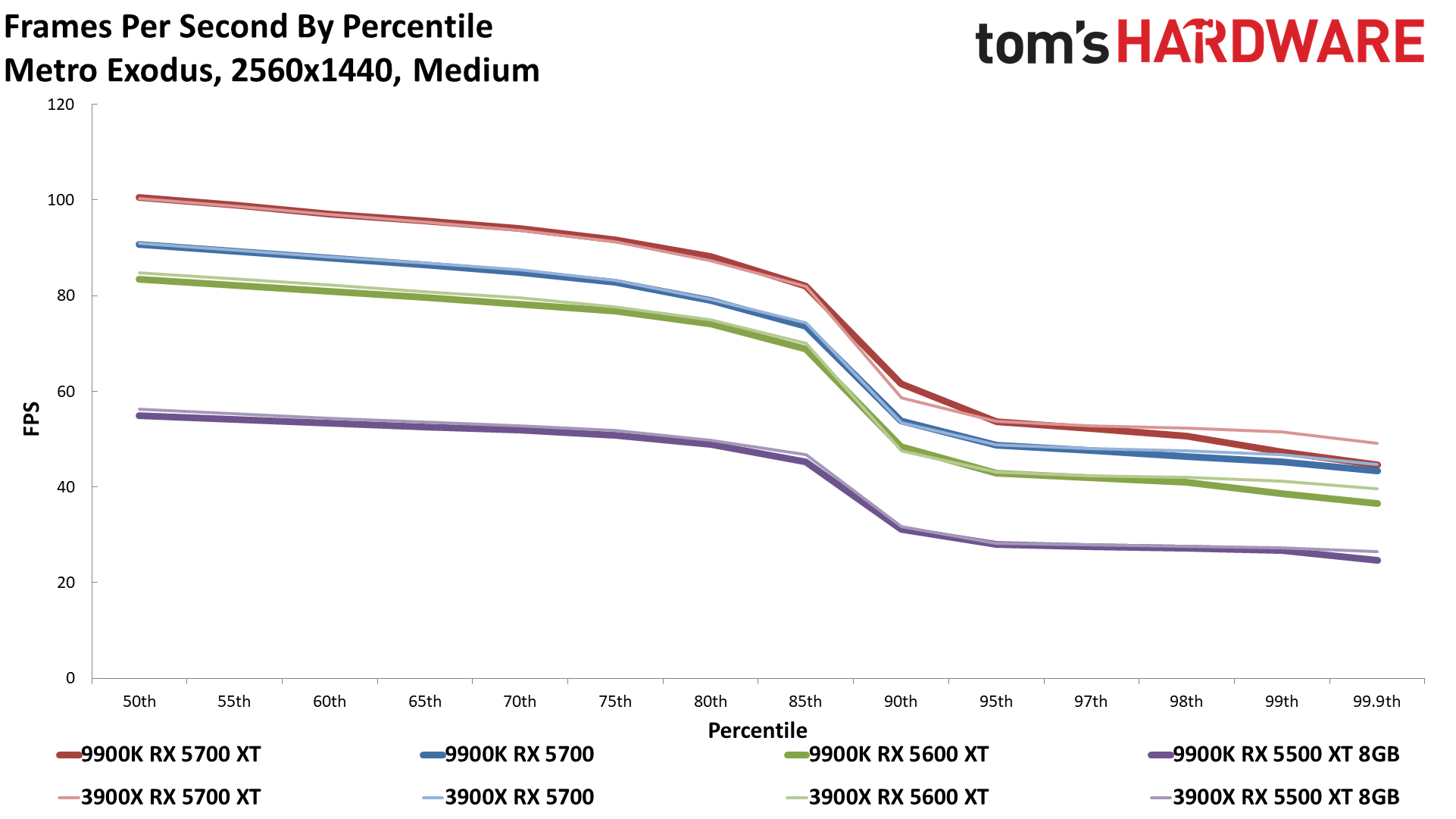

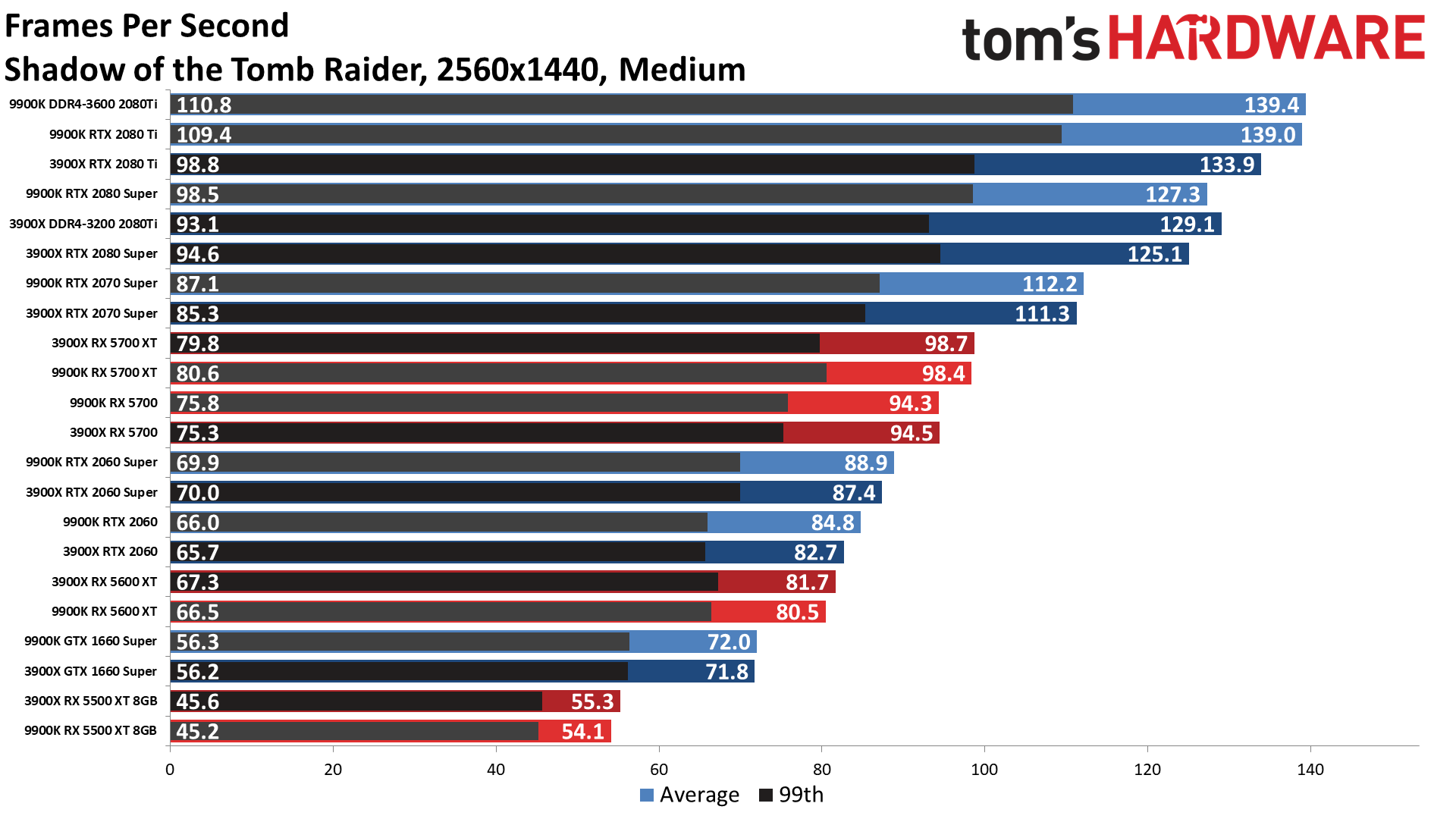

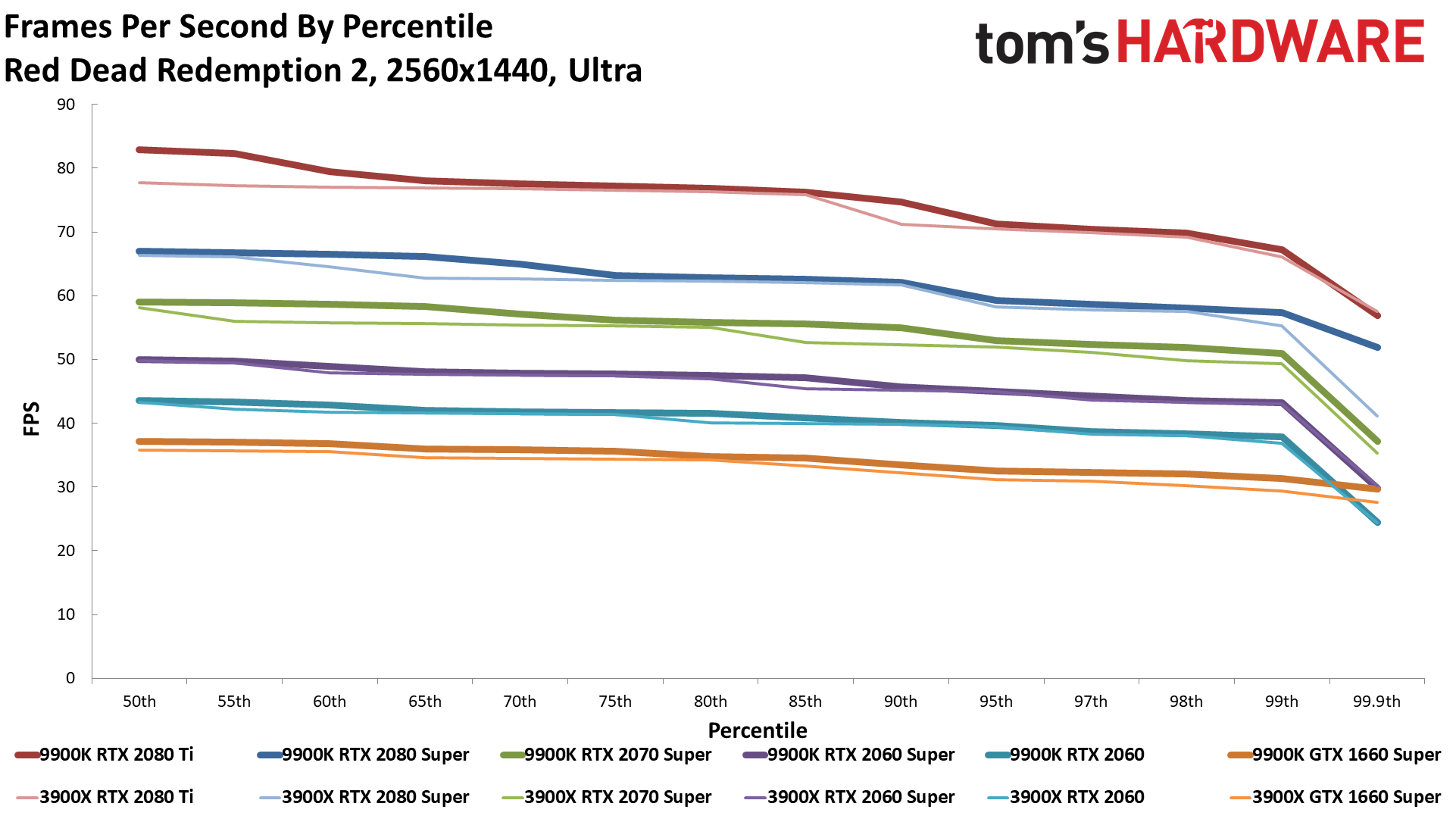

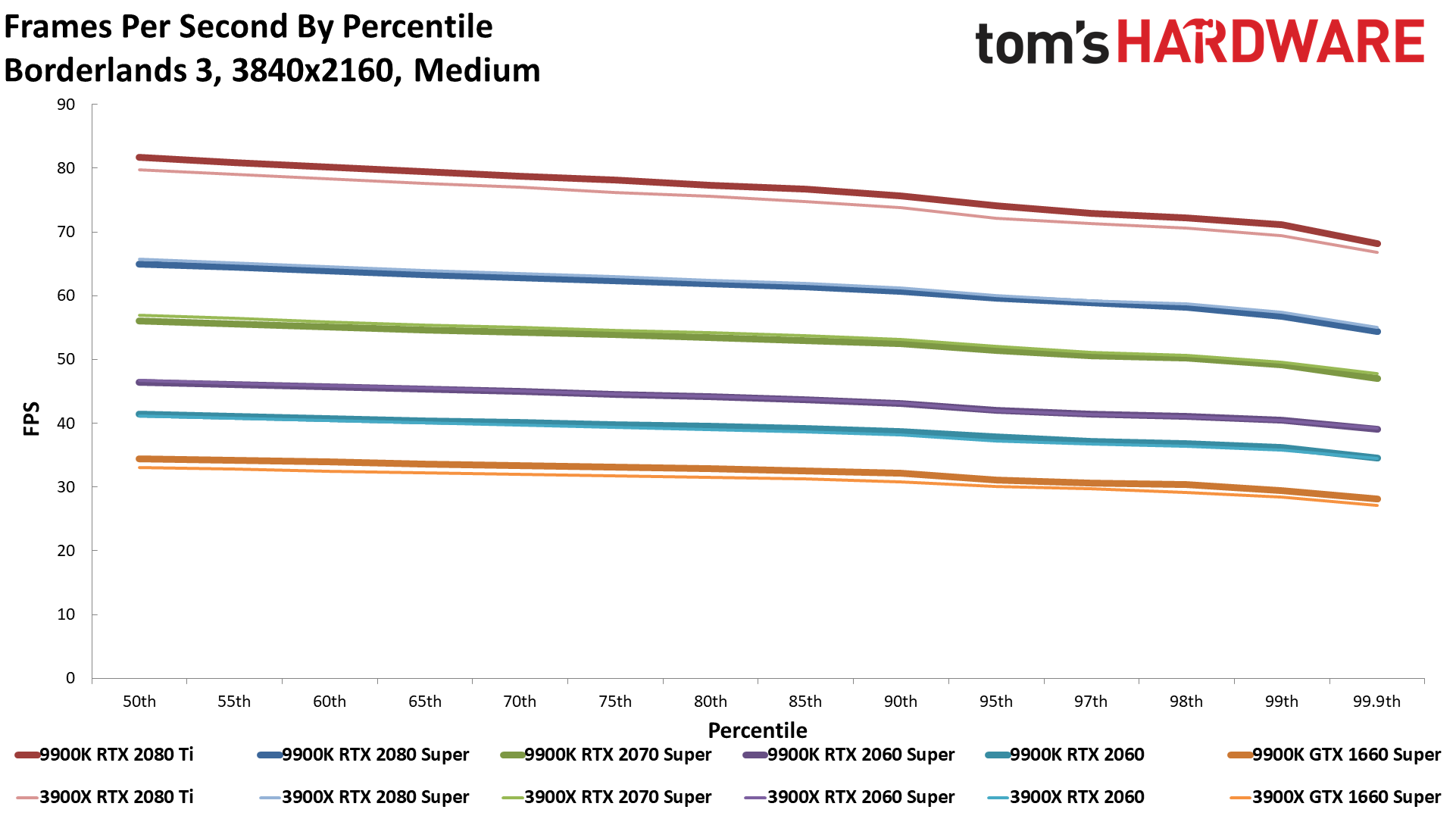

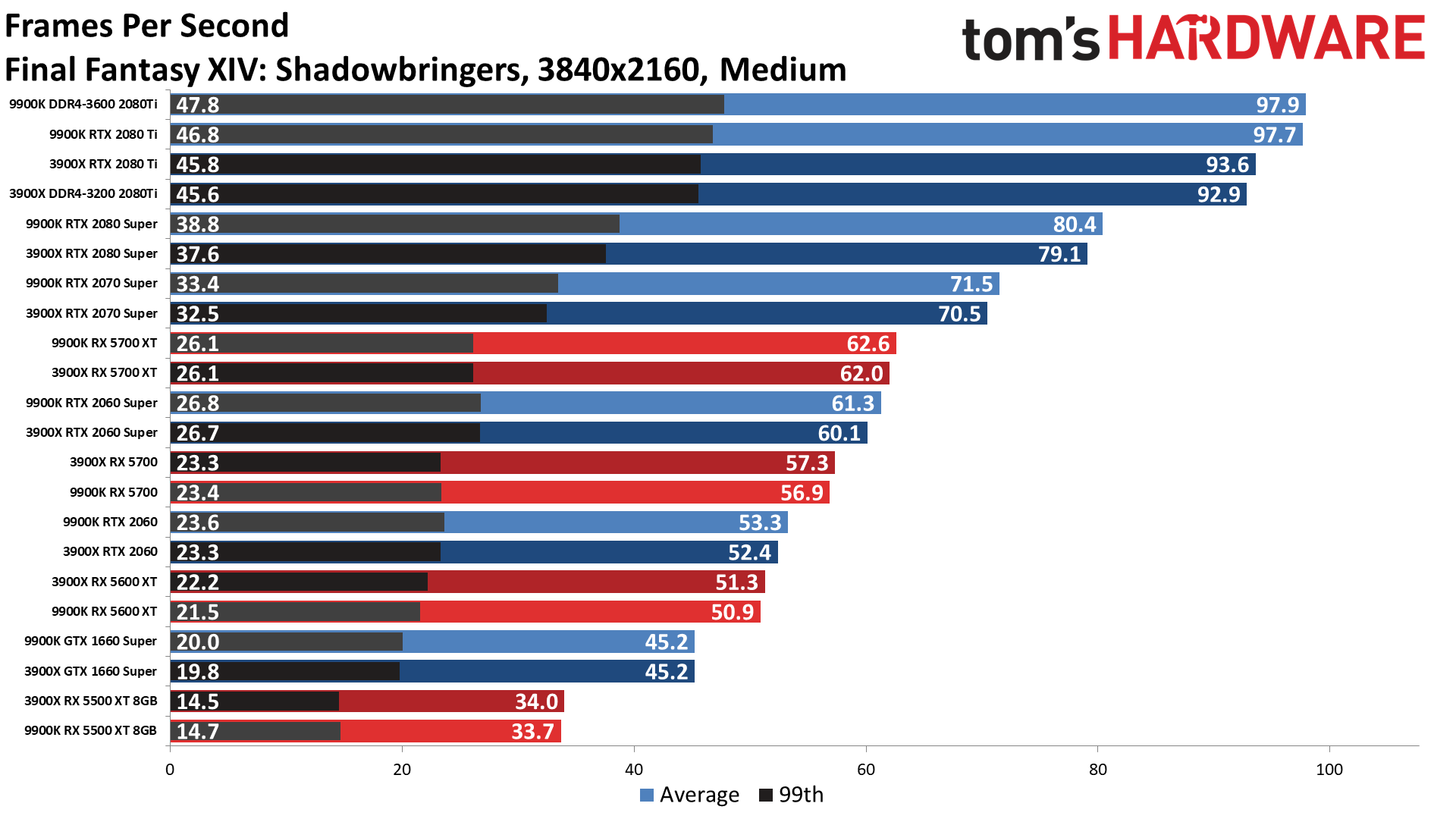

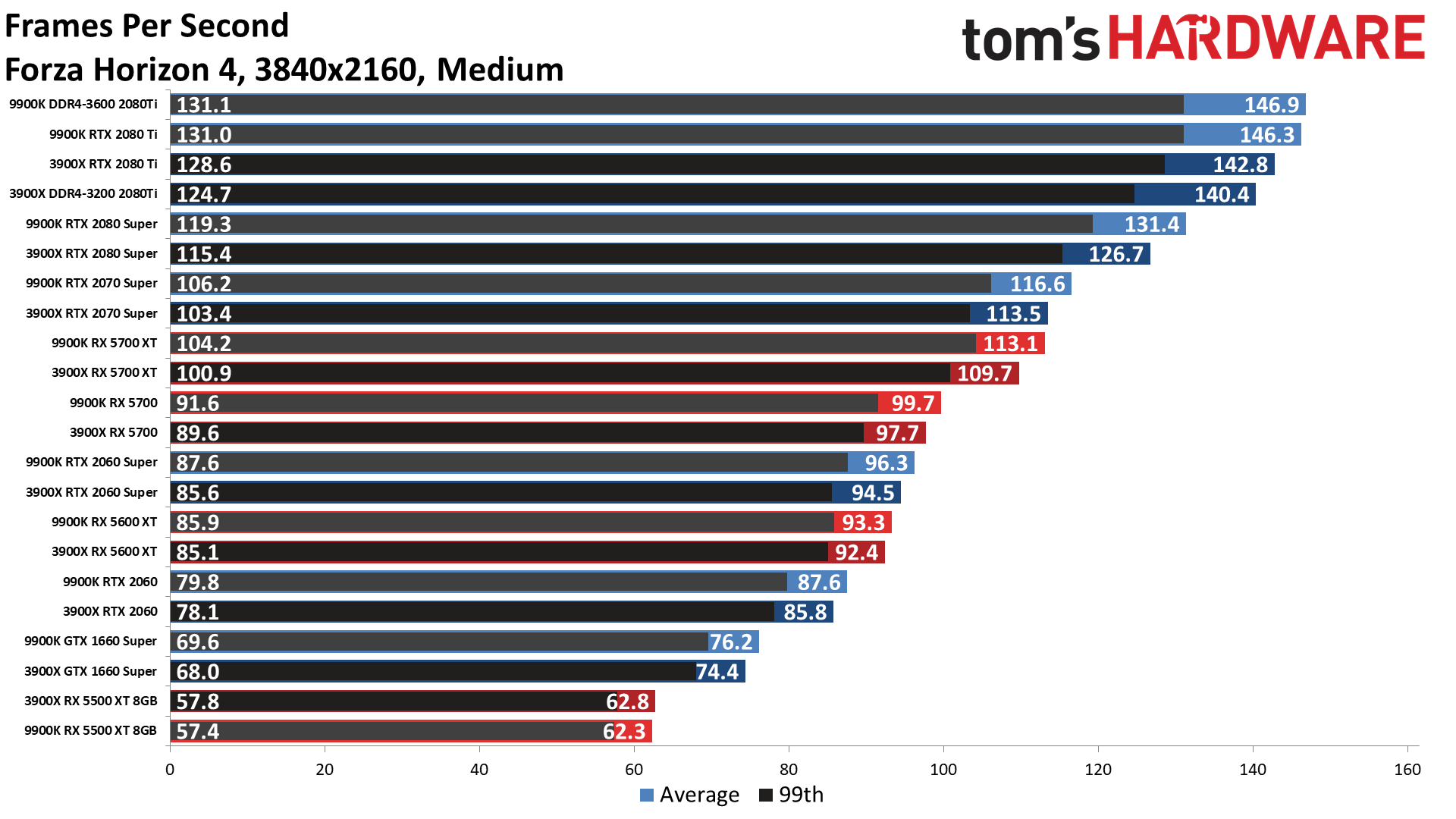

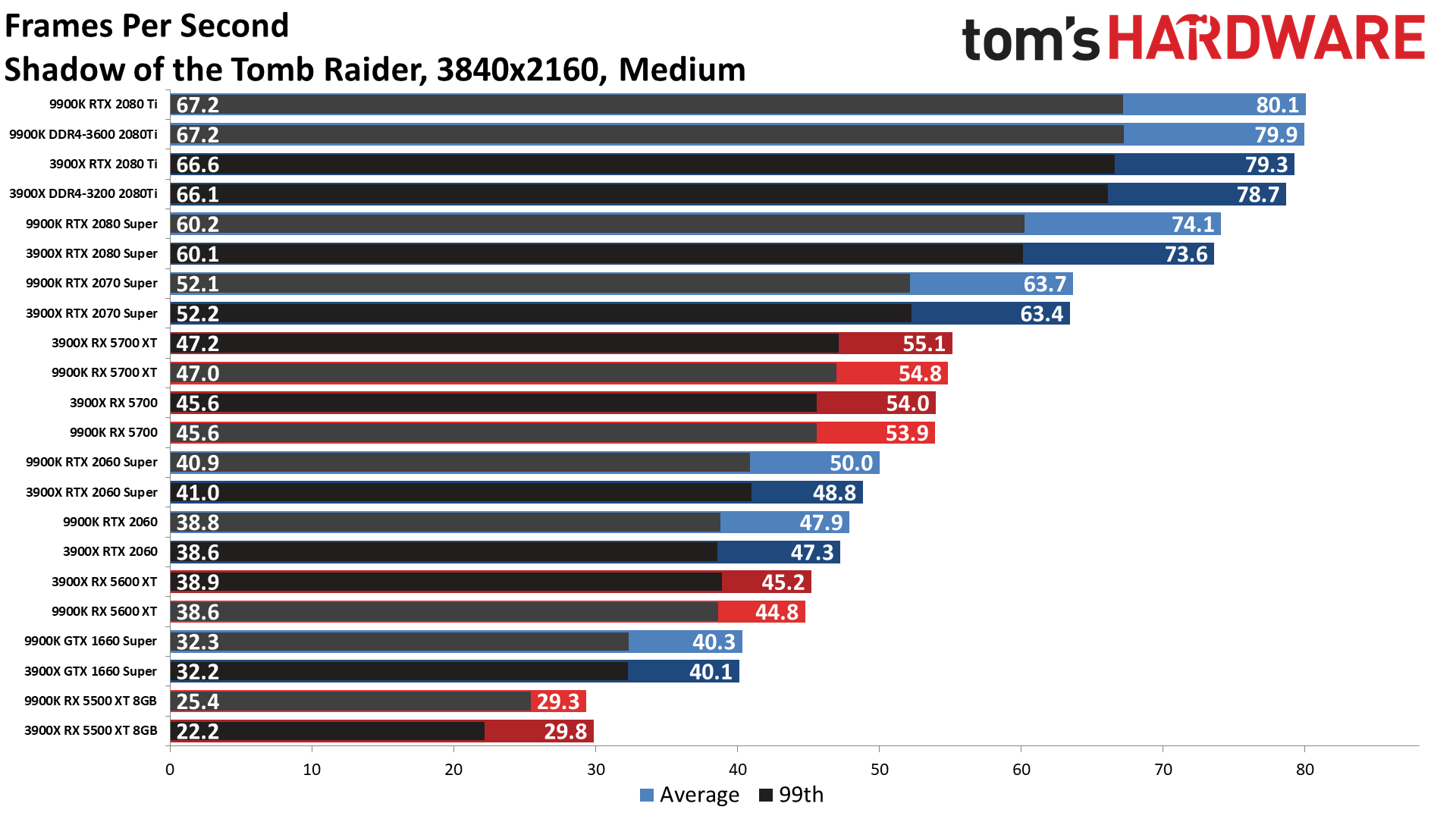

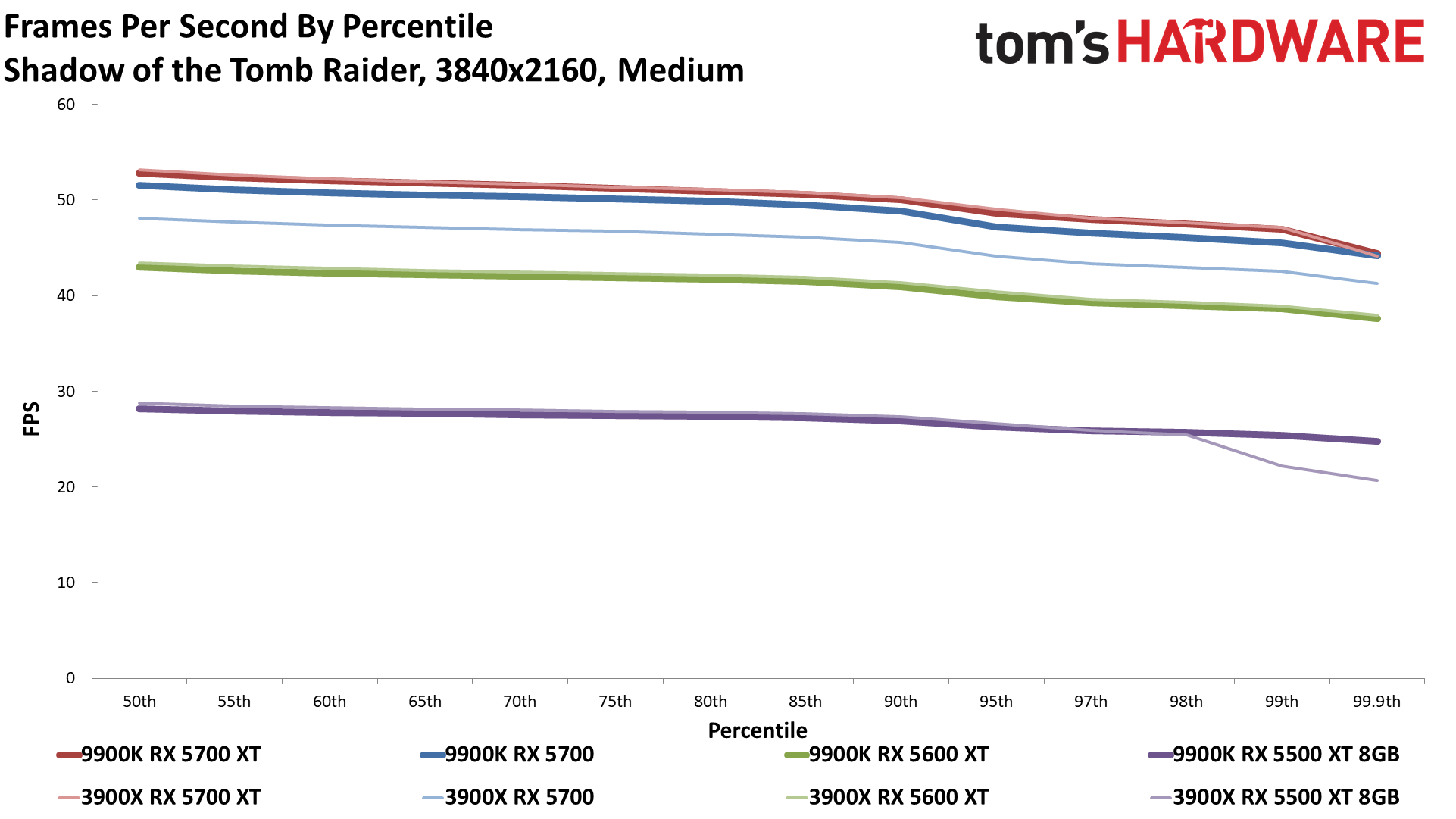

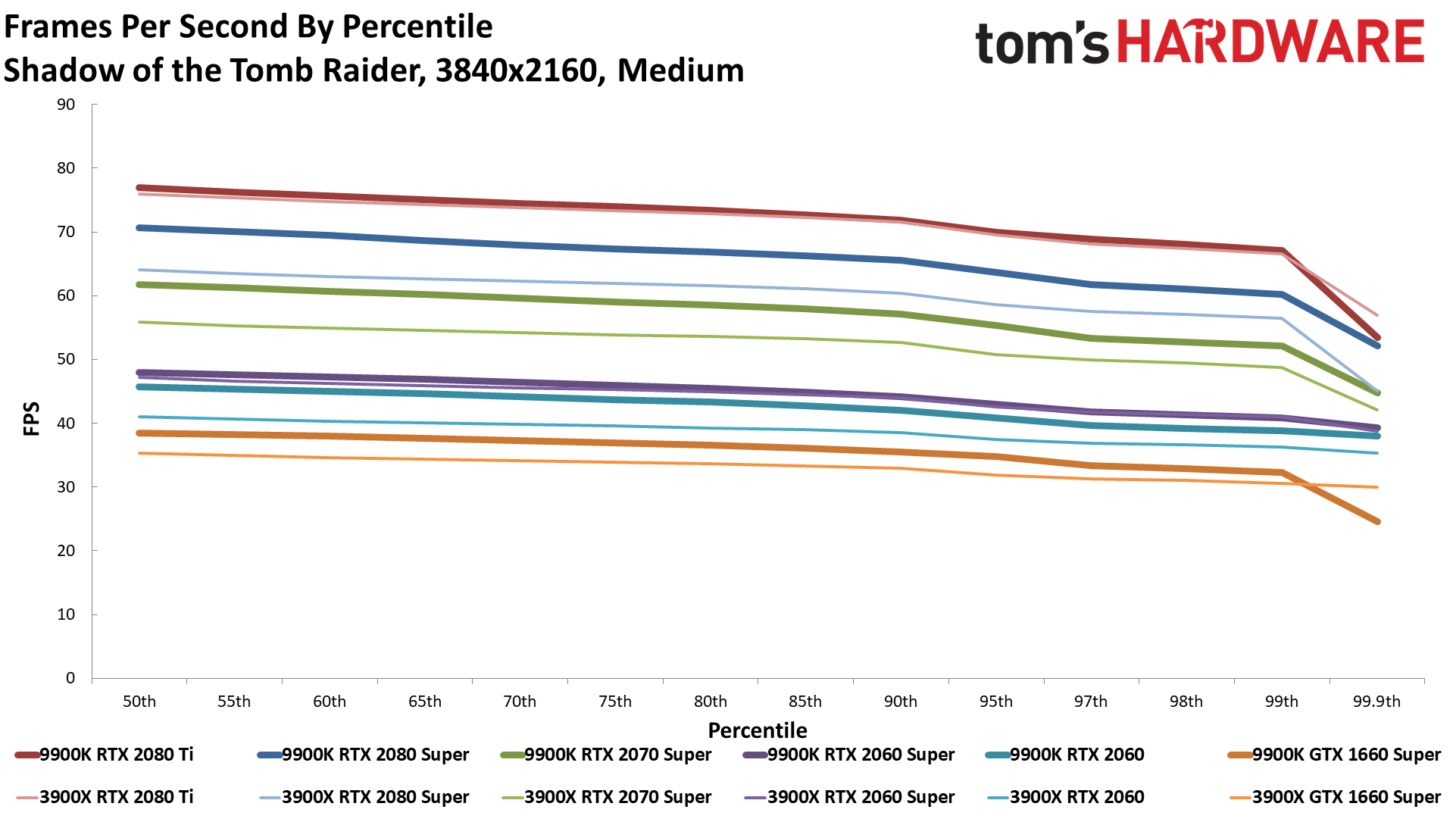

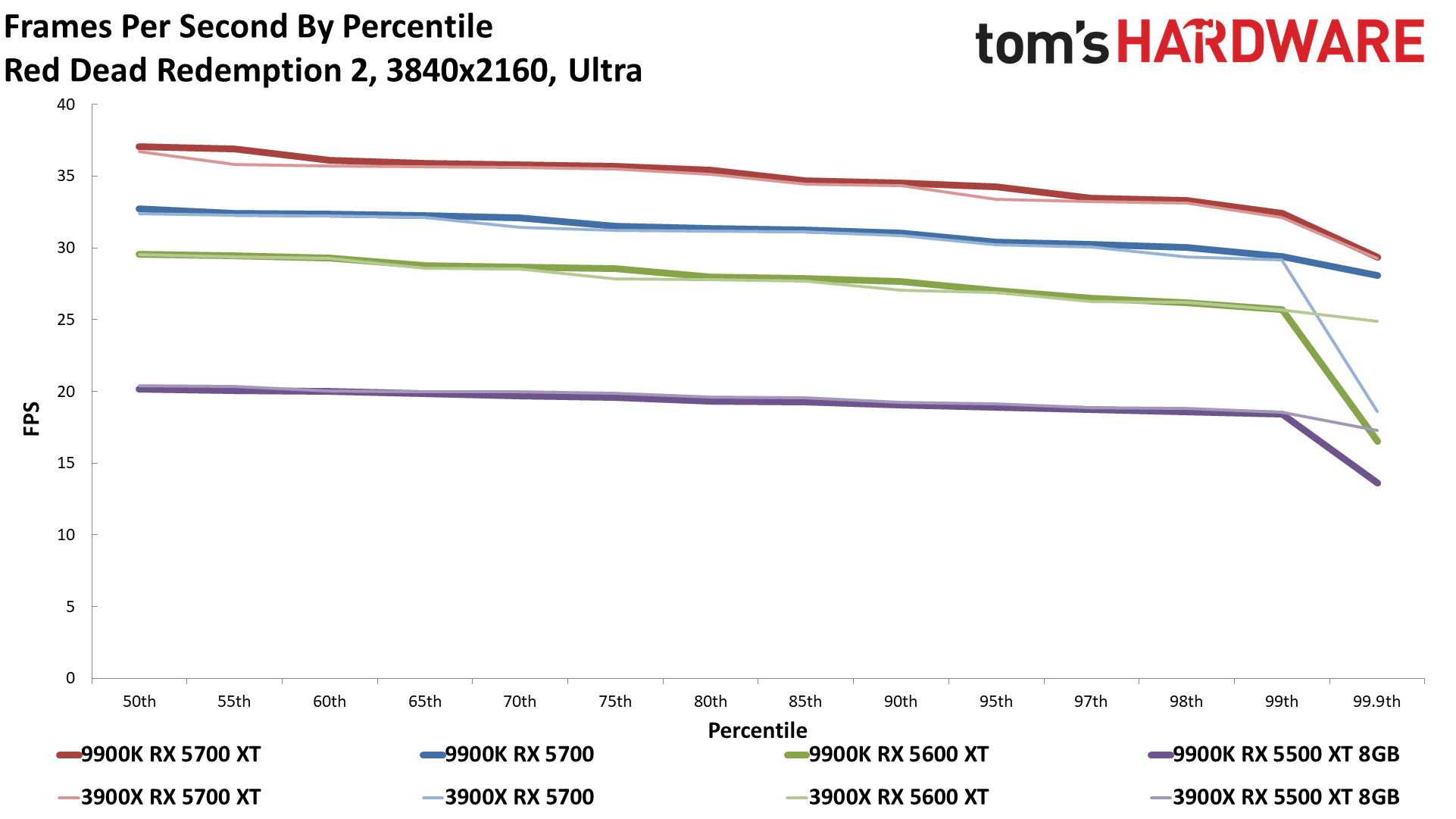

Overall, the i9-9900K ended up delivering just 9.4% higher performance than the Ryzen 9 3900X. That’s not particularly noticeable, and in several games the gap was even smaller. Far Cry 5, Final Fantasy XIV, Metro Exodus, and Red Dead Redemption 2 showed the biggest leads for Intel (14-17%), Shadow of the Tomb Raider was right near the average (10%), and the other four games showed a scant 3-6% lead.

Could you notice such a difference? 15%, sure; 5%, not so much. Is the overall result significant? Not really, and things only get closer as we move down the GPU list.
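To put "15%, sure; 5%, not so much" in more concrete terms, it can help to convert fps gaps into time saved per frame. The sketch below is illustrative only and the helper names are made up for this example. It uses the Red Dead Redemption 2 results at 1080p medium with the RTX 2080 Ti (149.6 fps vs. 174.8 fps, as cited in the reader discussion), plus a made-up 60 fps vs. 70 fps case for contrast.

```python
def percent_lead(faster_fps, slower_fps):
    """Relative lead of the faster result over the slower one, in percent."""
    return (faster_fps / slower_fps - 1.0) * 100.0


def frame_time_ms(fps):
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def frame_time_saved_ms(faster_fps, slower_fps):
    """Milliseconds per frame saved by the faster result."""
    return frame_time_ms(slower_fps) - frame_time_ms(faster_fps)


# Red Dead Redemption 2, 1080p medium, RTX 2080 Ti.
print(round(percent_lead(174.8, 149.6), 1))         # 16.8
print(round(frame_time_saved_ms(174.8, 149.6), 2))  # 0.96

# A similar relative gap at a lower frame rate (illustrative numbers).
print(round(percent_lead(70, 60), 1))               # 16.7
print(round(frame_time_saved_ms(70, 60), 2))        # 2.38
```

A roughly 17% lead saves less than 1 ms per frame at around 150 fps but well over 2 ms at 60 fps, which is one way to see why the same percentage is easier to notice at lower frame rates.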

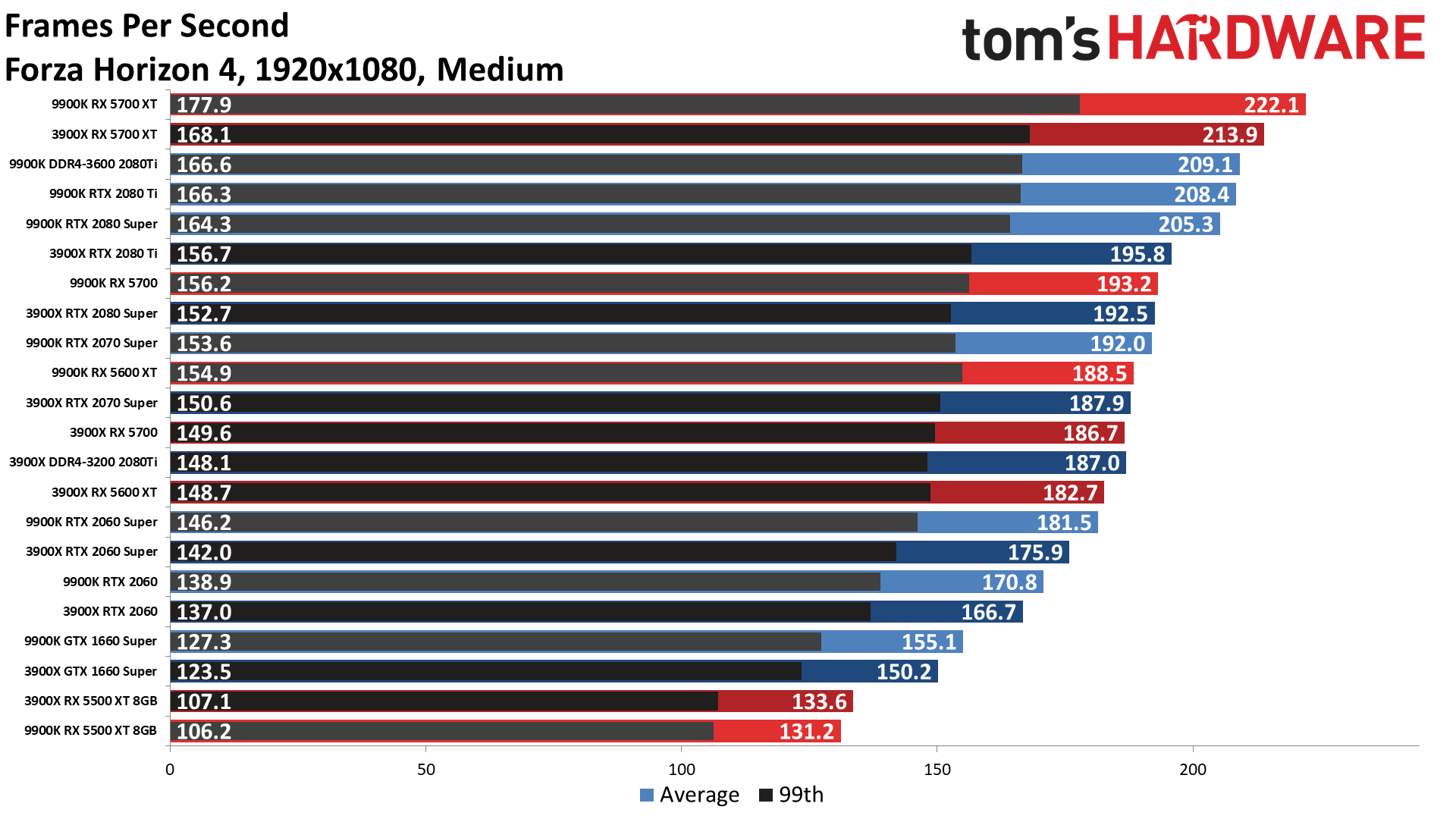

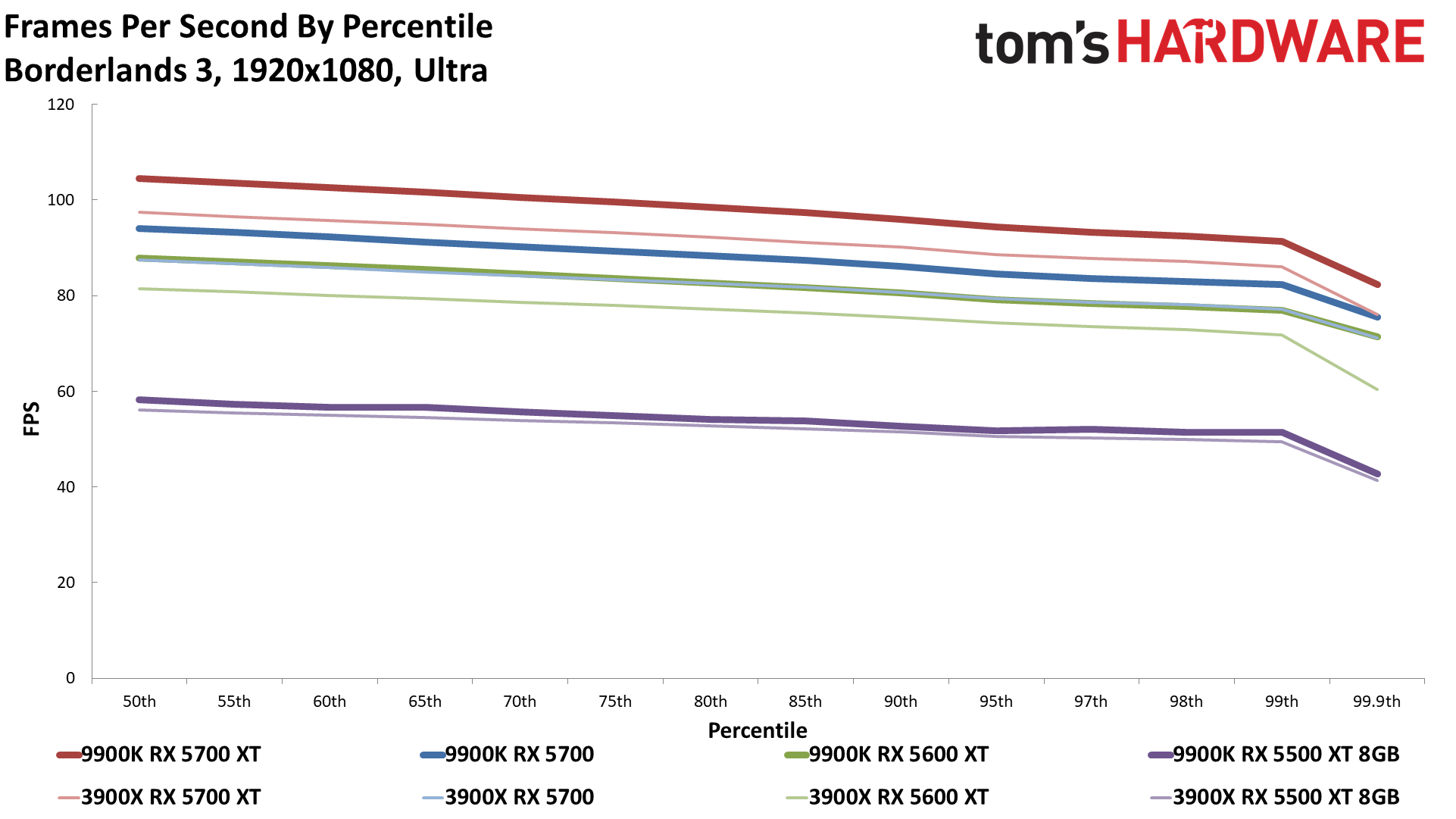

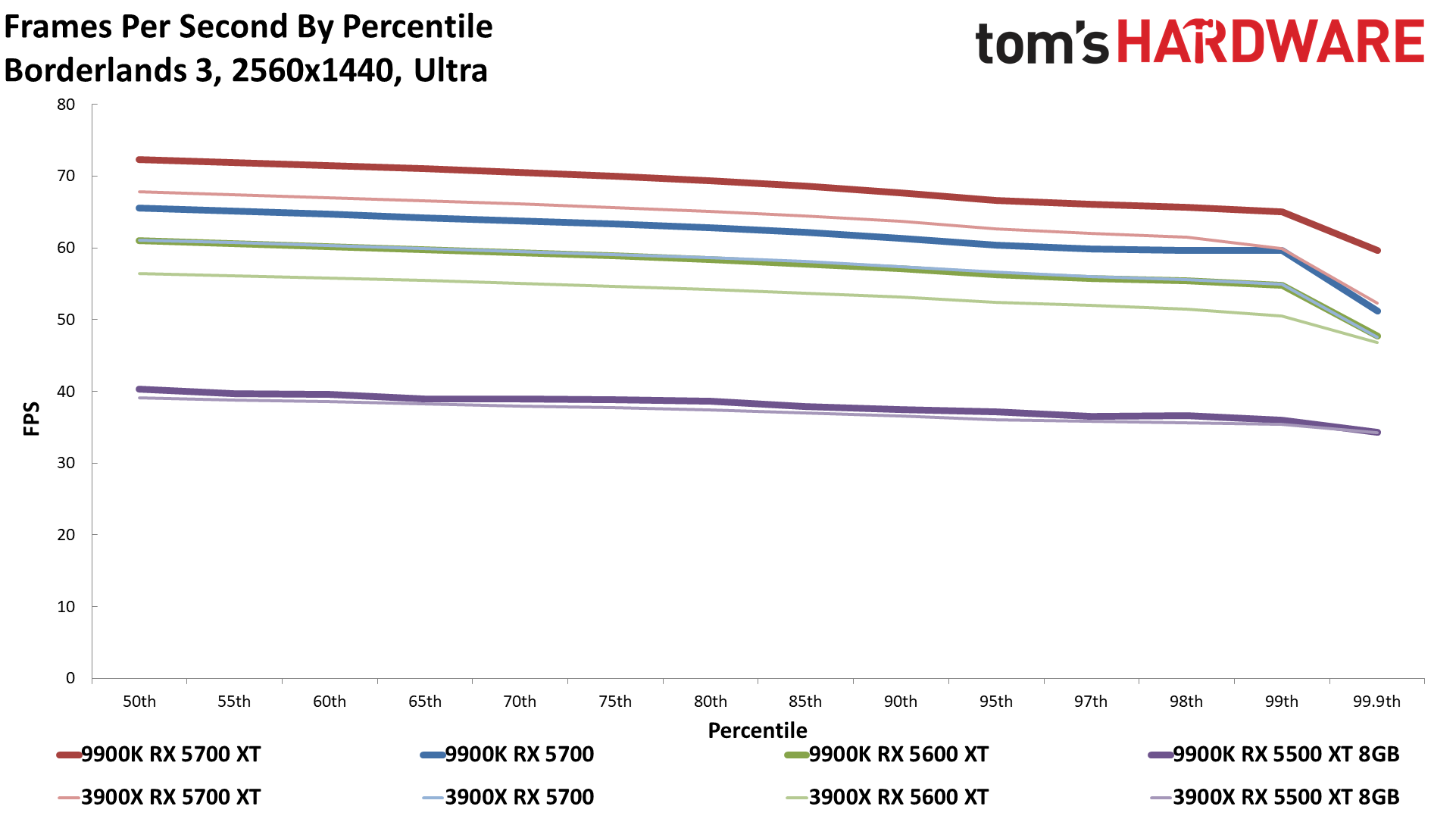

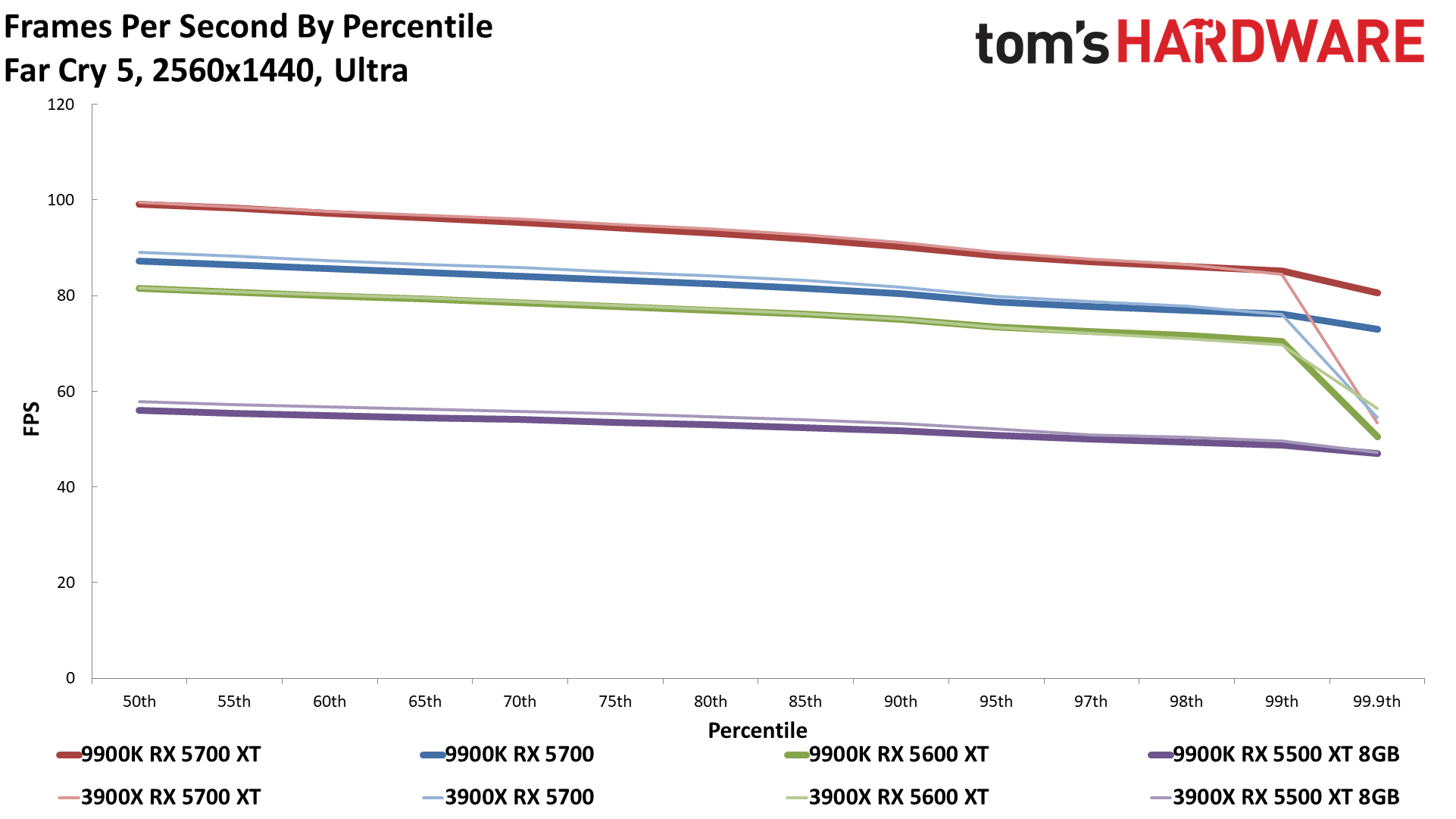

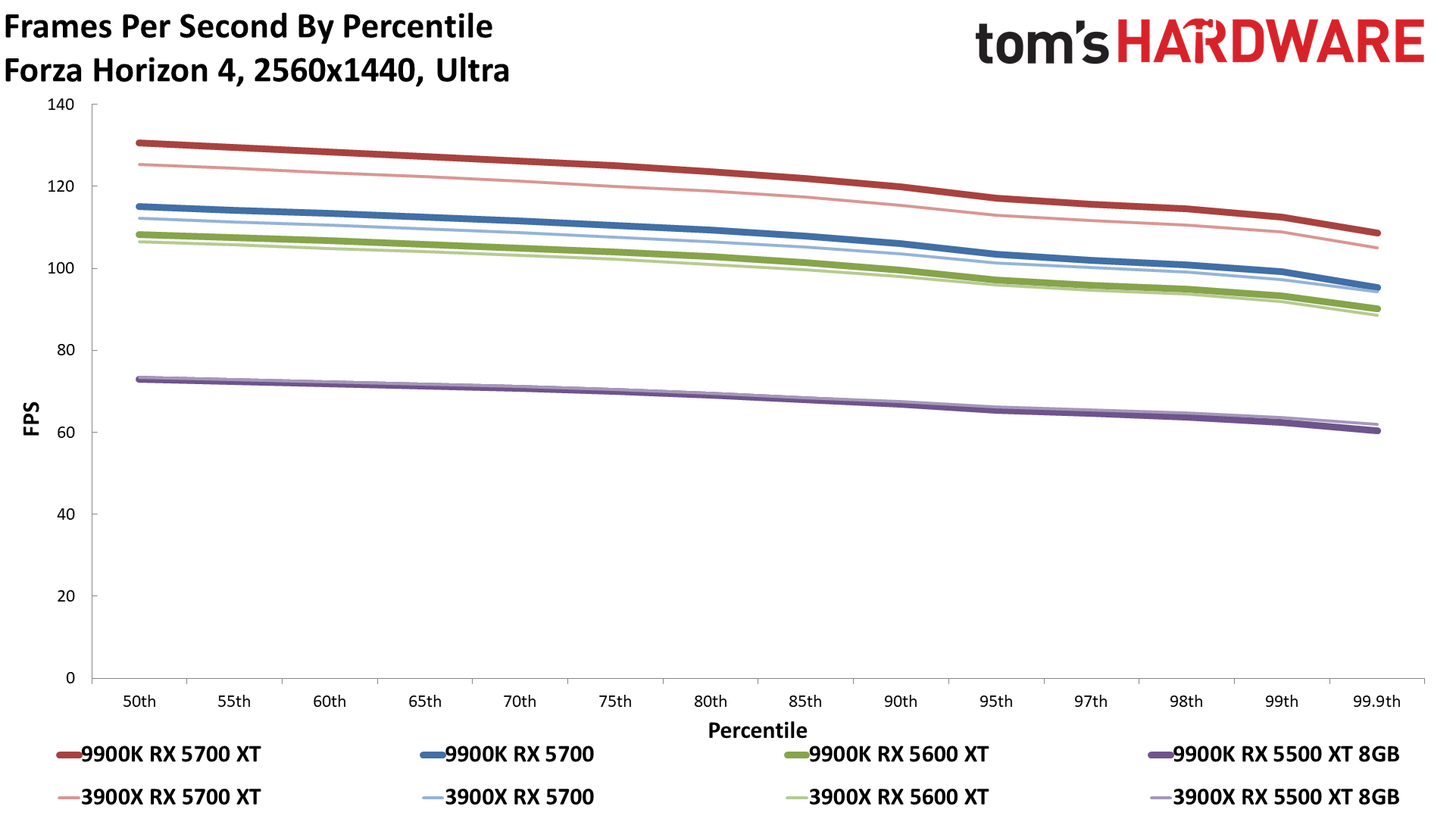

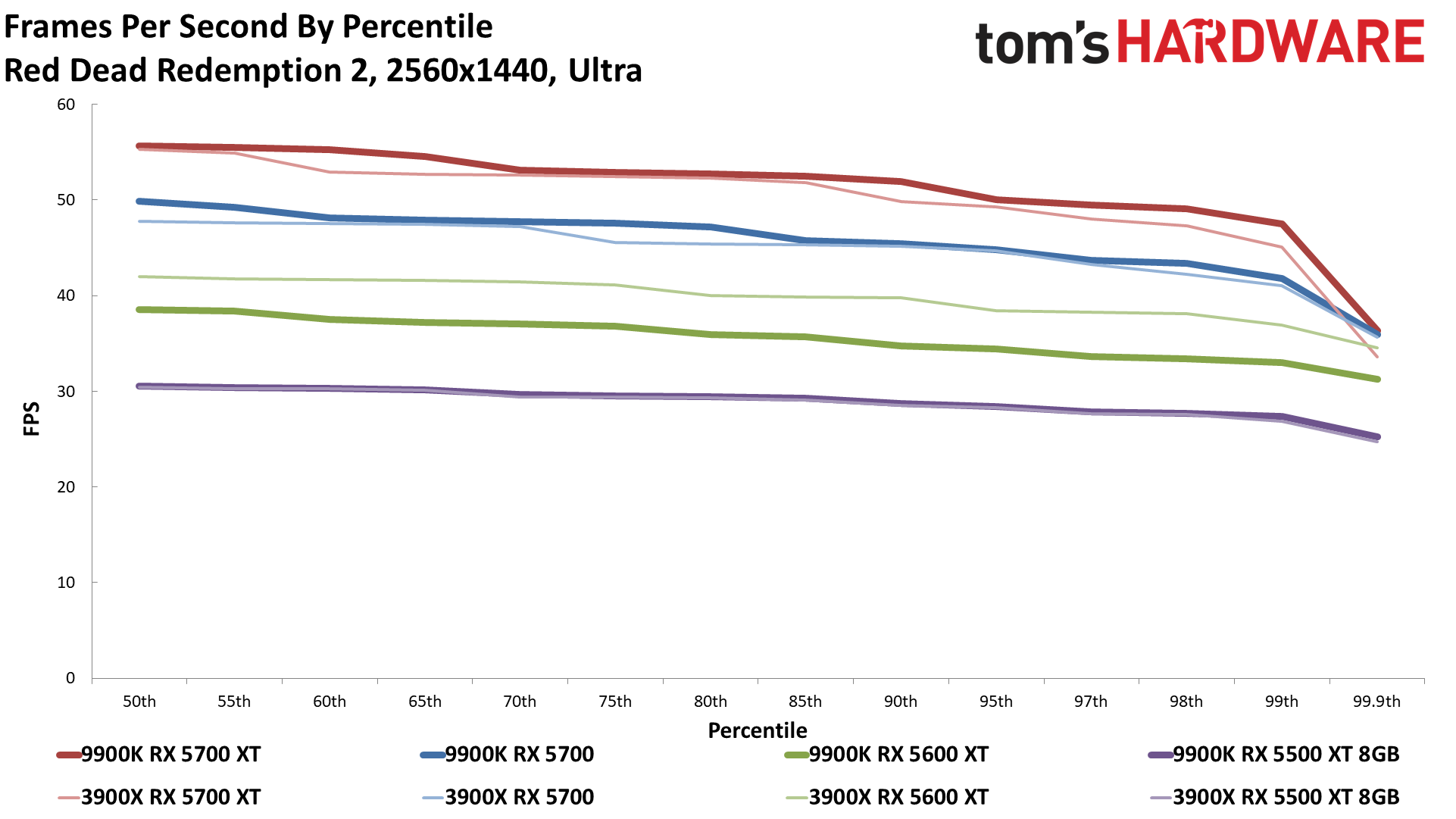

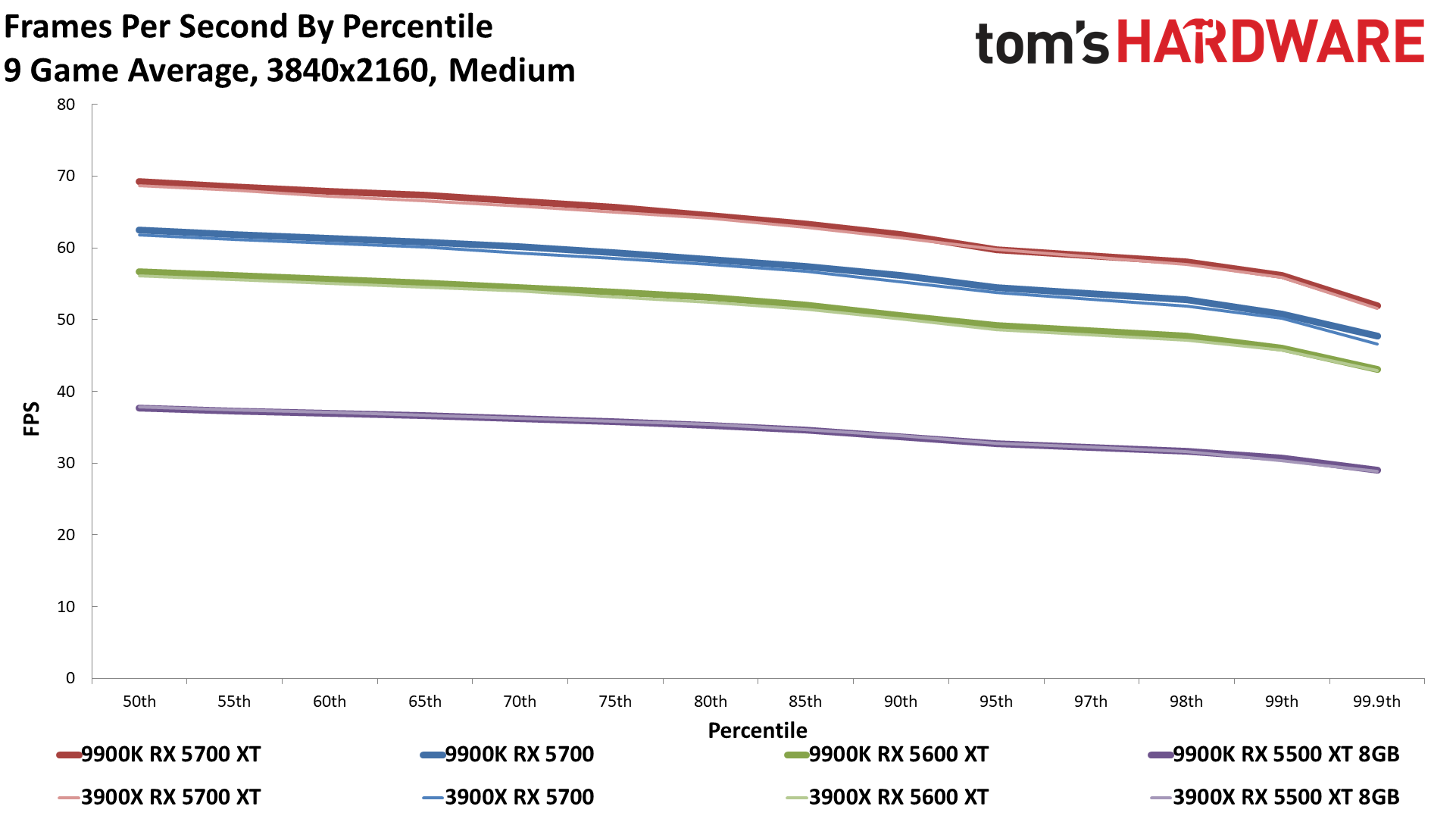

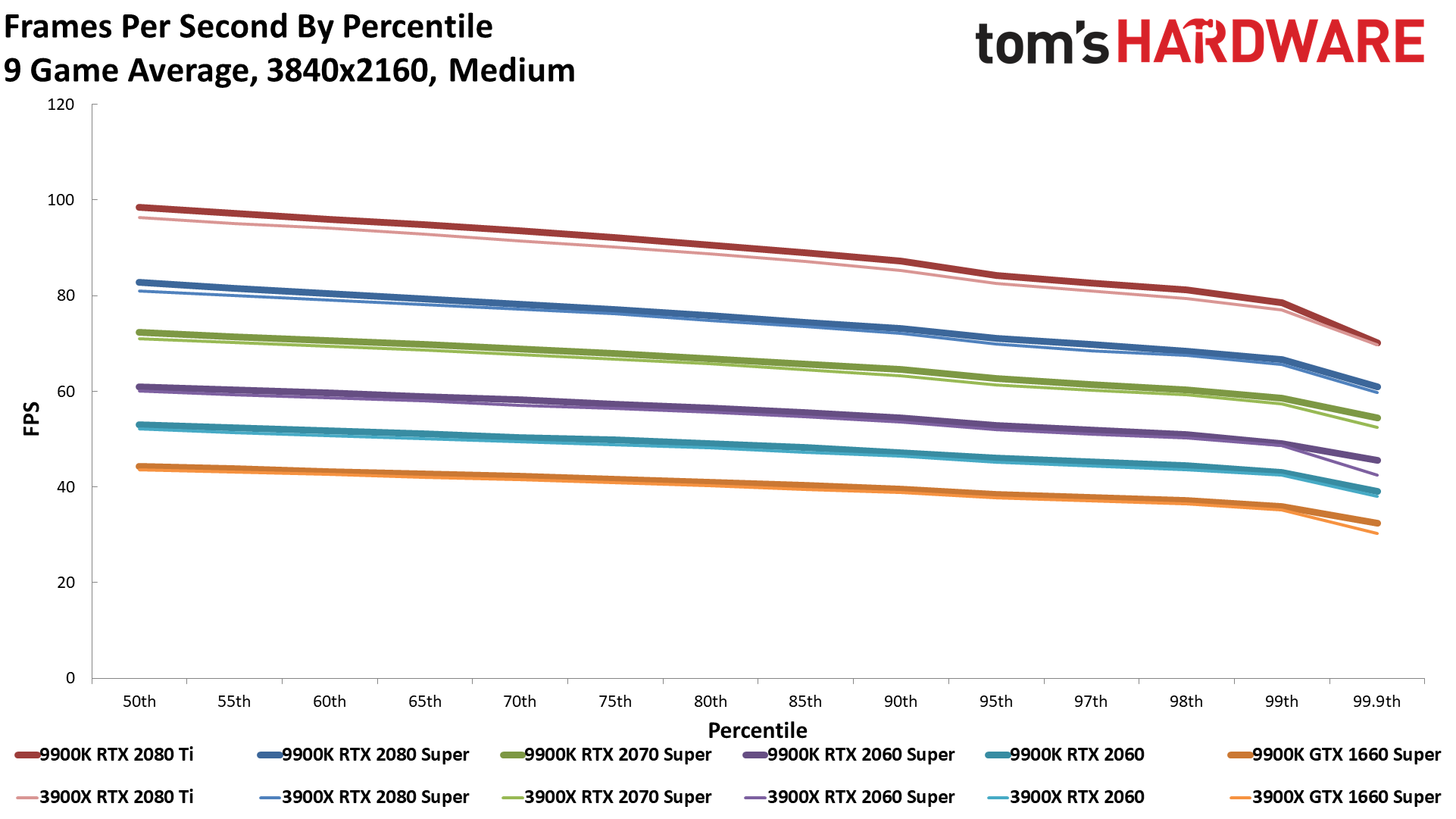

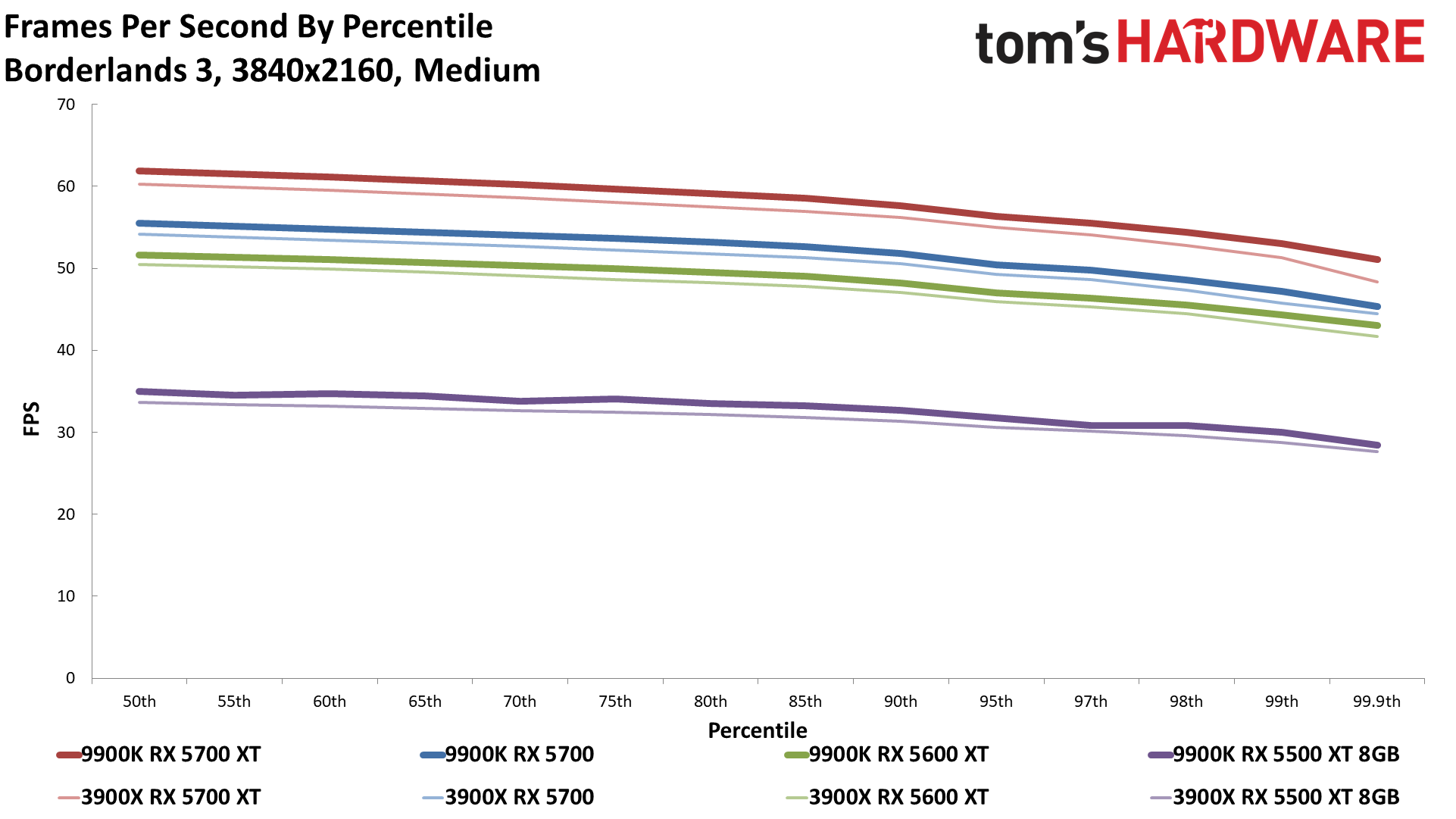

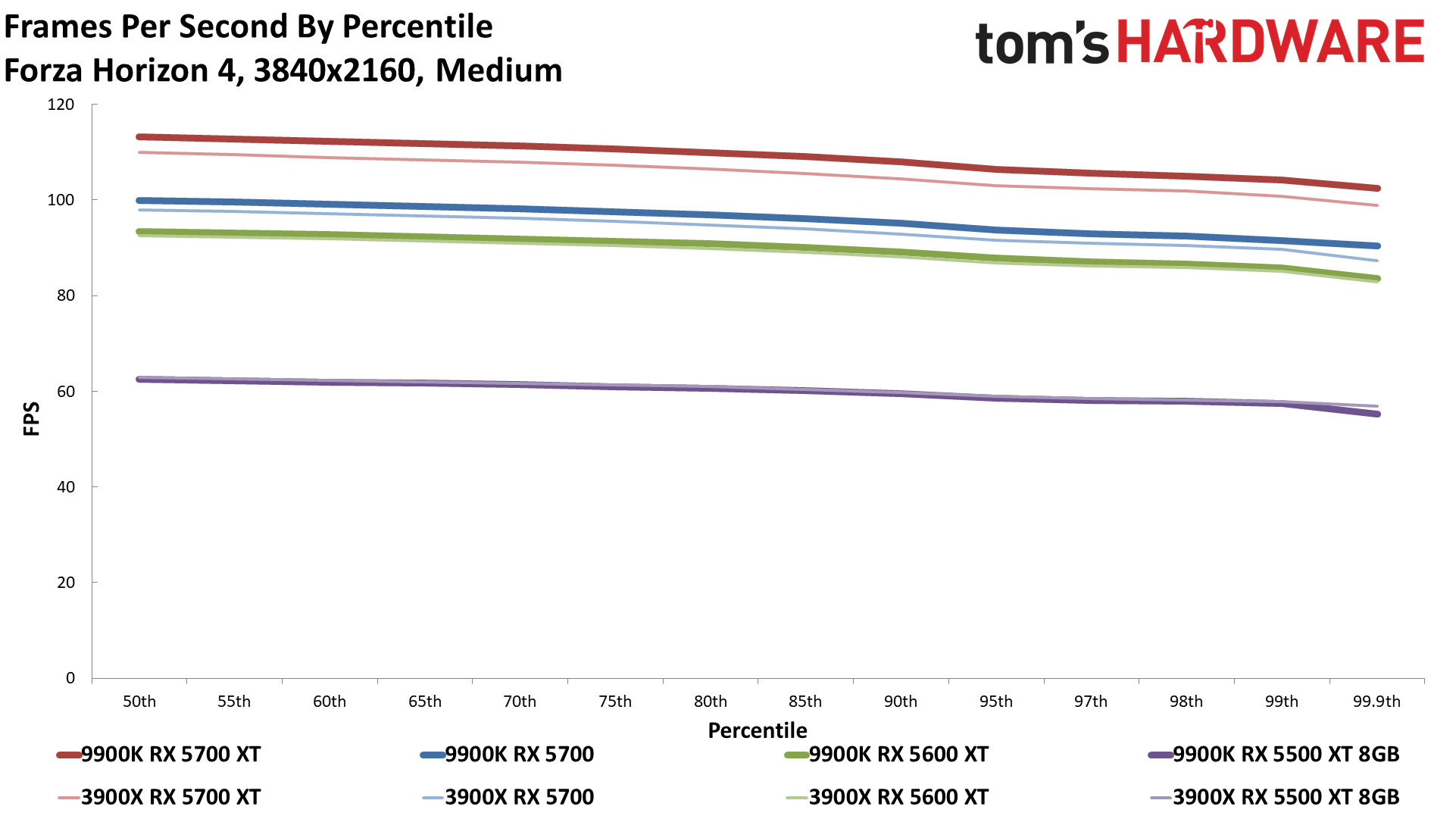

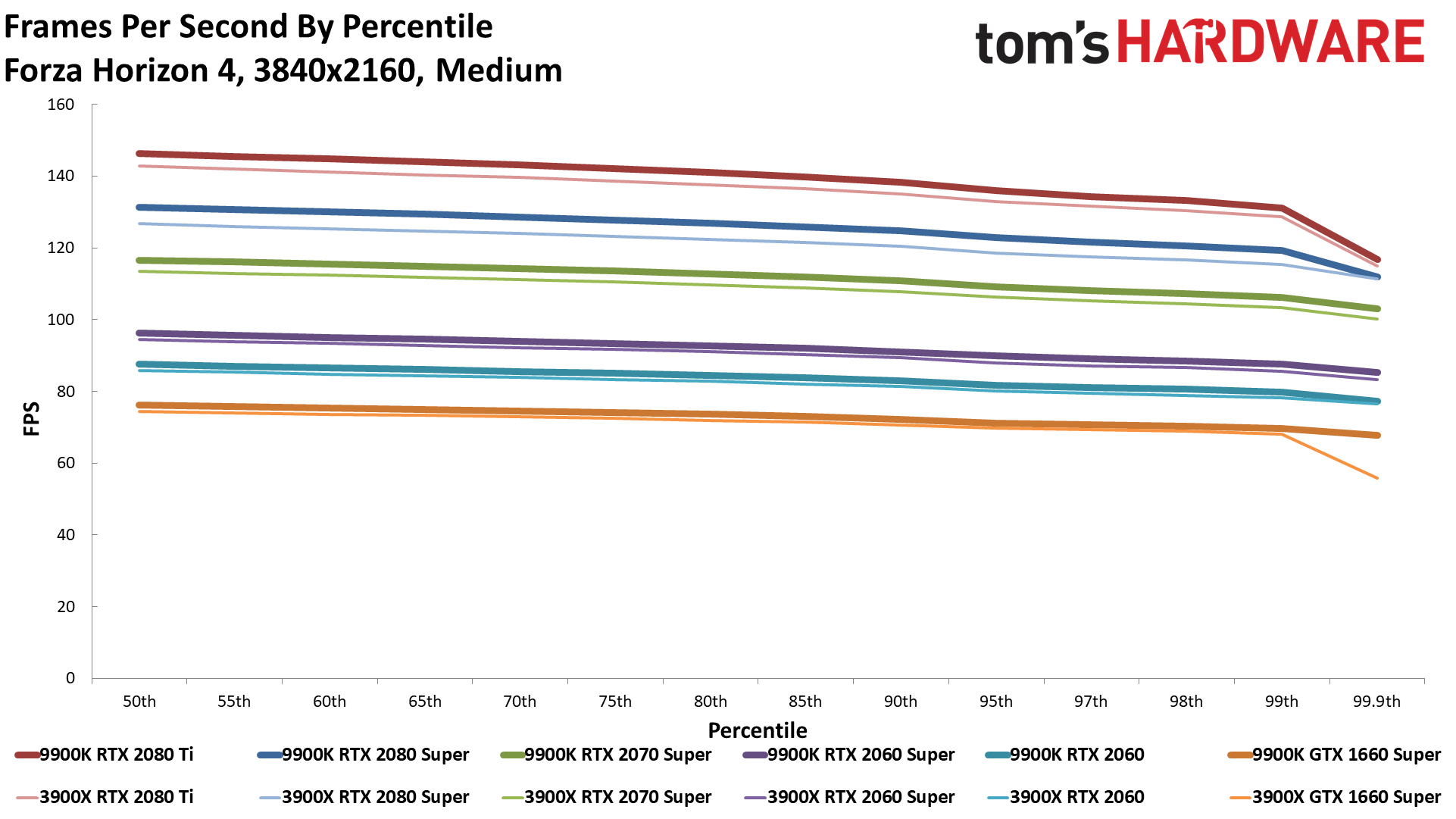

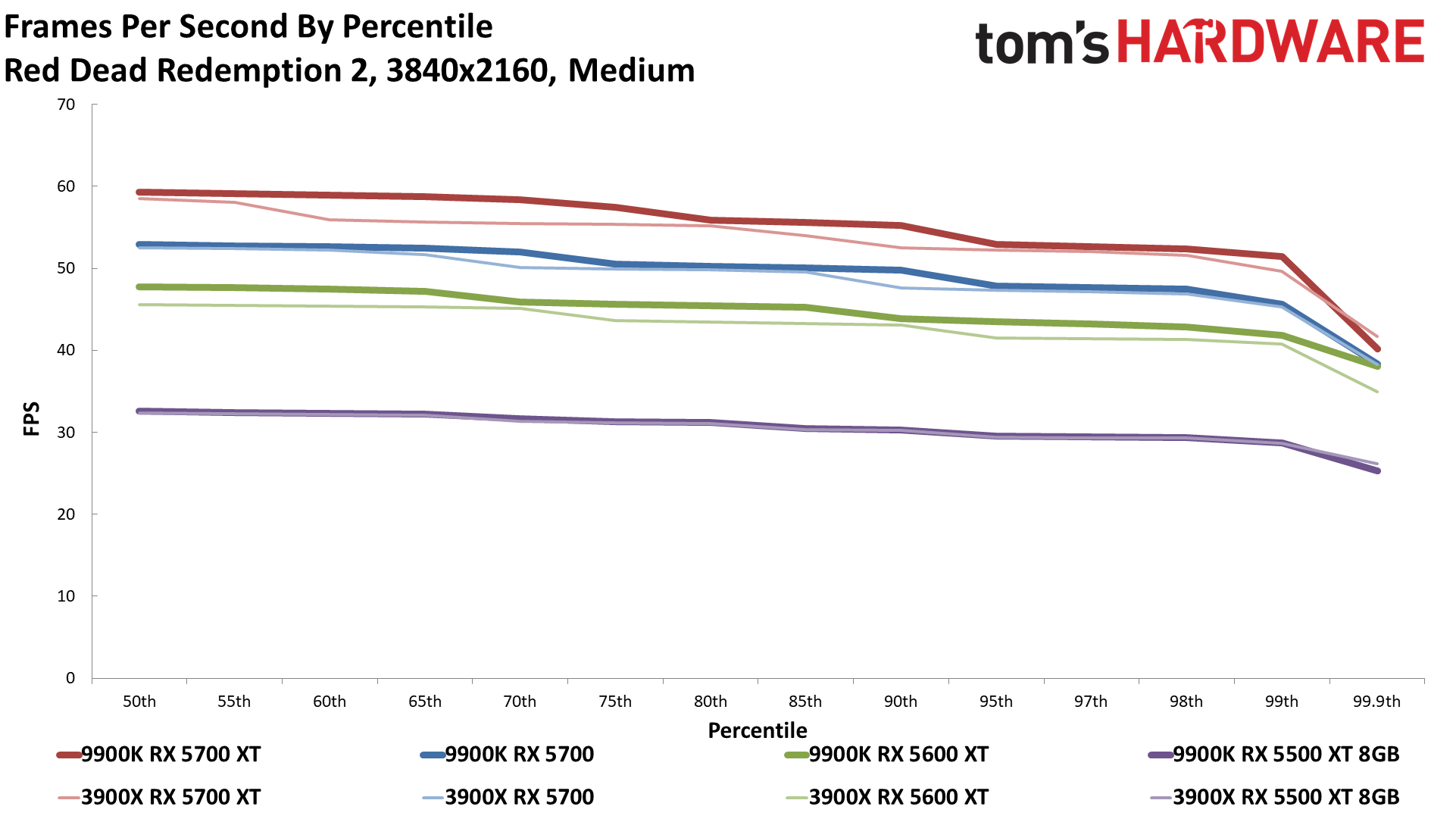

Intel's overall lead shrank to 7.4% with the 2080 Super and 6.2% with the 2070 Super, and with the 2060 Super, RX 5700 XT, and RX 5700 the 9900K was around 4% faster. The remaining GPUs were all within 3%, with the RX 5500 XT 8GB being effectively tied. The 3900X actually comes in slightly ahead overall with the 5500 XT, and wins a few more results with the 5600 XT than elsewhere, which suggests that PCIe Gen4 connectivity may help lower-end GPUs slightly more, though it may simply be margin of error.

Again, that’s the largest difference we’re going to see in our testing. It looks like conventional wisdom was right, but it’s good now and then to do a sanity check. Let's get through the remaining results to see how the difference between the two processors becomes essentially meaningless (for gaming purposes) with lesser GPUs as the resolution and quality are increased.

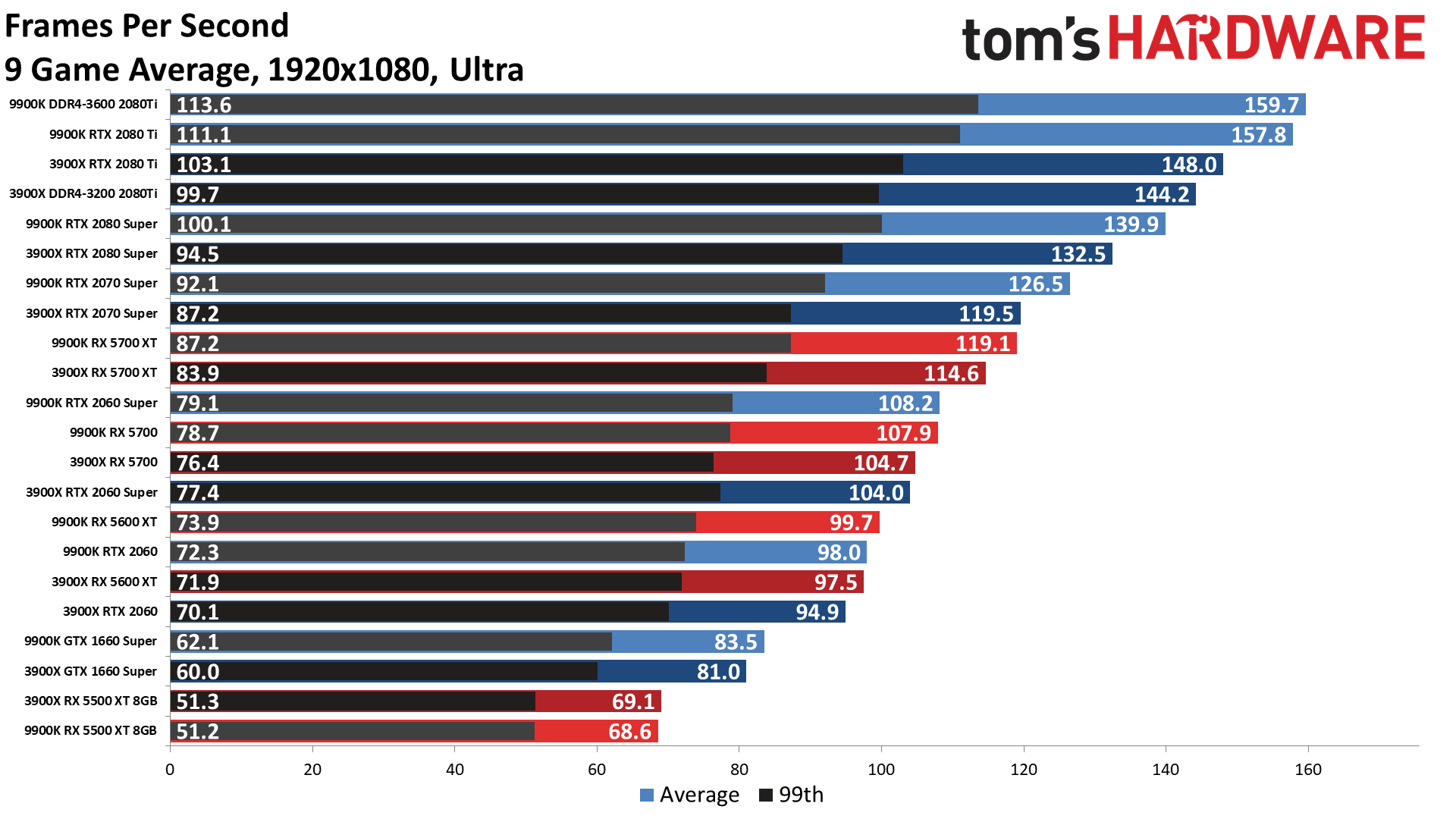

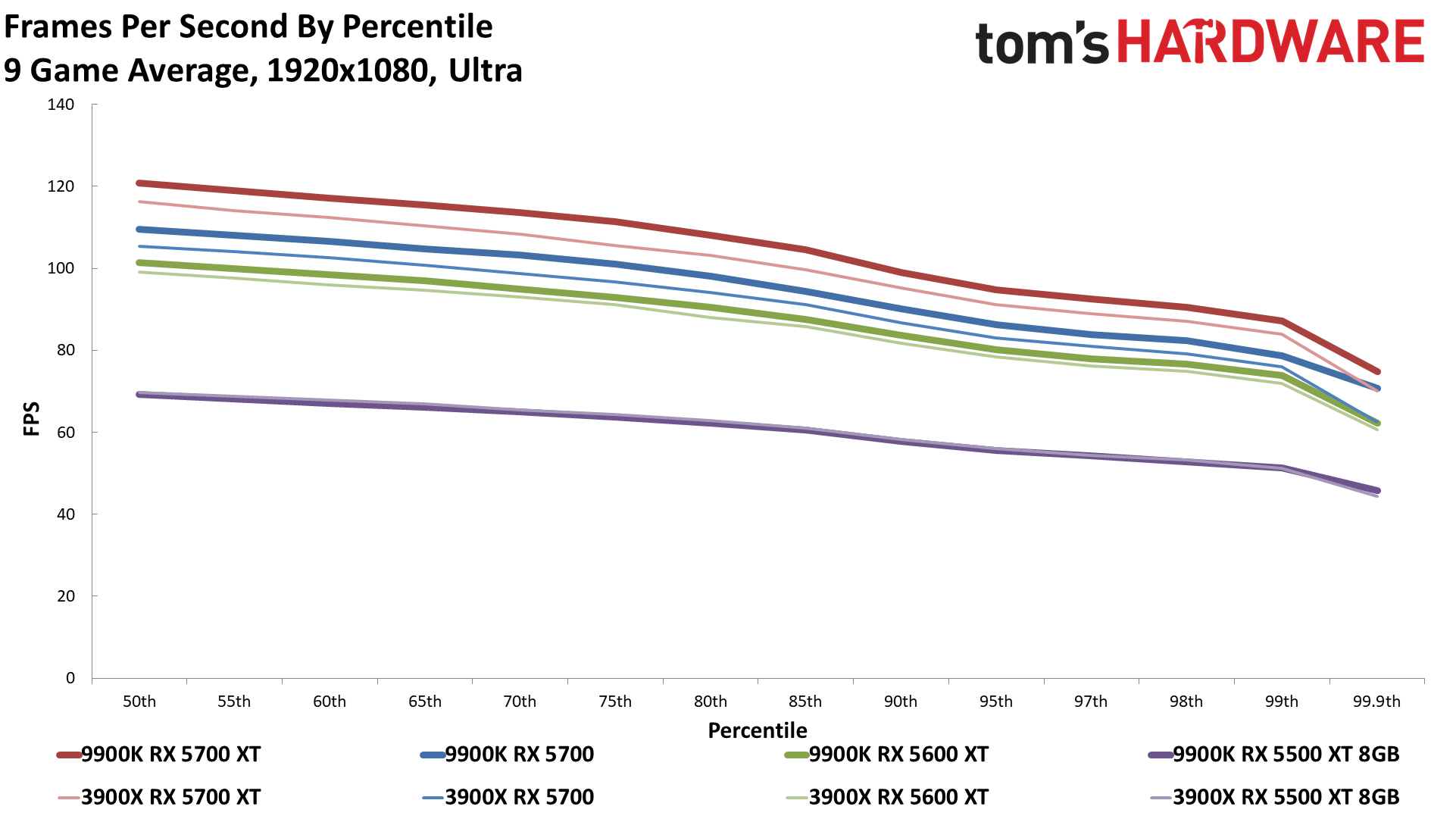

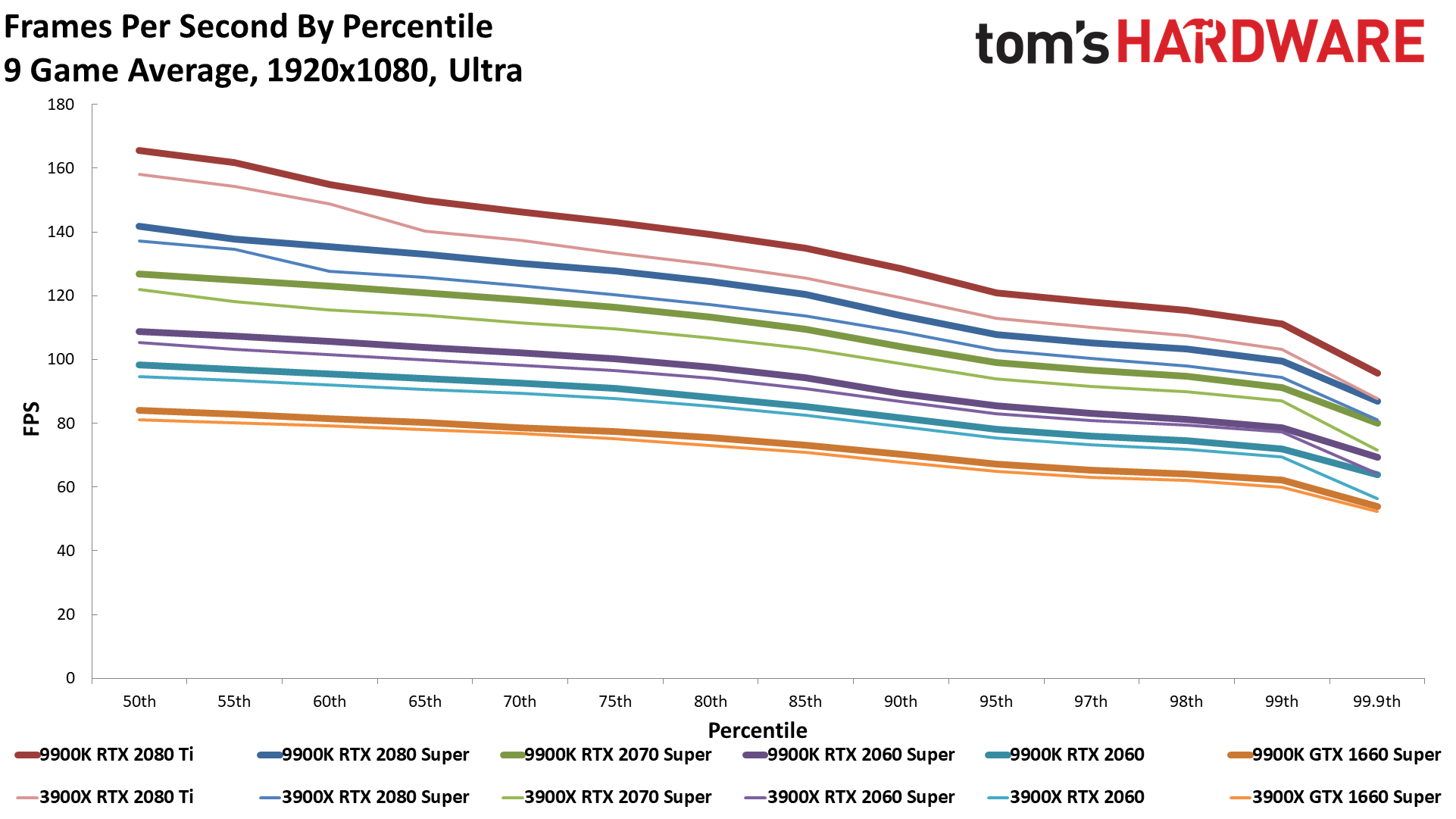

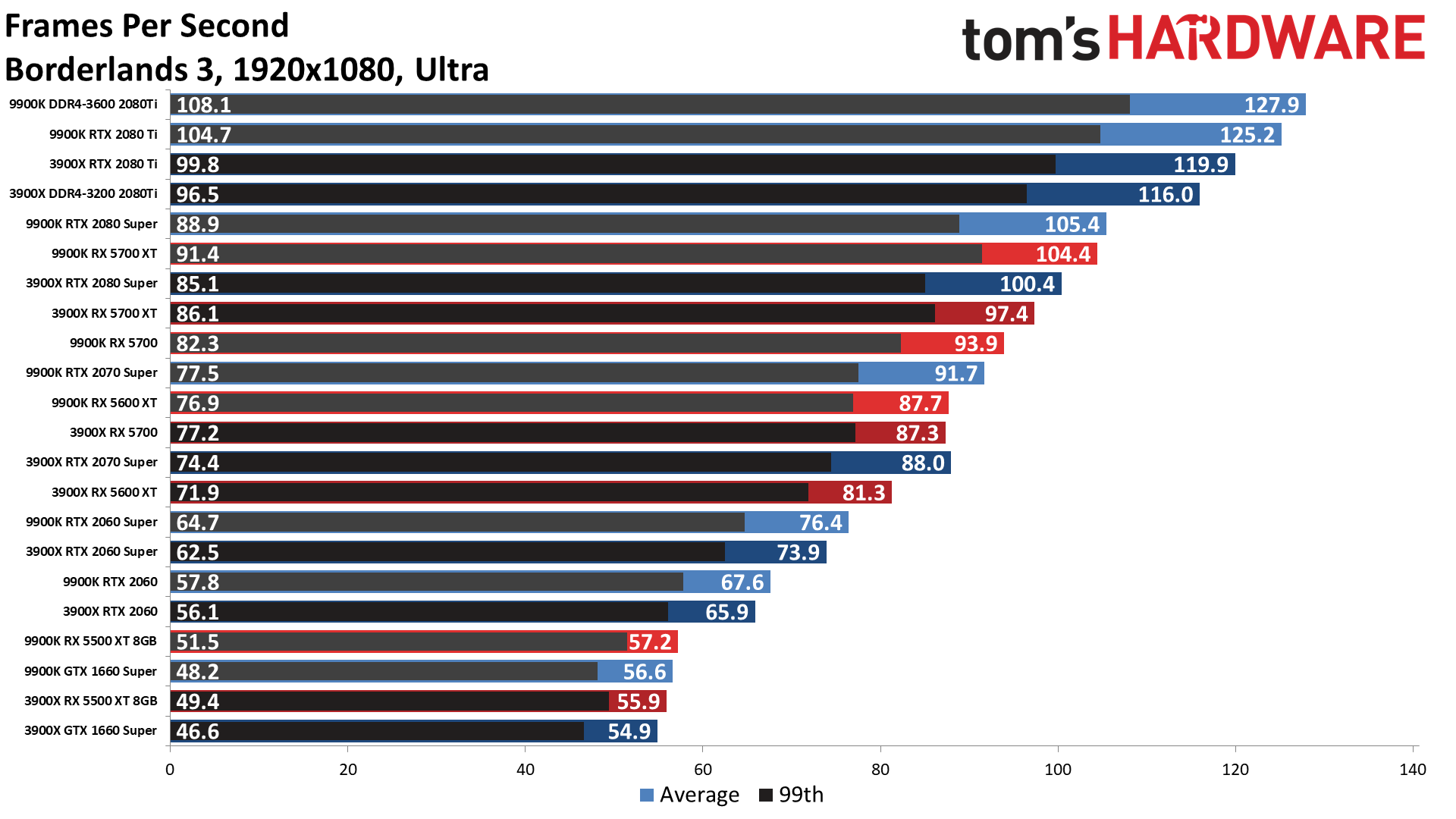

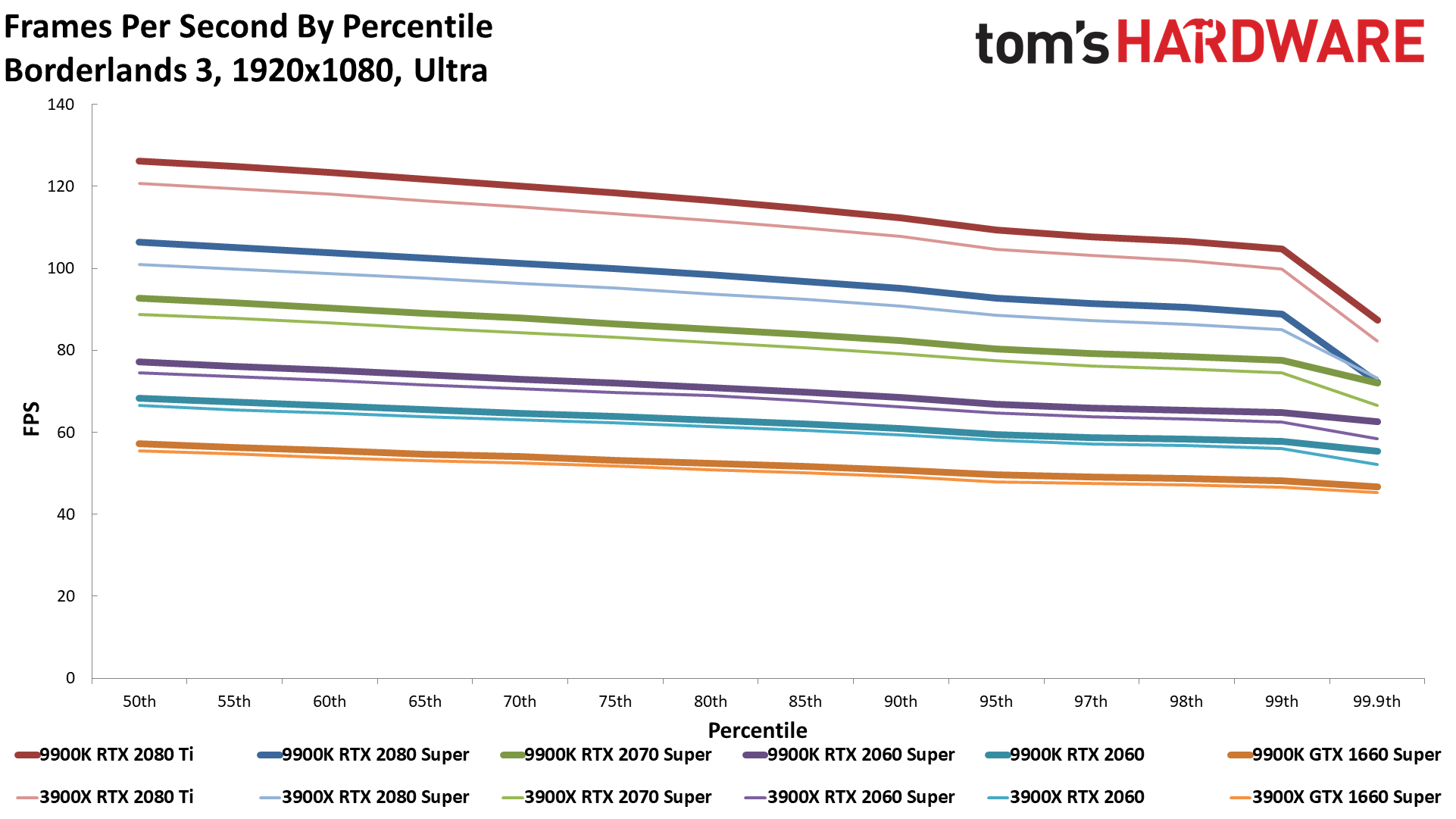

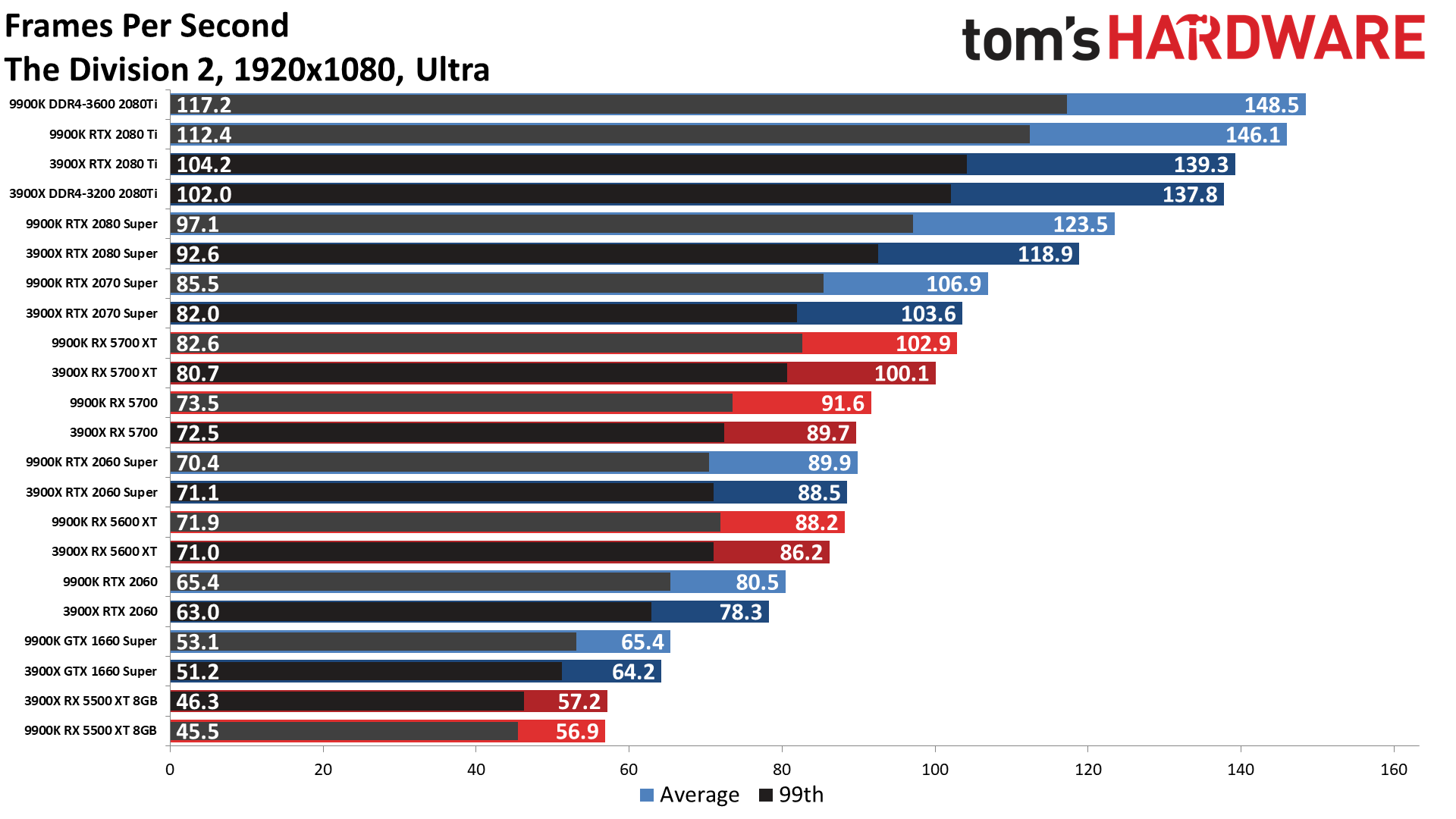

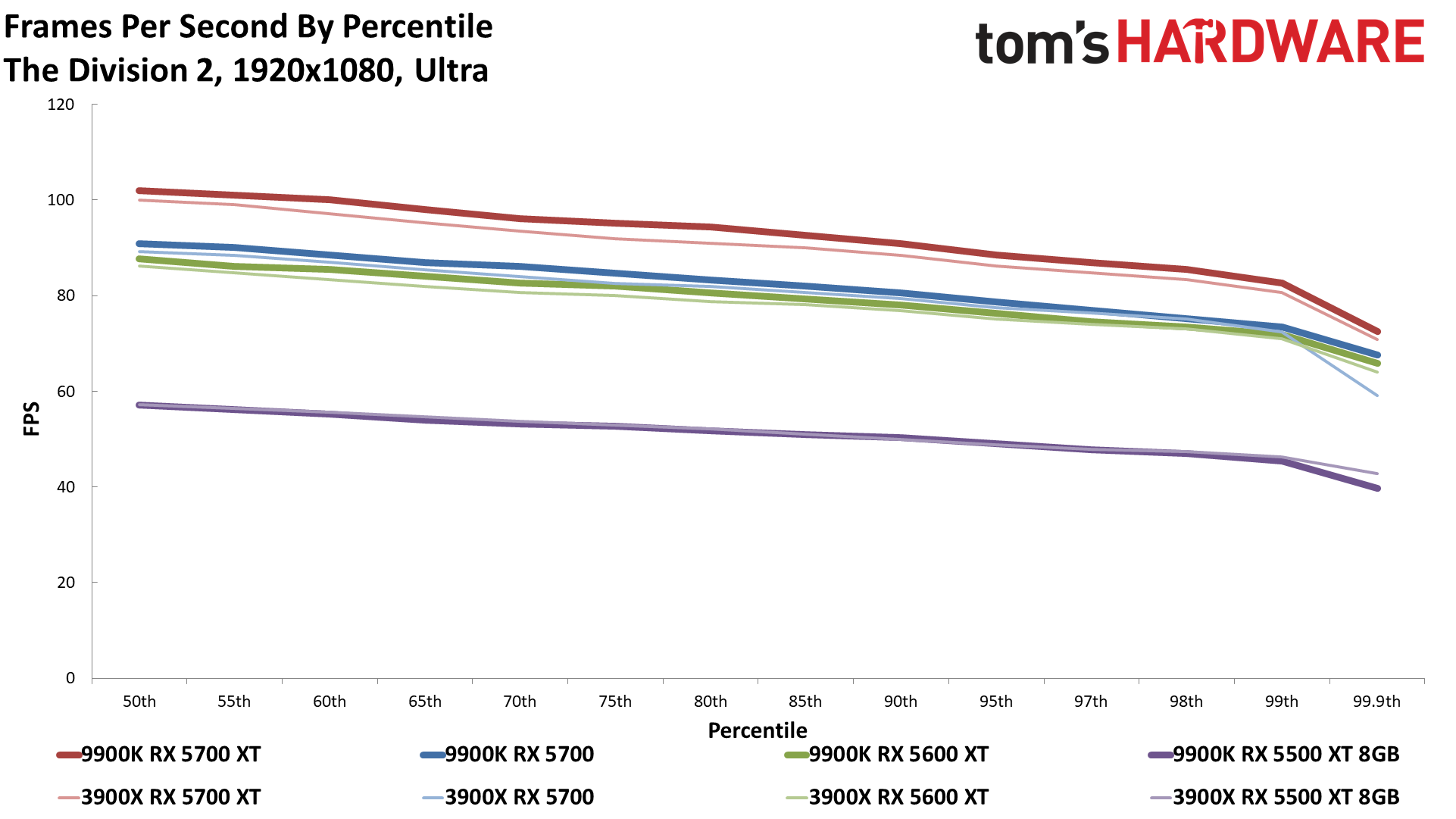

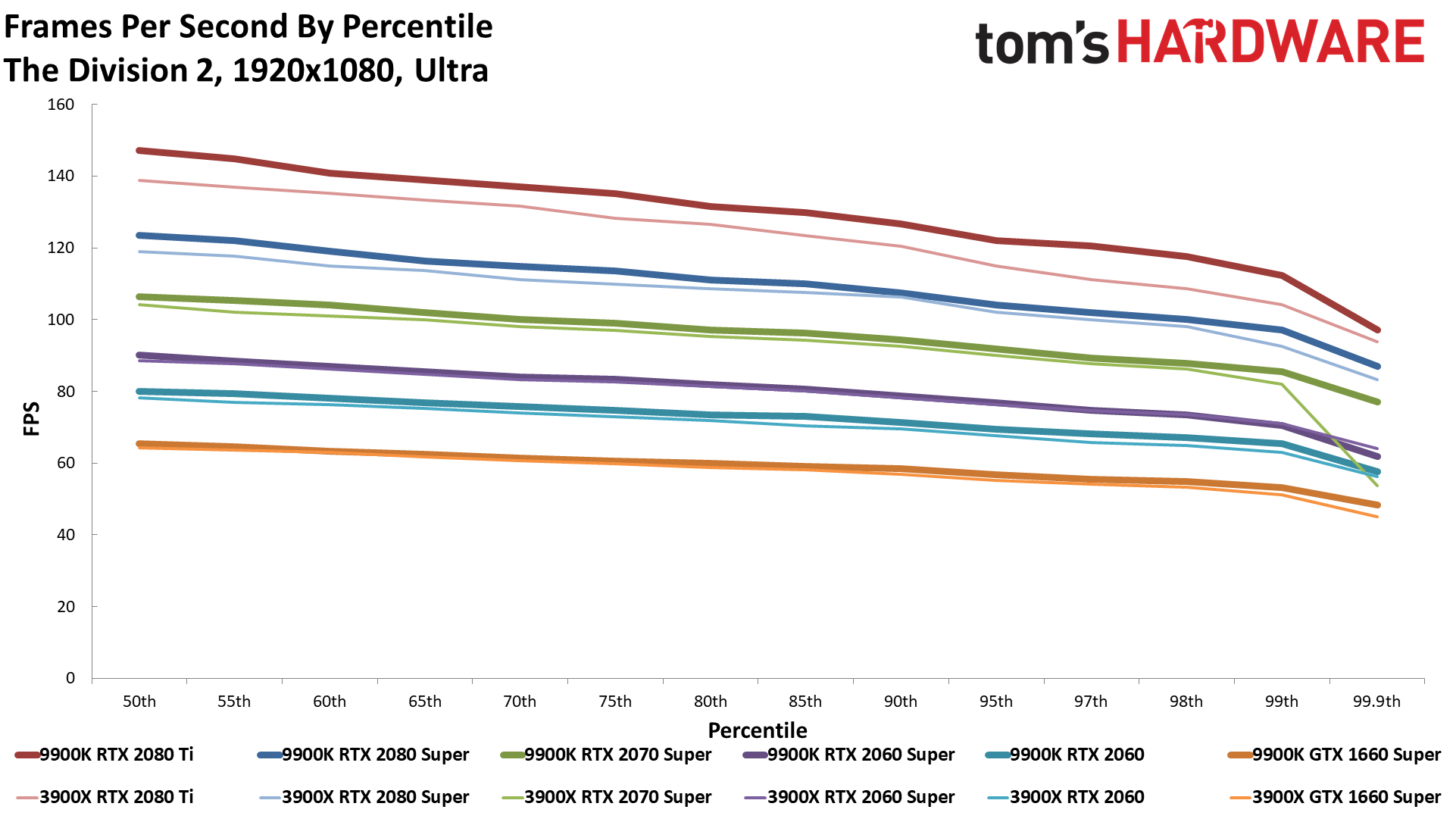

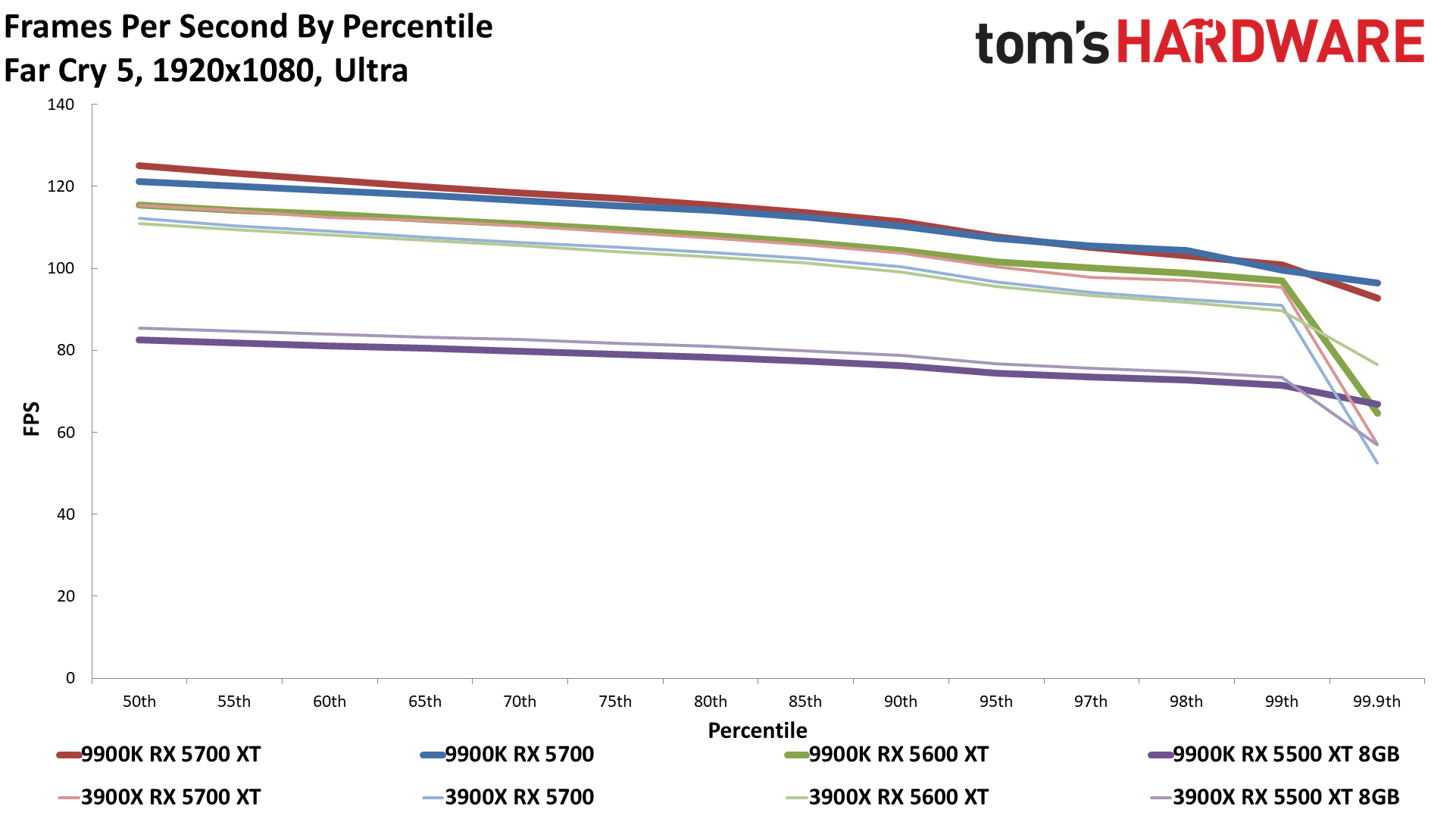

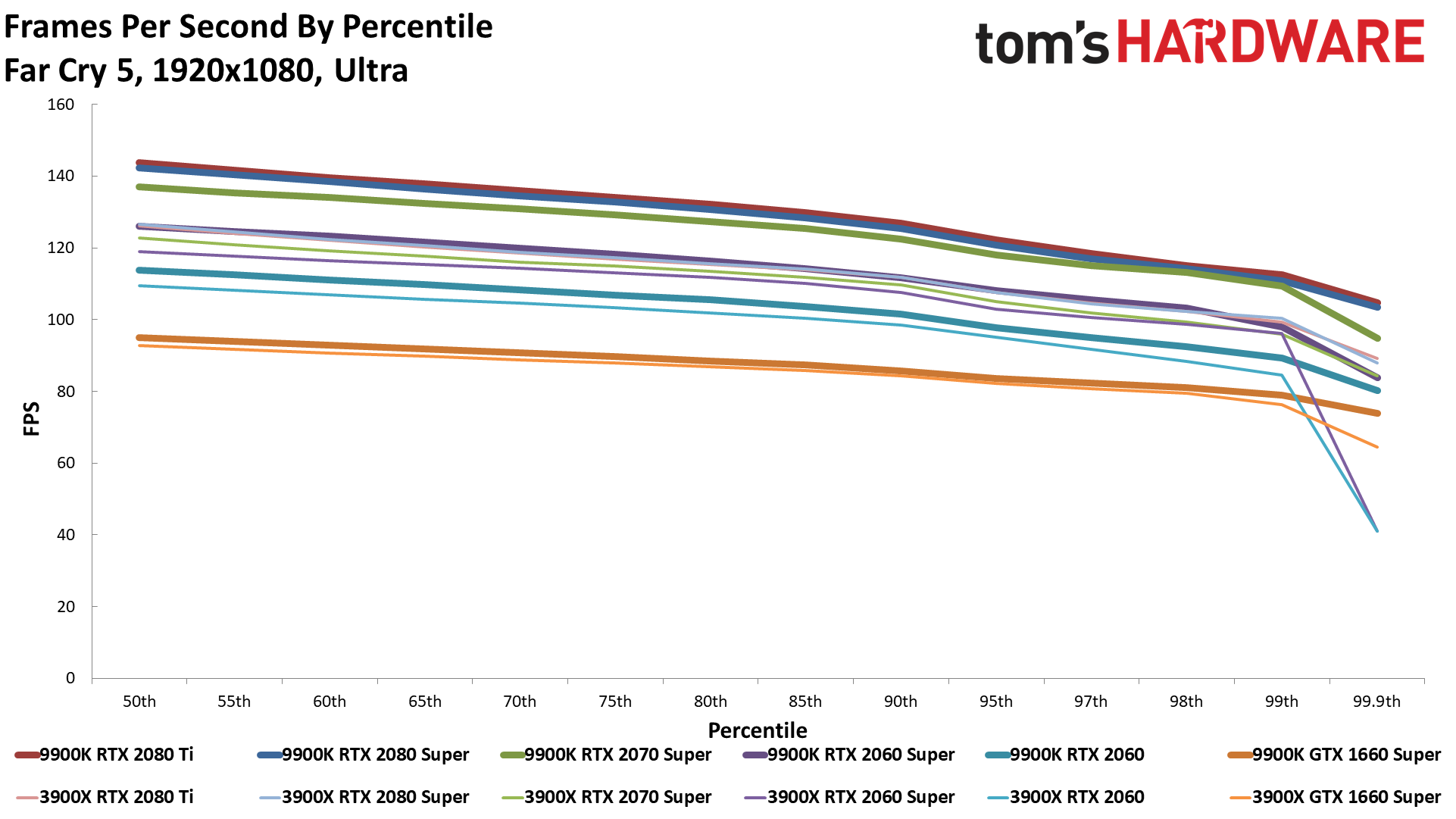

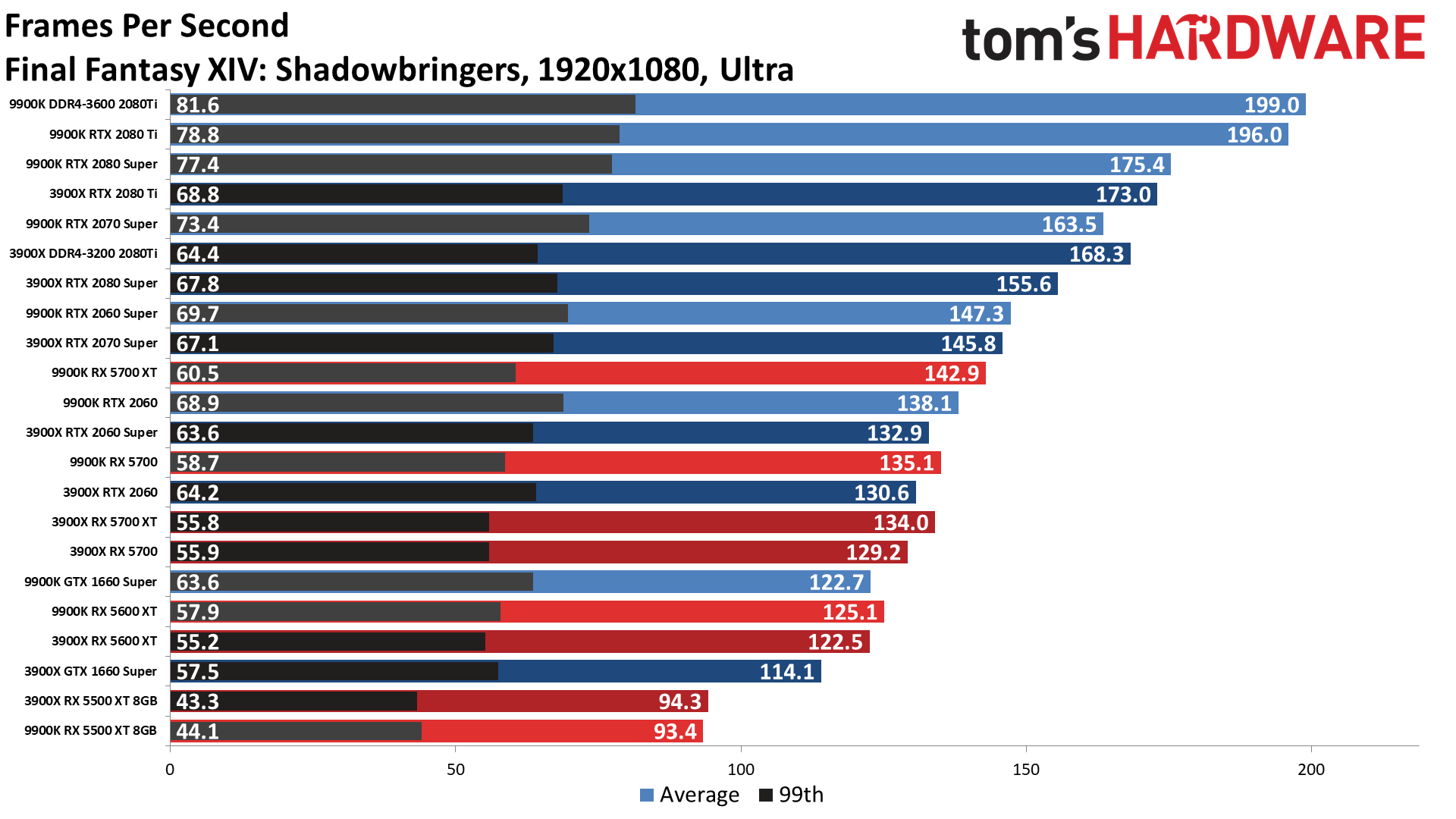

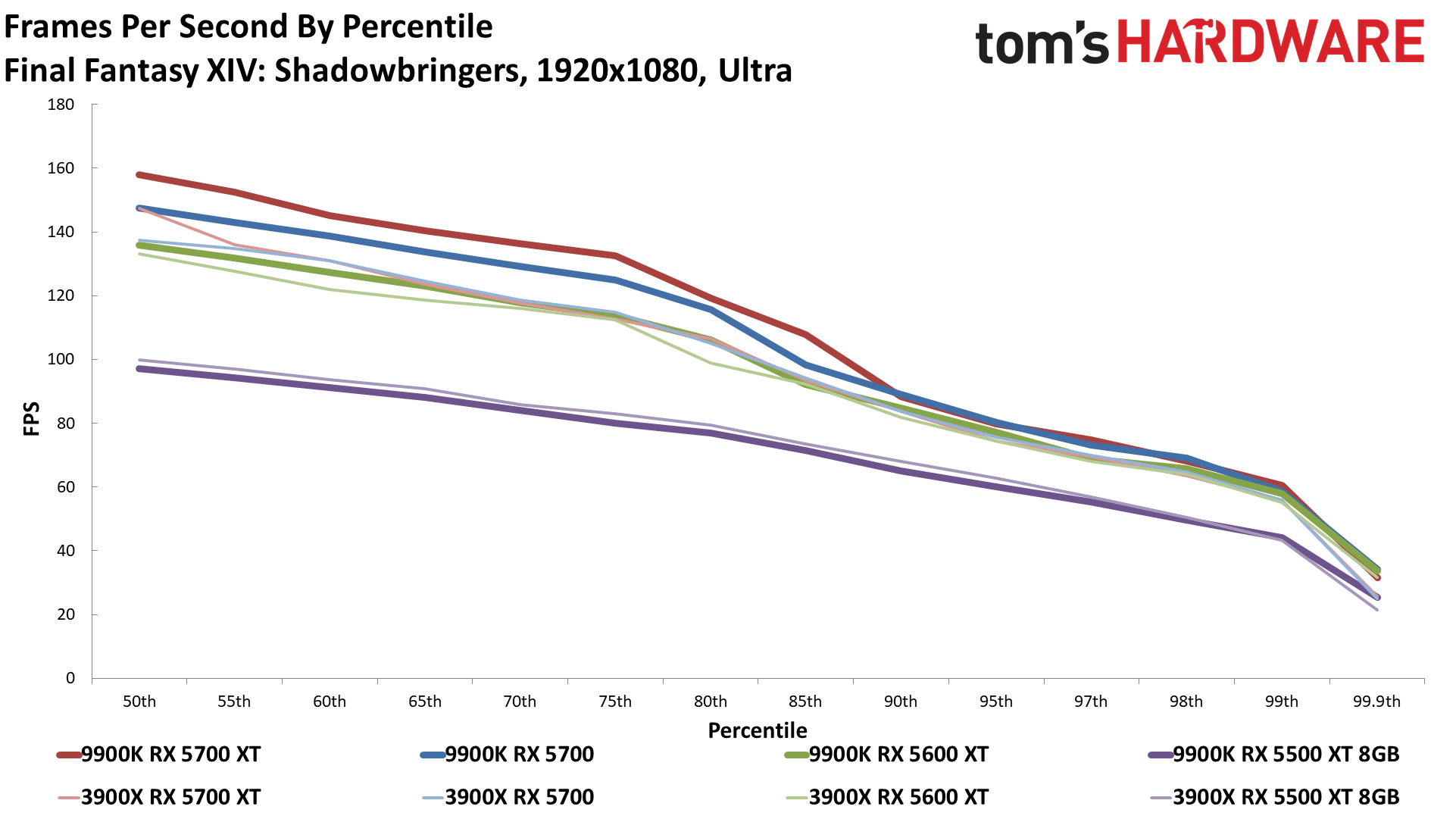

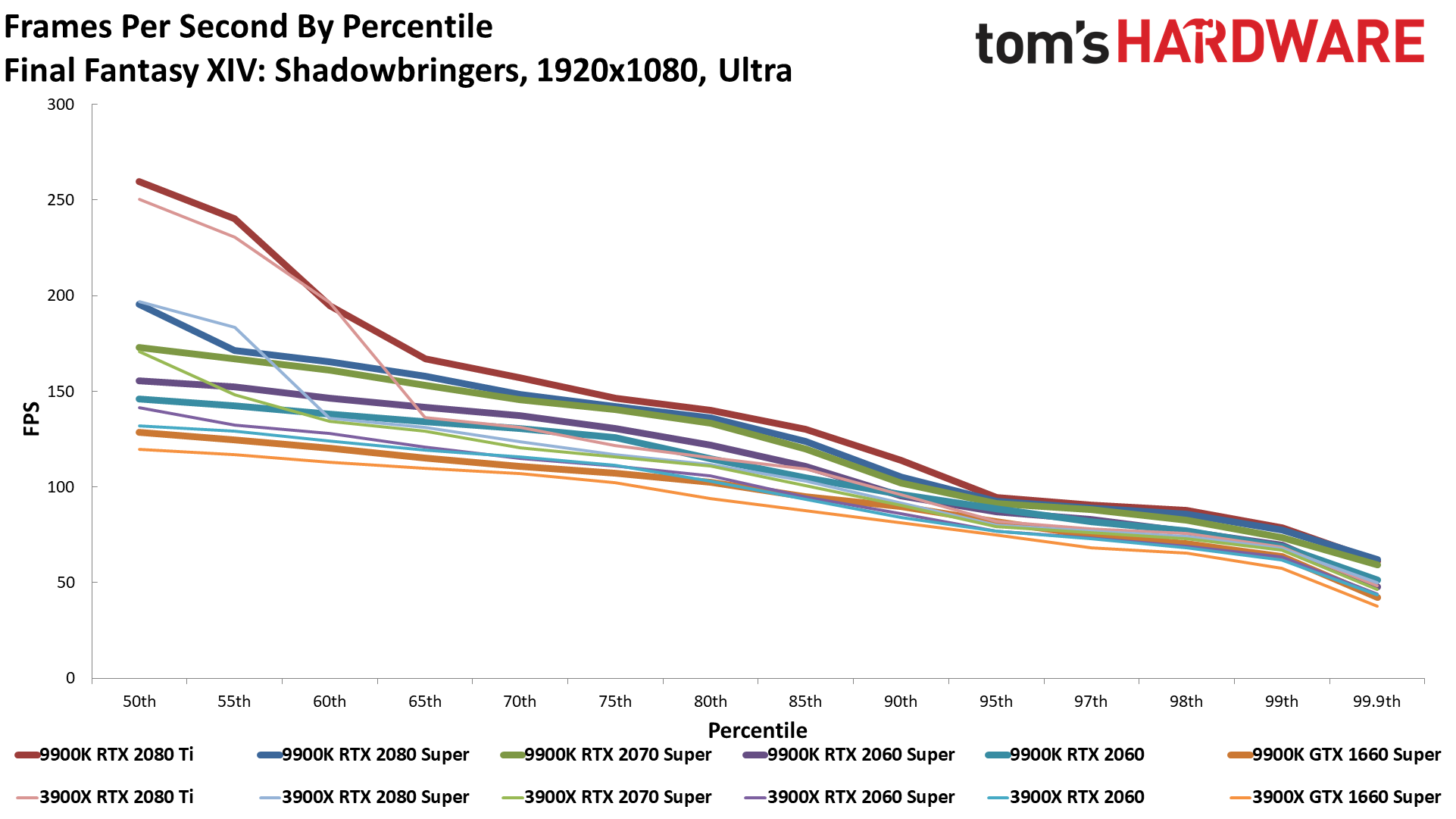

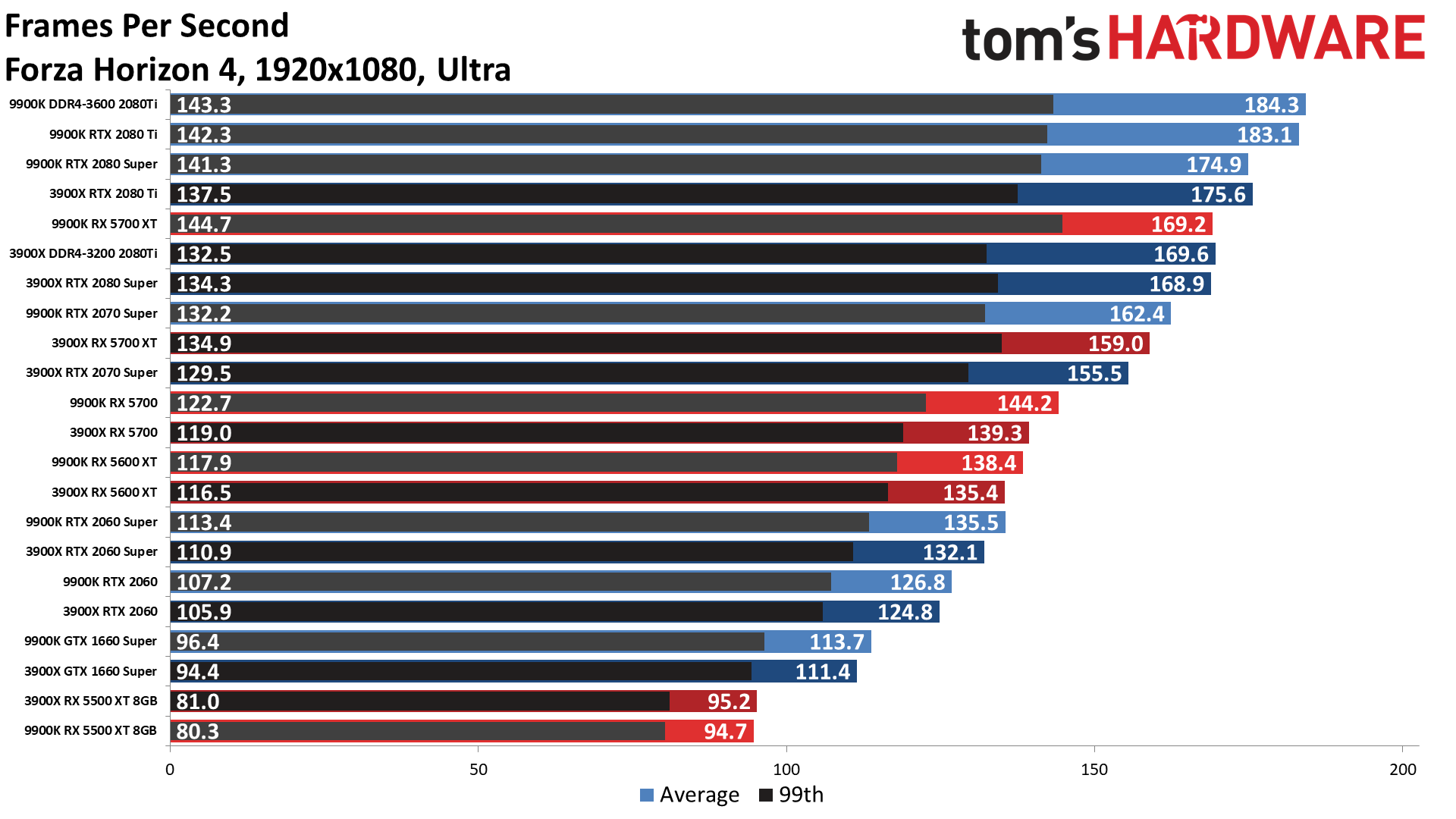

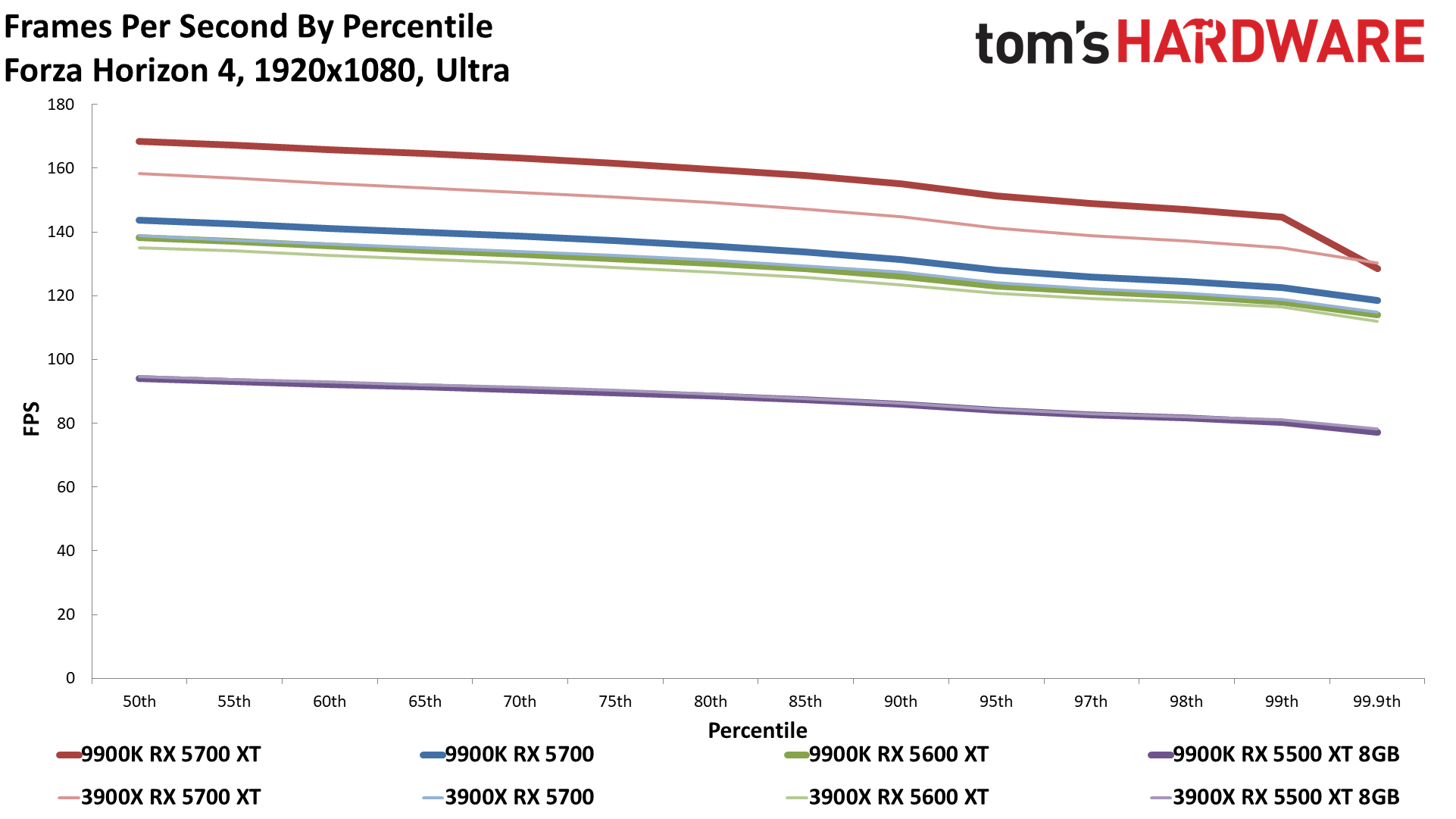

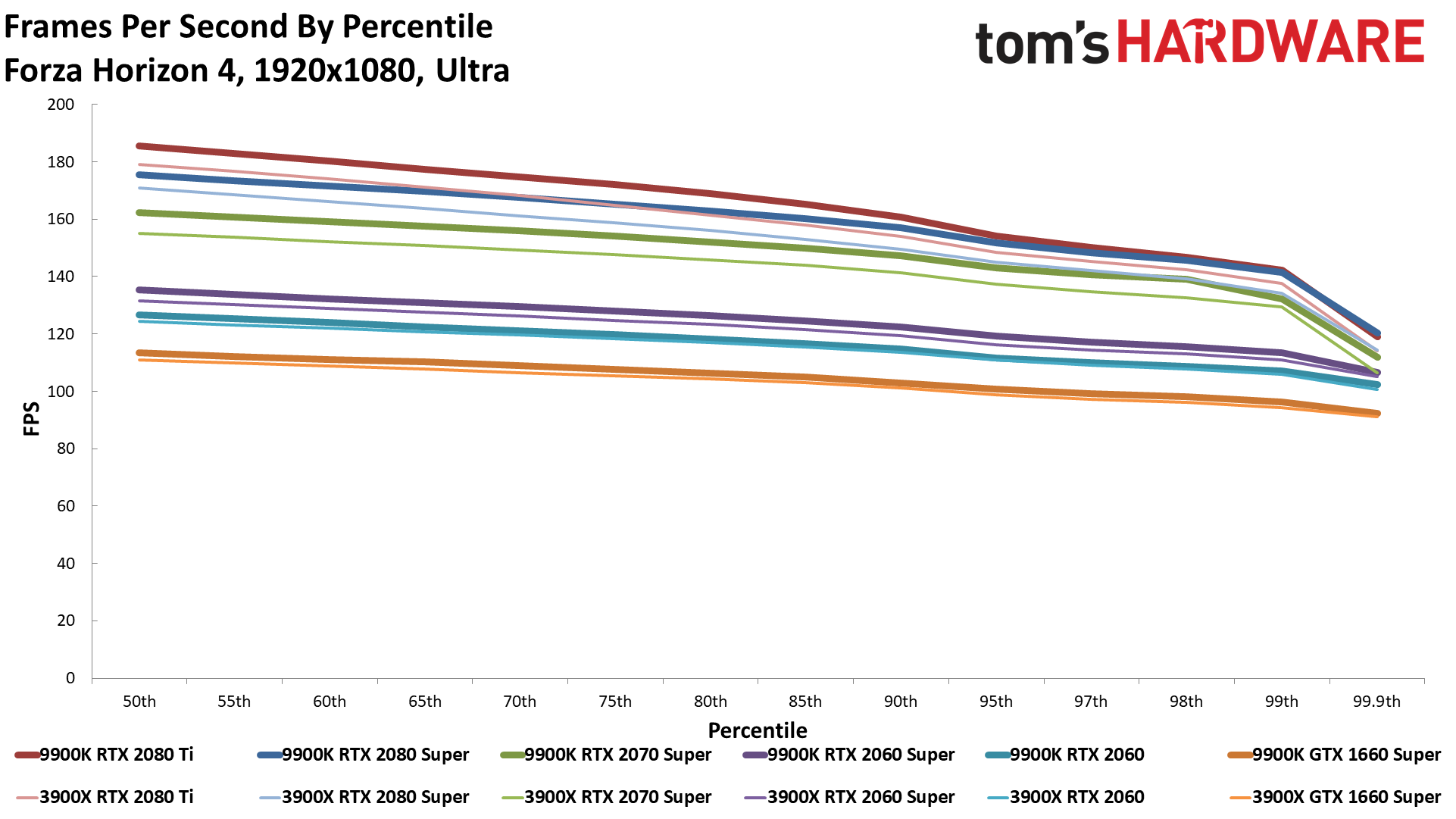

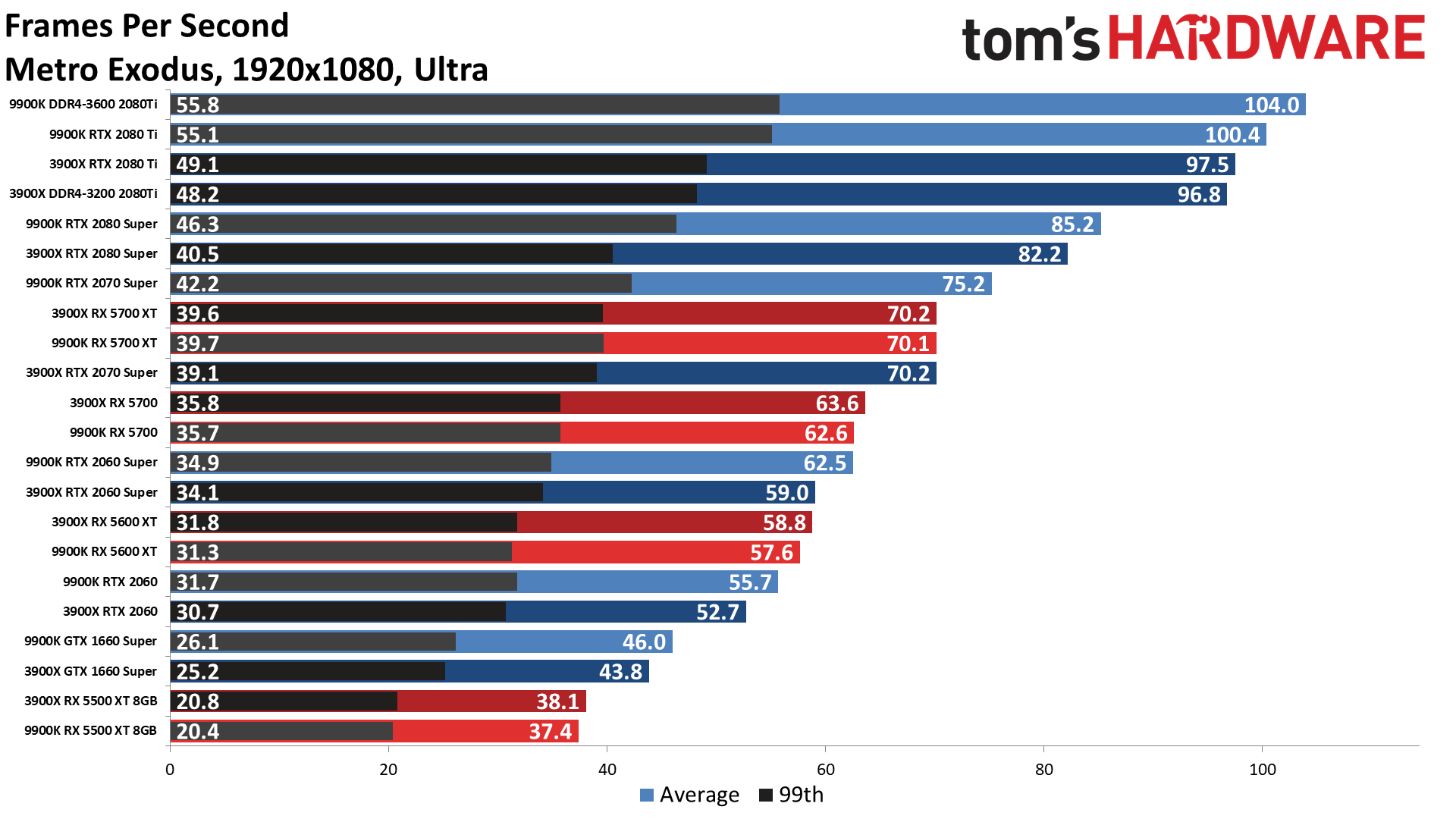

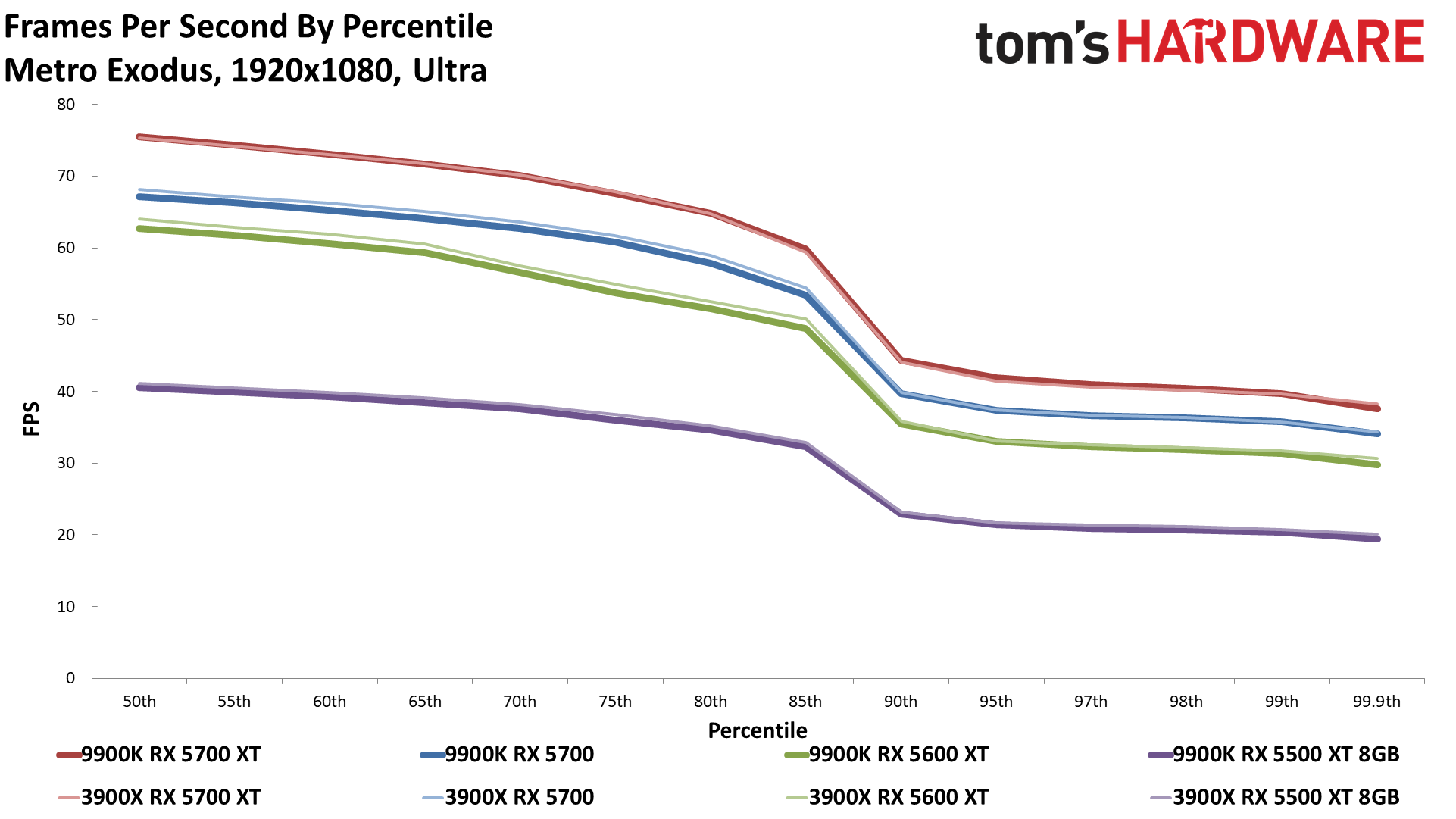

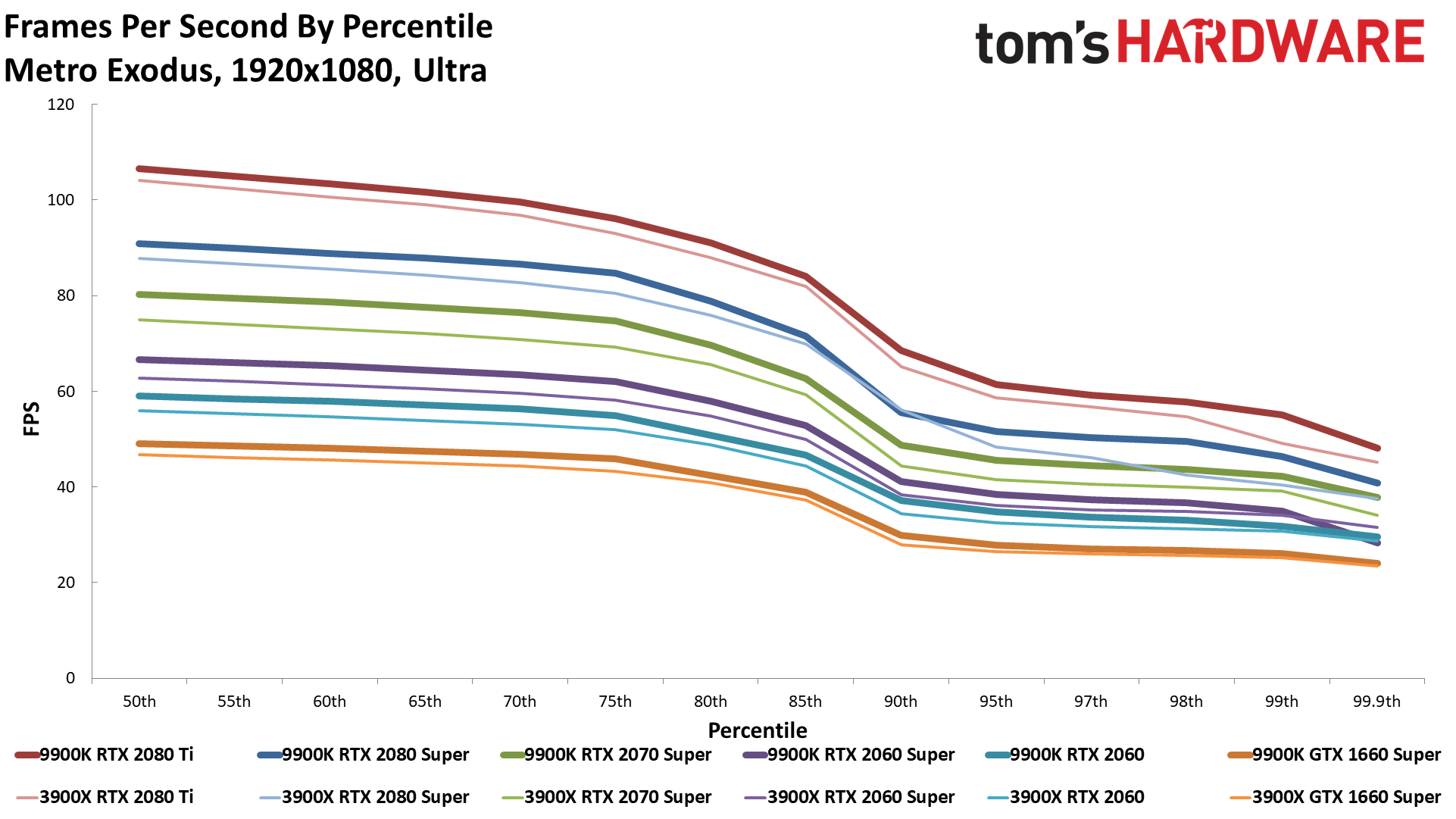

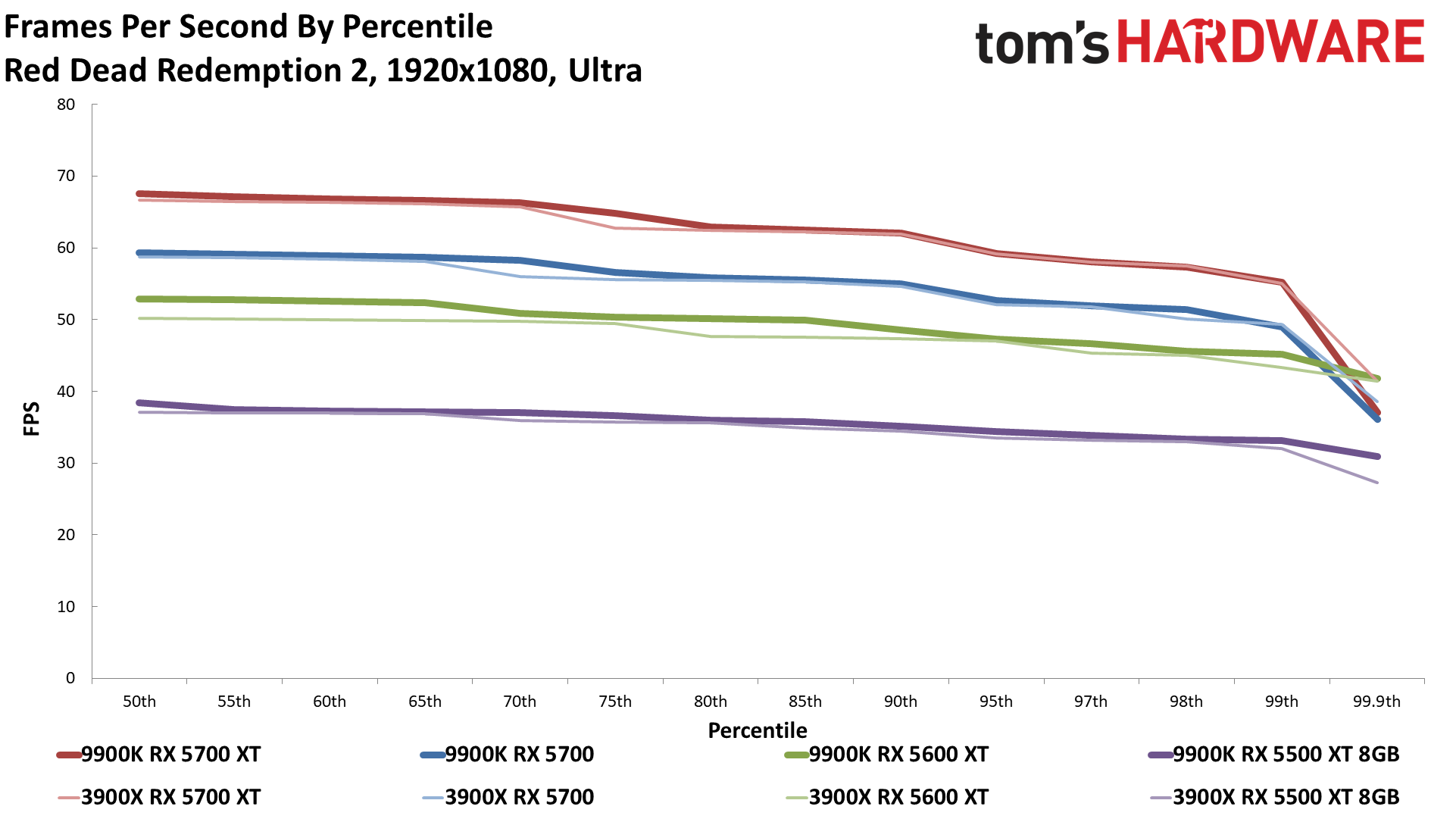

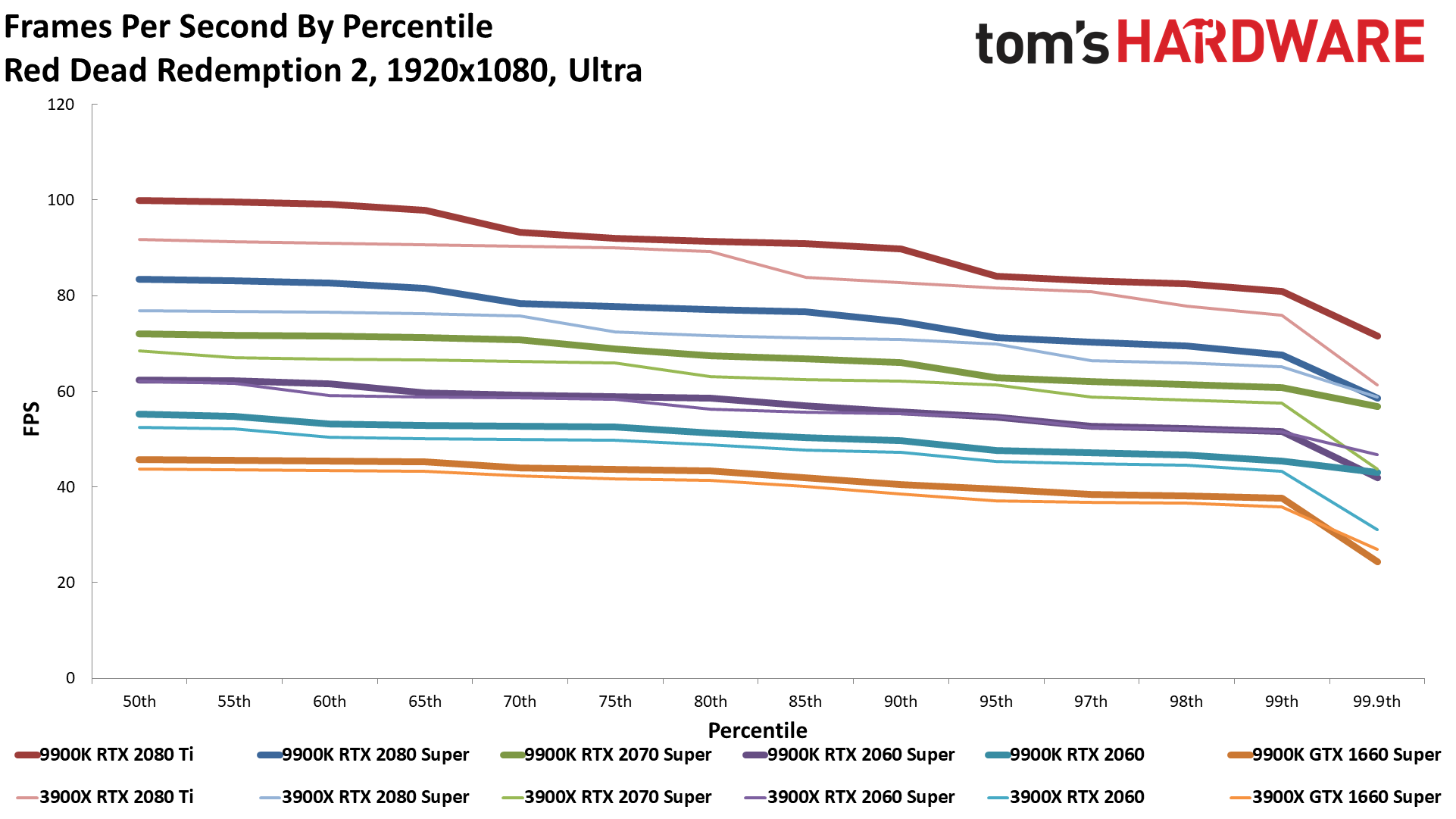

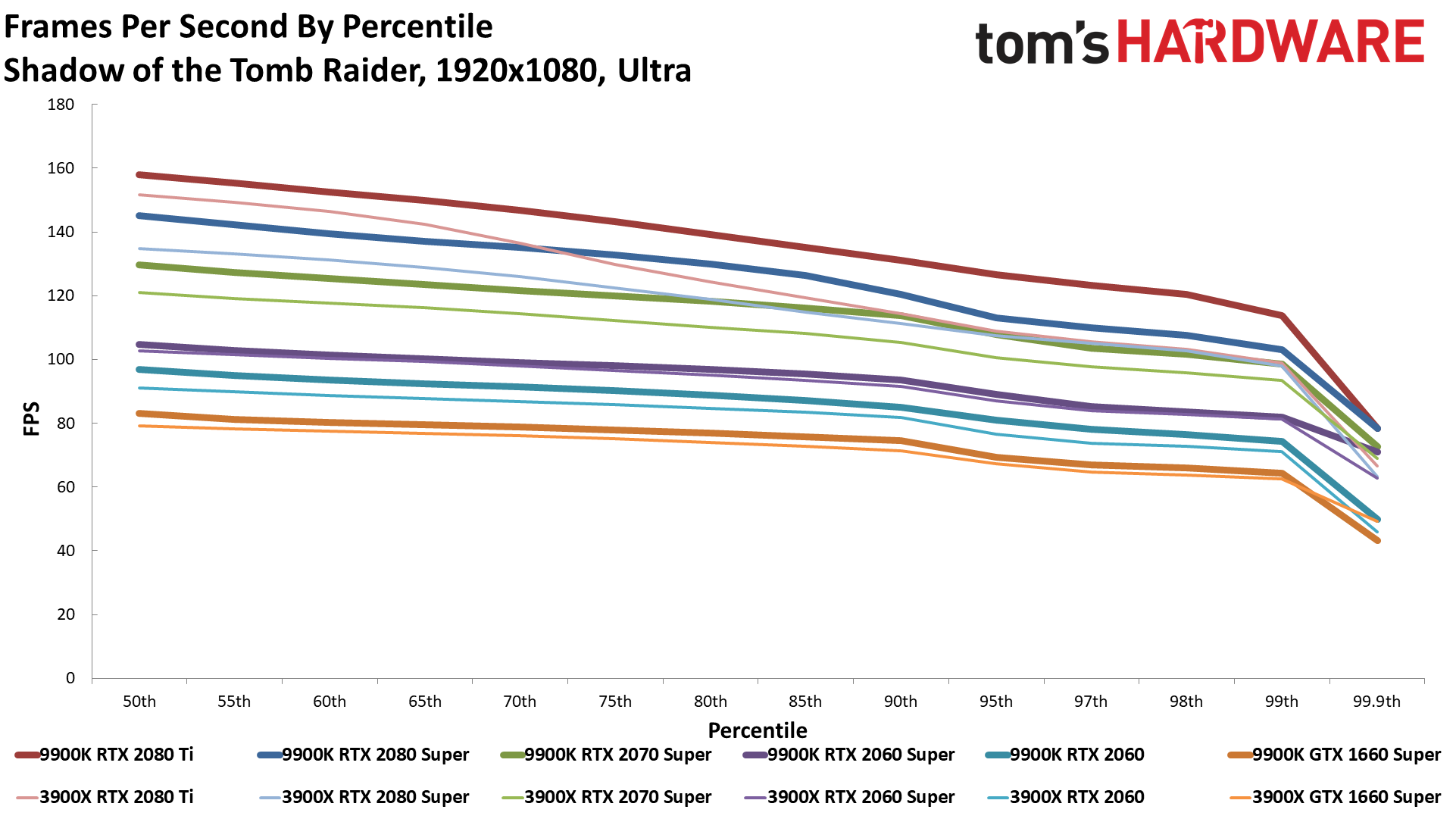

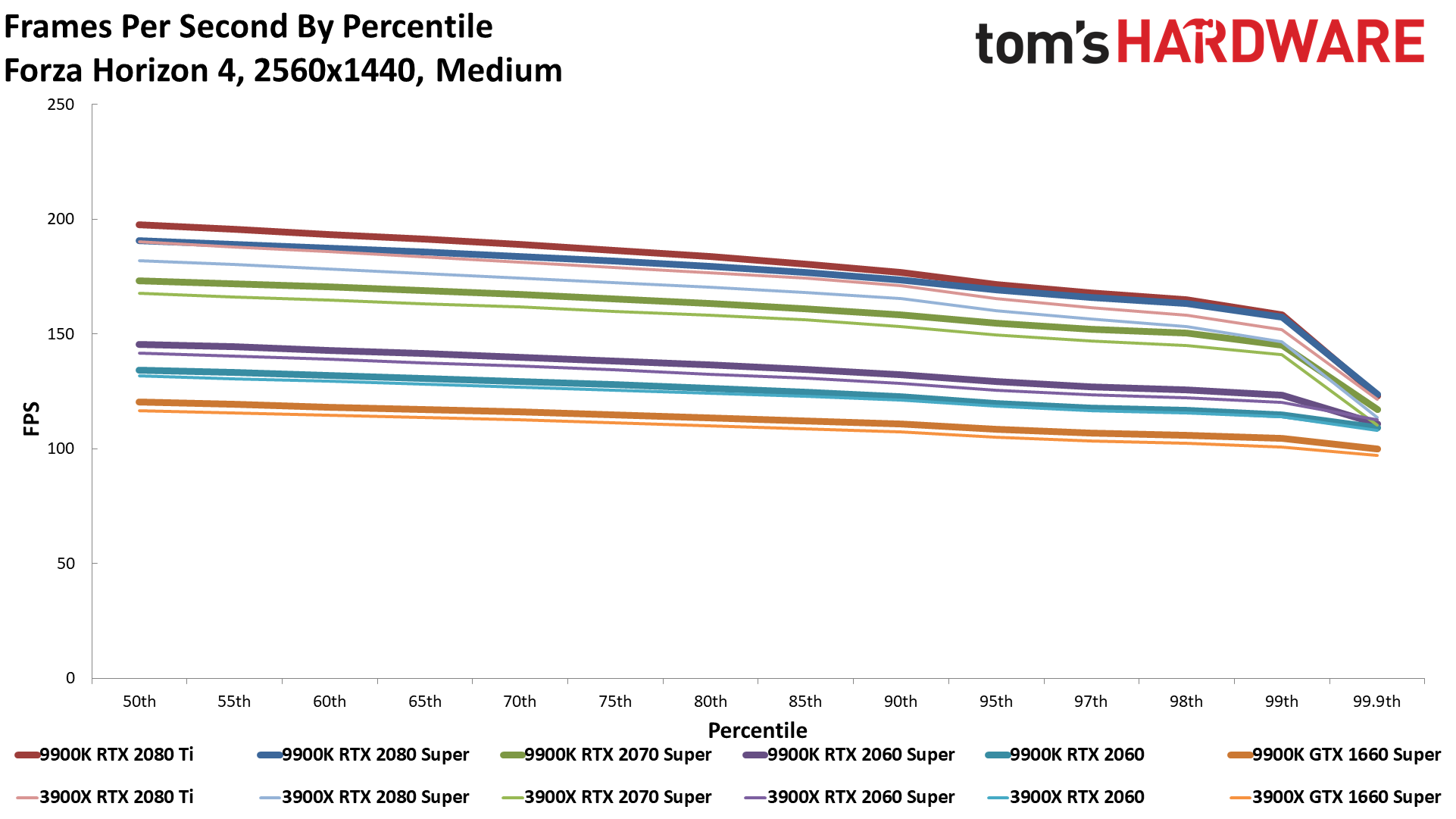

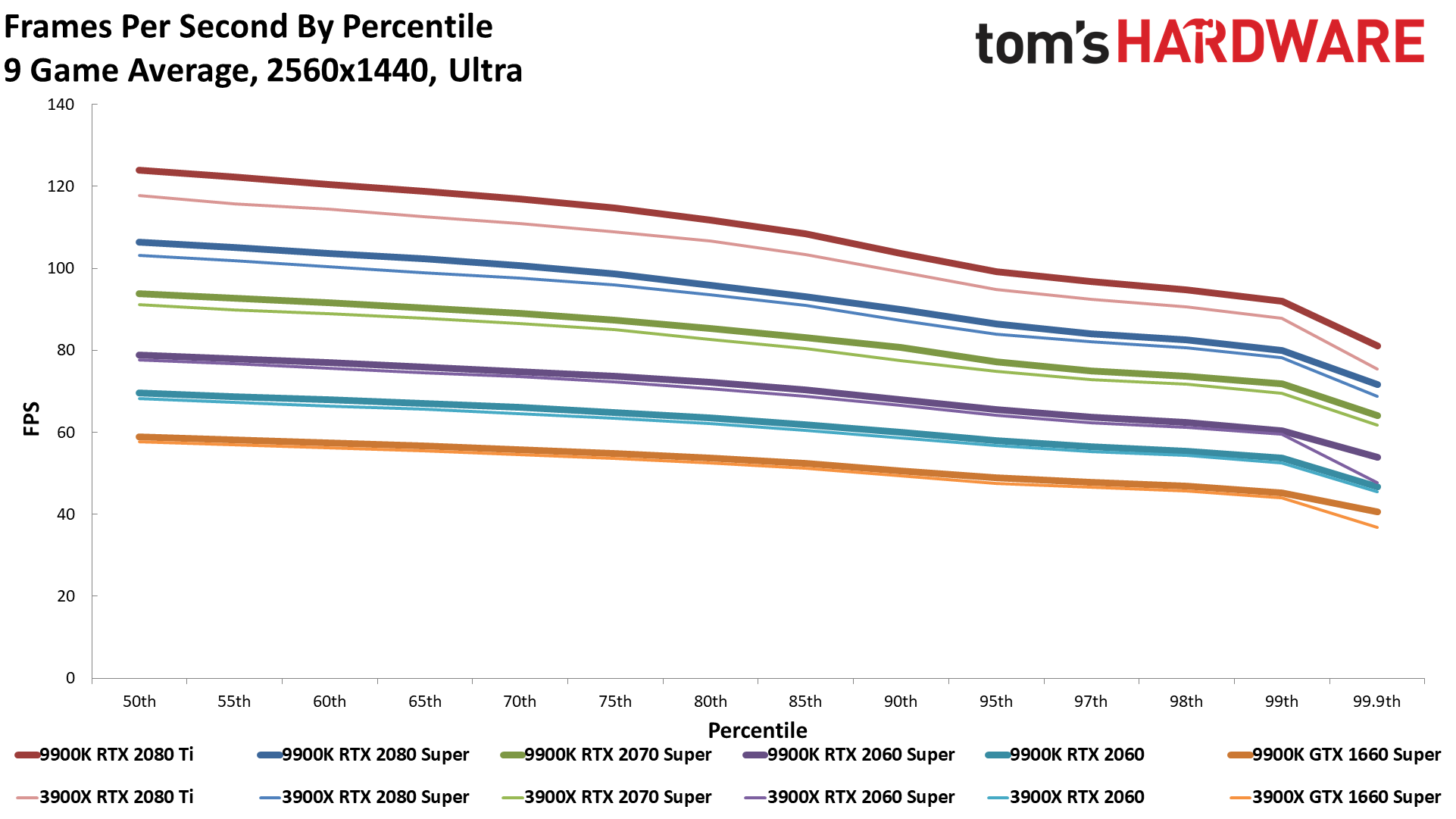

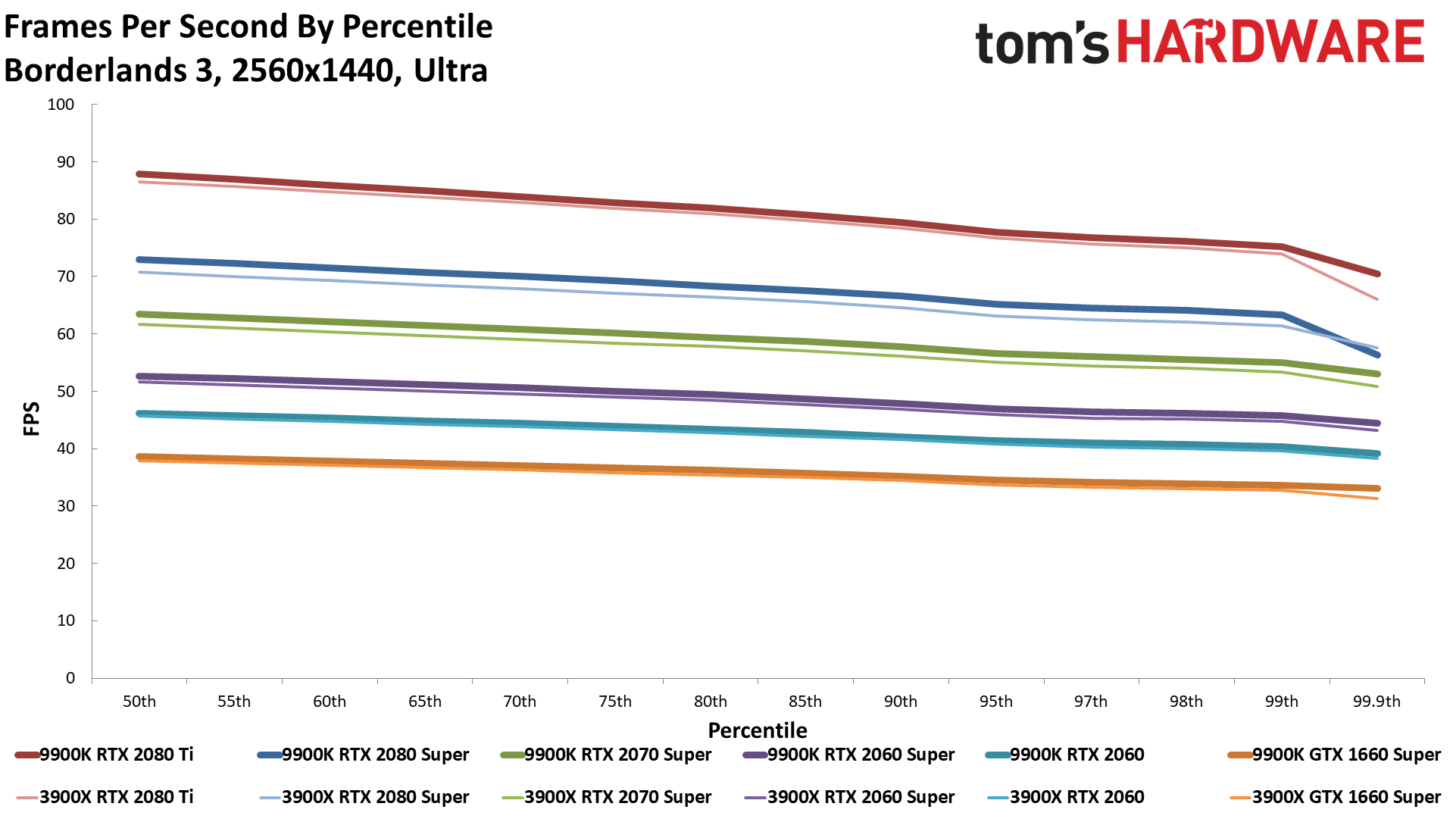

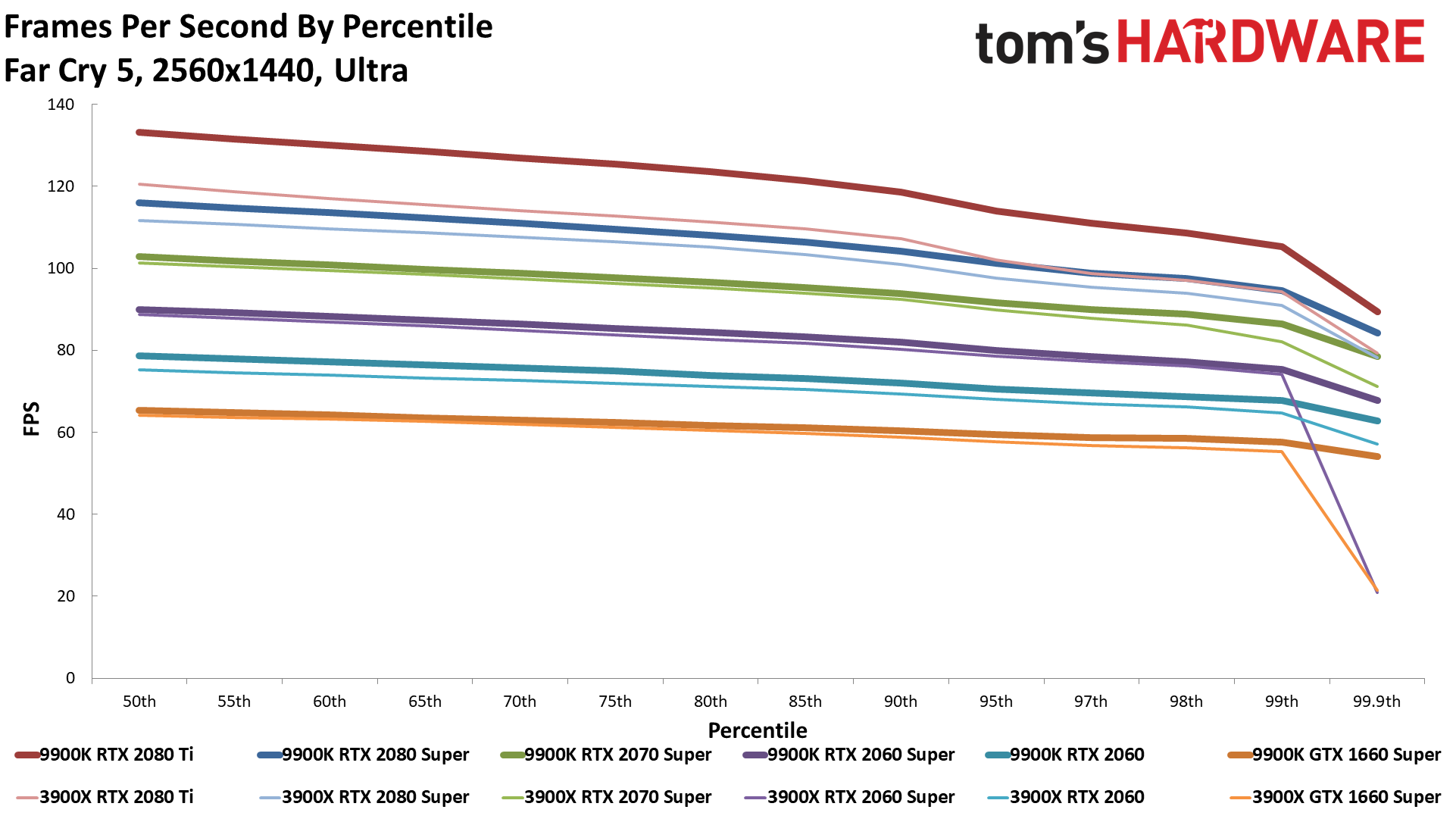

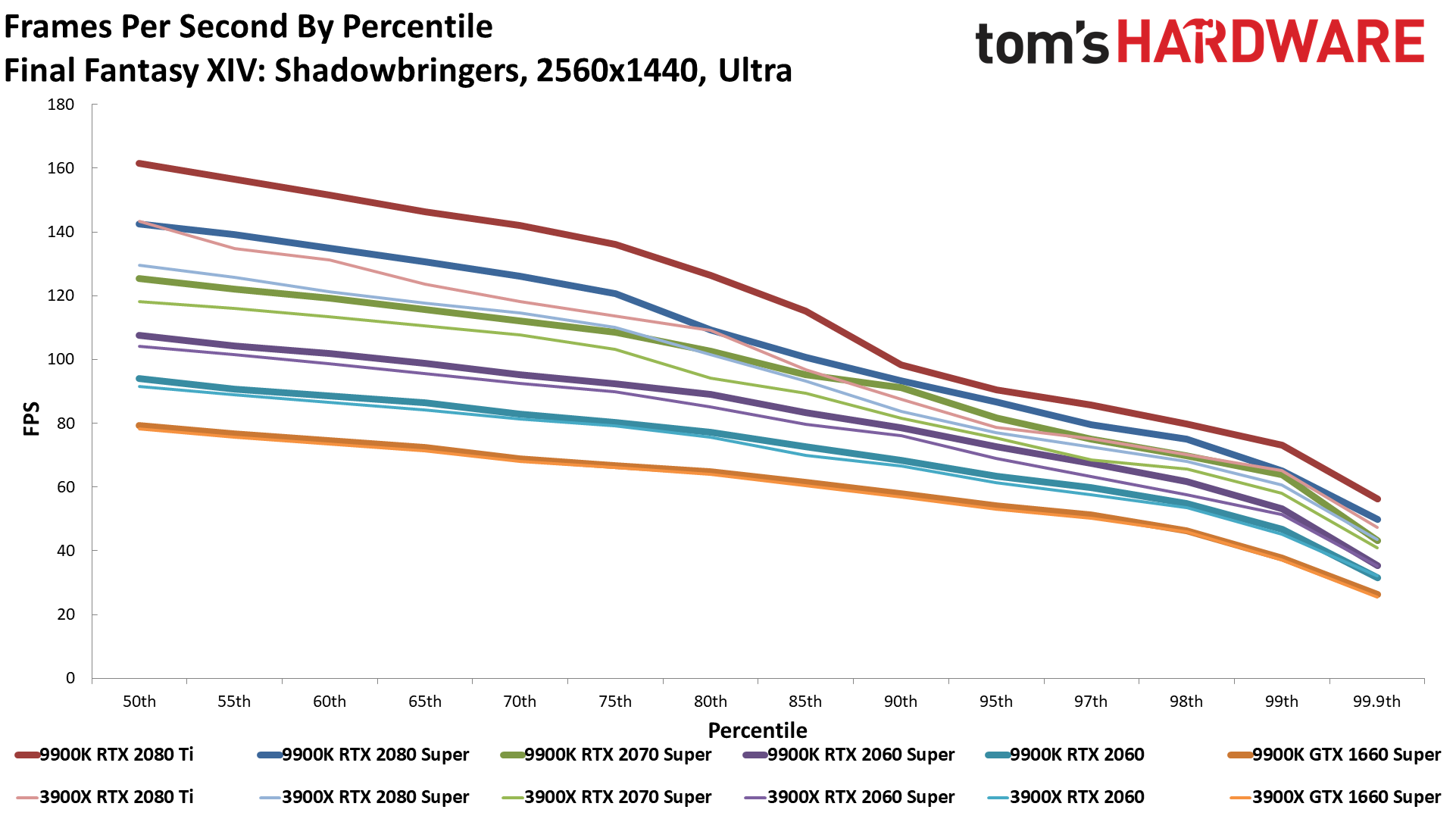

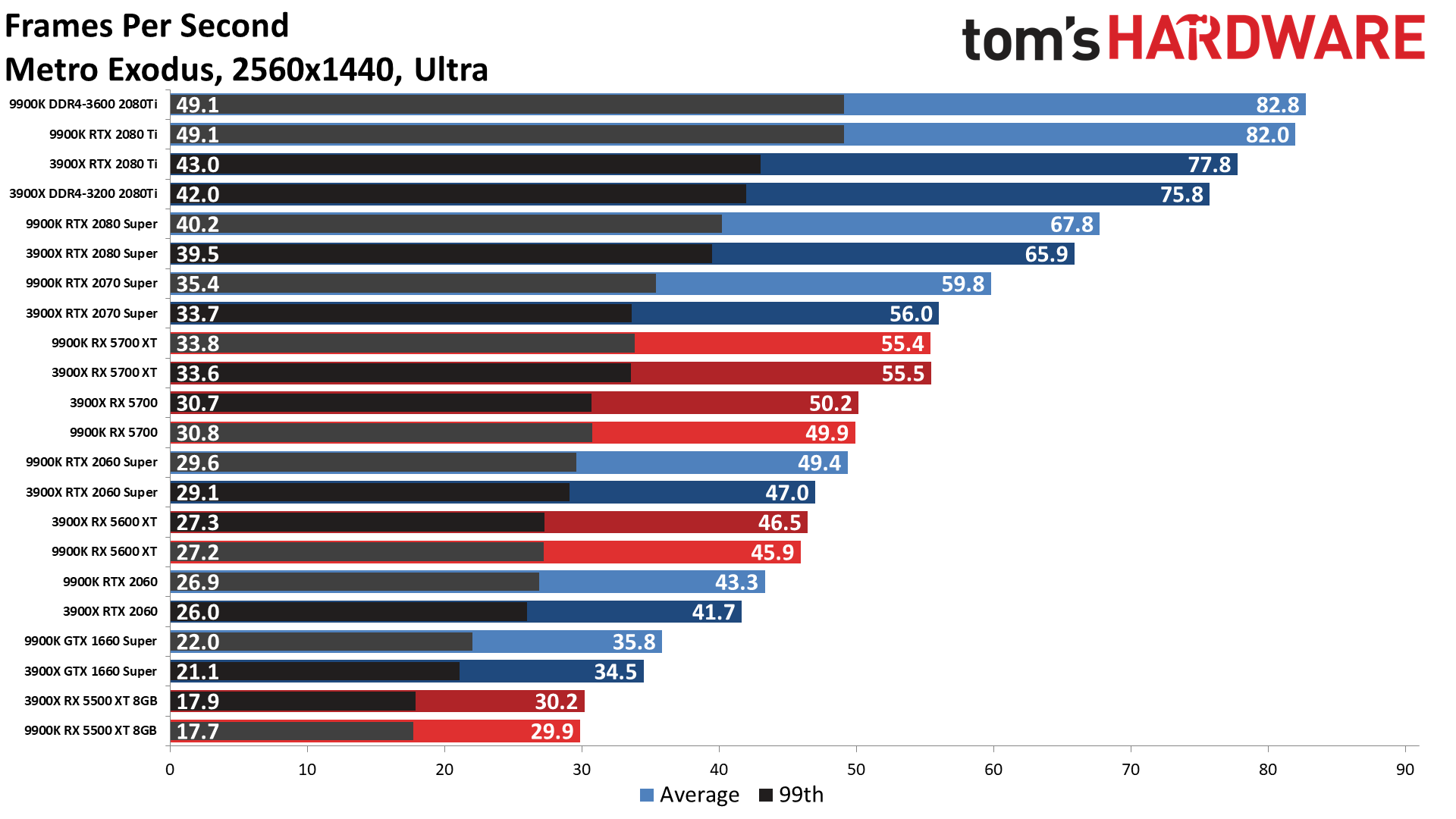

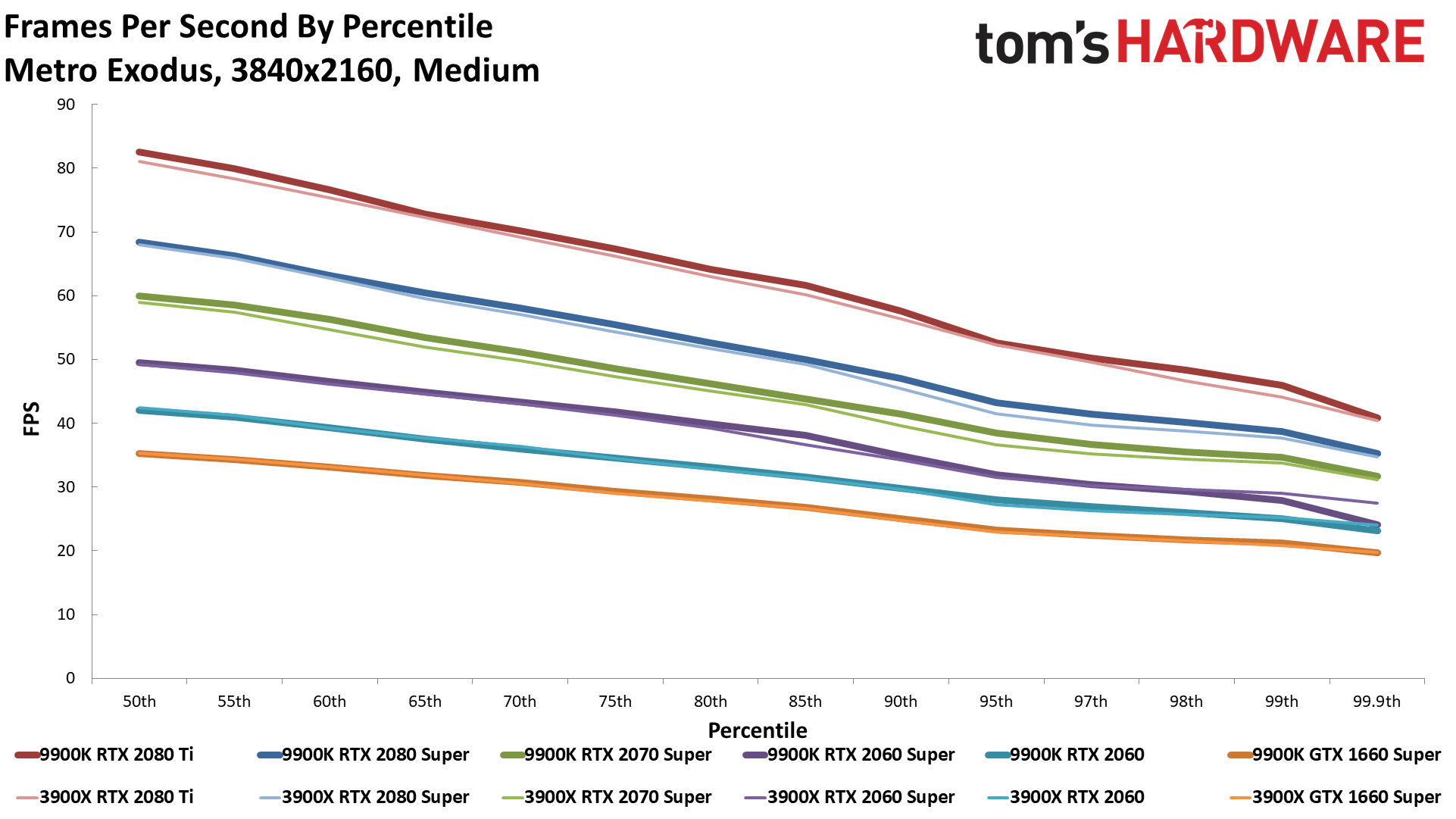

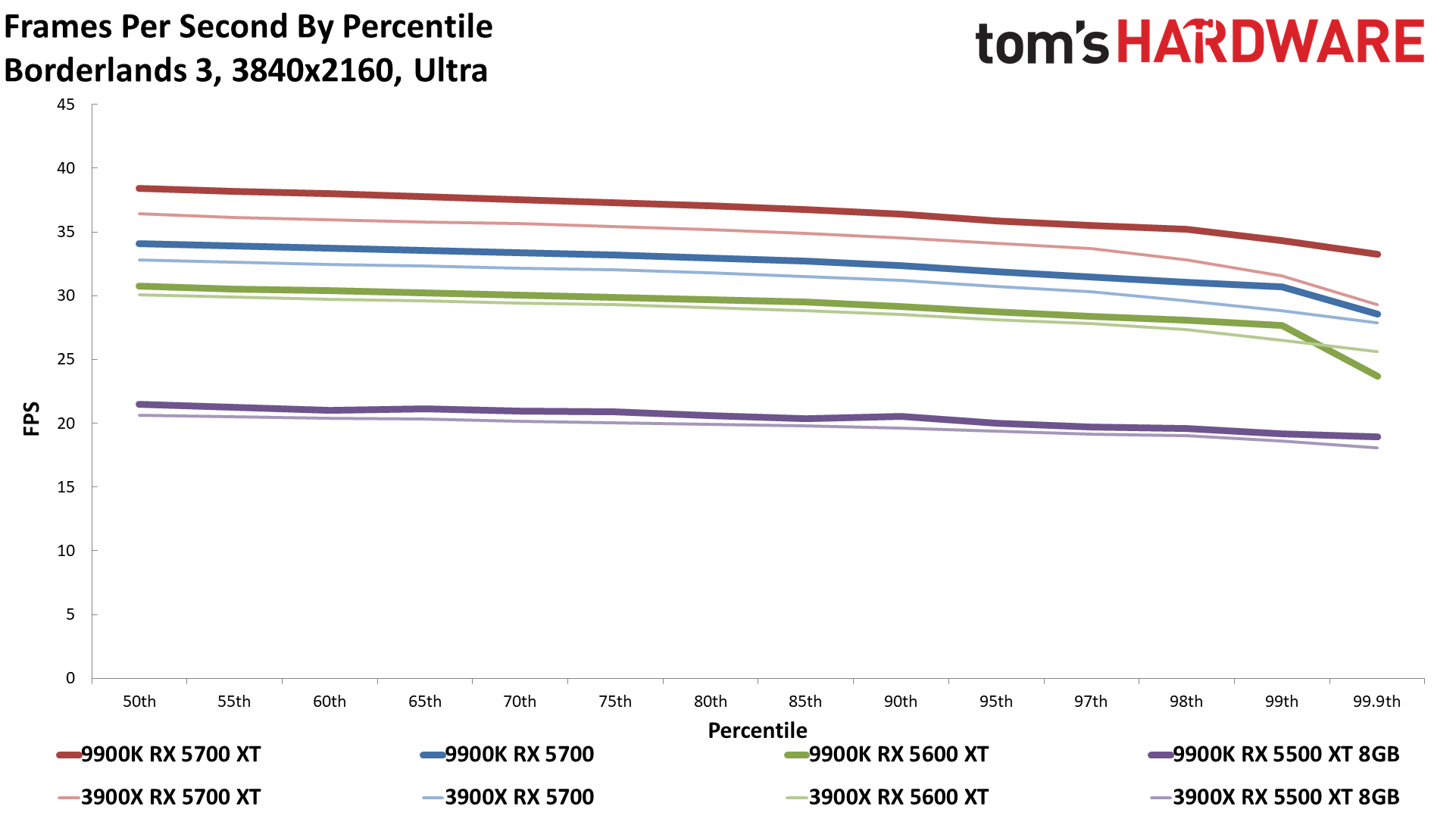

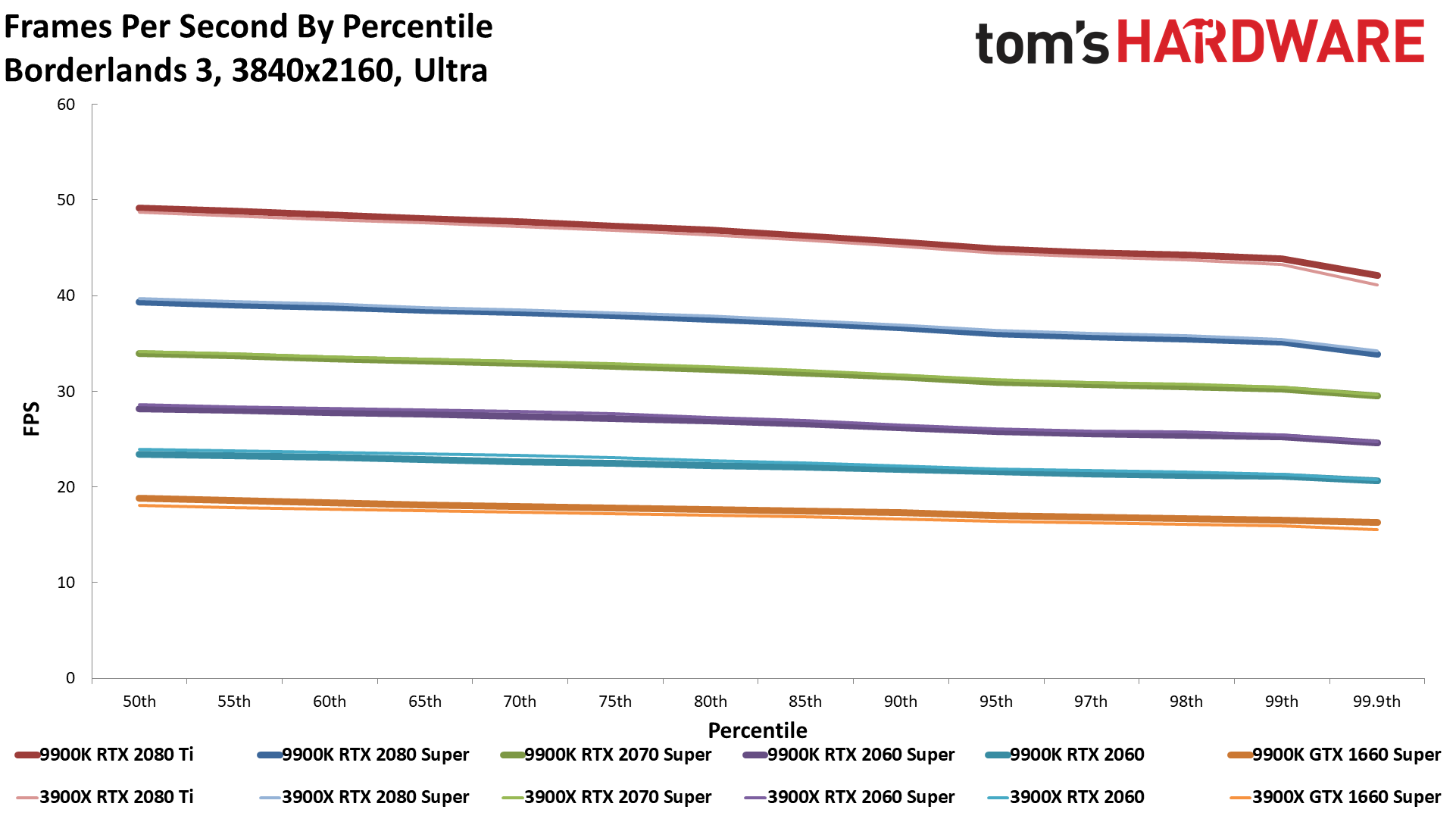

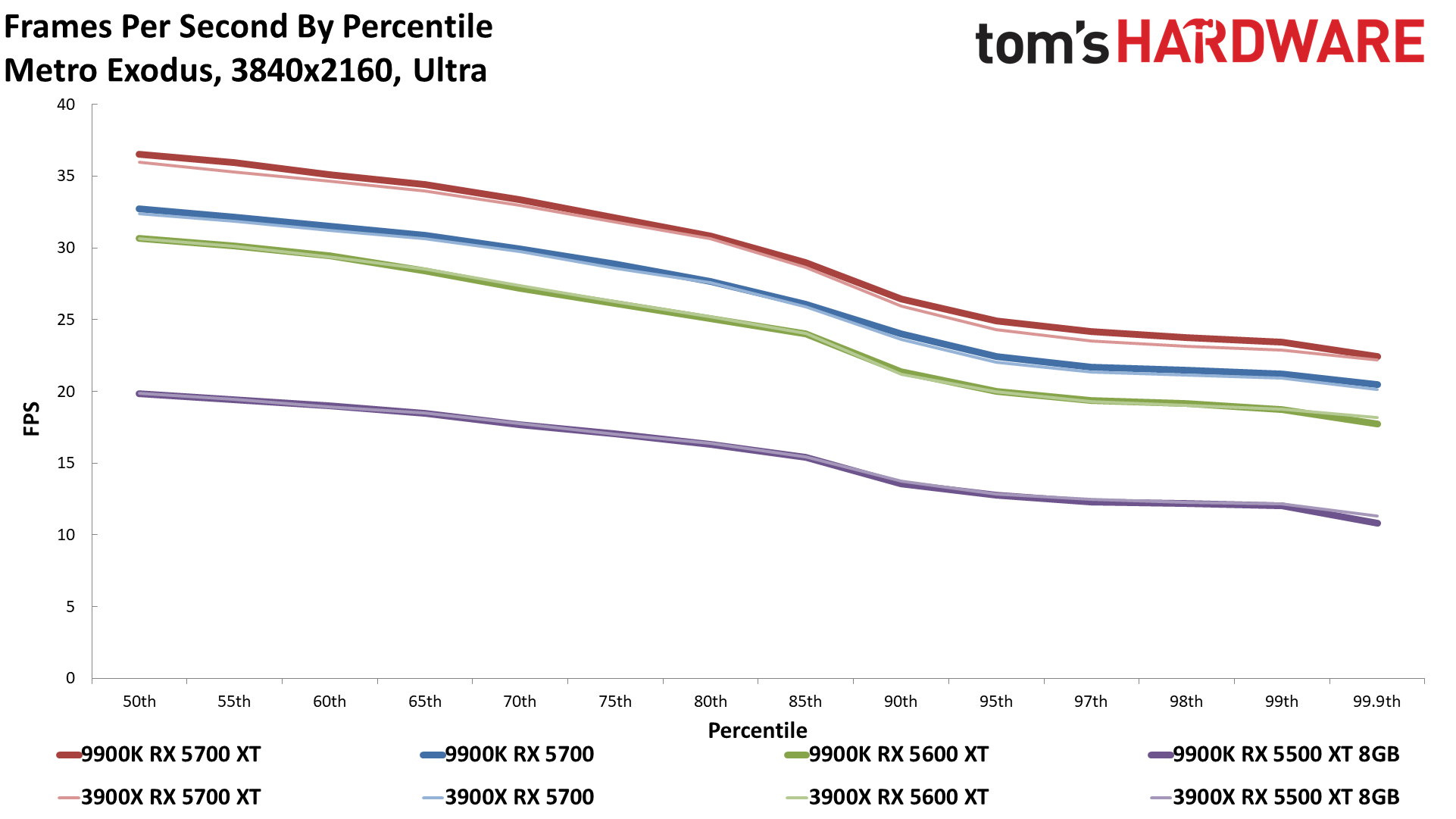

Our CPU reviews typically use 1080p ultra with an RTX 2080 Ti as the comparison point, but here we're looking at a slightly different set of games than usual. There's now Ashes of the Singularity to put a bigger focus on cores and threads, for example. Intel's overall lead was 9.4% at 1080p medium, and it drops to 6.6% with ultra quality. As you'd expect, the gap between the CPUs shrinks even further with lesser GPUs.

The 2080 Super and 2070 Super still showed about a 6% lead for Intel. The 2060 Super, RTX 2060, GTX 1660 Super, RX 5700 XT, and RX 5700 all ended up with a 3-4% Intel lead, but mostly it's just splitting hairs. And the AMD RX 5600 XT and RX 5500 XT 8GB are within 2%, with the 3900X again coming out slightly ahead with the 5500 XT.

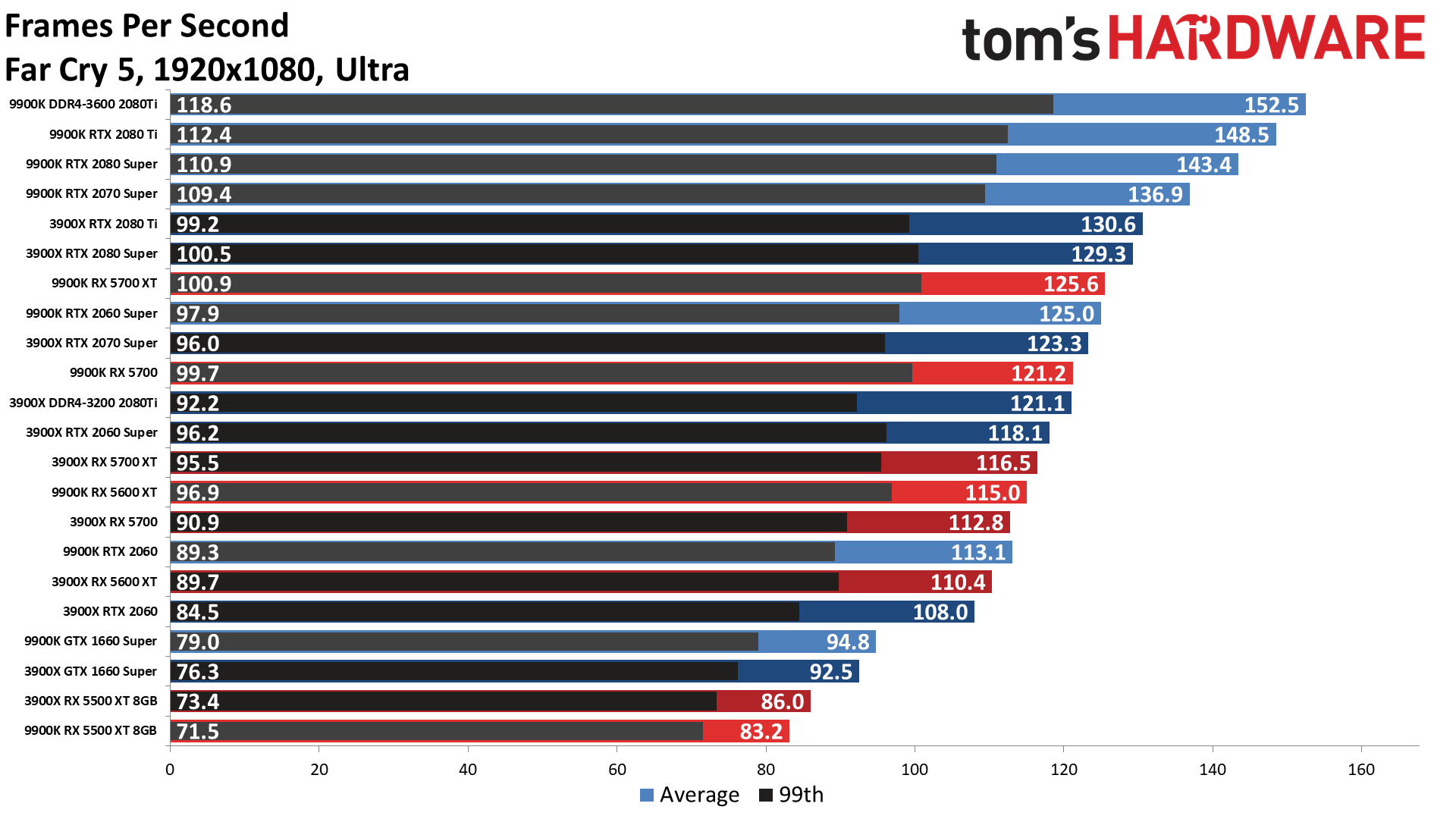

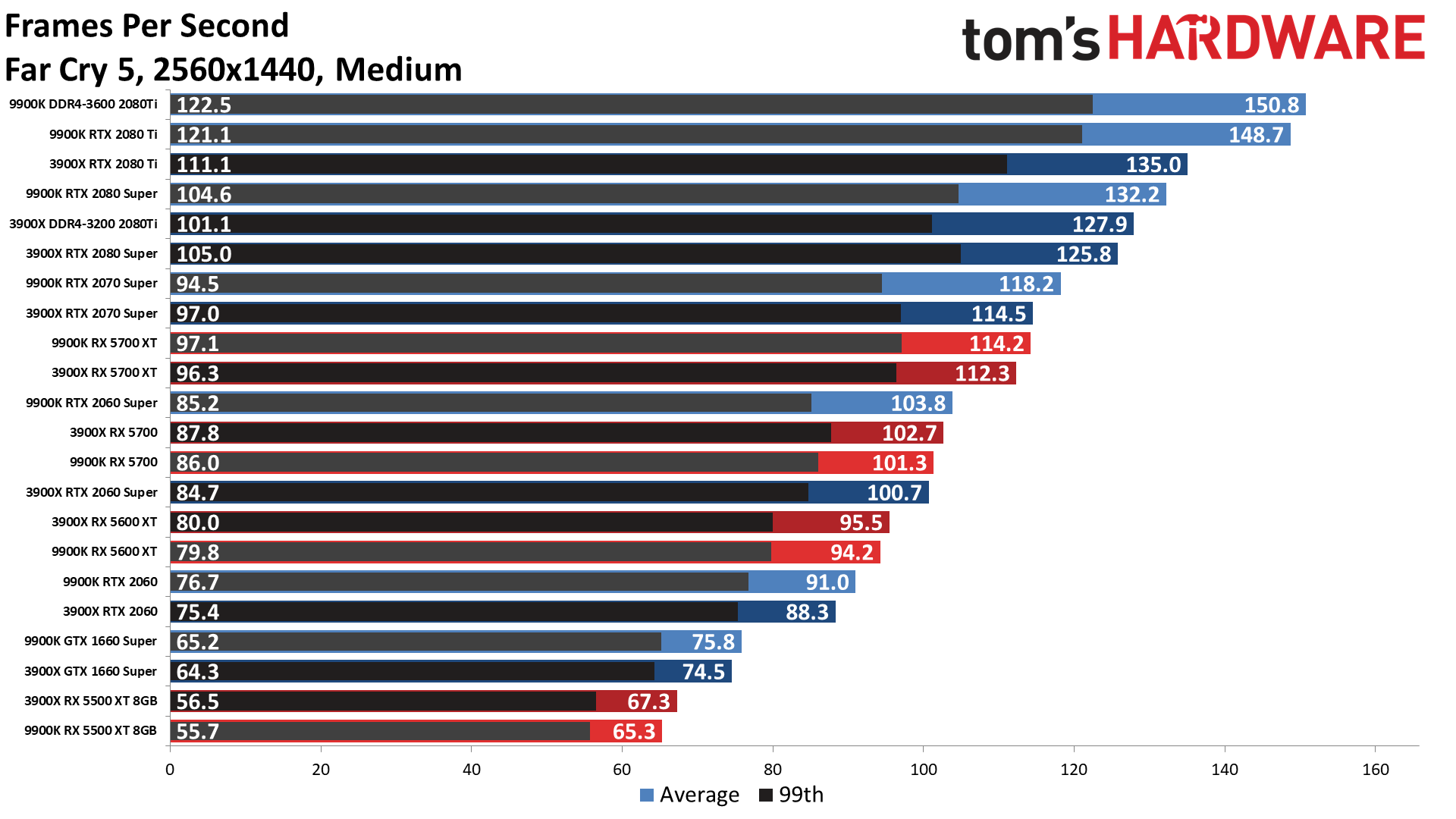

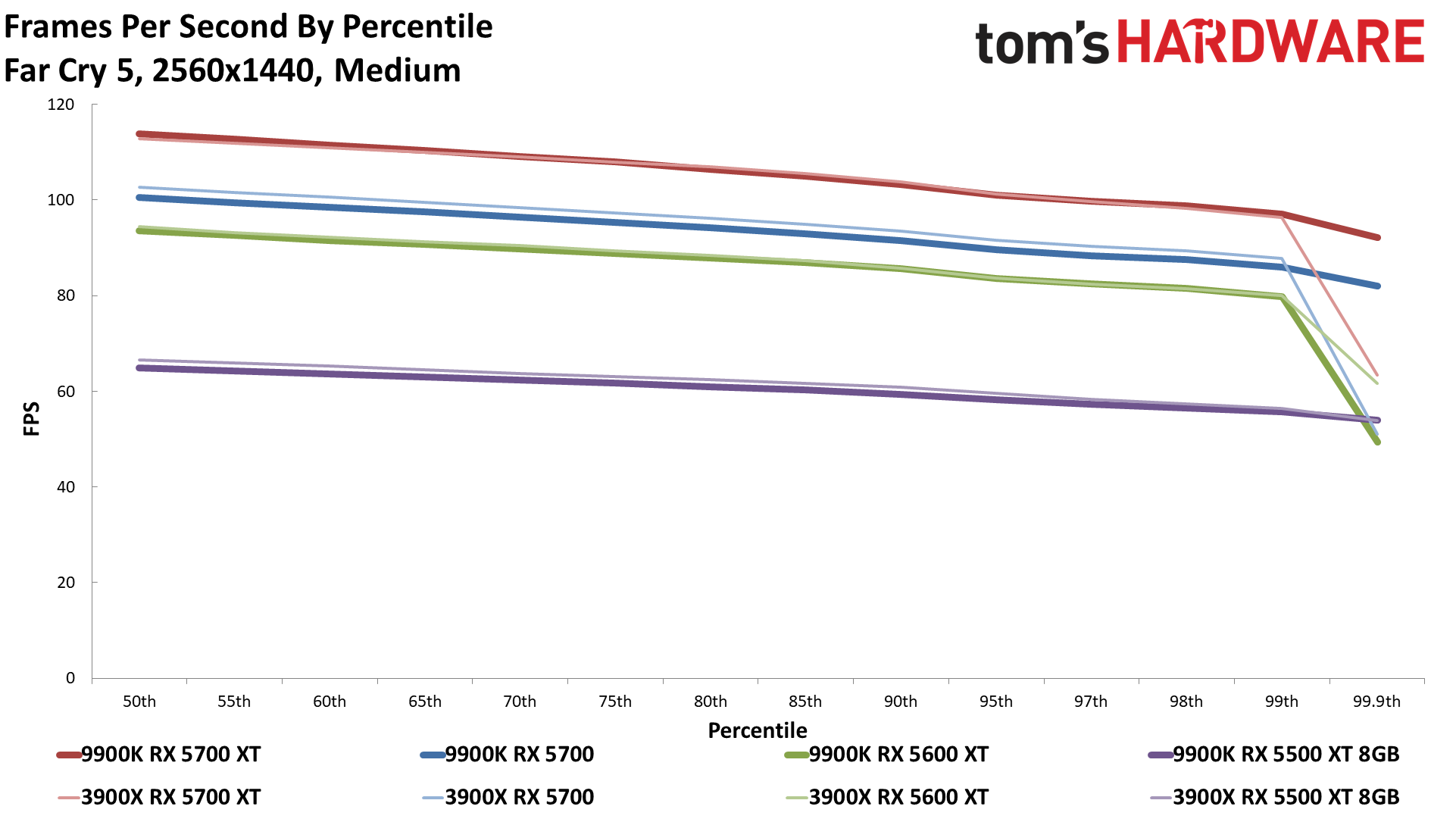

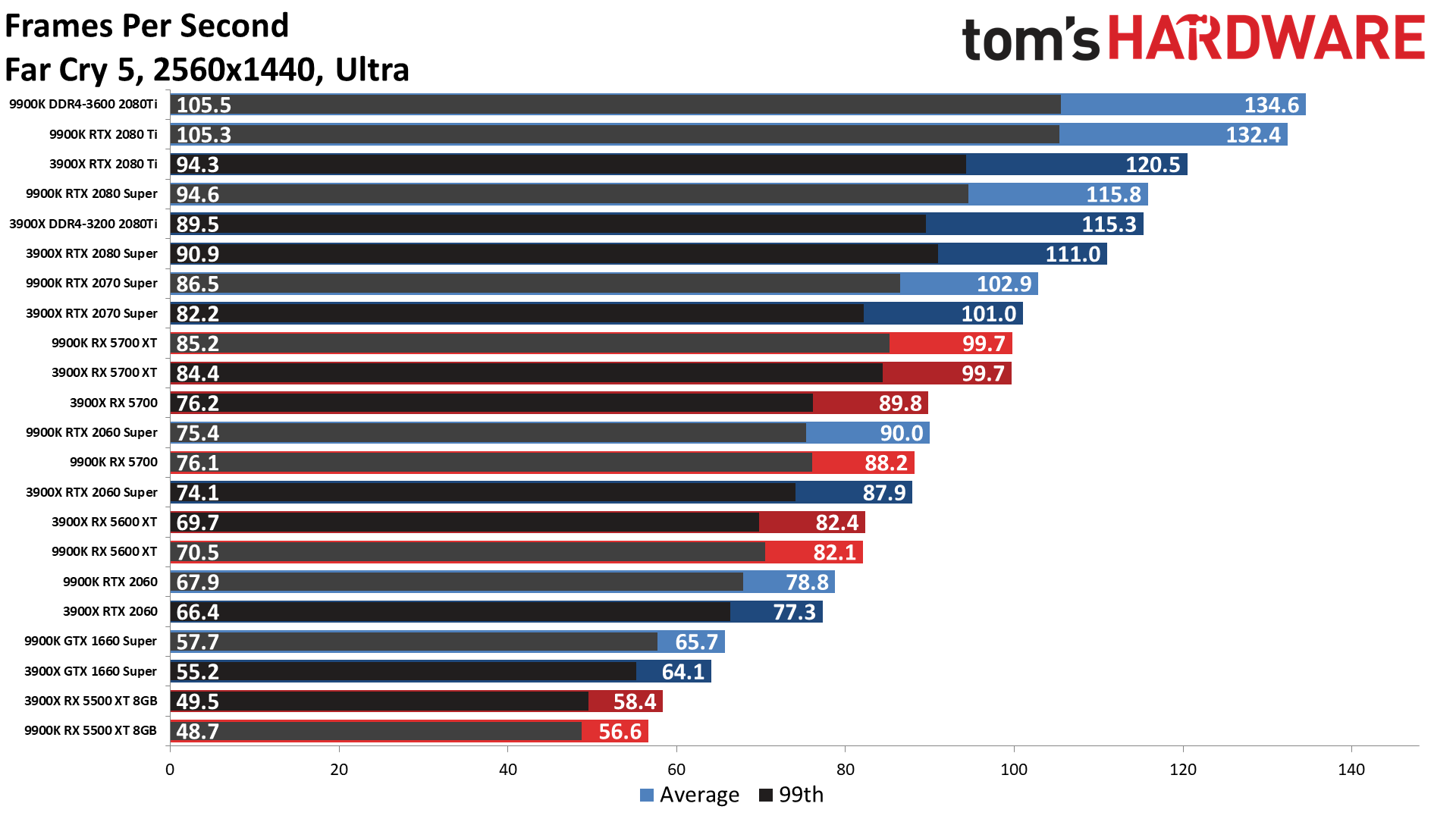

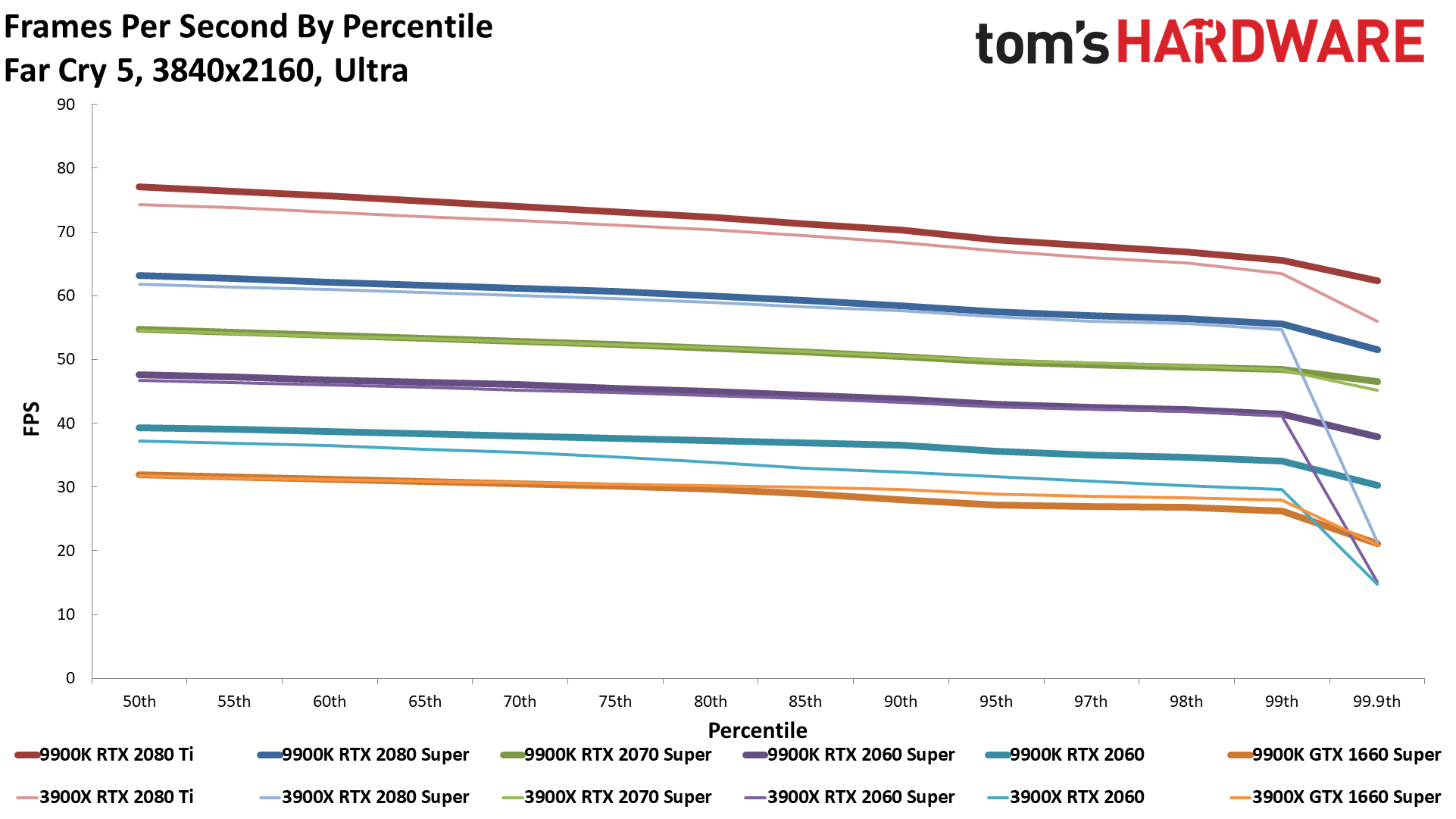

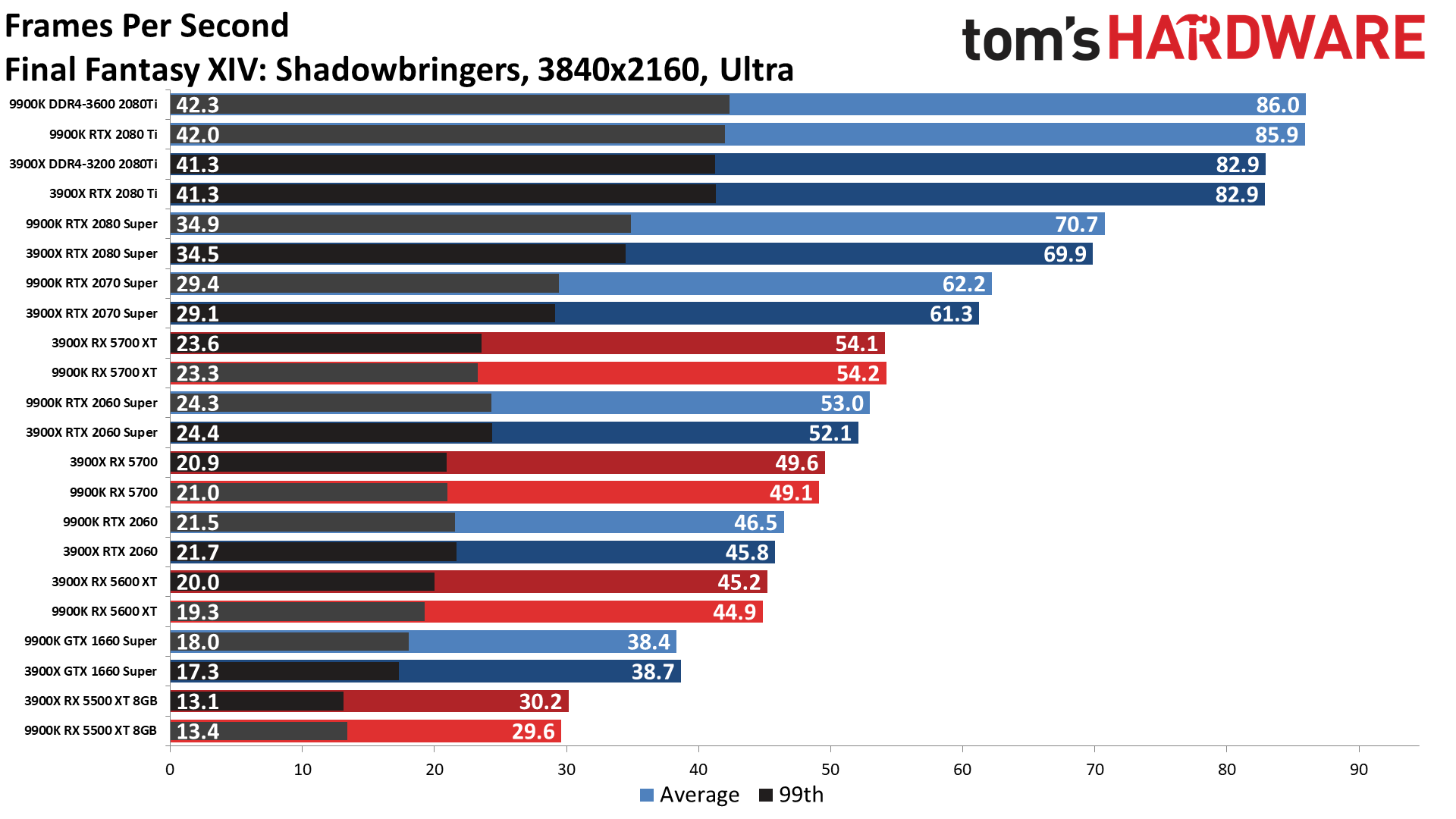

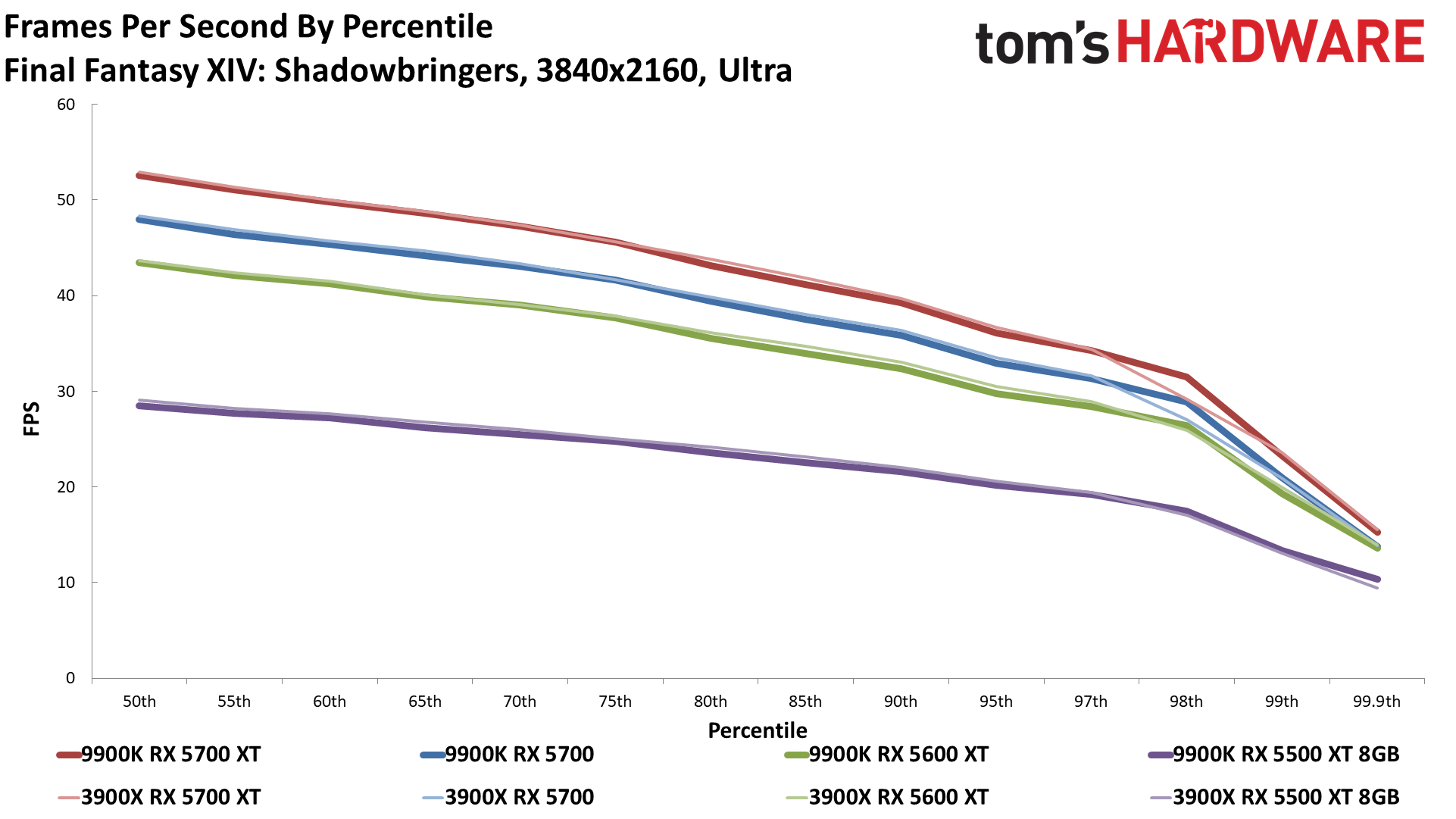

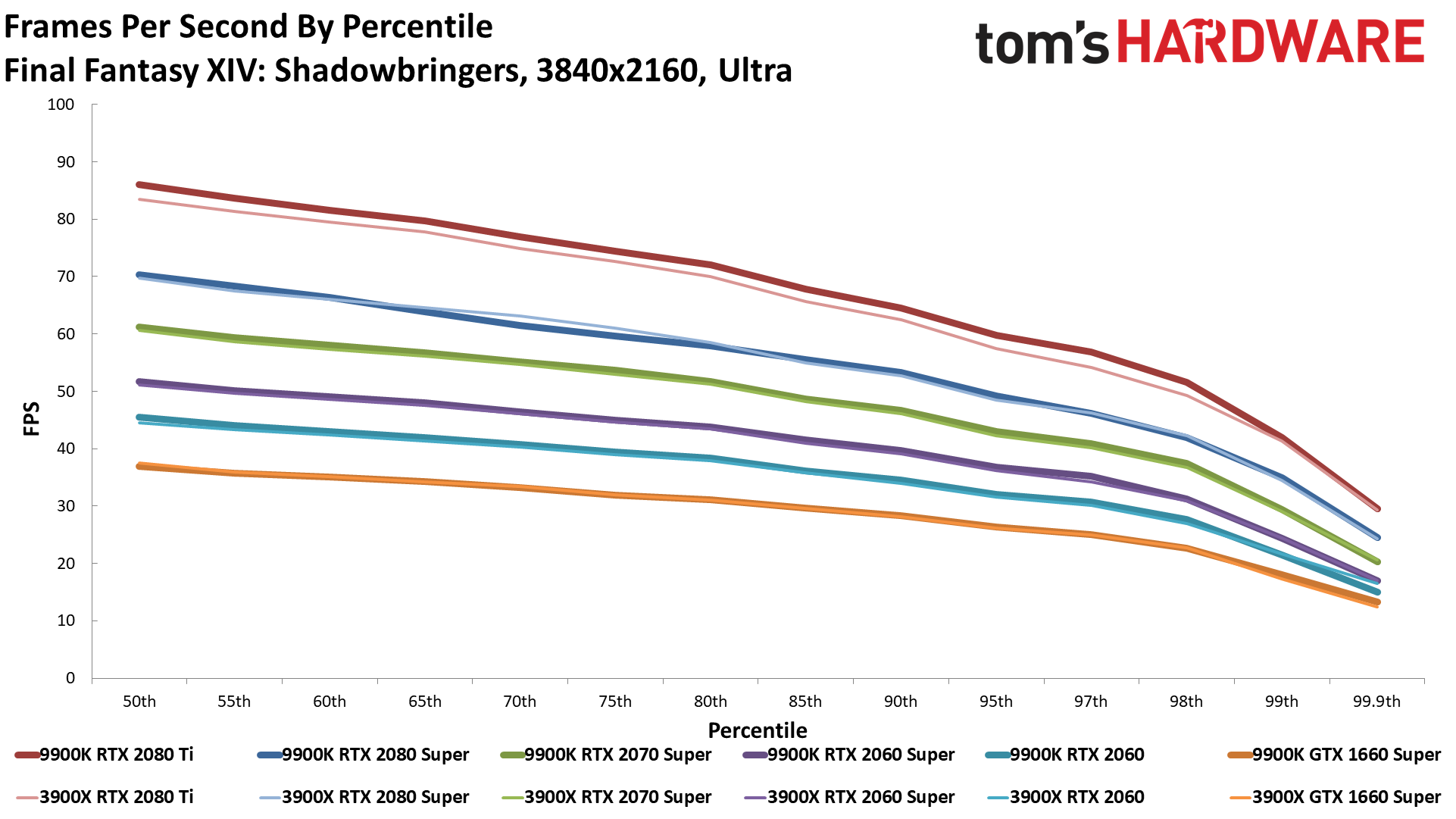

There are a few games that still favor Intel a bit more, specifically Final Fantasy XIV and Far Cry 5, but with any current GPU that costs $400 or less, the difference isn't going to be meaningful.

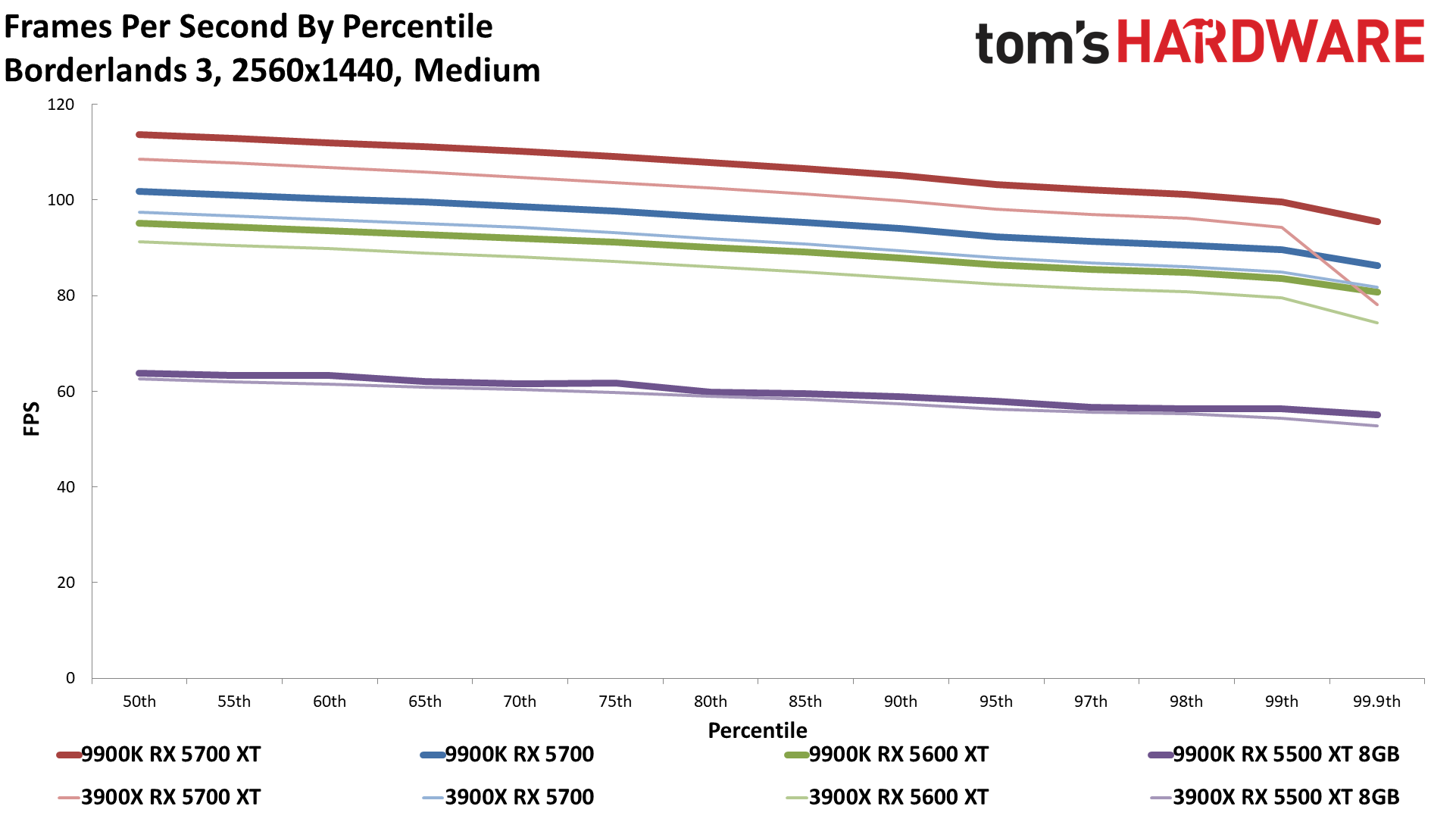

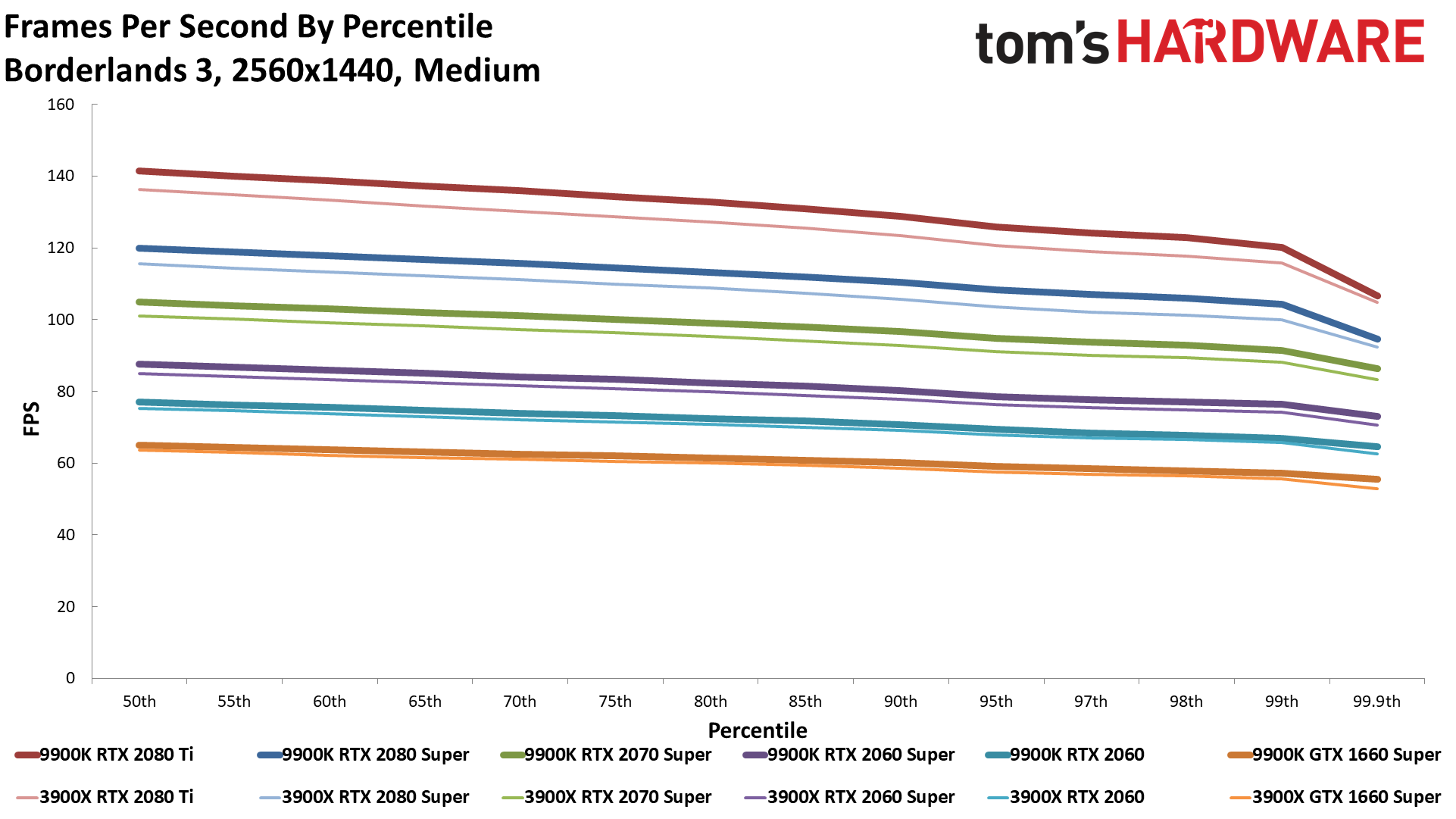

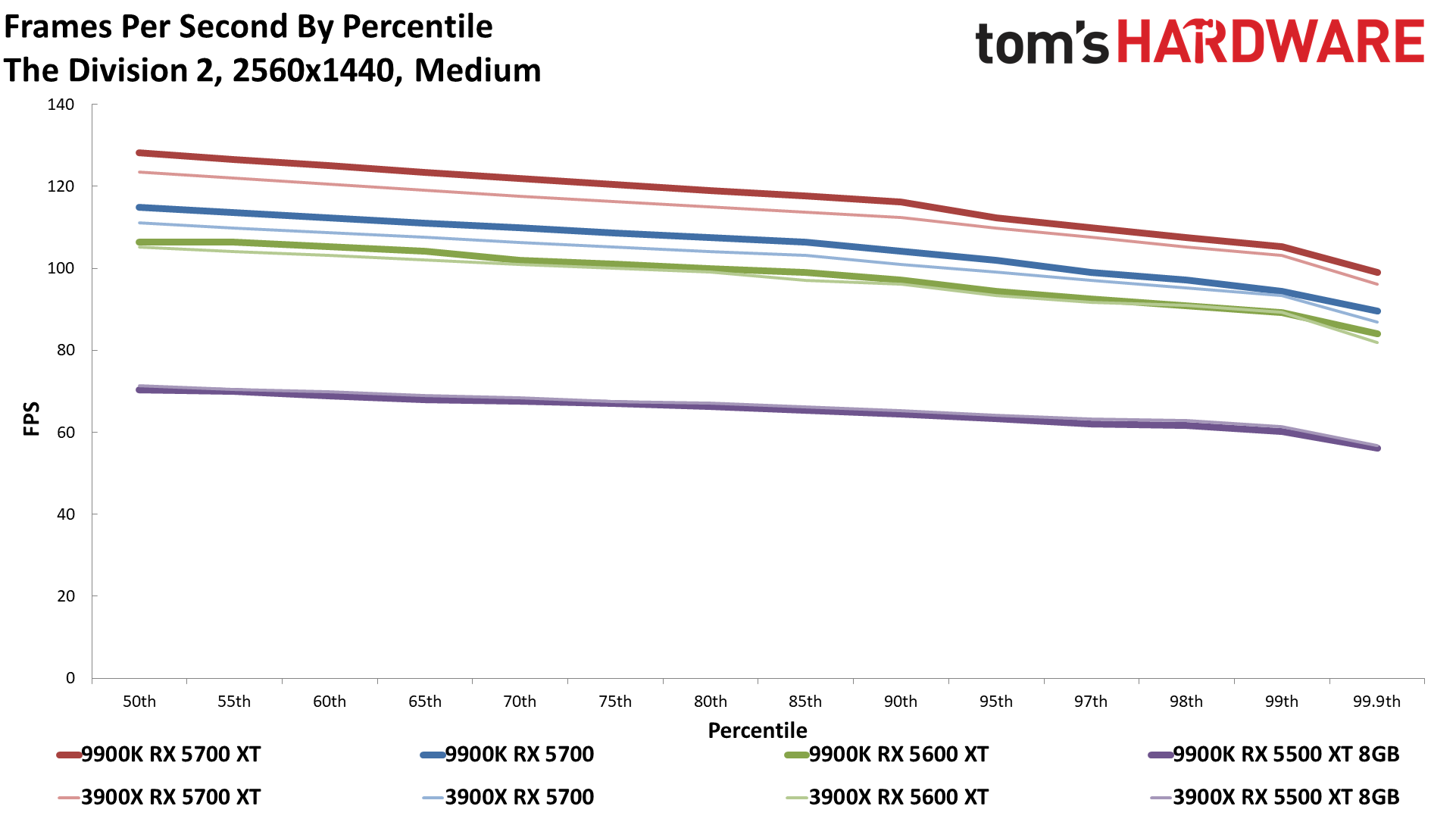

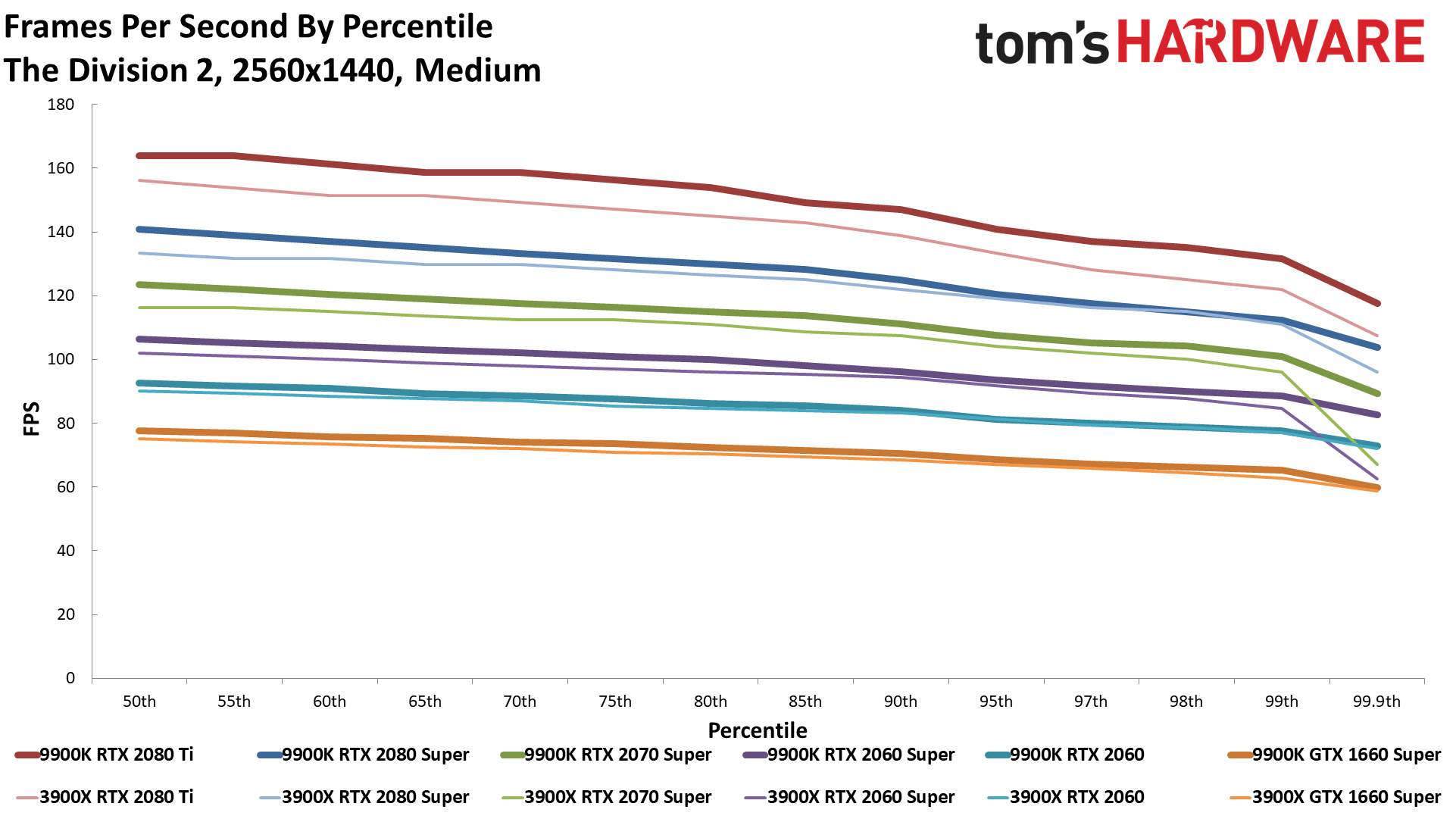

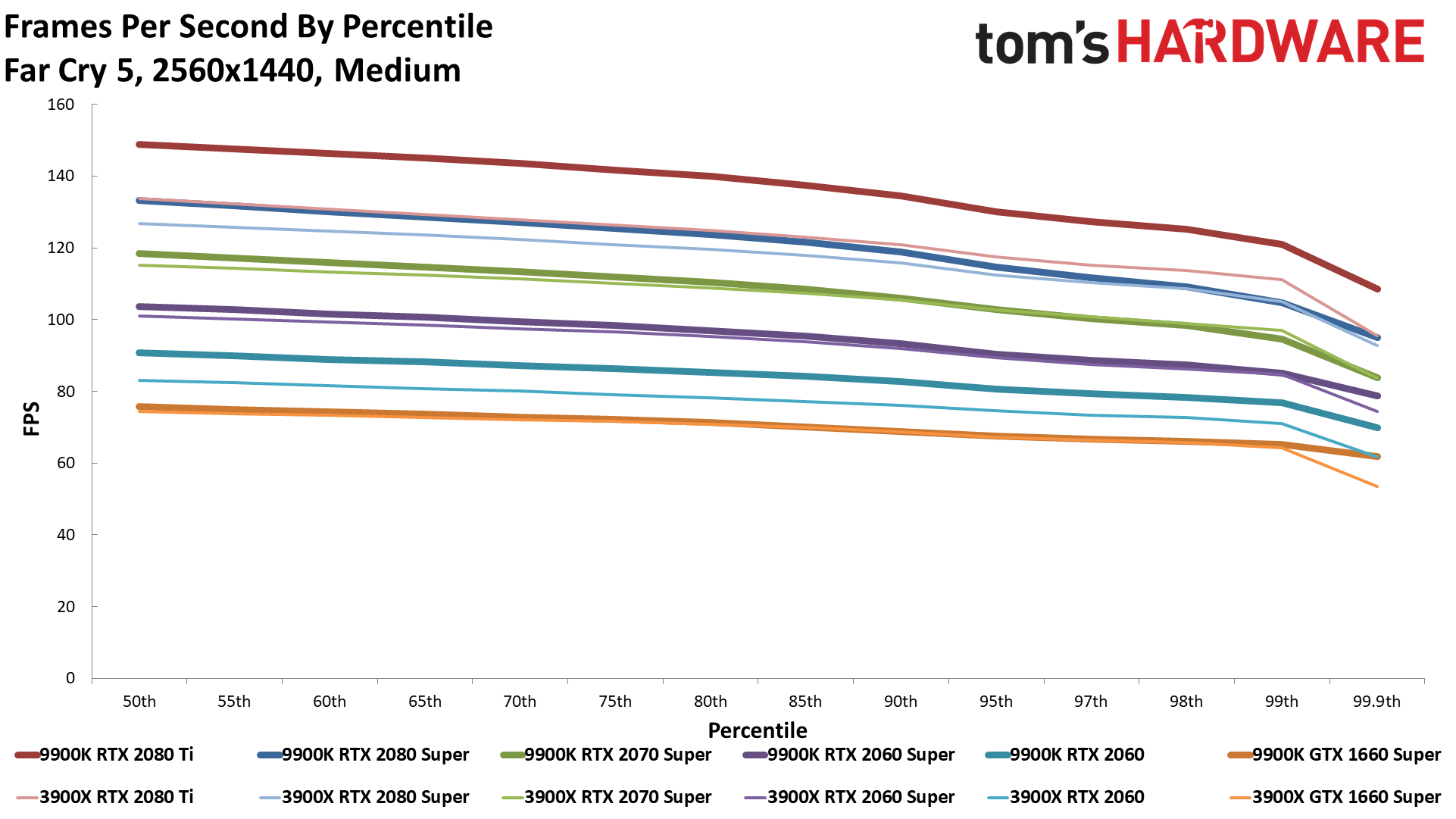

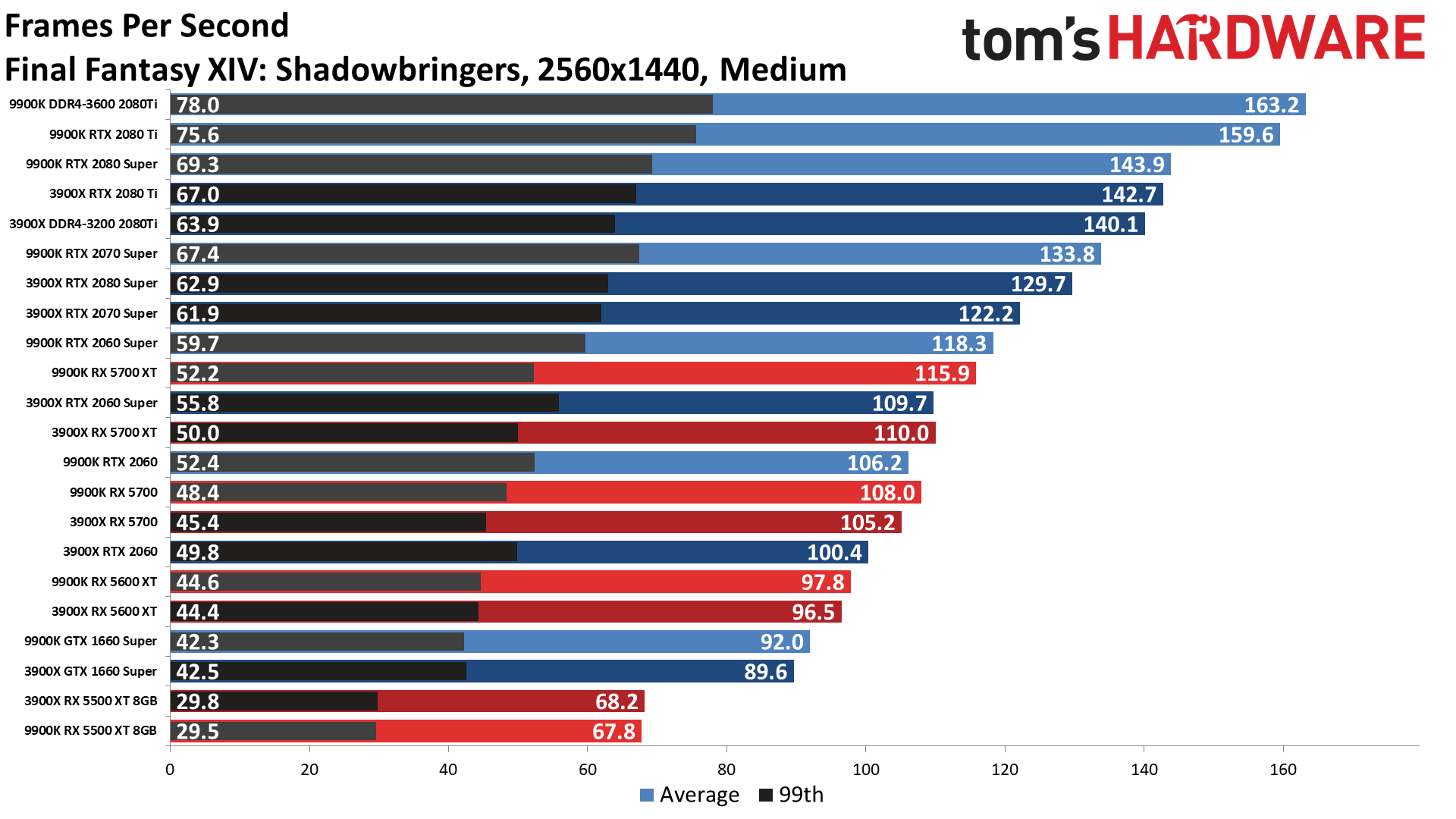

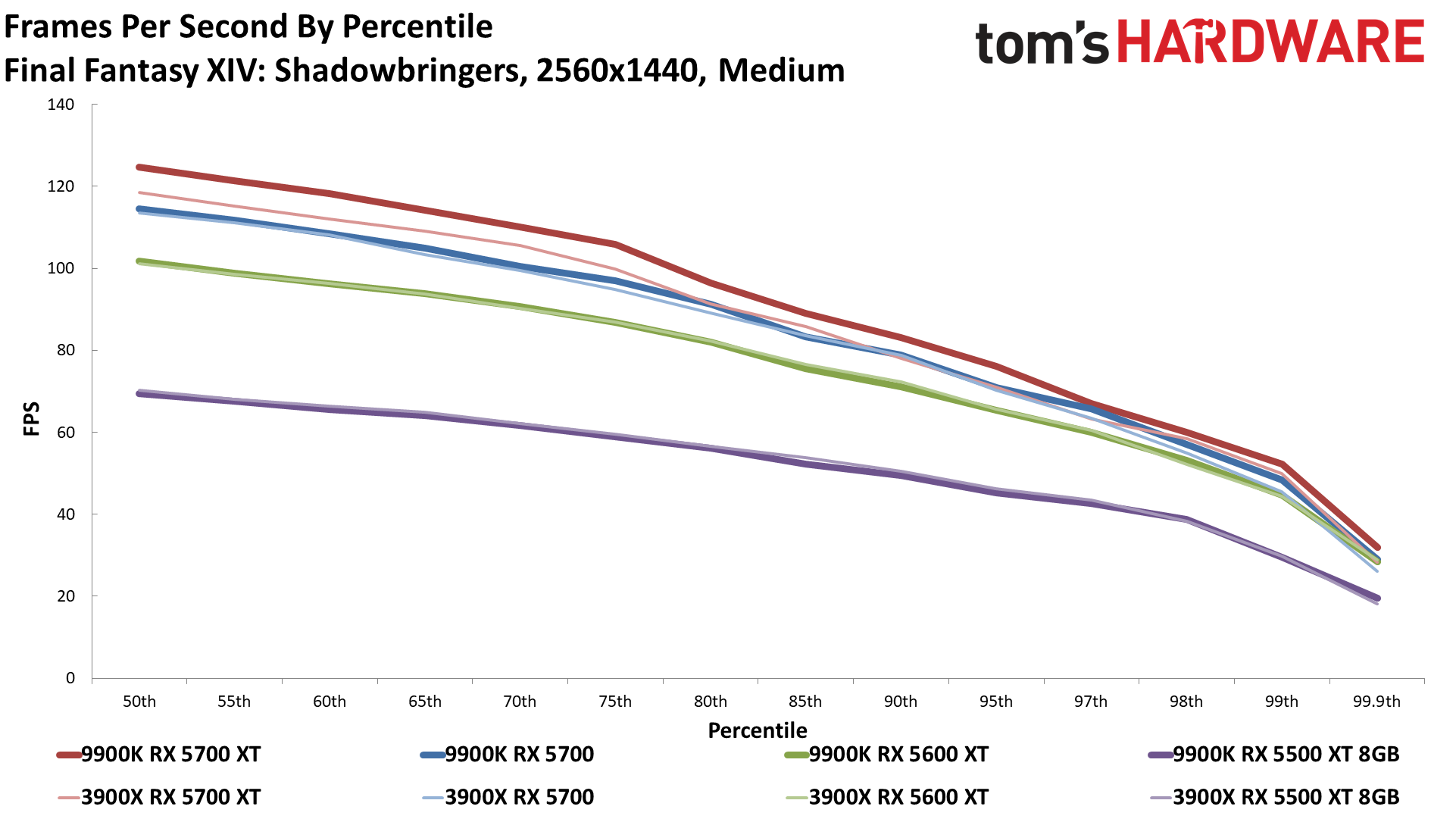

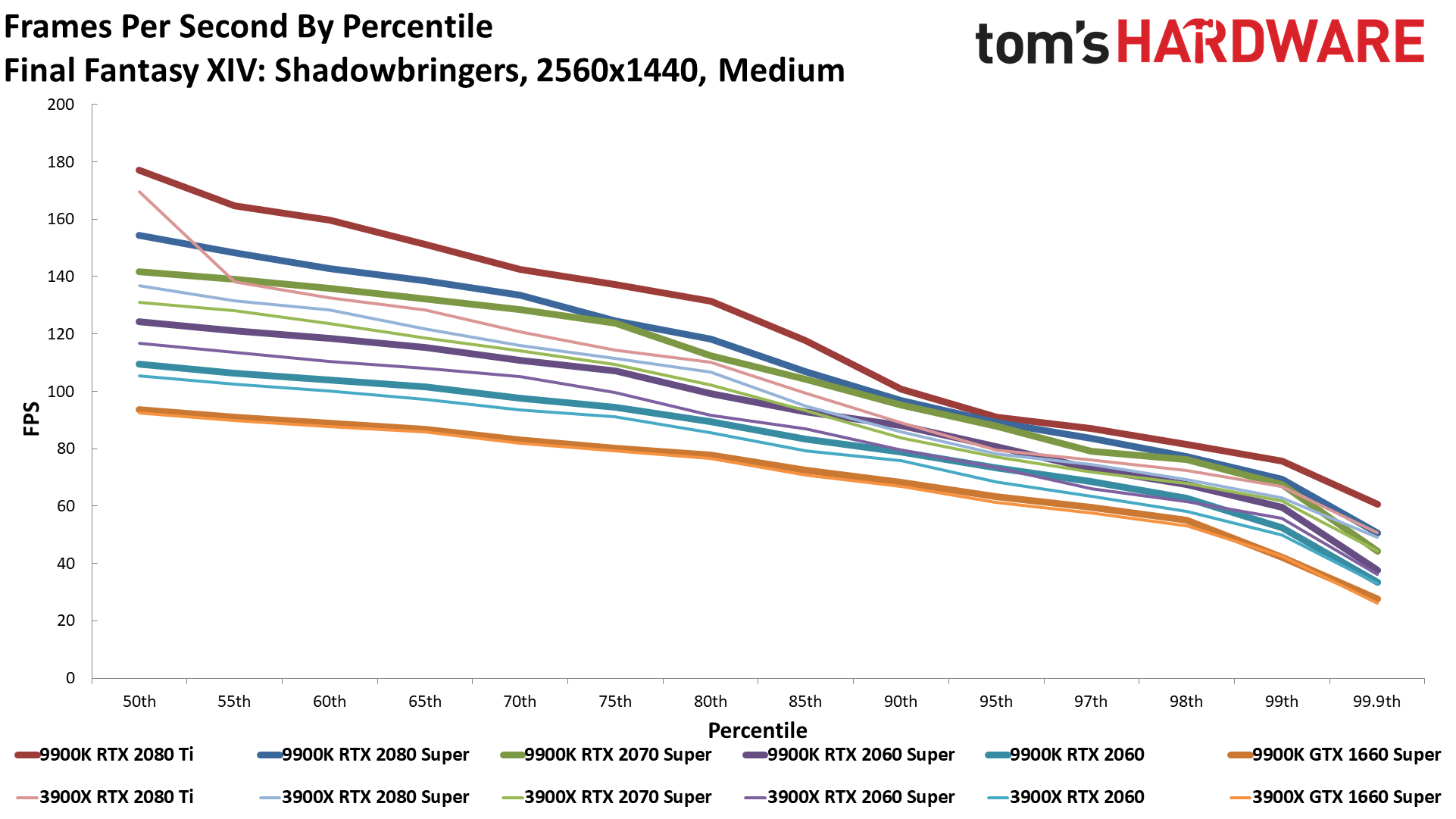

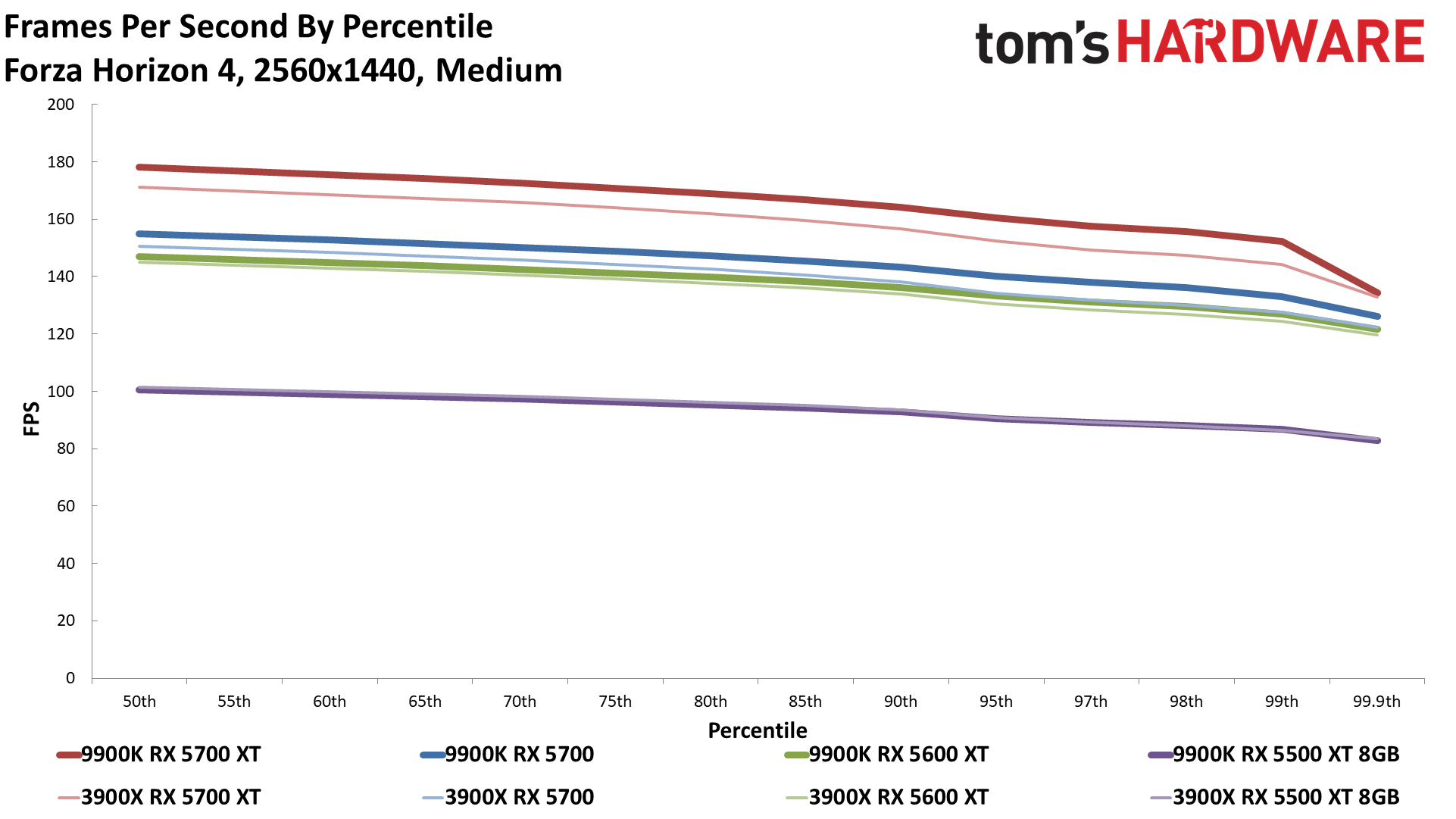

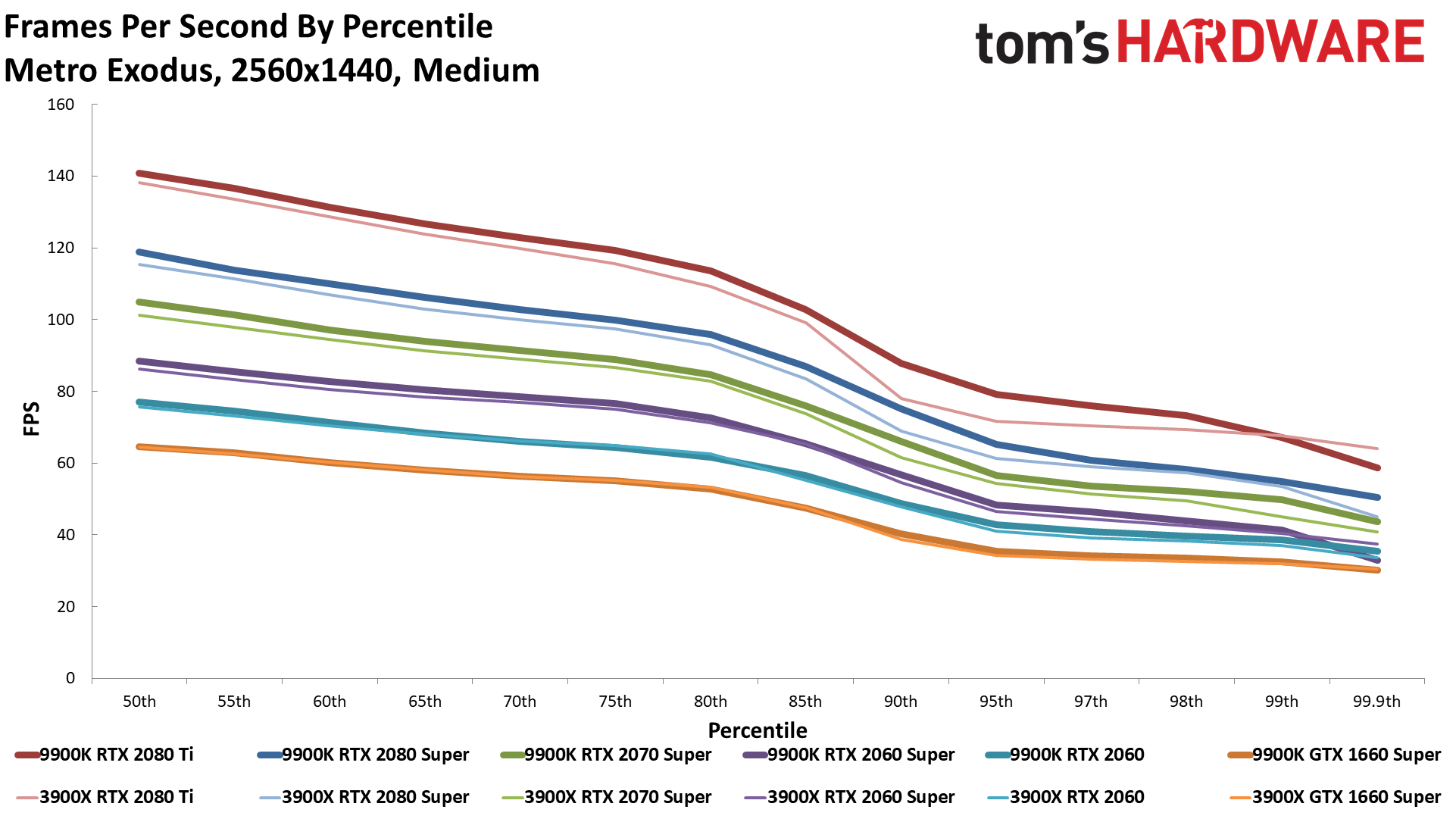

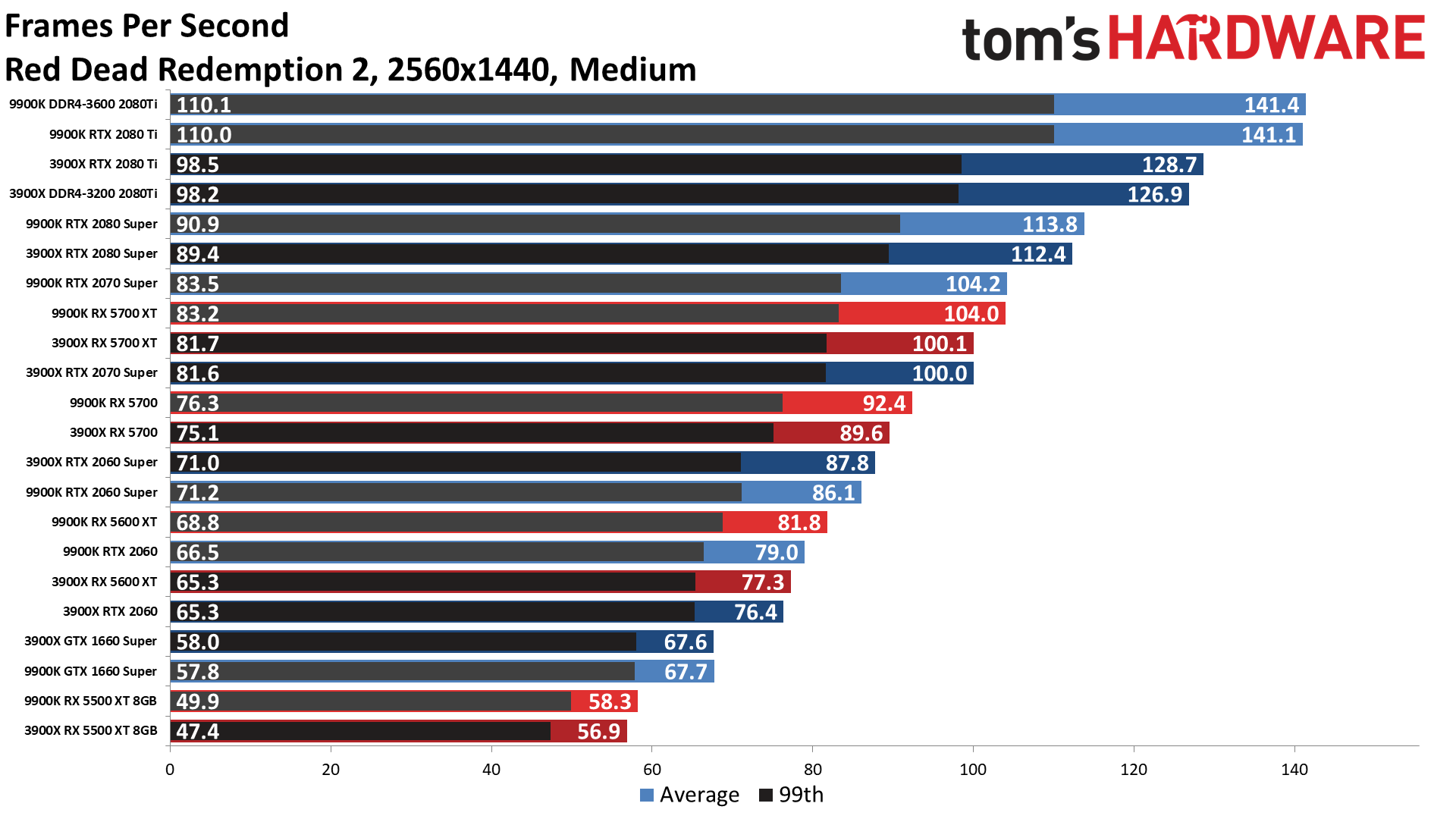

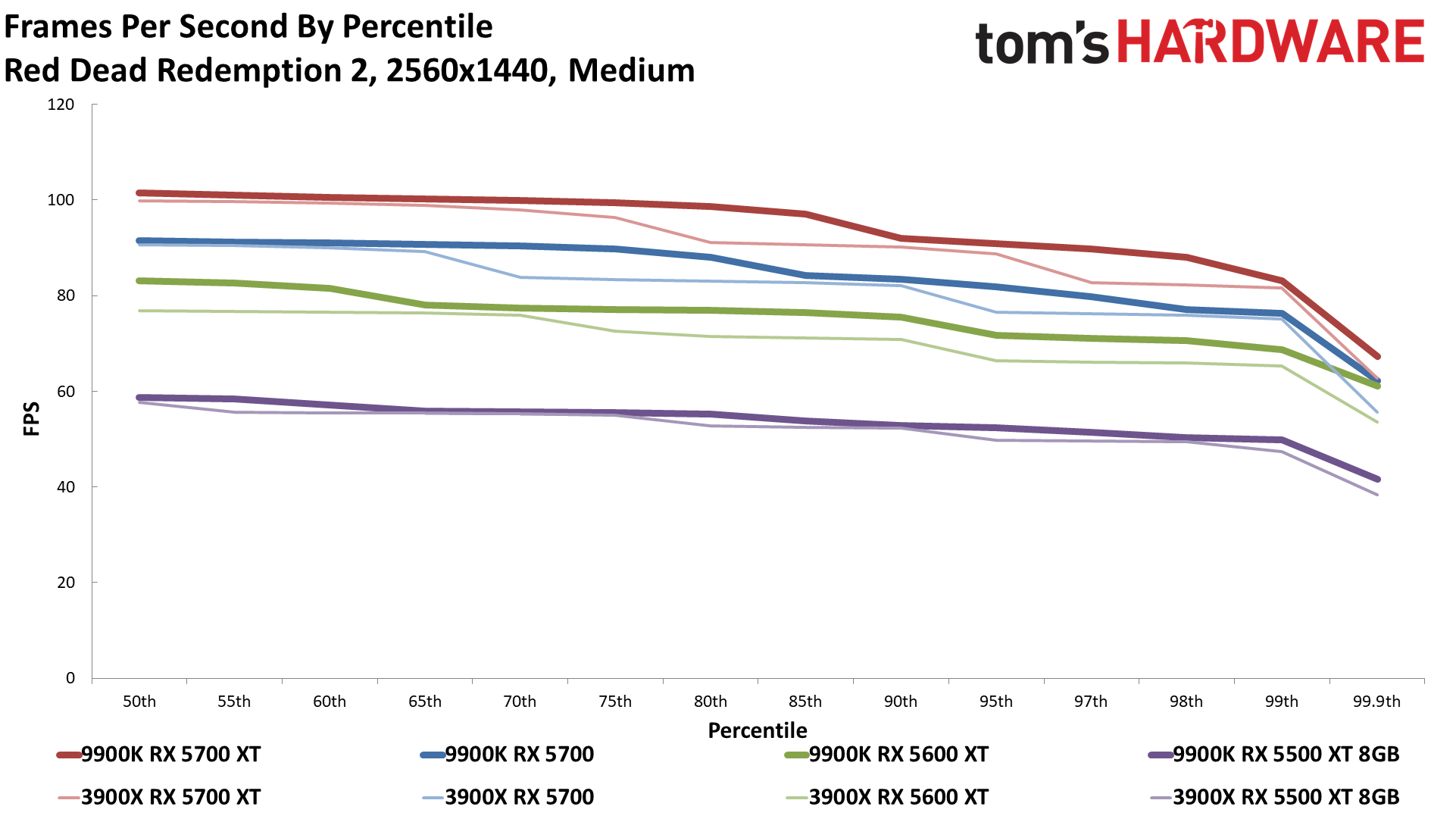

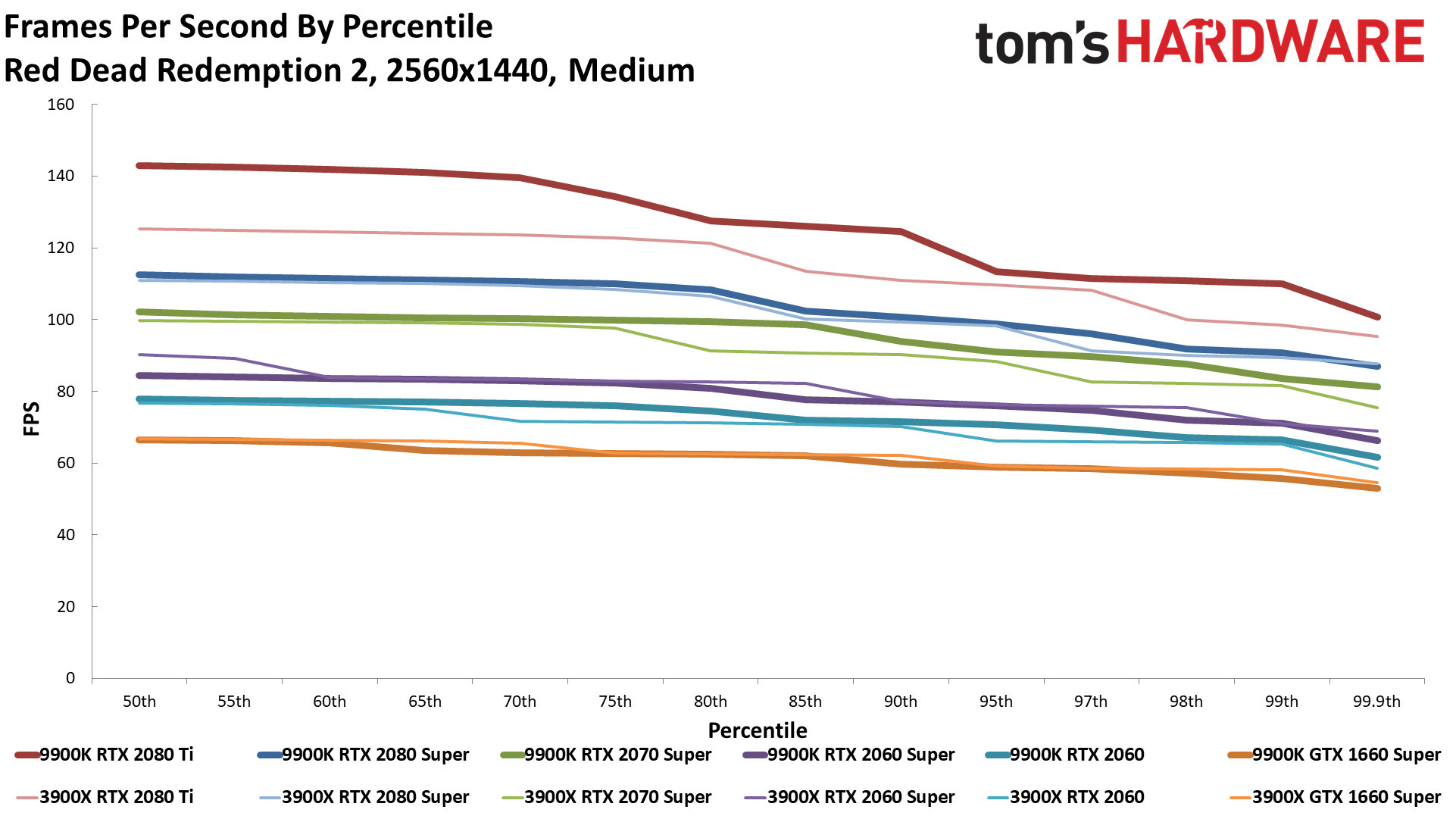

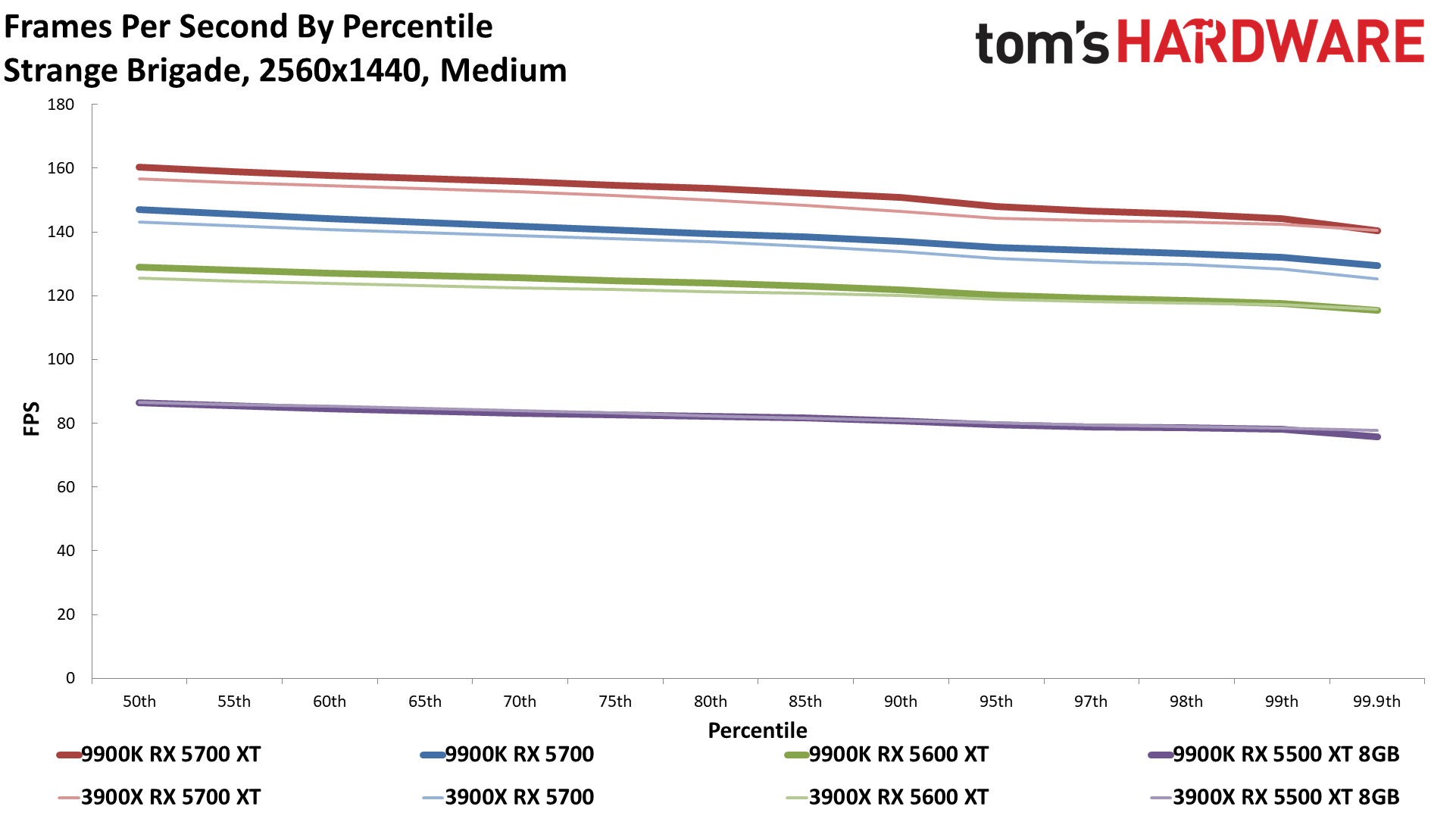

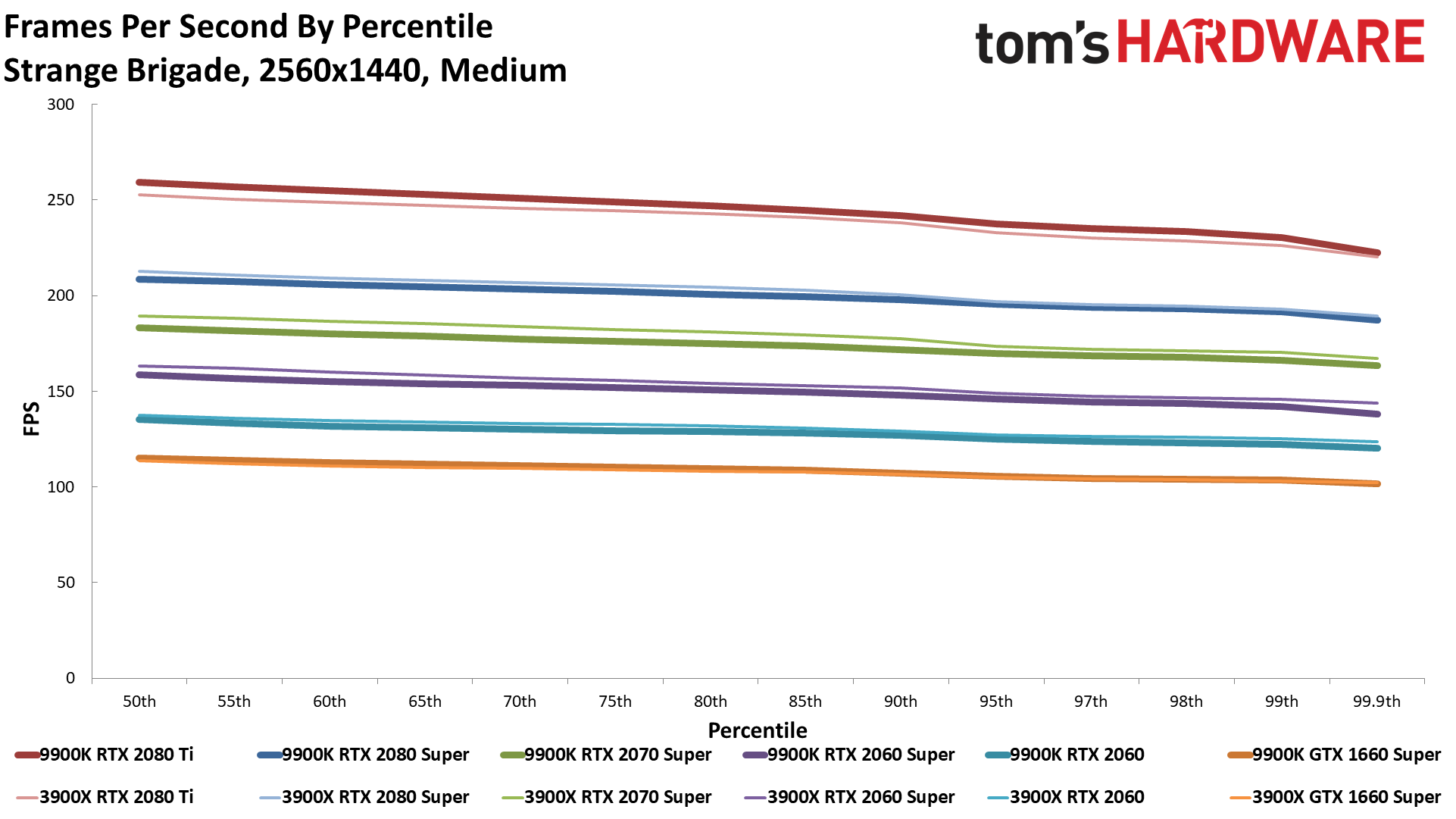

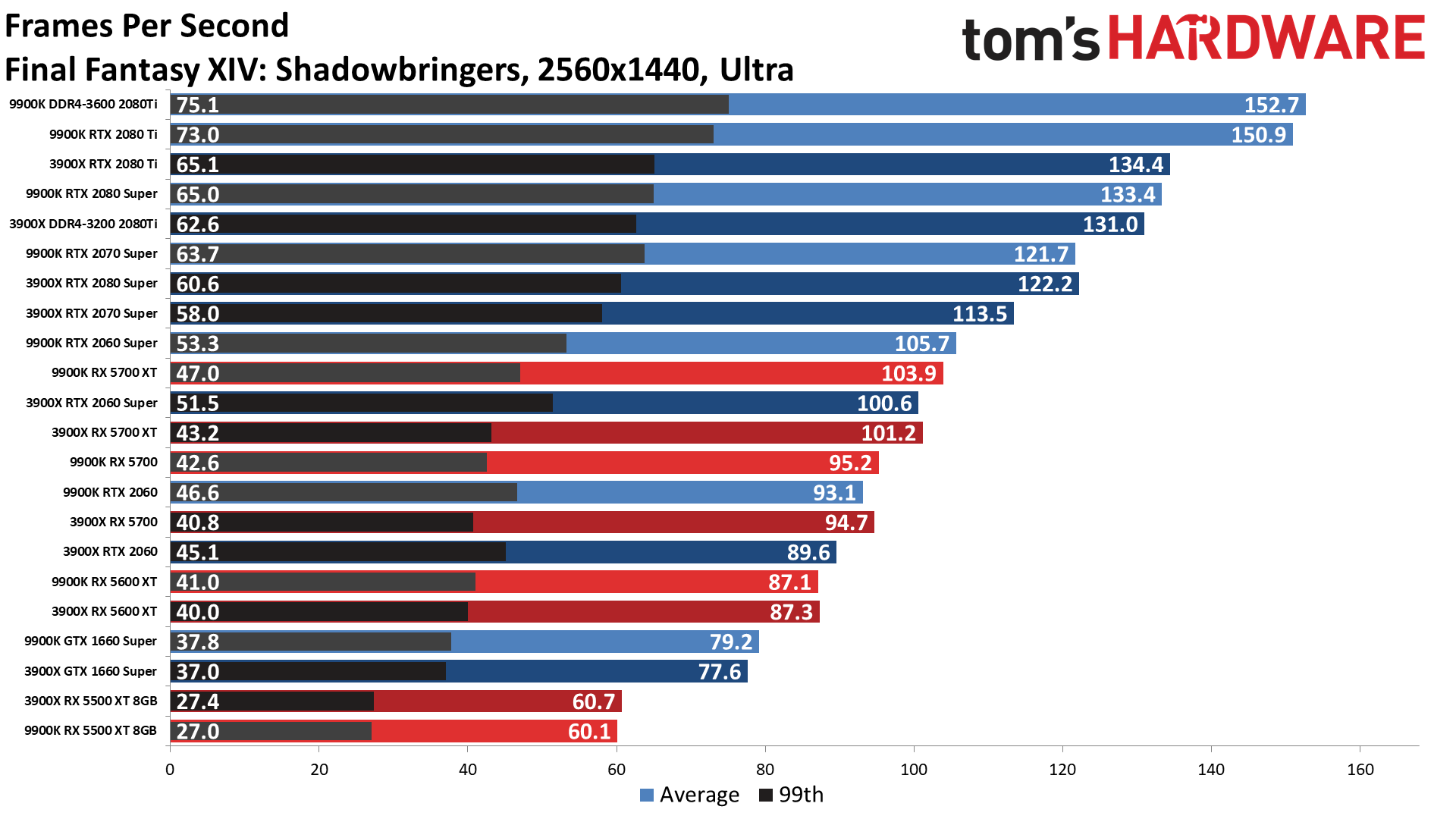

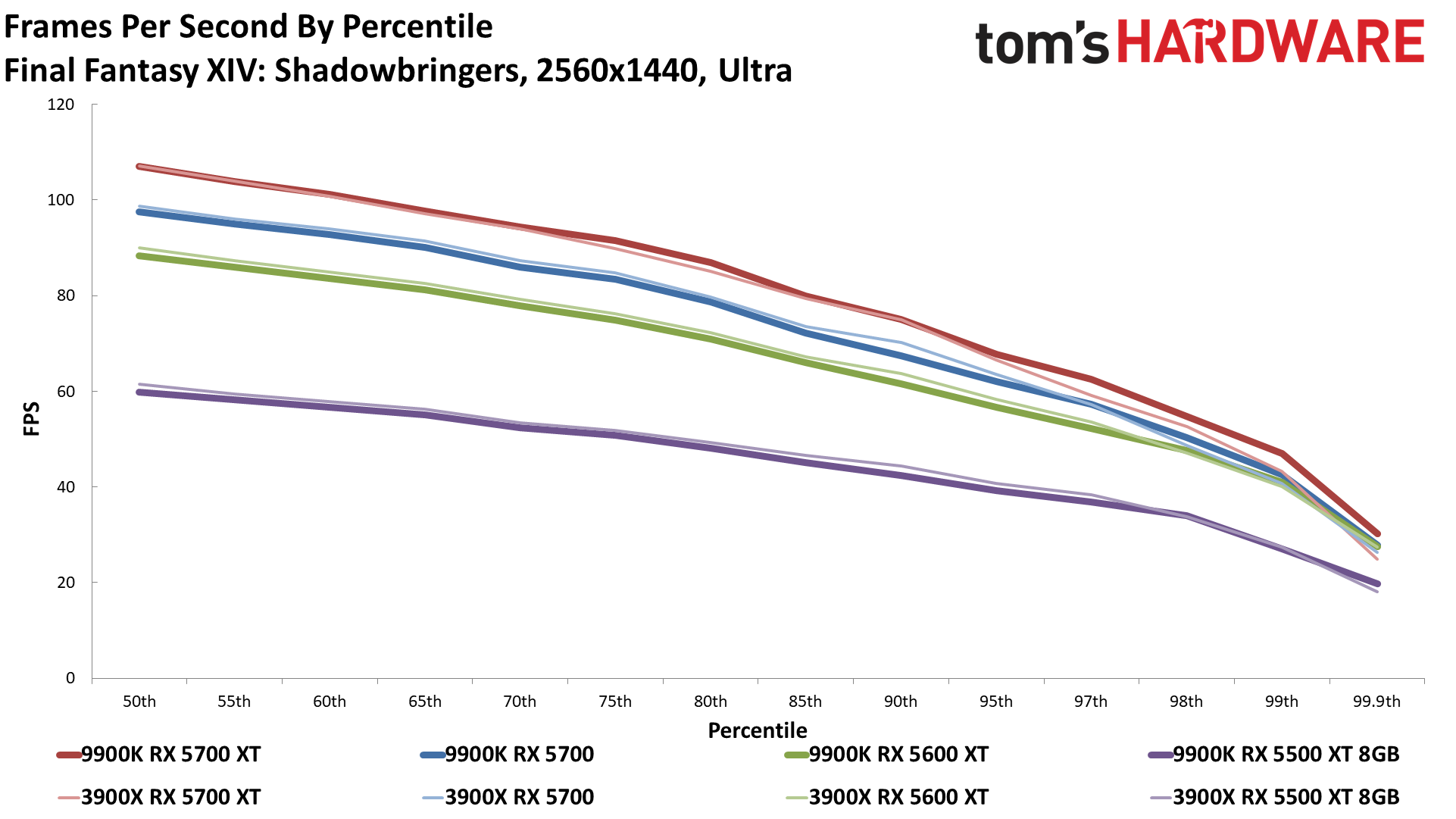

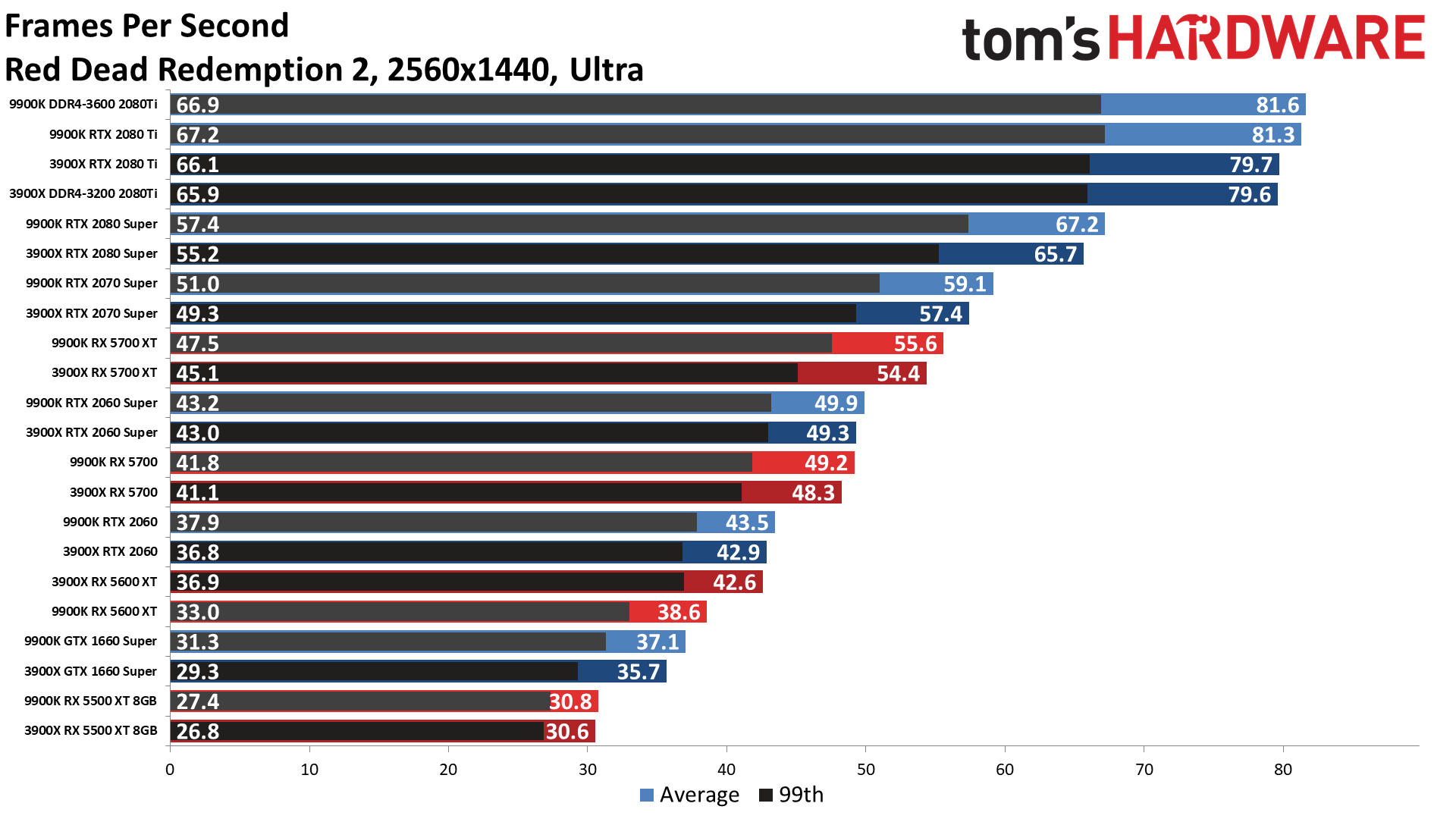

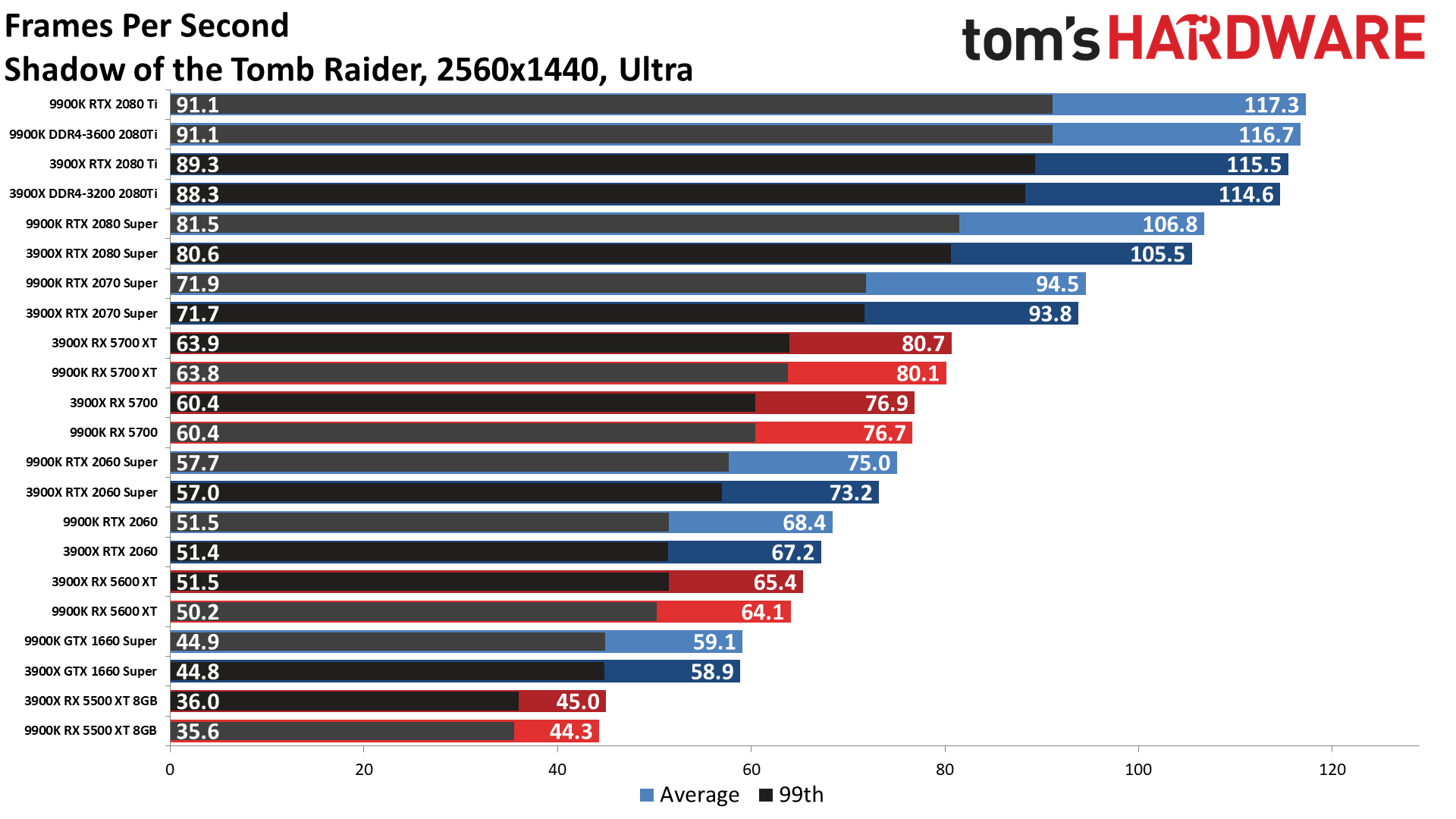

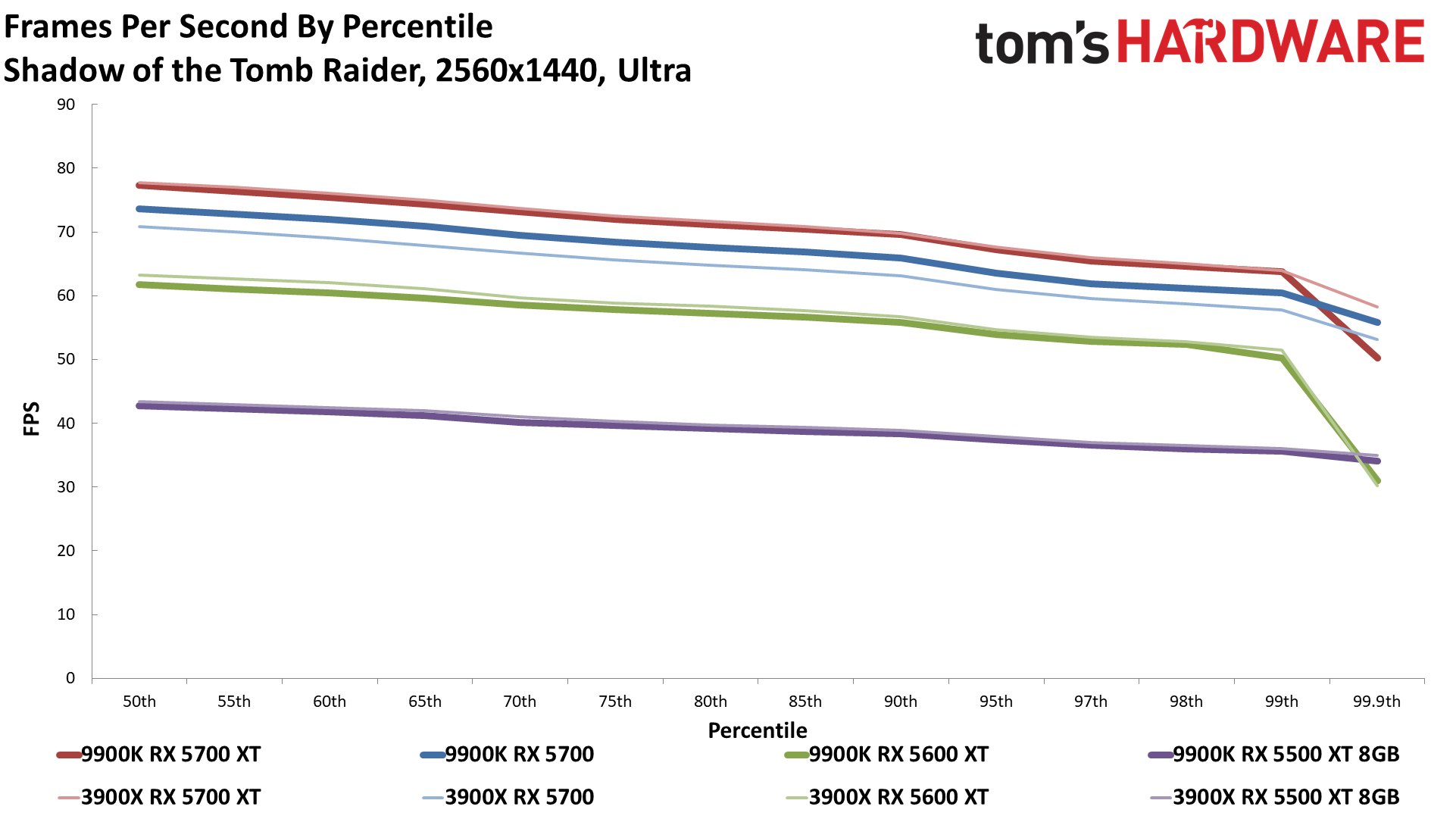

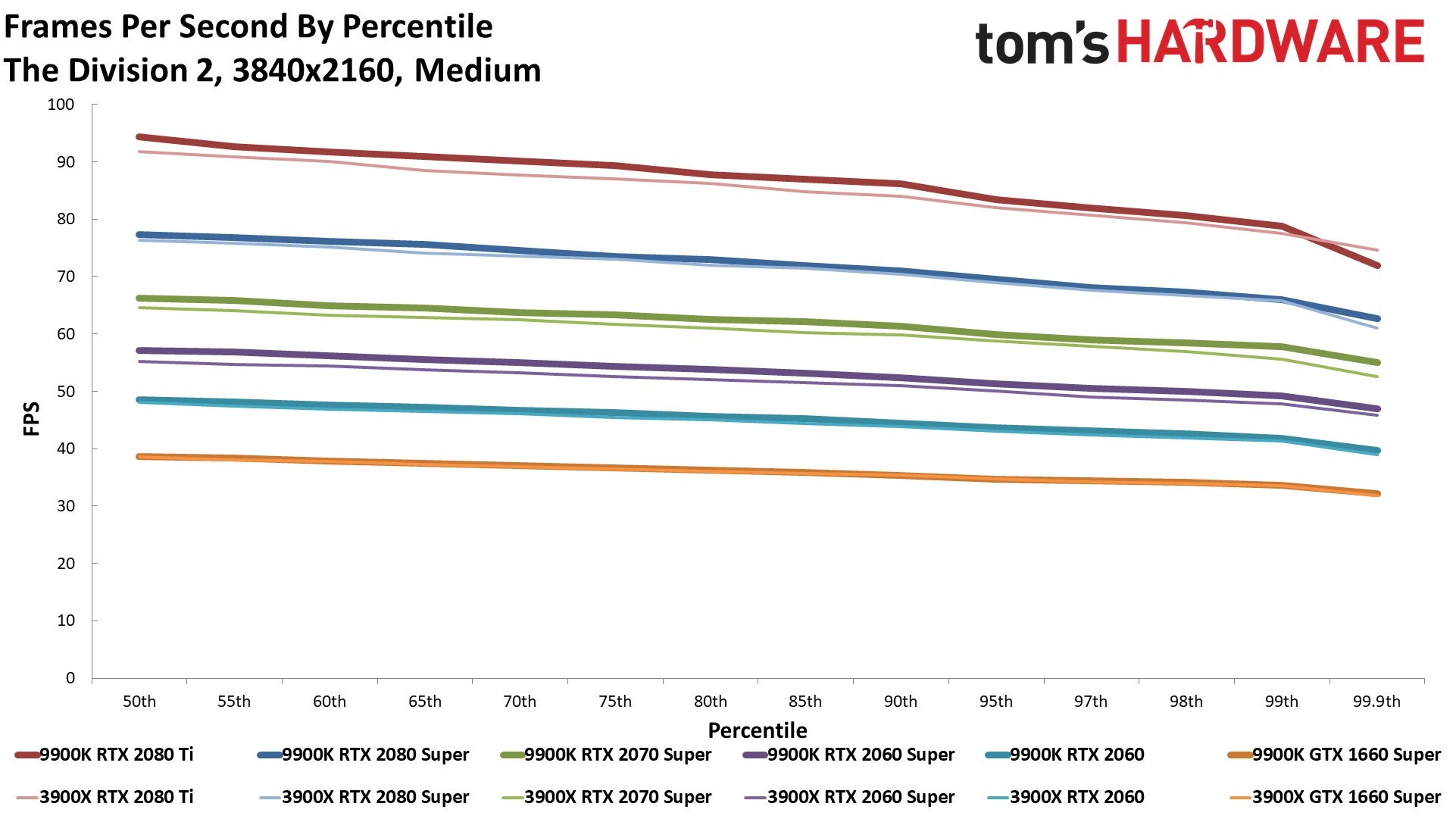

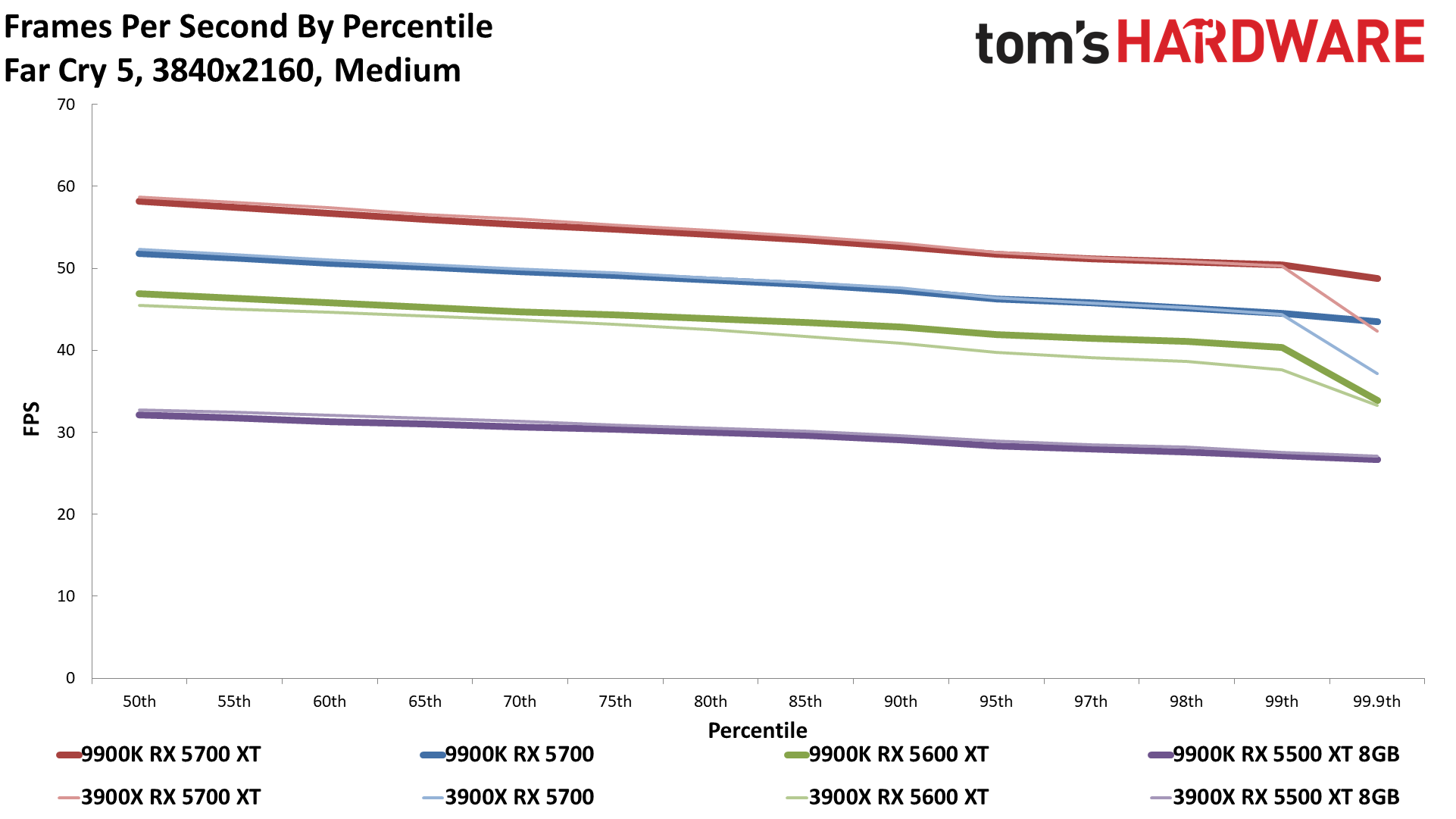

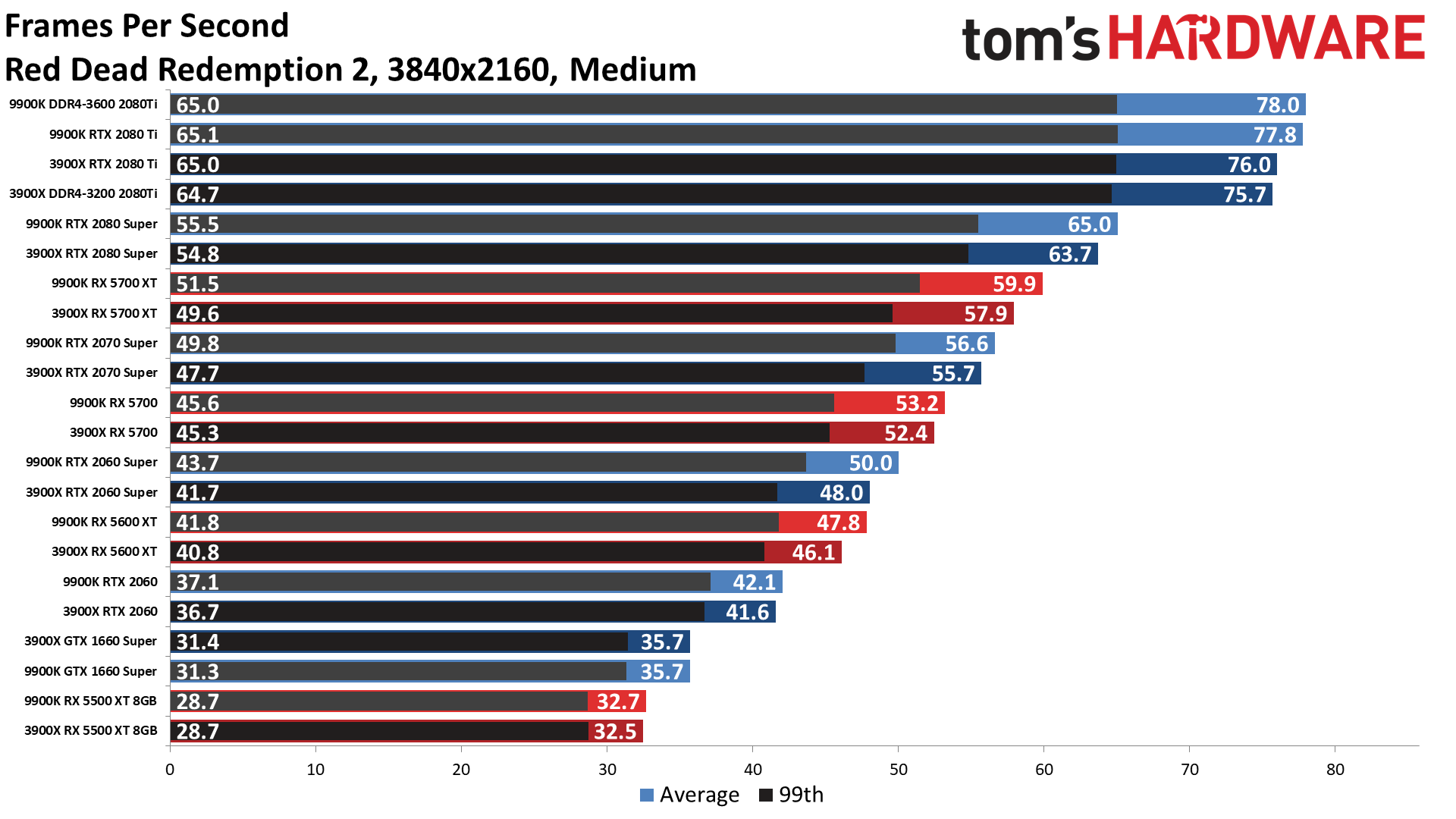

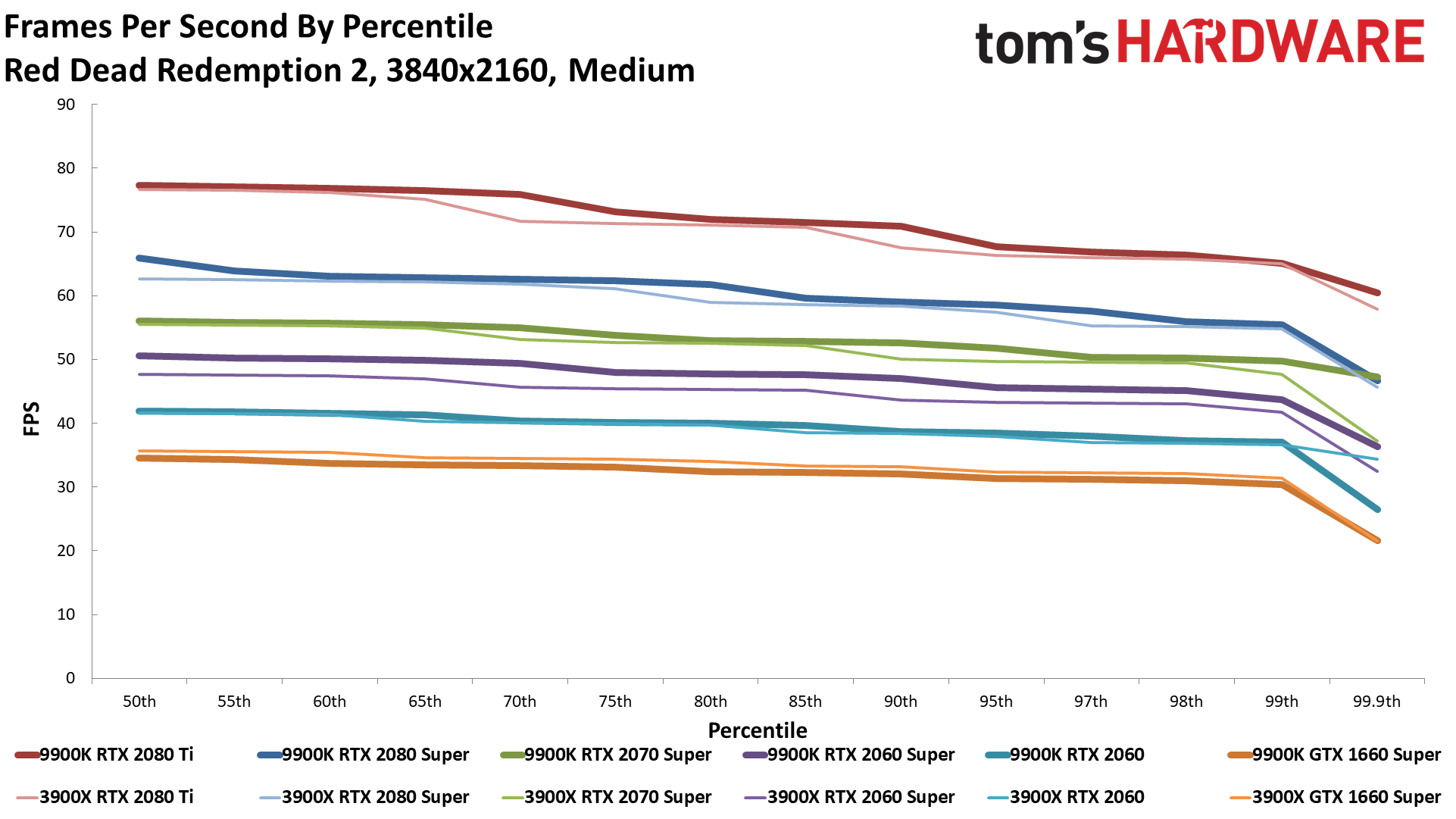

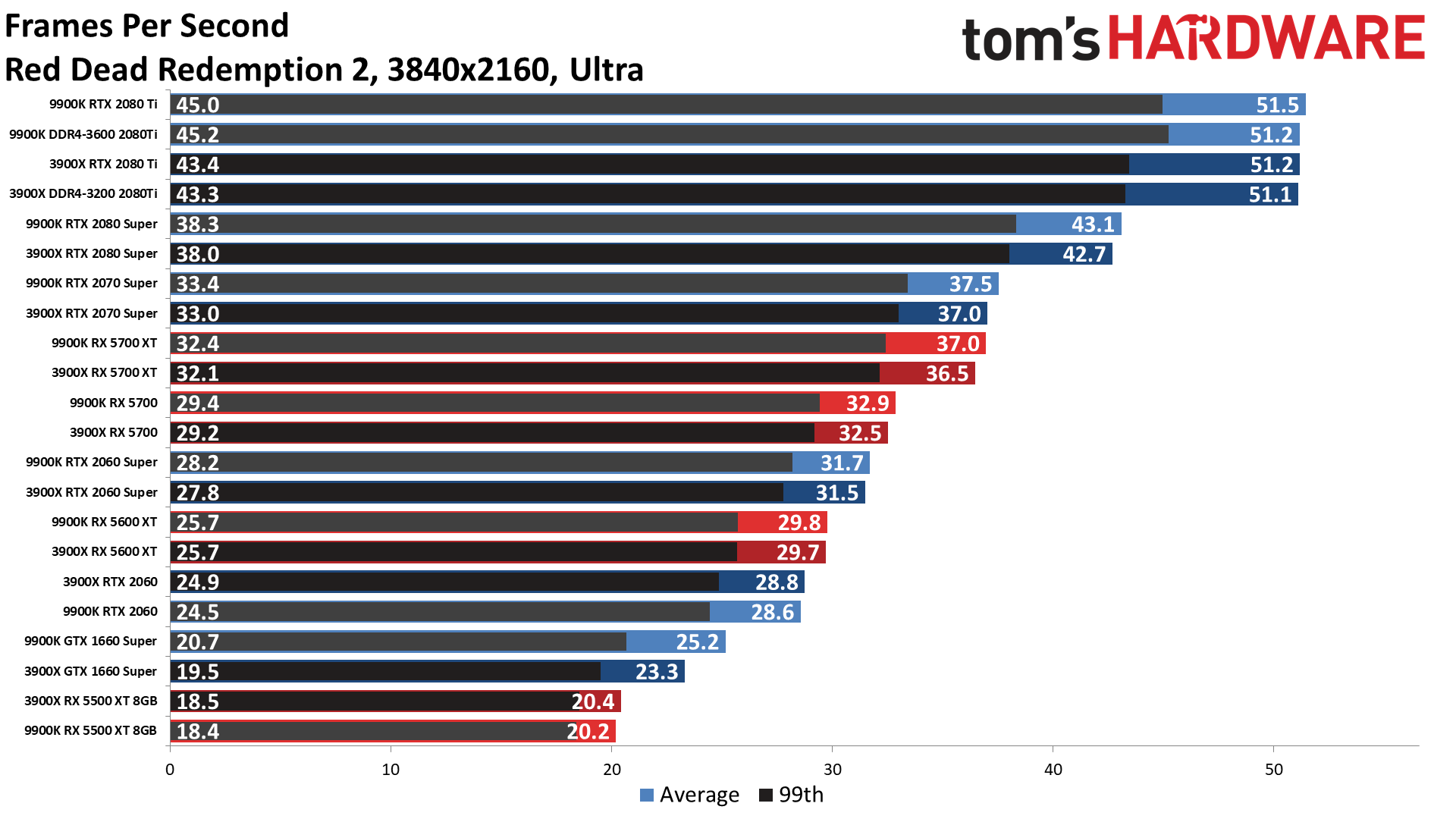

Increasing the resolution to 1440p and dropping back to medium quality, performance in general is quite similar to 1080p ultra. There's not much more to say here — you can flip through the 30 charts in the above gallery, but the only double digit percentage leads for Intel are in Far Cry 5, Final Fantasy, and Red Dead Redemption 2, and only with an RTX 2080 Ti or RTX 2080 Super.

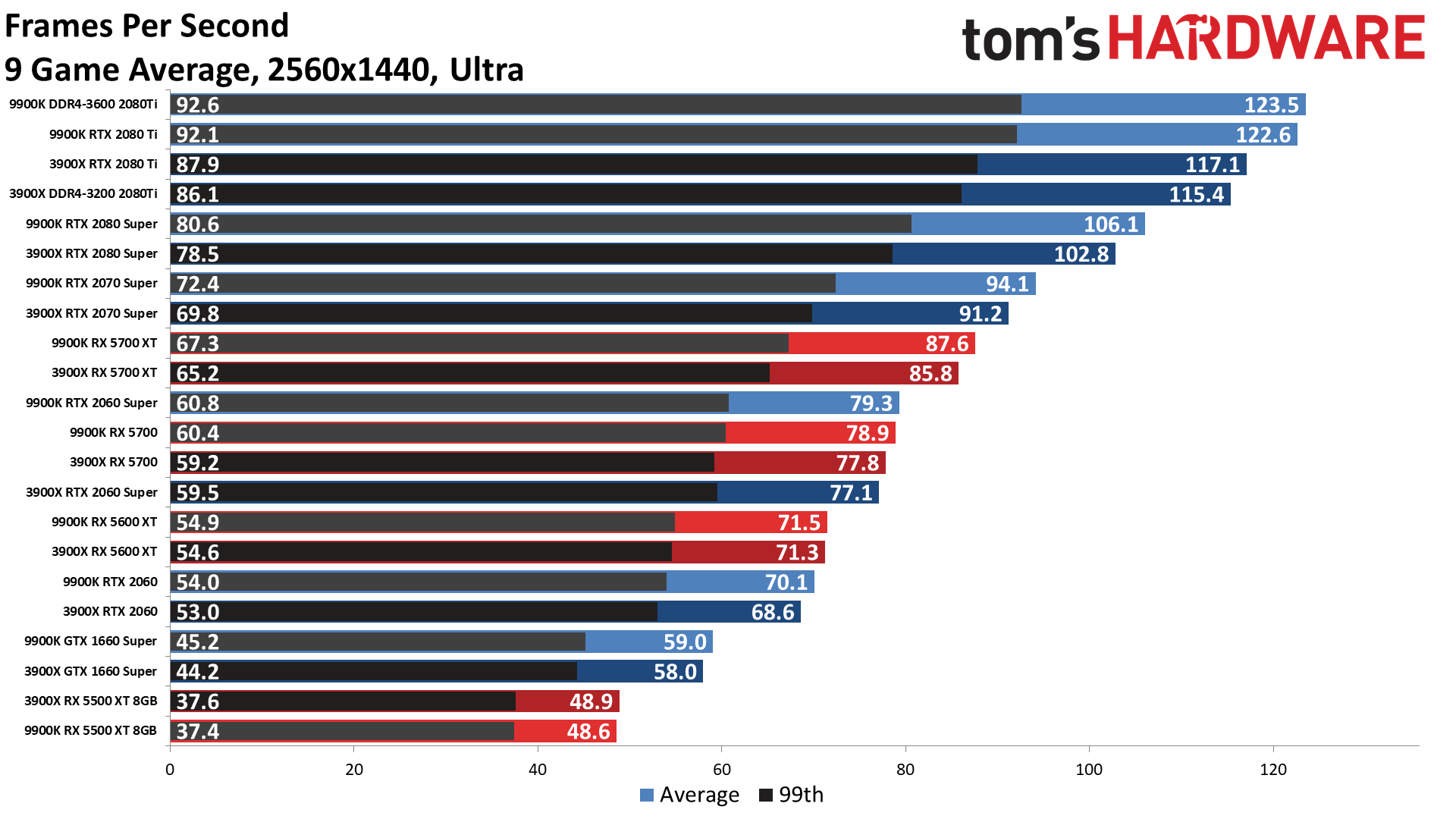

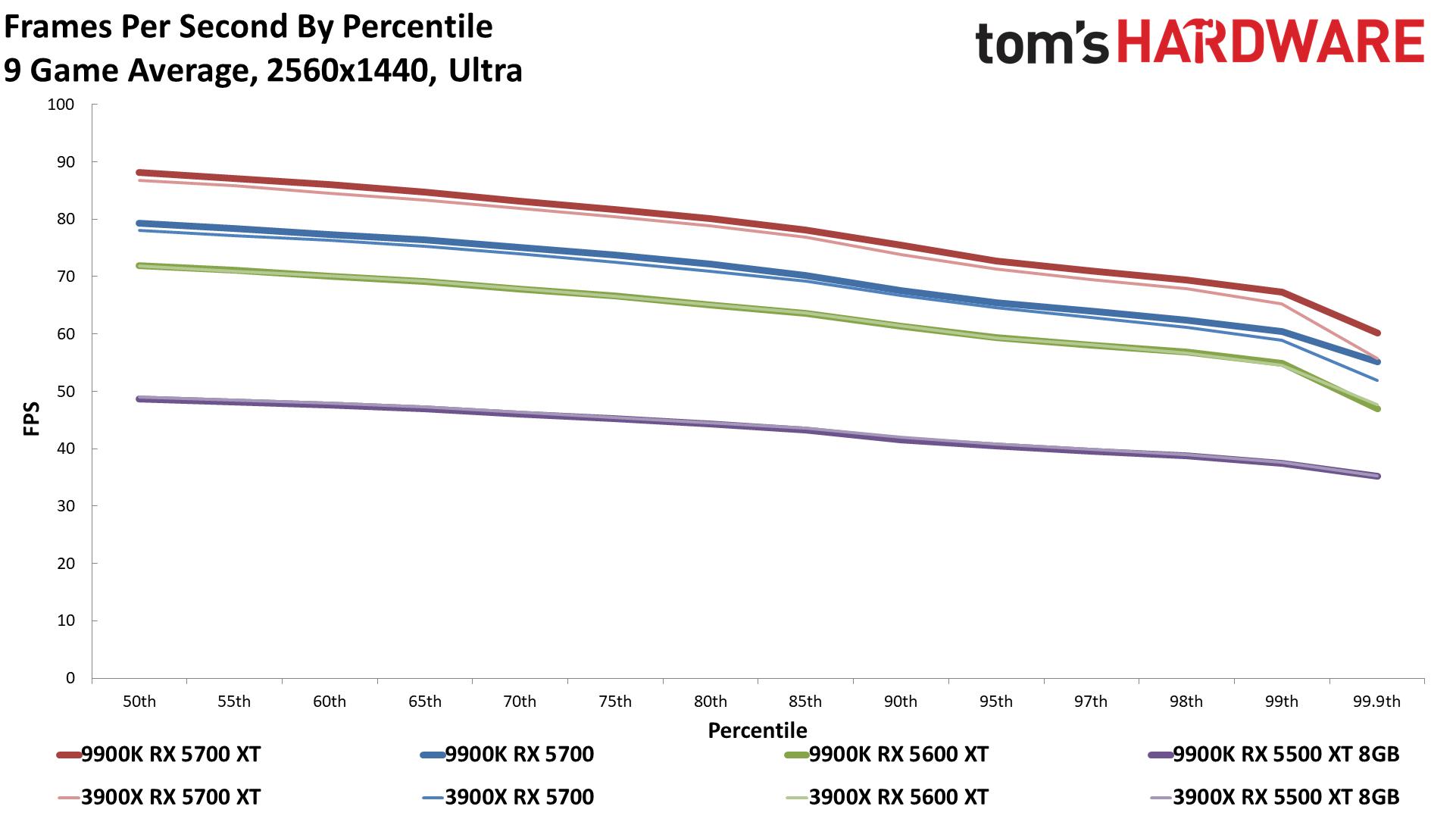

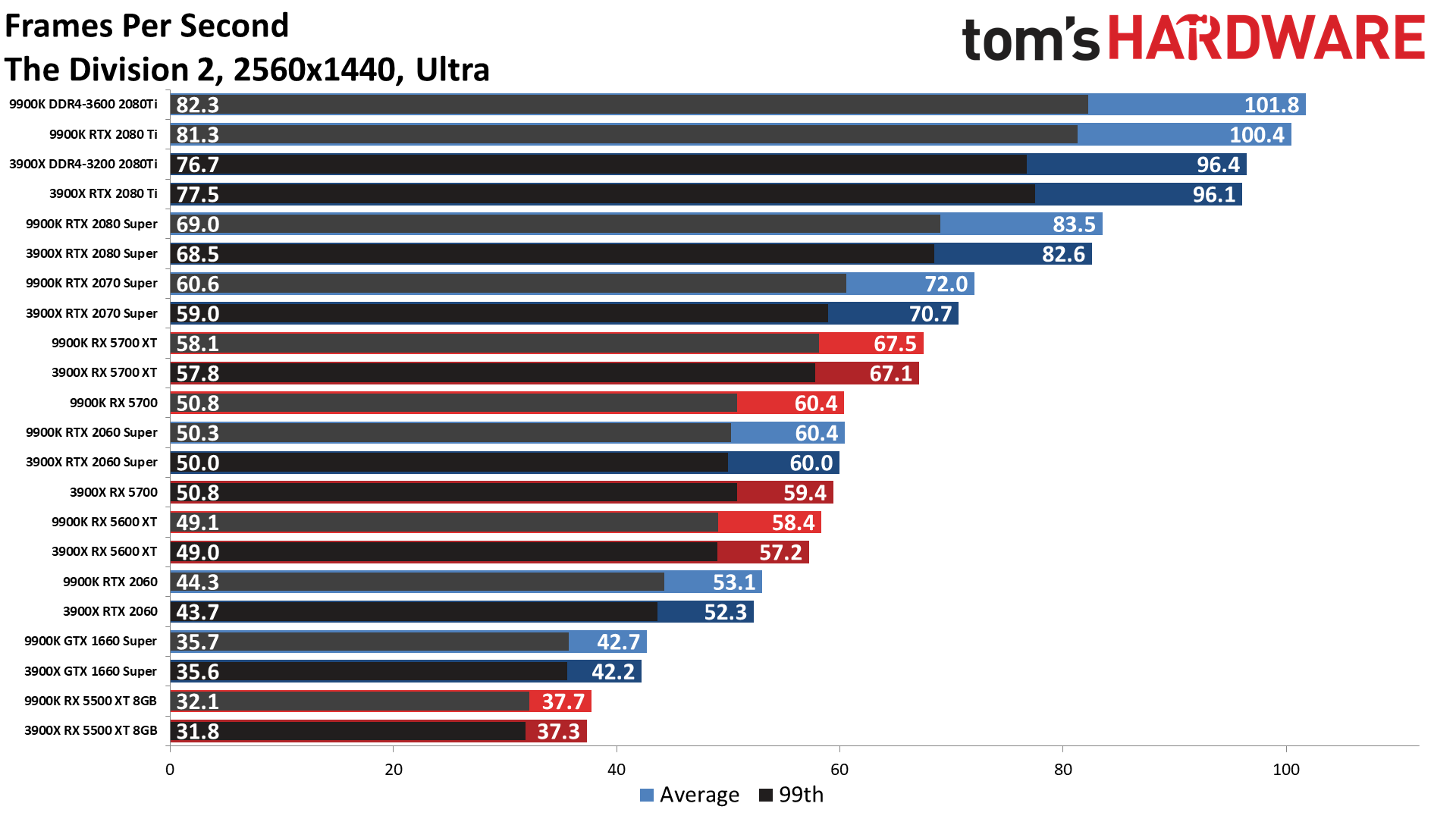

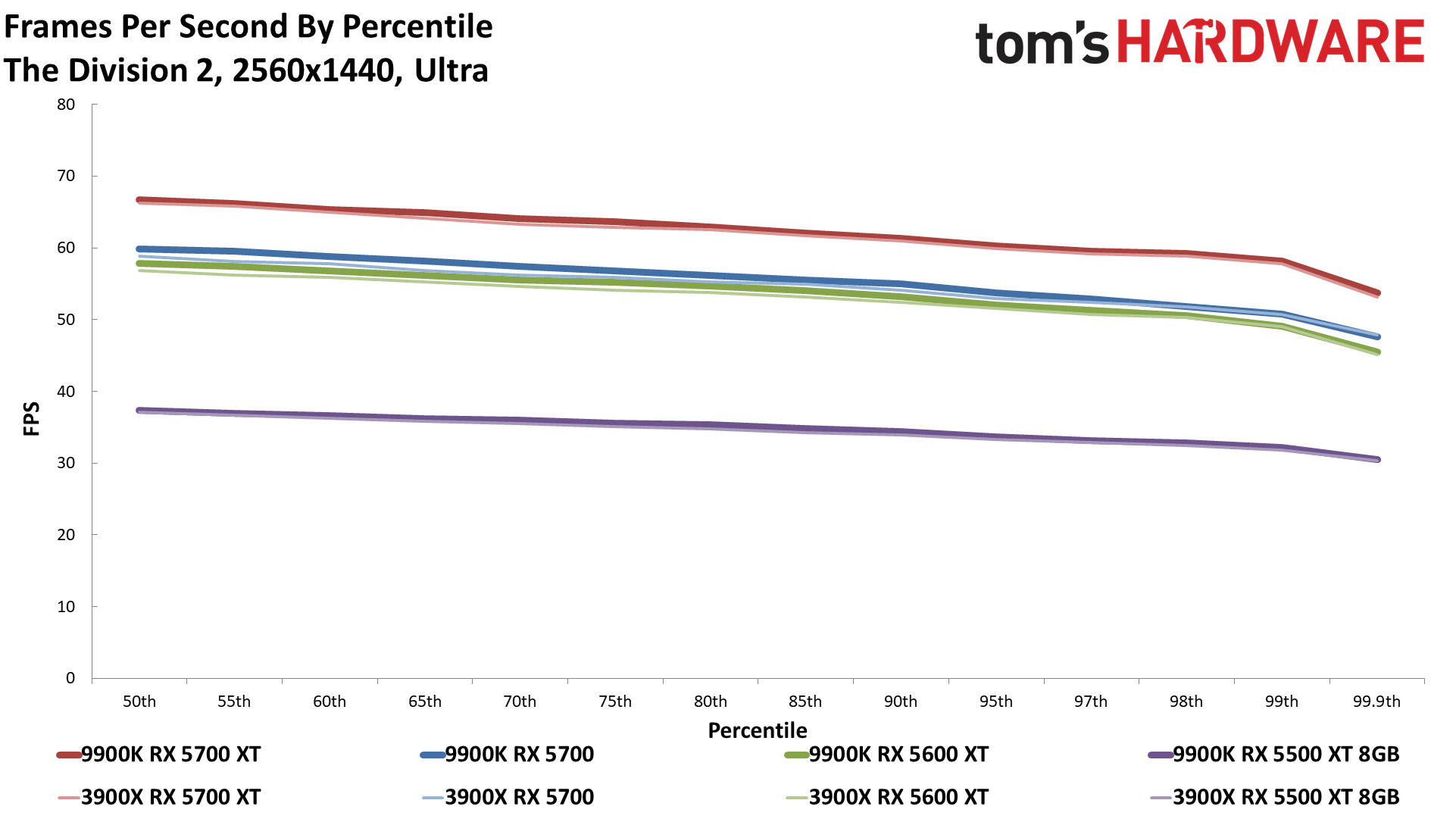

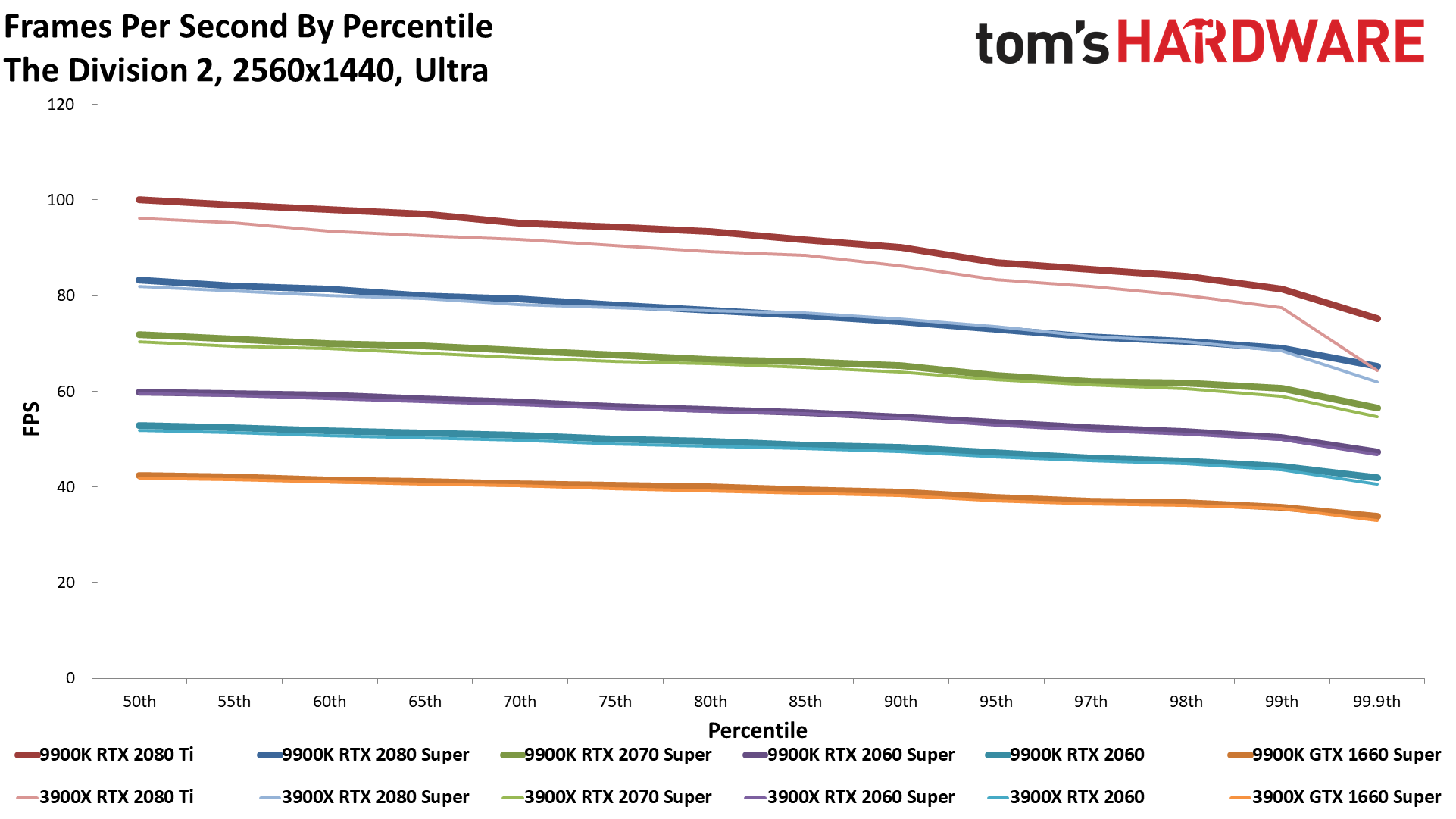

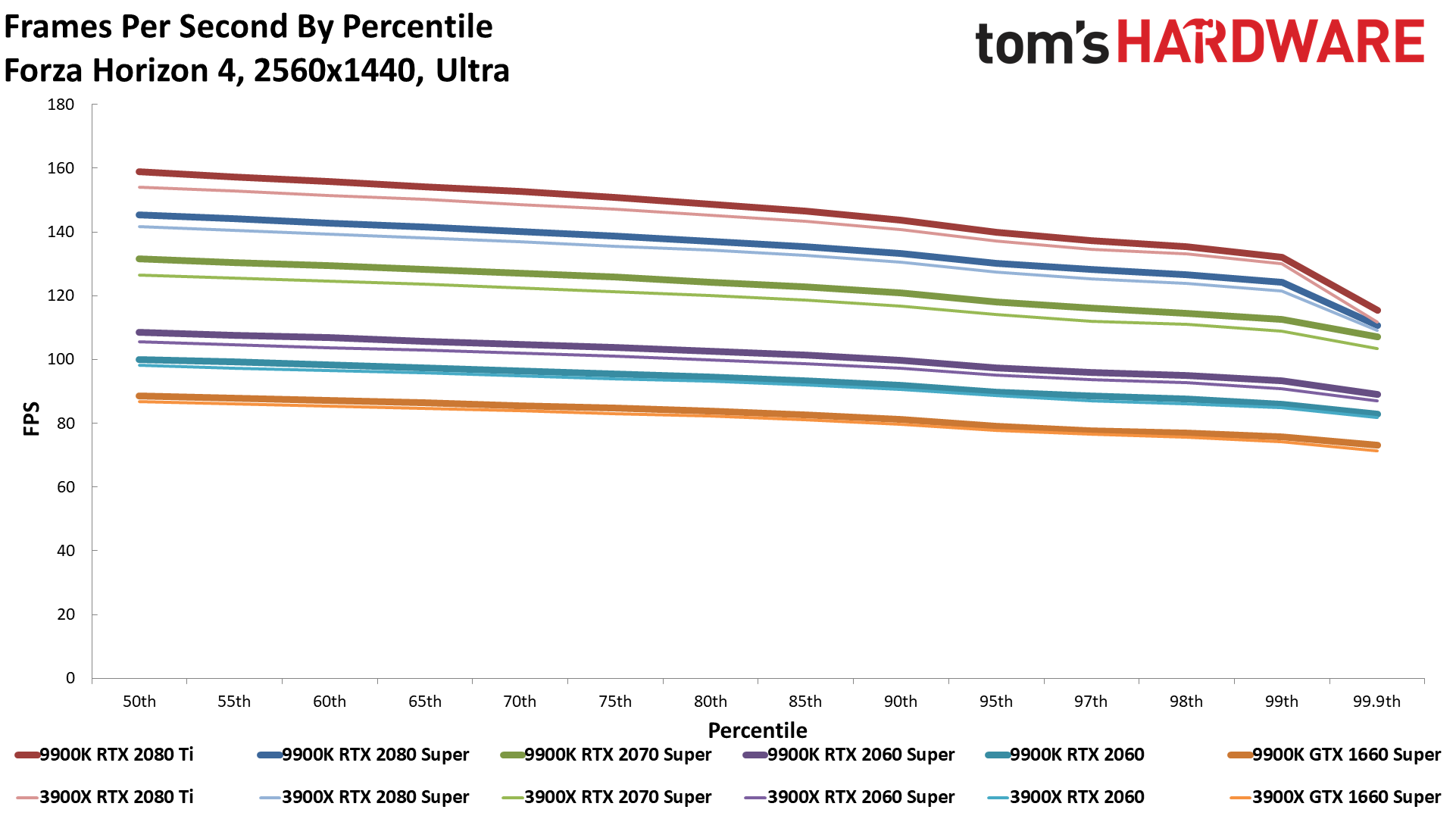

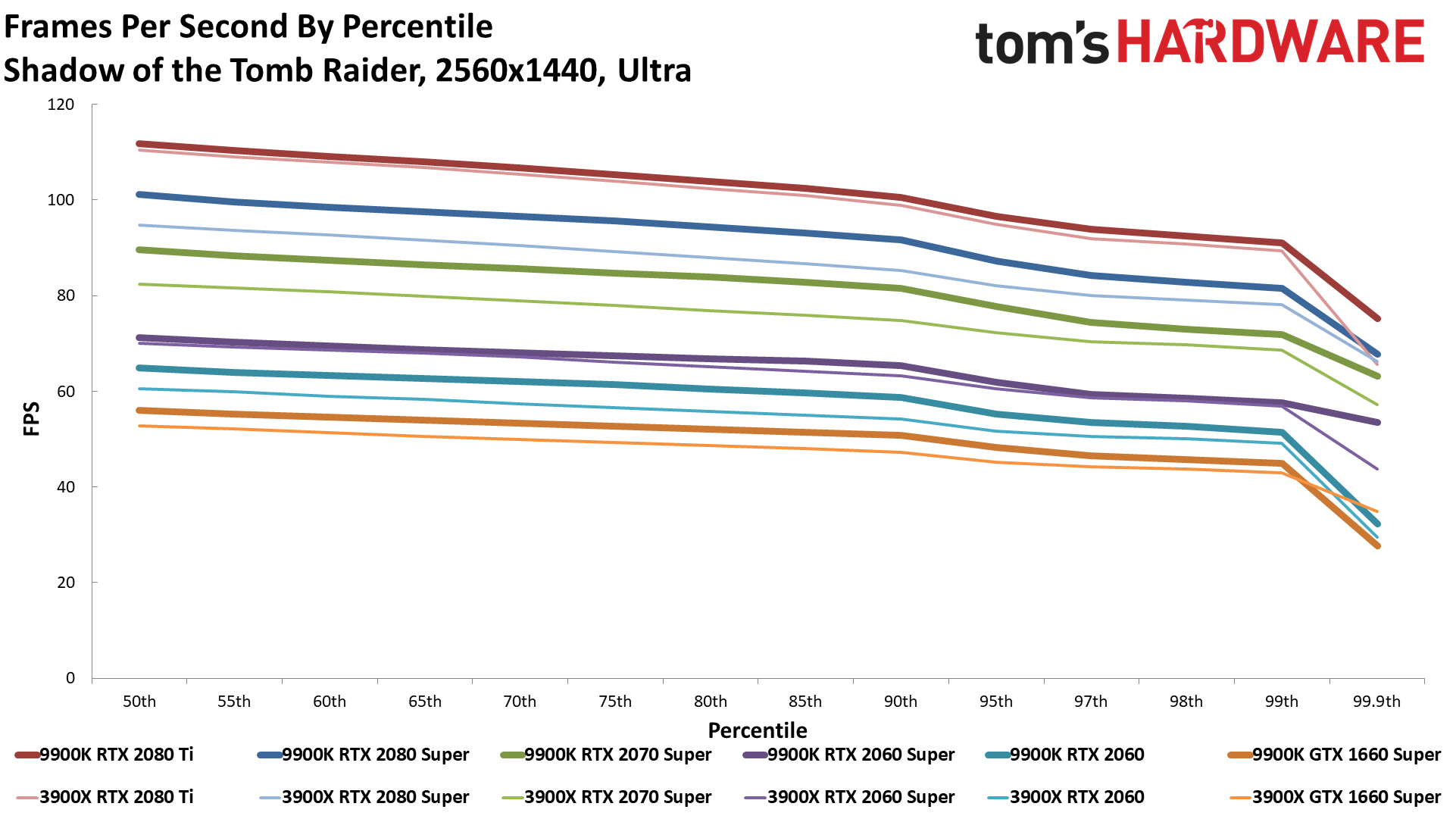

The difference between the AMD and Intel CPUs drops to less than 5% overall at 1440p ultra with the RTX 2080 Ti, and 3% or less with any of the lower priced graphics cards. And if you're wondering, a few spot checks with a Ryzen 5 3600 are within 3% of the 3900X as well, so you can certainly go lower on the CPU without giving up much in the way of 1440p ultra gaming performance. Of course there are faster GPUs coming, which will potentially shift the bottleneck back to the CPUs even at higher resolutions and settings, but we'll have to wait and see what actually happens when the new graphics cards arrive.

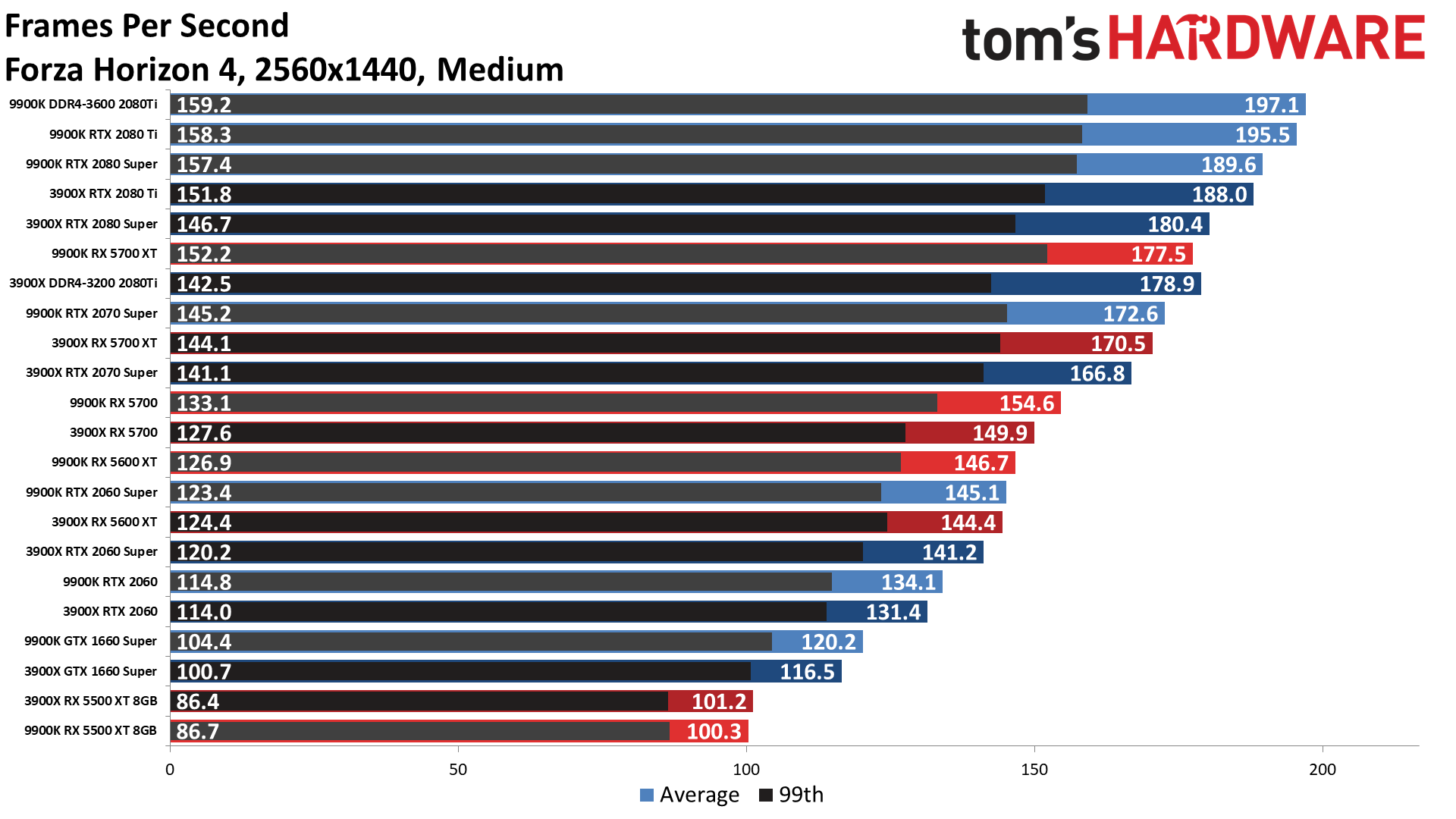

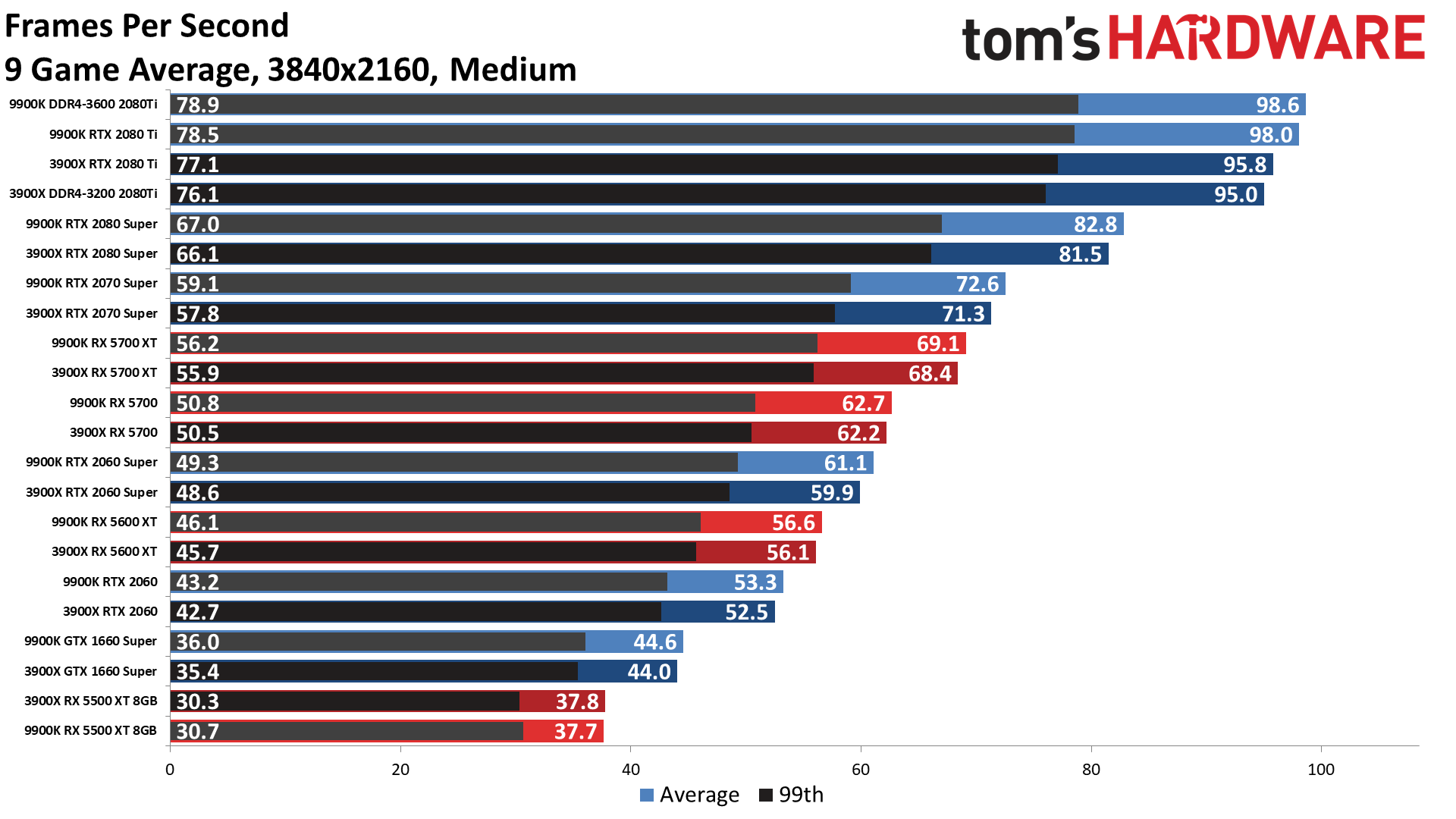

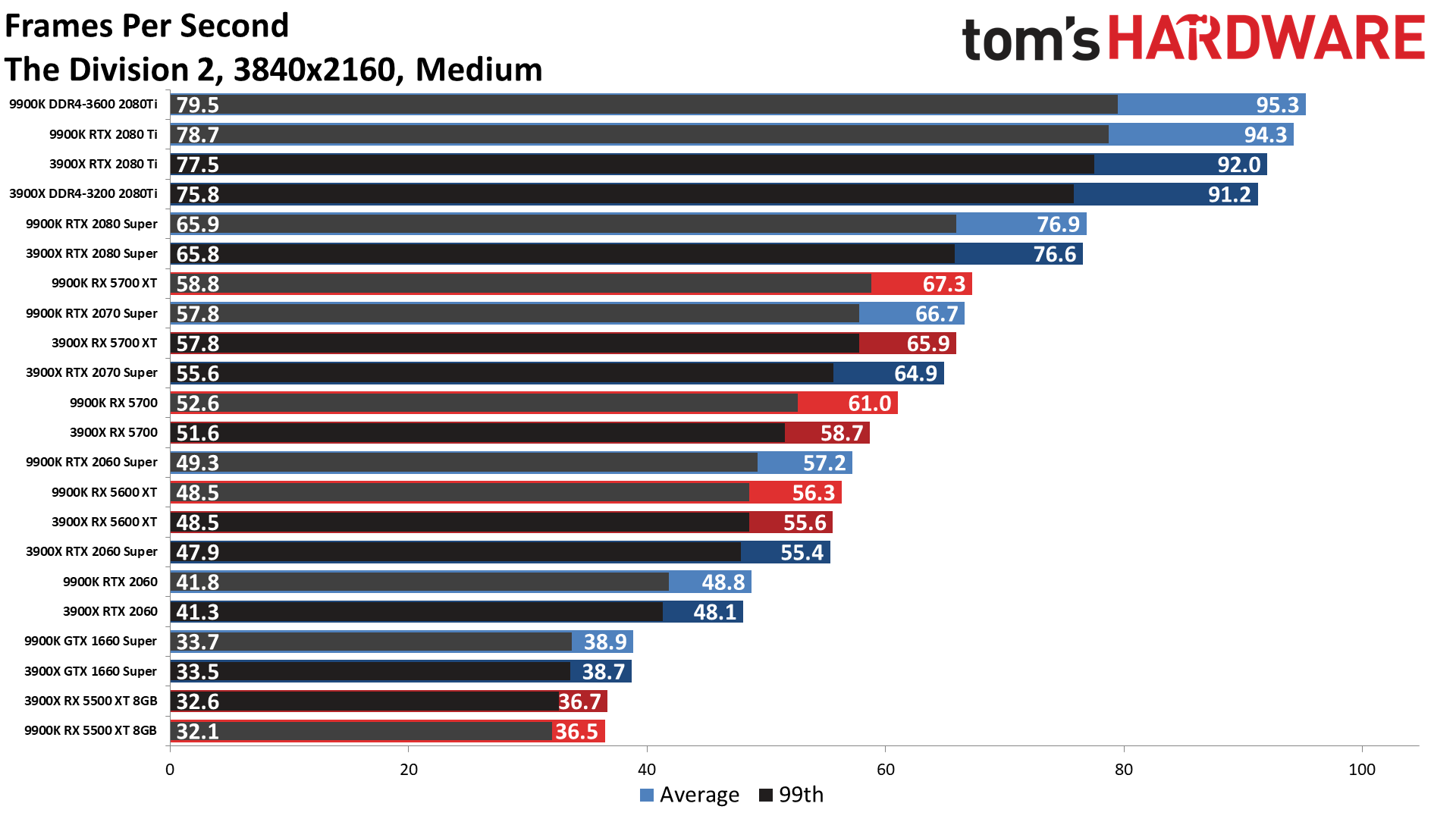

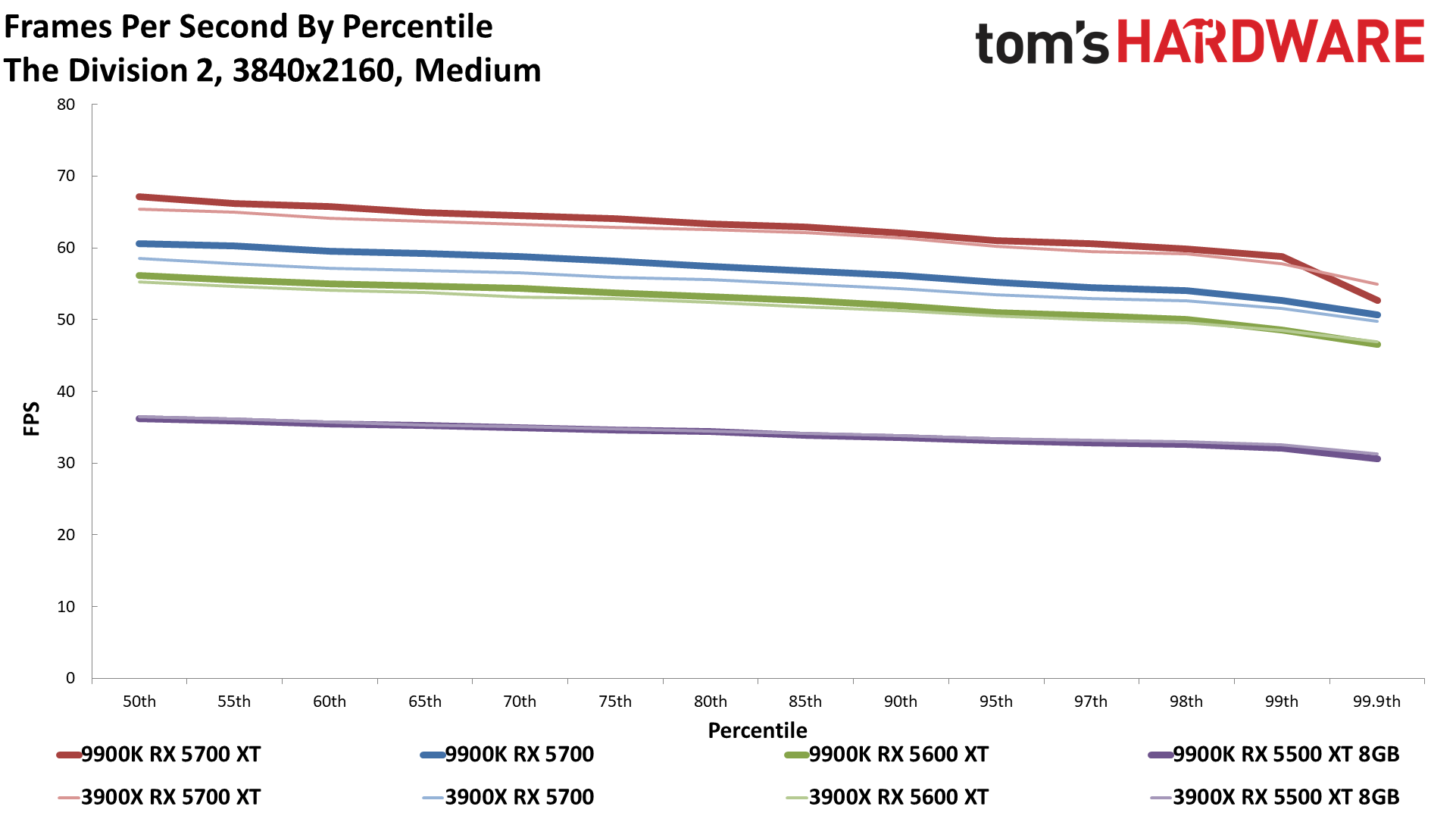

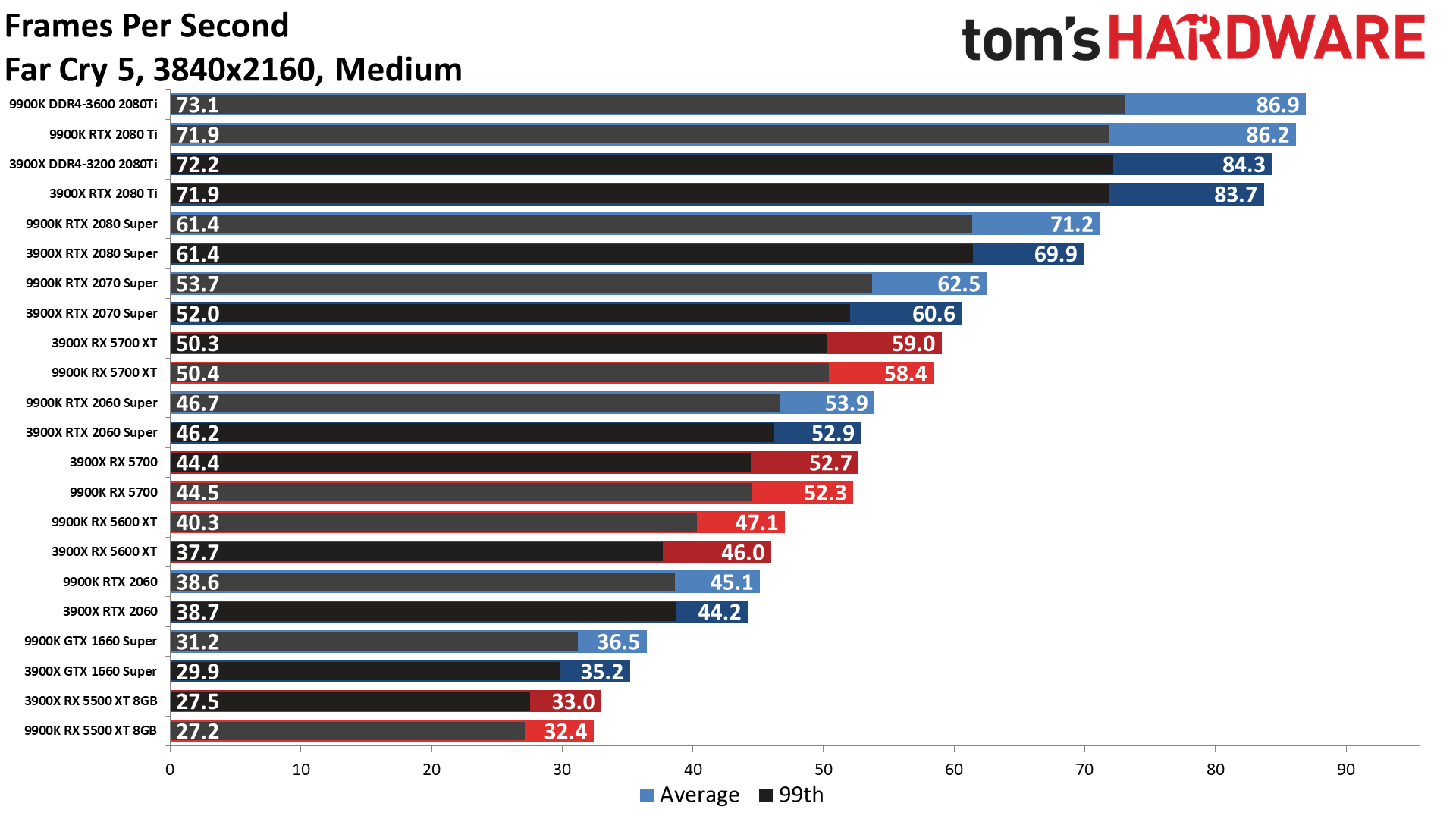

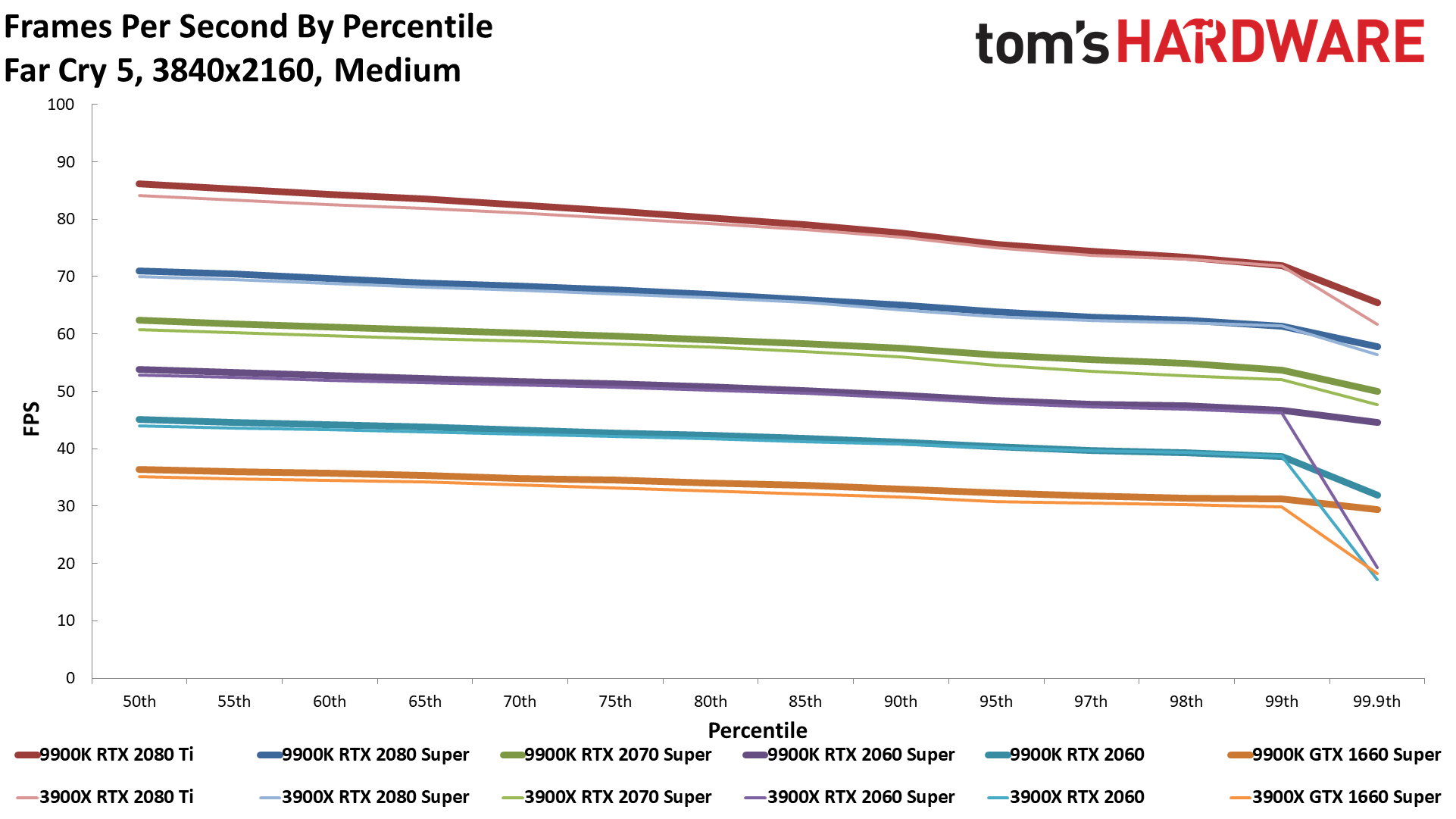

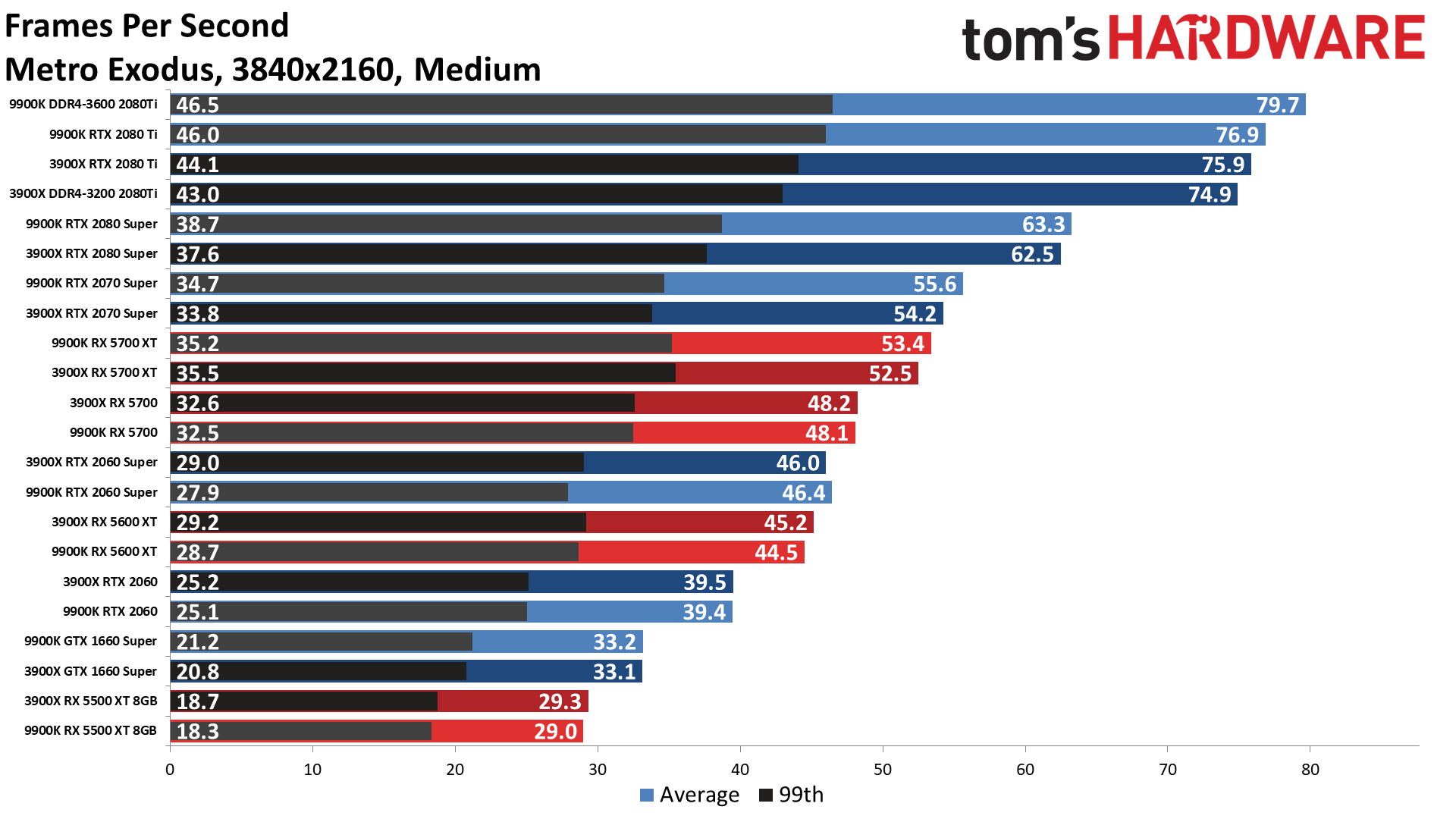

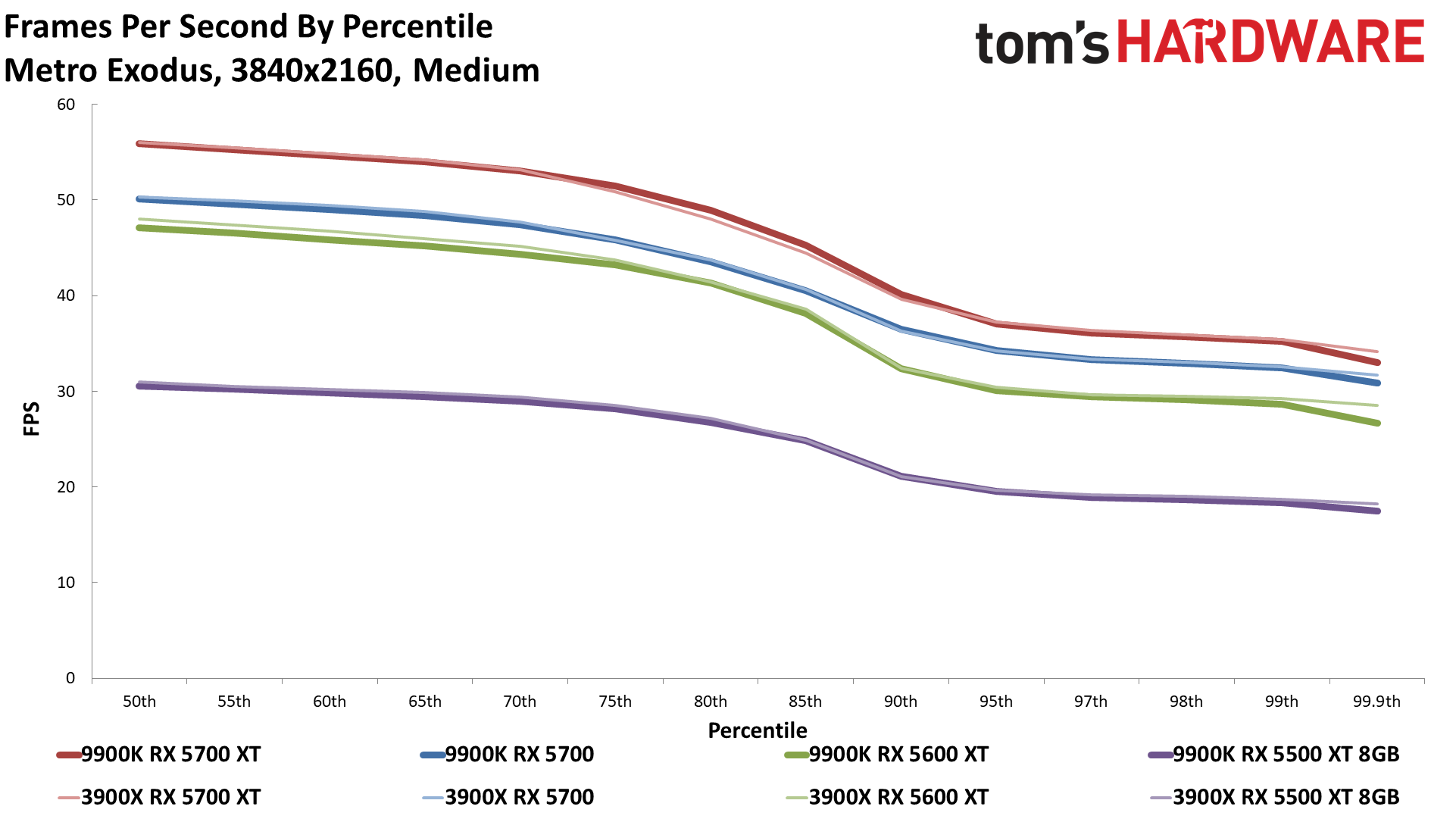

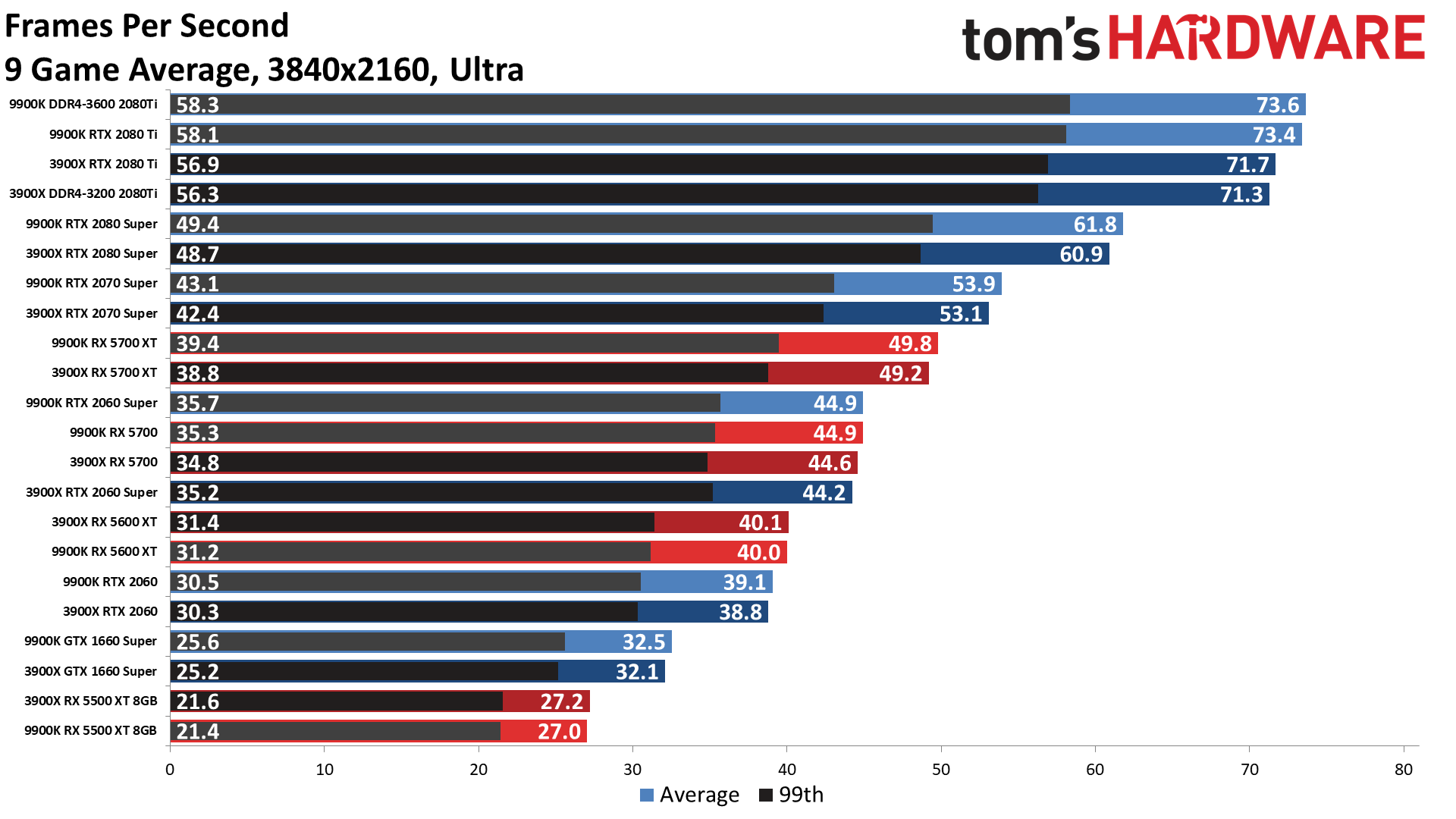

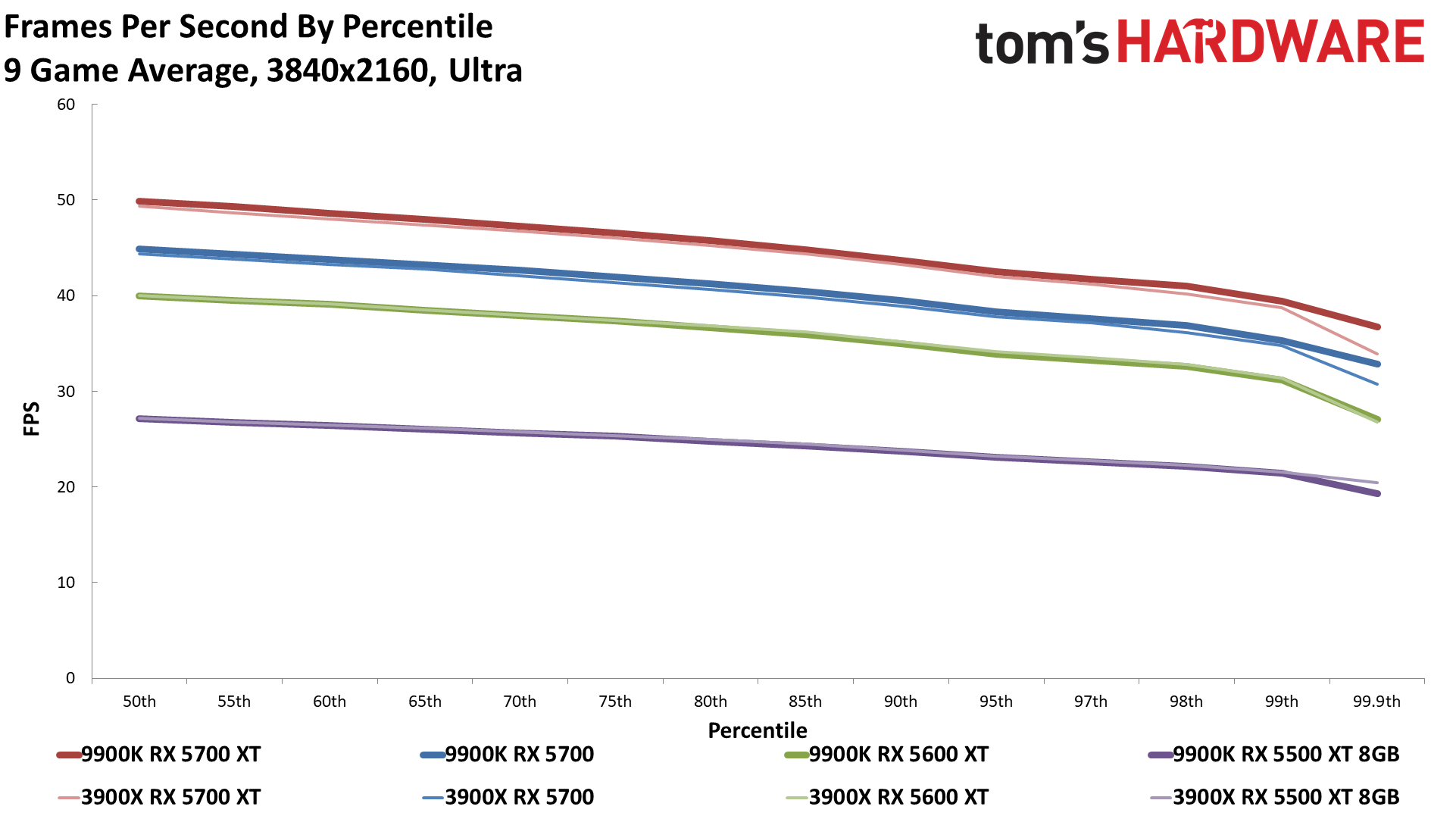

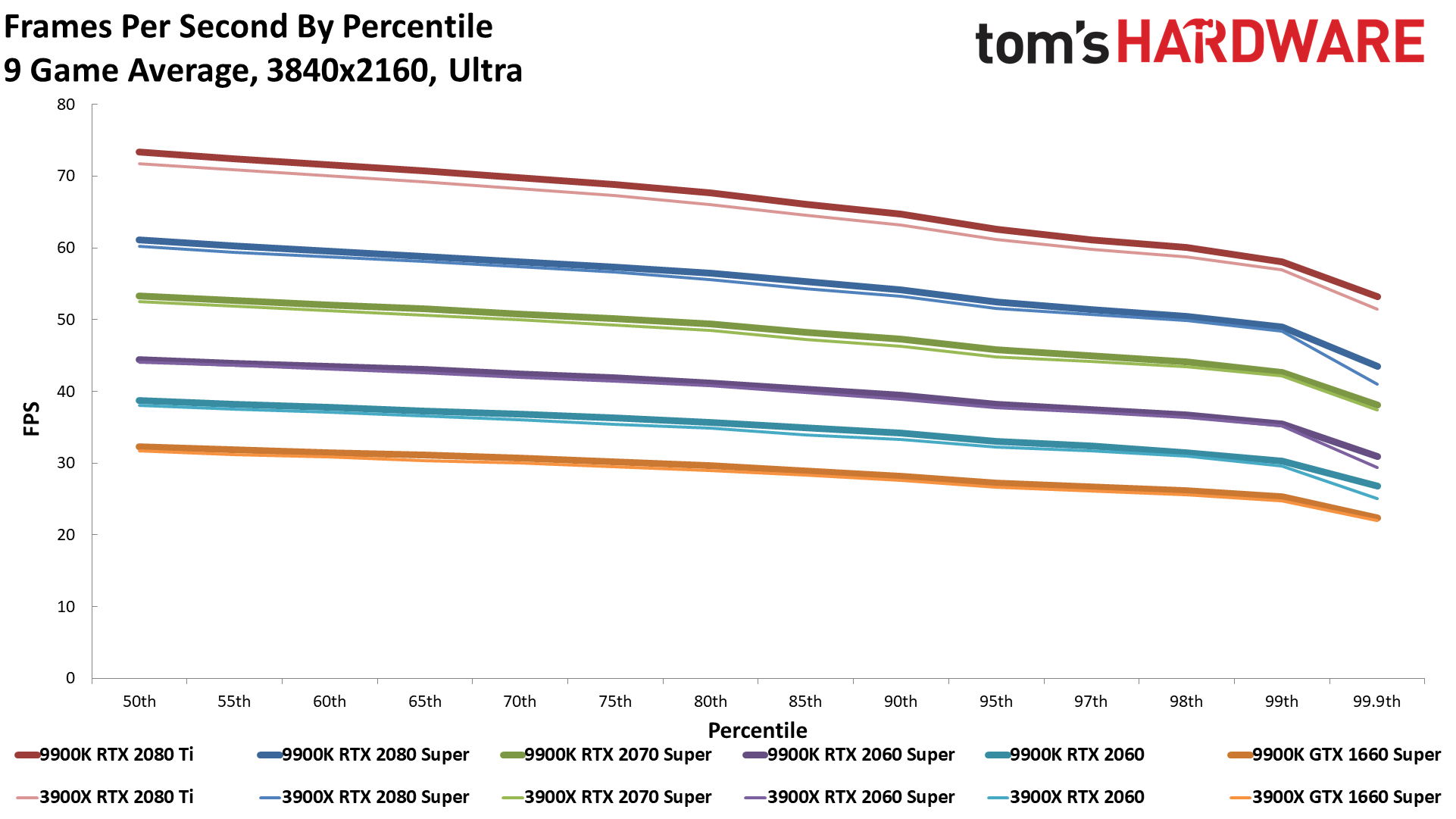

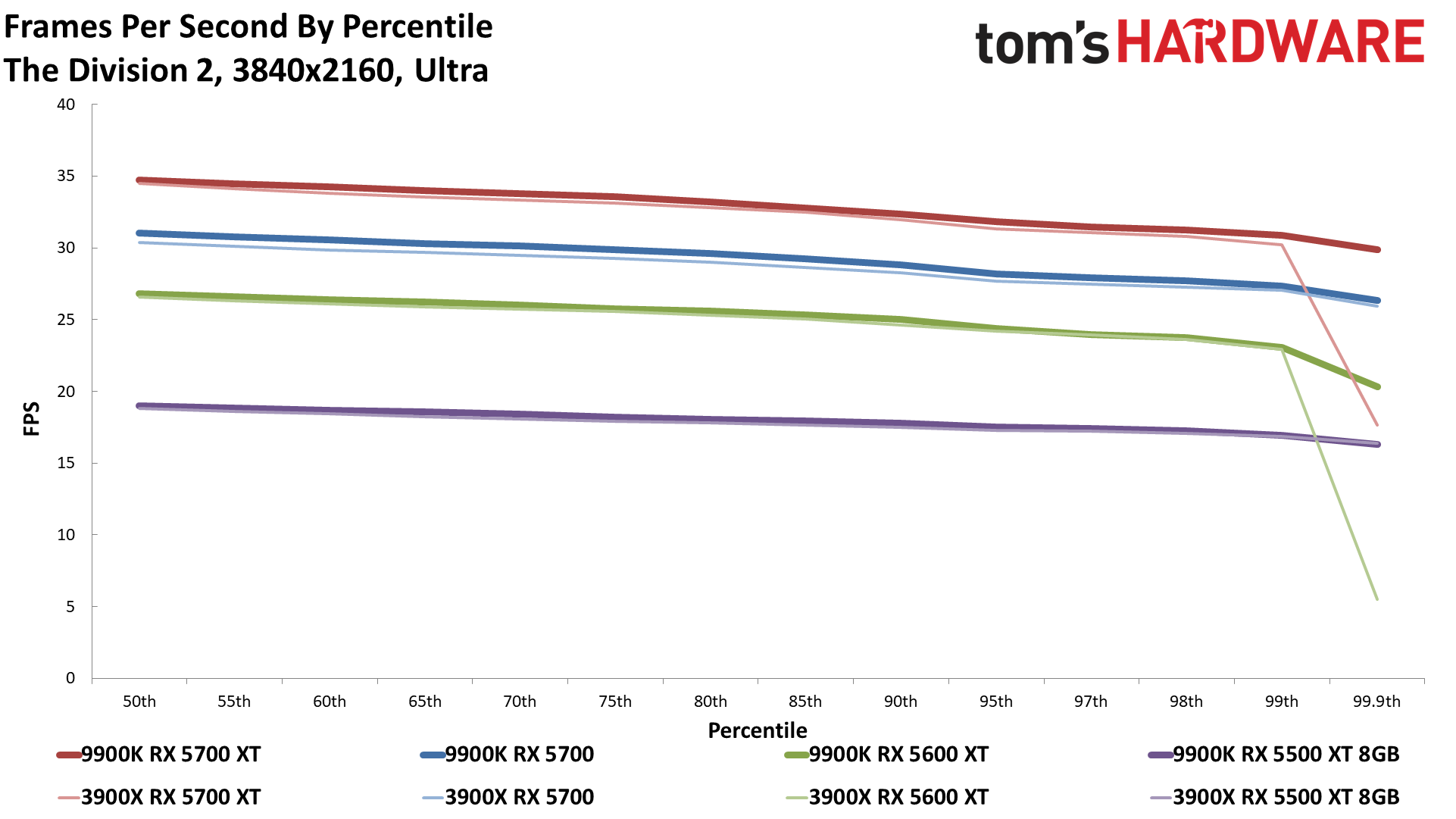

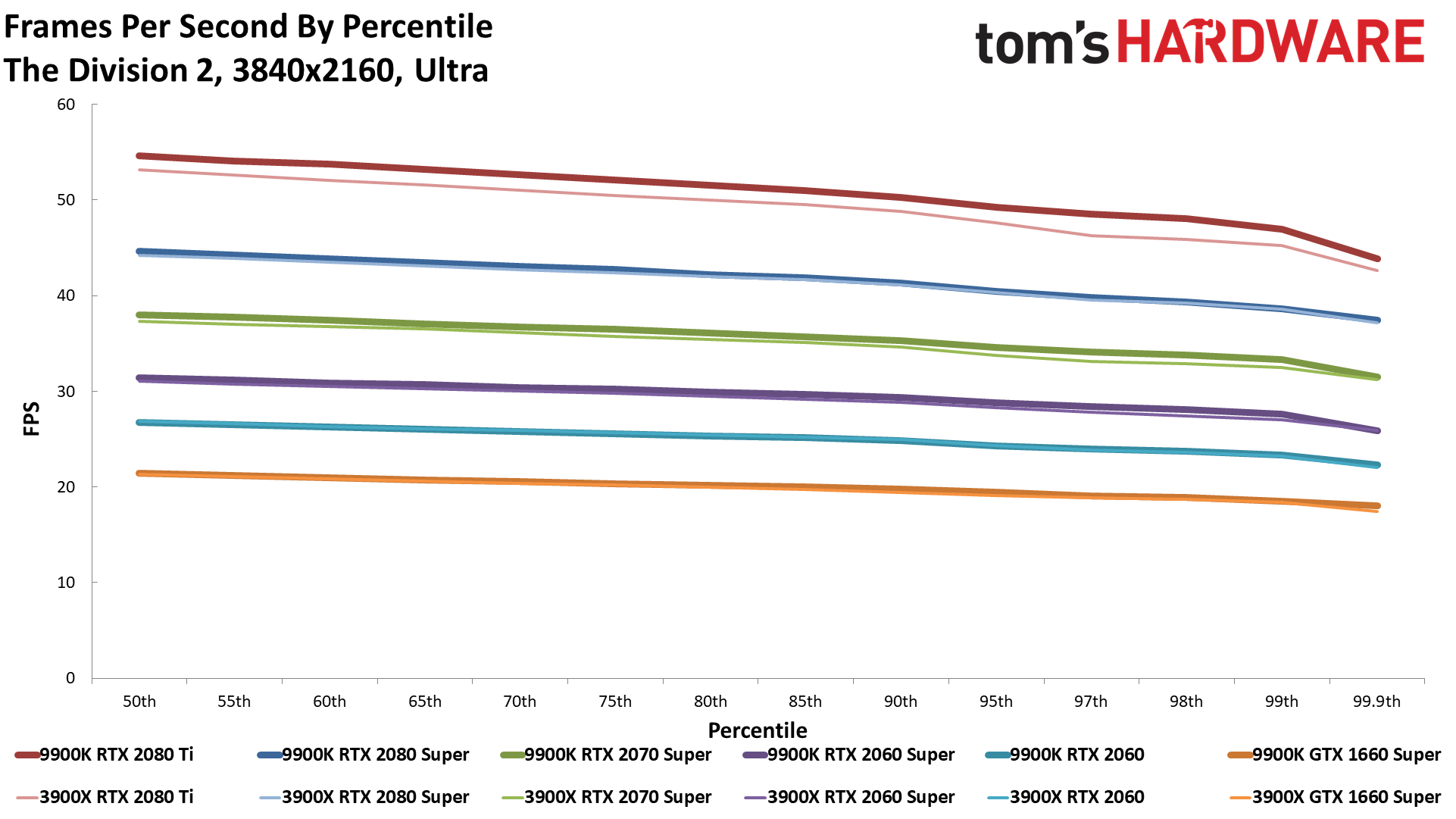

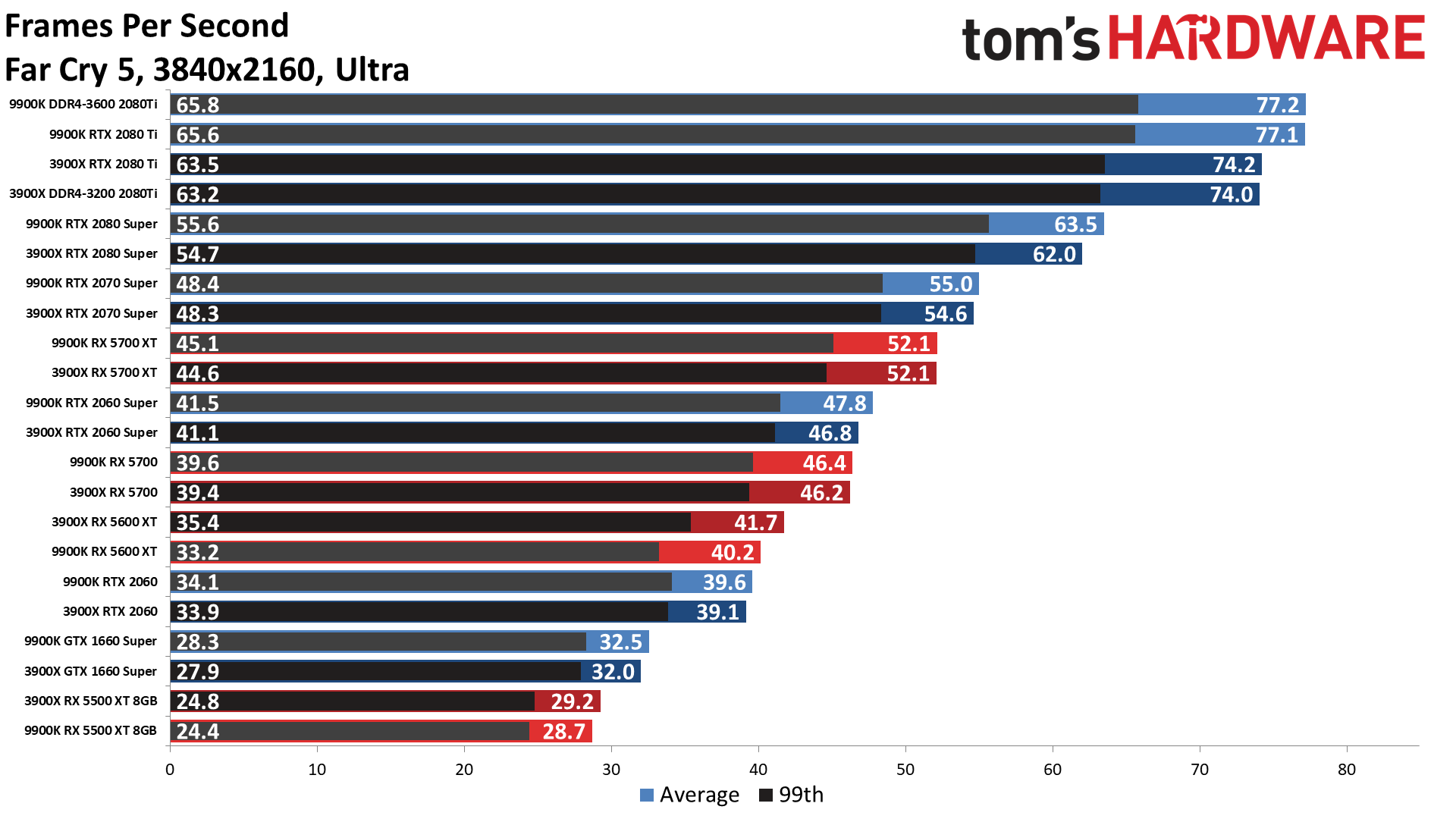

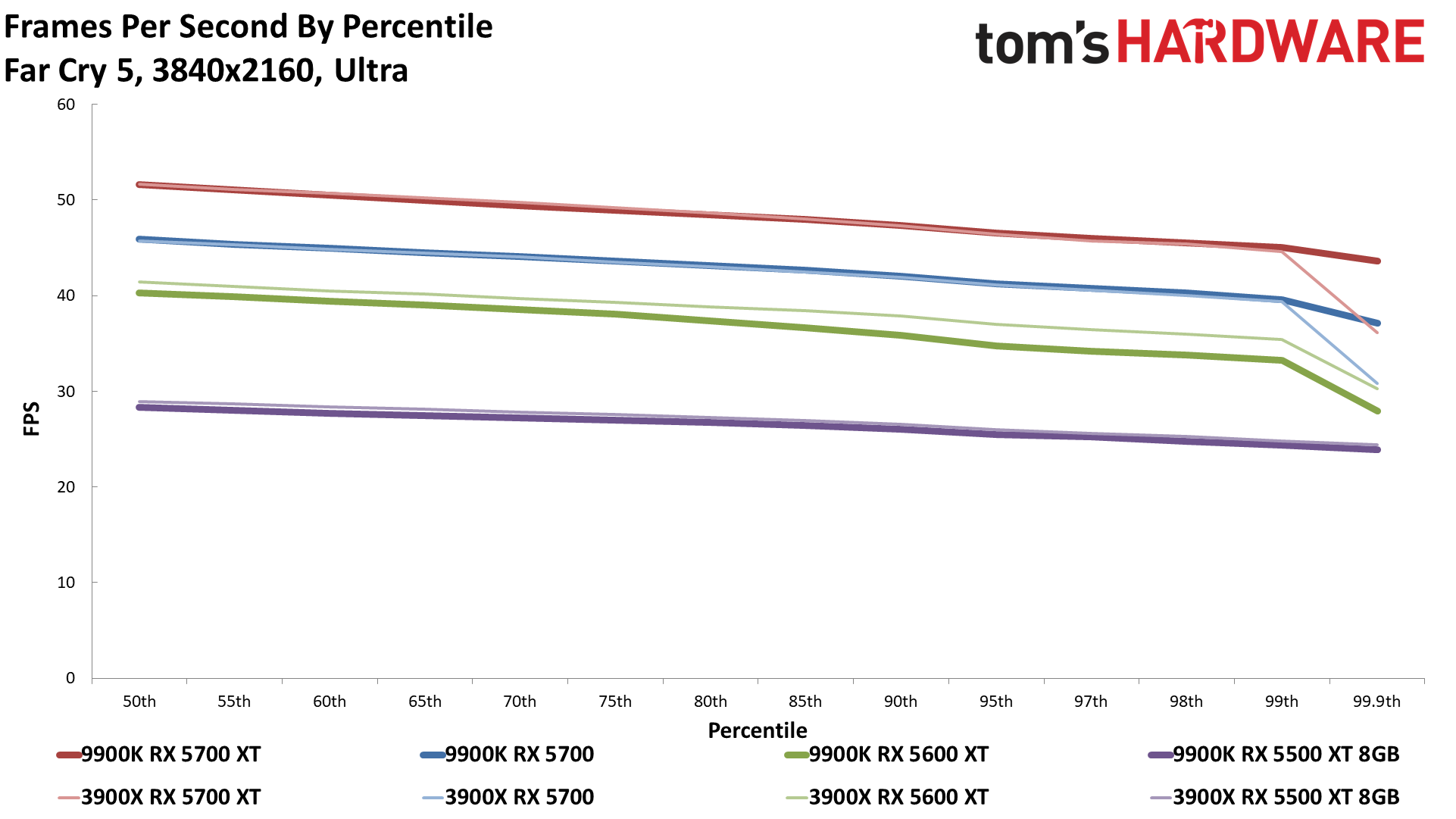

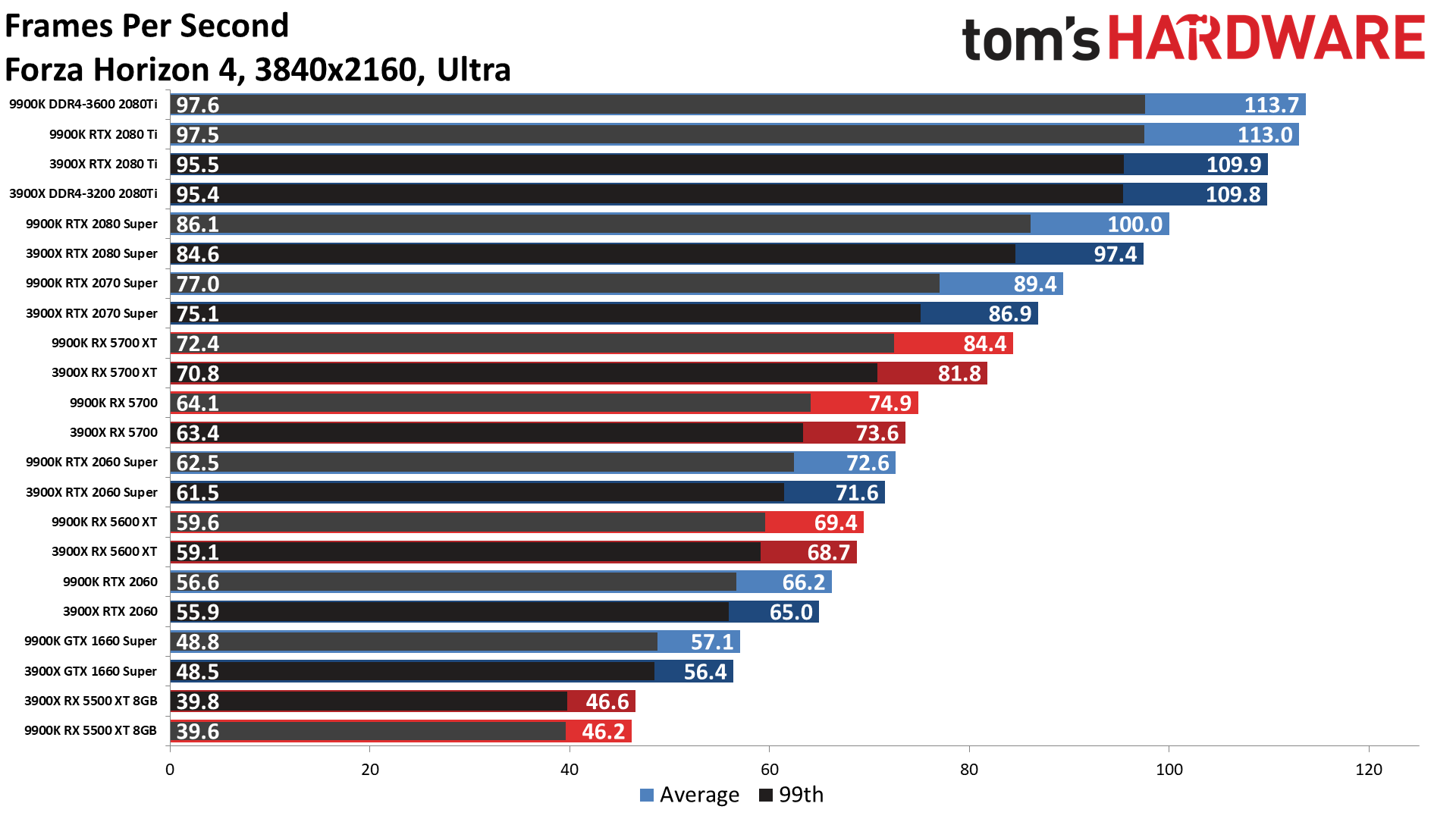

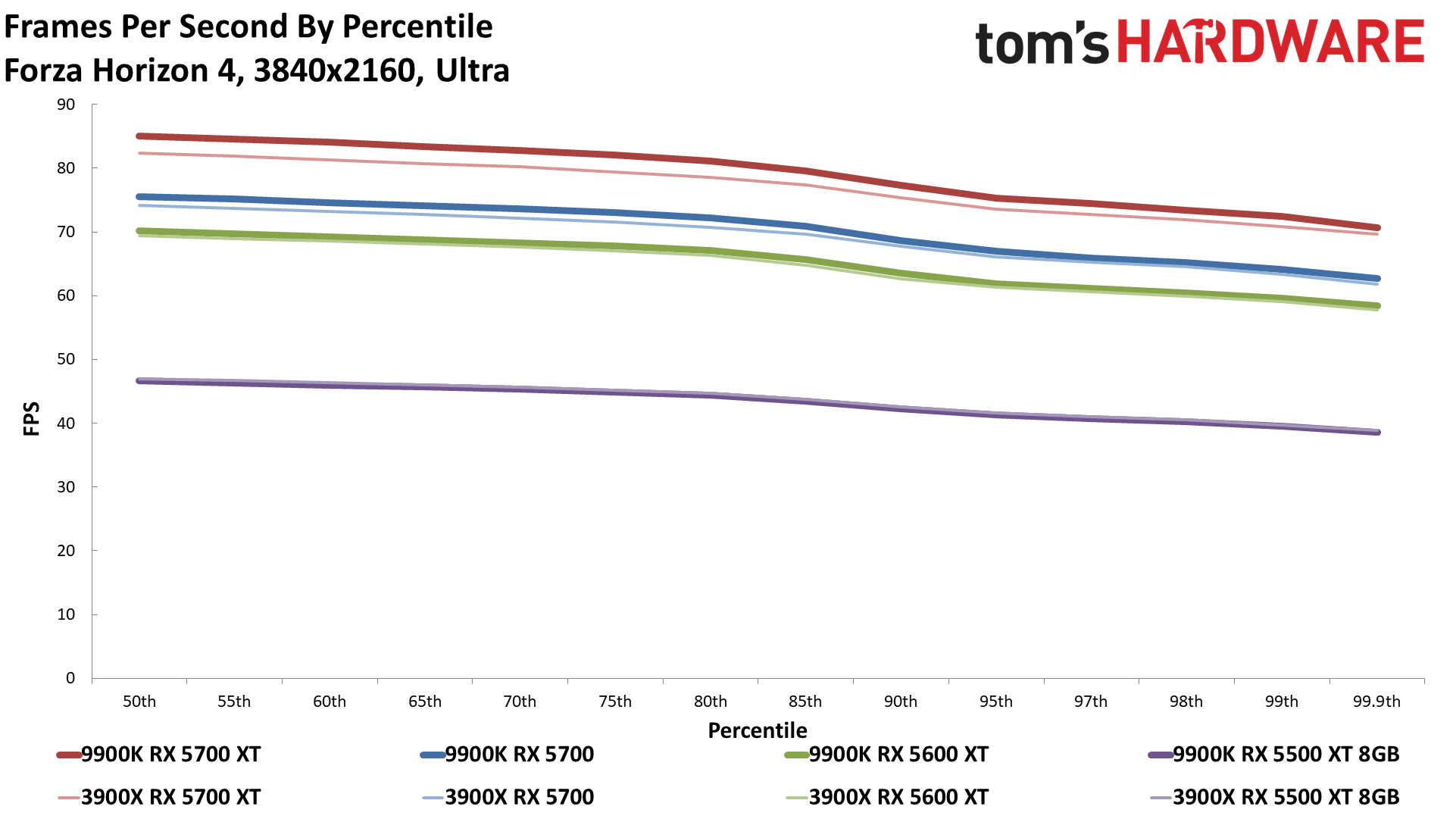

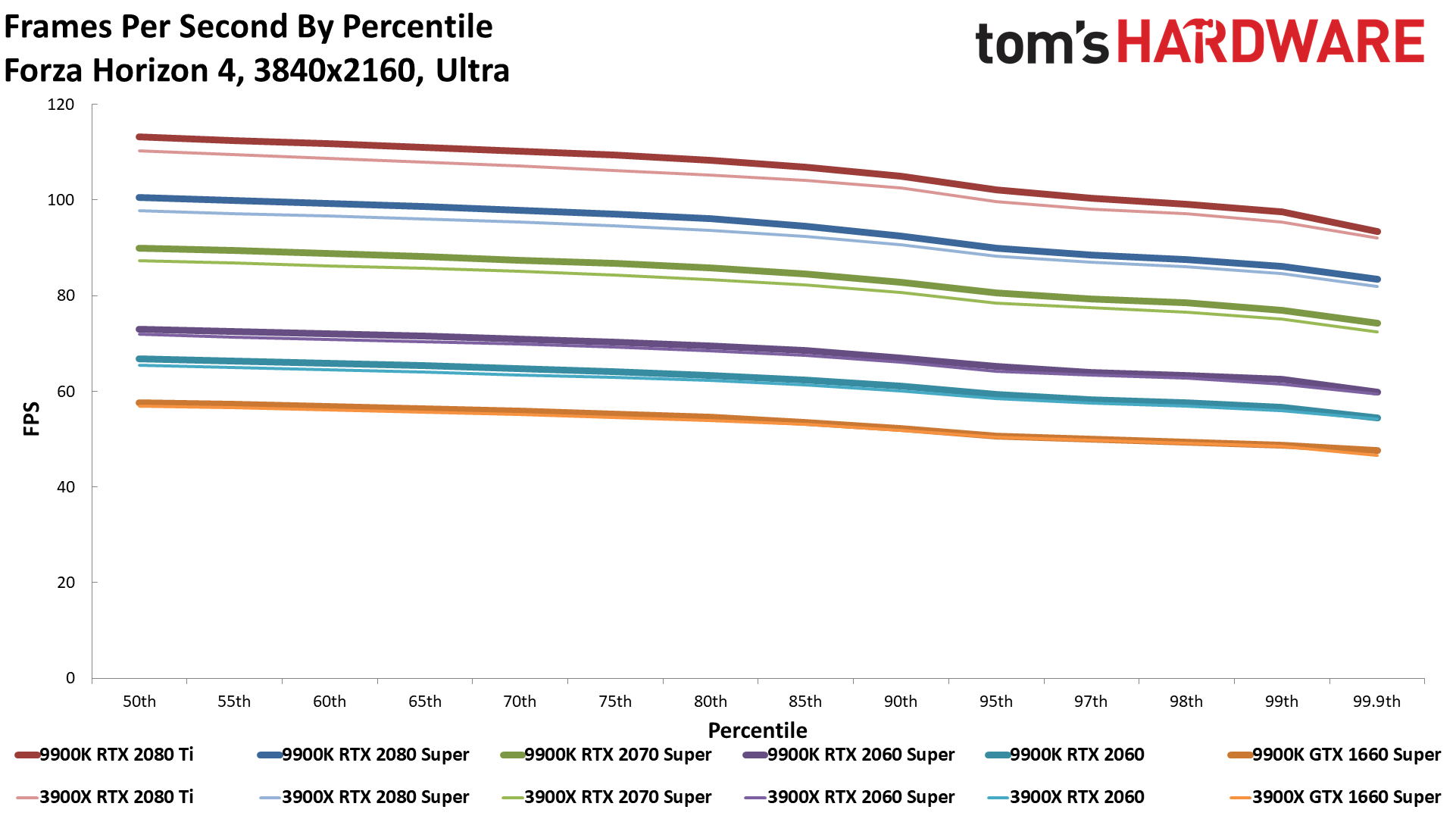

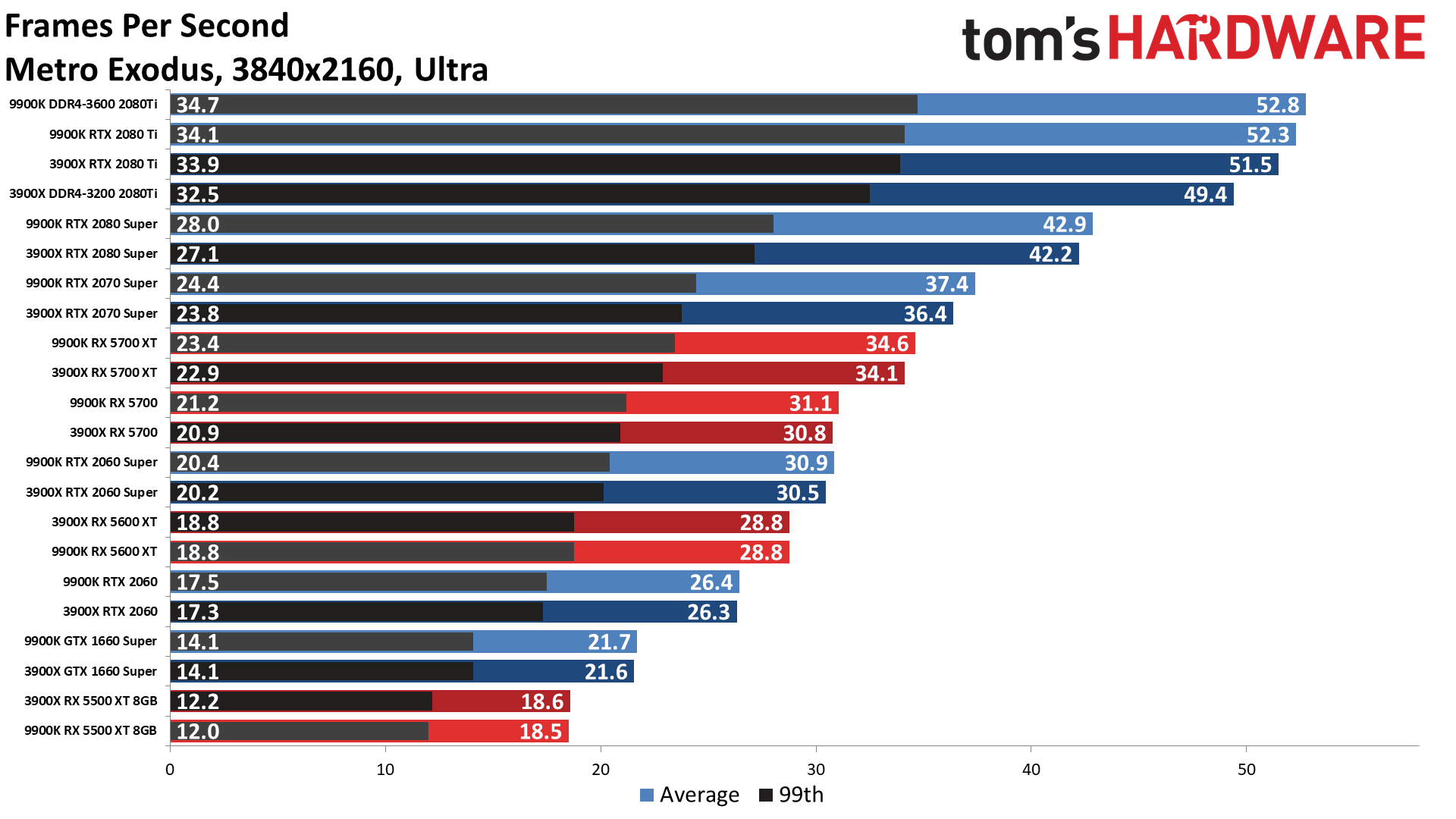

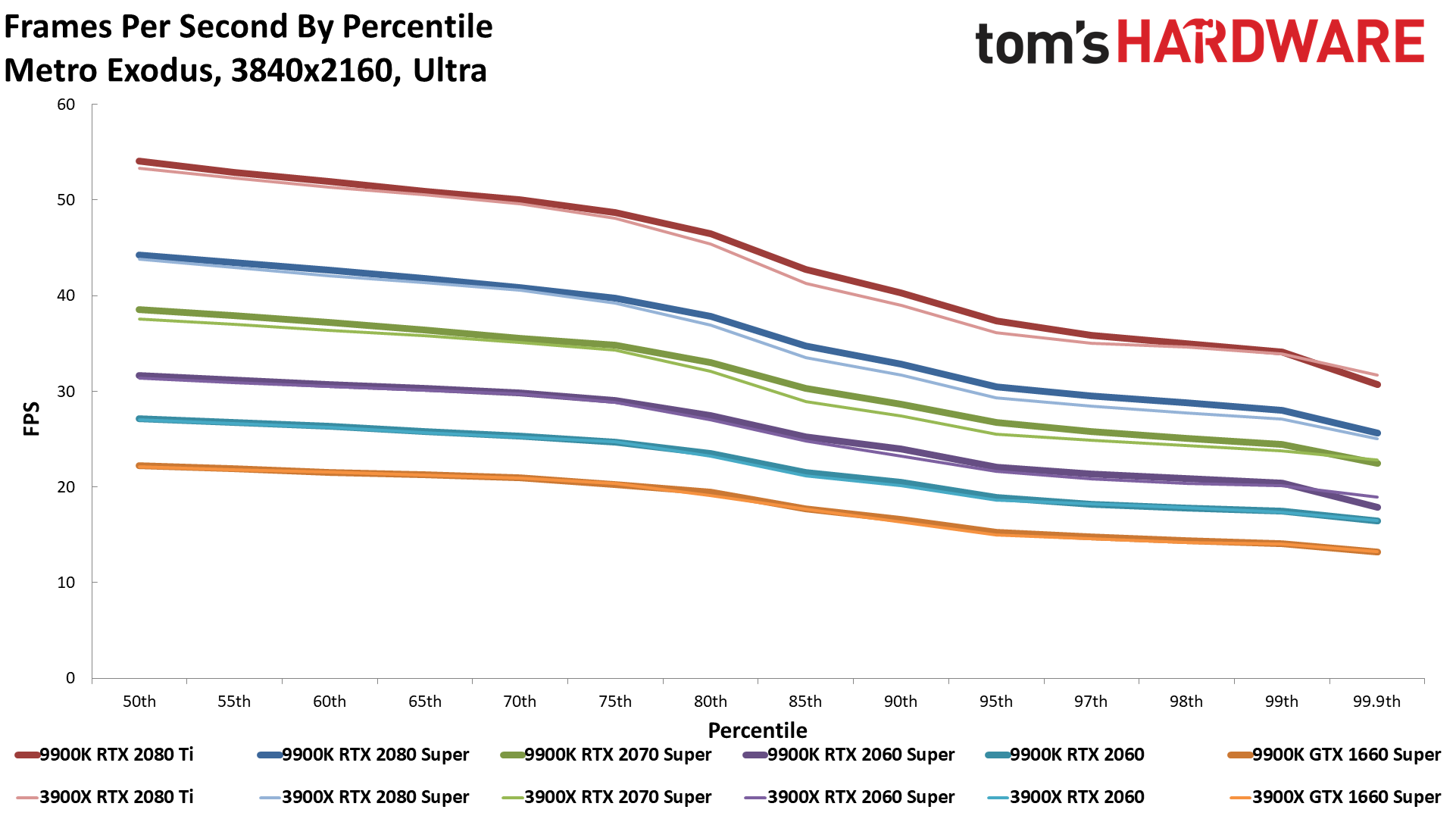

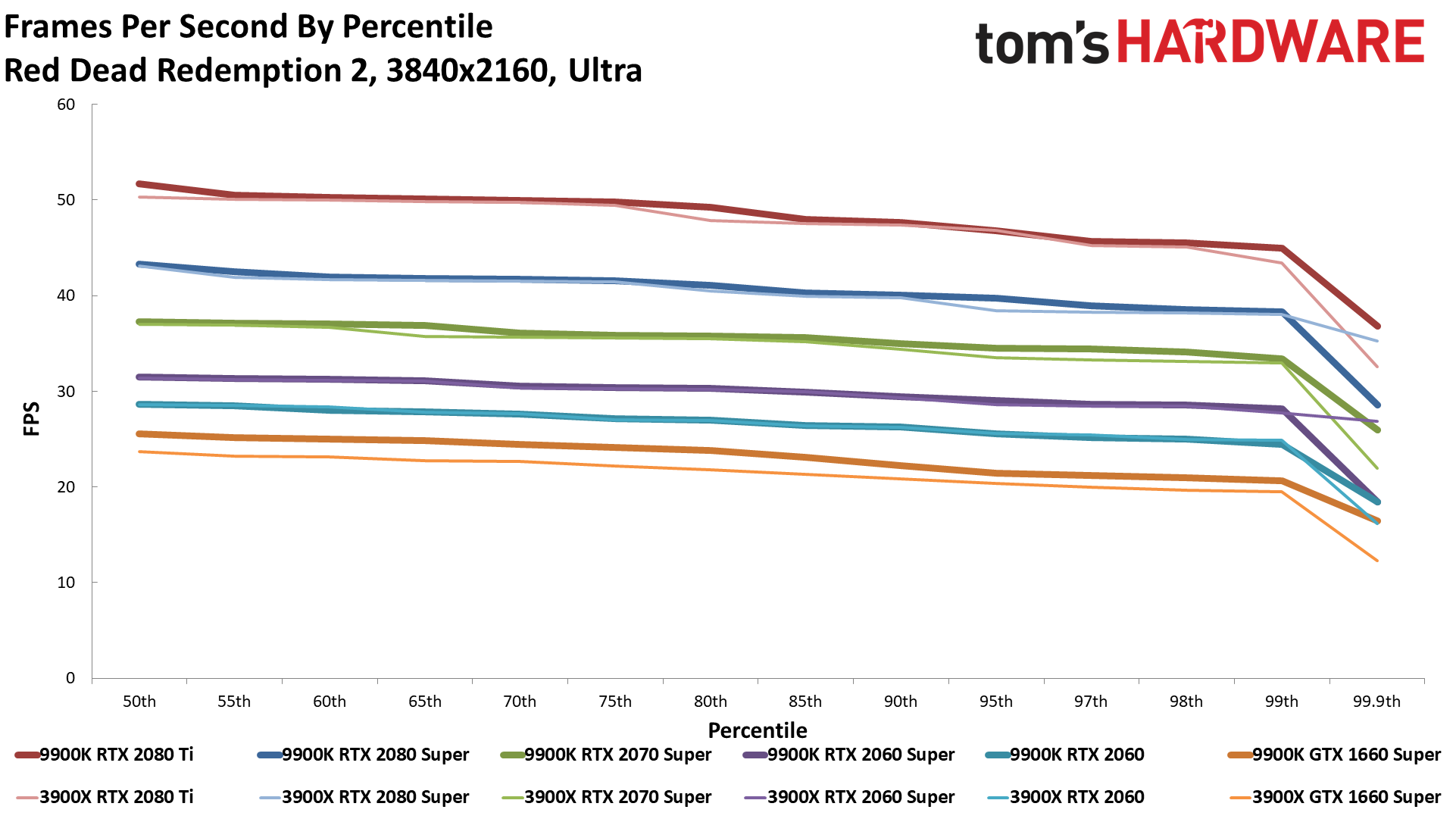

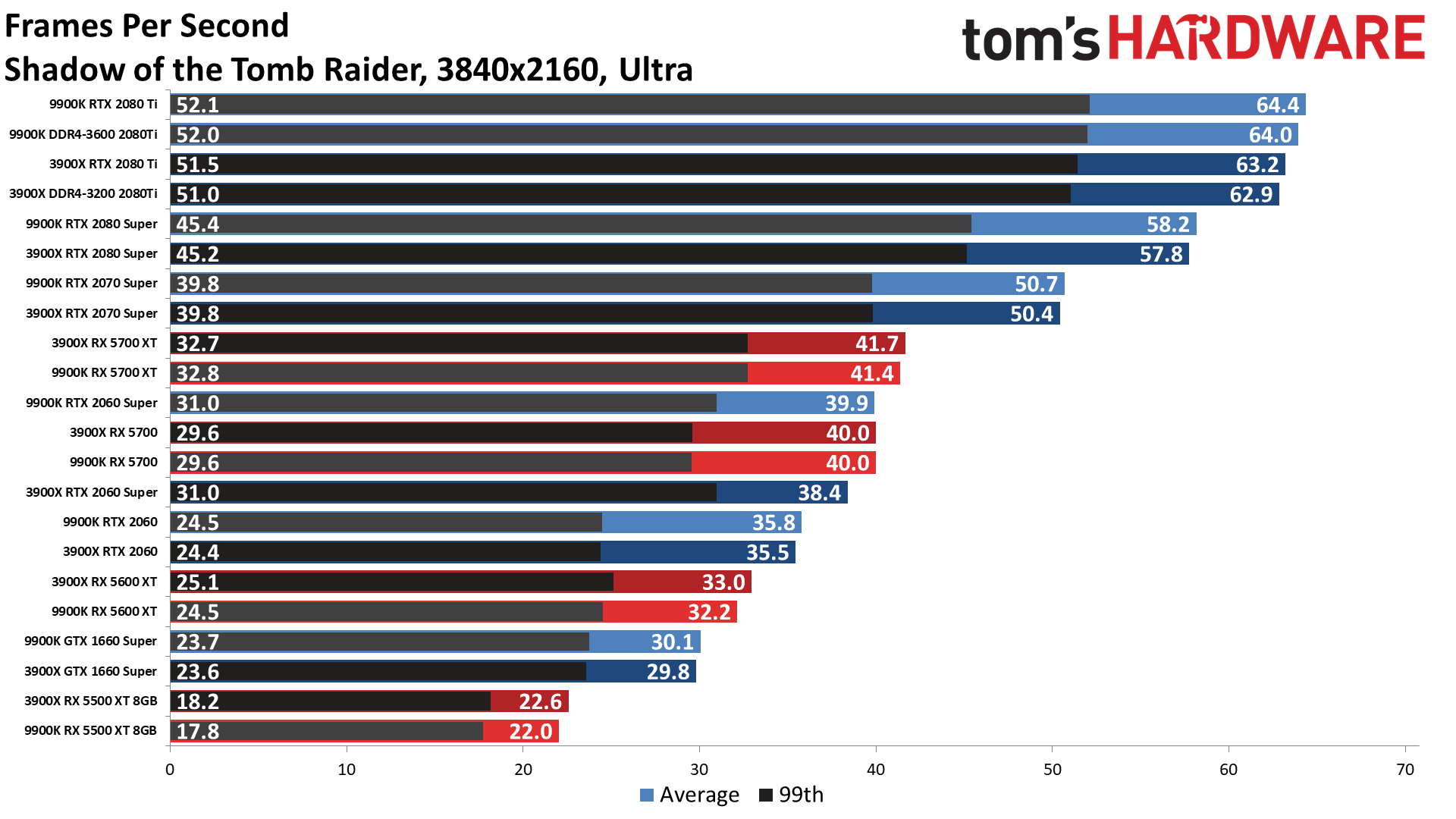

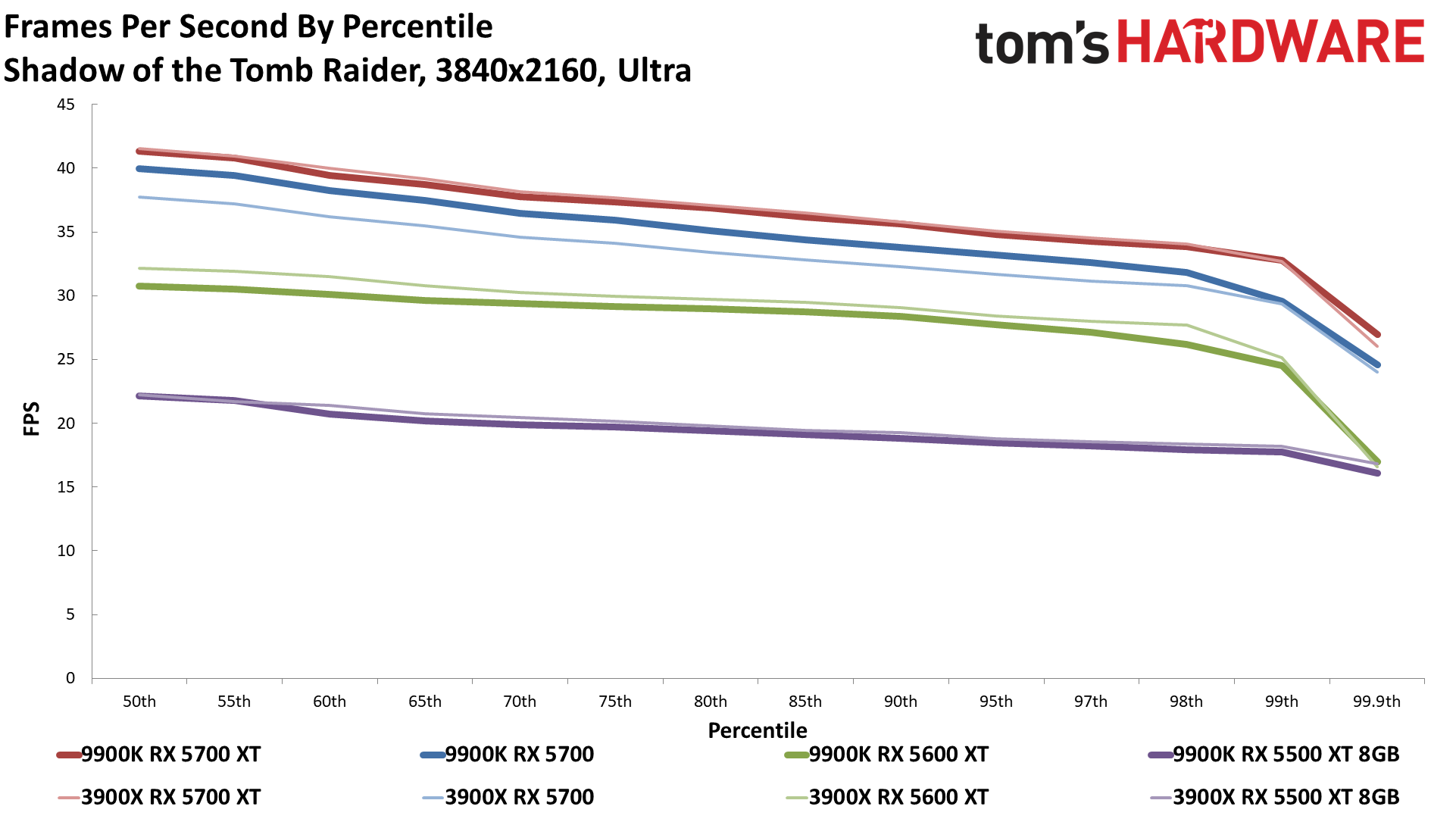

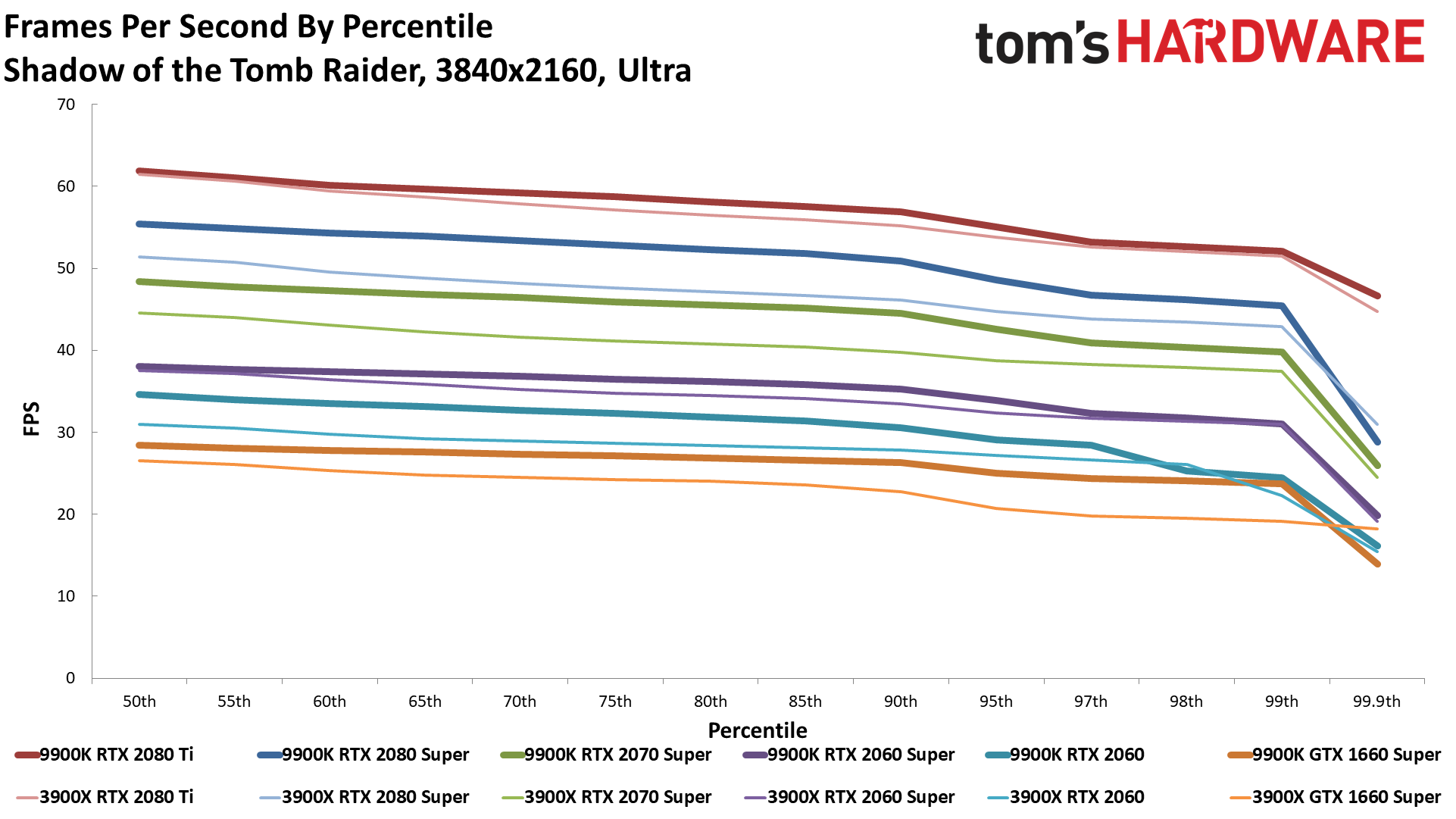

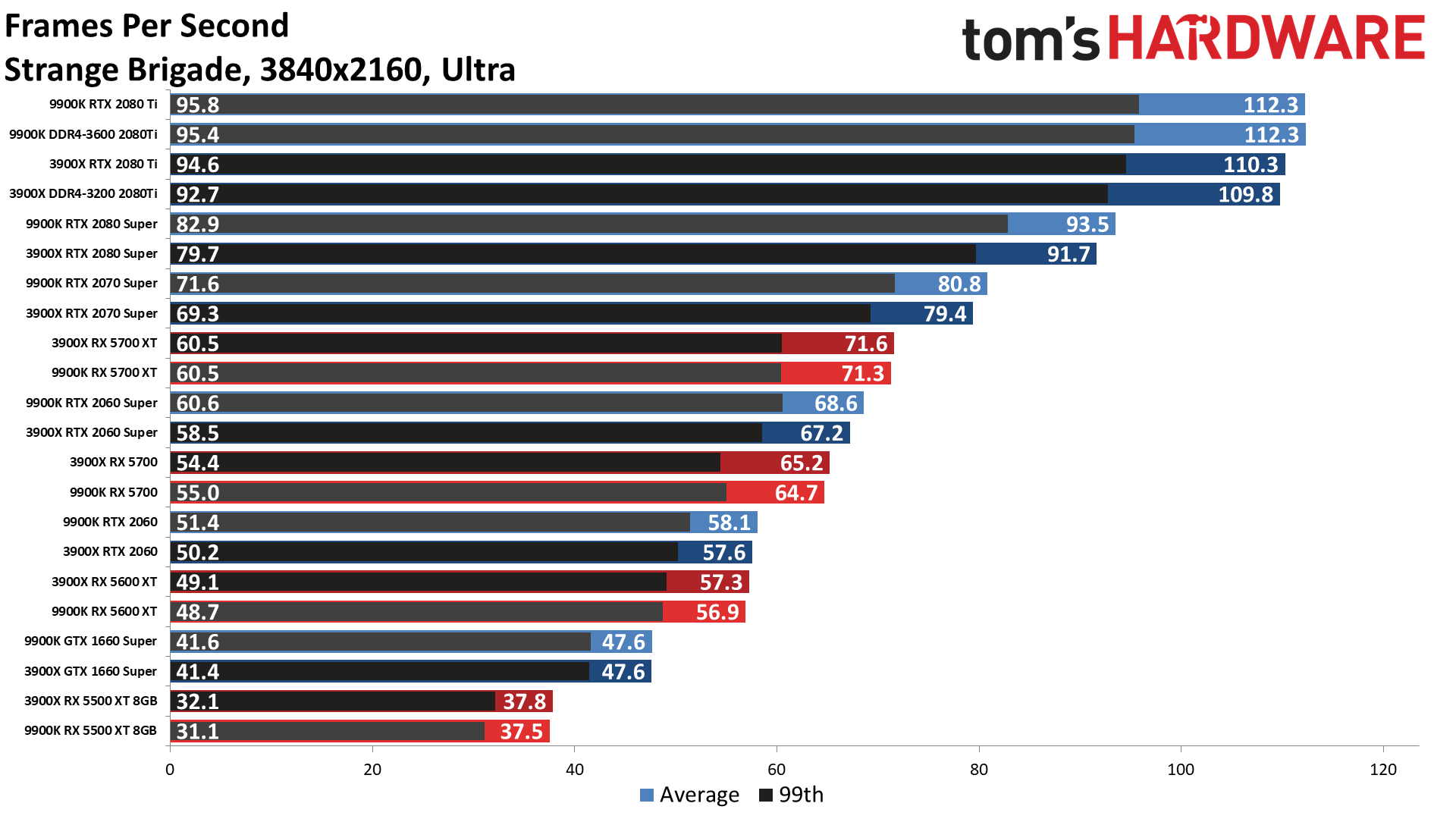

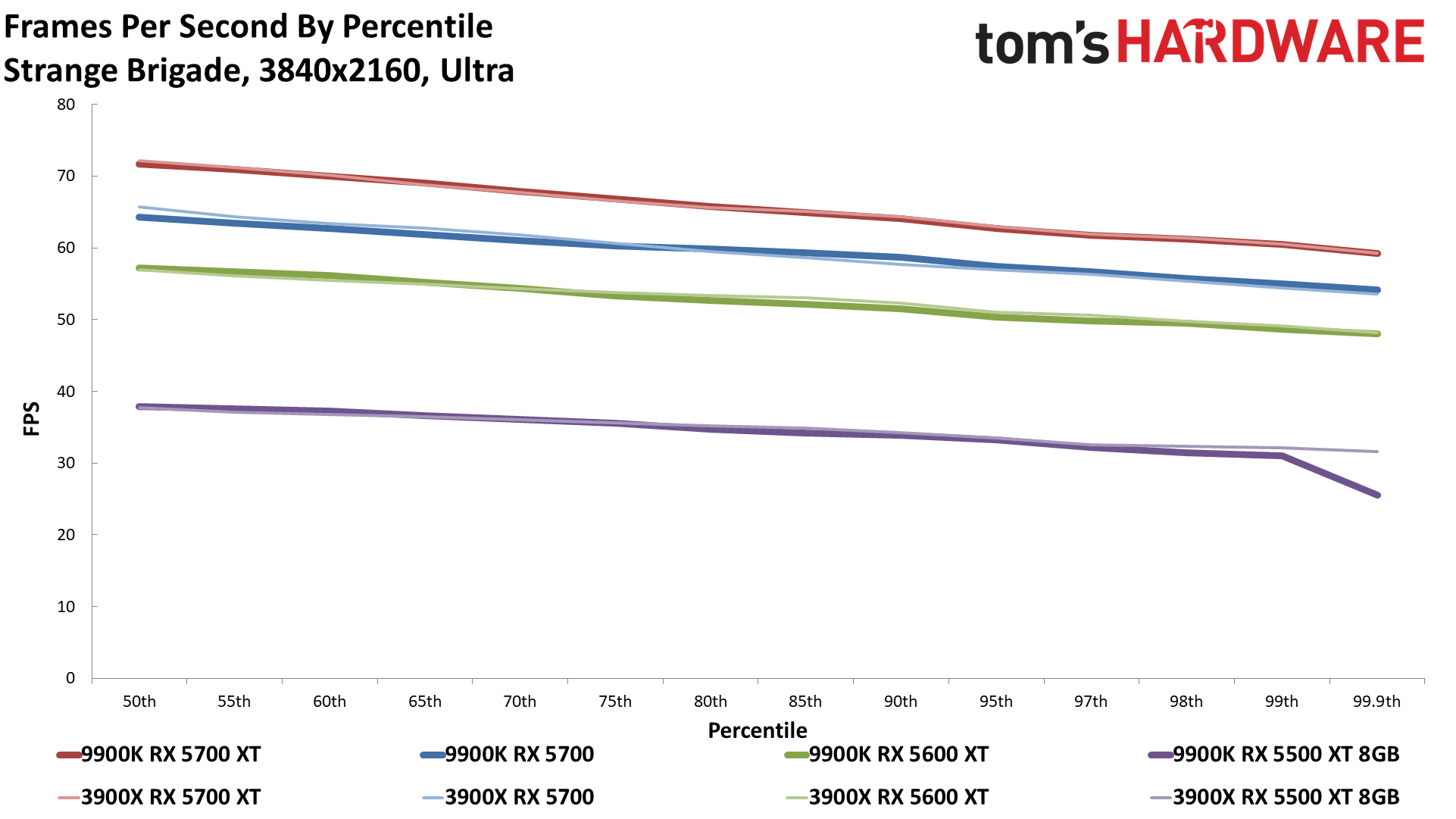

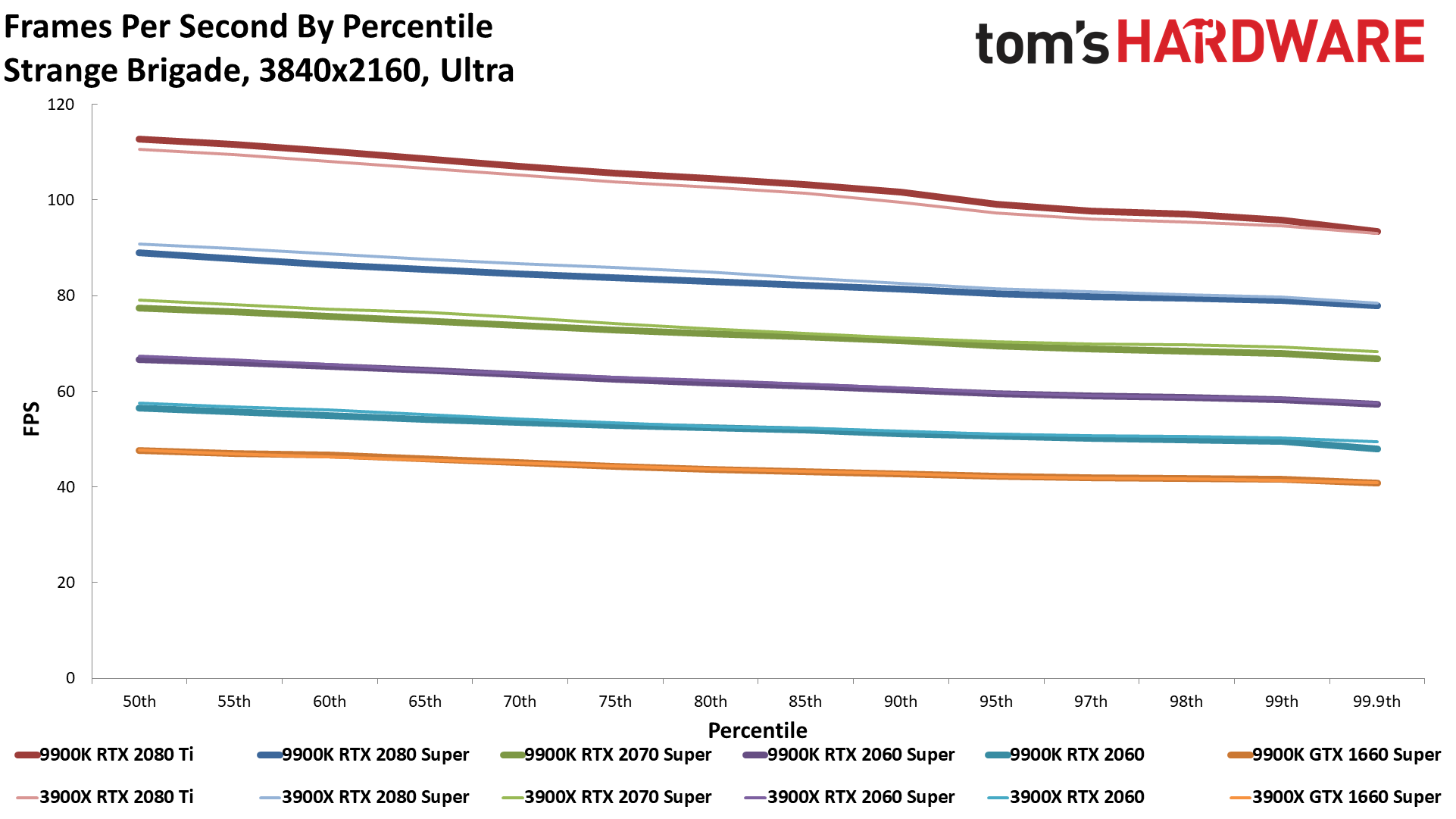

Wrapping things up, at 4K, the biggest difference in gaming performance we measured was only 4%, again with the RTX 2080 Ti, and again in Far Cry 5 and Final Fantasy. Overall, the average difference is now 2.4% with the 2080 Ti, which is basically margin of error and not something you'd notice without running a bunch of benchmarks like we've been doing.

The other nine graphics cards are even closer in performance, ranging from a minuscule 0.6% lead for the 3900X with the RX 5500 XT, to an equally negligible 1.5% lead for the RTX Super cards. Everything is close enough that even repeated benchmarks don't really make the differences meaningful.

Closing Thoughts

Just as expected, differences in CPU mattered less when we tested games at higher resolutions or with slower GPUs — or both. There are some other factors that we do need to consider, however. First, the Core i9-9900K isn't technically the fastest CPU for gaming any longer, as the Core i9-10900K holds that title. In our testing, we found the 10900K outperformed the 9900K by up to 17% in a few games (at 1080p ultra). We can also include the Ryzen 9 3900XT and Ryzen 7 3800XT for AMD, which are a few percent faster than the Ryzen 9 3900X.

Far more important than the newer CPUs is the pending arrival of the next generation of graphics cards: Nvidia's Ampere and AMD's RDNA 2 architectures. The RTX 2080 Ti only gave Intel a 10% lead over AMD CPUs at 1080p, but what happens when new GPUs arrive that are 50% faster? That shifts the bottleneck more toward the CPU, which means with the fastest GPUs our 1440p and 4K results could become more like the 1080p results shown here. We're certainly interested in seeing how much of a difference CPU choice makes once the new graphics cards arrive, which is part of what spurred all of this testing.
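A toy model can make the bottleneck argument concrete. The sketch below assumes the delivered frame rate is simply the lower of a CPU limit and a GPU limit. That is a deliberate simplification (the measured results show small CPU gaps even in mostly GPU-limited scenarios), and every number in it is hypothetical rather than taken from our charts.

```python
def delivered_fps(cpu_limit_fps, gpu_limit_fps):
    """Simplified model: the slower of the two limits sets the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


def cpu_lead_percent(cpu_a_limit, cpu_b_limit, gpu_limit):
    """Lead of CPU A over CPU B, in percent, for a given GPU limit."""
    a = delivered_fps(cpu_a_limit, gpu_limit)
    b = delivered_fps(cpu_b_limit, gpu_limit)
    return (a / b - 1.0) * 100.0


# Hypothetical CPU limits: fps each CPU could feed to an infinitely fast GPU.
CPU_A_LIMIT = 165.0
CPU_B_LIMIT = 150.0

# Hypothetical GPU limits at a demanding setting: today's card, then one 50% faster.
for label, gpu_limit in [("current GPU", 110.0), ("50% faster GPU", 165.0)]:
    lead = cpu_lead_percent(CPU_A_LIMIT, CPU_B_LIMIT, gpu_limit)
    print(f"{label}: CPU A leads by {lead:.1f}%")
# current GPU: CPU A leads by 0.0%
# 50% faster GPU: CPU A leads by 10.0%
```

In this model the CPU gap is hidden for as long as the GPU limit sits below both CPU limits, and it reappears in full once the GPU is fast enough.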

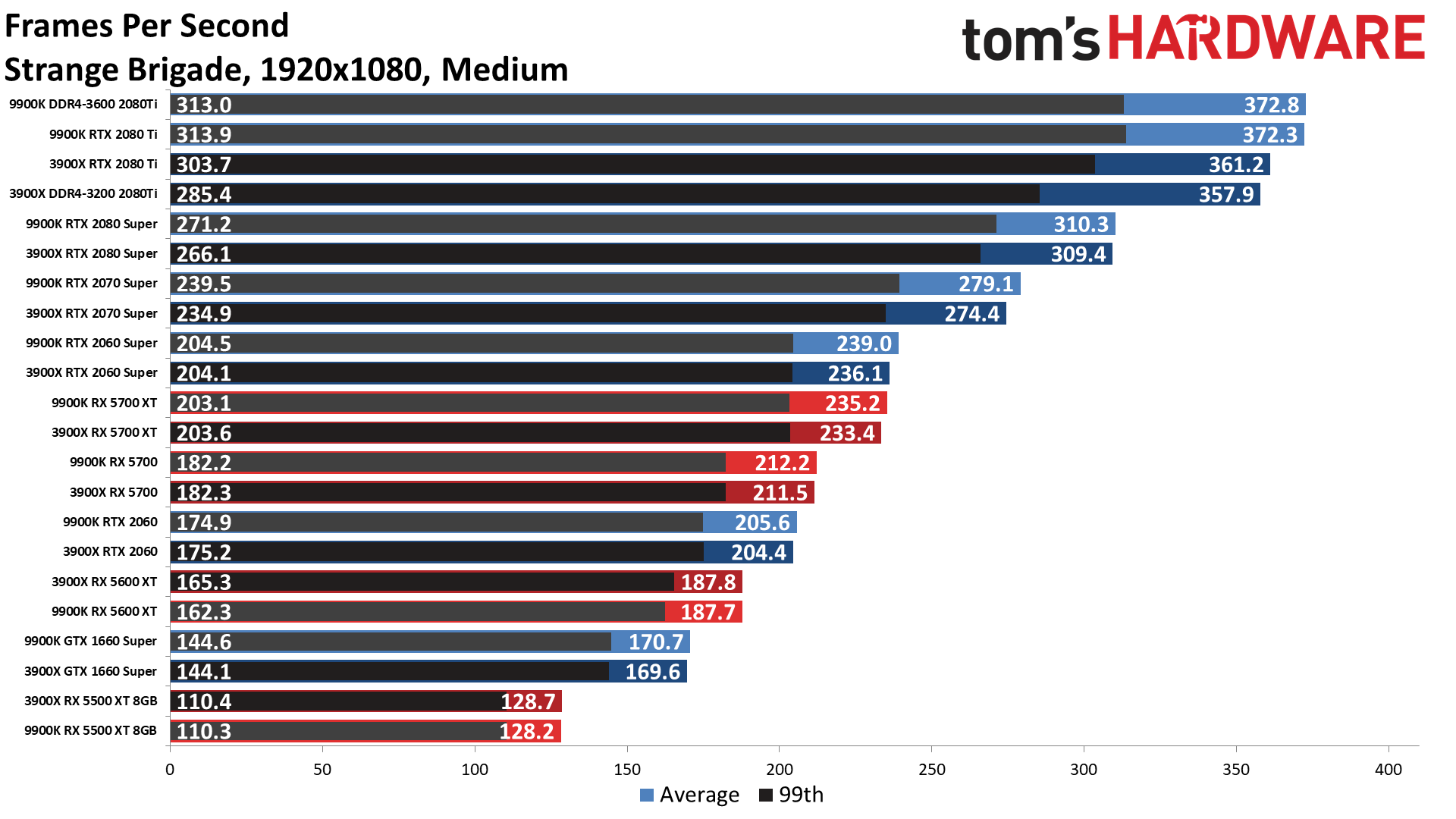

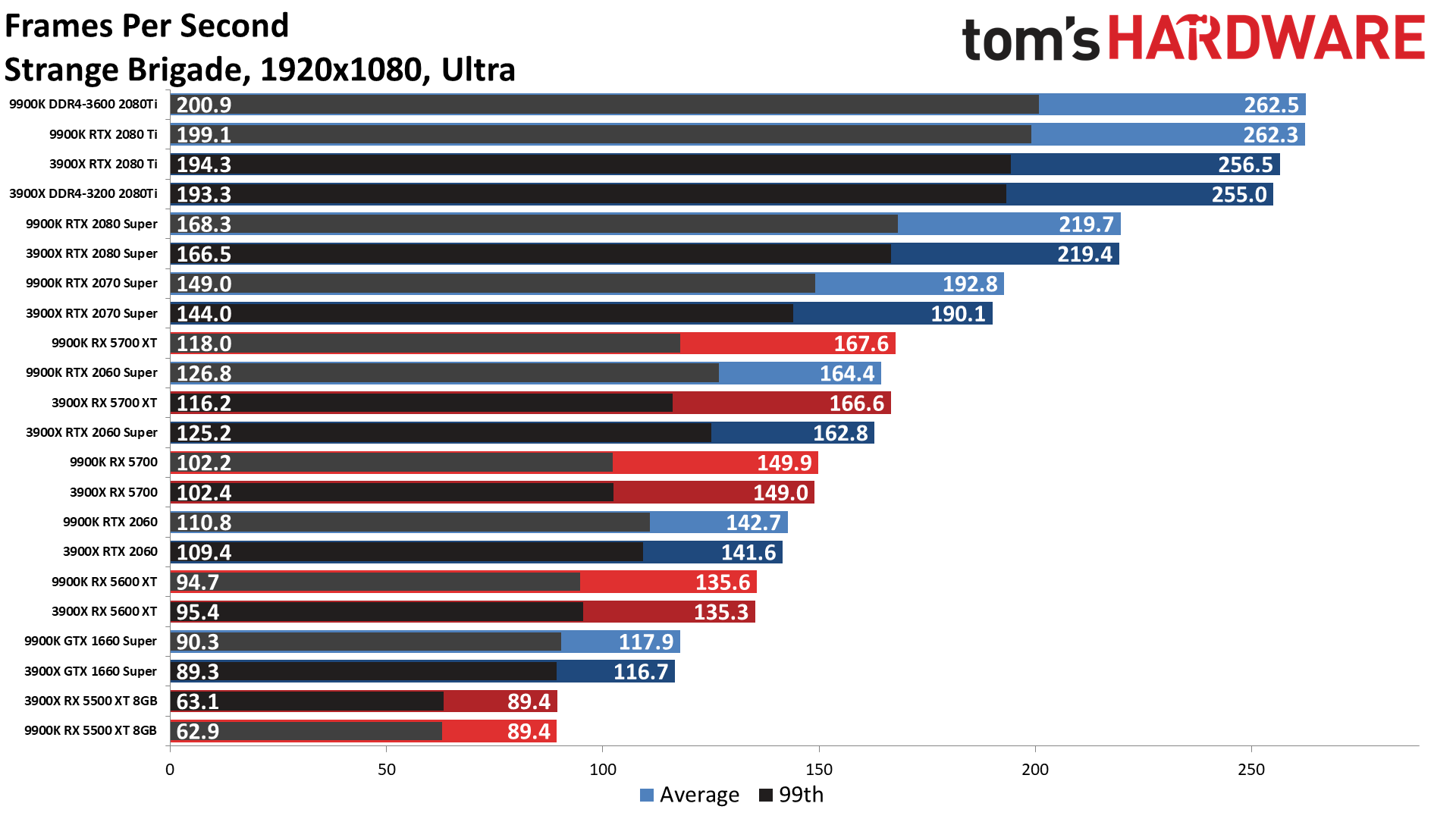

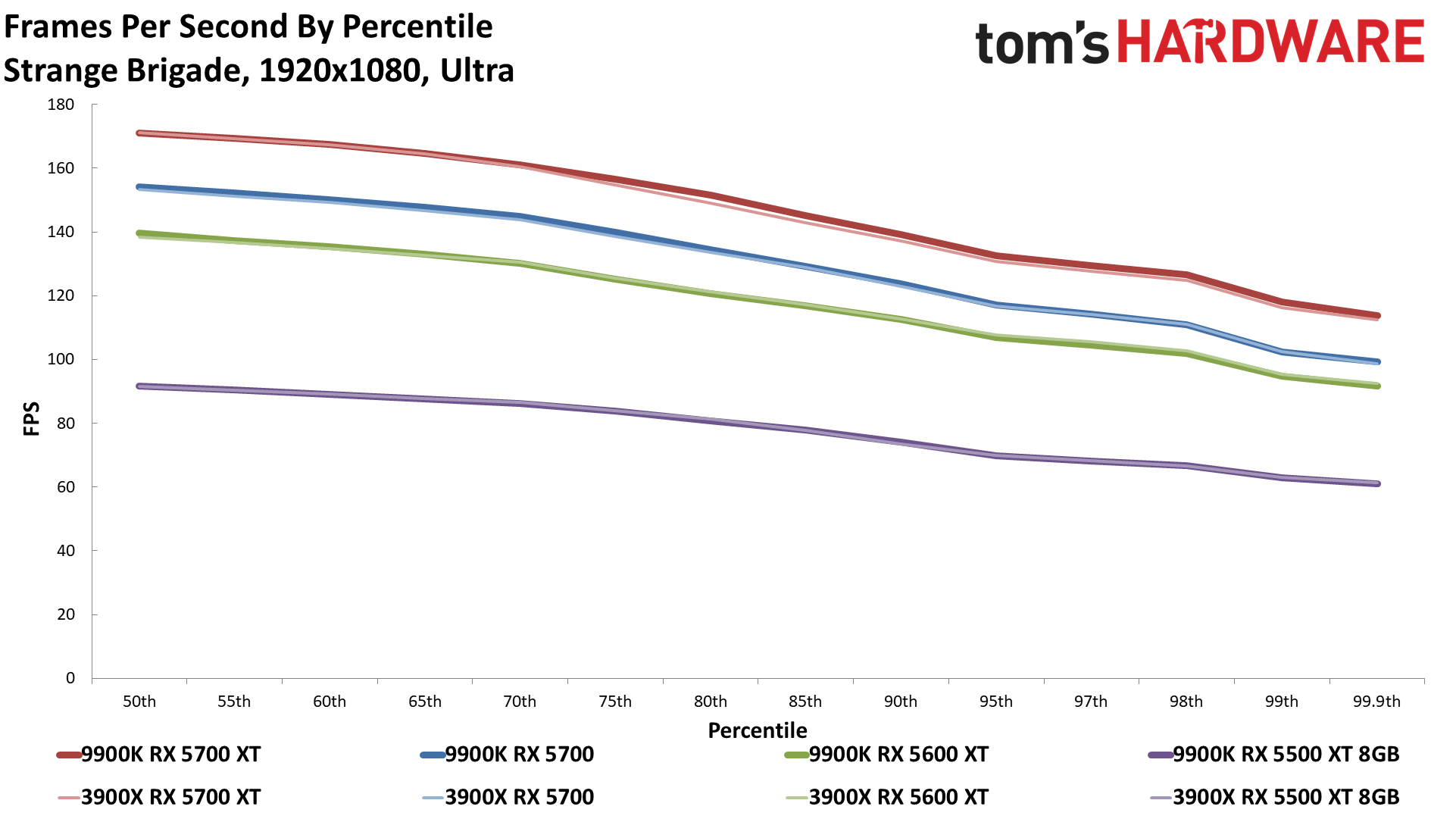

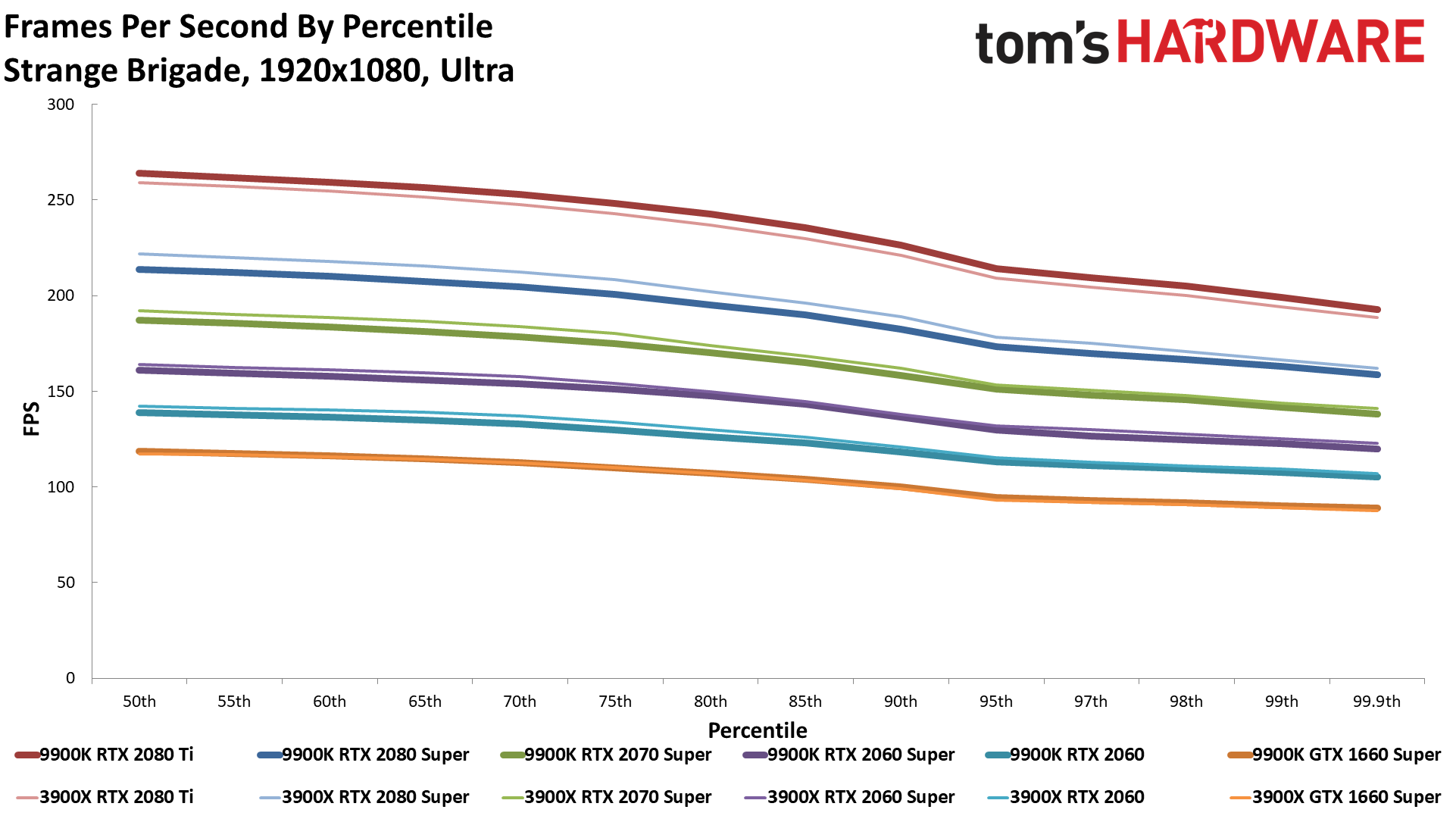

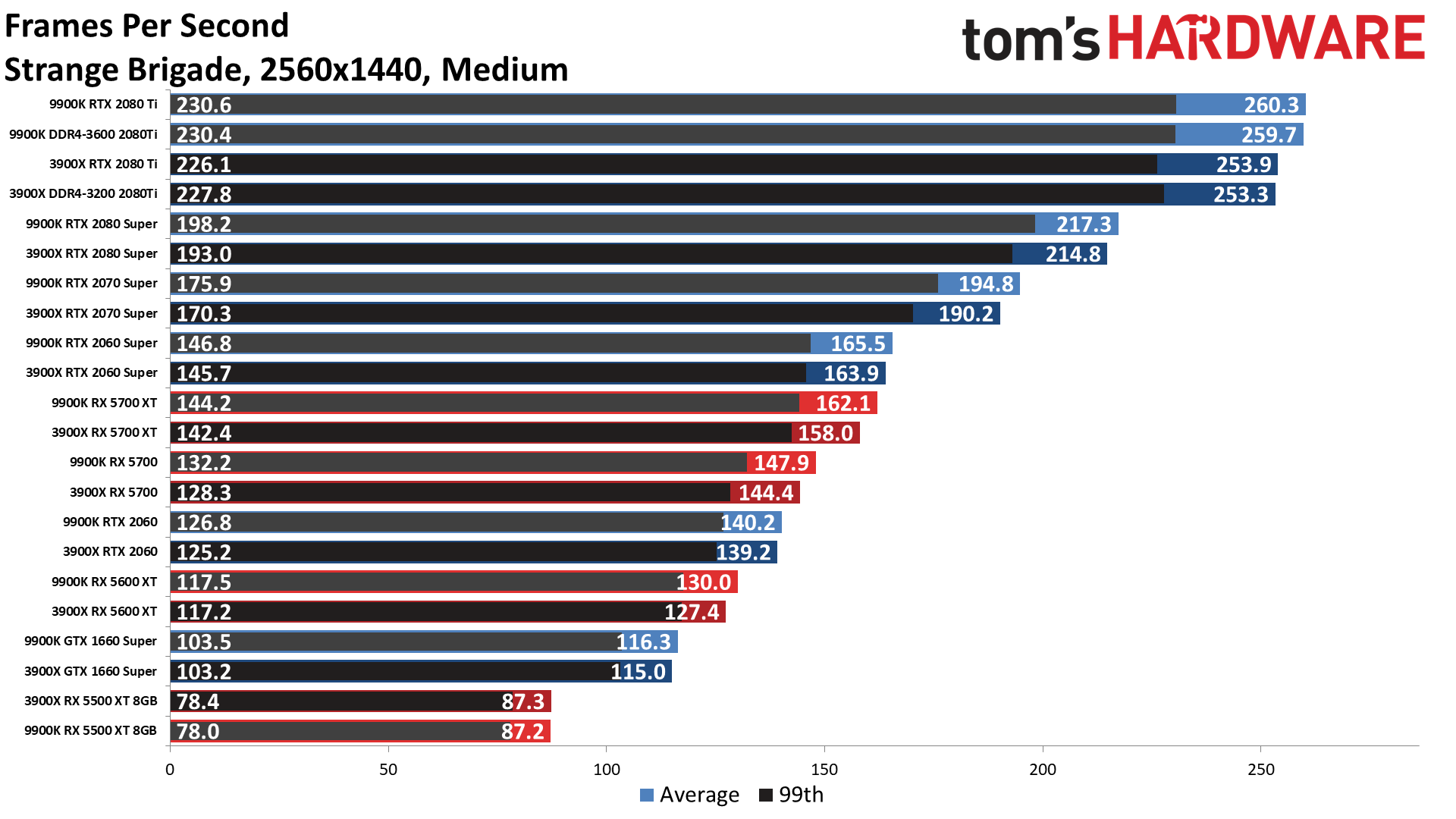

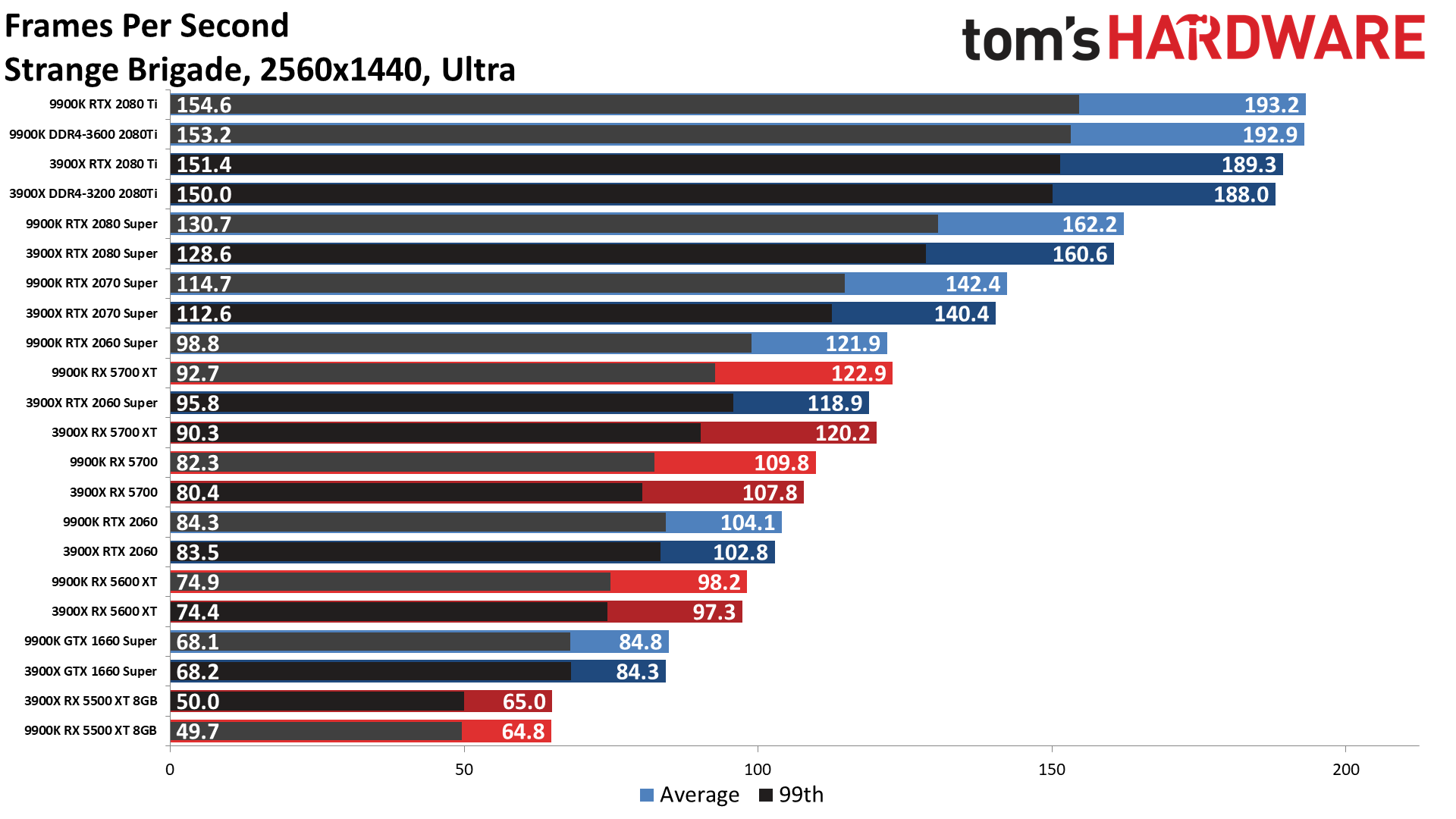

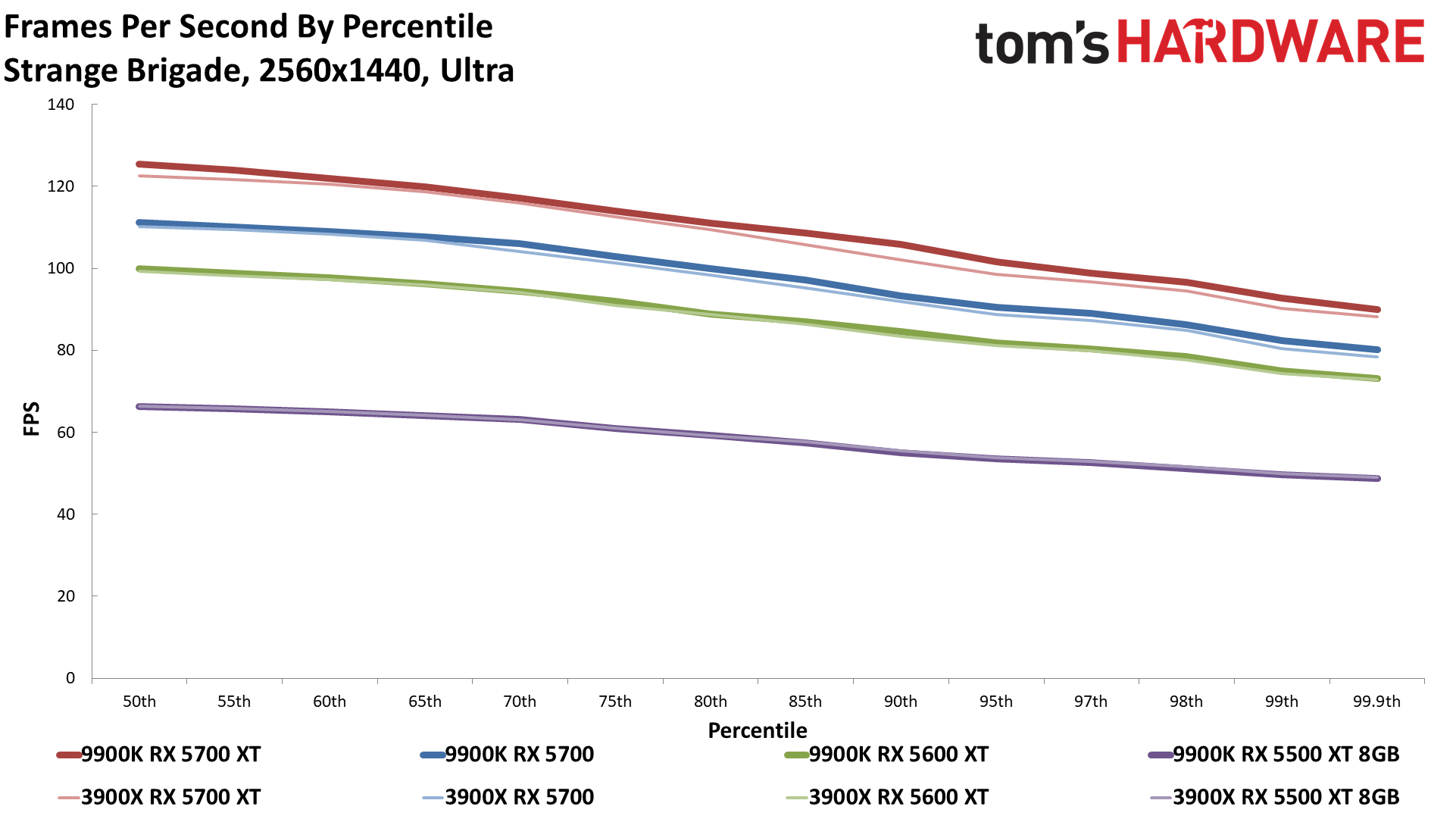

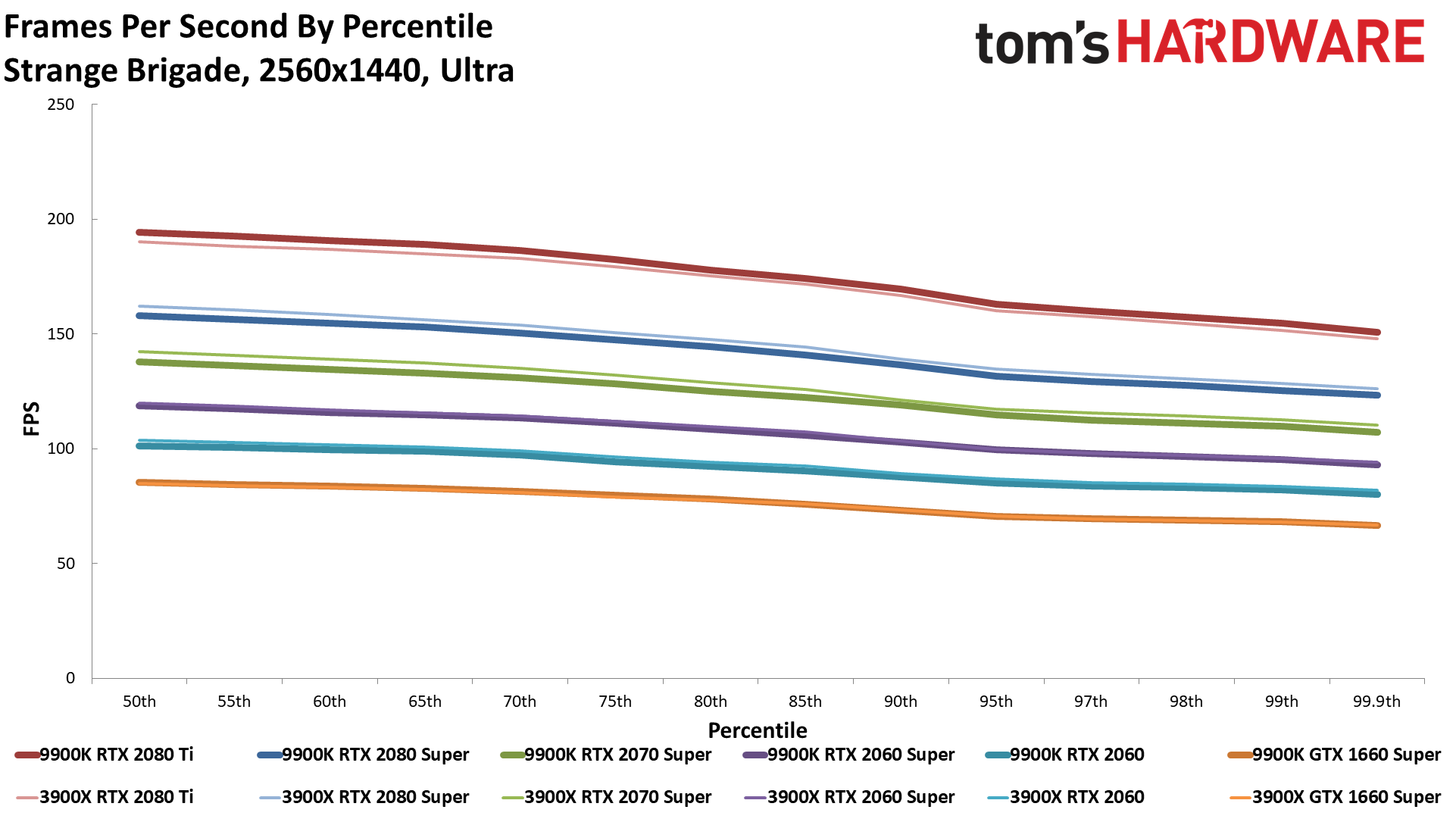

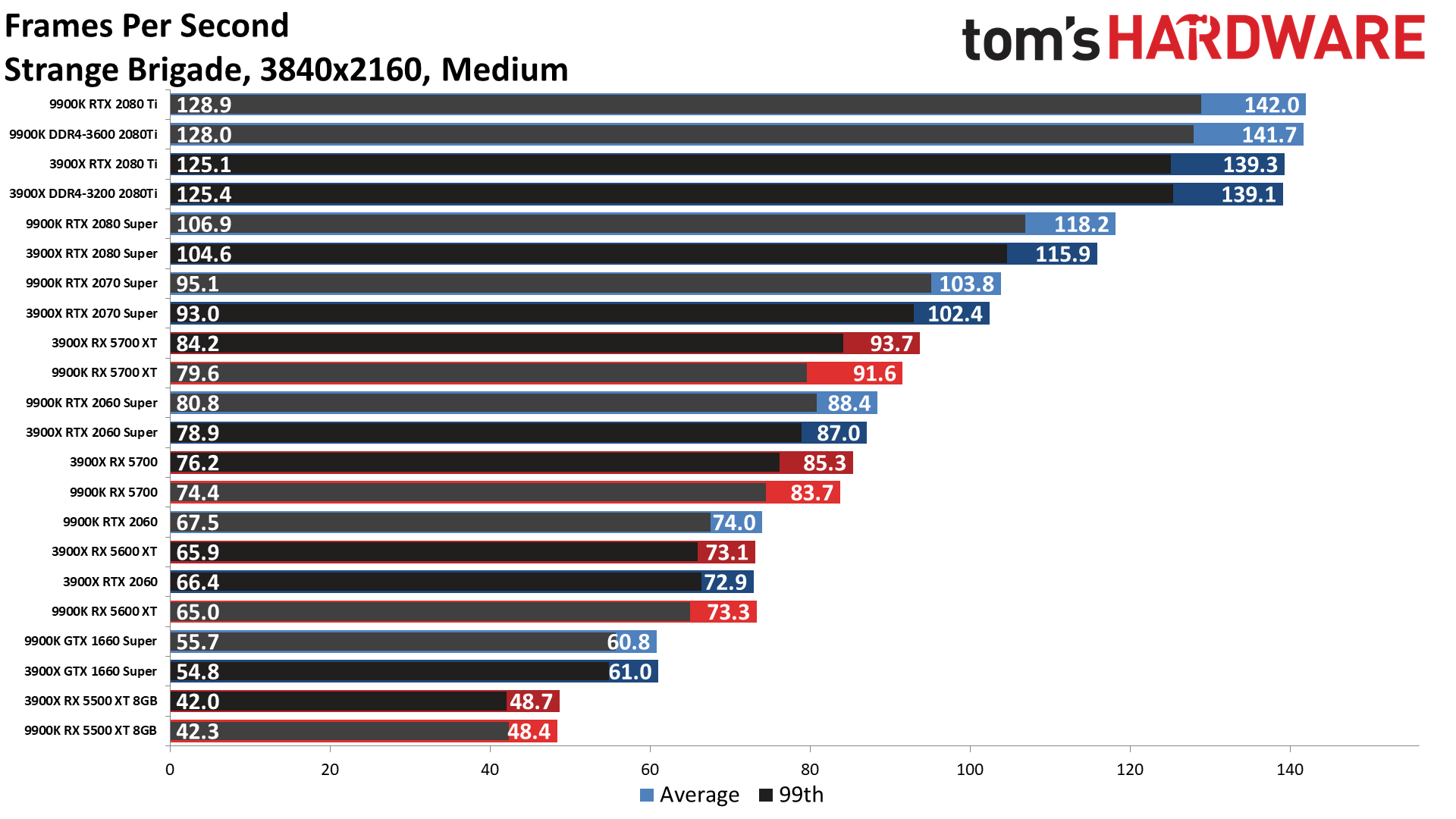

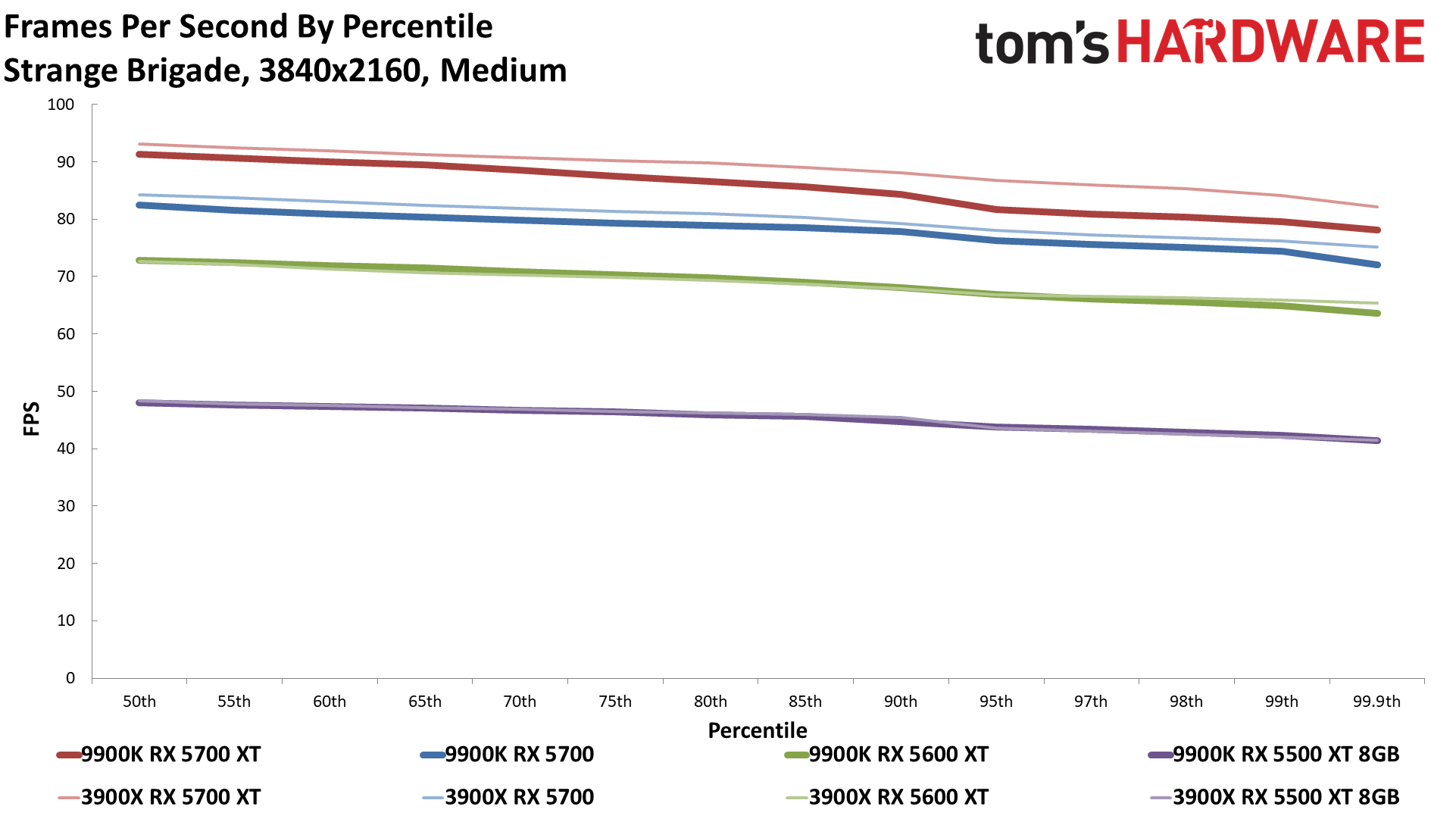

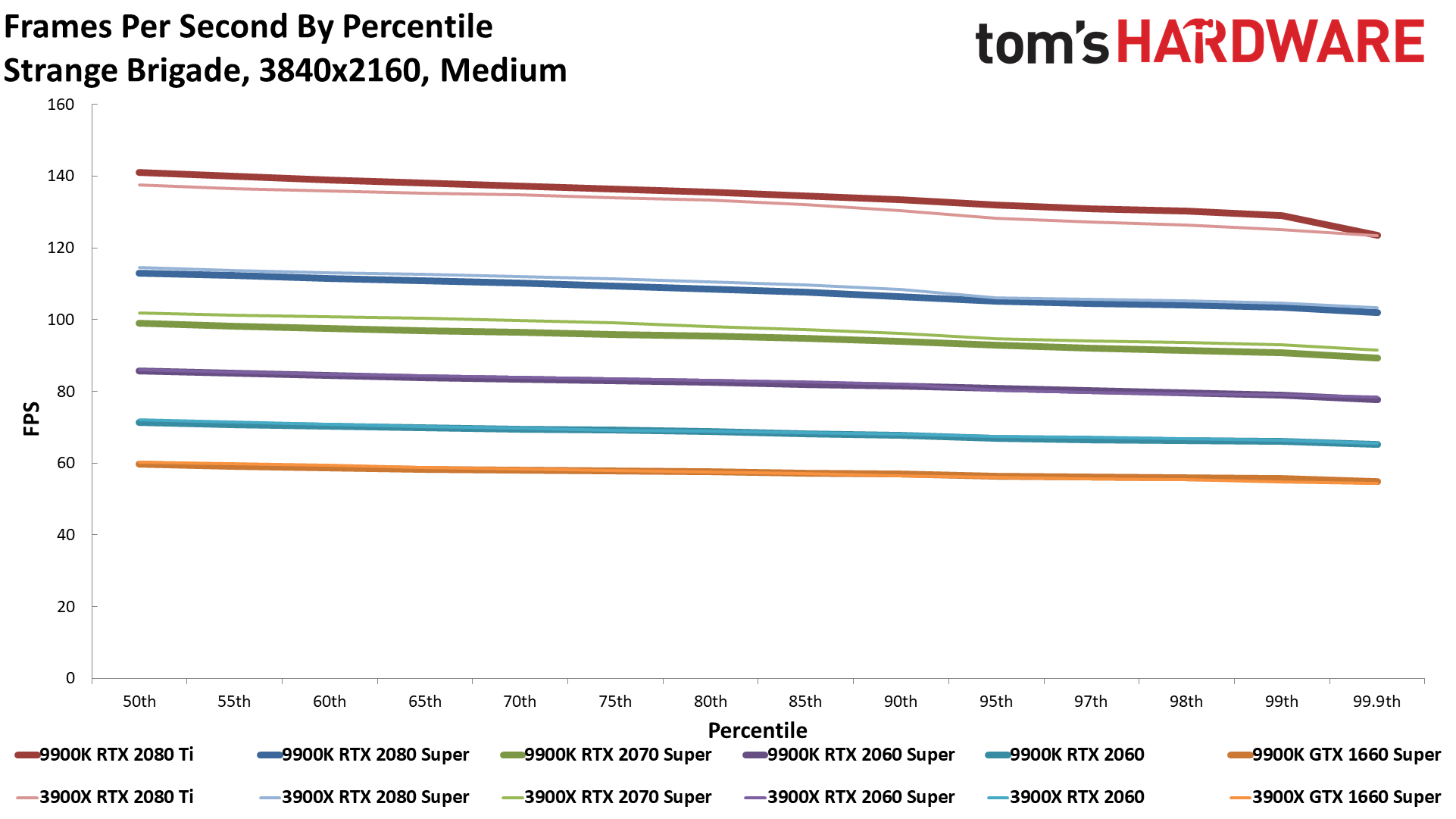

Game engines also change over time. Two years ago, when the RTX 2080 Ti first launched, the fastest gaming CPU at the time was the 6-core/12-thread Core i7-8700K. Most CPUs were still 4-core/8-thread (or 4-core/4-thread) affairs. We're still far from 8-core CPUs being the norm, but a few game engines seem to be making better use of additional CPU cores. Look at Strange Brigade for example, where the 12-core/24-thread 3900X nearly matches the higher clocked 8-core/16-thread 9900K in the tests shown here. It's no coincidence that Strange Brigade is an AMD-promoted game, but it does show there's plenty of room for optimizations that favor higher core and thread counts.

We're definitely interested in seeing what the complex environments of Night City in Cyberpunk 2077 mean for CPU requirements. It's not too hard to imagine the Cyberpunk 2077 system requirements recommending at least a 6-core or even 8-core CPU to run the game at maximum quality. Or if not Cyberpunk 2077, the next Microsoft Flight Simulator might be capable of pushing lesser CPUs to the breaking point.

For now, anyone with at least a 6-core CPU can comfortably run with up to an RTX 2080 Ti and not miss out on much in the way of framerates, particularly at 1440p ultra settings. But if you're spending that much money on an extreme performance graphics card, you'll want to pair it with the fastest CPU as well, which would be the Core i9-10900K. Once the next-gen GPUs arrive, don't be surprised if your CPU starts looking a bit weak by comparison.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Groveling_Wyrm

nofanneeded said: Why using 9th Gen intel for this ?

My guess was that he started the benchmarks before the 10th Gen Intel came out, and to start over was not an option. So, he continued on and released. I would expect a new set of benchmarks soon with the 10th Gen Intel compared to the Ryzen 3900x, but by then new processors will be out....

st379

Excellent article. Really enjoyed all the different resolution and thank you for using normal settings unlike Anandtech who are using stupid resolutions and settings.

One question... why is the average fps gap remain the same although the average is getting lower and lower? I would expect that if the average fps is lower than the gap would be closed completly.

King_V

While it confirmed what was generally accepted as conventional wisdom all along, it's good to have these numbers to back it.

My minor nitpick/gripe/pet-peeve/whatever is:

Could you notice such a difference? 15%, sure;

At lower frame rates, certainly, but, with 1920x1080/Medium on the 2080Ti, Red Dead Redemption 2's 149.6 vs 174.8, while a hefty 17% difference, if I were a betting man, I'd say nobody would be able to tell the difference between 150fps and 175fps.

If we were talking 15% more from, say, 60fps to 70, I'd say it's noticeable.

(of course, that ties in with my whole gripe about "MOAR HERTZES" with monitor refresh rates constantly climbing)

That all said - thanks for the article; this looks like it was a ton of work!

Gomez Addams

One small detail: R0 is not the stepping of the CPU. It is the revision level and they are two different things which is why the CPU reports them as separately. If you don't believe this then fire up CPU-Z and you will see it shows both items separately. Steppings are always plain numbers while revision levels are not. This laptop's CPU is also revision R0 and it is stepping 3.

Stepping refers to one specific thing : the version of the lithography masks used. Revision level refers to many different things and stepping is just one of them. There are hundreds of processing steps involved in making a chip and revision level encompasses all of them. The word stepping is used because the machines that do the photo lithography are called steppers and that is because they step across the wafer, exposing each chip in turn.

I realize this is somewhat pedantic but as a website writing about technology you should purvey accurate information.

mrsense

"The RTX 2080 Ti only gave Intel a 10% lead over AMD CPUs at 1080p, but what happens when new GPUs arrive that are 50% faster? The bottleneck shifts back to the CPU"

With Ryzen 4000, if the rumors are true, tables might turn.

mrsense

st379 said: Excellent article. Really enjoyed all the different resolution and thank you for using normal settings unlike Anandtech who are using stupid resolutions and settings.

Well, when you have the top of line CPU and graphic card, your intent is to turn on all the eye candies with high (1440p and up) resolution monitors when playing games unless, of course, you're a pro gamer playing in a competition.

JarredWaltonGPU

Groveling_Wyrm said: My guess was that he started the benchmarks before the 10th Gen Intel came out, and to start over was not an option. So, he continued on and released. I would expect a new set of benchmarks soon with the 10th Gen Intel compared to the Ryzen 3900x, but by then new processors will be out....

Not really. Our standard GPU test system is a 9900K, and I don’t think it’s worth updating to the 10900K. We talked about this and I recommended waiting for Zen 3 and 11th Gen Rocket Lake before switching things up. Yes, 10900K is a bit faster, but platform (i.e., differences in motherboards and firmware) can account for much of the difference in performance. So, give me another six months with CFL-R and then we’ll do the full upgrade of testbeds. 😀

JarredWaltonGPU

st379 said: Excellent article. Really enjoyed all the different resolution and thank you for using normal settings unlike Anandtech who are using stupid resolutions and settings.

One question... why is the average fps gap remain the same although the average is getting lower and lower? I would expect that if the average fps is lower than the gap would be closed completly.

The gap does get smaller, especially at 1440p/4K and ultra settings, but it never fully goes away with 2080 Ti. Some of the gap is probably just differences in the motherboards and firmware; nothing can be done there, but it’s as close as I can make it with the hardware on hand.

JarredWaltonGPU

King_V said: While it confirmed what was generally accepted as conventional wisdom all along, it's good to have these numbers to back it.

Read that “sure” as less definitive and more wry. Like, “sure, it’s possible, and benchmarks will show it, but in actual practice it’s not that important.”

There were a couple of games where Ryzen felt a bit better on the first pass (less micro stutter or something), but there were also games where it felt worse. Mostly, though, without an FPS counter or benchmarks I wouldn’t notice.