GPU Face Off: GeForce RTX 3090 vs Radeon RX 6900 XT

Two GPUs enter, one leaves victorious: GeForce RTX 3090 takes on Radeon RX 6900 XT

The GeForce RTX 3090 and Radeon RX 6900 XT target the extreme performance market, though the 3090 ends up in a category of its own. These are, respectively, the two fastest GPUs currently available from Team Green and Team Red — that's Nvidia and AMD if that wasn't clear — sitting at the top of our GPU benchmarks hierarchy and claiming spots on our list of the best graphics cards. These aren't great values, but if money isn't a concern, or you're looking for ways to burn through your stimulus check, which one should you buy? We'll look at performance, features, efficiency, price, and more to determine the winner.

Nvidia's RTX 3090 launched on September 24, 2020, one week after the RTX 3080. While it's officially part of the GeForce brand, Nvidia positions it as a new take on the Titan formula. Titan cards were previously only available directly from Nvidia as a reference design, with prices ranging from $999 (the original GTX Titan and GTX Titan Black) up to $2,999 (the Titan V). By comparison, $1,499 for the RTX 3090 seems somewhat reasonable, but there's a catch: You give up some of the enhanced features that the Titan cards offered, like improved driver support for professional workloads.

AMD's RX 6900 XT, by comparison, has a far more mundane history. AMD launched the RX 6900 XT on December 8, 2020. It's the top of the Big Navi product stack and uses the same Navi 21 GPU as the RX 6800 XT, only this time it's fully enabled with 80 CUs instead of 72. At best, it's about 10% faster than the RX 6800 XT, while the $999 official launch price represents a more than 50% increase in cost compared to its lesser sibling.
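The gap between the extra hardware and the extra cost is simple arithmetic. A minimal Python sketch using the CU counts above, plus one assumption not stated in this article: the RX 6800 XT's $649 launch MSRP.

```python
# Compare the RX 6900 XT's extra hardware against its extra cost,
# relative to the RX 6800 XT (same Navi 21 GPU, fewer CUs enabled).

def pct_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0

CUS_6900XT, CUS_6800XT = 80, 72
MSRP_6900XT = 999
MSRP_6800XT = 649  # assumption: RX 6800 XT launch MSRP, not given in the text

extra_cus = pct_increase(CUS_6900XT, CUS_6800XT)     # about 11.1%
extra_cost = pct_increase(MSRP_6900XT, MSRP_6800XT)  # about 53.9%

print(f"CUs: +{extra_cus:.1f}%  price: +{extra_cost:.1f}%")
```

An 11% increase in CUs caps the realistic performance gain at roughly the "about 10%" best case, while the price rises by more than half.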

Of course, with the current GPU and PC component shortages, exacerbated by Ethereum mining, prices are even worse. Our GPU pricing index currently (April 2021) pegs the RX 6900 XT at around $1,900 on eBay, while Nvidia's RTX 3090 goes for roughly $3,000. Mining is obviously the biggest factor in those prices, with the 3090 nearly doubling the 6900 XT's mining performance. Most people should just ignore those prices and forget about getting these cards; they were already a tough sell at the official MSRPs. But if you could buy one of these GPUs at MSRP (or close to it), or you really don't care about pricing, how do they stack up?

1. Gaming Performance

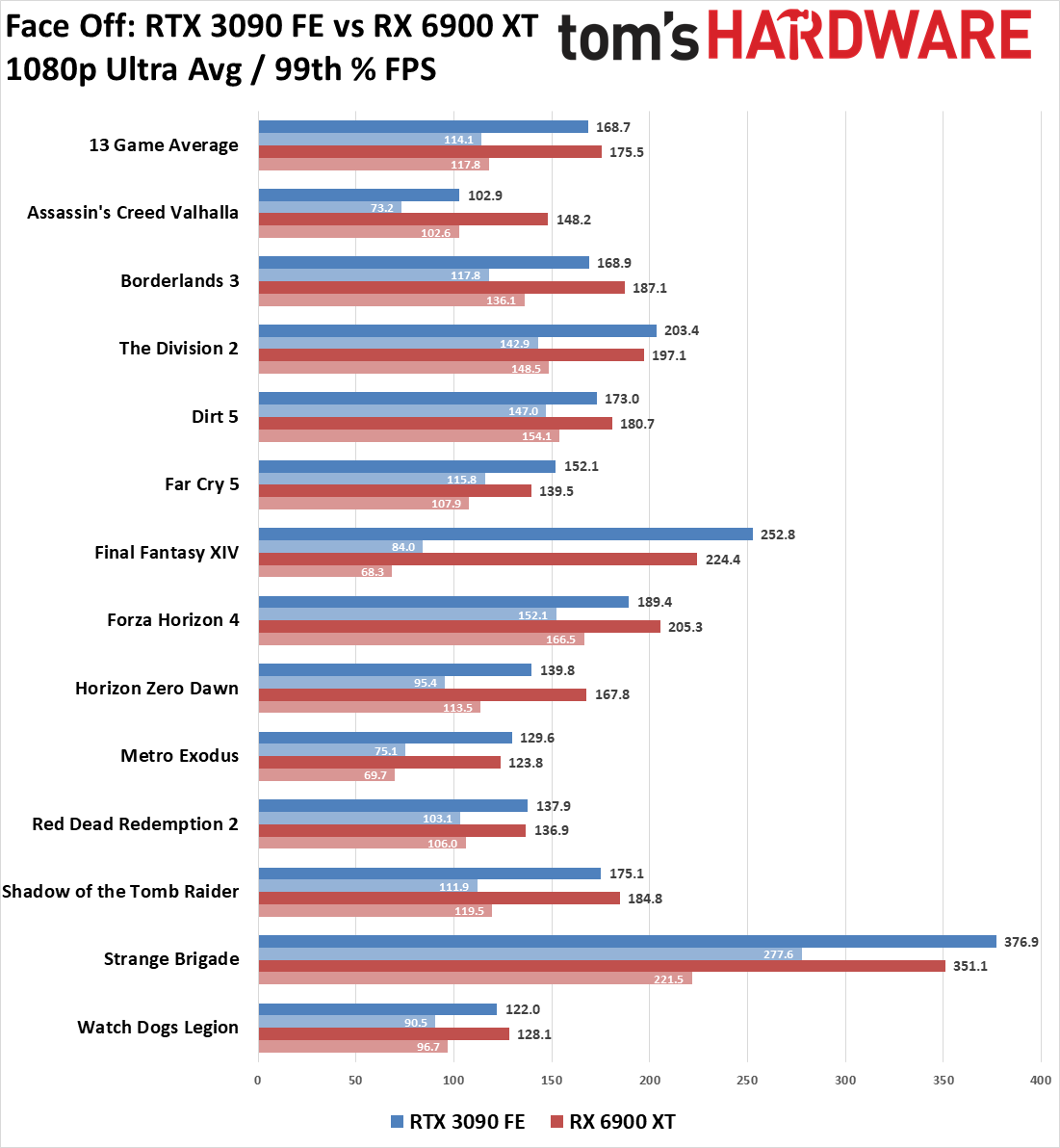

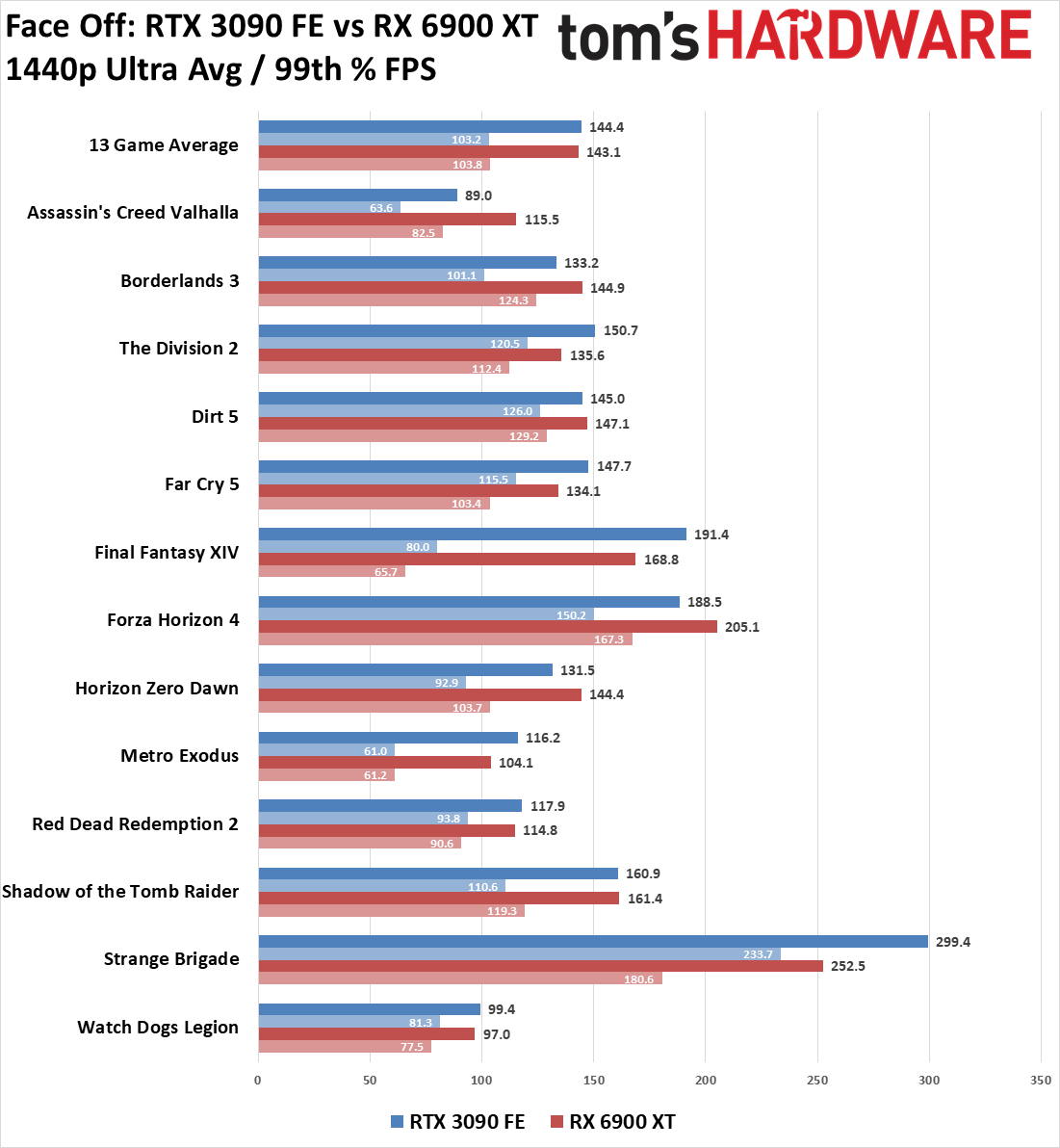

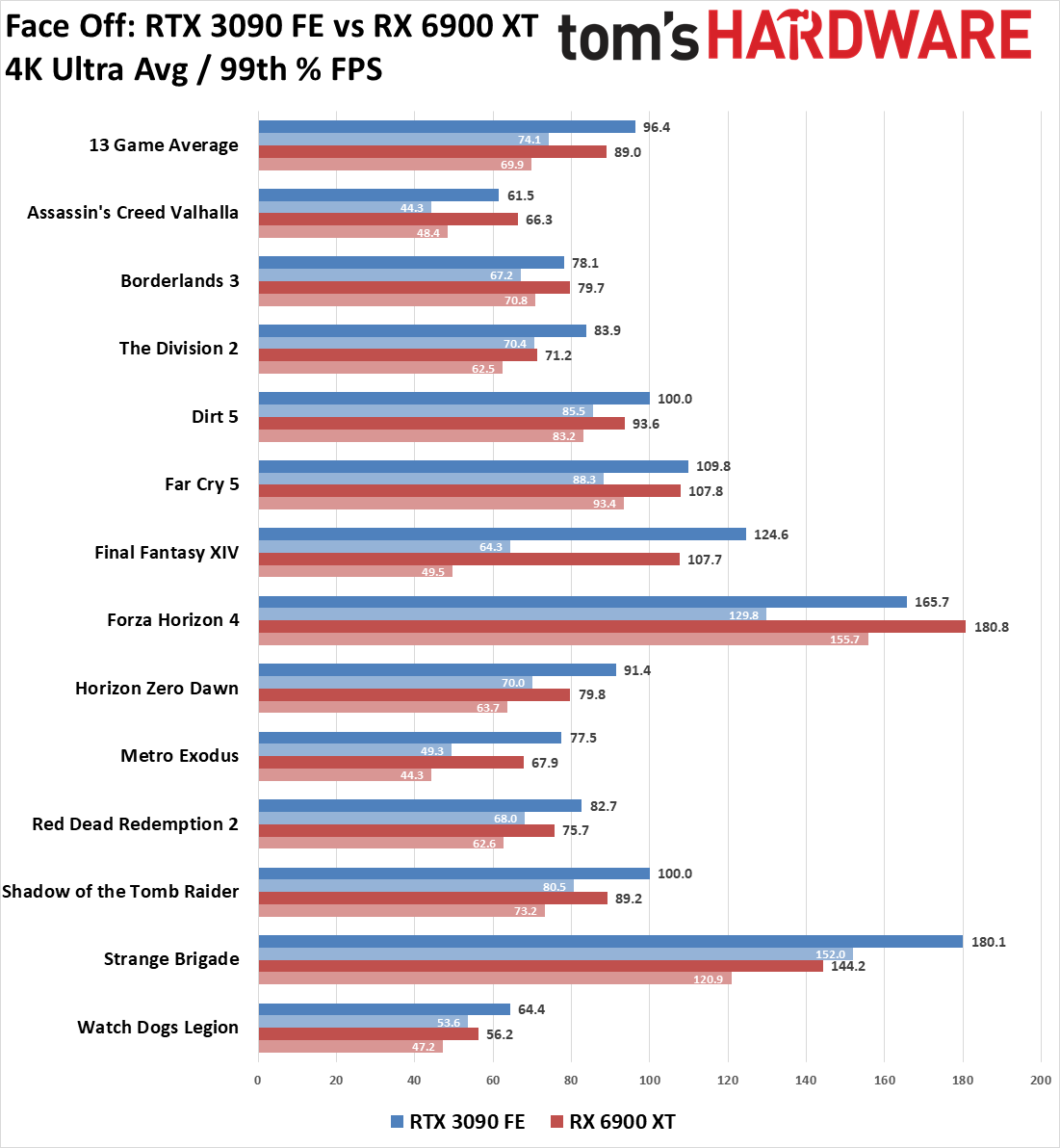

For most gamers, performance plays a critical role in determining which graphics card to buy. We've benchmarked a baker's dozen of games at 1080p, 1440p, and 4K running ultra quality (or equivalent) settings. But that's just the tip of the gaming iceberg. None of the 13 games had ray tracing enabled, so we also have ten additional benchmarks with DXR (DirectX Raytracing) turned on, plus six more results with DLSS Quality mode enabled. Let's take each of those results in turn.

In traditional rasterization performance, things are actually quite close. Of course, that depends on the selection of games, but with 13 relatively recent titles, including games promoted either by AMD or Nvidia, this should be a good representation of how the RTX 3090 and RX 6900 XT rank overall. We'll start with the 4K results since that's the most demanding scenario, and CPU bottlenecks can come into play at lower resolutions.

RTX 3090 takes a modest 8% lead at 4K, though the devil's in the details. Of the 13 games, ten have the 3090 in front, leading by anywhere from 2% (Far Cry 5) to 25% (Strange Brigade). The other three (Assassin's Creed Valhalla, Borderlands 3, and Forza Horizon 4) favor AMD, with Valhalla looking suspect at lower resolutions.

Drop to 1440p, and the RTX 3090's lead shrinks to just 1%, effectively a tie. The RX 6900 XT now leads in six of the games, though several are basically tied. Valhalla meanwhile jumps to a 30% lead for AMD, and as an AMD-promoted game, that's an obvious concern. On the other hand, the 3090's biggest lead comes in Strange Brigade, which is also an AMD-promoted game. Finally, at 1080p, the 6900 XT takes the overall lead by 4%, still leading in roughly half of the games but with very large margins in Valhalla and Horizon Zero Dawn.


Rasterization is all well and good, and the Infinity Cache helps AMD's RX 6900 XT stay competitive, particularly at lower resolutions. What happens with ray tracing and DLSS, though?

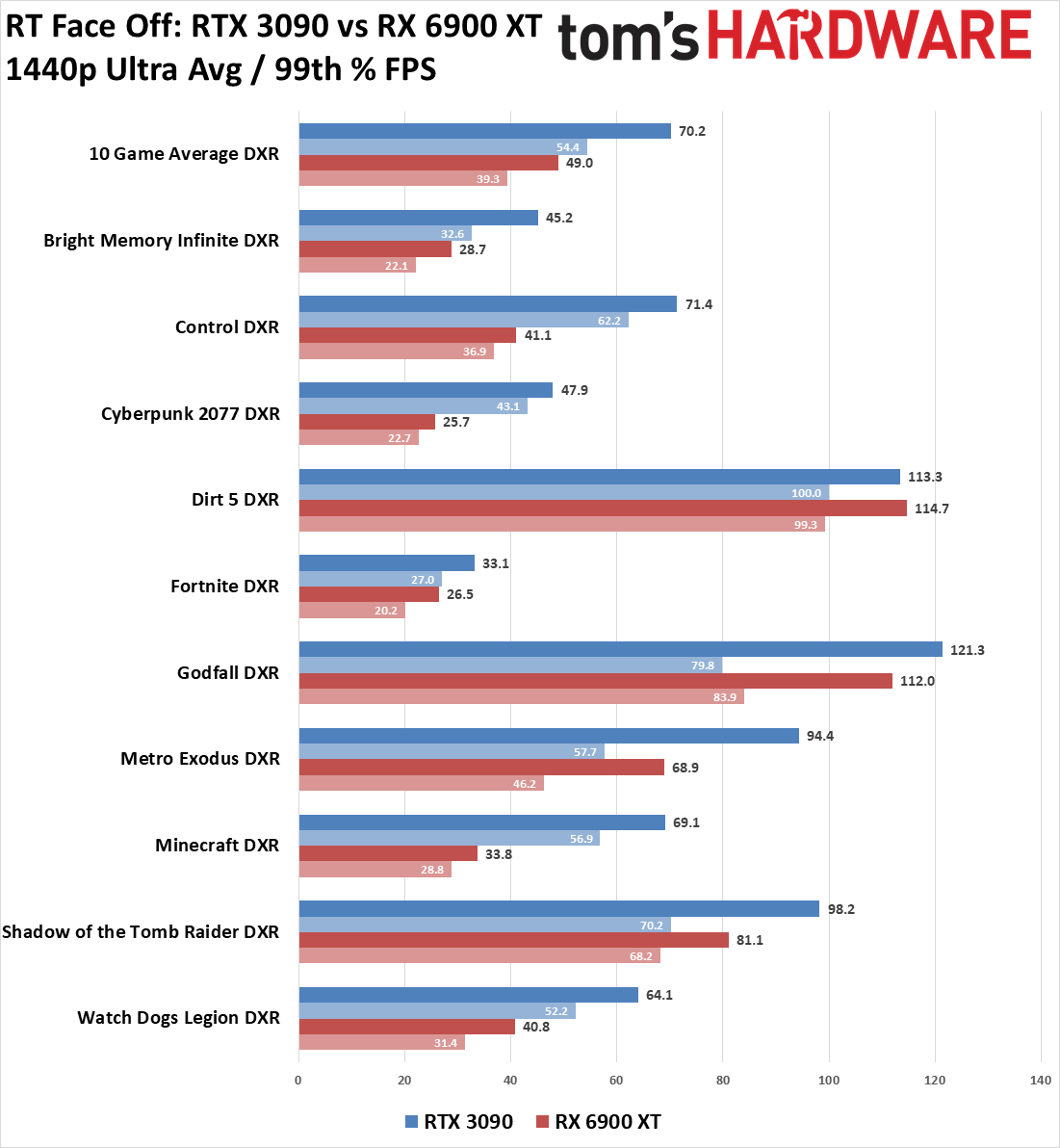

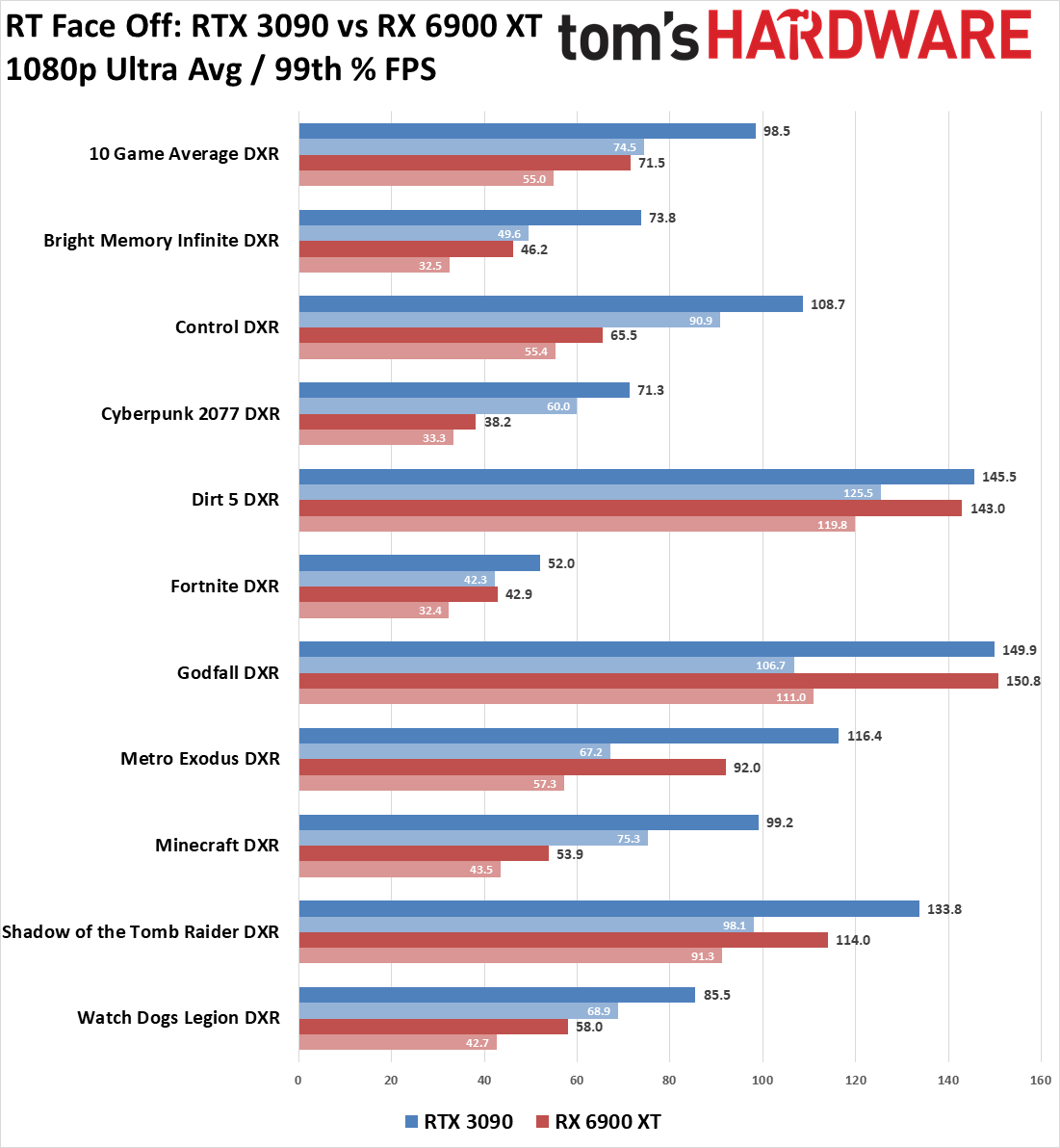

If you're interested in playing games with the enhanced visuals that ray tracing offers, things skew heavily in favor of Nvidia's RTX 3090. We've only tested at 1440p and 1080p this time because 4K proves to be too much for most GPUs, though if you're okay with 30 fps, the 3090 can still suffice. We've selected ten games with DXR support, including two AMD-promoted games. It's worth pointing out up front that the AMD-promoted games (Dirt 5 and Godfall) only use RT for shadows so far, which is arguably the worst use of ray tracing power. That means all four of the released AMD-promoted games with RT (Riftbreaker and World of Warcraft: Shadowlands are the other two) are at best on the same level of RT tech as Shadow of the Tomb Raider and Call of Duty, only this time with AMD-flavored optimizations. But that's a topic for another day; let's talk about performance.

First up, at 1440p with native rendering, the 3090 averages 70 fps in our overall metric, 43% higher than the 6900 XT's 49 fps. The 3090's results range from 33 fps (Fortnite, at 'medium-high' RT settings; maxed-out settings in Fortnite would further drop performance for a negligible increase in image quality) to 121 fps (Godfall). AMD's RX 6900 XT only comes close to the 3090's DXR performance in two games, Dirt 5 and Godfall, both of which are AMD-promoted. The 6900 XT actually wins in Dirt 5, which seems suspicious at best, but the RT shadows really don't affect the game's visuals much. The biggest loss for the 6900 XT comes in Minecraft, which uses DXR to do "full path tracing," pushing the RT hardware to its limits: the 3090 delivers more than double the performance of the 6900 XT in that case.

Even 1080p native rendering with RT enabled is demanding. The RTX 3090 still leads by 38% overall, a bit less than its lead at 1440p, as CPU limits and other factors come into play at lower resolutions. Most of the individual results tighten a bit; Minecraft, for example, now gives the RTX 3090 a mere 84% lead.
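The leads quoted in this section are ratios of average frame rates, and a lead isn't the mirror image of a deficit. A short Python sketch using the 1440p DXR averages quoted above (70 fps vs. 49 fps); the function names are illustrative only:

```python
def lead_pct(fast_fps: float, slow_fps: float) -> float:
    """How much faster the first card is, as a percentage of the slower card."""
    return (fast_fps / slow_fps - 1.0) * 100.0

def deficit_pct(fast_fps: float, slow_fps: float) -> float:
    """How much slower the second card is, as a percentage of the faster card."""
    return (1.0 - slow_fps / fast_fps) * 100.0

# 1440p native-resolution DXR averages from the text
rtx_3090, rx_6900xt = 70.0, 49.0
print(f"RTX 3090 lead:      {lead_pct(rtx_3090, rx_6900xt):.0f}%")
print(f"RX 6900 XT deficit: {deficit_pct(rtx_3090, rx_6900xt):.0f}%")
```

So a 43% lead for the 3090 means the 6900 XT is about 30% slower. By the same logic, an 84% lead in Minecraft leaves the 6900 XT at roughly 54% of the 3090's frame rate.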

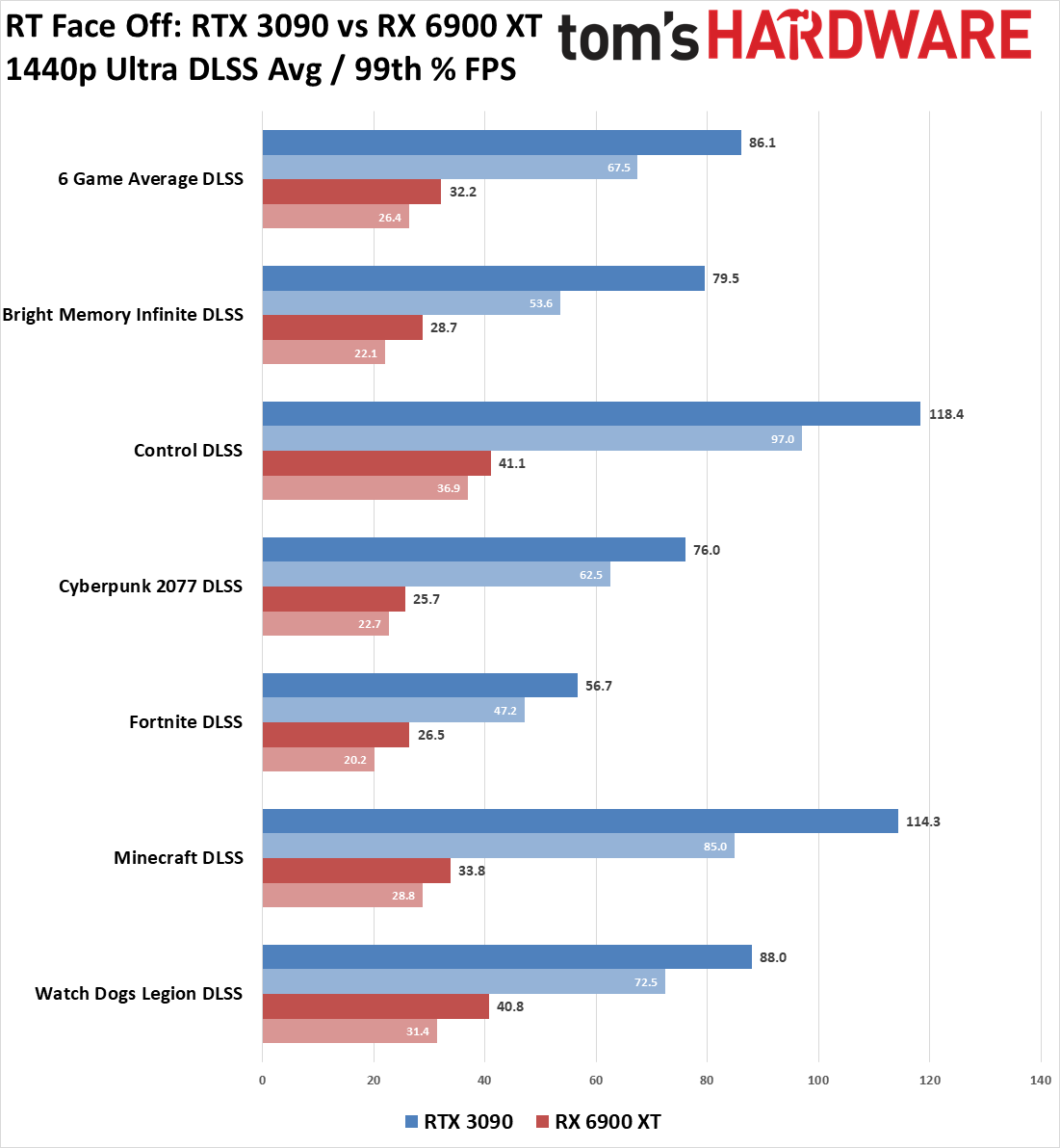

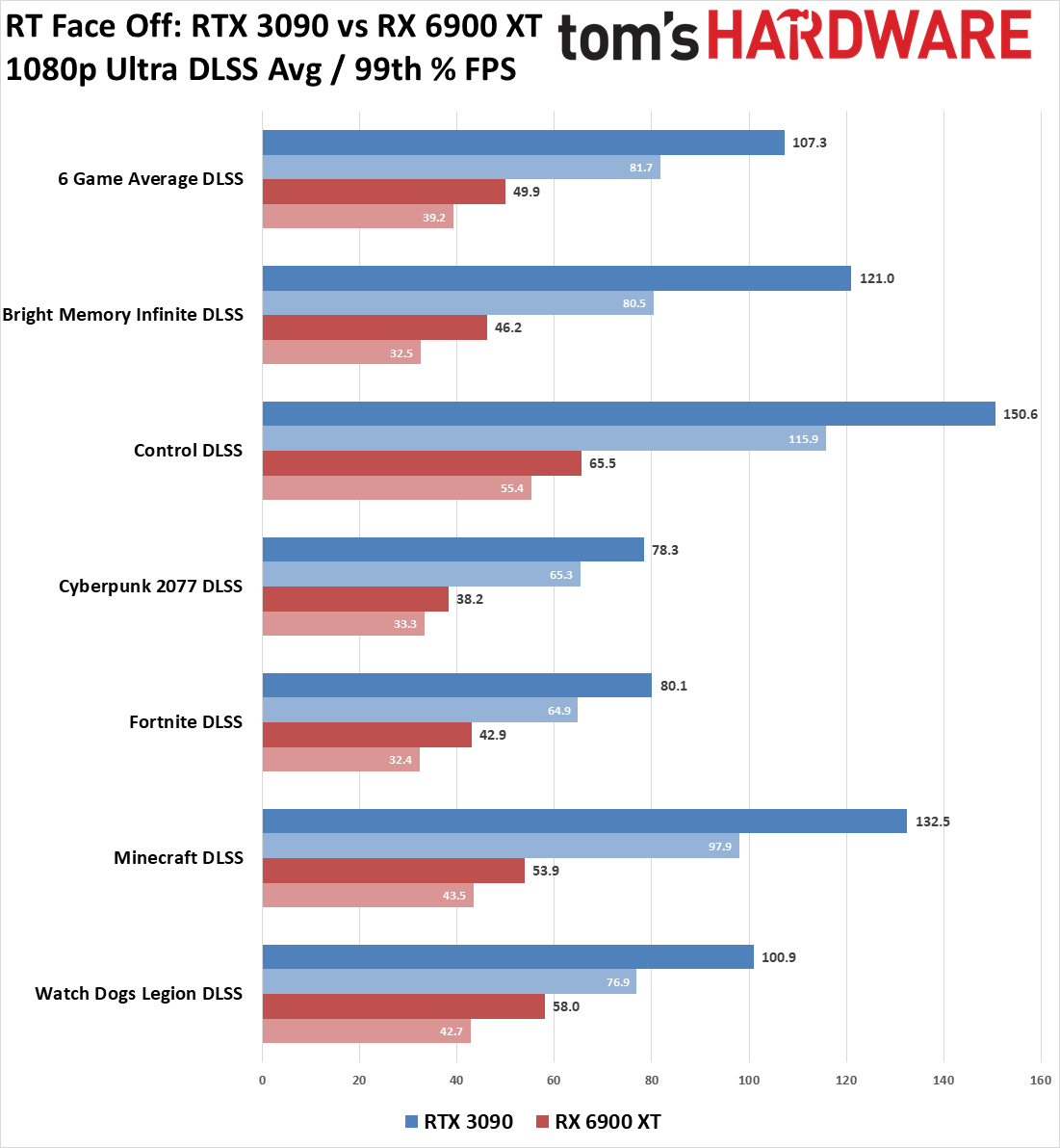

If that wasn't bad enough, enabling DLSS further increases the RTX 3090's lead. We only tested games with DLSS 2.0 (so we skipped Metro Exodus and Shadow of the Tomb Raider for now), and we only used DLSS Quality mode for those games. That means this is the lowest performance you'll see from DLSS, but also the best visual quality. This is basically the "free performance with no truly discernible loss in image quality" setting. The result for the 3090 is 62% higher performance on average at 1440p and 35% higher performance at 1080p, compared with native rendering.
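DLSS gains its speed by rendering fewer pixels and then upscaling the result. A minimal Python sketch of the Quality preset's internal render resolution, assuming the commonly cited 1.5x per-axis scale factor (about 67%); that ratio is an assumption here, not a figure from our testing:

```python
# Internal render resolution for DLSS 2.0 Quality mode.
# Assumption: Quality mode renders at 1/1.5 of the output size on each axis.
QUALITY_DIVISOR = 1.5

def internal_resolution(width: int, height: int, divisor: float = QUALITY_DIVISOR):
    """Return the (width, height) rendered before DLSS upscales to the output size."""
    return round(width / divisor), round(height / divisor)

for w, h in [(2560, 1440), (1920, 1080)]:
    iw, ih = internal_resolution(w, h)
    share = iw * ih / (w * h)
    print(f"{w}x{h} output -> {iw}x{ih} rendered ({share:.0%} of the pixels)")
```

Rendering only about 44% of the output pixels is where the extra frame rate comes from. The measured gains (62% at 1440p, 35% at 1080p) are smaller than the pixel reduction alone would suggest, presumably because the upscaling pass has its own cost and CPU limits bite harder at 1080p.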

AMD doesn't have a shipping alternative to DLSS yet; we're still waiting for FidelityFX Super Resolution (FSR), which, based on rumors, might show up in Resident Evil Village next month. Even if it can boost performance, there's still the question of whether it can match DLSS 2.0 on image quality. Without FSR, right now, the 3090 more than doubles the performance of the RX 6900 XT at both 1080p and 1440p, and the gap would be even larger at 4K. More importantly, the 3090 averages 86 fps at 1440p in the RT+DLSS games we tested, with only Fortnite falling short of the 60 fps mark.

RT isn't required for DLSS to work, either — Death Stranding, Marvel's Avengers, Outriders, and several other games support DLSS but don't implement ray tracing. It's not 'perfect' if you spend time pixel-peeping screenshots looking for artifacts, but the reality is that when playing most games that implement DLSS 2.0 (or later), especially at the Quality preset, you'll definitely notice the boost in fps and likely won't notice any loss of image quality. With Unreal Engine and Unity both providing engine-level support for DLSS 2.0, we'll likely see plenty more games flipping that switch in the future, which means AMD's GPUs will fall further behind.

Winner: Nvidia

Overall, the GeForce RTX 3090 is undoubtedly the fastest gaming GPU currently available. AMD's RX 6900 XT looks pretty good if you limit the comparison to games that don't support ray tracing or DLSS, but add in either of those, and it falls behind, often by a lot. There are more than 30 shipping games with DLSS 2.0 support and at least two dozen games with various forms of ray tracing, with more coming in both categories. Without FSR, AMD can't hope to compete with Nvidia's best, and even FSR may not be sufficient. Until the next-generation Nvidia Hopper / RTX 40-series (or whatever it ends up being called) arrives next year, the RTX 3090 will likely remain the fastest consumer graphics card.

2. Price

Short of winning the lottery, aka the Newegg Shuffle, prices and availability for the RTX 3090 and RX 6900 XT are both horrible. Nominally, the RX 6900 XT costs $1,000 and the RTX 3090 starts at $1,500. Even if you get picked for a card at Newegg, we've generally seen prices of around $1,500 or more on the 6900 XT and $2,000 and up for the 3090. That's bad, but our GPU pricing index gives an average sold price of $1,850 for the RX 6900 XT and over $3,000 for the RTX 3090. Yikes!

Again, finding either card in stock at less than eBay prices will prove difficult. Given enough time, you might get lucky, but scalpers and other opportunists aren't sitting still. We'd be curious to know what percentage of GPUs acquired via lottery systems ultimately end up being sold elsewhere at a profit.

Whether you go with MSRPs, Newegg Shuffle prices, or even eBay pricing, however, one thing is abundantly clear: The RX 6900 XT costs a lot less than the RTX 3090. It's certainly not as fast when it comes to ray tracing, but it can hold its own in games that use only rasterization. In terms of bang for the buck, then, AMD's RX 6900 XT comes out on top.

The problem is that the RX 6900 XT doesn't exist in a vacuum. It's nearly the same card as the RX 6800 XT, just with up to 10% higher performance (best-case). The RX 6900 XT outperforms the RX 6800 XT across our entire test suite by 6%–8%. We could throw the RTX 3080 into the mix as well, though the RTX 3090 at least has more than double the 3080's VRAM. Anyway, there are much better values to be had, and neither one of these cards is worth the current asking price.

Winner: AMD, begrudgingly

Considering current prices put the RX 6900 XT at anywhere from $500–$1,200 less than the RTX 3090, it's easy to say that AMD's card offers the better value. The exception is cryptocurrency mining, where the RTX 3090 is nearly twice as fast and doesn't cost twice as much. Anyone spending this much money on a gaming GPU probably isn't overly worried about the price difference, however, and would likely pay the premium to get Nvidia's superior RT and DLSS performance. Most users should just wait for prices to come down.
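"Bang for the buck" can be made concrete as dollars per unit of performance. A rough Python sketch, using the MSRPs and approximate eBay averages quoted in this article and, as an assumed yardstick, the RTX 3090's 8% lead in 4K rasterization; the helper name is illustrative:

```python
# Dollars per unit of relative 4K rasterization performance (RX 6900 XT = 1.00).
# Prices are the MSRPs and approximate eBay averages quoted in this article;
# the 1.08 factor is the RTX 3090's 8% 4K rasterization lead.
PERF = {"RX 6900 XT": 1.00, "RTX 3090": 1.08}
PRICES = {
    "MSRP": {"RX 6900 XT": 999, "RTX 3090": 1499},
    "eBay": {"RX 6900 XT": 1850, "RTX 3090": 3000},  # approximate, April 2021
}

def cost_per_perf(price: float, perf: float) -> float:
    """Price divided by relative performance: lower is better value."""
    return price / perf

for source, prices in PRICES.items():
    for card, perf in PERF.items():
        print(f"{source:<4} {card:<10} ${cost_per_perf(prices[card], perf):,.0f}")

# Mining check: the 3090 is "nearly twice as fast" at mining, but on eBay
# it costs only about 1.62x as much as the 6900 XT.
ebay_ratio = PRICES["eBay"]["RTX 3090"] / PRICES["eBay"]["RX 6900 XT"]
print(f"eBay price ratio: {ebay_ratio:.2f}x")
```

At MSRP, the 6900 XT costs about 28% less per unit of 4K rasterization performance ($999 vs. roughly $1,388). Swap in the 3090's 43% ray tracing lead at 1440p instead, and its figure drops to about $1,049, nearly erasing AMD's value advantage.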

3. Features and Tech

Unlike other categories where things can shift a lot based on the specific GPUs being compared, features and tech are relatively consistent across the entire range of modern GPUs. We're basically looking at how AMD's RDNA2 architecture compares to Nvidia's Ampere architecture, along with other ecosystem extras like G-Sync and FreeSync. As such, our verdict here is the same as we've offered in the RTX 3070 vs. RX 6700 XT face-off.

Most importantly, and we've touched on this already, Nvidia DLSS has no direct competition yet. Don't even get us started on Radeon Boost, which can certainly improve framerates but often causes stutters and frame pacing issues when it starts and stops. In supported games, it's very difficult to spot the differences between DLSS Quality mode and native resolution with TAA, until you look at framerates and see DLSS running 20–50% faster. FidelityFX Super Resolution will need to be very good to catch DLSS, and it will also need developer support; both will take time.

Nvidia's Tensor cores can be used for stuff besides gaming as well. Background blur and replacement features are common in a lot of video conferencing solutions, but Nvidia Broadcast offers clearly superior performance and quality. The noise elimination on the audio side is particularly impressive and can do wonders for anyone with a less than ideal recording environment. Where else will the Tensor cores end up being useful? We don't know, but we're looking forward to finding out.

Elsewhere, technologies that seem similar still end up favoring Nvidia. G-Sync and FreeSync ostensibly provide the same thing: tear-free gaming and adaptive refresh rates. In practice, however, G-Sync tends to work better. Nvidia GPUs can also drive FreeSync displays in G-Sync Compatible mode, while FreeSync can't work on a G-Sync display. Yes, G-Sync monitors tend to cost more, but the display is the one item you're constantly looking at when using a computer, so it's worth spending an extra $100–$200 on a better one. The same goes for Radeon Anti-Lag versus Nvidia Ultra-Low Latency (NULL) mode and Reflex. Anti-Lag and NULL both improve latency by minimizing buffering, but NULL tends to be a bit better, and games that directly incorporate Reflex deliver far superior latency.

At the extreme end of the spectrum, Nvidia also wins when it comes to VRAM. 24GB of 19.5Gbps GDDR6X on a 384-bit bus compared to 16GB of 16Gbps GDDR6 on a 256-bit bus ends up no contest. Almost no games will benefit from having 24GB vs. 16GB of VRAM capacity, but the additional bandwidth Nvidia offers proves useful, particularly at 4K.
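The bandwidth gap follows directly from the memory specs above: peak bandwidth is the per-pin data rate times the bus width, divided by eight bits per byte. A short Python sketch:

```python
def bandwidth_gb_s(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return gbps_per_pin * bus_width_bits / 8

rtx_3090 = bandwidth_gb_s(19.5, 384)   # 24GB GDDR6X
rx_6900xt = bandwidth_gb_s(16.0, 256)  # 16GB GDDR6

print(f"RTX 3090: {rtx_3090:.0f} GB/s, RX 6900 XT: {rx_6900xt:.0f} GB/s")
print(f"Ratio: {rtx_3090 / rx_6900xt:.2f}x")
```

That works out to 936 GB/s versus 512 GB/s, about 1.83 times as much. This counts off-chip DRAM bandwidth only; AMD's 128MB Infinity Cache raises the 6900 XT's effective throughput, which is one reason it stays competitive at lower resolutions.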

Winner: Nvidia

This is more of a subjective category, but we simply find that Nvidia's features work better than AMD's alternatives. The RTX 3090 nabs another win, and it will be hard for AMD to close the gap in this category. Nvidia currently controls about 80% of the gaming GPU market for PCs, and while AMD basically has 100% of the modern console GPU market, that ends up not being a huge factor right now. Nvidia has superior features and tech, and the Ampere architecture beats AMD's RDNA2.

4. Power and Efficiency

Up until the latest generation GPUs, Nvidia had an advantage over AMD in GPU power consumption and efficiency. Today, thanks to TSMC's N7 process, the two companies are mostly at parity. Nvidia would probably be ahead if Ampere were also made on TSMC N7, but instead, it opted for Samsung's 8N process, a refinement of Samsung's 10nm tech. Then again, if the Ampere cards were made at TSMC, there'd be even fewer GPUs to go around.

Looking specifically at the RTX 3090 and RX 6900 XT, we've already covered features and performance, and Nvidia won those categories. The flip side of things is that Nvidia's top RTX 30-series GPUs push power requirements to new highs. The reference RTX 3090 Founders Edition peaks at around 360W of power draw, using our in-line power measurement devices and Powenetics software. The reference RX 6900 XT meanwhile sits at around 305W peak power draw. That means Nvidia uses nearly 20% more power for slightly higher performance in non-RT workloads. Turn on RT and DLSS, however, and efficiency (fps per watt) goes back to Nvidia.
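The efficiency flip can be checked with the numbers in this article. A rough Python sketch, assuming (as a simplification) that the measured peak power of 360W vs. 305W applies to every workload, and using the 8% 4K rasterization lead and the 1440p DXR averages of 70 vs. 49 fps:

```python
# Performance-per-watt comparison, RTX 3090 relative to RX 6900 XT.
# Assumption: the measured peak power (360W vs. 305W) applies to every
# workload, which is a simplification; real draw varies by game.
POWER_3090_W = 360.0
POWER_6900XT_W = 305.0
POWER_RATIO = POWER_3090_W / POWER_6900XT_W  # about 1.18, "nearly 20% more power"

def relative_efficiency(perf_ratio: float) -> float:
    """3090 perf/W divided by 6900 XT perf/W; above 1.0 favors Nvidia."""
    return perf_ratio / POWER_RATIO

raster_4k = relative_efficiency(1.08)     # 8% lead in 4K rasterization
dxr_1440p = relative_efficiency(70 / 49)  # 70 vs. 49 fps with DXR at 1440p

print(f"power ratio:          {POWER_RATIO:.3f}")
print(f"4K raster efficiency: {raster_4k:.3f}")
print(f"1440p DXR efficiency: {dxr_1440p:.3f}")
```

Under that assumption, the 3090 delivers roughly 8.5% less performance per watt in 4K rasterization but about 21% more in the ray-traced suite. Treat these as ballpark estimates rather than measured efficiency figures.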

All of this goes back to the fundamental designs of the respective architectures. Nvidia enhanced Ampere with faster and more capable RT cores and Tensor cores, plus GPU cores that can either deliver double the FP32 throughput or run full-speed FP32 and INT32 calculations concurrently. AMD's solution was to enhance the existing RDNA1 architecture with ray tracing support and a few other DirectX 12 Ultimate features like Variable Rate Shading (VRS) and mesh shaders. Then it wrapped everything up with a massive 128MB L3 Infinity Cache that boosts overall memory throughput and efficiency.

Winner: AMD

The two top-tier cards are pretty close overall, with AMD coming in ahead by virtue of using 50W less power. Keep in mind that these results are for the reference cards running reference clocks, however. We've looked at some custom cards, and power on both sides of the fence tends to be in the 400W–420W range on the highest clocked models. We also don't think a 50W difference in pure power consumption is a huge deal at the top of the performance spectrum. Still, in redlining the GPUs for these exotic offerings, Nvidia pushed into muscle car territory with a massive engine that delivers a lot of horsepower while sucking down fuel. Just make sure your card's cooling is up to snuff — particularly on the GDDR6X memory.

5. Drivers and Software

Both AMD and Nvidia offer drivers on a regular cadence, typically with at least one release each month, more when there are a lot of new games coming out. Not surprisingly, depending on the game, performance and support can swing wildly in favor of one side or the other. A few examples from the past year are worth mentioning.

Watch Dogs Legion ran better at launch on Nvidia hardware, and even though ray tracing worked on AMD's latest generation GPUs, it was sort of broken — it didn't render all the RT effects correctly. It took several months for game patches and driver updates to finally rectify that situation, and Nvidia's GPU still easily wins WDL head-to-head. That was expected, as it was an Nvidia-promoted game: The RTX 3090 manages to run 1440p Ultra with DXR at higher performance than the RX 6900 XT can manage at 1080p. The same thing happened with Cyberpunk 2077, which only just recently received AMD RT support. Forget the buggy launch for a moment; the game simply ran better and looked better on Nvidia's RTX cards. Nvidia still holds the performance advantage, and given it was an Nvidia promotional title, we expected as much.

AMD has promotional games as well, however. Assassin's Creed Valhalla, Borderlands 3, and Dirt 5 fall into that category and generally run better on AMD's latest GPUs — sometimes markedly so. We're not sure what's going on at a lower level in the drivers and software, but Valhalla, in particular, favors the RX 6900 XT by as much as 44% at 1080p. Yeah, that looks incredibly suspicious, but is it any worse than what we see in WDL and CP77 with DXR? That's difficult to say without access to the code.

Zooming out to a higher-level view, looking at a wide swath of games, day-0 driver support and performance tends to be far less extreme than the few examples cited here. AMD and Nvidia mostly have driver updates down to a science, and while occasional problems slip through, they're usually corrected quickly.

What about stability and other aspects of the drivers? There was a lot of noise about black screens with the first-generation AMD Navi cards. We didn't encounter much in the way of problems that couldn't be fixed by a clean driver install, but AMD did acknowledge the issue and patched things up. We haven't noticed any significant problems in the past year or so, and we're not aware of any ongoing major issues with RDNA2 GPUs. Nvidia's Ampere launch drivers for the RTX 3080/3090 also had stability problems, but those were fixed with updates rolled out within a week or so of launch.

AMD most recently added some improvements to its drivers with the Adrenalin 21.4.1 update, which mostly seems to focus on game streaming technologies. Basically, using another device to stream games from your desktop should now work better, and livestreaming games to the internet should also be easier. These were areas where we previously gave Nvidia a slight lead, and while we haven't had time to do extensive testing, we're comfortable in saying both sides do pretty well at drivers.

Winner: Tie

It's easy to get caught up looking at minutiae when discussing drivers, but the reality is that both AMD and Nvidia have been doing this for a long time, and the current state of GPU drivers hasn't caused us any major grief on either side. If you've only used Nvidia GPUs and drivers for the past five or ten years, they're familiar and offer plenty of features. The same can be said for anyone who has only used AMD GPUs and drivers during that time, except that AMD has improved more: where we previously would have given Nvidia the edge, AMD has closed the gap in the past few years. We switch between both vendors' products regularly, which can sometimes be a bit problematic (hint: Display Driver Uninstaller works great for truly cleaning out most cruft), and rarely have any cause for complaint.

| Round | AMD Radeon RX 6900 XT | Nvidia GeForce RTX 3090 |
|---|---|---|
| Gaming Performance | | ✓ |
| Price | ✓ | |
| Features and Technology | | ✓ |
| Power and Efficiency | ✓ | |
| Drivers and Software | ✓ | ✓ |
| Total | 3 | 3 |

Bottom Line

The Radeon RX 6900 XT and GeForce RTX 3090 are closely matched: several categories were tight, and in one we declared a straight-up tie. While we end up with a 3-to-3 scoring verdict, we've listed the criteria in order of importance and put more weight on the categories at the top of the table. That's the tie-breaker, and Nvidia's wins in raw performance and features beat out AMD's wins in price and power.
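The tie-breaker can be written as a simple rule. A hypothetical Python sketch, assuming each round's winner gets one point, a tie gives both cards a point, and equal totals are settled by the highest-ranked round that has an outright winner; this is one reading of "in order of importance," not a formula the scoring explicitly defines:

```python
# Hypothetical formalization of the face-off scoring and tie-breaker.
ROUNDS = [  # listed in order of importance, most important first
    ("Gaming Performance", "Nvidia"),
    ("Price", "AMD"),
    ("Features and Technology", "Nvidia"),
    ("Power and Efficiency", "AMD"),
    ("Drivers and Software", "Tie"),
]

def tally(rounds):
    """One point per round won; a tie awards a point to both sides."""
    score = {"AMD": 0, "Nvidia": 0}
    for _, winner in rounds:
        for side in score:
            if winner in (side, "Tie"):
                score[side] += 1
    return score

def overall_winner(rounds):
    score = tally(rounds)
    if score["AMD"] != score["Nvidia"]:
        return max(score, key=score.get)
    # Tie-breaker: the first round (by importance) with an outright winner decides.
    for _, winner in rounds:
        if winner != "Tie":
            return winner
    return "Tie"

print(tally(ROUNDS), overall_winner(ROUNDS))
```

With the five rounds above, the totals come out 3-to-3 and the rule hands the overall win to Nvidia on the strength of the gaming performance round.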

The sad reality is that price and availability right now are still deal breakers for most gamers. It doesn't matter how good a graphics card is if you can't find one for sale, and that's where we've been for most of the past seven months. Things aren't likely to improve any time soon, either, based on the latest rumblings. Whoever can produce the most cards will inevitably win the overall battle. Based on what we see from the Steam Hardware Survey and our GPU pricing index, Nvidia leads in availability. There were 4.5 times as many RTX 3090 cards scalped on eBay as RX 6900 XT cards, and while the 3090 accounts for only 0.33% of all GPUs in the Steam survey (take Steam's opaque statistics with the usual grain of salt), RX 6000-series GPUs don't show up at all.

Current rumors suggest we'll see an RTX 3080 Ti sometime in the near future, which will theoretically deliver similar performance to the RTX 3090 at a lower price, for those who don't need 24GB of GDDR6X. It's also rumored to come with an improved version of Nvidia's anti-Ethereum mining limiter, which should mean miners won't be as quick to gobble up supplies. While we wait for round two of Nvidia's Ampere GPUs, AMD still hasn't fully fleshed out its RDNA2 lineup, and the RX 6900 XT will likely sit at the top of its stack for at least the next 18 months. That means it might be fall 2022 before we get the next-generation Nvidia Hopper and RDNA3, both potentially using 5nm process technology and hopefully blowing our minds with performance improvements.

For now, Nvidia reigns as the king of the GPU hill. You just need to pay, dearly, for the privilege. Anyone with the funds and wherewithal to acquire either of these cards should be happy with the resulting performance. However, buyer's remorse when looking at the street prices remains a distinct possibility. Let's hope we see some real improvements in availability over the remainder of 2021.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


watzupken: I am not sure what the point is of comparing DLSS with a card that runs at native resolution. The fact that the two cards don't differ that greatly in games in the first place is a clear indication that DLSS will be faster, assuming the game supports DLSS. While it's true that AMD will have its DLSS competitor at some point this year, until then, this comparison is moot.

chalabam: Nvidia is the only one that supports TensorFlow, and even without TensorFlow, 24GB matters a lot in AI.

d0x360 (replying to chalabam): We are talking gaming here. Anyway, I've been looking to upgrade my 2080 Ti FTW3 Ultra, and I figured this generation I'd get a 3080 Ti when it gets released, but next generation... it's highly likely I'll be going AMD.

They are right up there in performance except with ray tracing, BUT it's going up against Nvidia's second-gen RTX, so I wasn't expecting AMD to win there. Navi 3 is going to be a different story: improvements to ray tracing, their own version of DLSS, and likely a lower price.

Hell, AMD could probably beat the 3090 with Navi 2. Increase the memory bus, increase the Infinity Cache, switch to GDDR6X, annnd that's probably all it would take.

Truth is ray tracing still isn't in that many games. That will obviously change but for now you really aren't missing out on much...

My biggest concern is how DLSS is becoming a crutch to get better performance, especially at 4K. Yes, it does look better than native 4K with TAA, simply because of how TAA works. Surely a better method of anti-aliasing is out there somewhere: something that can handle everything like TAA but with the quality of MSAA.

I just don't want devs to skip proper optimization and just toss in DLSS like they did with Horizon ZD and Death Stranding. The fact that a 2080 Ti needs DLSS to run Death Stranding at 4K60 is ridiculous... It's a game designed for the PS4. A high-end PC should not have needed DLSS to get to 60fps... Hell, it should probably run at 4K90 no problem. Then we have Horizon... that game doesn't have DLSS, and it runs terribly. It's the same engine, so what's the issue?

By comparison I can run Forza Horizon 4 at max settings at 4k with 8xMSAA! at a locked 60.

If I drop it down to 4xMSAA which is still insane at 4k in terms of performance penalty the game runs at a solid 90fps.

So this is a game with insanely detailed car models, really good AI, and a 300Hz physics engine that's far more taxing than anything in HZD or Death Stranding... So it must come down to optimization, because 4K with 8xMSAA is essentially the same performance penalty as ray-traced GI, shadows, and reflections all turned on... It might even be a bigger penalty, because MSAA is expensive at 1080p, let alone 4K.

Ok, rant/ramble over lol

Jim90: What an utterly shocking and misleading sales pitch attempt by Jarred/Nvidia, almost on a par with Tom's infamous "Just buy it" piece. Truly pathetic.

This site IS STILL a joke.

Karadjgne: 3090 vs 6900 XT: Which is the better card.....

Obviously the one that you can actually get your hands on. I certainly do not care about a few measly % differences or if one card gets 200fps on 4k and the other one gets 185fps. All that matters is having either one of the cards actually physically inside your pc.

You'd have to be a complete and total moron to pass up the option for either card at this point in time, just for a few fps or a better-quality picture on a 4K monitor.

carocuore: AMD is dead to me. It took the ripofftracing bait just to justify a 50% increase in their prices.

AMD cards died with the 5700 XT. It's not an alternative anymore; it's the same with a different logo.

watzupken (replying to carocuore): I am confused. What are you comparing to show that 50% increase in price? 6700 XT vs 5700 XT? And in case you have not noticed, the prices of ALL graphics cards have gone through the roof.

dehdstar: Yeah, I found it really interesting what AMD did to brag about their efficiency this gen. We knew it was coming when they abstained from releasing a high-end GPU after Vega failed. I mean, there's speculation as to why GCN 3.0 failed. Some articles proposed that the slow adoption of optimizations for Graphics Core Next was the issue. So, we never saw it really shine. The same was said about the 1000 and 2000 series, even though the former did really well and won that generation.

This time around, AMD had the more efficient tech... and they still do. AMD is upping the TDP to 450W next gen, and Nvidia will be pushing closer to 600W, with some articles even reporting 900W, but that might just be for the 4090 Ti. My wife and I notice our energy bill, even with me gaming on my 300W card alone lol. I am not going to go Nvidia until they overhaul their tech, as AMD did, and make it efficient. But someone to whom cost is no object? A die-hard Nvidia fan? Well, they will do everything in their power to swallow that cost and the pretentious, Mac-like pricing scheme lol that Nvidia has been known to impose, at least where their high-end cards are concerned. You'll pay 3,000 for a high-end GPU that runs 1000W, if that's what Nvidia expects you to do for high-end gaming. That's just the enthusiast market for you.

And here's where it gets interesting. I'm reminded of the time Phil Dunphy says, "My, how the turn tables have..."

Just as the Radeon X1800 XT did a long time before it, they schooled Nvidia. Of course, this was known to happen with ATI-designed cards... all the way up till the AMD takeover, when Radeon finally dissipated into a more budget-minded brand, as did AMD's CPUs at the time. Only in modern times has AMD finally stepped up to become a true competitor that is after much more than just the middle market.

The 6000 series is the Radeon equivalent of the Ryzen 1000 series, in that it steps up the game to provide a major headache for the competition, and I think this reborn AMD is here to stay... it has to be, if Intel is not coming into the GPU market. Anyway, I feel like the 5700 XT released to send a message: "Be glad we're not releasing a high-end GPU this year!" I knew something was up when I saw the 5700 XT, with only half the cores, outperforming Vega 64 and Radeon VII, which have 64 and 60 CUs, respectively (something equivalent to a 6800).

The 6900 XT released with one arm tied behind its back, which is to say "power starved," but only to make a point: "We can draw a little over 250W (something around 255W, tops) and nearly match the 3090." But should you open up a little-known tool called More Power Tool? You can bring the 6900 XT neck and neck with a 3090, maybe even more so. Don't touch the clocks; just set the TDP to something closer to the 3090's (just under 350W), and done. It's effectively a budget-minded 3090. Next gen should be interesting, to see what Nvidia does to tighten things up a bit. You can only push existing tech so far before a redesign is warranted, and they are effectively riding the same tech as they had with the RTX 2000 series. It's no lie, they're going to have to throw more processors and power at it to compete. If AMD plans to throw a whopping 450W at the 7900 XT? Things are going to get ugly on Nvidia's side of the table... especially with power and gas prices going up.