Intel Xe DG1 Benchmarked: Battle of the Weakling GPUs

The little engine that couldn't

We first heard about Intel's Xe Graphics ages ago, and even got a preview of the Xe DG1 "Test Vehicle" at CES 2020. As time continues ticking by, what once might have looked like something with the potential to show up as a budget option on our list of the best graphics cards feels increasingly like a part with no real market. Intel looks to be using this as more of a proof of concept than a full-fledged release — a chance to work out the kinks, as it were, before the real deal DG2 launch sometime, presumably later this year or in early 2022. Technically, the DG1 is available, but it can only be purchased with select pre-built PCs. That's because it lacks certain features and requires special BIOS and firmware support from the motherboard to function. But if you could buy it, or if you think one of these pre-built systems might be worthwhile, how does it perform? That's what we set out to determine.

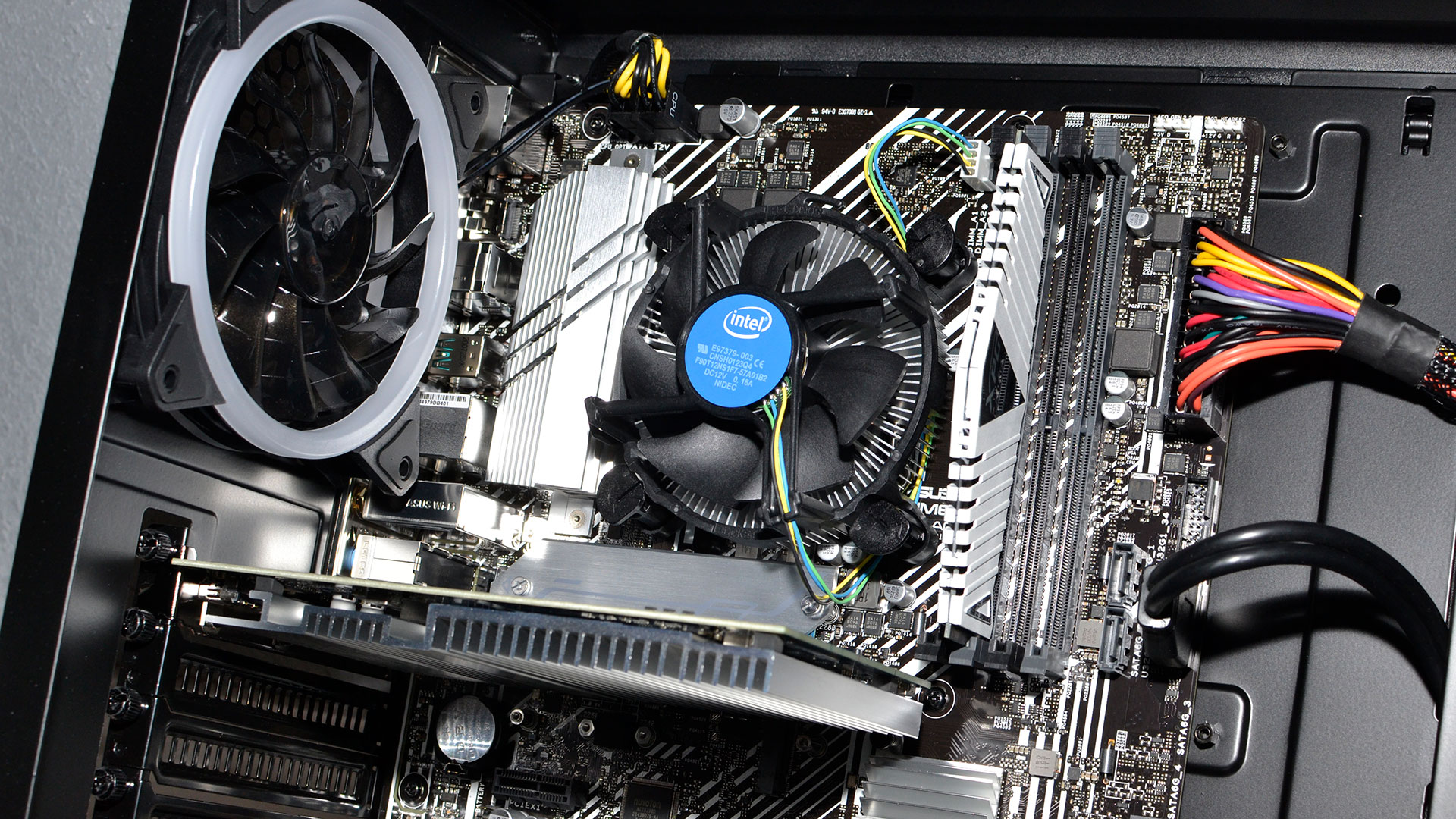

Best Buy currently sells precisely one PC that has an Xe DG1 card. The system comes from CyberPowerPC with a passively cooled DG1 model from Asus, and it might be the only pre-built and DG1 model worth considering, given what you get. Besides the graphics card, the PC otherwise has reasonable-looking specs, including a Core i5-11400F, a 512GB M.2 SSD, 8GB DDR4-3000 memory, and even a keyboard and mouse. One problem, however, is the use of a single 8GB DIMM from XPG. Another concern is the limited storage space: 512GB is enough for typical SOHO use, perhaps, but it can only hold a few of the larger games in our test suite. We fixed both problems by upgrading the memory and adding a secondary storage device.

The system costs $750, and given the specs, we'd normally be able to build our own similar system for around $575, not including the graphics card. Unfortunately, that means we have about $175 to work with on the GPU. If these were normal times, we'd be looking at GTX 1650 or even GTX 1650 Super cards for that price. Sadly, these are still not normal times, as seen in our GPU price index, which means you'd be lucky to find even a GTX 1050 for that price. And that's part of what makes the Xe DG1 potentially interesting: Can it compete with some of the modest AMD and Nvidia GPUs, like the RX 560 and GTX 1050? Spoiler: Not at all, at least not in gaming performance. We also encountered quite a few issues along the way.

Intel Xe DG1 Specifications and Peculiarities

The Xe DG1 straddles the line between dedicated graphics and integrated graphics. Take Intel's mobile Tiger Lake CPU, rip off the Xe Graphics, turn that into a standalone product, and you sort of end up with the DG1. Of course, Intel had to add dedicated VRAM for the card, since it no longer relies on shared system memory. As noted above, it also requires a special motherboard BIOS that supports Intel Iris Xe, which generally limits it to certain B560, B460, H410, B365, or H310C chipset-based motherboards.

The reasons for the motherboard requirement are a bit convoluted. In short, Xe LP, the GPU at the heart of the DG1, was designed first as an integrated graphics solution for Tiger Lake. As such, the VBIOS and firmware get stored in the laptop's BIOS. The DG1 cards don't have an EEPROM to store the VBIOS, which necessitates putting that into the motherboard BIOS. Which of course raises the question of why Intel didn't just put an EEPROM on the DG1, since that should have been possible. Regardless, you can't buy a standalone DG1 card, and even if you buy a system with the card like we did, you can't put the card into a different PC and have it work.

Here's the full rundown of the Xe DG1 specs, with the Nvidia GT 1030 and AMD Vega 8 (AMD's top integrated graphics) that we'll be using as our main points of comparison.

| Graphics Card | Xe DG1 | GT 1030 GDDR5 | GT 1030 DDR4 | Ryzen 7 5700G | Ryzen 7 4800U |
|---|---|---|---|---|---|
| Architecture | Xe LP | GP108 | GP108 | Vega 8 | Vega 8 |
| Process Technology | Intel 10nm | Samsung 14N | Samsung 14N | TSMC N7 | TSMC N7 |
| EUs / SMs / CUs | 80 | 3 | 3 | 8 | 8 |
| GPU Cores | 640 | 384 | 384 | 512 | 512 |
| Base Clock (MHz) | 900 | 1227 | 1152 | ? | ? |
| Boost Clock (MHz) | 1550 | 1468 | 1379 | 2000 | 1750 |
| VRAM Speed (Gbps) | 4.267 | 6 | 2.1 | Up to 3.2 | Up to 3.2 |
| VRAM (GB) | 4 | 2 | 2 | Shared | Shared |
| VRAM Bus Width (bits) | 128 | 64 | 64 | 128 | 128 |
| ROPs | 20 | 16 | 16 | 8 | 8 |
| TMUs | 40 | 24 | 24 | 32 | 32 |
| TFLOPS FP32 (Boost) | 1.98 | 1.13 | 1.06 | 2.05 | 1.79 |
| Bandwidth (GBps) | 68.3 | 48 | 16.8 | 51.2 | 51.2 |
| TDP (watts) | 30 | 30 | 30 | 65 (CPU+GPU) | 15 (CPU+GPU) |

So, there are two different GT 1030 models, and let's be clear that you don't want the DDR4 version. It's terrible, as we'll see shortly. The GDDR5 model has more than twice the memory bandwidth and shouldn't cost too much more, but some people might be thinking, "Yeah, we can save $20 and forego the GDDR5." They're wrong. Less than half the bandwidth makes a slow card even slower, and Intel can easily surpass at least that level of performance, though it will be a closer match between DG1 and the GT 1030 GDDR5.
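
For reference, the bandwidth figures in the spec table follow from the memory speed and bus width. Here's a minimal Python sketch of that arithmetic. The function name is our own invention for illustration, and the inputs are simply the values from the table (with the Vega 8 entry assuming dual-channel DDR4-3200).

```python
# Peak memory bandwidth from per-pin data rate and bus width.
# Inputs are the spec-table values; the helper name is illustrative only.

def bandwidth_gbps(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Return peak bandwidth in GB/s: (Gbps per pin * bus width) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


cards = {
    "Xe DG1": (4.267, 128),
    "GT 1030 GDDR5": (6.0, 64),
    "GT 1030 DDR4": (2.1, 64),
    "Vega 8 (dual-channel DDR4-3200, assumed)": (3.2, 128),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {bandwidth_gbps(rate, width):.1f} GB/s")

# How much more bandwidth the GDDR5 model has than the DDR4 model
ratio = bandwidth_gbps(6.0, 64) / bandwidth_gbps(2.1, 64)
print(f"GT 1030 GDDR5 vs. DDR4: {ratio:.2f}x")
```

The last line works out to roughly 2.9x, which is where the "more than twice the memory bandwidth" comparison comes from.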

At the other end of the Nvidia spectrum, we'll also include a GTX 1050 to show what a faster card can do. That was nominally a $110 card at launch, though the current GPU shortages mean it now goes for around $175 on eBay (that's the average price of sold listings for the past couple of months). But that card has 2GB VRAM while the Intel DG1 has 4GB, so we also tossed in the GTX 1050 Ti (originally $139) and GTX 1650 ($149 MSRP) for comparison in our charts. Those have average eBay pricing of $208 and $295, respectively, even though the MSRPs are much lower — and neither one can effectively mine Ethereum these days. It might feel like we're maybe going too far, as those cards end up in an entirely different league of performance compared to Intel's DG1, but that was the market when the DG1 was in development.

We don't just want to look at Nvidia and Intel, though, so we've also got AMD's Vega 8 integrated GPU via two different processors as another comparison point. One comes thanks to the Asus MiniPC with a 15W TDP AMD Ryzen 7 4800U, to which we've also added 2x16GB HyperX DDR4-3200 memory and a Kingston KC2500 2TB SSD. The other Vega 8 comes via the desktop Ryzen 7 5700G with a much higher 65W TDP, and we've also equipped that system with 32GB of DDR4-3200 memory (CL14, though). The 5700G uses a Zen 3 CPU with eight cores and 16 threads while the mobile chip is a Zen 2 architecture, still with eight cores and 16 threads. We've also included an RX 560 4GB card, which has as much VRAM as the DG1 and GTX 1050 Ti.

In the current market where even slow GPUs are sold at premium prices — the GT 1030 is supposed to be a $70 card, but currently sells for $110–$200 — the DG1's price to performance ratio could be its main selling point. Actually, Intel put some effort into the video encoding and decoding capabilities of the DG1 and seems to be pitching that as another potential use case, but we're mostly interested in the graphics performance right now. On a related note, the DG1 felt quite laggy at 4K just running the Windows desktop at times (when resizing windows, for example), which isn't perhaps the primary intended use, but the 4K decoding and encoding prowess might be overshadowed by other factors.

In raw specs, of the GPUs we've listed above, the DG1 theoretically comes out on top. It has a wider memory bus and more memory bandwidth than the other options, though the Ryzen 7 5700G could spoil the party. It also has more shader cores, coming in with a theoretical 2.0 TFLOPS. But the Intel GPU architectures are less of a known quantity, and drivers are also a concern. Will the DG1 be able to run every game we wanted to test? (Spoiler: Almost.) More importantly, how does it perform while running those games at 720p low and 1080p medium?

Where AMD and Nvidia have Compute Units (CUs) and Streaming Multiprocessors (SMs) that are roughly analogous, Intel's Xe layout continues to use Execution Units (EUs). Each EU has eight ALUs (arithmetic logic units), which is basically what AMD and Nvidia refer to as a GPU "core." It's more like a processing pipeline than a core, but regardless, 80 EUs (out of a theoretical 96) and 640 'cores' should do okay, especially with a 30W TDP.
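
To make the EU math concrete, here's a short Python sketch that converts EUs to 'cores' and then to theoretical FP32 throughput. It assumes the usual convention of one fused multiply-add (two floating-point operations) per core per clock, which reproduces the TFLOPS figures in our spec table. The function and constant names are ours, not anything from Intel.

```python
ALUS_PER_EU = 8  # per the text above: each Xe EU has eight ALUs


def fp32_tflops(shader_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming one FMA (2 FLOPs) per core per clock."""
    return shader_cores * 2 * boost_mhz * 1e6 / 1e12


dg1_cores = 80 * ALUS_PER_EU
print(dg1_cores)                                  # 640
print(round(fp32_tflops(dg1_cores, 1550), 2))     # 1.98
print(round(fp32_tflops(384, 1468), 2))           # 1.13 (GT 1030 GDDR5)
```

These are peak numbers at the rated boost clock, so real-world throughput will be lower and will depend heavily on drivers and memory bandwidth.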

If Intel were to simply scale this up by a factor of 10X, it could potentially create a 300W TDP chip that could do 20 TFLOPS. Rumored plans call for multiple different models of the DG2 GPU, with potentially anywhere from 128 to 512 EUs. Intel has said the architecture for DG2 will also change to include some form of ray tracing support, along with switching to GDDR6 memory, which should provide substantially better performance and features than the DG1. But let's see where Intel's DG1 lands before looking to the future DG2 products.

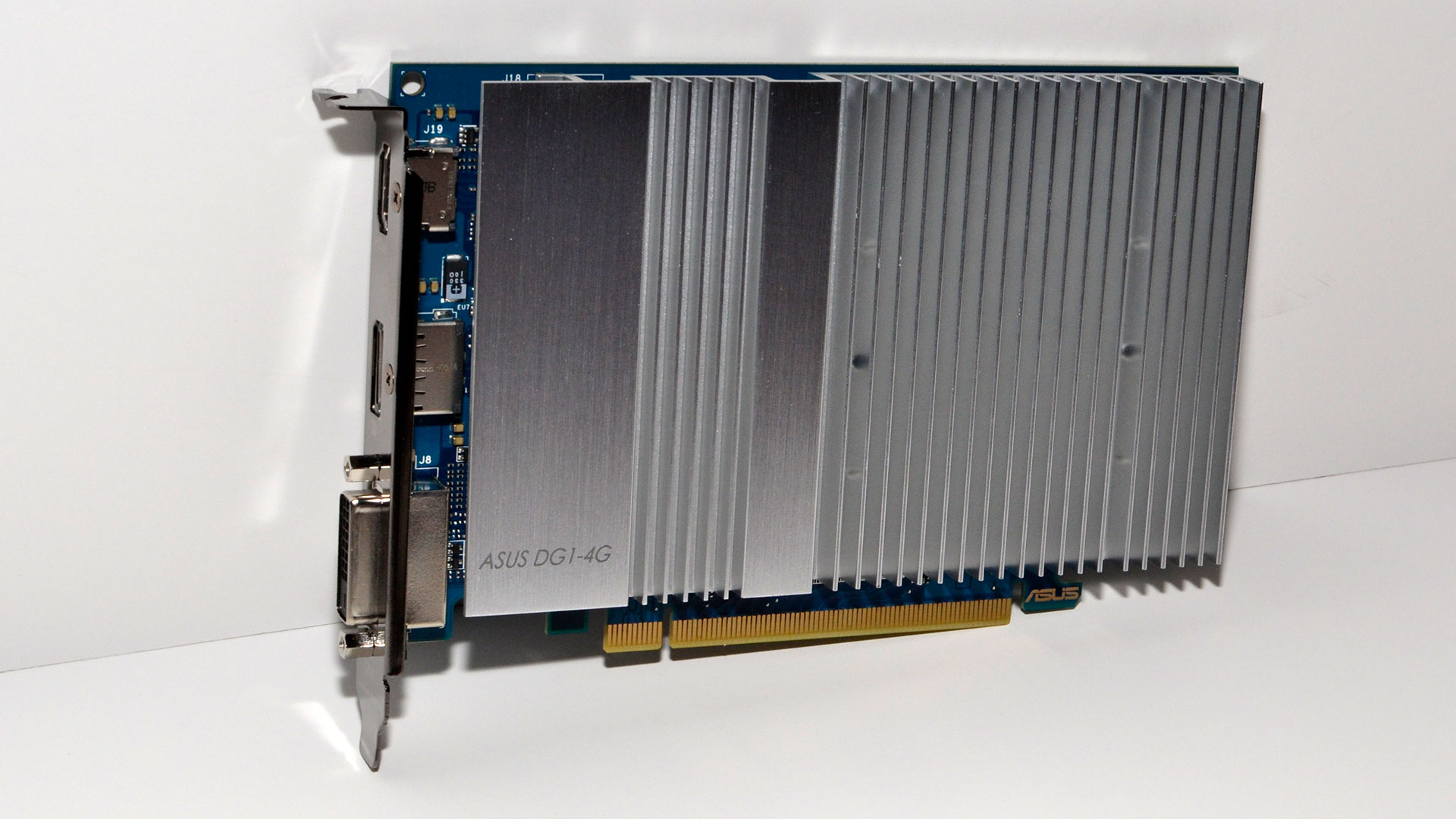

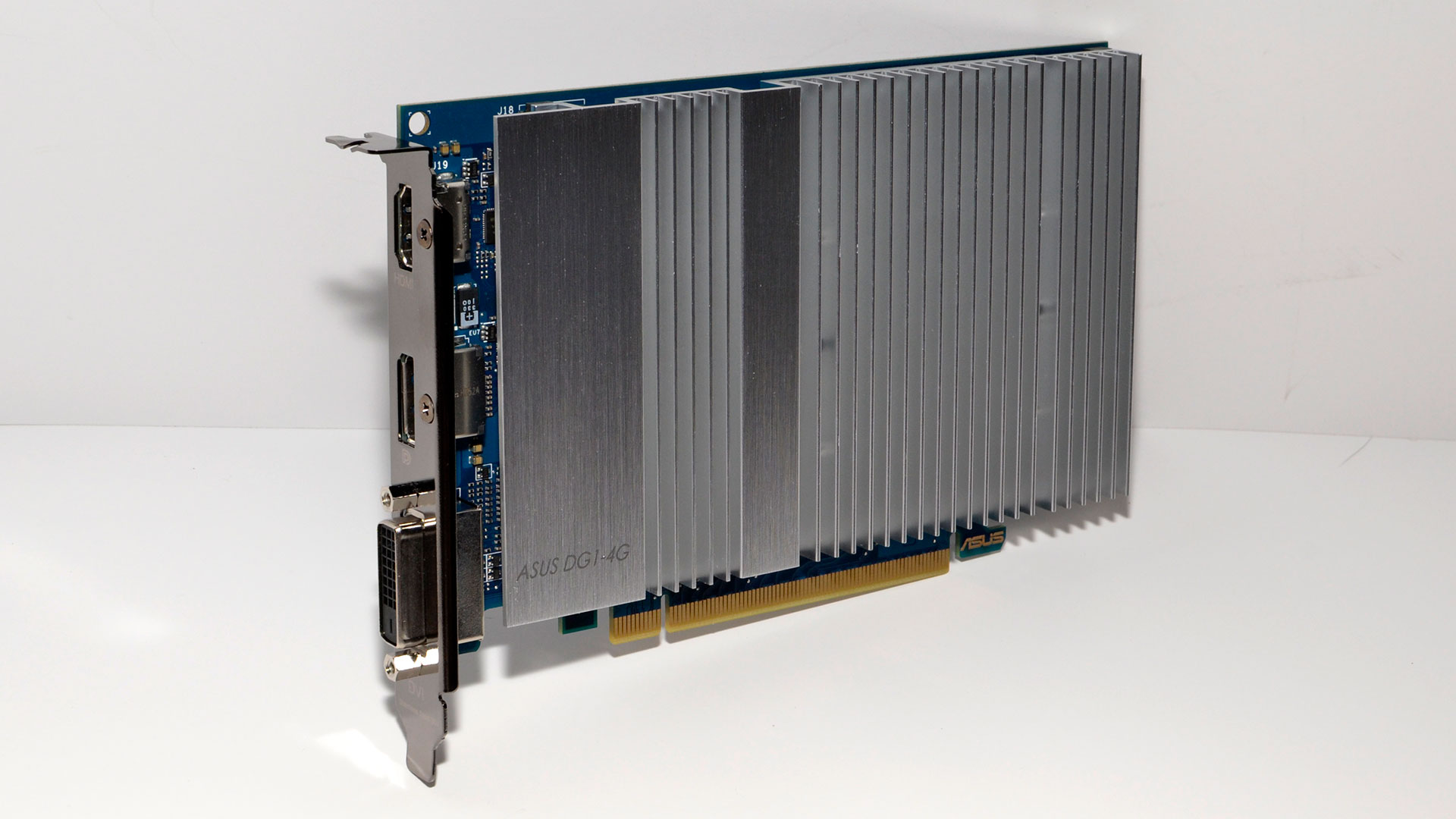

Intel Xe DG1: Asus's Passively Cooled Card

Before we get to the testing, we did want to take a closer look at the Asus DG1. It's an interesting design, opting for a large heatsink covering the entire front portion of the card. After so much time spent testing higher performance graphics cards, it feels almost weird to see a single-slot, passively cooled card. The DG1 only has a 30W TDP, so it should be fine, though a bit of extra airflow near the GPU could likely help. With the default configuration of the system, we didn't see any performance issues, but we were running in a relatively cool 70F environment.

Naturally, the 30W TDP means there's no need for a PCIe 6-pin power connector. The card gets everything it needs from the PCIe x16 slot, though the CyberPower system does include a 600W PSU with two 6/8-pin connectors if you want to upgrade it down the road, like say when graphics cards start to become affordable again. We also tested the GTX 1050, GTX 1050 Ti, GTX 1650, and RX 560 4GB on the same CyberPowerPC, so there are no other factors influencing our performance numbers.

Video outputs cover most of the bases, with single HDMI, DisplayPort, and DVI-D connectors. If you're hoping to do a multi-monitor setup, that's less than ideal, since you'd normally want to have all the displays running off the same type of connector. Still, it means anyone with a monitor built in the last decade or so should be okay — unless you're hoping for an old school VGA connection, in which case you probably need to upgrade more than just your graphics card.

Intel Xe DG1 Test Setup

Because the Xe DG1 requires a special motherboard, our test comparisons will be a bit different from our normal GPU reviews. We normally benchmark graphics cards on a Core i9-9900K system with different RAM, storage, power, etc. But for a low-end GPU, the rest of the components shouldn't matter much — it's not like we're going to end up CPU limited here, particularly with a 6-core/12-thread CPU running at 4.2GHz. Still, the best comparison won't be any recent graphics card.

Besides being sold out, even the GTX 1650 packs significantly more firepower than the DG1. Integrated Vega 6/7/8 graphics solutions from AMD along with Nvidia's GT 1030 theoretically deliver less performance, depending on what metrics we're looking at, so those are the primary comparison points (obviously with a different CPU on the AMD rig). There's more to the DG1 than gaming performance, but that's where we want to start.

Our normal GPU benchmarks hierarchy includes nine games running at 1080p and medium quality, as well as testing at 1440p and 4K, plus additional tests at ultra quality for all three resolutions. All these low-end GPUs struggle a bit even at 1080p medium, never mind the higher resolutions and settings. To get around that, we also tested at 720p and low settings, which is an incredibly low bar to clear — and a good indication of what we might be able to expect from the Steam Deck hardware. Our hope is that the cards should at least deliver playable performance (i.e., more than 30 fps) with these lower settings.

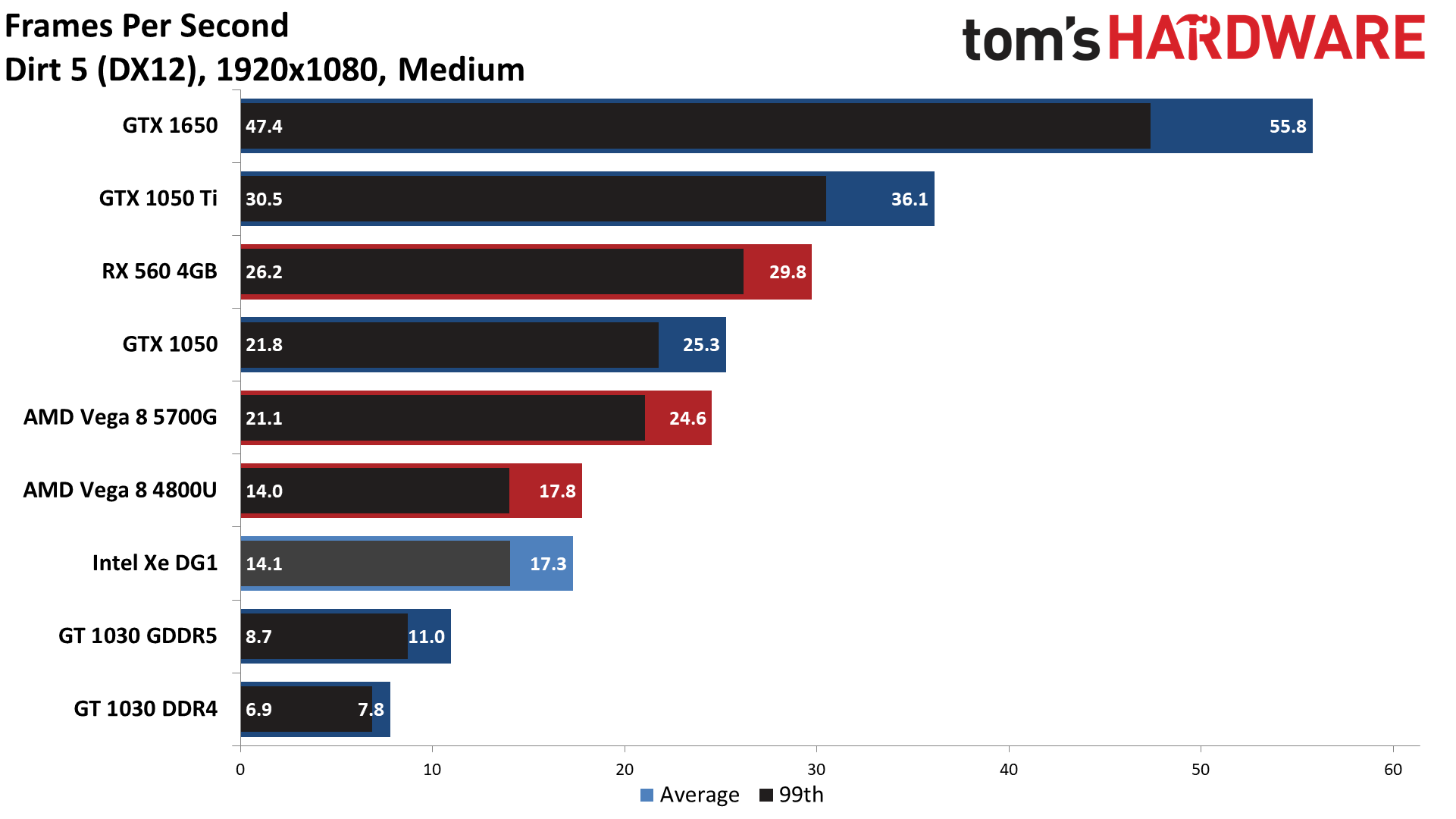

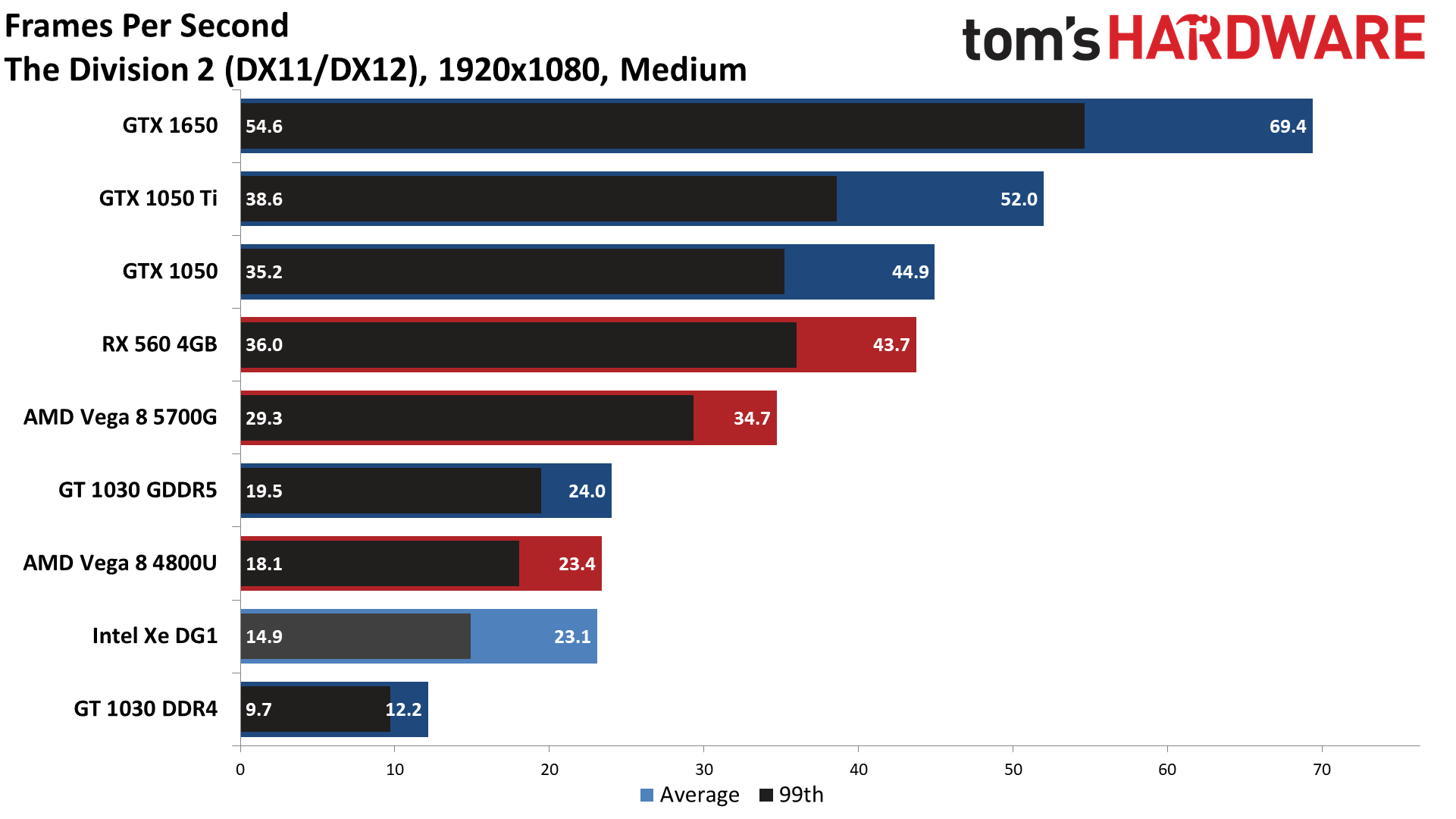

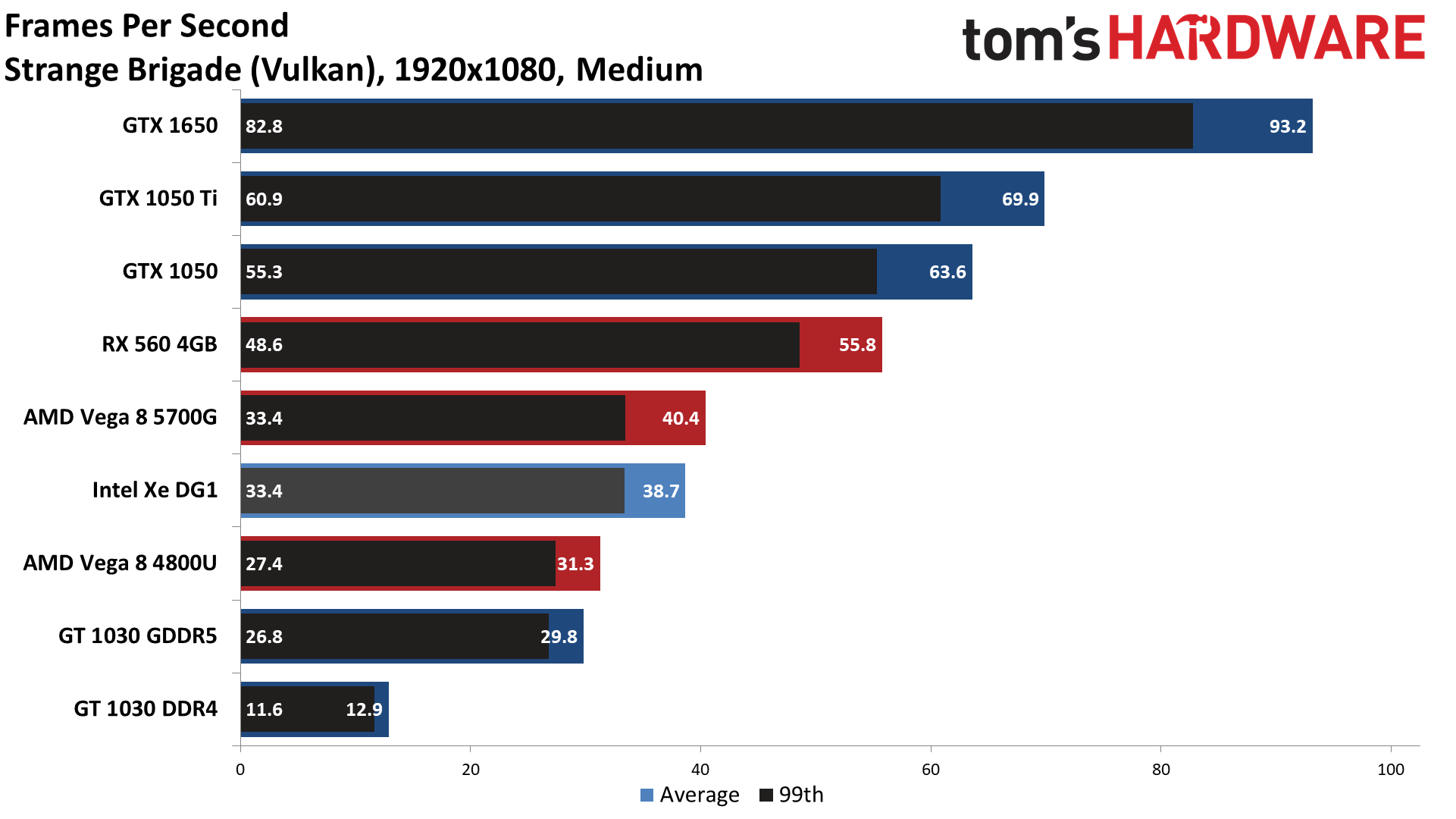

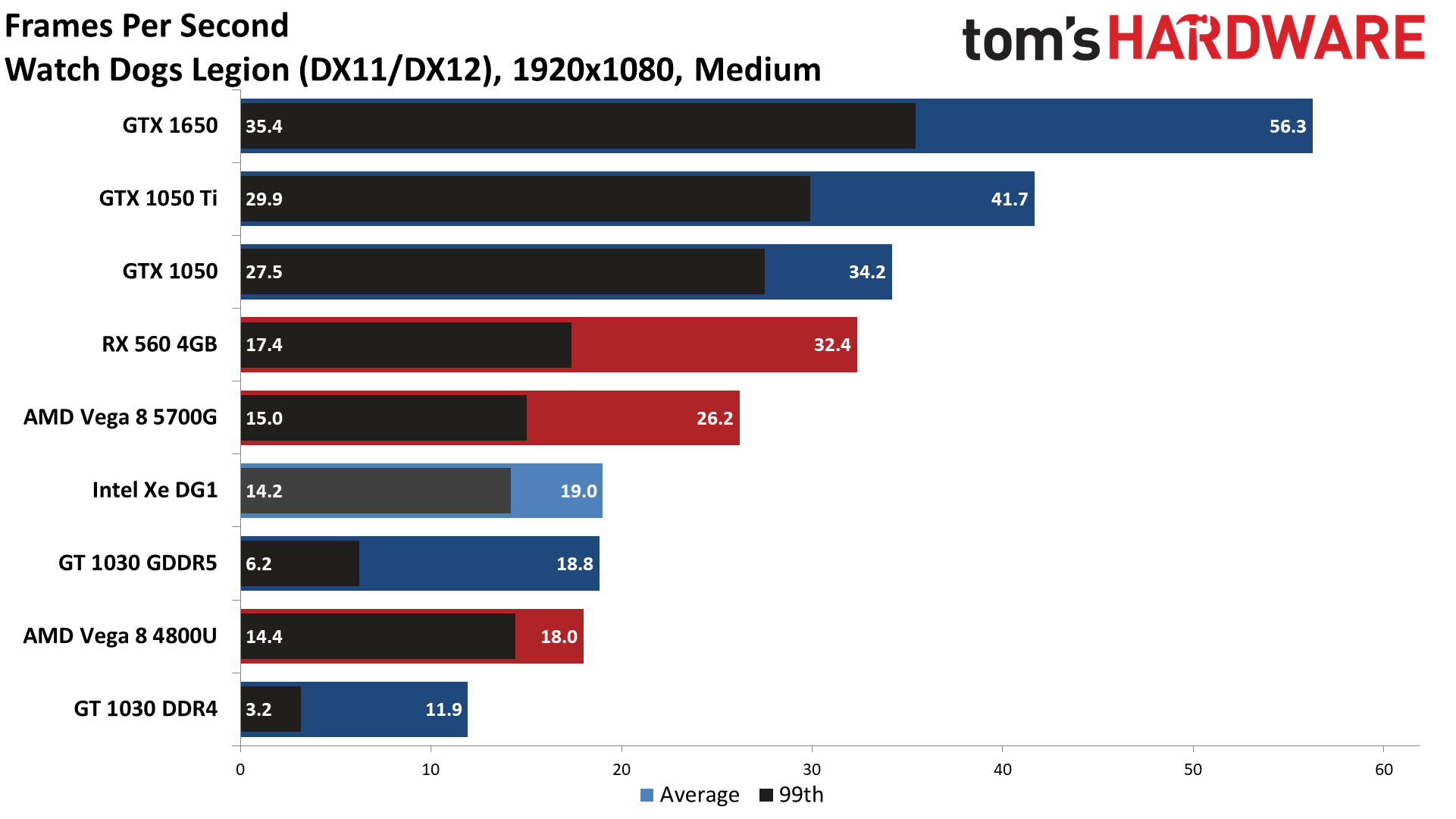

For these tests, we're using an expanded suite of games as well: All thirteen of the games we've been using in recent graphics card reviews (Assassin's Creed Valhalla, Borderlands 3, Dirt 5, The Division 2, Far Cry 5, Final Fantasy XIV, Forza Horizon 4, Horizon Zero Dawn, Metro Exodus, Red Dead Redemption 2, Shadow of the Tomb Raider, Strange Brigade, and Watch Dogs Legion), plus Counter-Strike: Global Offensive, Cyberpunk 2077, Fortnite, and Microsoft Flight Simulator. We're interested not just in performance but also compatibility. Theoretically, every one of these games should run okay at low to medium settings on the Xe DG1, as well as the Nvidia and AMD GPUs. Any incompatibilities will be a red mark against the offending GPU(s).

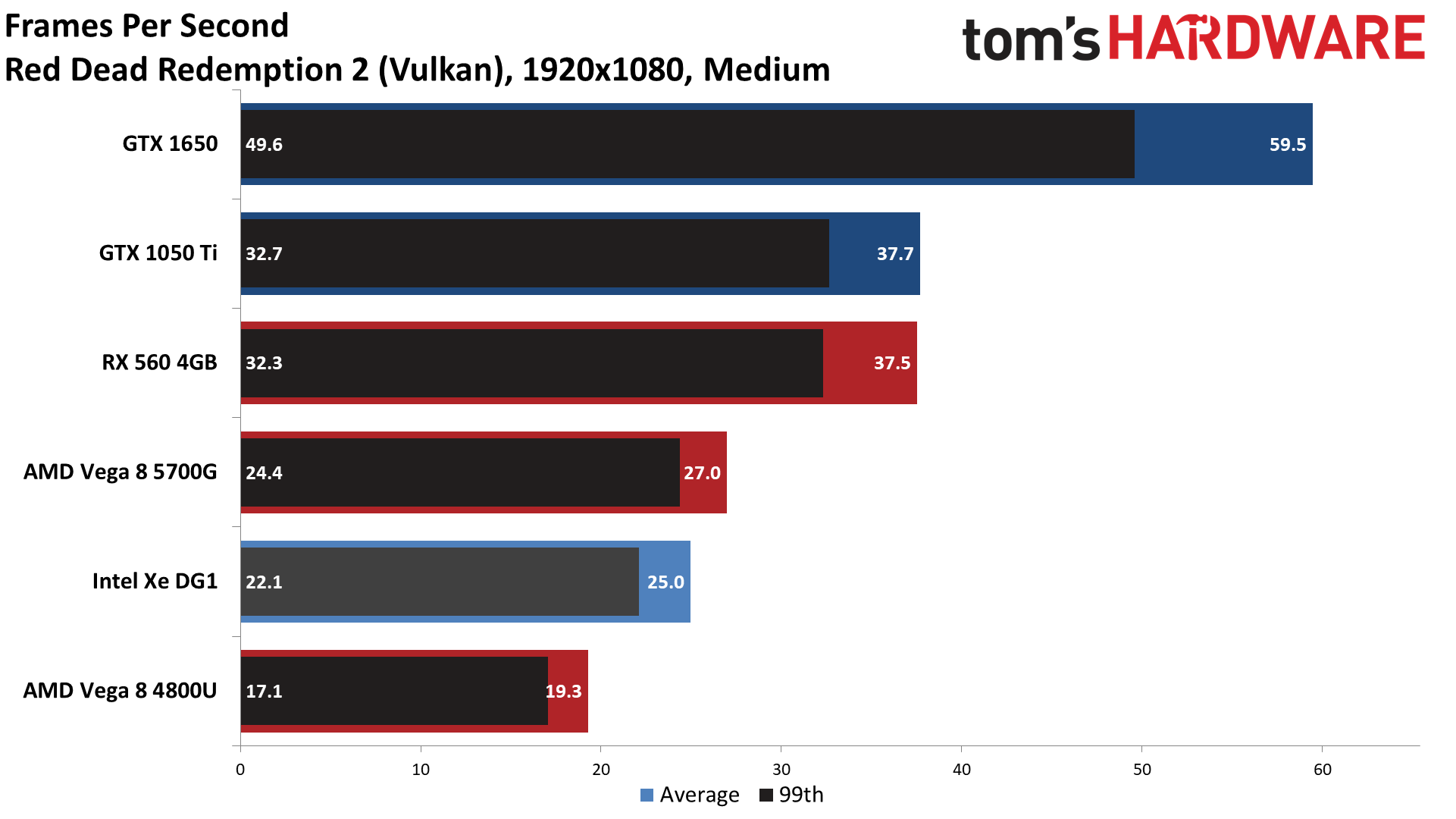

Our test suite covers a fair range of requirements, from older or lighter games to more recent and demanding games that might struggle to run at all. For example, Assassin's Creed, Dirt 5, Horizon Zero Dawn, Red Dead Redemption 2, and Watch Dogs Legion all tend to have higher VRAM requirements — 4GB is just barely sufficient in some cases, and the 2GB cards were blocked from attempting RDR2 at anything more than our minimum 720p low settings. CSGO meanwhile ought to run fine, considering it works on potato PCs.

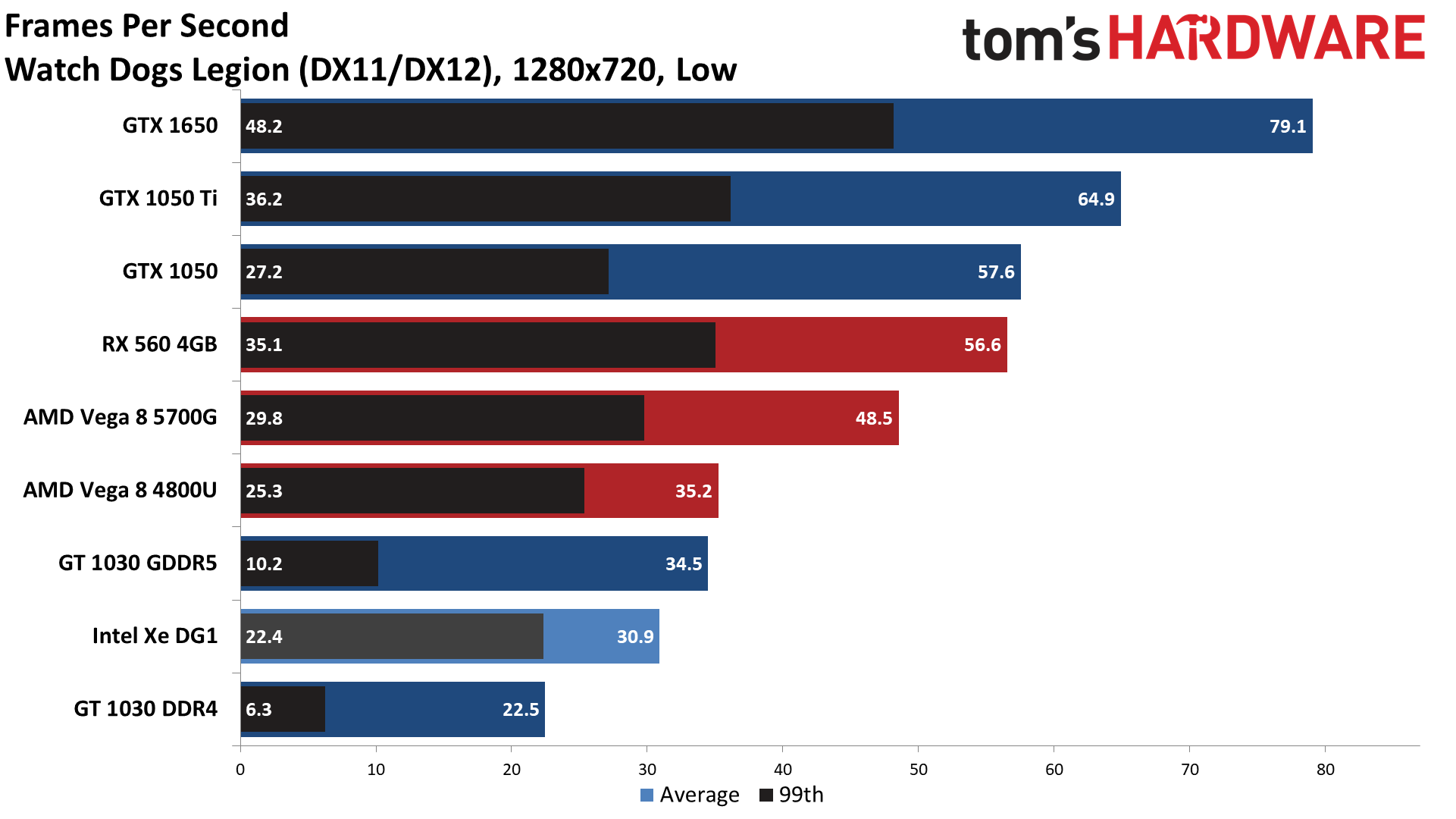

Intel Xe DG1 Graphics Performance at 720p Low

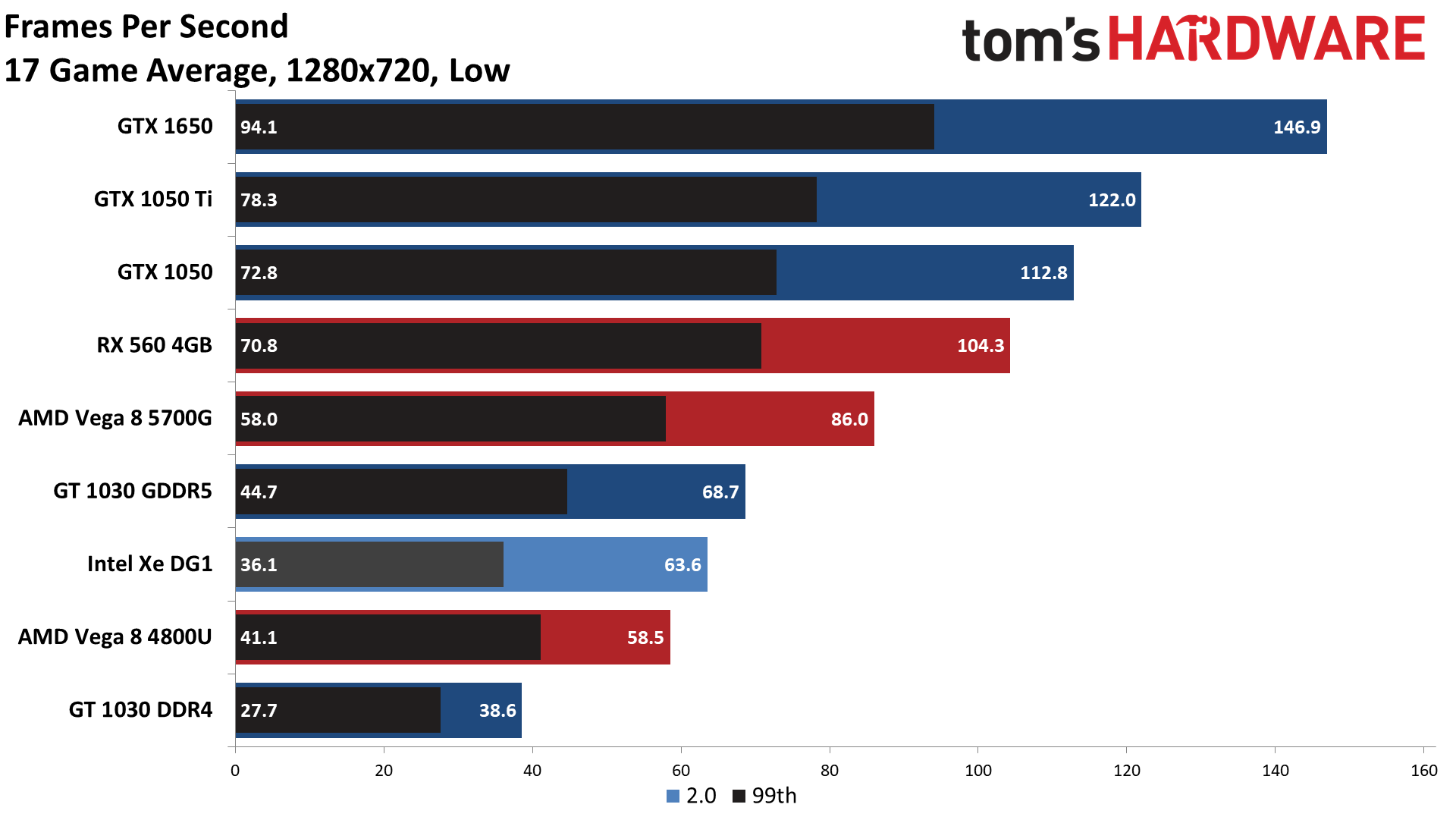

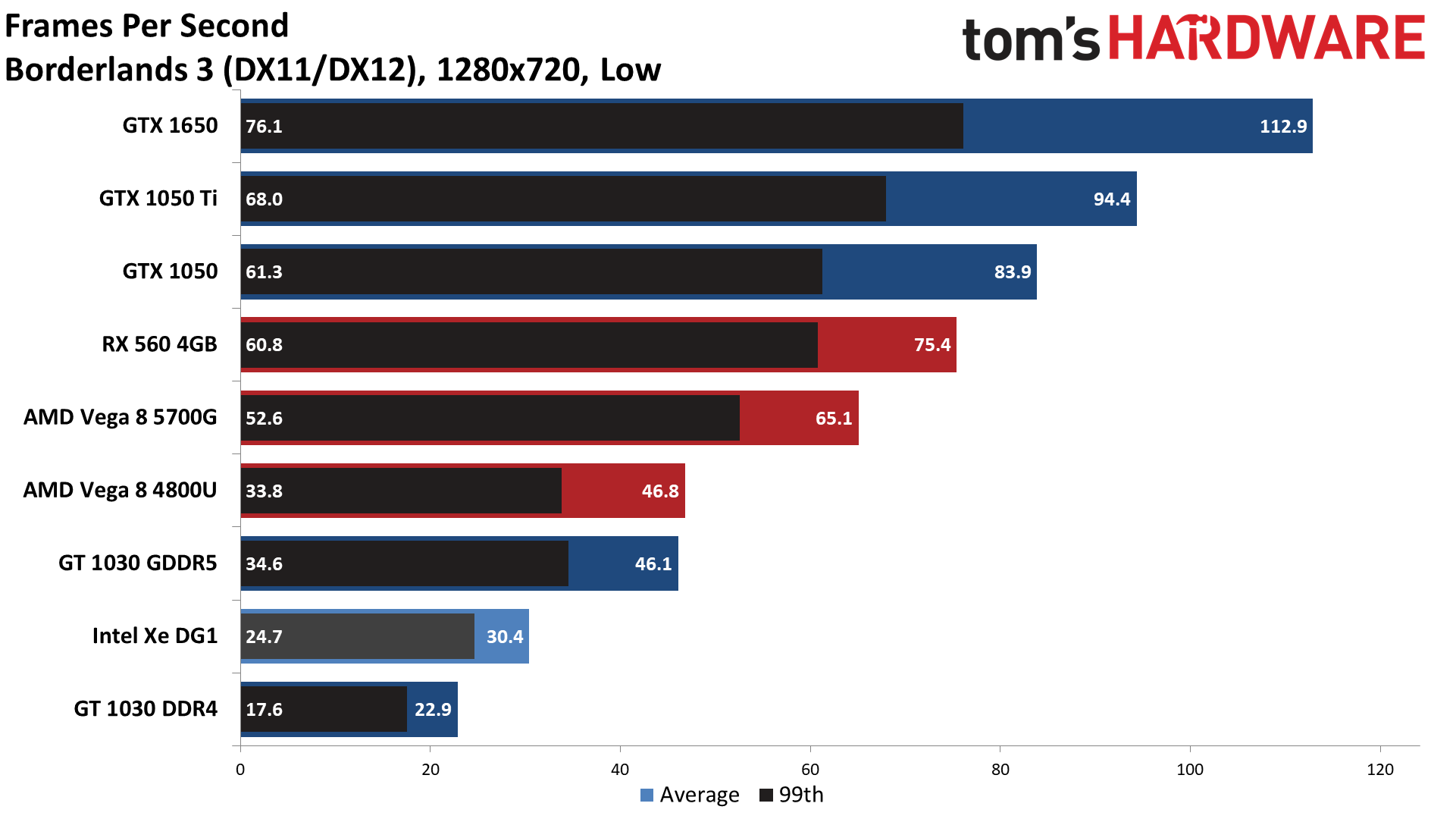

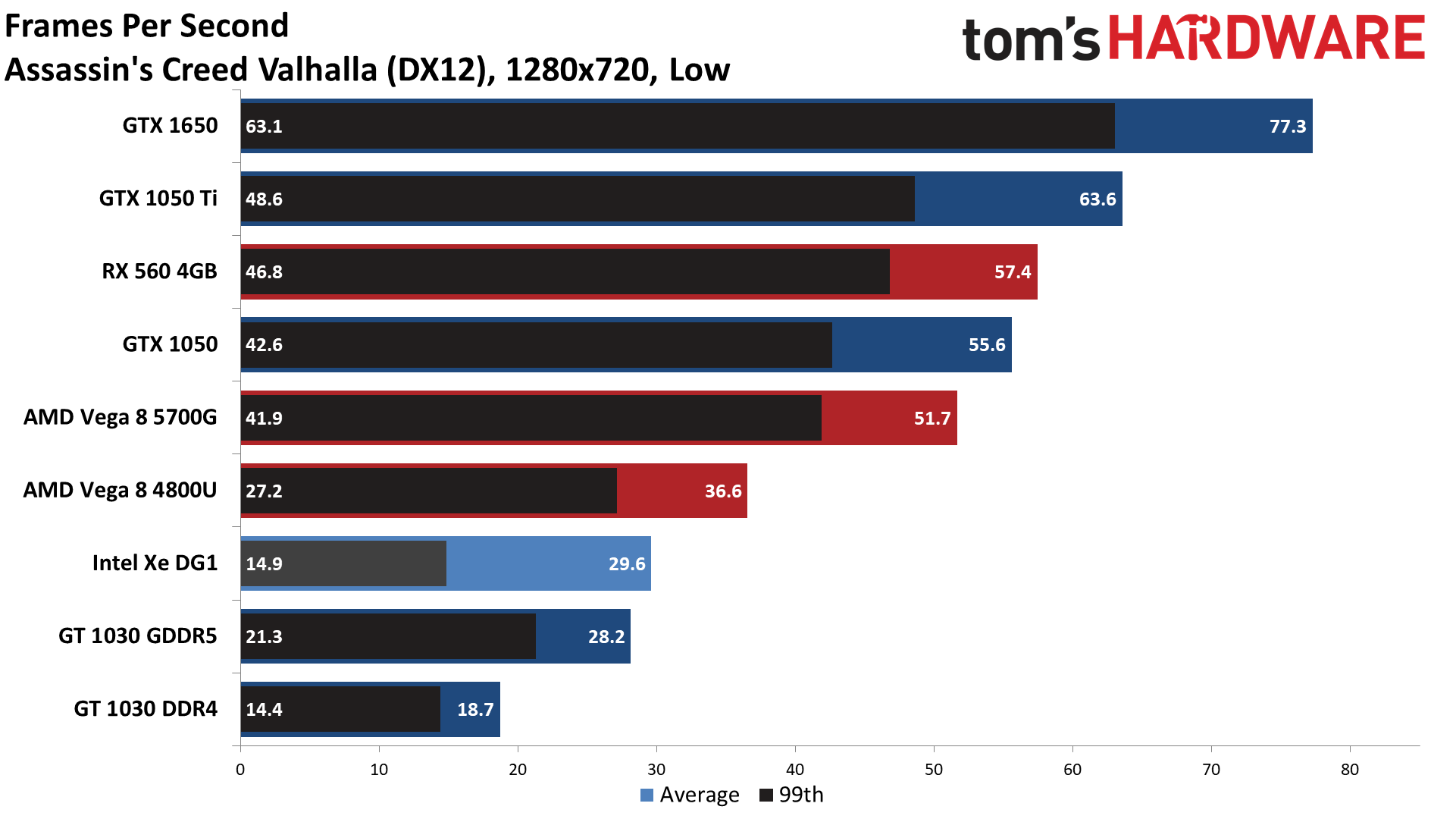

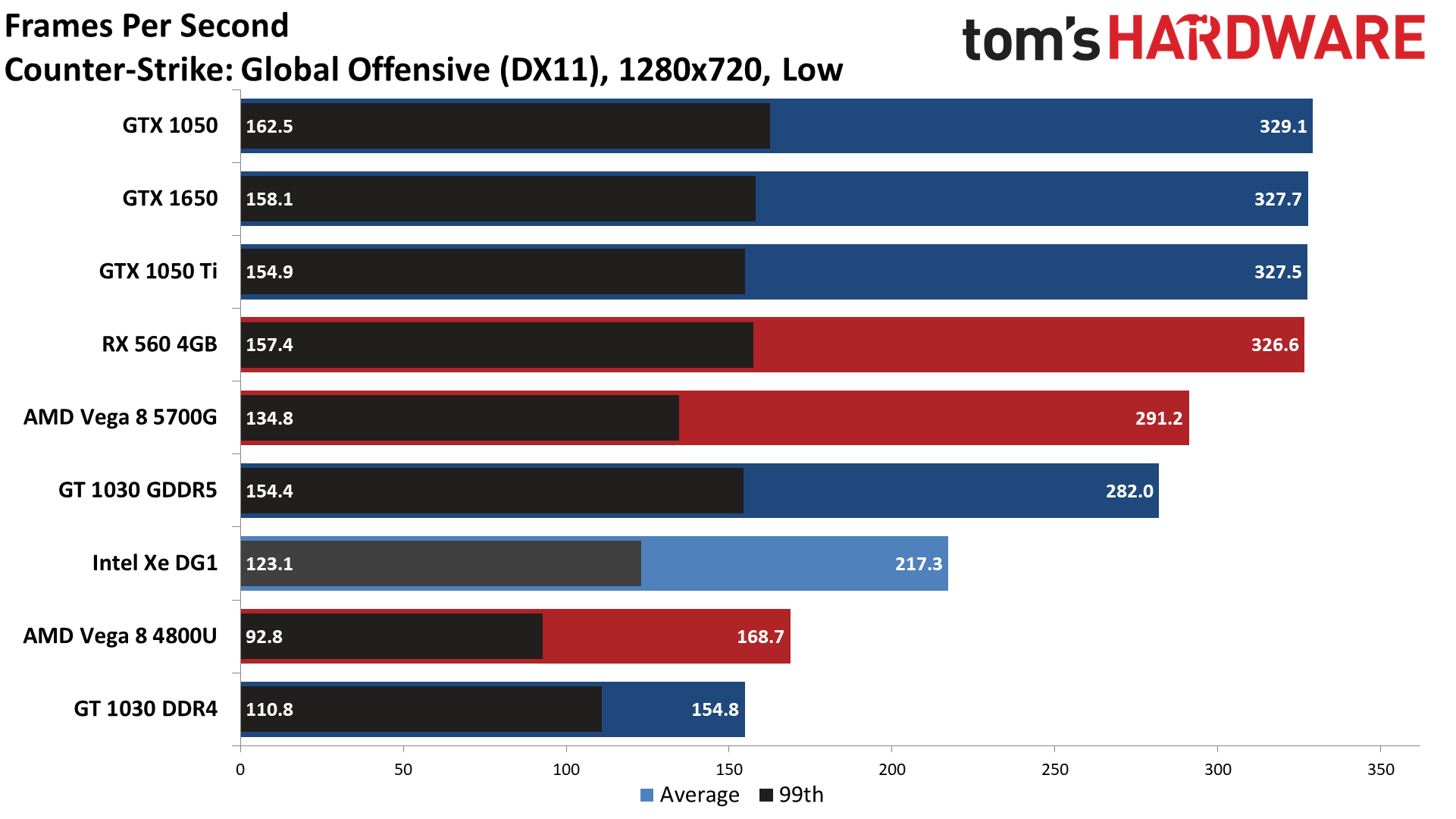

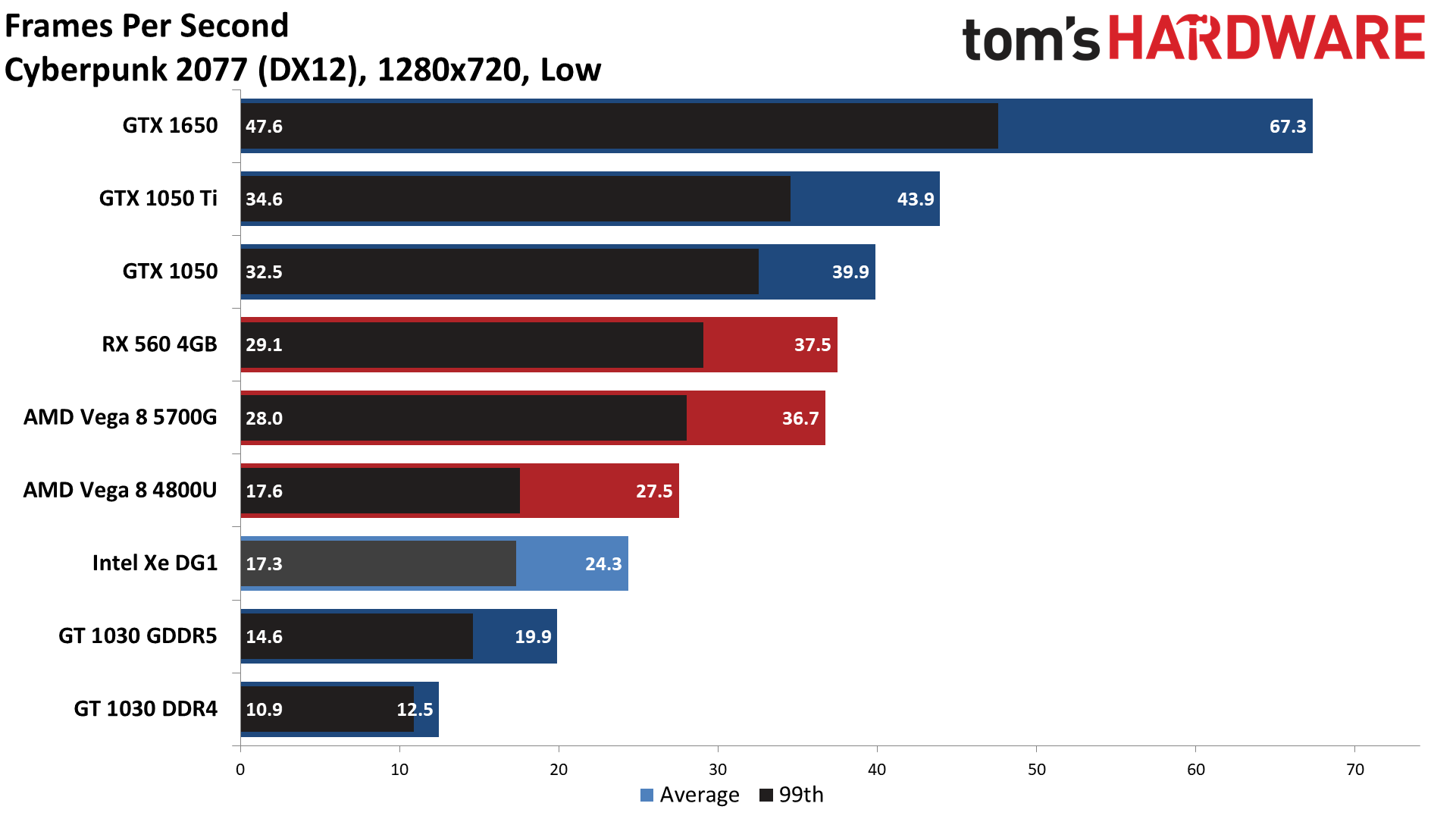

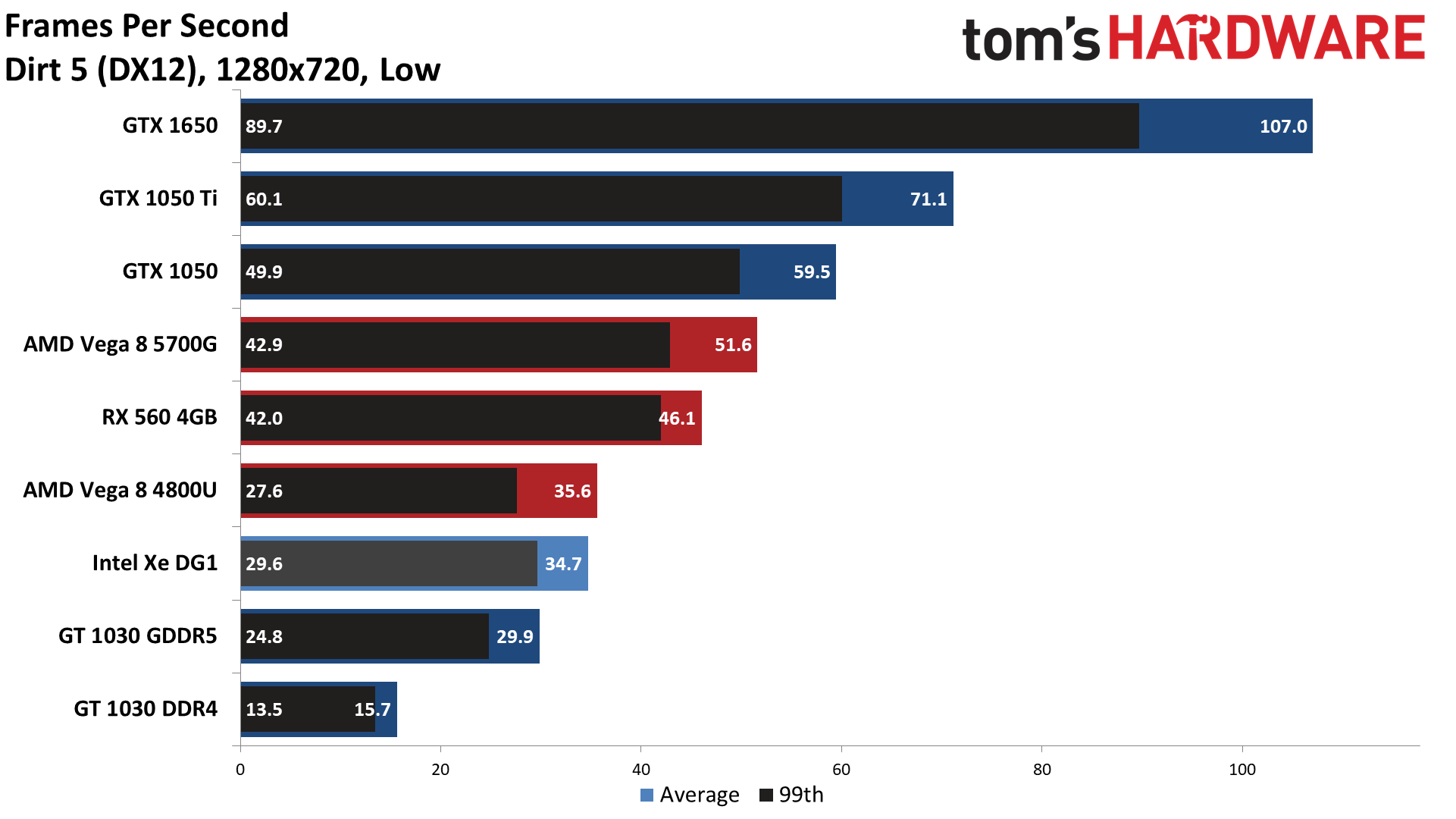

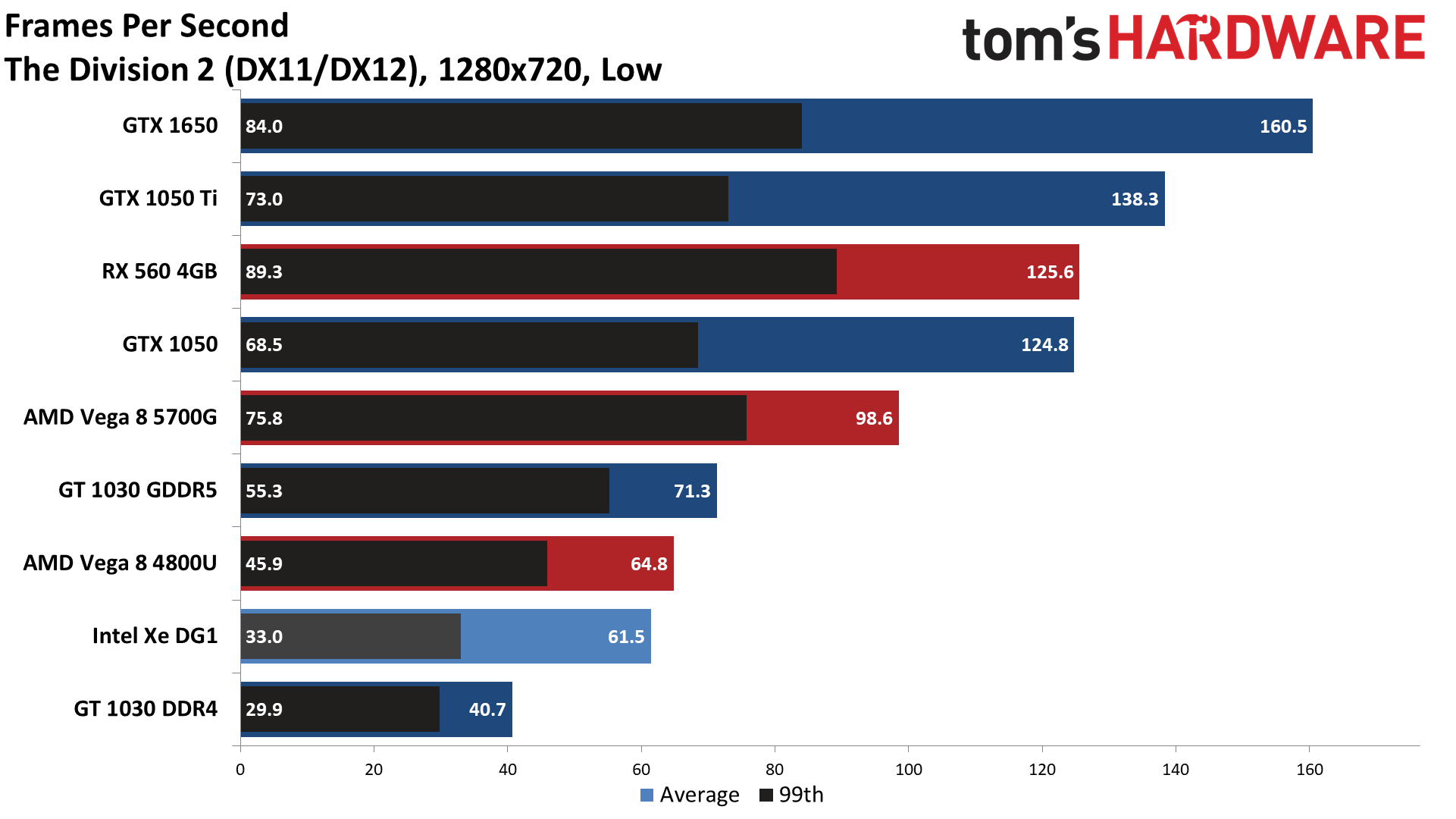

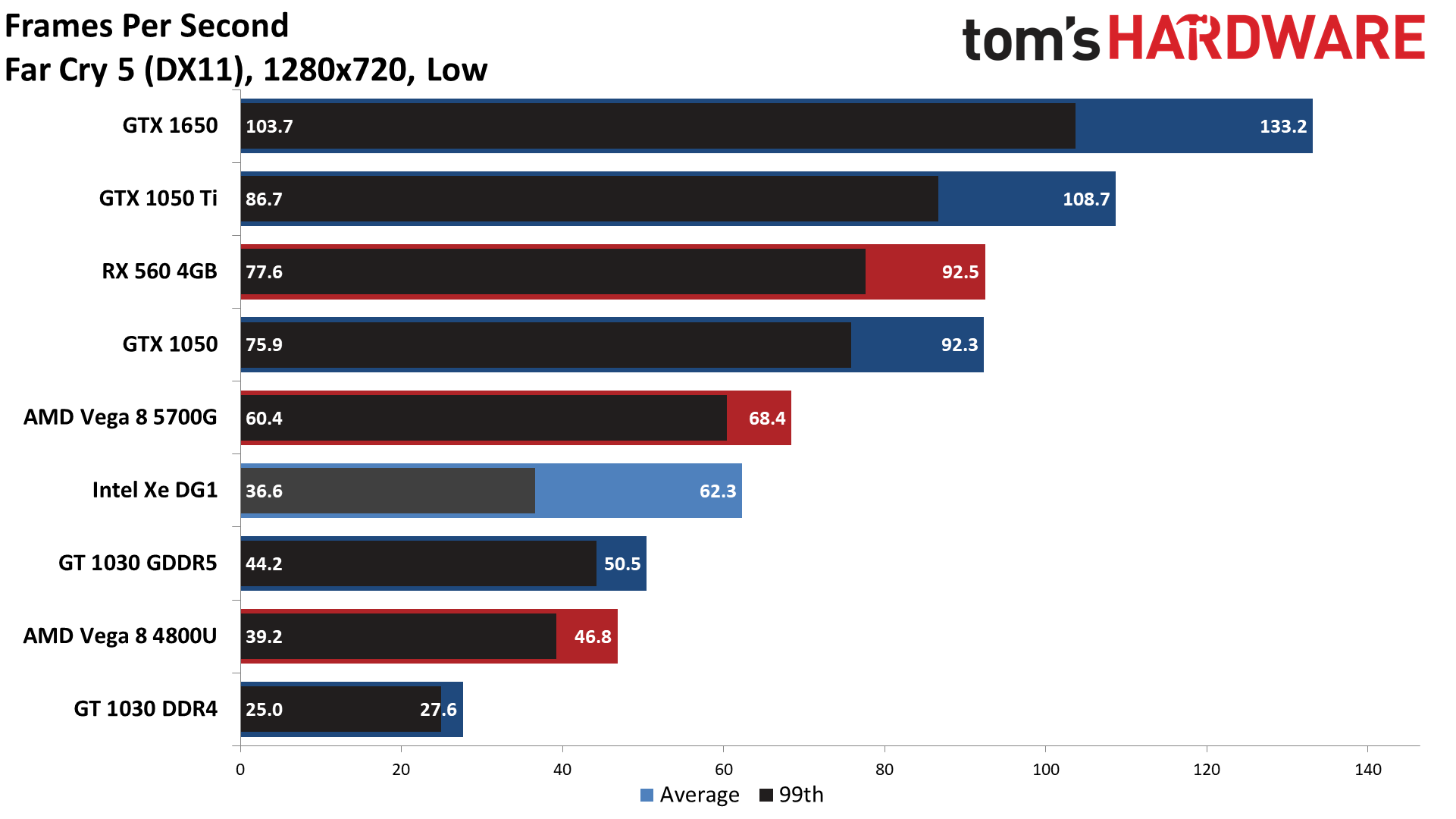

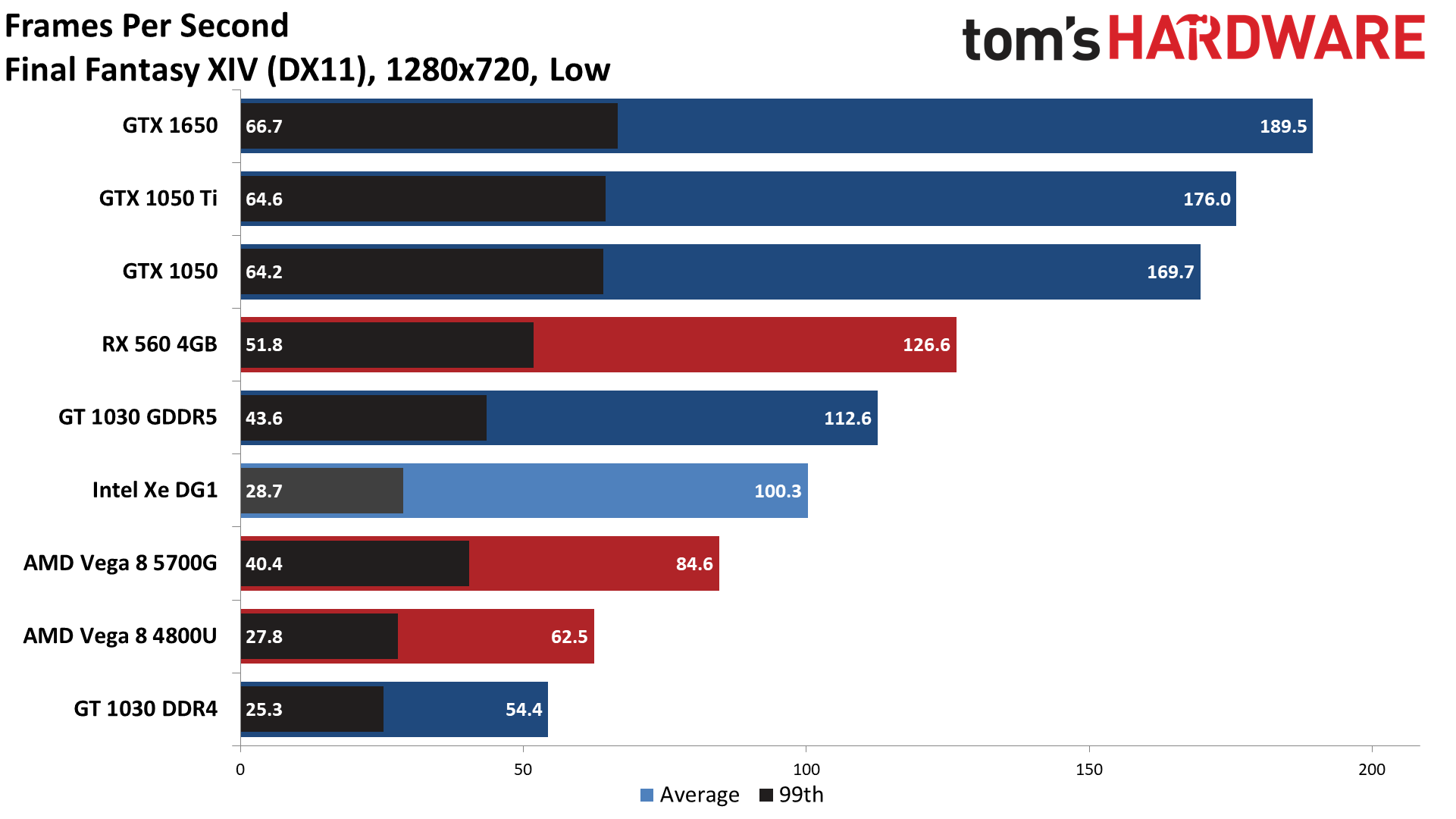

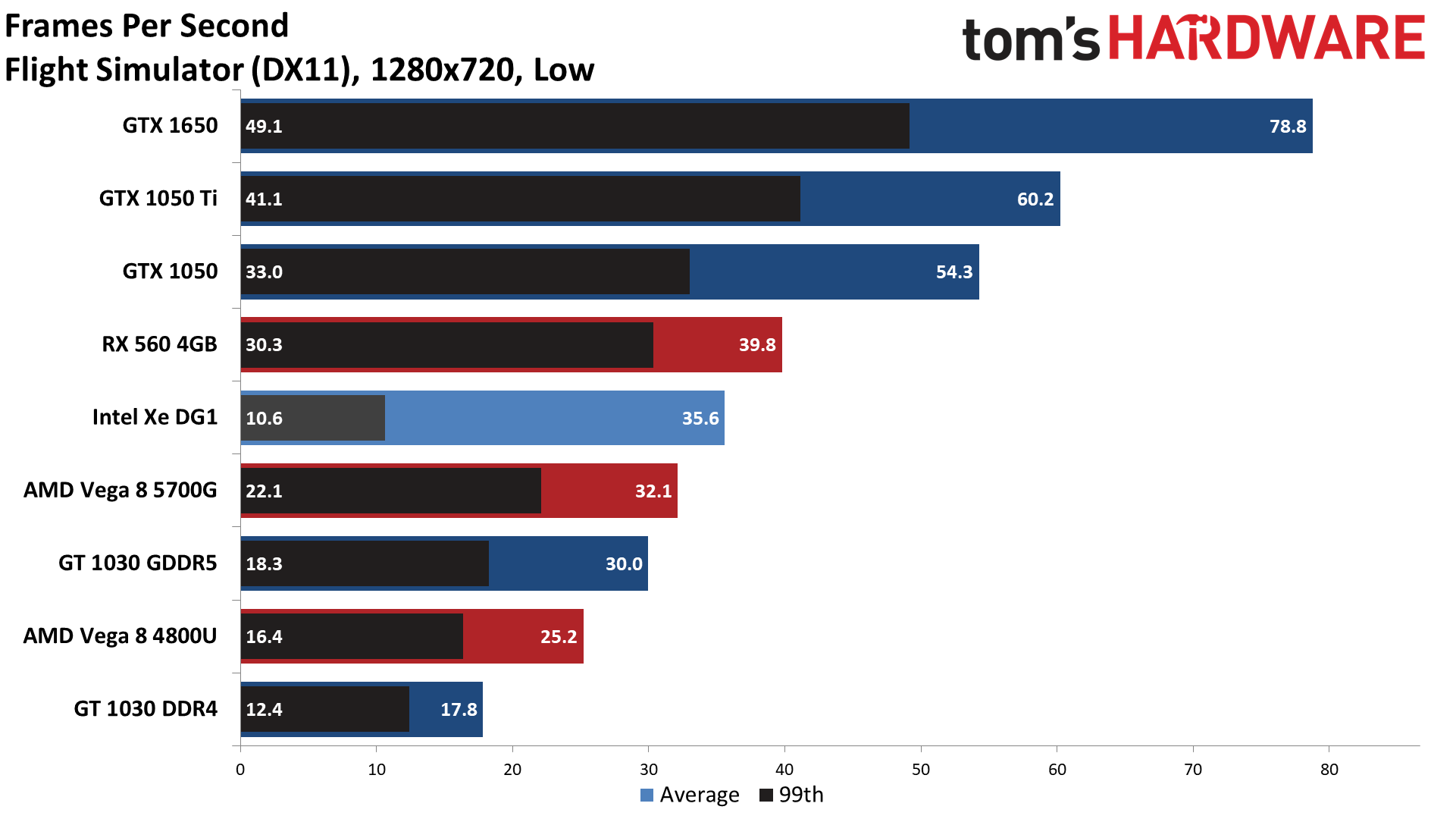

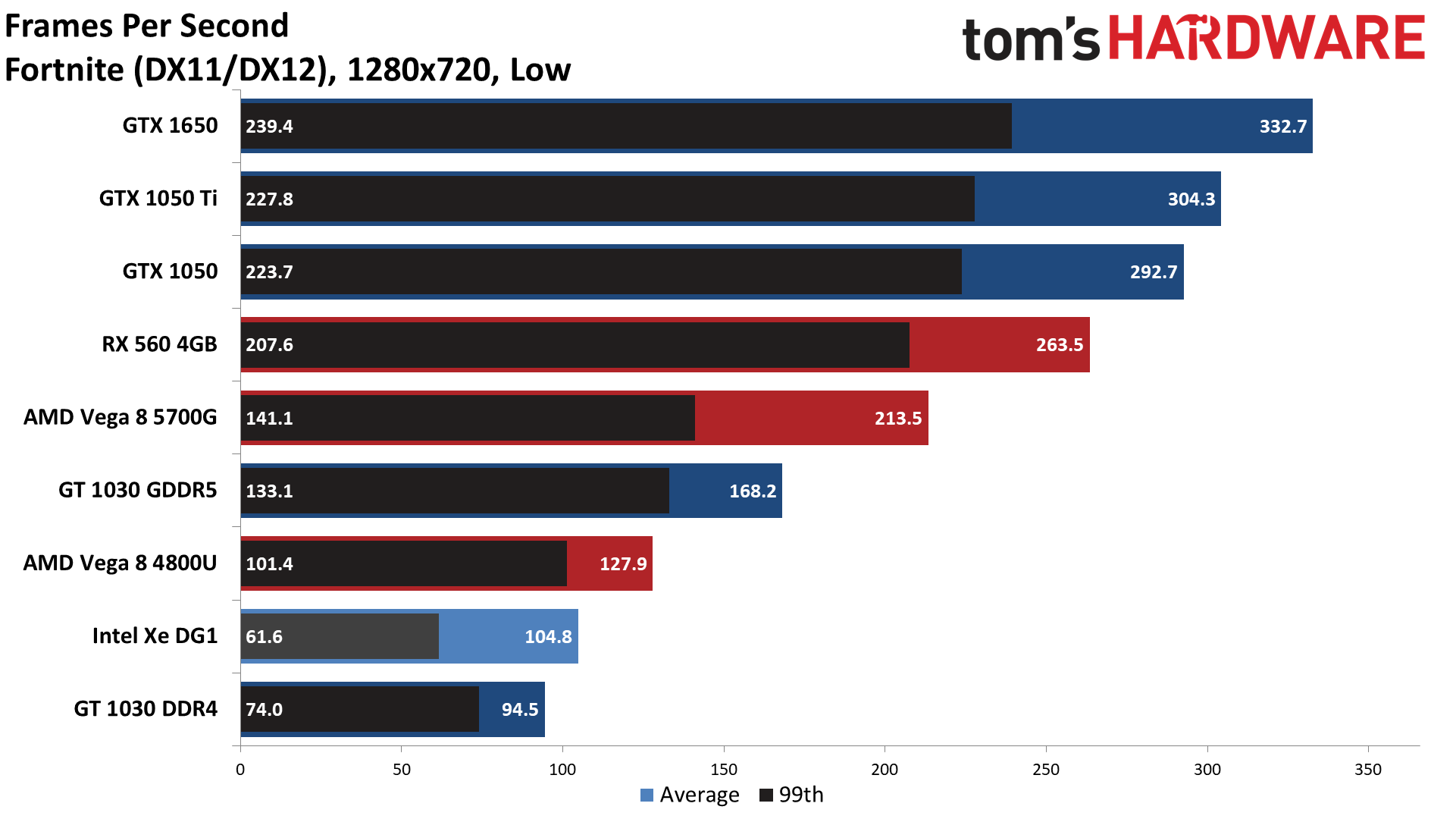

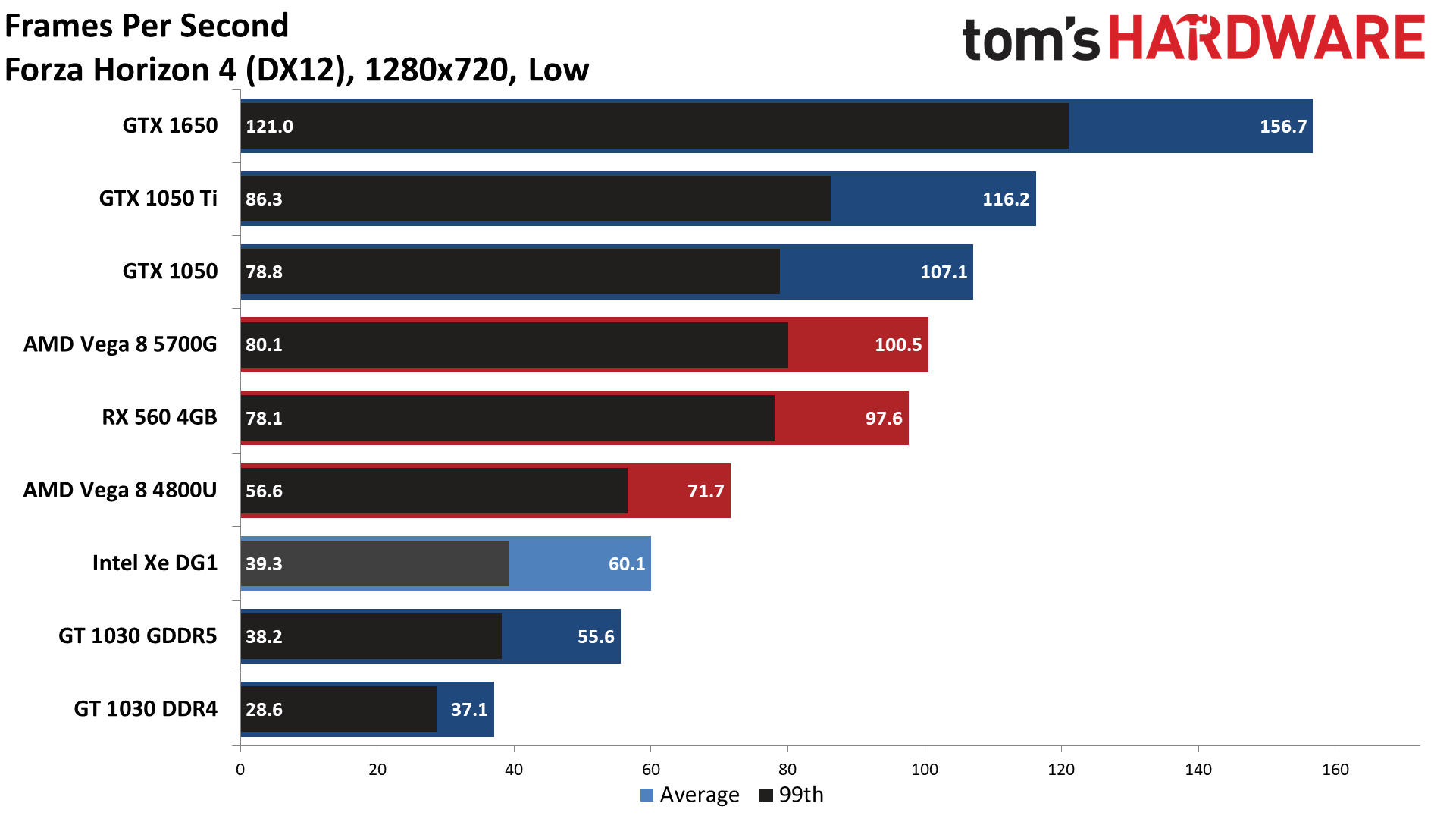

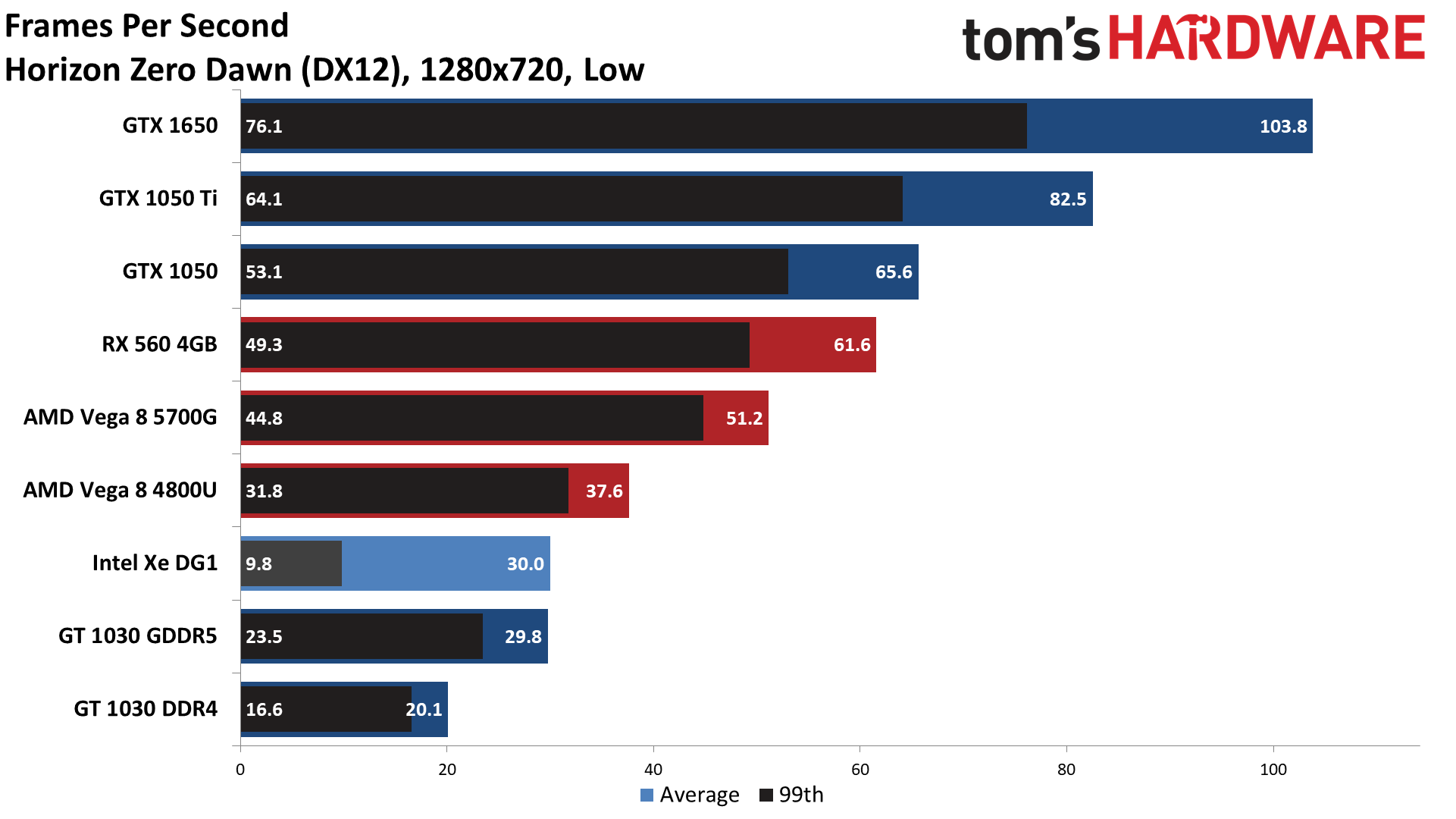

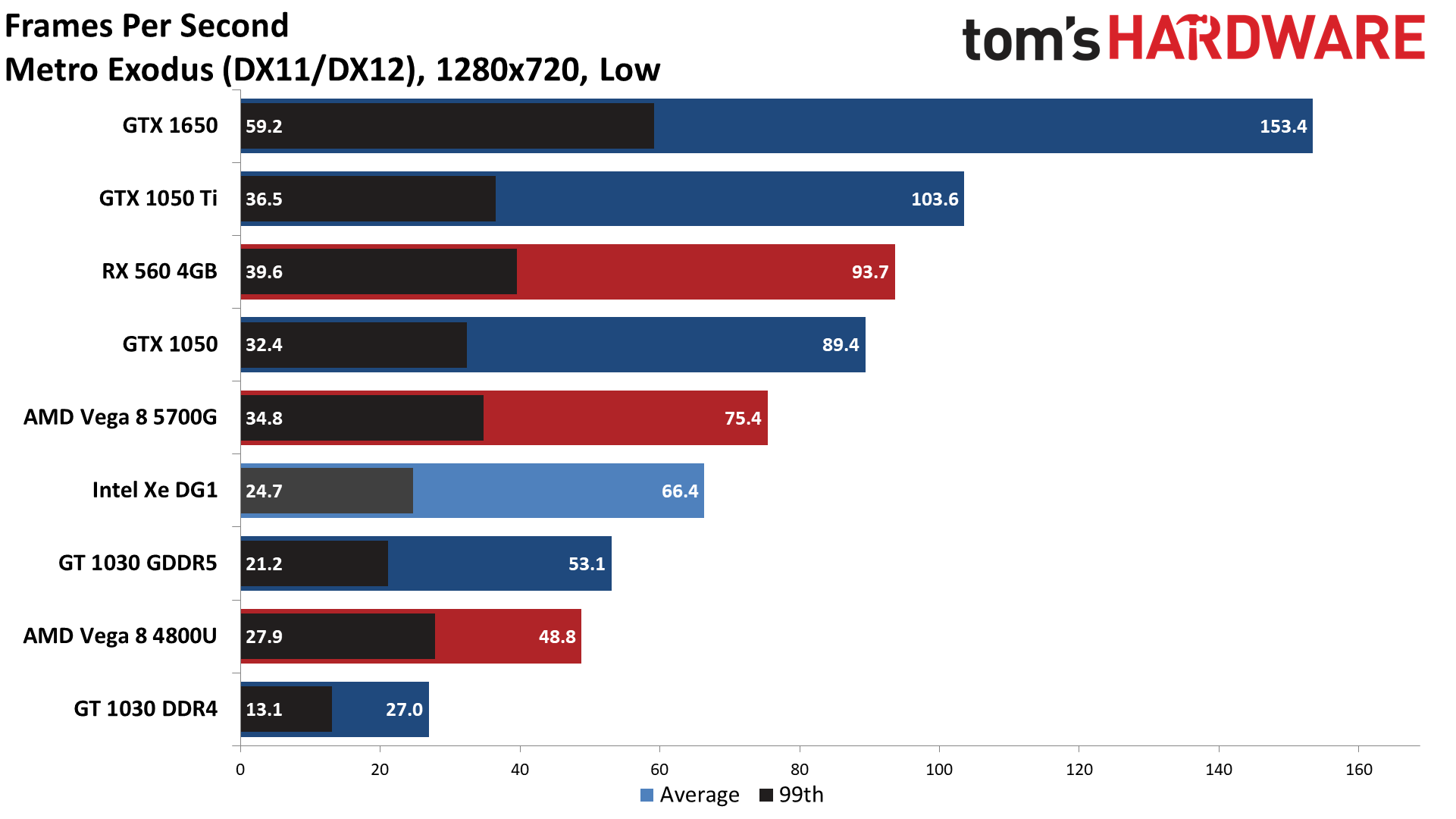

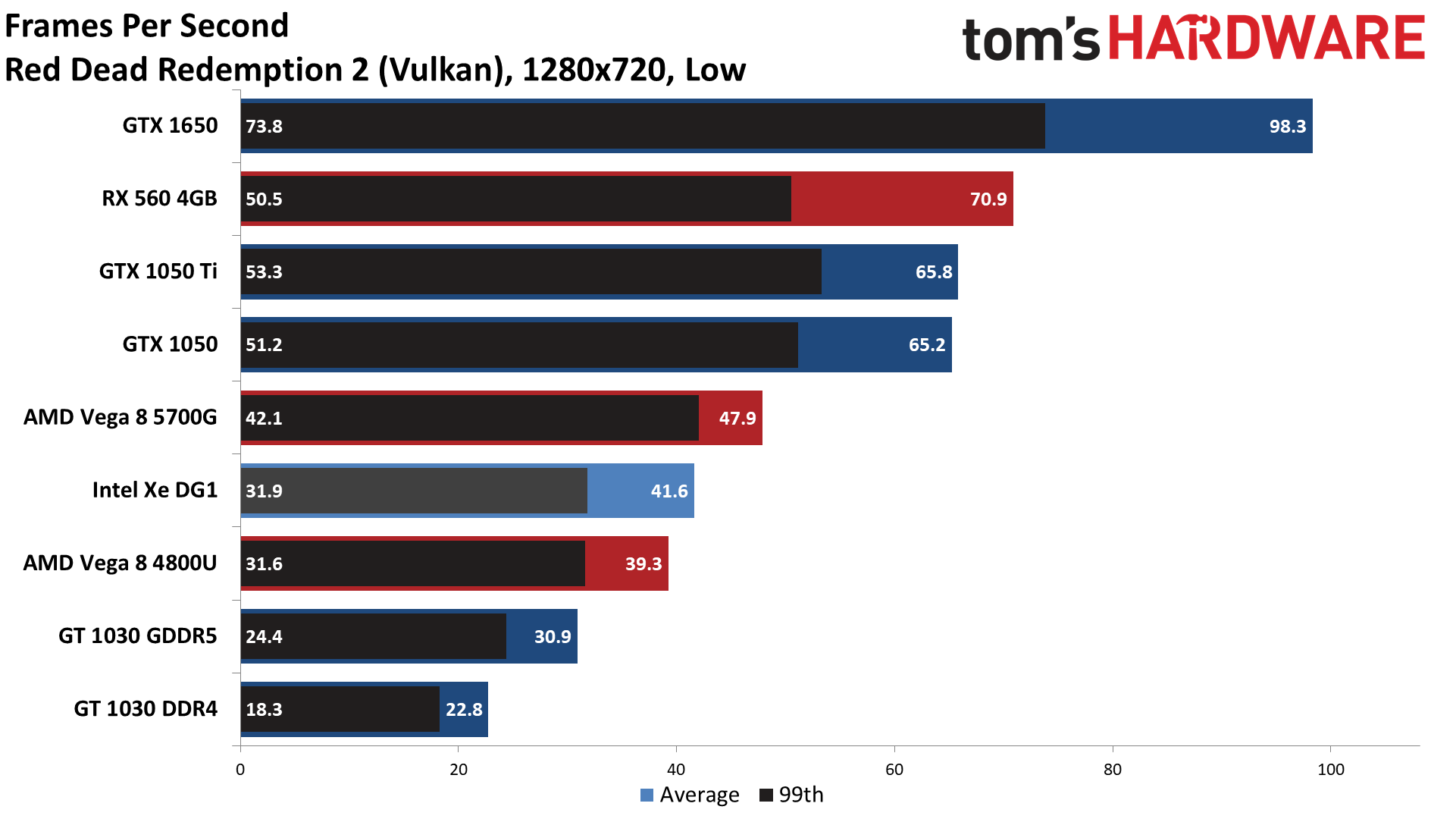

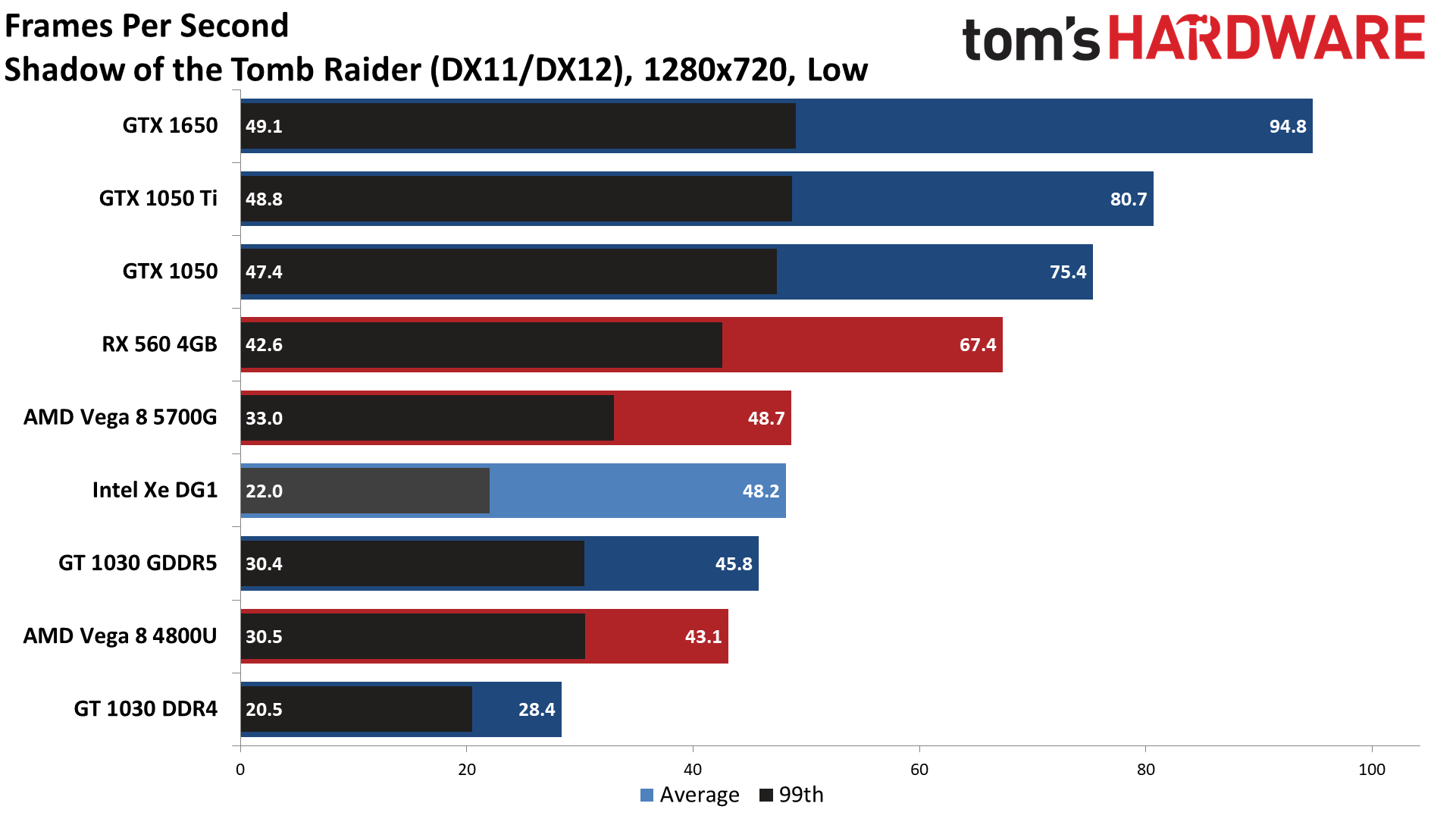

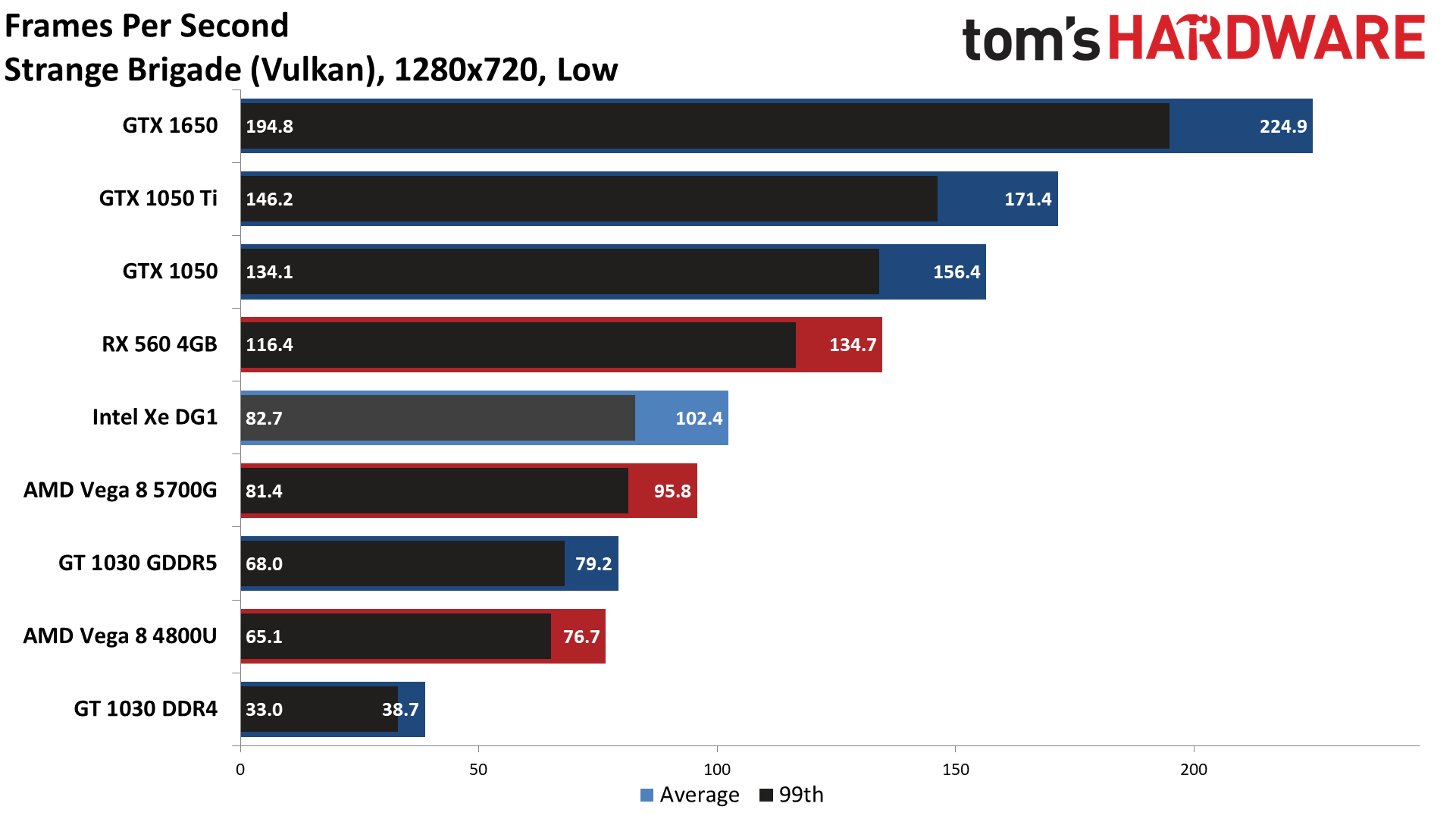

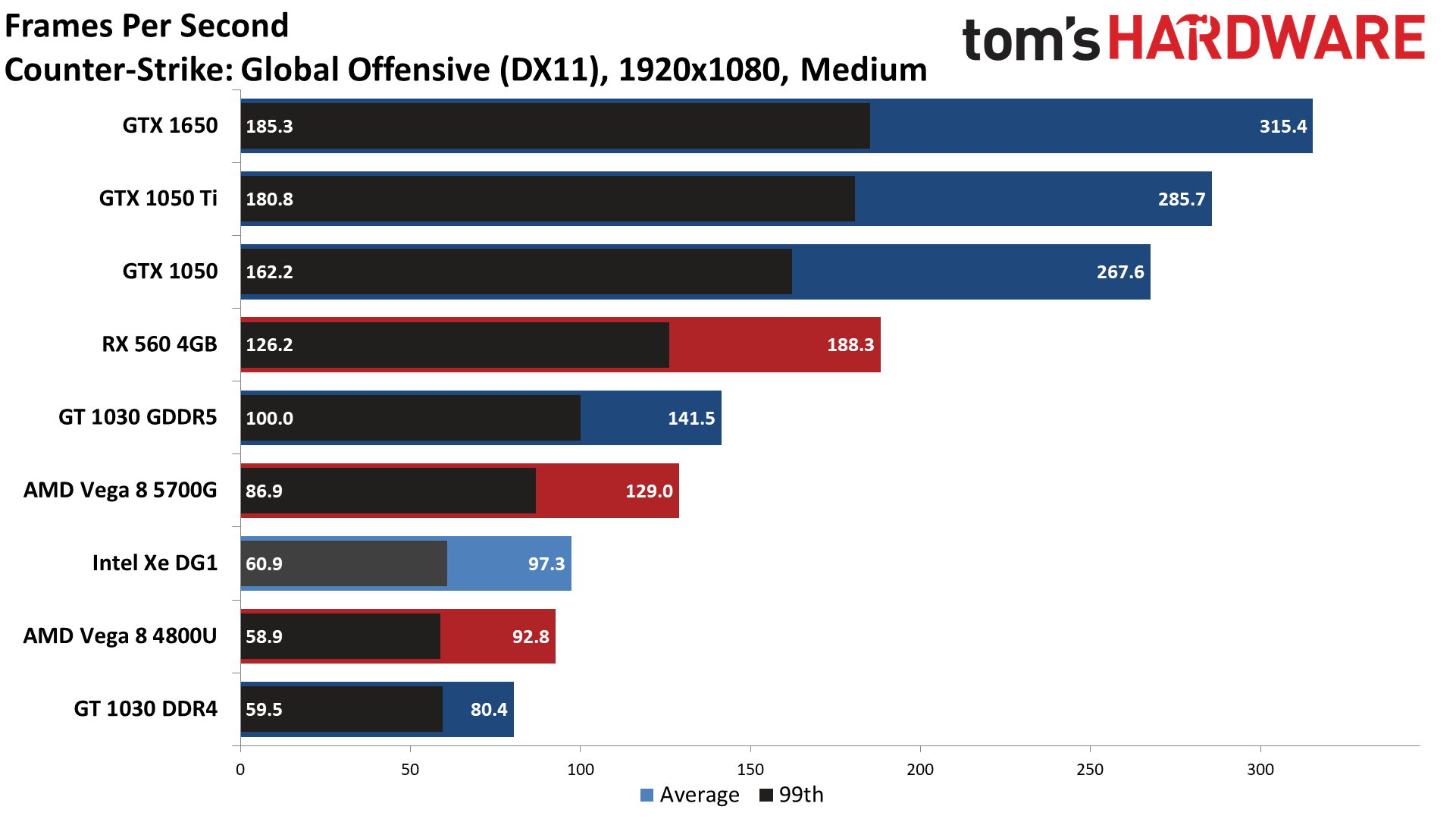

Here are the performance results, starting with the more relevant 720p low performance. The wheels tend to fall off at 1080p medium for several of the GPUs, but considering that's our minimum test setting for the standard GPU benchmarks hierarchy, we did want to include those as a point of reference.

Right off the bat, this is an inauspicious start for Intel's Xe DG1. Overall, it did average more than 60 fps across the test suite, but the 99th percentile fps clearly shows there's a lot of stuttering going on in some games. We'll see that in the individual results in a moment, but of the GPUs we tested, the DG1 only manages to surpass the GT 1030 DDR4 and the AMD Vega 8 running in a 4800U.
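
For readers wondering how an average above 60 fps can coexist with visible stuttering, here's a rough Python sketch of the two metrics. We don't detail our exact calculation in this article, so treat the nearest-rank percentile method below as an assumption, and note that the frame times are synthetic values made up purely for illustration, not measurements from any GPU.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 99th percentile fps) from frame times in milliseconds."""
    n = len(frame_times_ms)
    if n == 0:
        raise ValueError("need at least one frame time")
    # Average fps is total frames divided by total time.
    avg_fps = 1000 * n / sum(frame_times_ms)
    ordered = sorted(frame_times_ms)
    # Nearest-rank 99th percentile frame time (integer ceiling of 0.99 * n):
    # 99% of frames rendered at least this quickly.
    rank = (99 * n + 99) // 100
    p99_fps = 1000 / ordered[rank - 1]
    return avg_fps, p99_fps


# Synthetic example only: 95 smooth frames at 15 ms plus 5 stutters at 50 ms
sample = [15.0] * 95 + [50.0] * 5
avg, p99 = fps_summary(sample)
print(f"average: {avg:.1f} fps, 99th percentile: {p99:.1f} fps")
```

With that made-up sample, the average lands just under 60 fps while the 99th percentile result is only 20 fps, which is the kind of gap that shows up as stutter.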

The GT 1030 GDDR5 came in 8% faster in average fps and 24% faster in 'minimum' fps, and that's by no means a fast graphics card. You can see AMD's Vega 8 in the 5700G easily beats the 1030 GDDR5, and the other higher performance dedicated graphics cards are anywhere from 35% faster (RX 560) to 130% faster (GTX 1650). It's not just about pure performance, though. As noted above, compatibility with a large number of games is also critical for Intel.

For reference, of the AMD and Nvidia GPUs, the only ones that had any real compatibility issues were the 2GB cards. Red Dead Redemption 2 won't even let you try to run 1080p low, never mind 1080p medium, on a card with 2GB VRAM. You can try to hack the configuration files, but we experienced rendering errors and other problems, so we just left that alone. Assassin's Creed Valhalla and Watch Dogs Legion also seem to need more than 2GB, though the GTX 1050 does okay, so I'm not quite sure why the GT 1030 minimum fps tanks so hard.

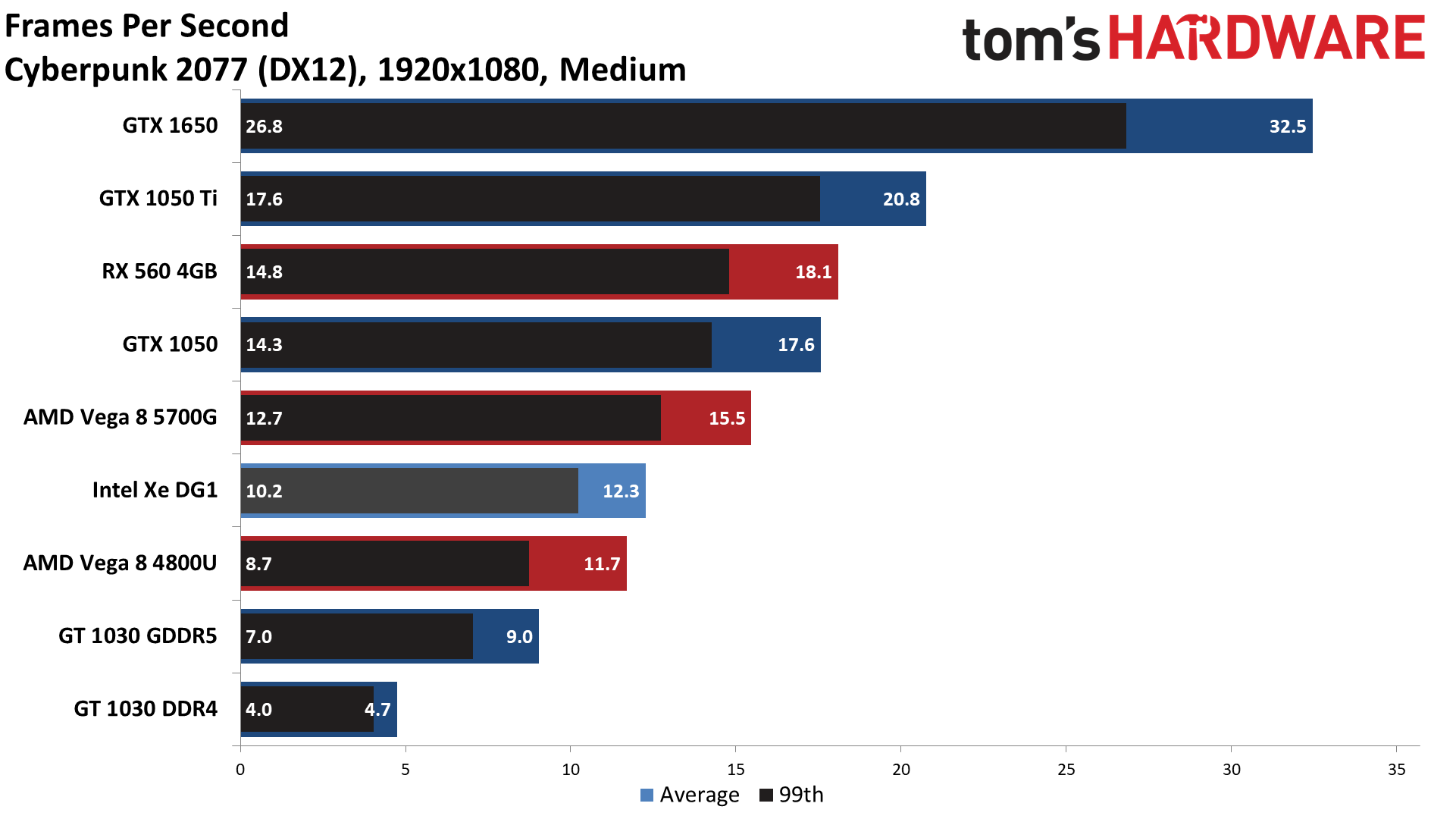

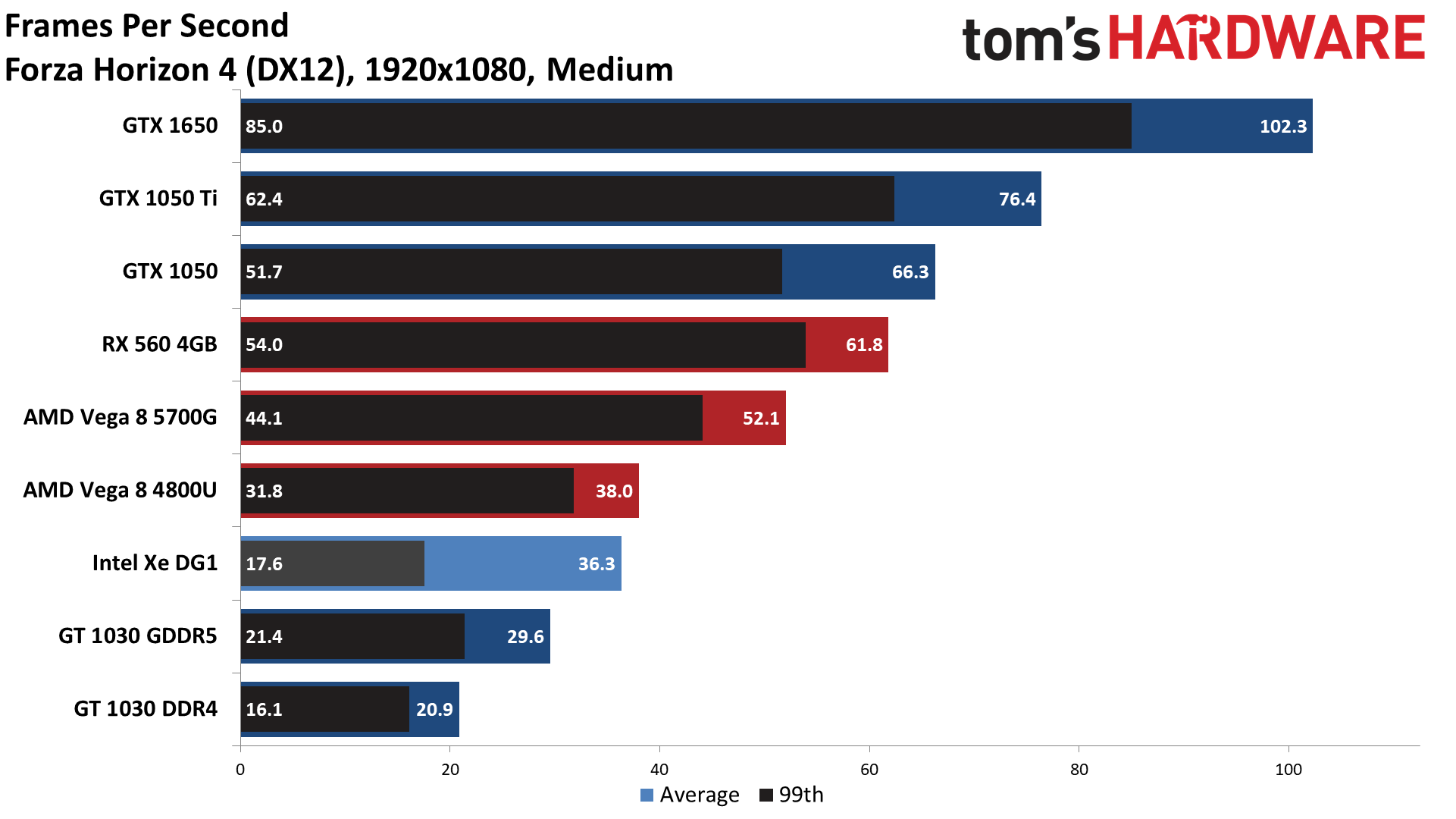

I should note that DirectX 12 (DX12) tends to also really dislike 2GB cards, and sometimes even 4GB cards. We checked performance with DX11 and DX12 where possible, and for the Nvidia cards and DG1, DX11 was universally faster, and in some cases it was required to even get the game to run on the DG1. For the AMD GPUs we tested, DX12 was a touch faster in a few cases, but in general DX11 was also preferable. The problem with not working well under DX12 is that several of the games we included in these tests (Assassin's Creed Valhalla, Cyberpunk 2077, Forza Horizon 4, and Horizon Zero Dawn) only support DX12. Let's hit the individual gaming charts before we continue.

Depending on the game, the Xe DG1 ranks as high as fifth on the charts (behind the 1650, 1050 Ti, 1050, and 560 4GB cards) and as low as eighth. The only card it beats without question is the GT 1030 DDR4, a travesty of a GPU that really never should have been released. Not to beat a dead horse, but the GT 1030 GDDR5 launched at $70 in May 2017 and the GT 1030 DDR4 came out almost a year later in March 2018 with a price of $79. Of course, cryptocurrency induced GPU shortages were also in play in late 2017, which likely explains why the DDR4 model even exists.

Against the 15W Vega 8, the Xe DG1 results vary quite a bit. Intel gets a big win in fps over the 4800U in CSGO, but that's likely because the CPU and GPU share 15W so the 4800U CPU can't clock as high. Far Cry 5, FFXIV, Flight Simulator, Metro Exodus, Red Dead Redemption 2, Shadow of the Tomb Raider, and Strange Brigade are also poor showings for the 4800U Vega 8, and the DG1 outpaces even the 5700G in FFXIV, Flight Simulator, and Strange Brigade.

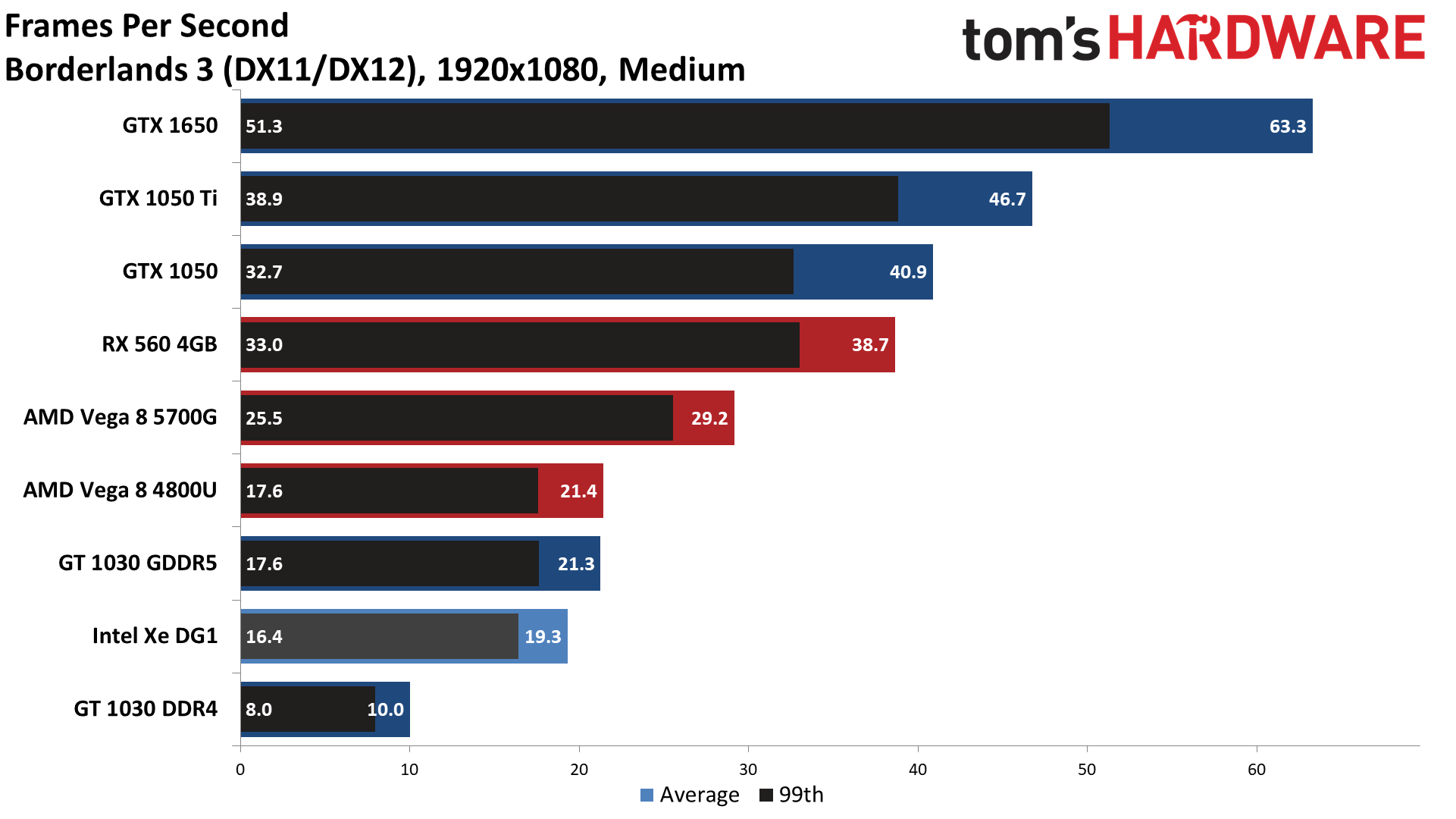

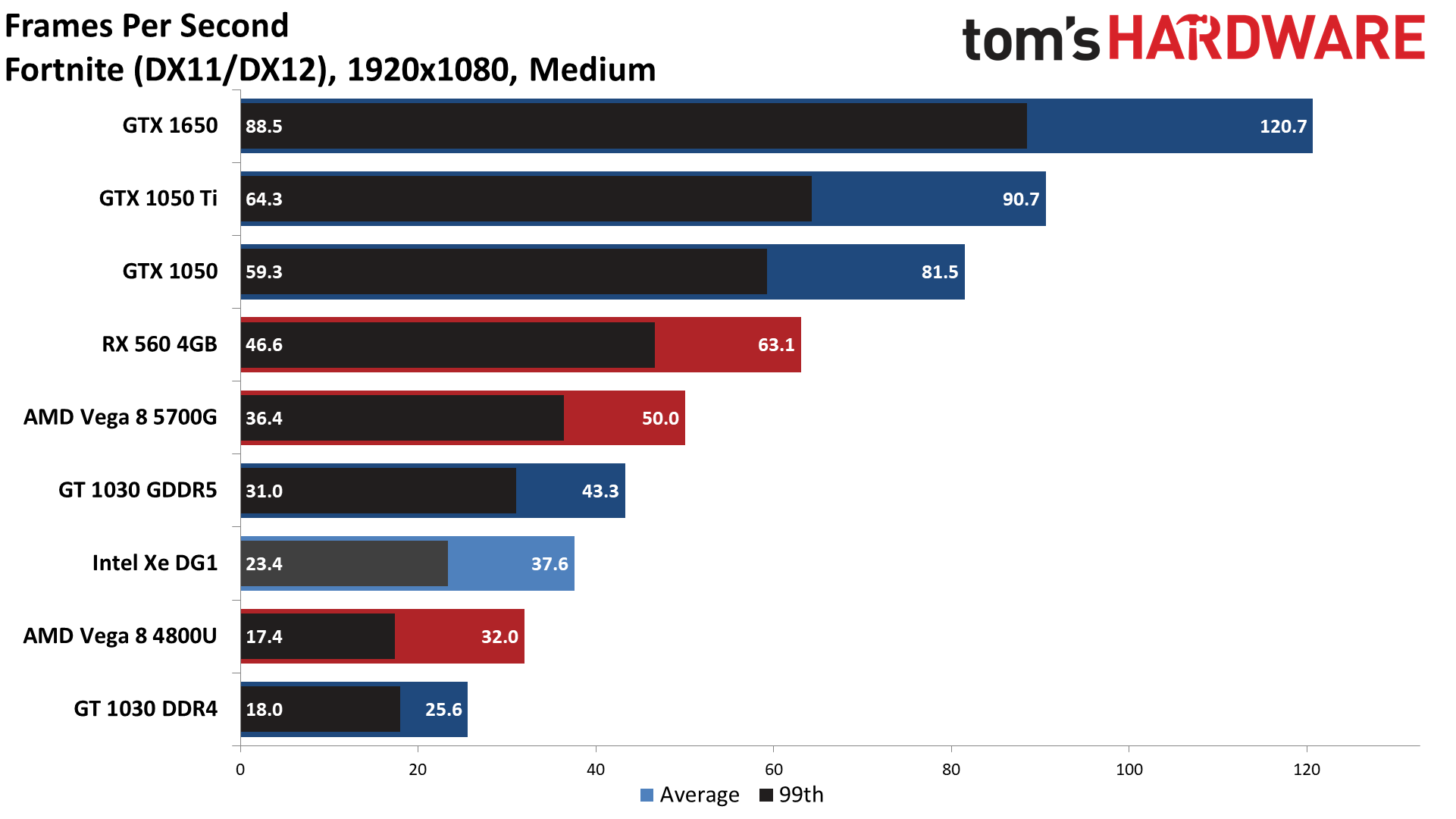

Nvidia's GT 1030 GDDR5 results are generally more consistent, since it's in the same test system and doesn't need to share memory bandwidth. The DG1 took the lead in Assassin's Creed Valhalla, Cyberpunk 2077, Dirt 5, Far Cry 5, Flight Simulator, Forza Horizon 4, Metro Exodus, Red Dead Redemption 2, Shadow of the Tomb Raider, and Strange Brigade. That's more than half of the games we tested, and yet overall the GT 1030 GDDR5 came out ahead. That's because many of the DG1's wins are by a narrow margin, while when the GT 1030 GDDR5 comes out ahead it's often by a wide margin. The GT 1030 GDDR5 was over 50% faster in Borderlands 3 and Fortnite. Generally speaking, though, we'd give the DG1 a slight edge in desirability over the GT 1030 GDDR5, mostly because drivers can be fixed, but there's no way around the 2GB hardware bottleneck.

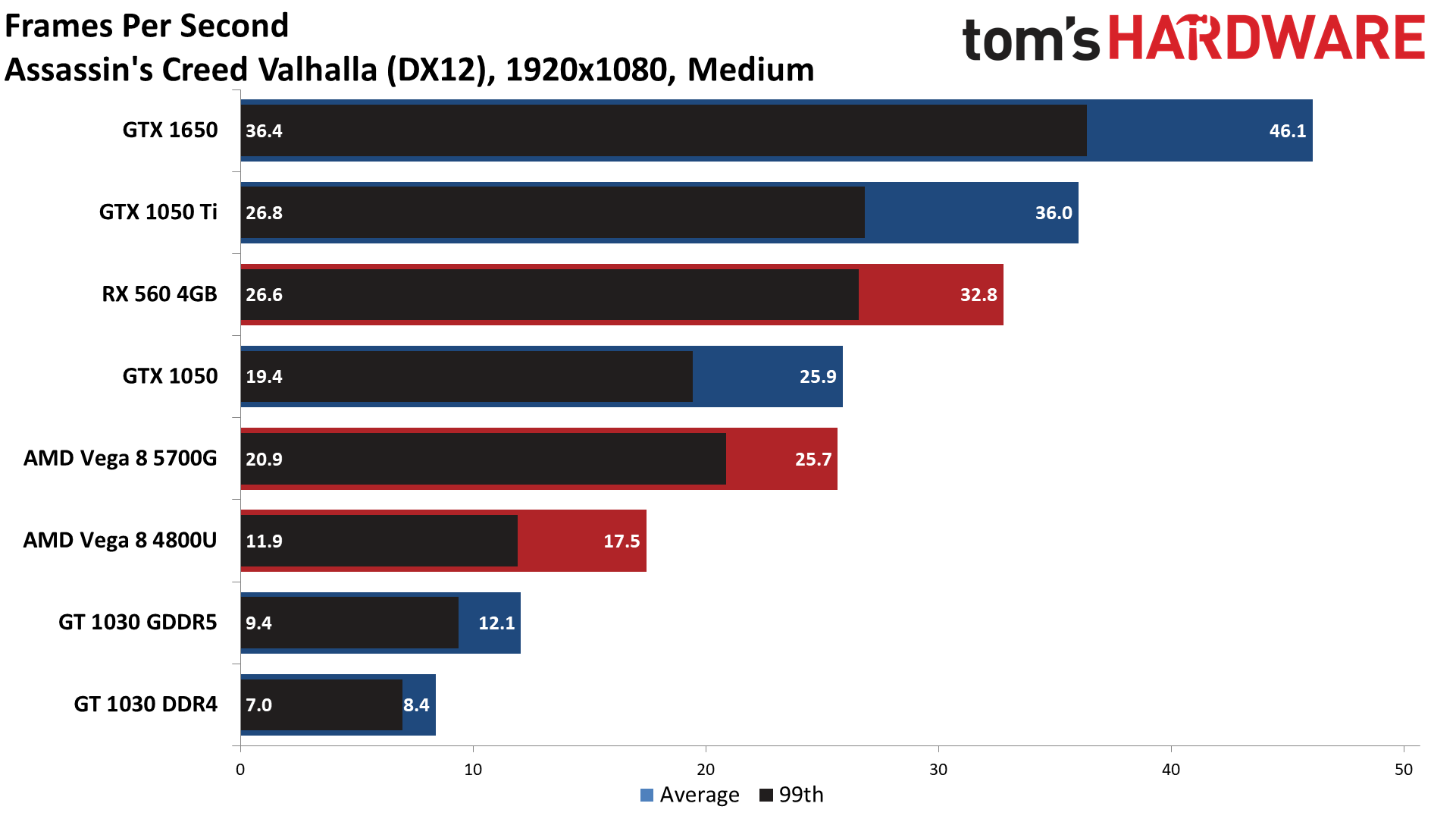

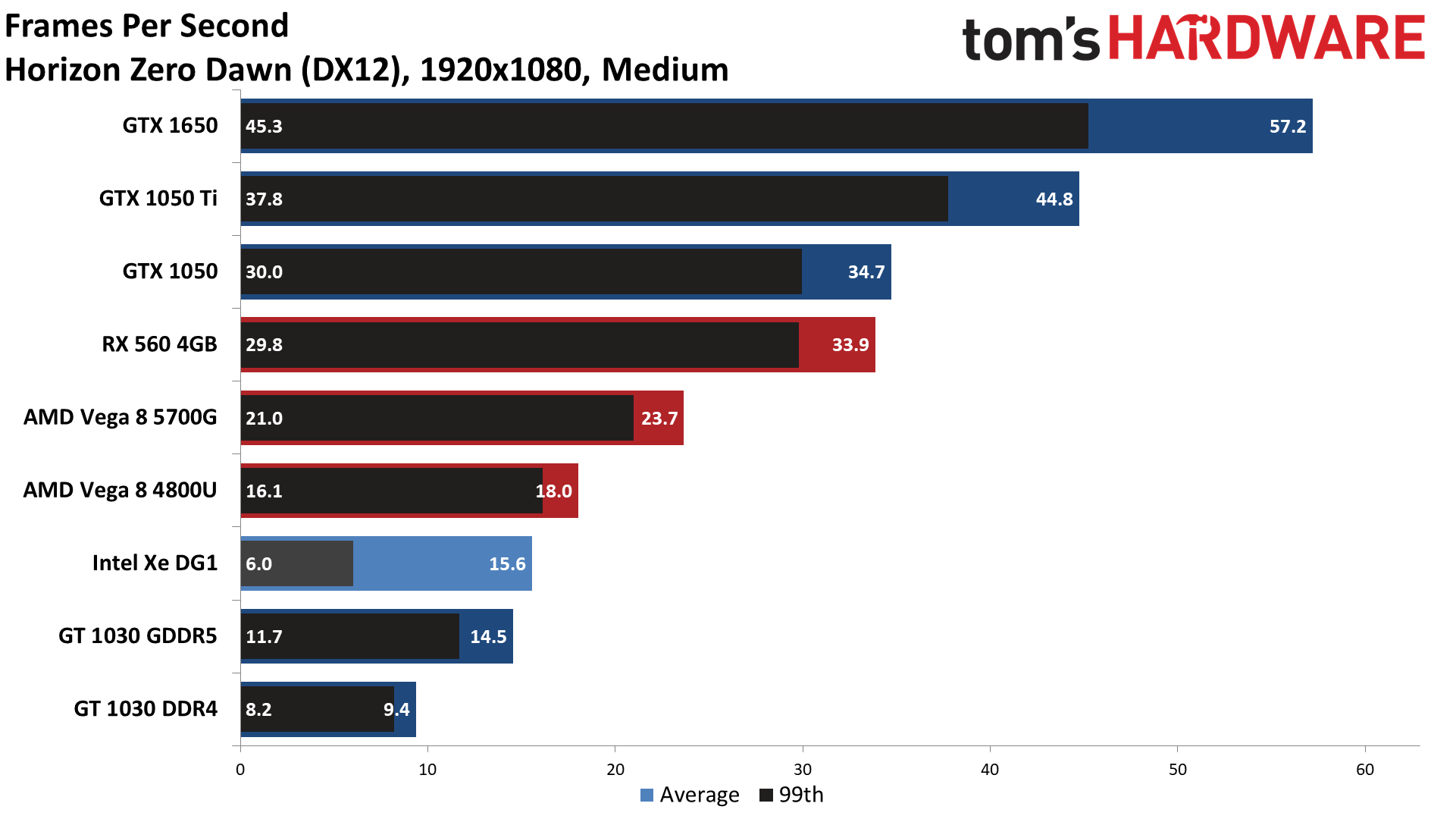

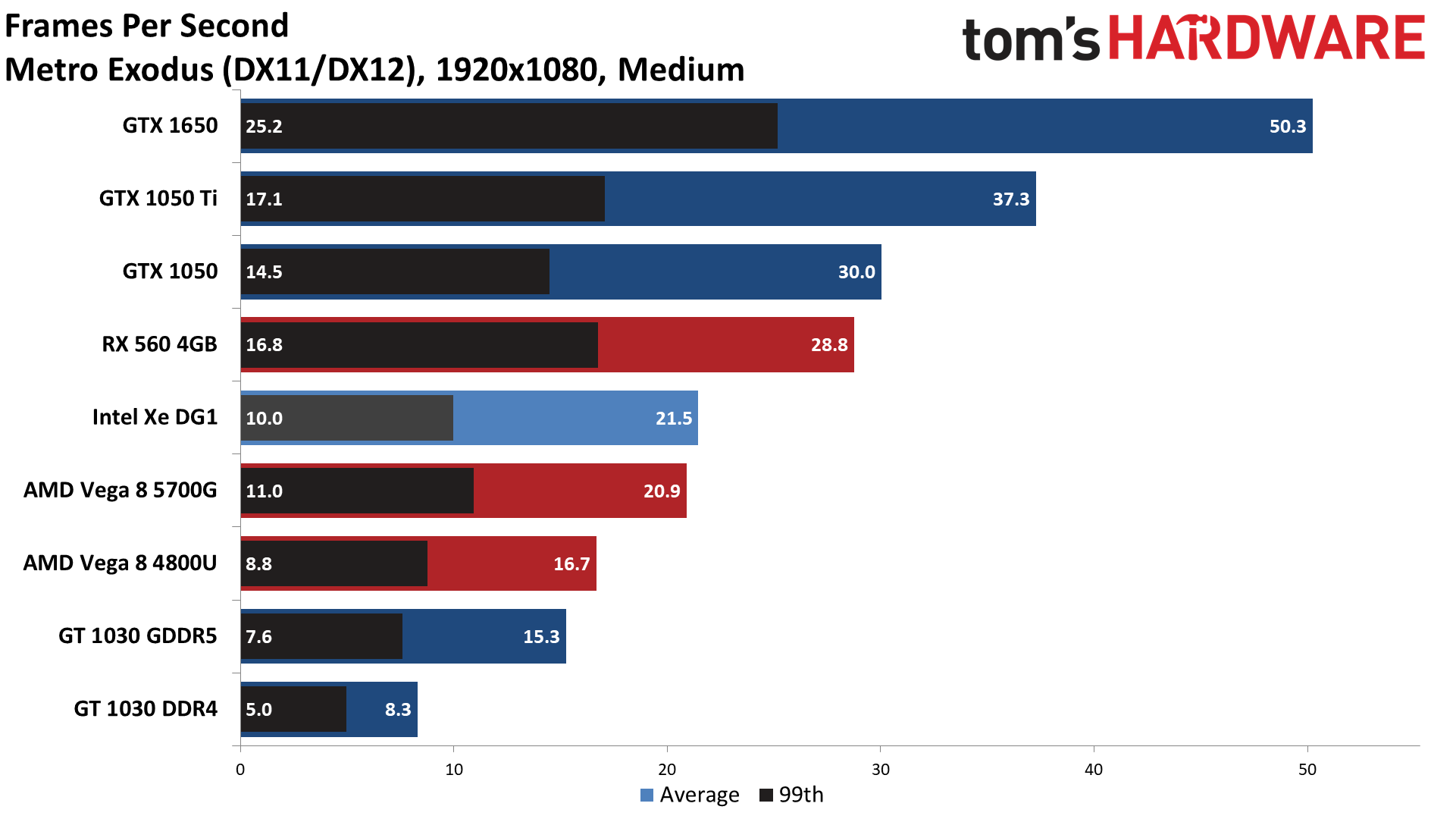

Which is not to say the DG1 had a strong showing at all. Besides generally weak performance relative to even budget GPUs, we did encounter some bugs and rendering issues in a few games. Assassin's Creed Valhalla would frequently fade to white and show blockiness and pixelization. Here's a video of the 720p Valhalla benchmark, and the game failed to run entirely at 1080p medium on the DG1. Another major problem we encountered was with Horizon Zero Dawn, where fullscreen rendering failed but the game ran okay in windowed mode (borderless window mode also failed). Not surprisingly, both of those games are DX12-only, and we encountered other DX12 issues. Fortnite would automatically revert to DX11 mode when we tried to switch, and Metro Exodus and Shadow of the Tomb Raider both crashed when we tried to run them in DX12 mode. Dirt 5 also gave low VRAM warnings, even at 720p low, but otherwise ran okay.

DirectX 12 on Intel GPUs has another problem potential users should be aware of: sometimes excessively long shader compilation times. The first time you run a DX12 game on a specific GPU, and each time after swapping GPUs, many games will precompile the DX12 shaders for that specific GPU. Horizon Zero Dawn as an example takes about three to five minutes to compile the shaders on most graphics cards, but thankfully that's only on the first run. (You can skip it, but then load times will just get longer as the shaders need to be compiled then rather than up front.) The DG1 for whatever reason takes a virtual eternity to compile shaders on some games — close to 30 minutes for Horizon Zero Dawn. Hopefully that's just another driver bug that needs to be squashed, but we've seen this behavior with other Intel Graphics solutions in the past, and HZD likely isn't the sole culprit.

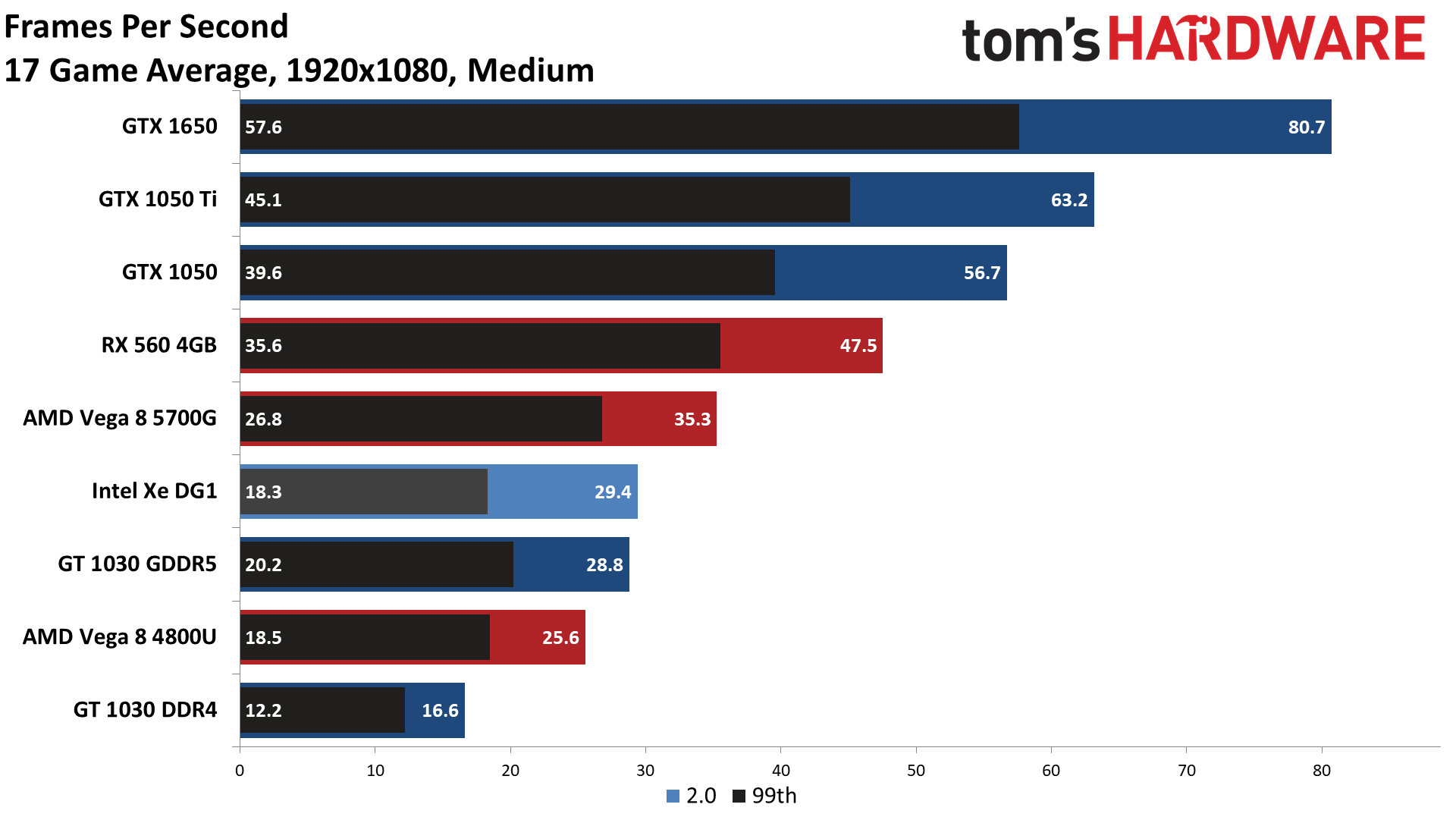

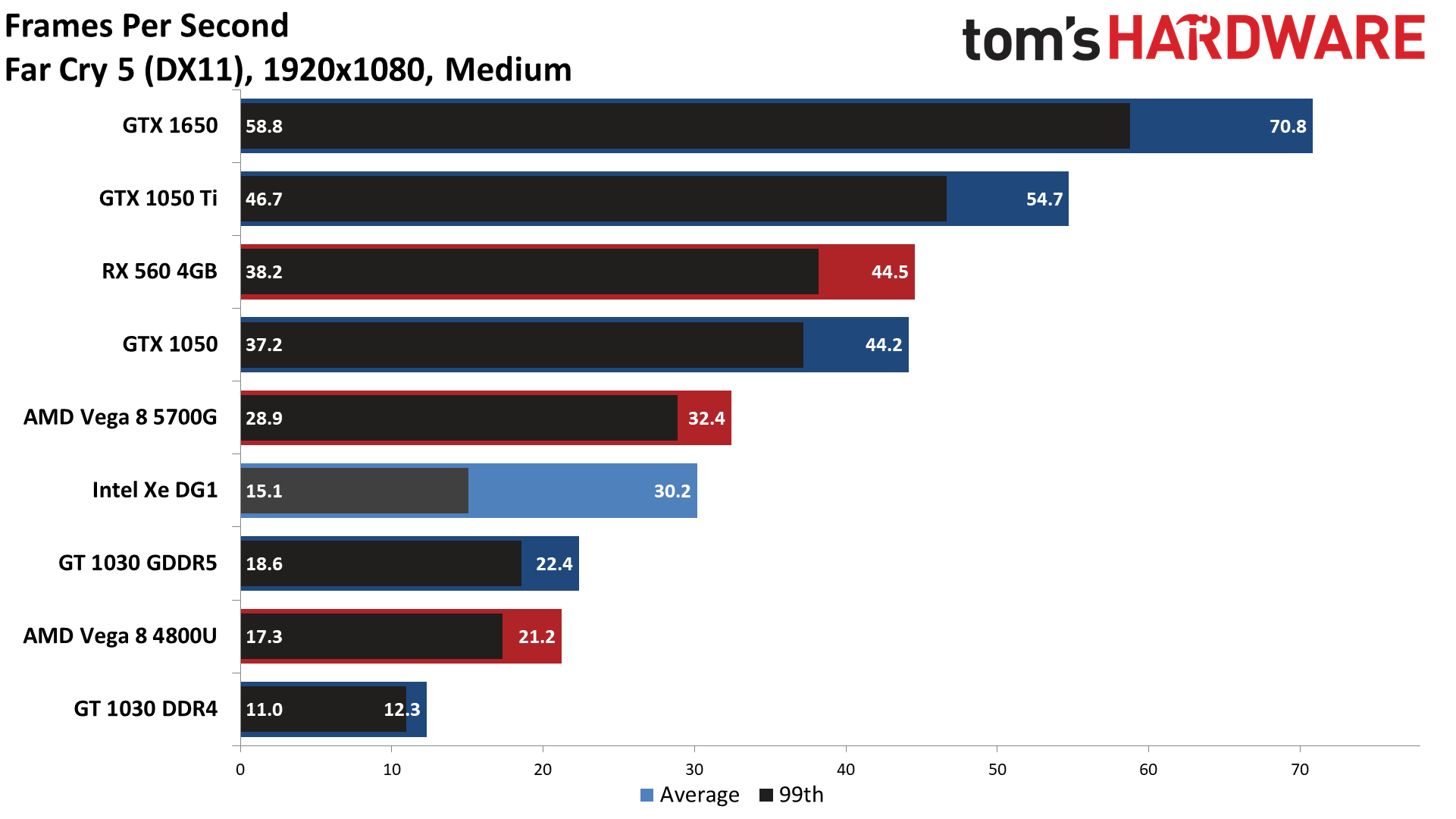

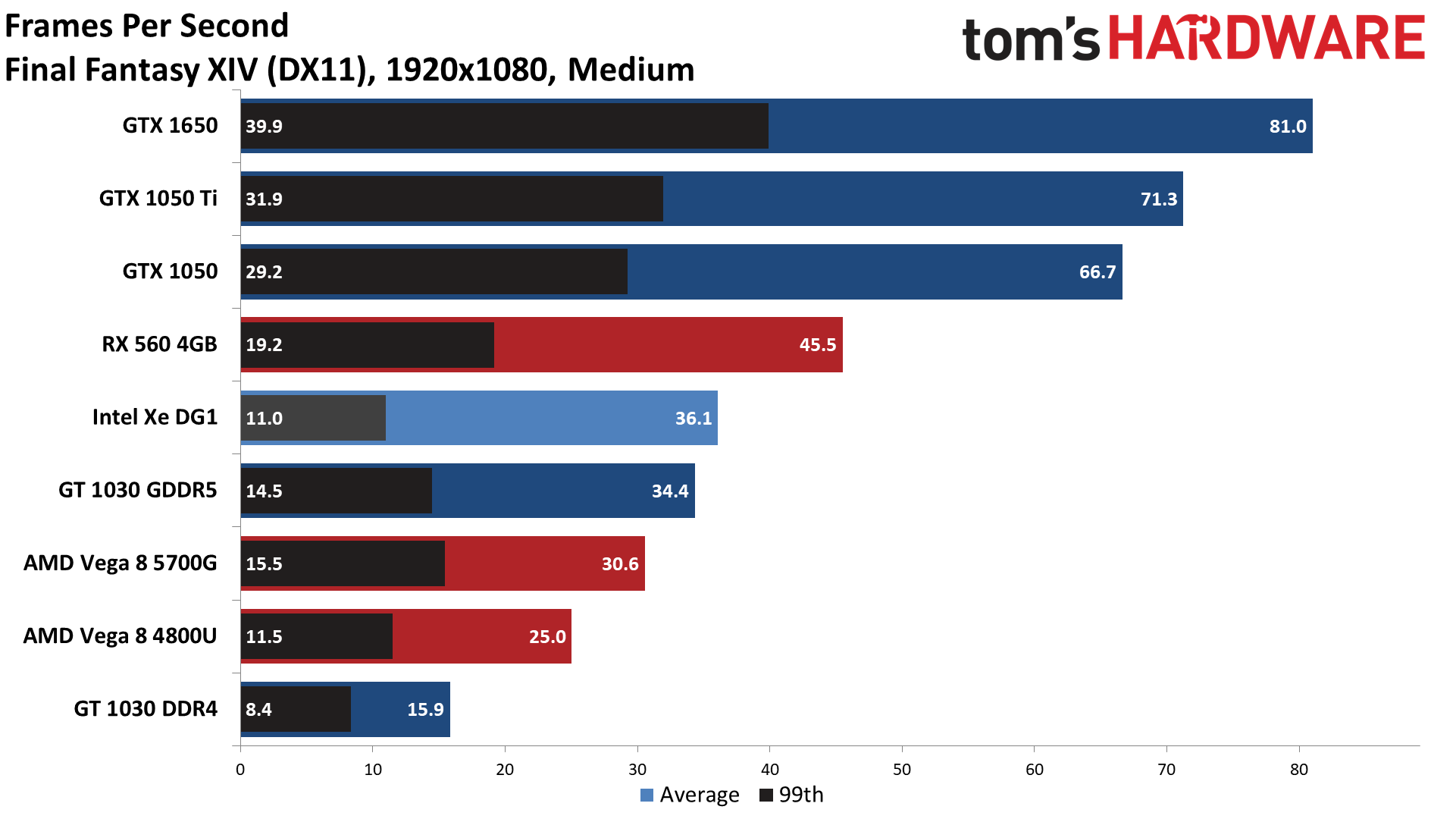

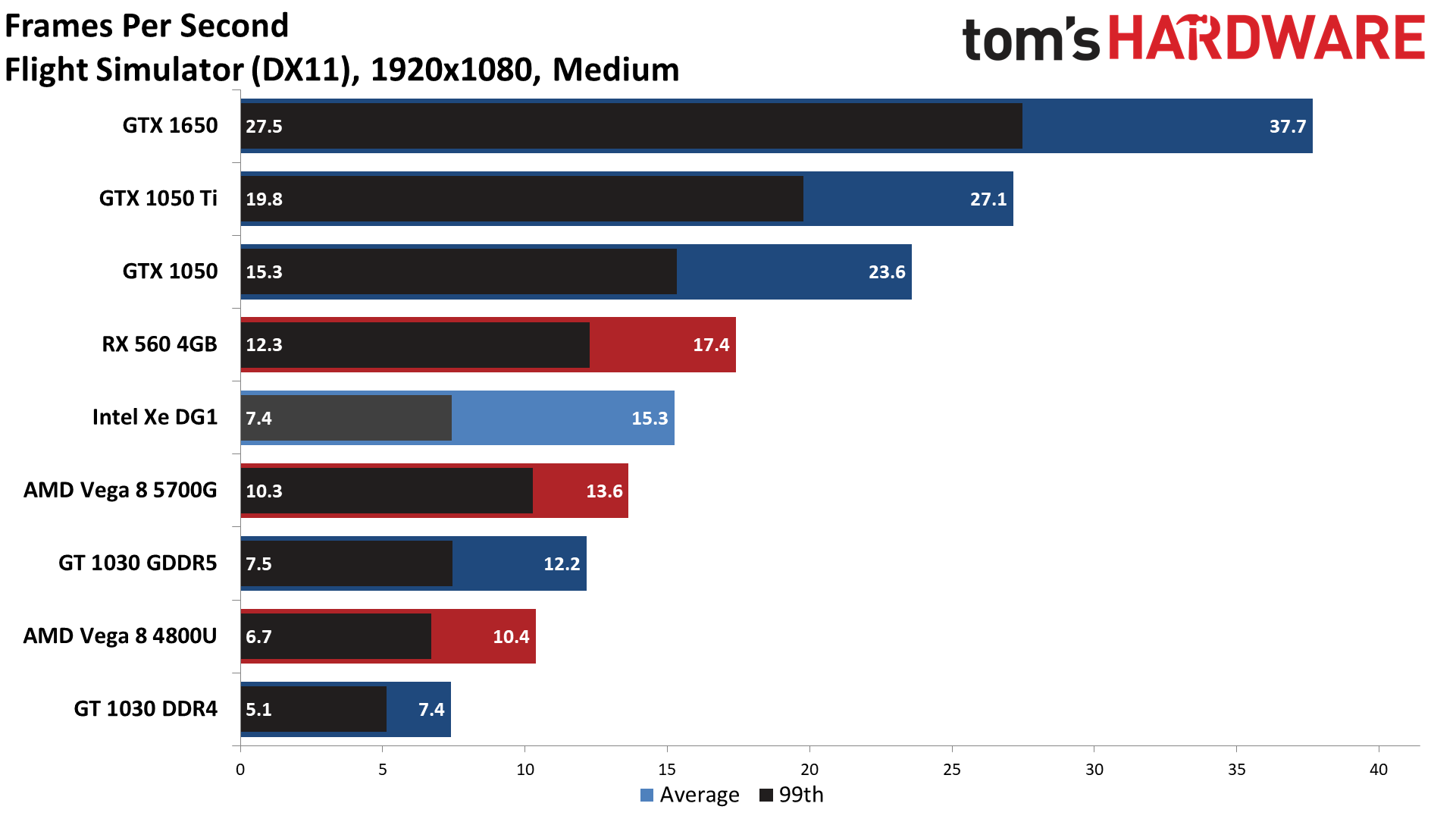

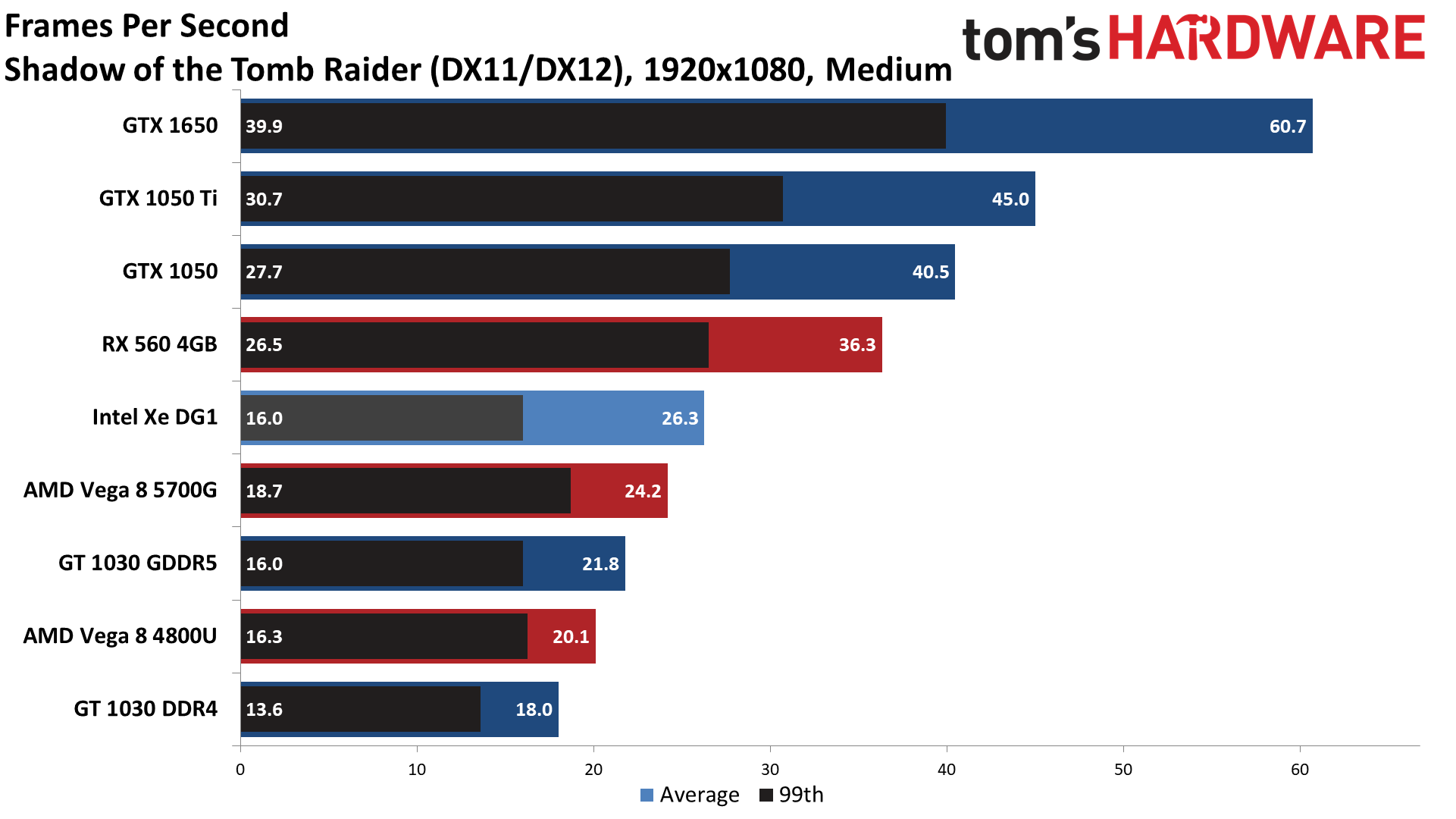

Intel Xe DG1 Graphics Performance at 1080p Medium

Given what we saw during our 720p testing, obviously most of the games we tested are going to run poorly at 1080p medium on many of the GPUs we've included in our charts. Some might say it's unfair or wrong to test the DG1 and integrated graphics solutions at 1080p medium, but pay attention to the GTX 1050 and RX 560. Those are both budget cards that were readily available for about $100 prior to the pandemic and crypto driven shortages of 2020. Here's the overall performance chart that shows the average performance across all 17 games.

The DG1 nearly makes it to 30 fps average, while the RX 560 is over 50% faster and the GTX 1050 is nearly double the performance. Except, the DG1 gets a bit of help by failing to run Assassin's Creed Valhalla, where it likely would have scored in the high teens. The 2GB GPUs also get a potential boost by not being able to run Red Dead Redemption 2, where the GTX 1050 likely would have averaged close to 30 fps if it had enough VRAM.

Hitting nearly 100 fps in CSGO also skews the overall average quite a bit, but lighter games can certainly run fine at 1080p medium on the DG1 and other low-end GPUs. Still, out of the 17 games we tested, only six of them ran at more than 30 fps on the DG1.

We won't spend a lot of time discussing the individual 1080p results, as there's not much more to say. It's possible to lower the settings in some of the games, which might allow the DG1 to hit 30 fps or more, but you can see there are a few games where the GTX 1050 offers more than twice the performance, even with half the VRAM.

If Intel had used faster GDDR5 memory on the DG1, that might have helped performance. Higher resolutions generally need more bandwidth, and while 1080p isn't really what we'd call a high resolution these days, you can see how the Vega 8 GPUs struggle as well, likely due to the lack of bandwidth. But let's not sugarcoat things: Intel clearly has a lot of work to do with drivers and hardware to get performance where it needs to be for the DG2 launch.

Right now, it's difficult to say exactly what's holding the DG1 back. Drivers are certainly part of it, but the RX 560 has about 30% more theoretical compute and 64% more bandwidth, and overall performance at 1080p medium was 62% higher than the DG1. It's possible that some driver tuning and more memory bandwidth would get Intel's individual GPU 'core' performance close to AMD's Vega architecture. Of course, AMD is ready to move beyond Vega and GCN now, so Intel needs to set its sights higher.
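
As a sanity check on those theoretical gaps, here's a quick Python sketch. The DG1 numbers come from our spec table, but the RX 560 figures (1024 shaders, 1275 MHz boost, 7 Gbps GDDR5 on a 128-bit bus) are AMD's reference specs as we understand them and aren't listed elsewhere in this article, so treat them as assumptions. Partner cards can differ.

```python
def pct_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage."""
    return (a / b - 1) * 100


# Theoretical FP32 TFLOPS: cores * 2 FLOPs per clock * boost MHz / 1e6
dg1_tflops = 640 * 2 * 1550 / 1e6
rx560_tflops = 1024 * 2 * 1275 / 1e6   # assumed reference RX 560 specs

# Bandwidth in GB/s: Gbps per pin * bus width in bits / 8
dg1_bw = 4.267 * 128 / 8
rx560_bw = 7.0 * 128 / 8               # assumed reference RX 560 specs

print(f"compute: +{pct_more(rx560_tflops, dg1_tflops):.0f}%")
print(f"bandwidth: +{pct_more(rx560_bw, dg1_bw):.0f}%")
```

Under those assumptions, the RX 560's measured 62% lead at 1080p medium tracks its bandwidth advantage far more closely than its compute advantage, though that alone doesn't prove bandwidth is the limiting factor.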

Intel's DG1 and Future Dedicated Graphics Ambitions

Let's be blunt: For a budget GPU, GTX 1650 levels of performance are the minimum we'd like to see, and the GTX 1650 is currently 175% faster than the DG1 across our test suite. And that's looking at the vanilla GTX 1650; the GTX 1650 Super nominally costs $10–$20 more than the vanilla model and boasts about 30% higher performance, which would make it more than triple the performance of the DG1. Drivers can certainly improve compatibility and performance in the future, but Intel needs more than that.
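
The "percent faster" figures compound multiplicatively, which is easy to get wrong in your head. A tiny Python sketch of the conversion, using only the percentages quoted above (the helper name is ours):

```python
def multiple_from_pct_faster(pct_faster: float) -> float:
    """Convert 'X% faster' into a performance multiple (e.g. 100% faster is 2x)."""
    return 1 + pct_faster / 100


gtx_1650 = multiple_from_pct_faster(175)                   # relative to the DG1
gtx_1650_super = gtx_1650 * multiple_from_pct_faster(30)   # about 30% above the 1650

print(f"GTX 1650: {gtx_1650:.2f}x the DG1")
print(f"GTX 1650 Super: {gtx_1650_super:.2f}x the DG1")
```

That puts the GTX 1650 at 2.75x and the Super at a bit over 3.5x the DG1, assuming the roughly 30% uplift holds at these settings.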

Could a refined DG2 architecture with 128 EUs and GDDR6 memory potentially match a GTX 1650 Super? Never say never, but that looks like an awfully big stretch goal right now. On the other hand, the fact that an Intel graphics card actually exists is already a lot more than many of us would have expected a few years ago. After 20 years of sitting on the sidelines and watching AMD and Nvidia battle for GPU supremacy, Intel has apparently decided that it wants to throw its hat into the ring. Considering how the supercomputing market has evolved over the past decade to use GPUs for a lot of the heavy computational work, and there are many workloads where GPUs can deliver an order of magnitude more performance than even the biggest CPUs, there are plenty of reasons for Intel to expand its graphics portfolio.

If Intel can fix up some of the shortcomings of the DG1, and then get that to scale up to 512 EUs — or more — performance could be quite promising. Using 16Gbps GDDR6 memory on a 256-bit memory interface would give Intel nearly eight times the memory bandwidth of the DG1, and 512 EUs running at slightly higher clocks would land in roughly the same 8x ballpark. How fast would such a GPU be compared to the best graphics cards? Theoretically, an 8x improvement would put it right in the ballpark of the fastest GPUs in our GPU benchmarks hierarchy, at least for 1080p medium. We'll still have to see how the architecture deals with higher image quality settings and resolutions, but achieving that sort of performance is obviously possible — for example, both the RTX 3080 and RX 6800 XT get there in slightly different ways.
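
Here's the back-of-the-envelope scaling math as a Python sketch. The 512 EU count and the 16Gbps, 256-bit memory configuration are the speculative figures from the paragraph above, not confirmed DG2 specs, and the "clock needed for 8x" value is just a derived number, not a rumor or a leak.

```python
# DG1 baseline, from the spec table
DG1_EUS = 80
DG1_BOOST_MHZ = 1550
DG1_BANDWIDTH = 4.267 * 128 / 8     # GB/s

# Hypothetical large DG2 configuration (speculative, per the text above)
dg2_eus = 512
dg2_bandwidth = 16 * 256 / 8        # 16Gbps GDDR6 on a 256-bit bus, in GB/s

bandwidth_ratio = dg2_bandwidth / DG1_BANDWIDTH
eu_ratio = dg2_eus / DG1_EUS

# Boost clock that would be required for 8x the DG1's theoretical compute
clock_for_8x = DG1_BOOST_MHZ * 8 / eu_ratio

print(f"bandwidth: {dg2_bandwidth:.0f} GB/s ({bandwidth_ratio:.1f}x DG1)")
print(f"EUs: {eu_ratio:.1f}x DG1")
print(f"clock needed for 8x compute: {clock_for_8x:.0f} MHz")
```

In other words, 512 EUs alone would give 6.4x the theoretical compute, and the rest of the way to 8x would need clocks in the neighborhood of 1.9 GHz, assuming performance scales linearly with both, which it rarely does.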

Yet the question remains: Can Intel match AMD and Nvidia? As a first-gen dedicated GPU architecture, DG1 comes up short. It only works in certain PCs (none of which use an AMD CPU), and even then, performance is pretty lackluster. But Intel already knew all of this more than a year ago. DG1 is the harbinger for what comes next, paving the way with wider compatibility and better drivers. We have very few details about the DG2 changes, other than we know there will be far larger and more powerful chip configurations. DG1 was literally just a "test vehicle" for discrete graphics, and hopefully it has given Intel the data it needs to build a second-gen part that will be far more competitive.

It will need to be, particularly if the current trend of reduced demand from the mining sector continues and graphics card prices return to normal. DG1 gets a bit of a pass, as there are so few other options right now. Yeah, it's not really any better than the GT 1030 GDDR5 (for gaming at least), but it has similar power constraints, and it's not completely sold out and overpriced. It also requires an entire PC purchase to go with the GPU, which isn't going to help it gain widespread use. If the GTX 1050 or RX 560 were still readily available, never mind the GTX 1650 and RX 5500 XT, DG1 would have almost nothing to offer.

Intel's biggest task from our perspective is to improve the architecture and scale up to much higher levels of performance. There's a niche market for entry-level discrete graphics cards, but the big demand for dedicated GPUs comes from gamers that want a lot more than just a modest improvement over integrated graphics. And beyond the hardware, Intel still has more work to do on its drivers.

Everything should just run, without complaint, whether it's a DX11, DX12, OpenGL, Vulkan, or even an old DX9 game — or any other application that wants to leverage the GPU. An occasional bug or glitch is one thing, but we encountered multiple issues along with some seriously slow shader compile times in our testing. Even the best graphics chip hardware won't matter much without working drivers, which is a lesson we hope Intel learned from its Larrabee days.

| Component | Hardware |
|---|---|
| CPUs | Core i5-11400F, Ryzen 7 5700G, Ryzen 7 4800U |
| Motherboards | Asus Prime B560M-A AC, MSI X570 Gaming Edge Wi-Fi, Asus MiniPC PN50 |
| Memory | G.Skill TridentZ 2x8GB DDR4-3200 CL14, HyperX Impact 2x16GB DDR4-3200 CL20 |
| SSD | Kingston KC2500 2TB |
| Drivers | AMD 21.7.1, Intel 30.0.100.9684, Nvidia 471.41 |

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Zarax

Admin said: The little engine that couldn't. Intel Xe DG1 Benchmarked: Battle of the Weakling GPUs

Given the current situation any new entry in the GPU market is welcome.

Intel, Zhaoxin entering or even if someday Matrox will want to get back in the game would mean more GPUs in the hands of the gamers.

Price/performance ratio has been stagnant and the current generation could have been the turning point but sadly shortages crushed our hopes.

Let's hope that late 2021/2022 will bring us some refreshing and a chance of getting a good GPU at a fair price.

w_o_t_q

The price for the gpu is fair always consider consider market situation. This GPU is designed for the data center no point to buy one for pc much rather go for cloud gpu services ... After a couple a year buy whatever Nvidia or AMD or Intel (if they will got proper GPU and drivers) GPU. Problem with current market prices is that these are not obtainable for a lot of people due to the rise. normal market situation after all hyper inflation come :(

JarredWaltonGPU

MrMilli79 said: Which driver did you use? Not mentioned in the article.

Initial testing started with 30.0.100.9667, then I updated to 30.0.100.9684 when those drivers were released. Both drivers exhibited the same issues discussed in the article.

vinay2070

Lol, thats one week GPU. Didnt expect that low performance. Will it fine wine like AMD for a little better formance in the future? Not that it matters anyway.

vinay2070

@JarredWaltonGPU did you get a chance to OC it, measure power consumption at idle/load etc? Also where do you think thier high end card would land in terms of performance? Thanks.

escksu

The DXG 1's performance is rather inconsistent. Driver issues perhaps??

However, i think i am more keen in 5700G's benchmarks instead. It pretty much says, unless you are playing only CS and similar games, just screw it and get a graphics card instead. Or you arent gaming at all.

jkflipflop98

vinay2070 said: Lol, thats one week GPU. Didnt expect that low performance. Will it fine wine like AMD for a little better formance in the future? Not that it matters anyway.

This isn't meant to blow your socks off at 600fps. It's literally the integrated GPU from a -lake cpu strapped to it's own external board. The big boys are coming and they're quite a bit faster.

usiname

vinay2070 said: Lol, thats one week GPU. Didnt expect that low performance. Will it fine wine like AMD for a little better formance in the future? Not that it matters anyway.

45% faster memory , and still lose to gt 1030, if the full version is 220W as the rumors claim maybe will land around gtx 1080 - rtx 2060 and wont be even close to rtx 3060 and rx 6600 non xt

JarredWaltonGPU

vinay2070 said: @JarredWaltonGPU did you get a chance to OC it, measure power consumption at idle/load etc? Also where do you think thier high end card would land in terms of performance? Thanks.

There's not much support for doing overclocking on the card, and frankly I don't think it would matter much. A combination of lacking drivers, bandwidth, and compute mean that even if you could overclock it 50%, it still wouldn't be that great. And for power testing, my equipment to measure that requires a lot of modifications and runs on my regular testbed, so I'll need to poke at that a bit and see if I can transport that over to the CyberPowerPC setup for some measurements. I suspect the 30W TDP is pretty accurate, but I'll have to do some additional work to see if I can get hard measurements.

For the high-end DG2 cards, it's very much a wild card. Assuming Intel really does overhaul the architecture -- more than it did with Xe vs. Gen11 -- it could conceivably land as high up the scale as an RX 6800 XT / RTX 3080. That's probably being far too ambitious, though, and I expect the top DG2 (with 512 EUs) will end up looking more like an RTX 3070/3060 Ti or RX 6700 XT in performance. It could even end up being more like an RTX 3060 (and presumably RX 6600 XT).

Wherever DG2 lands, though, I expect driver issues and tuning will remain a concern for at least 6-12 months after the launch. I did some testing of Tiger Lake with Xe LP and 96 EUs back when it launched, and saw very similar problems to what I saw with the DG1 -- DX12 games that took forever to compile shaders, games that had rendering issues or refused to run, etc. It's better now, nearly a year later, but there are still problems. Until and unless Intel gets a graphics drivers team that's at least as big as AMD's team (which is smaller than Nvidia's drivers team), there will continue to be games that don't quite work right without waiting weeks or months for a fix.