AMD's Radeon RX 6600 XT Will Likely Be Capped At PCIe 4.0 x8

Hot on the heels of the latest Radeon RX 6600 XT leak, German news outlet Igor's Lab has shared more juicy details on AMD's forthcoming Navi 23 offerings. The information from Igor Wallossek, who runs the site, seemingly helps confirm some of the rumored specifications for the Radeon RX 6600 XT.

As we've suspected for a while now, the Radeon RX 6600 XT and Radeon RX 6600 will use AMD's Navi 23 silicon. Navi 23, commonly known as "Dimgrey Cavefish," should be one of the more compact RDNA 2 dies in AMD's lineup. We suspect that Navi 24 will have the smallest die.

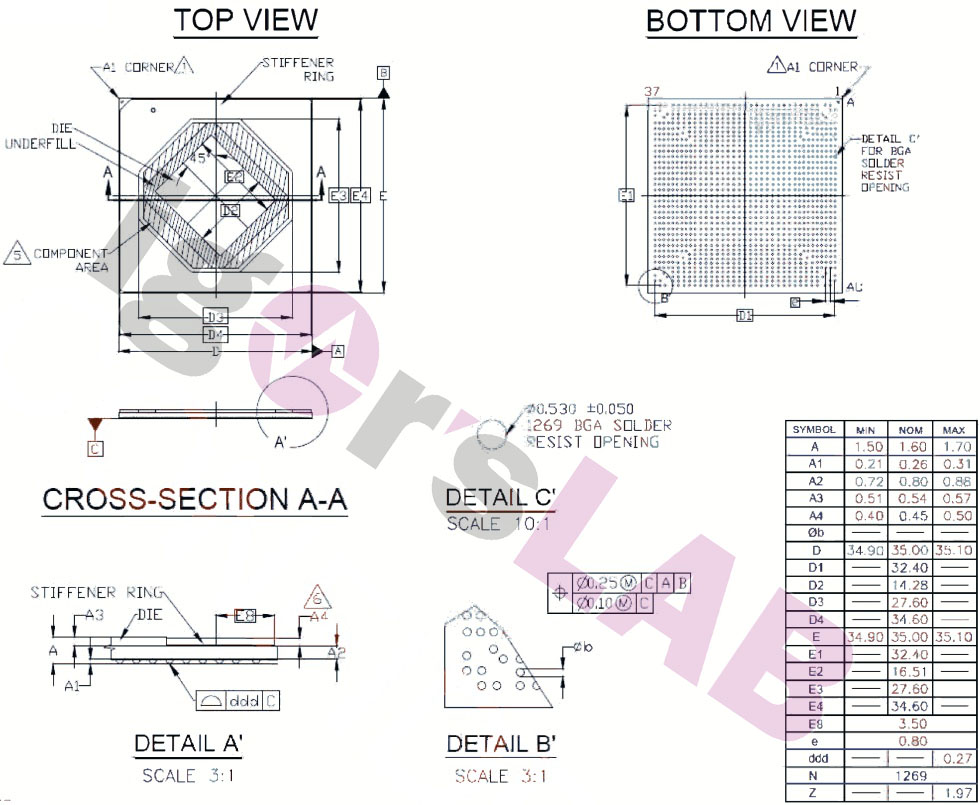

According to Wallossek, the Navi 23 die measures 16.51 x 14.28mm, which works out to an area of 235.76mm². For comparison, Navi 22 has a die size of 335mm², so Navi 23 is about 29.6% smaller. The entire Navi 23 package reportedly checks in at 35 x 35mm with a height tolerance of 0.1mm, and the die sits rotated 45 degrees on the package.
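The die-size arithmetic is easy to check. This short Python sketch simply plugs in the reported dimensions and the 335mm² Navi 22 figure; it assumes a perfectly rectangular die and uses no data beyond the numbers quoted above.

```python
# Reported Navi 23 die dimensions (Igor's Lab) and the quoted Navi 22 die area.
NAVI23_WIDTH_MM = 16.51
NAVI23_HEIGHT_MM = 14.28
NAVI22_AREA_MM2 = 335.0


def die_area_mm2(width_mm: float, height_mm: float) -> float:
    """Area of a rectangular die in square millimeters."""
    return width_mm * height_mm


def percent_reduction(old: float, new: float) -> float:
    """How much smaller `new` is than `old`, as a percentage of `old`."""
    return (old - new) / old * 100.0


navi23_area = die_area_mm2(NAVI23_WIDTH_MM, NAVI23_HEIGHT_MM)
reduction = percent_reduction(NAVI22_AREA_MM2, navi23_area)

print(f"Navi 23 area: {navi23_area:.2f} mm^2")         # Navi 23 area: 235.76 mm^2
print(f"Reduction vs. Navi 22: {reduction:.1f}%")      # Reduction vs. Navi 22: 29.6%
```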

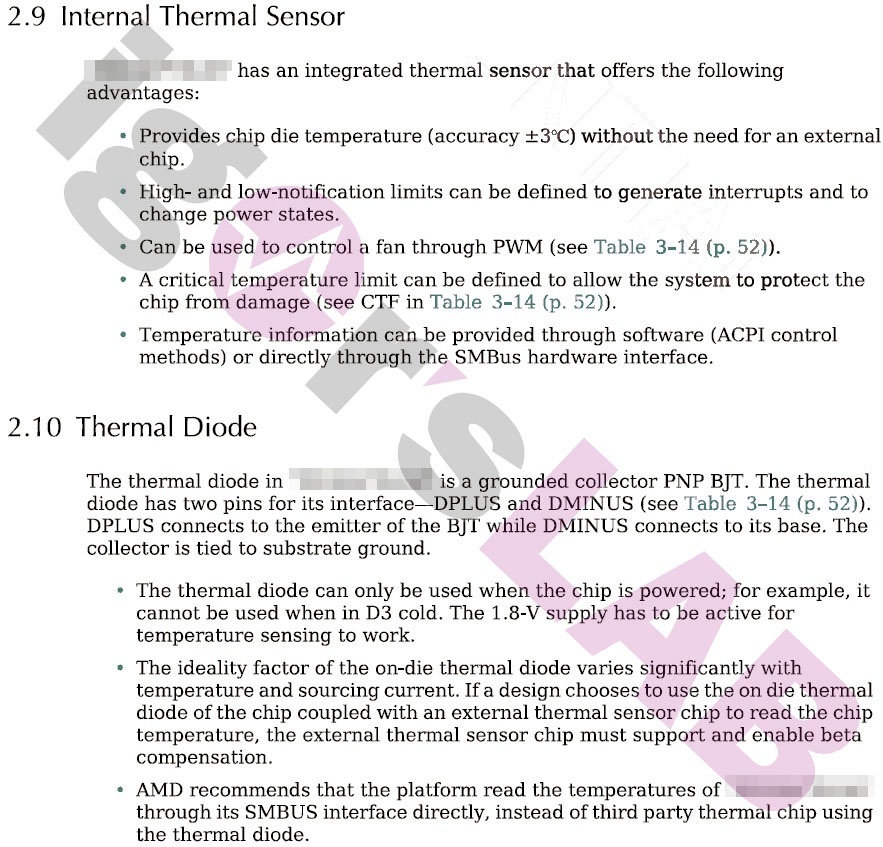

AMD may be planning to release three Navi 23-based mobile discrete graphics cards with different TGP (total graphics power) ratings. Laptop vendors will allegedly get to choose between 90W, 80W, and 65W variants. Logically, the thermal envelopes will be the limiting factor for each variant's base and boost clock speeds. Wallossek shared a screenshot of a mobile Navi 23 graphics card with a 2,350 MHz boost clock, 334 MHz slower than the rumored clock speed for the desktop Radeon RX 6600 XT.
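The two clock figures also imply a desktop boost clock. This sketch only adds the 334 MHz gap to the mobile card's 2,350 MHz; the resulting desktop number is derived from the figures above, not a separately confirmed specification.

```python
# Figures from the leak: mobile Navi 23 boost clock and its gap to the
# rumored desktop Radeon RX 6600 XT boost clock.
MOBILE_BOOST_MHZ = 2350
GAP_MHZ = 334

# Desktop boost clock implied by the two numbers (derived, not an official spec).
implied_desktop_boost_mhz = MOBILE_BOOST_MHZ + GAP_MHZ

# How far the mobile part trails, relative to the implied desktop clock.
mobile_deficit_pct = GAP_MHZ / implied_desktop_boost_mhz * 100

print(f"Implied desktop boost clock: {implied_desktop_boost_mhz:,} MHz")  # 2,684 MHz
print(f"Mobile part clocks {mobile_deficit_pct:.1f}% lower")              # 12.4% lower
```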

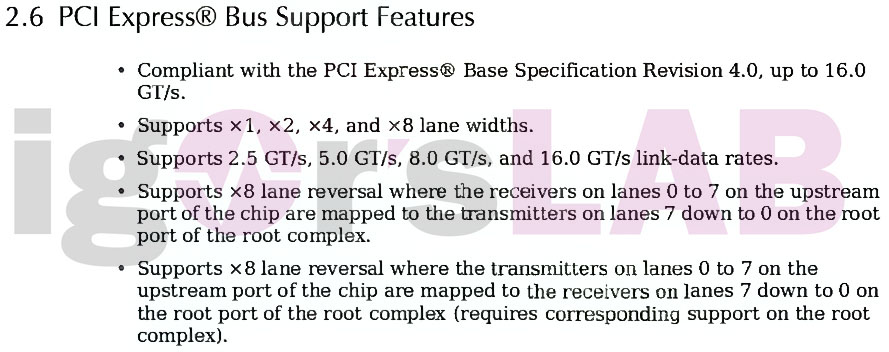

Coming as no surprise, the Radeon RX 6600 XT and Radeon RX 6600 continue to use the PCIe 4.0 interface. However, Navi 23 is seemingly restricted to eight PCIe 4.0 lanes. On a PCIe 4.0 platform, the hard cap shouldn't affect the graphics card's performance, since PCIe 4.0 x8 offers the same bandwidth as PCIe 3.0 x16. In a PCIe 3.0 slot, however, an x8 card runs at PCIe 3.0 x8, which is half that bandwidth. Wallossek speculates that the limit imposed by AMD will prevent the Radeon RX 6600 XT from catching up to the Radeon RX 6700 through overclocking.
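The bandwidth equivalence follows from the per-lane signaling rates: PCIe 3.0 runs at 8 GT/s and PCIe 4.0 at 16 GT/s, and both use 128b/130b line encoding. This sketch computes usable one-direction bandwidth and deliberately ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The `link_bandwidth_gb_s` helper is purely illustrative.

```python
from fractions import Fraction

# Per-lane raw signaling rate in gigatransfers per second (GT/s).
RAW_RATE_GT_S = {"3.0": 8, "4.0": 16}
# PCIe 3.0 and 4.0 both use 128b/130b encoding: 128 payload bits per 130 sent.
ENCODING = Fraction(128, 130)


def link_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """One-direction bandwidth in GB/s after line encoding, ignoring protocol overhead."""
    bits_per_second = RAW_RATE_GT_S[gen] * 10**9 * ENCODING * lanes
    return float(bits_per_second / 8 / 10**9)


for gen, lanes in [("3.0", 16), ("4.0", 8), ("3.0", 8)]:
    print(f"PCIe {gen} x{lanes}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
# PCIe 3.0 x16: 15.75 GB/s
# PCIe 4.0 x8: 15.75 GB/s
# PCIe 3.0 x8: 7.88 GB/s
```

The last line is the case an x8 card falls back to when installed in a PCIe 3.0 slot.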

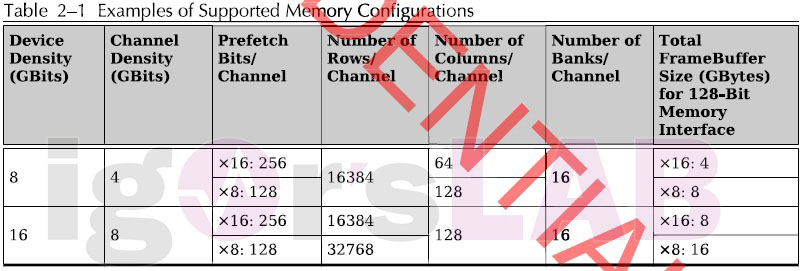

In the memory department, Navi 23 will very likely sport a 128-bit memory interface with eight independent 16-bit memory channels. This configuration makes it possible to connect either four GDDR6 memory chips in x16 mode or eight chips in x8 (clamshell) mode. The maximum amount of memory supported on Navi 23 would be 16GB.
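The chip counts follow from how GDDR6 devices attach to the bus: each device exposes two independent channels that are 16 bits wide in x16 mode or 8 bits wide in x8 (clamshell) mode. This sketch assumes 16Gb (2GB) devices, which is the density that makes the reported 16GB maximum line up; the constant and function names are hypothetical.

```python
BUS_WIDTH_BITS = 128      # Navi 23 memory interface, per the report
GPU_CHANNELS = 8          # eight independent memory channels
CHANNELS_PER_CHIP = 2     # a GDDR6 device exposes two independent channels
CHIP_DENSITY_GBIT = 16    # assumption: 16Gb (2GB) devices

# Width of each GPU-side channel: 128 / 8 = 16 bits.
channel_width_bits = BUS_WIDTH_BITS // GPU_CHANNELS


def chips_needed(chip_channel_width_bits: int) -> int:
    """Number of GDDR6 devices needed to populate the whole bus."""
    bits_per_chip = CHANNELS_PER_CHIP * chip_channel_width_bits
    return BUS_WIDTH_BITS // bits_per_chip


def capacity_gb(chips: int) -> float:
    """Total capacity in GB for the assumed per-chip density."""
    return chips * CHIP_DENSITY_GBIT / 8


# x16 mode: each device channel is a full 16 bits wide.
# x8 mode: each device channel is 8 bits, so two devices share one GPU channel.
for mode, width in [("x16", channel_width_bits), ("x8", channel_width_bits // 2)]:
    n = chips_needed(width)
    print(f"{mode} mode: {n} chips -> {capacity_gb(n):.0f} GB")
# x16 mode: 4 chips -> 8 GB
# x8 mode: 8 chips -> 16 GB
```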

Given the segment in which Navi 23 competes, it's unlikely that the corresponding Radeon products will arrive with 16GB of GDDR6 memory. Furthermore, we've already seen convincing GPU-Z screenshots of the Radeon RX 6600 XT and Radeon RX 6600 with 8GB of GDDR6 memory. That's not to say that we won't see any Navi 23-based units with 16GB, but if so, they'll probably be professional-grade graphics cards, such as the Radeon Pro series.


Surprisingly, Radeon RX 6600 XT benchmarks haven't started to pop up yet. However, the Navi 23-powered graphics card is rumored to offer performance equal to or slightly better than that of the Radeon RX 5700 XT.

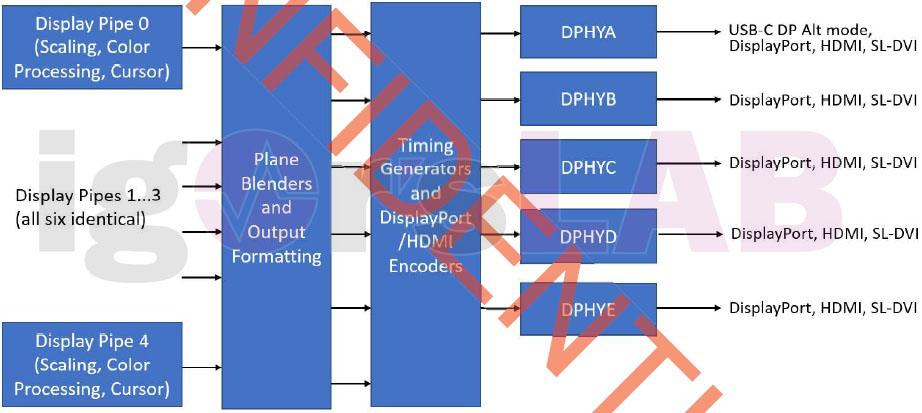

Navi 23 supports up to five display outputs, depending on how AMD chooses to equip the Radeon RX 6600 XT. Navi 23 also supports USB Type-C, but we don't expect to see that interface on the desktop variant. If anything, a USB Type-C port is more likely to appear on the mobile variant, where it could be a valid replacement for a DisplayPort output on laptops.

The Radeon RX 6700 XT comes equipped with one HDMI 2.1 port and three DisplayPort 1.4a outputs. The Radeon RX 6600 XT might feature the same design, maybe with one or two fewer DisplayPort 1.4a outputs. Wallossek's data also points to a plethora of supported hardware video decoders on Navi 23, including VP9, HEVC, H.264, and VC1 decoders.

We still haven't seen any signs of when AMD will unleash the Radeon RX 6600 XT or Radeon RX 6600. Nonetheless, Computex 2021 is approaching, so it's plausible that we could see an official Navi 23 announcement then, if not before.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


InvalidError

If the RX 6600 only gets an x8 interface, then the likely 4GB RX 6500 is screwed, since the 4GB RX 5500 sorely needed its 4.0 x8 link to hold up decently well against its 8GB counterpart.

vern72

How many extra power plugs will it have? I don't like the double plugs that come with most GPUs nowadays.

hotaru.hino

> InvalidError said: If the RX 6600 only gets an x8 interface, then the likely 4GB RX 6500 is screwed, since the 4GB RX 5500 sorely needed its 4.0 x8 link to hold up decently well against its 8GB counterpart.

The 4GB had problems if its VRAM filled up. Otherwise, it was fine in most other cases. It still makes me scratch my head why AMD is choosing to limit it to 8 lanes when PCIe 4.0 adoption isn't quite at critical mass.

> vern72 said: How many extra power plugs will it have? I don't like the double plugs that come with most GPUs nowadays.

Since the RX 6700 was a 230W card, I'd imagine at worst the RX 6600 will float around 180W-200W, which would only require one 8-pin plug.

escksu

> hotaru.hino said: The 4GB had problems if its VRAM filled up. Otherwise, it was fine in most other cases. It still makes me scratch my head why AMD is choosing to limit it to 8 lanes when PCIe 4.0 adoption isn't quite at critical mass.
>
> Since the RX 6700 was a 230W card, I'd imagine at worst the RX 6600 will float around 180W-200W, which would only require one 8-pin plug.

It's to reduce cost and pin count. PCIe 4.0 x8 is pretty much the same as PCIe 3.0 x16. With B550 becoming mainstream and 11th-gen Intel out now,

hannibal

Maybe the 6500 uses those Navi 24 chips… that would mean the 8 bit, 4GB memory would not be a bottleneck, because the chip itself is not very powerful.

InvalidError

> escksu said: It's to reduce cost and pin count. PCIe 4.0 x8 is pretty much the same as PCIe 3.0 x16. With B550 becoming mainstream and 11th-gen Intel out now,

Having only x8 on the 4GB RX 5500 was a huge mistake, since it made it perform 30-40% slower than it could on 3.0 x8, and it could probably have closed the gap even tighter against the 8GB version with 4.0 x16. The RX 6600 is faster, which means it is going to suffer even more severe performance degradation if it ever runs out of VRAM. A 30-40% potential performance hit for going slightly over 8GB on a likely $300+ card is a steep price to pay to save maybe $2 on the assembled PCB.

Perhaps most important: this is hypothetically lined up to compete against the RTX 3060, which does have 4.0 x16 and 12GB of VRAM.

hotaru.hino

> escksu said: It's to reduce cost and pin count. PCIe 4.0 x8 is pretty much the same as PCIe 3.0 x16. With B550 becoming mainstream and 11th-gen Intel out now,

Unless the RX 6600 XT is also an x8 card, that argument goes right out the window. The RX 5600 was an x16 slot card with the pins (at least most AIBs kept all of the pins), so there was literally no cost saving done, except maybe the minute amount they saved on the PCB traces.

InvalidError

> hotaru.hino said: there was literally no cost saving done, except maybe the minute amount they saved on the PCB traces.

There is no cost difference on traces whatsoever, since traces are formed by etching copper away to isolate them from the formerly solid copper plane. The whole board gets covered in photoresist regardless of how many traces are on it, and the whole board has exposure masks too.

Well, I suppose there is an absolutely minute saving in not needing to test those extra traces and the $0.001 DC-blocking capacitors on the data traces going one direction (I forgot which), so $0.02 saved there. Yay!