AMD, Xilinx Claim World Record for Machine Learning Inference

AMD and Xilinx have partnered to build high-performance inference systems for data centers, and Xilinx claimed this week that the result breaks the world record for inference performance. The systems use Xilinx's new Alveo machine learning accelerator cards, which promise real-time machine learning inference as well as acceleration for video processing, genomics and data analytics.

A New Inference World Record

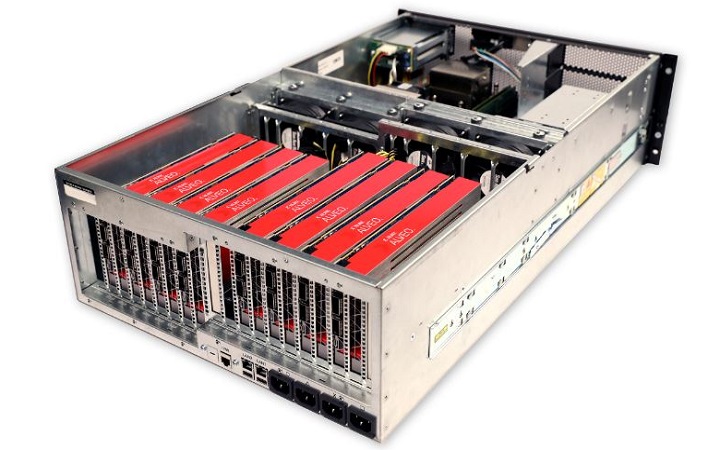

AMD and Xilinx built the new data center system around a 32-core EPYC 7551 CPU and eight Alveo U250 accelerator cards. The cards rely on Xilinx's ML Suite software, which supports machine learning frameworks such as TensorFlow.

The two companies said the system reached an inference throughput of 30,000 images per second on the GoogLeNet convolutional neural network. That level of inference performance appears to be in demand from companies that need to analyze massive amounts of data.
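To put the claimed figure in perspective, the short calculation below divides the aggregate throughput across the eight cards. It is a rough sketch that assumes the work is split evenly across the cards, which the announcement does not state; the helper names are hypothetical.

```python
# Back-of-the-envelope figures derived from the claimed aggregate throughput.
# Assumption: the load is split evenly across all eight Alveo U250 cards,
# which the announcement does not state.
TOTAL_IMAGES_PER_SEC = 30_000
NUM_CARDS = 8


def per_card_throughput(total: float, cards: int) -> float:
    """Average images per second handled by each card under an even split."""
    return total / cards


def mean_interval_ms(images_per_sec: float) -> float:
    """Average time between completed images, in milliseconds."""
    return 1000.0 / images_per_sec


card_rate = per_card_throughput(TOTAL_IMAGES_PER_SEC, NUM_CARDS)
print(f"Per card: {card_rate:.0f} images/s")
print(f"System-wide: one image every {mean_interval_ms(TOTAL_IMAGES_PER_SEC):.4f} ms")
print(f"Per card: one image every {mean_interval_ms(card_rate):.4f} ms")
```

The interval between completed images is not the same as per-image latency: a pipelined accelerator can finish an image every fraction of a millisecond while each individual image takes longer to pass through it.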

Joining Xilinx CEO Victor Peng onstage at the Xilinx event where the system was showcased, Mark Papermaster, AMD CTO and senior vice president of technology and engineering, said that new workloads can take advantage of the whole system, not just the CPU.

Xilinx Alveo FPGA Accelerators

Xilinx introduced two new FPGA cards, the Alveo U200 and U250, which for the first time are optimized to accelerate real-time machine learning inference. The focus here seems to be “real-time” inference because the Alveo cards promise three times lower latency than GPUs with four times the throughput for low-latency applications.
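Latency and throughput pull in different directions because many accelerators reach peak throughput only by batching several images together, which makes each image wait for the whole batch. The toy cost model below illustrates that trade-off; the fixed per-batch overhead and per-image cost are hypothetical values, not figures from Xilinx, AMD or any GPU vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BatchModel:
    """Toy cost model for a batched inference accelerator (hypothetical numbers).

    fixed_ms: overhead paid once per batch (launch, transfer setup, etc.)
    per_image_ms: incremental compute time for each image in the batch
    """
    fixed_ms: float
    per_image_ms: float

    def batch_latency_ms(self, batch_size: int) -> float:
        # Every image in a batch completes when the whole batch completes,
        # so each one sees the full batch time (queueing delay is ignored).
        return self.fixed_ms + batch_size * self.per_image_ms

    def throughput_ips(self, batch_size: int) -> float:
        # Images completed per second when batches run back to back.
        return batch_size / self.batch_latency_ms(batch_size) * 1000.0


model = BatchModel(fixed_ms=2.0, per_image_ms=0.5)  # hypothetical values
for b in (1, 8, 32):
    print(f"batch={b:>2}  latency={model.batch_latency_ms(b):5.1f} ms  "
          f"throughput={model.throughput_ips(b):7.1f} images/s")
```

In this model, a batch size of 1 gives the lowest latency but also the lowest throughput, and the gap shrinks as the fixed per-batch overhead gets smaller. A device with little overhead to amortize would therefore give up less throughput when run at batch size 1.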

The Alveo cards also promise 20x the performance of a CPU for inference tasks, reaching up to 90x the performance for database searches. They start at $8,995 each, and Xilinx said that it’s now working with OEMs, including Dell EMC, Fujitsu, Hewlett Packard Enterprise and IBM, to qualify them for data centers.


Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


bit_user

> The focus here seems to be “real-time” inference because the Alveo cards promise three times lower latency than GPUs with four times the throughput for low-latency applications.

The original blog post specifies a batch size of 1. This caught my attention, as it's not usually necessary to use such a small batch size. I have to wonder if their performance lead quickly evaporates as batch size goes up.