Copper Plate Mod Reduces RTX 3080 GDDR6X Memory Temps by 25 Degrees

A great solution for badly cooled graphics cards

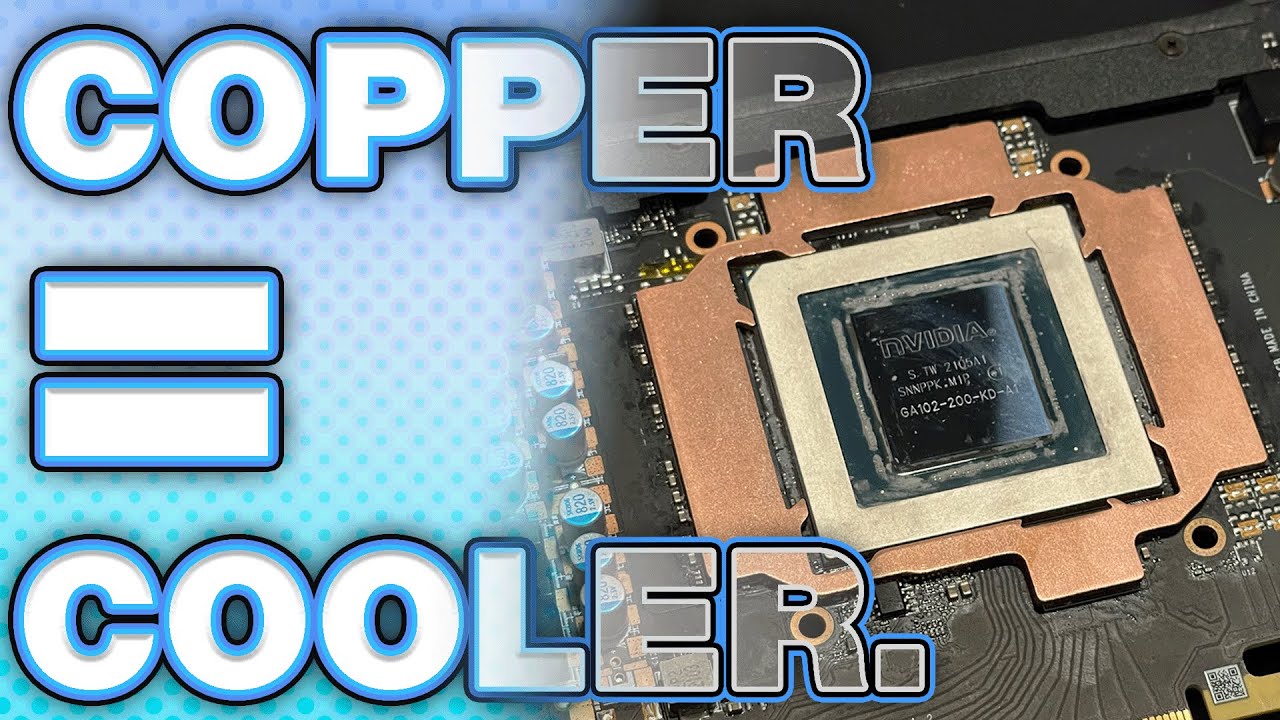

It's no secret that GDDR6X memory can run very hot on graphics cards equipped with it, most notably the GeForce RTX 3080, RTX 3080 Ti, and RTX 3090, some of the best graphics cards on the market. If modding copper shims to your GPU isn't your cup of tea, there's an easier way to reduce memory temperatures. YouTuber DandyWorks released a video demonstrating the use of a copper plate for memory, made specifically for an Nvidia RTX 3080. The copper plate was able to reduce GDDR6X temperatures by up to 25C.

The copper plate used in the video was made by CoolMyGPU.com and is specifically designed for select RTX 3080 AIB partner cards. The company also has an assortment of other copper memory cooling plates designed for a range of RTX 30-series GPU models, including the RTX 3080/Ti, RTX 3090, RTX 3060/Ti, and RTX 3070/Ti. It has plates for Nvidia's Founders Edition SKUs as well as some third-party designs.

For RTX 3090 owners, CoolMyGPU.com also sells a rear memory copper plate to help cool the rear GDDR6X modules on the card. This plate could be even more useful than the front plate, since the RTX 3090's rear G6X modules are notorious for running extremely hot, with the backplate being the only source of heat dissipation for the rear modules.

In the video, DandyWorks showcases how to install the copper memory cooling plate onto an MSI Ventus RTX 3080 graphics card. First, the graphics card's original cooler needs to be removed. Next, all the thermal pads and thermal paste cooling the GDDR6X and main GPU core need to be removed, along with any additional residue left from the thermal pads.

After a test fit, the copper cooling plate can be installed with a layer of thermal paste on both its top and bottom faces, and the GPU heatsink is then reattached over it. No additional screws are required since the plate is held down by the GPU's cooling solution.

For Dandy's RTX 3080 Ventus graphics card, the copper plate dropped memory temperatures by roughly 20%, from 94C to 75C in mining applications, with even lower temperatures reported while gaming.
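As a quick check on those figures, the arithmetic can be sketched in a few lines of Python. The function name is hypothetical, and the percentage is taken relative to the Celsius reading before the mod, which is an assumption about how the figure was derived.

```python
def temperature_drop(before_c, after_c):
    """Return (absolute drop in degrees C, relative drop in percent).

    Assumption: the percentage is measured against the 'before' reading
    in Celsius, not against ambient or an absolute temperature scale.
    """
    drop = before_c - after_c
    return drop, drop / before_c * 100


# Figures reported for the MSI Ventus RTX 3080 while mining.
drop_c, drop_pct = temperature_drop(94, 75)
print(f"{drop_c:.0f}C lower, a {drop_pct:.1f}% reduction")
# prints "19C lower, a 20.2% reduction"
```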

When Should This Mod Be Used?

In our experience testing Nvidia's flagship RTX 30-series cards — including AIB partner models — the ideal GDDR6X temperatures should peak at 100C or lower to ensure thermal throttling doesn't occur. You might be okay with 105C peak temps, but if your GPU's memory is operating at 110C or higher, the graphics card will throttle clock speeds and better cooling is required.
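Those thresholds can be written down as a simple lookup. This is a minimal sketch, not a tool used in our testing: the function name and the status labels are hypothetical, and the band between 105C and 110C, which the guidance above does not address, is labeled "at risk" purely as an assumption.

```python
def classify_gddr6x_temp(peak_c):
    """Map a peak GDDR6X temperature (degrees C) to a rough status label."""
    if peak_c <= 100:
        return "ideal"        # should stay clear of thermal throttling
    if peak_c <= 105:
        return "borderline"   # "might be okay" territory
    if peak_c < 110:
        return "at risk"      # assumption: this band is not covered in the text
    return "throttling"       # 110C or higher: better cooling is required


# Example readings, including the 94C and 75C figures from the video.
for reading in (75, 94, 104, 110):
    print(reading, classify_gddr6x_temp(reading))
```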

Gaming-specific workloads tend to be a bit less demanding than mining, but we've tested cards (like the RTX 3080 Ti Founders Edition) where memory temperatures hit 104C on a regular basis. Fire up a mining utility and 110C happens on a lot of GDDR6X-equipped cards, including nearly all of Nvidia's Founders Edition models (with the exception of the RTX 3070 Ti, which only has 8GB VRAM). Only the 'good' AIB partner cards with improved memory cooling capabilities stay below that mark.

So if you find your GPU's memory is running at 110C, whether mining or gaming, this copper cooling plate mod would be a way to reduce GDDR6X temperatures. It should allow the GDDR6X to run at its maximum clock rate, and thereby improve performance and card longevity. It might be an even better option if all you do with your GDDR6X card is mining, though with mining profits dropping, that might not be as necessary these days.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


InvalidError

Someone publishes a piece about using copper shims, someone else makes a custom-fit copper shim that does fundamentally the same thing in a tidier form factor.

Since the DRAM packages are plastic/epoxy at 1-2W a pop, they could have used steel or aluminum and achieved pretty much the same cooling performance for a fraction of the material cost.

VforV

Nvidia doing pathetic things as always, charging the highest prices for their GPUs only to make Ampere run extremely hot and power hungry for that +5-10% (if any) over RDNA2 to claim supremacy.

I don't see any articles on RDNA2 about needing extra hardware mods after you bought it to lower its horribly high temps, why? Because it does not have this problem.

RX 6950 XT refresh will once again prove it's a more efficient high end GPU vs that laughable 3090 Ti and I really can't wait for RDNA3 to feed Nvidia a little bit of humble pie, although I doubt they will admit that even if they lose the gaming crown... meh.

wifiburger

VforV said: "Nvidia doing pathetic things ..."

:ROFLMAO:... yes, NVIDIA bad; AMD supreme ?

watzupken

VforV said: "Nvidia doing pathetic things as always, charging the highest prices for their GPUs only to make Ampere run extremely hot and power hungry for that +5-10% (if any) over RDNA2 to claim supremacy.

"I don't see any articles on RDNA2 about needing extra hardware mods after you bought it to lower its horribly high temps, why? Because it does not have this problem.

"RX 6950 XT refresh will once again prove it's a more efficient high end GPU vs that laughable 3090 Ti and I really can't wait for RDNA3 to feed Nvidia a little bit of humble pie, although I doubt they will admit that even if they lose the gaming crown... meh."

Objectively, I feel the problem with Nvidia’s high end Ampere line up is mostly due to the power hungry GDDR6X memory. It is faster than normal GDDR6, but at the expense of power and therefore, heat output. To get a sense how power hungry it is, you just have to look at the difference between a RTX 3070 and 3070 Ti.

It is actually not all bright and sunny on AMD’s RDNA2 when it comes to high heat output. After trying out both the RX 6800 XT and 6600 XT, I generally find that the GPU hotspot temps can get pretty high. The delta between the skin vs hotspot temp can be between 15 to 25 degrees Celsius. Whereas on Ampere cards, my testing on the RTX 3070 Ti and 3080 shows a smaller delta between skin and hotspot temp of 10 degrees Celsius and below.

InvalidError

watzupken said: "Objectively, I feel the problem with Nvidia’s high end Ampere line up is mostly due to the power hungry GDDR6X memory. It is faster than normal GDDR6, but at the expense of power and therefore, heat output."

The GDDR6X memory only accounts for about 50W, a relatively small fraction of the 400+W peak board power. Nowhere near 'most' power. Most of the power comes from pushing the GPU die to its limits. The closer to limits you get, the worse power efficiency gets.

watzupken

InvalidError said: "The GDDR6X memory only accounts for about 50W, a relatively small fraction of the 400+W peak board power. Nowhere near 'most' power. Most of the power comes from pushing the GPU die to its limits. The closer to limits you get, the worse power efficiency gets."

I actually don’t think it is just 50W. If you look at the difference in power consumption between the RTX 3070 and 3070 Ti, that slight increase in CUDA cores is not going to result in a 70W difference in power on top of the power required by GDDR6. That is a 220W part, “upgraded” to 290W. When you move up to RTX 3090, you have an extra 16 pieces of GDDR6X, running at a higher clock, which will demand more power. As we move up again to the RTX 3090 Ti, the number of memory chips has dropped, but both the GPU and VRAM are pushed even harder. So no surprises the power consumption went up. After all, I believe the sweet spot clockspeed of Ampere sits close to 1.9 to 2 GHz. Once you push it beyond 2 GHz, it starts to pull in a lot more power.

VforV

watzupken said: "Objectively, I feel the problem with Nvidia’s high end Ampere line up is mostly due to the power hungry GDDR6X memory. It is faster than normal GDDR6, but at the expense of power and therefore, heat output. To get a sense how power hungry it is, you just have to look at the difference between a RTX 3070 and 3070 Ti.

"It is actually not all bright and sunny on AMD’s RDNA2 when it comes to high heat output. After trying out both the RX 6800 XT and 6600 XT, I generally find that the GPU hotspot temps can get pretty high. The delta between the skin vs hotspot temp can be between 15 to 25 degrees Celsius. Whereas on Ampere cards, my testing on the RTX 3070 Ti and 3080 shows a smaller delta between skin and hotspot temp of 10 degrees Celsius and below."

So it's worse for +15 degrees on the hot spot vs Ampere?

Sure, let's say I agree, but the fact remains none of the RDNA2 get to 100 degrees or more like Ampere does on their most expensive GPUs they have... not to mention power consumption, not to mention price...

Ampere is horrible in the high end. End of Story.

Remmington_Flex

Buyer Beware

I’m posting my experience with CoolMyGPU.com so that potential buyers will be aware of the risks associated with purchasing their products.

I have an EVGA RTX 3090 FTW3. I purchased their compatible front plate and bracket backplate.

I have swapped pads numerous times on my GPUs as I have a mining farm, and I’ve become quite proficient at it.

This particular 3090 had Gelid Extreme pads on the VRAM and the power modules (1 mm to replace the OEM thermal putty). The MOSFET pads are stock. With the Gelids, my mem temps fluctuated between 86C-88C at 72F ambient temp.

I installed the CoolMyGPU plates strictly following their instructional video.

Upon testing, I discovered that my temps jumped to 108C. My first guess was that contact was not adequate. I compressed the card to test my hypothesis that these plates were making poor contact, which dropped my temperatures to 102C.

I opened the GPU to troubleshoot and found nothing impeding contact, so I reapplied thermal paste and tried it again.

Temperatures were still over 106C! After opening this card up about 6 times to test different methods of remedying contact issues, I discovered that the only way to get the temps under 100C with this was to add 1mm Gelid pads on top of each plate. The lowest temp accomplished with this configuration was 98C.

After days of troubleshooting, and over $50 in thermal paste and pads, I finally decided to remove the plates altogether and put Gelid Extremes back into the card.

This immediately brought my temps back down to 86C-88C.

It was clear that the plates provided by CoolMyGPU.com were either the incorrect plates, or they were milled too thin.

I reached out to CoolMyGPU via email. No response.

I reached out again via email. No response.

I then reached out via discord, and was able to make contact with their customer support after a couple of days.

This person informed me that the owner was the only person who could approve refunds, and that he reached out to him about my order.

A week later, still no response. Finally, after informing support that I was sick of being jerked around on this, he referred me to the owner’s Discord (NoSo-CMG).

I have sent multiple messages to the owner about this, including a testimonial from another customer that his plate was too thick, and have yet to get a response.

I’ve looked these guys up, and it does not look like they are an officially registered business in the state of Georgia. Their shipping address is a little townhouse.

Any legitimate business would provide refunds or at least try to fix the issue to help customers. I hope potential buyers take this information into account when making their buying decision.