Intel's Pat Gelsinger on 'Super Moore's Law,' Making Multi-Billion Dollar Bets

A Pat Gelsinger Interview

We had a chance to sit down with Intel CEO Pat Gelsinger for a roundtable Q and A session shortly after his opening keynote at the company's InnovatiON event. We've included the full transcript at the end of our own commentary.

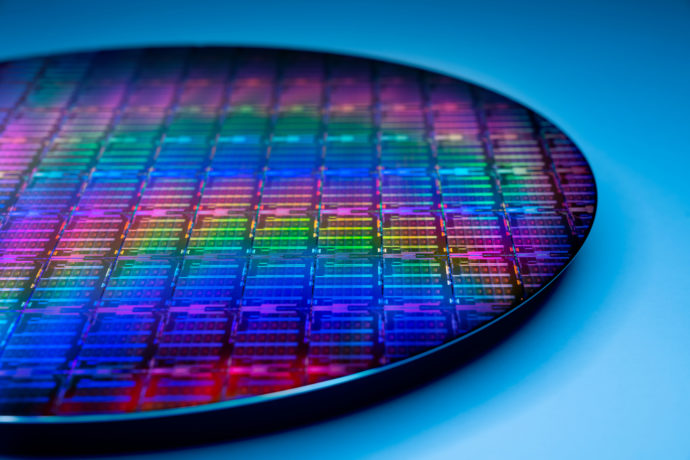

Intel CEO Pat Gelsinger's return has reignited the company's roadmaps as it re-emerges from years of stagnation in several of its key technologies. Gelsinger's deep engineering expertise has helped forge expansive plans that will substantially alter the company's DNA, transforming it from an IDM (integrated device manufacturer) that designs and produces its own chips into an even more sprawling behemoth that also designs and produces chips for others, even its competitors, as it executes on its new IDM 2.0 model.

To further this quest, Gelsinger is doing what was once unthinkable: the company is opening up its own treasure trove of IP to produce x86 chips for other firms based on Intel's own core designs. Gelsinger even revealed during the roundtable that the company would license future Performance-core (P-core) and Efficiency-core (E-core) designs, much like those found in the new Alder Lake processors.

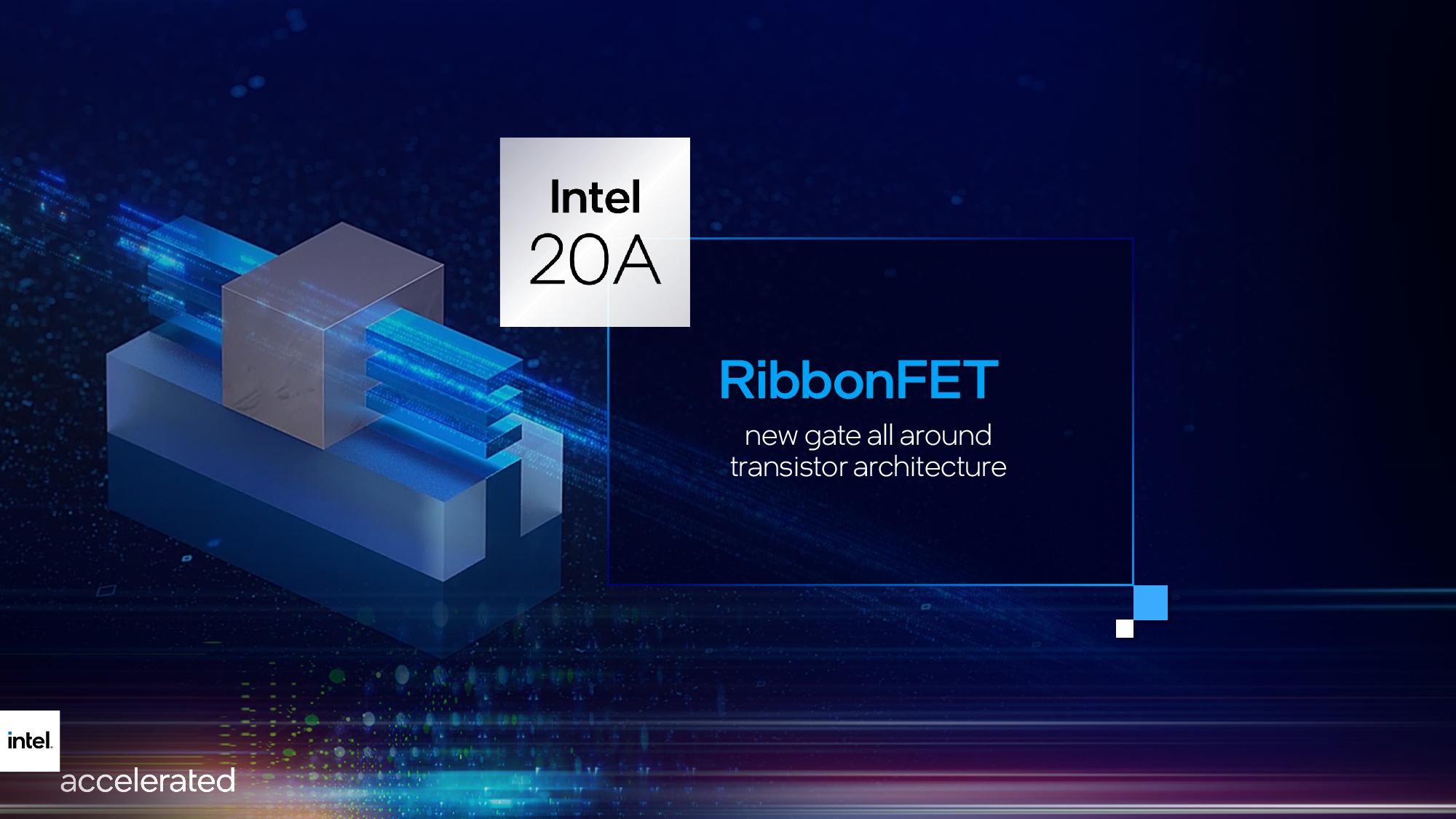

Intel's IDM 2.0 plan is shockingly ambitious in its scope, but it all hinges on the company's process technology. Gelsinger says the company is well on track to regain manufacturing superiority, particularly as it moves to new gate-all-around transistor designs, saying, "I think we're going to be comfortably ahead of anybody else in the industry."

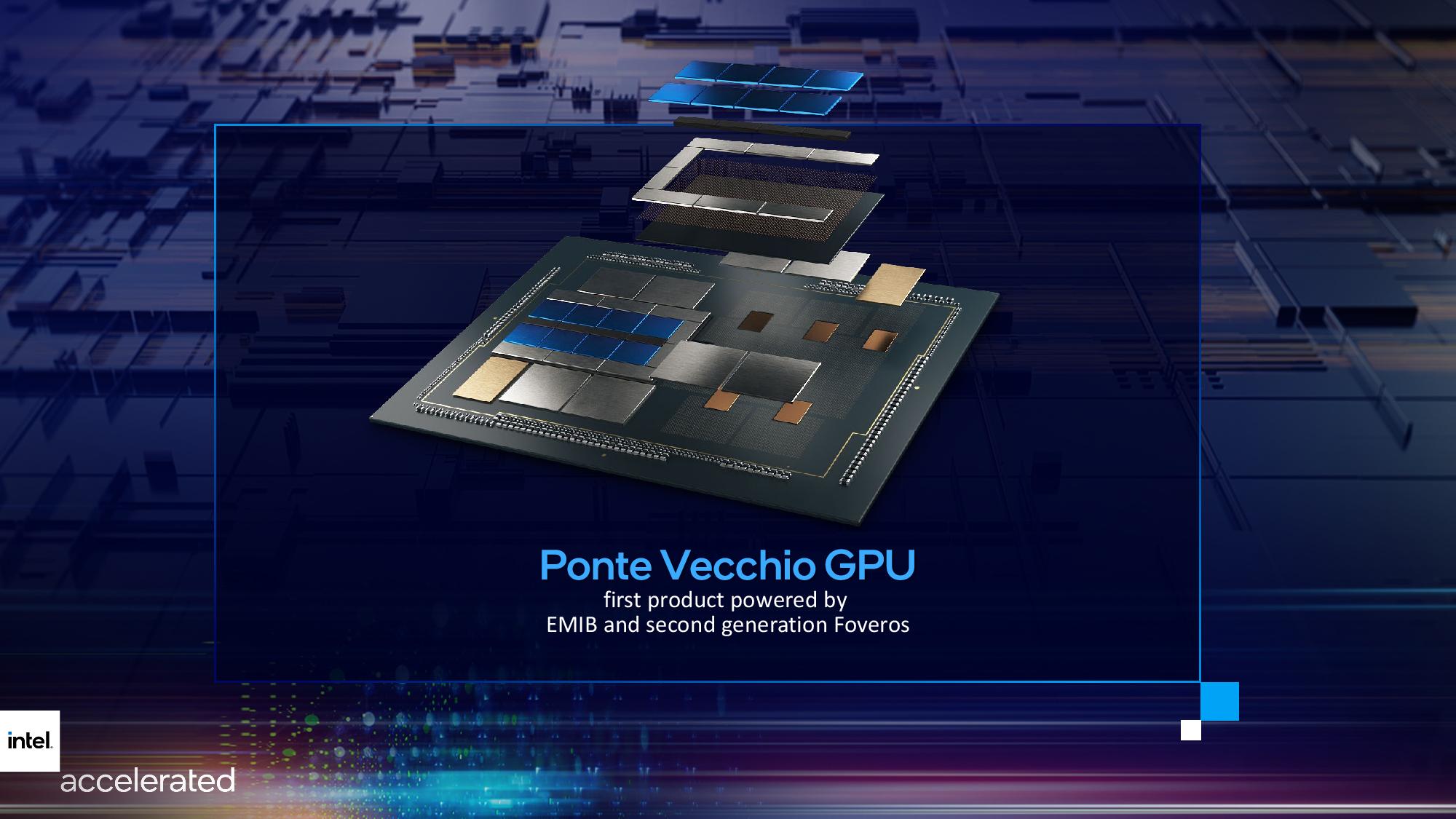

Intel now predicts it will maintain or surpass Moore's Law for the next decade. Gelsinger also coined the phrase "Super Moore's Law" to describe a strategy that uses advanced packaging techniques, like the 2.5D and 3D techniques enabled by the company's EMIB and Foveros technologies, to boost effective transistor density. "Moore's Law is alive and well. As I said in the keynote, until the periodic table is exhausted, we ain't done," said Gelsinger.

Intel has charted out its new path with cutting-edge tech, like RibbonFET and backside power delivery, but now it's time to pay for it: the company has to build new leading-edge factories to manufacture those nodes for its Intel Foundry Services (IFS) customers.

Intel has already started spending $20 billion of its own money on two fabs in the US, and plans to spend up to $100 billion on fabs in Europe, partially funded by the EU. But what if the industry enters a downturn and these multi-billion-dollar bets end up paying for fabs that aren't needed, leaving them underutilized and unprofitable? Gelsinger has a multi-pronged plan to avoid that possibility, and it partially hinges on building fab shells that aren't stocked with equipment until production demand is assured.


Gelsinger also said that the company has several options if an over-capacity situation presents itself, including regaining market share that Gelsinger admitted the company has lost in the desktop PC and server markets.

There's plenty of other interesting information in the transcript below, including details about Gelsinger's close relationship with Microsoft CEO Satya Nadella as they engage in a new "virtual vertical" strategy to work closely together on new projects.

The transcript, lightly edited for clarity, follows:

Linley Gwennap, The Linley Group: When you're talking about Moore's law, I'm wondering how you are defining Moore's Law these days? Is it transistor density? Is it transistors per dollar? Is it power/performance or a particular cadence? Or is it just, "we're still making progress at some rate?"

Gelsinger: I'm defining it as a doubling of transistors. Obviously, it's going from a single tile to multi-tile, but if you look at the transistor density of the nominal package size, we'll be able to double the number of transistors on that in a sustained way. And trust me, before my TD [Technology Development] team let me make those statements today, they analyzed this from quite a few dimensions, as well.

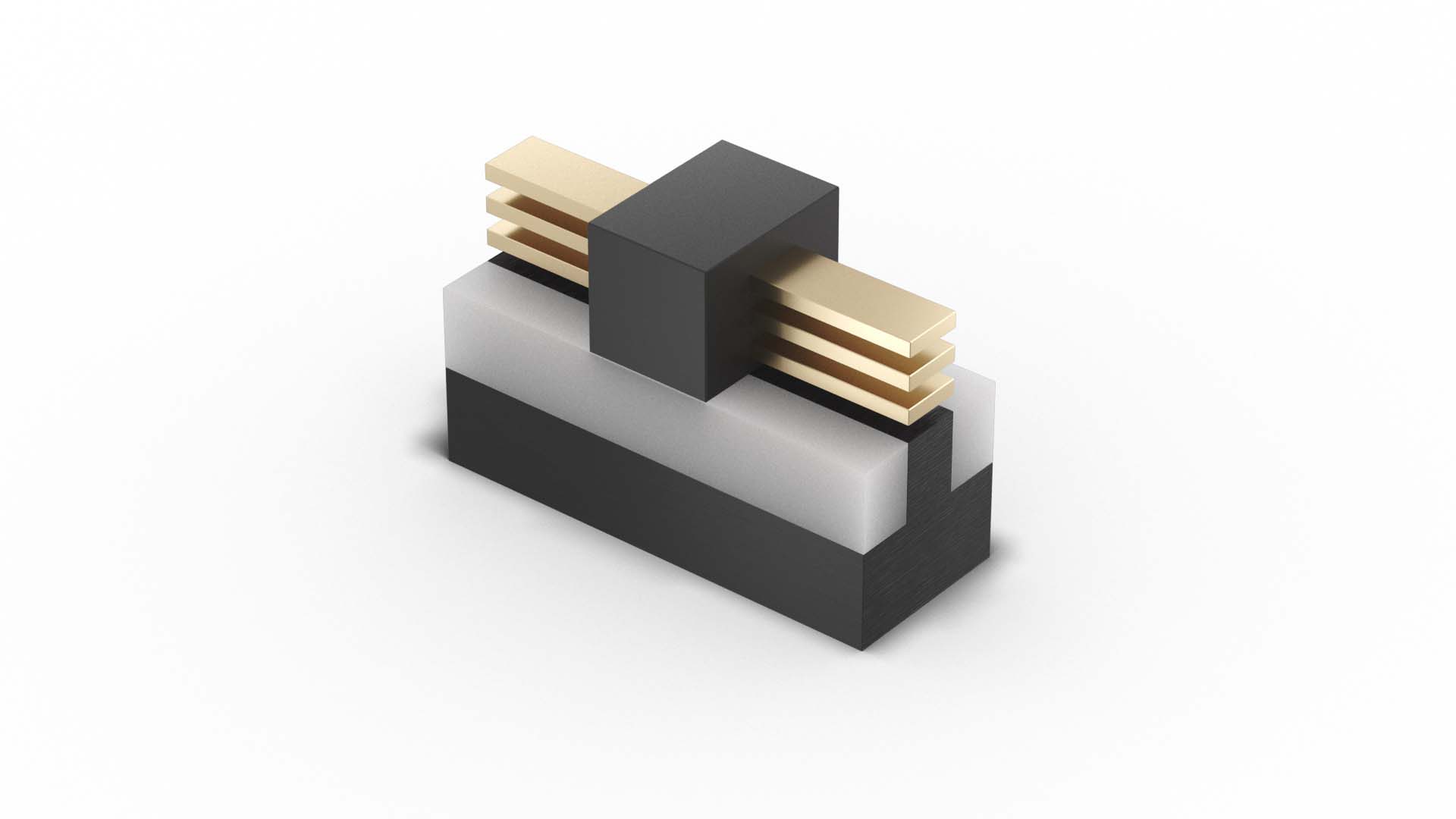

Now, if you click inside of it, we do expect [gains in] transistor density per unit of silicon area. We are on a pretty good curve right now with the move to RibbonFET, EUV solving lithography issues, and Power Via solving some of the power delivery issues. Transistor density per unit area by itself doesn't give you a doubling of the number of transistors every two years, but our expectation is that it's going to be on a pretty good curve for the next ten years.

When you then combine that with 2.5D and 3D packaging, that's when you go "Super Moore's Law," and you can start to break the tiles into smaller, more manufacturable components. With technologies like EMIB, we can tie them together with almost on-die interconnect characteristics.

You can also do a better job at doing power management, both across-die and in-die with Power Via, so you're not hitting your head on any of the power limits, even though power will be a big issue there. So, it really is the combination of lithography breakthroughs, power breakthroughs, new transistor structures, but most importantly, the 2.5D and 3D packaging technologies.
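The arithmetic behind this answer can be sketched in a few lines. To a first approximation, transistors per package equal transistor density per unit area times the total silicon area across all tiles, so if density alone improves by less than 2x per two-year period, 2.5D/3D packaging has to supply the rest by growing the silicon area per package. The 1.6x density gain below is a hypothetical placeholder, not an Intel figure, and the function names are illustrative only.

```python
def required_area_growth(density_gain: float, target_gain: float = 2.0) -> float:
    """Silicon-area growth per period needed to reach target_gain in transistors.

    Assumes transistors per package = density (per unit area) * silicon area,
    so target_gain = density_gain * area_gain.
    """
    if density_gain <= 0:
        raise ValueError("density_gain must be positive")
    return target_gain / density_gain


def compound(gain_per_period: float, periods: int) -> float:
    """Total growth after `periods` periods at a constant per-period gain."""
    return gain_per_period ** periods


# Hypothetical: density per unit area improves 1.6x every two years.
density_gain = 1.6
area_gain = required_area_growth(density_gain)  # 1.25x more silicon per package
# Ten years is five two-year periods; a sustained doubling compounds to 2**5 = 32x.
ten_year_total = compound(density_gain * area_gain, 5)
print(round(area_gain, 3), round(ten_year_total, 3))  # prints: 1.25 32.0
```

Under these assumptions, a density curve that falls short of 2x per generation can still yield a doubling per package, provided packaging adds the missing area without hitting the power limits Gelsinger mentions.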

Mark Hachman, PCWorld: Just a very simple high-level manufacturing question. Your statement about keeping up or superseding Moore's Law in the next decade? Is this something that you think is going to be unique to Intel, or do you think your competitors will also keep pace?

Gelsinger: I described four things today that I think enable us to go at Moore's Law or "Super Moore's Law." I said EUV, which is obviously available to the industry, but we're going to be advantaged at High NA, the second generation of EUV. We said RibbonFET, a fundamentally new transistor architecture. And as we look at where we are versus others, we think we're comfortably ahead of anybody else with a gate-all-around structure that we'll be putting into mass manufacturing in '24.

We feel comfortably ahead, and I would also say that almost since the planar transistor design, through strained silicon, metal gate, and FinFET, Intel has always led in transistor architecture. So I think we're meaningfully advantaged in RibbonFET. Power Via, nobody else has anything like it. I think we're fundamentally highly differentiated with our backside power delivery architecture and our packaging technology: Foveros, EMIB, Foveros Omni. These technologies are comfortably ahead of where others are in the industry.

So, if we bring that together, this idea with a multi-tile, multi-chip 2.5D or 3D packaging approach, we're going to be well above Moore's Law by the end of the decade.

I think we're going to be comfortably ahead of anybody else in the industry. It's not that nobody else is going to be participating, but I expect as we look at those coming together, we're just going to be adding advantage over these four domains as we look out over the rest of the decade.

And that's what we've said as we think about our IDM 2.0, that we'll get back to parity, get to leadership, and then have sustained leadership. We're laying down the tracks for sustained leadership across the process technology. Moore's Law is alive and well. As I said in the keynote, until the periodic table is exhausted, we ain't done.

Paul Alcorn, Tom's Hardware: Hello, Pat. Investing in fab capacity is one of the most capital-intensive exercises imaginable, and those big multi-billion dollar bets have to be placed years in advance. Can you tell us what type of measures Intel is taking to ensure that it doesn't over-invest and have excess fab production capacity in the event of an industry downturn?

Gelsinger: Great question. One thing is, you know, obviously, we do extraordinary amounts of market modeling, industry modeling for PC growth, server growth, network growth, graphics growth, etc. A lot of that goes into what we call 'LRP,' our five-year long-range plan. So, we want to have a better bead on where the market is going than anyone else.

But then, against that, we're applying what we call smart capital. For instance, right now I lust for more fab capacity; Intel has under-invested for a number of years. Intel has always had a spare shell, as we would call it, and one of the principles of smart capital is that you always have spare shells. [A shell is effectively a fab that doesn't have tools installed.]

If you take a modern Intel 20A fab, it's going to be right around $10 billion, but you invest only about $2 billion in the first two years. So it's actually a fairly capital-efficient period where you get a lot of time benefit, two years for only $2 billion, and you populate the fab with equipment in years two to three-and-a-half, when it comes online. Part of our initiative is to get greenfield capacity in place so we can build shells and have more flexibility and choice over the timing of the actual capital build. So the first element is to get more shell capacity in place.
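The shell-first approach can be expressed as a simple capital schedule. Using the round numbers Gelsinger quotes (about $10 billion for a 20A fab, about $2 billion of it in the first two years), the sketch below computes how much capital is deferred until tools are installed and what share of the total is committed if the project pauses at the shell stage. The class and method names are hypothetical, and the model deliberately ignores financing costs, depreciation, and the real month-by-month spending curve.

```python
from dataclasses import dataclass


@dataclass
class FabPlan:
    """Hypothetical two-stage fab budget: shell first, equipment later."""

    total_cost_b: float  # total fab cost, billions of USD
    shell_cost_b: float  # shell (building without tools), billions of USD

    def deferred_capital_b(self) -> float:
        """Capital that can wait until tool install (the equipment spend)."""
        return self.total_cost_b - self.shell_cost_b

    def committed_fraction_if_paused(self) -> float:
        """Share of total cost already spent if work stops at the shell."""
        return self.shell_cost_b / self.total_cost_b


plan = FabPlan(total_cost_b=10.0, shell_cost_b=2.0)
print(plan.deferred_capital_b())            # 8.0
print(plan.committed_fraction_if_paused())  # 0.2
```

In this framing, the roughly $8 billion equipment spend is the portion whose timing can be matched to demand, which is the flexibility the spare-shell principle is meant to buy.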

Secondly, with our IDM 2.0 strategy, we're going to consistently use foundries. And with that, maybe a quarter of my capacity is from my external foundry partners as well. But I'm also going to have tiles [chip components] that run on [produced at] internal and external foundries, so that I'm going to have flexibility as I move up or down that curve, to be able to flex things in or flex things out appropriately.

Third, we've said we're going to capitalize some of these expanded capabilities based on government investments as well as customer investments. If a foundry customer wants 10K wafer starts per week of capacity from us, wow, that's a whole fab! And we're going to have prepays and other contractual relationships with them that offset our capital risk in a fair way. This is exactly what TSMC, Samsung, and GlobalFoundries are doing now, so we'll leverage their capacity. Clearly, you've heard of the CHIPS Act and the equivalent CHIPS Act in Europe. There's government willingness to invest in this area, so that's going to help us moderate some of that capital risk.

So those are the three tenets of smart capital. Now, underneath that, let's say, miserably, that I have too much [production] capacity. That's something I can't even fathom for the next couple of years. But let's say that we're actually in a situation where I have a little bit too much capacity. What am I going to do?

Well, I'm going to go win more market share. We've had declines in market share in some areas, we're going to go apply that capital to gain more share back in PC, servers, and other places. If we're not capacity constrained, we'll go gain share. And we believe we're in a position, as our products get better, to do that in a very profitable way.

You know, secondly, we'll go gain more foundry customers. We have an exciting new business, and right now the whole foundry industry is highly constrained on capacity, so we'll go win more foundry business.

Or third, I'll flex more capacity from our external foundries to internal. The strategy that we're setting up gives me extraordinary capital efficiency as well as capital flexibility. Ultimately, all three of the options I just told you about are highly margin-generative for the company, whether I'm gaining market share, gaining more foundry customers, or moving from external to internal at a much better margin structure.

So overall, this is how we're laying out our smart capital strategy. And as that comes to scale over the next couple of years, I think it just positions us in a phenomenal way.

Timothy Prickett Morgan, The Next Platform: I'm trying to understand the new Aurora system; the original machine was supposed to be north of an exaFLOPS and $500 million. Now it's two exaFLOPS, or in excess of two exaFLOPS. You've got a $300 million write-off for federal systems coming in the fourth quarter. Is that a write-off of the original investment? Is Argonne getting the deal of the century on a two-exaFLOPS machine? Pat, help me understand how this is working.

Gelsinger: You know, clearly, with the original concept of Aurora, we've had some redefinitions of the timelines and the specs associated with the project. And obviously, we've moved out some of the earlier dates from when we first started talking about the Aurora project and changed the timelines for a variety of reasons. Some of those changes will lead to the write-off that we're announcing right now. Basically, the way the contract is structured, the moment we deliver a certain thing, we incur some of these write-offs simply from the accounting rules associated with it. As we start delivering it [Aurora], some of those will likely get reversed next year as we start ramping up the yields of the products. So some of it just comes down to how we account for it and how the contracts were structured.

On the two versus one exaFLOP, largely Ponte Vecchio, the core of the machine, is outperforming the original contractual milestones. So, when we set it up to have a certain number of processors, and you can go do the math of what two exaFLOPS is, we essentially overbuilt the number of sockets required to comfortably exceed one exaFLOP. You know, now that Ponte Vecchio is coming in well ahead of those performance objectives for some of the workloads that are in the contract, we're now comfortably over two exaFLOPS. So, it's pretty exciting at that point, that it will go from one to two pretty fast.

But to me, the other thing that's really exciting in this space is our Zetta Initiative. We've said that we're going to be the first to zettascale by a wide margin. And as part of the Zetta Initiative, we're laying out what we have to do in the processor, the fabric, the interconnect, and the memory architecture, and what we have to do for accelerators and the software architecture to get there. So, zettascale in 2027: a huge internal initiative that's going to bring many of our technologies together. 1000X in five years, that's pretty phenomenal.
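It helps to translate "1000X in five years" into a yearly rate. Zettascale means 10^21 FLOPS, or 1,000 exaFLOPS. Assuming smooth exponential growth (a simplification, since real systems arrive in discrete steps), 1000x over five years works out to roughly 4x per year, or a doubling about every six months, far faster than the two-year doubling usually associated with Moore's Law. The function names are illustrative.

```python
import math


def implied_annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def implied_doubling_time_years(total_factor: float, years: float) -> float:
    """Years per doubling implied by total_factor growth over `years`."""
    return years / math.log2(total_factor)


annual = implied_annual_factor(1000.0, 5.0)          # about 3.98x per year
doubling = implied_doubling_time_years(1000.0, 5.0)  # about 0.5 years
print(f"{annual:.2f}x per year, doubling every {doubling * 12:.1f} months")
# prints: 3.98x per year, doubling every 6.0 months
```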

Ian Cutress, AnandTech: Earlier in the year, as part of this IDM 2.0 initiative, one of the big announcements was a collaboration with IBM. IBM is obviously big in the field, with lots of manufacturing expertise. But we were promised more color on that partnership by the end of the year. Is there any chance you can provide that color today?

Gelsinger: I don't have a whole lot more to say, so let me just characterize it a little bit. It's one of the things I think you will be hearing more about from us before the year is out. But basically, we're partnering with IBM. Think of them as another components research partner with us, in advanced semiconductor research as well as advanced packaging research. So, we're partnering with IBM in those areas. IBM is also looking for a partner on their manufacturing initiatives and what they require for their products and product lines, and we're aligning together on many of the proposals that you've seen, such as the RAMP-C proposal. We were just granted phase one of that, and IBM is partnering with us in those areas. So, it's a fairly broad relationship, and you'll be hearing more from us before year-end, I expect.

Unknown participant: So, Pat, you've been talking a lot about IDM 2.0 and the concept, but I'm wondering what makes it different from 1.0? What elevates it above buzzword status? I've come to believe that IDM, fabless, and fab-light concepts are really distinctions with very little difference.

Gelsinger: Well, for us, when we talk about IDM 2.0, we talk about three legs. One is a recommitment to IDM 1.0: design and manufacturing at scale. Second is the broad leveraging of the foundry ecosystem. Third, and most importantly, is becoming a foundry: swinging open the doors of Intel fabs, packaging, and our technology portfolio, and being wide open to engage with the industry, with customers designing on our platforms with our IP, and opening up the x86 architecture as well as all the other IP blocks in graphics, I/O, memory, etc., that we're doing.

The third element, to me, is what makes IDM 2.0 IDM 2.0. The other thing I'd add to that, Dan, and I use this language inside and outside the company, is that IDM makes IFS (Intel Foundry Services) better, and IFS makes IDM better.

A quick example of both: with IDM, my foundry customers get to leverage all of the R&D and IP that I'm creating through my internal design, right? Other foundries have to go create all of that. My foundry customers get to leverage tens of billions of capital, many billions of R&D, and the most advanced components research on the planet, essentially for free. Making x86 or the design blocks available for foundry customers is an extraordinary asset. At the same time, foundry is driving us to do a better job on IDM. We're engaging with the third-party IP ecosystem and the EDA tool vendors more aggressively.

For instance, our engagement with Qualcomm. They're driving us to do a more aggressive optimization for power/performance than our more performance-centric product lines would be, so they're making IDM better by the engagement with IFS customers, standardized PDKs, and other things. So IDM and IFS, if this really gets rolling as I'm envisioning it to be, they're powerfully going to be reinforcing each other.

Marco Chiappetta, Hot Hardware: So based on what your competitors are doing in the client space, it seems like a tighter coupling with software and operating systems is necessary to extract maximum performance and efficiency from future platforms. Is Intel's engagement with Microsoft and other OS providers changing at all? And will the OS providers perhaps affect how processors are designed moving forward?

Gelsinger: I'm going to comment, and then I'll ask Greg to help a little bit. The case in point right now is what we just did with Intel Bridge Technology. Panos [Panay] from Microsoft was here, and they took a major piece of technology and are making it now part of standard Windows that they're shipping. And I'll say, it's not the first time that Microsoft has done that with Intel. But it's been a long time since we've had that major functionality.

Between Satya [Nadella] and me, Microsoft and Intel are redefining our relationship, and we've simply termed it "virtual vertical." How do we bring the same benefits of a much more intimate, dedicated partnership to each other to revitalize and energize the PC ecosystem? I think Bridge Technology is a great example; it brings the entire Android application ecosystem into the PC environment. So, this is a great example, and more such things are well underway. So Greg, your thoughts?

Greg Lavender, Intel CTO and SVP/GM: So I'd say I have hundreds of resources in software engineering working very closely with Microsoft every day all day, every night now, with the core internals of Windows optimized for our platforms. We co-developed this [garbled] capability that we talked about, which provides machine learning heuristics to the Windows scheduler for our E-core and P-core capabilities. So, we are basically looking, real-time, during execution of Windows and applications on Windows to assign those threads to the most optimal cores at the right time.[…]
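To make the idea of steering threads to the "right" core type concrete, here is a deliberately simplified sketch. It is not how Windows or Intel's hardware feedback actually works. It assumes each thread already carries a hypothetical score (higher means it benefits more from a P-core), that each core runs one thread, and it greedily fills P-cores with the highest-scoring threads and E-cores with the next ones. A real scheduler re-evaluates continuously, weighs priority, power, and thermal state, and migrates threads as their behavior changes.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    name: str
    p_core_score: float  # hypothetical hint: higher = benefits more from a P-core


def assign_threads(threads: list[Thread], p_cores: int, e_cores: int) -> dict[str, str]:
    """Greedy toy placement: best-scoring threads to P-cores, next to E-cores.

    One thread per core; threads beyond the available cores are marked waiting.
    """
    ranked = sorted(threads, key=lambda t: t.p_core_score, reverse=True)
    placement: dict[str, str] = {}
    for i, thread in enumerate(ranked):
        if i < p_cores:
            placement[thread.name] = "P-core"
        elif i < p_cores + e_cores:
            placement[thread.name] = "E-core"
        else:
            placement[thread.name] = "waiting"
    return placement


threads = [
    Thread("game_render", 0.9),
    Thread("background_sync", 0.1),
    Thread("compiler", 0.7),
]
print(assign_threads(threads, p_cores=1, e_cores=1))
# prints: {'game_render': 'P-core', 'compiler': 'E-core', 'background_sync': 'waiting'}
```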

Cutress: One of the goals of IFS is to enable Intel's extensive catalog of IP for foundry customers looking to build on their designs. What I'm going to ask here is very specific, because it's kind of been hinted at, but not explicitly stated: (a) Will Intel be offering its x86 IP to customers, (b) will that be as an ISA license or as a core license, (c) will that IP only be able to be manufactured at Intel or is there scope for other foundries, and (d) will there be an appreciable lag between the x86 designs that Intel uses in its leading-edge products and what IFS customers will use?

Gelsinger: A lot in this, so let's tease that apart a bit. What we've said is that we are going to make x86 cores available as standard IP blocks on Intel Foundry Services. So, if you build on Intel processes, we will have versions of the x86 cores available. I do say cores, because they're going to be based on the cores that we're building, E-cores and P-cores, for our standard product lines, so we'll be making them available. So you could imagine an array of E-cores or P-cores as a Xeon-like product that's combined with unique IP for a cloud vendor. Well, that'd be a great example of where we'd have what I call hybrid designs. It's not their design, it's not our design, it's bringing those pieces together.

We're working on the roadmap of cores right now. So which ones become available, big cores, little cores, which ones first, the timing for those. Also, there's a fair amount of work to enable the x86 cores with the ecosystem. And as you all know very well, the Arm ecosystem has developed interface standards and other ways to composite the entire design fabric. So right now, we're working on exactly that. A fair amount of the IP world is using Arm constructs versus x86 constructs. And these are interrupt models, things like memory ordering models, and there's a variety of those differences that we're haggling through right now.

And working with customers, we have quite a lot of interest from customers for these cores. So we're working with them, picking some of the first customers and they have a range of interests from fairly embedded-like use cases up to HPC use-cases with data center and cloud customers somewhat in the middle of those. So, a whole range of different applications, but we're working through the details of that now.

We clearly realize the criticality of making this work inside our design partners' and customers' EDA environments and with the rest of the IP that they're bringing to the discussion. Does that help to frame it better?

Cutress: I think the last piece of that is going to be understanding the lag between what you use yourself and what the customers use.

Gelsinger: There's going to be minimal lag. For instance, my internal IP teams act like a Synopsys IP team, because they're delivering, for instance, an E-core or P-core to the Xeon design team or to the Meteor Lake design team, whichever one it would be. Largely, as those cores become available, we're going to be making them available to our internal teams and our external customers somewhat coincidentally going forward. So, there's not a lag; we're making the IP available, and as customers start firming up their design approaches, there will be a more mature answer to your question, but we do not expect that there will be any meaningful lag, if any.

Gelsinger's closing comments:

I look forward to seeing you all in person again. I hope that you're feeling today like what's old is new again. Many of the characteristics that Intel was famous for in the past, we've lost. Some of those genetics, we are rebuilding those in real-time.

I think Intel On today was a good example of that step forward. And we look forward to building on the great relationships with many of you as we look to the future. Today was a great day for geeks. Thanks for joining us.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


-Fran- Those were some very interesting answers, for sure.

So Intel is not quite licensing x86, but doing the same thing AMD is doing: trying its best to offer the same exact "bleeding edge" design IP it would use for its own CPU/accelerator lines. That's interesting and highly dangerous to AMD. The Qualcomm example was also interesting; reading between the lines, most of Intel's low-power nodes will probably (or most likely) be used by Qualcomm rather than by Intel itself. And then there's the awkward explanation around Moore's Law; I'm not sure it should be taken seriously, but I guess I can understand what he's trying to say.

What I'm a bit wary of is this "Wintel is back with a vengeance" hint. Sure, get in bed with MS, but don't do your monopolistic garbage anymore, Intel, please. Also, I hope AMD can keep MS happy, so they keep getting MS's business for the consoles and Surface going forward.

Regards.

warezme Intel has been stagnant for so many generations of chips because it was on top and felt no need to move beyond 4 cores and a slight bump in performance while raking in the cash. It got caught with its pants down. Catching up now and reaching competitiveness isn't necessarily "Super Moore's Law" or whatever they want to call it. It's finally providing the tangible performance and feature updates they ignored for generations. I have no sympathy for them.

waltc3 Interesting to see Intel finally interested in the future (since the present is not so hot for them...;)). Neither Intel nor AMD has manufactured and sold "x86" CPUs for many years (something Apple can't quite figure out). The CPUs they sell are compatible with the x86 instruction set but not even close to being limited by it. All of the bunk you may read on Mac sites about how "limited" current x86 CPUs are is pure bunk. It applied in the '80s and '90s, but it hasn't for a long time. It's been a long time since either company sold chips like the 8086/8088 and 80186/286/386/486, which were authentic "x86" CPUs in their day. Anyway, it's good to see Intel hustling for a change...;)

passivecool "We feel comfortably ahead…"

This has been the problem of the last ten years. Intel got way too comfortable being ahead.

Now I am comfortably sure that they have felt the breath of the wolf on the back of their neck and have been feeling anything but comfortable.

But since "comfortable" was quoted seven times in the article, we can all rest assured that Intel intends to use the crushing force of many billions to get itself comfortable again.

So that it can go back to sleep, undisturbed, like a man rudely woken by a loud neighbor or a bad dream.

PapaCrazy (replying to warezme):

The quad-core limit upset enthusiasts like us who frequent these kinds of forums, but I'm convinced their overall market stagnation came from a neglect of efficiency. Servers were sucking down more watts, requiring more cooling and higher energy bills than necessary, in addition to their inflated HEDT/Xeon prices. Mobile chips (in a laptop/device market that dwarfs gaming PCs/workstations) have been overheating, wearing down batteries, and possibly causing higher failure rates than previous generations. This lack of efficiency prompted Apple to go from customer to competitor in a short time, and led to AMD's domination, particularly in mobile. While I might entertain the idea of an Alder Lake desktop, I will accept nothing but an AMD CPU in the next laptop I purchase. They will not catch up to TSMC, and all of the companies TSMC is producing chips for, until their node (regardless of the nm figure in its name) is at least as efficient.

Replying to passivecool:

A classic case of resting on laurels. Hopefully other American tech companies, and Intel itself, study it as a cautionary tale.

korekan They realize that with AI in chips going forward, x86 might run on Arm and vice versa, with minimal performance difference. They will be doomed once that is fully released, just like BlackBerry and Nokia.

Howardohyea Interesting to see a deep dive and interview with Intel's CEO; it reminds me of the earlier article with an interview with Bob Swan. I hope more of these articles show up, with more companies :)

cyrusfox (replying to PapaCrazy):

I have an AMD 4700U laptop specced with 32GB of DDR4 and a 1TB M.2 WD Black SN750, and it flies! But if I had to do it again, I wouldn't buy it, nor would I recommend it, due to the persistent bugs. The best known are the USB driver issues (random disconnects, really great when offloading pictures from a photo shoot). The webcam also randomly stops working, and the same goes for the mic and speakers.

Even the crappy Intel Celeron platforms that I subject the kids to show much better stability. I can't wait for Alder Lake and am especially excited about what the E-cores can do there (Alder Lake's switching to efficiency cores makes way more sense on mobile). Hopefully you have better luck than me, but I will sidestep AMD platforms until they can write better drivers and support their platform to a better level of stability. Intel has us spoiled, expecting everything to just work thanks to the amazing driver support and QC they put into their platform before launch. I really took that stability for granted until programs started crashing at critical times; it takes me back 20 years...

PapaCrazy (replying to cyrusfox):

Dang, I had heard about the USB issue on X570 motherboards, but assumed that wasn't an issue on the laptops. Thank you for the heads-up. I had my eye on the upcoming Asus 16" OLEDs that use the AMD 5900HX, but I will look further into the issues before making any decisions. Stability is my #1 priority.

watzupken

I agree. I was certainly very annoyed at Intel for stagnating the CPU market with more than a decade of dual- and quad-core CPUs, and for their extensive toll gates to get a more feature-rich CPU. For example, if you want Hyper-Threading, you pay more. More cache? Sure, pay more. Overclocking? Pay more for a K-series processor plus a Z-series motherboard. Comparatively, the product stack is a lot simpler with AMD's Ryzen. You generally buy a chip, and it is unlocked for CPU and memory overclocking without the need for a top-end chipset. Plus, you generally get simultaneous multithreading out of the gate, and cache sizes that differ with the number of cores rather than being artificially limited.

Replying to PapaCrazy:

I also agree that Intel is losing market share mostly due to the poor power efficiency of its chips, which I believe is why big companies are moving to create their own custom SoCs with Arm cores. If anything, Apple's M1 chips showed comparable performance with substantial power savings over any x86 processor. I decided to try the M1 at the start of the year, and seriously, I've never looked back. It may not have amazing single-core performance, for example, but at no point do I feel that it is slow. The battery life and the lack of any active cooling are a win for me. Also, at this point, I think AMD offers more bang for the buck than Intel's Tiger Lake, considering that AMD offers more cores and better multithreaded performance in its U-series processors, and its H-series parts are cheaper than the Intel equivalents by quite a bit. Alder Lake may change this, but I feel Intel's priority of squeezing out more performance is not going to change the fact that the chips will still be more power-hungry and run hotter under load.

Intel has lost their competitive advantage in their fab, and I feel they are unlikely to win it back anytime soon. Their competitors were chugging along aggressively, while Intel was sleeping then. And even now, their competitors are still aggressively pushing forward.