Nvidia's RTX 40-Series Laptops Don't Bode Well for RTX 4060, 4050 Desktop GPUs

Prepare to be disappointed

Nvidia's Ada Lovelace architecture ushers in a new level of performance at the top of the stack, with the RTX 4090 besting the previous generation RTX 3090 Ti by 52% on average in our rasterization benchmarks, and 70% in ray tracing benchmarks — both at 4K, naturally. The 4090 now sits comfortably atop our GPU benchmarks hierarchy and ranks as one of the best graphics cards around, at least if you have deep pockets.

Unfortunately, the step down from the 4090 to the RTX 4080 is rather precipitous, dropping performance by 23% for rasterization and 30% for ray tracing. Dropping down another level to the new RTX 4070 Ti knocks an additional 22% off the performance relative to the 4080. If you're keeping track (and we definitely like to keep score), that means the third-string Ada card sporting the AD104 GPU is slower than the previous generation 3090 Ti, never mind Nvidia's claims to the contrary that rely on benchmarks using DLSS 3's Frame Generation.
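For anyone who wants to check that score, here is a rough sketch of the arithmetic in Python. It assumes the 22% drop from the 4080 to the 4070 Ti applies to rasterization (the text doesn't say which workload), and the variable names are ours for illustration only.

```python
# Relative rasterization performance, normalized to the RTX 3090 Ti = 1.00.
# Percentages come from the article; chaining them is our own arithmetic.
rtx_3090_ti = 1.00
rtx_4090 = rtx_3090_ti * (1 + 0.52)    # 52% faster than the 3090 Ti
rtx_4080 = rtx_4090 * (1 - 0.23)       # 23% slower than the 4090
rtx_4070_ti = rtx_4080 * (1 - 0.22)    # 22% slower than the 4080 (assumed rasterization)

for name, score in [("RTX 4090", rtx_4090),
                    ("RTX 4080", rtx_4080),
                    ("RTX 4070 Ti", rtx_4070_ti)]:
    print(f"{name}: {score:.2f}x the RTX 3090 Ti")
```

Under those assumptions the 4070 Ti lands at roughly 0.91x the 3090 Ti, which is where the "slower than the previous generation" conclusion comes from.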

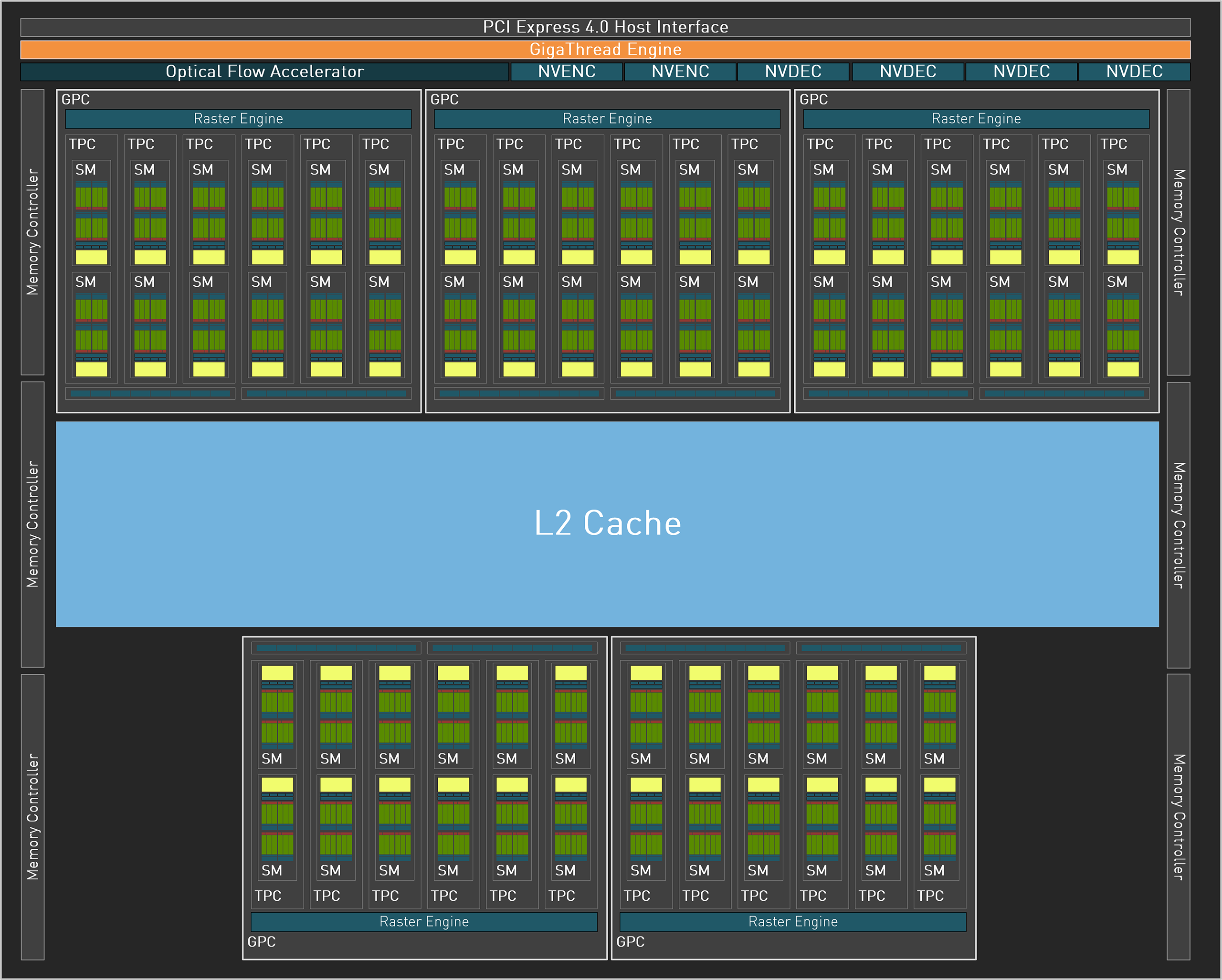

Perhaps more alarming with the RTX 4070 Ti is that it only has a 192-bit memory interface. It still has 12GB of GDDR6X memory, and the large L2 cache in general means that the narrower bus isn't a deal killer, but things don't look so good as we eye future lower-tier RTX 40-series parts like the 4060 and 4050.

Nvidia recently announced the full line of RTX 40-series laptop GPUs, ranging from the RTX 4090 mobile that uses the AD103 GPU (basically a mobile 4080) down to the anemic-sounding RTX 4050. Here's the full list of specs for the mobile parts.

| Graphics Card | RTX 4090 for Laptops | RTX 4080 for Laptops | RTX 4070 for Laptops | RTX 4060 for Laptops | RTX 4050 for Laptops |
|---|---|---|---|---|---|
| GPU | AD103 | AD104 | AD106? | AD106? | AD107? |
| Process Technology | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N |
| Transistors (Billion) | 45.9 | 35.8 | ? | ? | ? |
| Die size (mm^2) | 378.6 | 294.5 | ? | ? | ? |
| SMs | 76 | 58 | 36 | 24 | 20 |
| GPU Shaders | 9728 | 7424 | 4608 | 3072 | 2560 |
| Tensor Cores | 304 | 232 | 144 | 96 | 80 |
| Ray Tracing "Cores" | 76 | 58 | 36 | 24 | 20 |
| Boost Clock (MHz) | 1455-2040 | 1350-2280 | 1230-2175 | 1470-2370 | 1605-2370 |
| VRAM Speed (Gbps) | 18? | 18? | 18? | 18? | 18? |
| VRAM (GB) | 16 | 12 | 8 | 8 | 6 |
| VRAM Bus Width (bits) | 256 | 192 | 128 | 128 | 96 |
| L2 Cache (MB) | 64 | 48 | 32 | 32 | 24 |
| ROPs | 112 | 80 | 48 | 32 | 32 |
| TMUs | 304 | 232 | 144 | 96 | 80 |
| TFLOPS FP32 (Boost) | 28.3-39.7 | 20.0-33.9 | 11.3-20.0 | 9.0-14.6 | 8.2-12.1 |
| TFLOPS FP16 (FP8) | 226-318 (453-635) | 160-271 (321-542) | 91-160 (181-321) | 72-116 (145-233) | 66-97 (131-194) |
| Bandwidth (GBps) | 576 | 432 | 288 | 288 | 216 |
| TDP (watts) | 80-150 | 60-150 | 35-115 | 35-115 | 35-115 |
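The bandwidth and FP32 rows in the table follow from the raw specs, and a short Python sketch shows how. It uses the common rules of thumb (bus width in bytes times per-pin data rate, and two FLOPs per shader per clock for a fused multiply-add). The function names are ours, and the 18 Gbps memory speed is the unconfirmed figure from the table.

```python
def bandwidth_gbps(bus_width_bits: int, vram_speed_gbps: float) -> float:
    """Memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * vram_speed_gbps


def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, counting one FMA (2 FLOPs) per shader per clock."""
    return shaders * 2 * clock_mhz / 1e6


# (shaders, minimum boost MHz, maximum boost MHz, bus width in bits), from the table
laptop_gpus = {
    "RTX 4090": (9728, 1455, 2040, 256),
    "RTX 4080": (7424, 1350, 2280, 192),
    "RTX 4070": (4608, 1230, 2175, 128),
    "RTX 4060": (3072, 1470, 2370, 128),
    "RTX 4050": (2560, 1605, 2370, 96),
}

VRAM_SPEED_GBPS = 18  # assumed; the table marks this value as unconfirmed

for name, (shaders, clk_lo, clk_hi, bus) in laptop_gpus.items():
    print(f"{name} for Laptops: "
          f"{fp32_tflops(shaders, clk_lo):.1f}-{fp32_tflops(shaders, clk_hi):.1f} TFLOPS, "
          f"{bandwidth_gbps(bus, VRAM_SPEED_GBPS):.0f} GB/s")
```

The wide TFLOPS ranges come entirely from the boost clock ranges, which in turn depend on the power limit a given laptop allows.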

It's a reasonably safe bet that the desktop RTX 4070 will use the same AD104 as the RTX 4070 Ti, just with fewer SMs and shaders. Desktop RTX 4060 Ti, assuming we get that anytime soon, may or may not use AD104; the only other option would presumably be the AD106 GPU used in the mobile 4070/4060. And that's a problem.

The previous generation RTX 3060 Ti came with 8GB of GDDR6 on a 256-bit interface. We weren't particularly pleased with the lack of VRAM, especially when AMD started shipping RX 6700 XT (and later 6750 XT) with 12GB VRAM. Nvidia basically did a course correction with the RTX 3060 and gave it 12GB VRAM, making it a nice step up from the previous RTX 2060 — and even the 2060 eventually saw 12GB models, though prices made them mostly unattractive.

Now we're talking about RTX 4060 most likely going back to 8GB, and that would suck. There are plenty of games now that can exceed 8GB of VRAM use, and that number will only grow in the next two years. But Nvidia doesn't have many other options, since GDDR6 and GDDR6X memory capacities top out at 2GB per 32-bit channel.

There's potential to do "clamshell" mode with two memory chips per channel, one on each side of the PCB, but that's pretty messy and not something we'd expect to see in a mainstream GPU. That could get the 128-bit interface up to 16GB of VRAM, which again would be odd as the higher-tier parts like the 4070 Ti only have 12GB. Still, that sounds better than an RTX 4060 8GB model to me!

And what about the RTX 4050? Maybe Nvidia will stick with the 128-bit interface on the AD106 GPU and just skip using AD107 on a desktop part — that's basically what happened with GA107 that was almost exclusively used for laptop RTX 3050. But if it does try to use AD107 in a desktop, it would only have up to 6GB VRAM, again with clamshell VRAM being a potential out.
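The capacity math behind those numbers is simple enough to sketch in Python. This assumes the 2GB-per-32-bit-channel ceiling mentioned above and treats clamshell mode as exactly two chips per channel. The function and constant names are ours.

```python
GB_PER_CHIP = 2            # largest GDDR6/GDDR6X capacity per 32-bit channel, per the article
CHANNEL_WIDTH_BITS = 32


def max_vram_gb(bus_width_bits: int, clamshell: bool = False) -> int:
    """Maximum VRAM for a given bus width, optionally with two chips per channel."""
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    chips_per_channel = 2 if clamshell else 1
    return channels * chips_per_channel * GB_PER_CHIP


for bus in (192, 128, 96):
    print(f"{bus}-bit: {max_vram_gb(bus)}GB standard, "
          f"{max_vram_gb(bus, clamshell=True)}GB clamshell")
```

That is why a 128-bit AD106 card is stuck choosing between 8GB and 16GB, and a 96-bit AD107 card between 6GB and 12GB, with nothing in between.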

It's not just the memory capacities that raise some concern. We said in the RTX 4070 Ti review that performance wasn't bad, but it also wasn't amazing. It's basically a cheaper take on an RTX 3090, with half the VRAM and lower power use. The 4070 Ti gets by with 60 Streaming Multiprocessors (SMs) and 7680 CUDA cores (GPU shaders), slightly more than the outgoing RTX 3070 Ti. But AD106 could top out at just 40 SMs, maybe even 36 SMs, which would put it in similar territory to the RTX 3060 Ti on core counts, leaving only GPU clocks as a performance boost.

Put those two things together — insufficient VRAM and relatively minor increases in GPU shader counts — and we're likely looking at modest performance improvements compared to the previous Ampere generation GPUs.

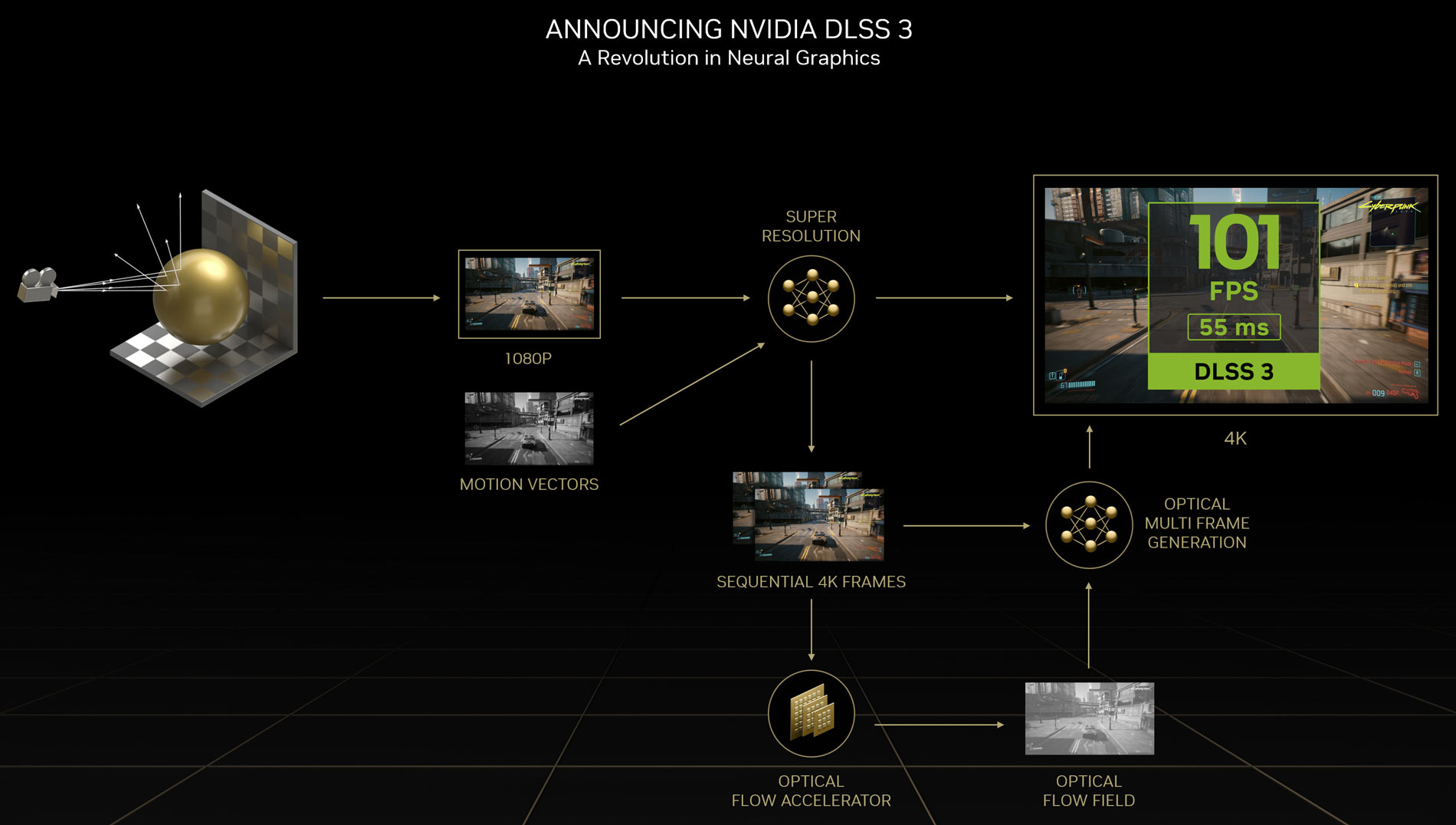

Nvidia will then trot out DLSS 3 performance improvements, which only apply to a subset of games and also don't offer true performance increases, and things start to sound even worse. Part of the benefit of having a GPU that can run games at 120 fps today is that, as games get more demanding, it will still be able to do 60 fps in most games a few years down the road. But what happens when those aren't real framerates?

Let's assume a game running at 120 fps courtesy of DLSS 3's Frame Generation technology, with a base performance of 70 fps. All is well and good for now, but down the road the base performance will drop below 40 fps as games become more demanding, and eventually it will fall below 30 fps. What we've experienced is that Frame Generation with a base framerate of less than 30 fps still feels like sub-30 fps, even if the monitor is getting twice as many frame updates per second.

That same logic applies to higher framerates as well, so DLSS 3 at 120 fps with a 70 fps base will still feel like 70 fps, even if it looks a bit smoother to the eye. Most people won't be able to tell the difference between input rates at 70 samples per second and inputs at 120 samples per second. But when you start to fall below 40, even non-professional gamers will start to feel the difference.

Or to put it more bluntly: DLSS 3 and Frame Generation are no panacea. They can help smooth out the visuals and maybe improve the feel of games a bit, but the benefit isn't going to be as noticeable as actual fully rendered frames with new user input factored in, particularly as performance drops below 60 fps.

That's not to say it's a bad technology — it's quite clever actually — and we don't mind that it exists. But Nvidia needs to stop comparing DLSS 3 scores against non-DLSS 3 results and acting like they're the same thing. Take the base framerate before Frame Generation and add maybe 10–20 percent and that's what a game feels like, not the 60–100 percent higher fps that benchmarks will show.
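As a rough illustration of that rule of thumb, here is a small Python sketch. The 10 to 20 percent uplift is our subjective estimate from above, not a measured quantity, and the function name is made up for this example.

```python
def felt_fps_range(base_fps: float,
                   low_uplift: float = 0.10,
                   high_uplift: float = 0.20) -> tuple[float, float]:
    """Estimate how a Frame Generation result 'feels', given the base framerate."""
    return base_fps * (1 + low_uplift), base_fps * (1 + high_uplift)


base_fps, displayed_fps = 70, 120   # the example used earlier in the article
low, high = felt_fps_range(base_fps)
print(f"Displayed: {displayed_fps} fps, feels like roughly {low:.0f}-{high:.0f} fps")
```

For the 70 fps example, that puts the felt experience somewhere around 77 to 84 fps, well short of the 120 fps a benchmark chart would report.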

Back to the topic at hand, the future mainstream and budget RTX 40-series GPUs will no doubt beat the existing models in pure performance, and they'll offer DLSS 3 support as well. Hopefully Nvidia will return to prices closer to the previous generation, though, because if the RTX 4060 costs $499 and the RTX 4050 costs $399, they're going to end up being minor upgrades compared to the existing cards at those price points.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

bigdragon The RTX 40-series continues to be a disappointment. I'm surprised how close together the laptop 4070, 4060, and 4050 are. The specs make it look like there won't be a meaningful difference in performance unless you step up to the 4080 -- a GPU that likely will only appear in the oversized, gaudy, overpriced gamer laptops.

Hopefully some manufacturer puts the 4070 in a 2-in-1 device, sells it in the USA, and prices it like other gaming laptops instead of putting it in some weird premium category. However, given what Nvidia has done on pricing, I'm not sure I want to know how expensive this year's systems are going to be.

PlaneInTheSky "There are plenty of games now that can exceed 8GB of VRAM use."

Isn't this just because the memory is available? Many games just try to saturate the graphics memory with textures and shader code, because it's there, so why not use it? A 12GB GPU might show 12GB in use, but that doesn't mean the game can't run with 8GB.

I haven't seen any games that need more than 8GB of VRAM at 1080p, and 1080p would be kind of the target resolution for a 4050, no?

Steam shows 64% of Steam users are playing at 1080p, 11% play at 1440p, and only 2% at 4K.

To be honest, I could be wrong, though; I don't follow every AAA game release. Needing more than 8GB VRAM at 1080p just seems like... a lot.

PlaneInTheSky I looked up some of the VRAM requirements of 2022 PC games with "demanding" graphics (subjective).

Slim pickings in 2022, I really had to dig to find any "AAA" 3D PC games from 2022.

But still, none of them required anything more than 8GB VRAM, even for recommended specs.

The 4050 having "just" 8GB VRAM seems like a non-issue?

VRAM requirements

A Plague Tale: Requiem

Minimum: 4GB VRAM

Recommended: 8GB VRAM

Elden Ring

Minimum: 3GB VRAM

Recommended: 8GB VRAM

Need for Speed Unbound

Minimum: 4GB VRAM

Recommended: 8GB VRAM

Stray

Minimum: 1GB VRAM

Recommended: 3GB VRAM

thestryker The only game that leaps to mind which can use over 8GB (without mods, etc.) at 1080p is Doom Eternal. 8GB really needs to be the starting point on desktop though, and the bus width that Nvidia is playing with doesn't really leave them any options. Given that their charts still show the 30 series covering the lower end, I'm not sure Nvidia is even planning lower desktop parts any time soon.

ravewulf Alternatively, the laptop 4060 and 4050 might both be using AD107 but the laptop 4050 gets its bus cut down to 96-bit from the full 128-bit. This would mean the desktop versions may have higher specs, as Nvidia is already doing with the other models.

bigdragon

PlaneInTheSky said: I haven't seen any games that need more than 8GB of VRAM at 1080p, and 1080p would be kind of the target resolution for a 4050, no?

Have you considered the scenario of VR use? The 4050 is likely to be advertised as VR-ready just like the 3050 was. VR games can readily eat up tons of VRAM. While the user's primary display may be 1080p, there is a strong possibility of them plugging in a Quest headset or similar to experience VR with the power PC computing can provide.

Furthermore, I don't trust big game studios to keep making games that fit within 8 GB VRAM. People said the same thing about 3 GB VRAM in the Radeon HD 7950 and 7970 being enough -- it wasn't!

PlaneInTheSky "Furthermore, I don't trust big game studios to keep making games that fit within 8 GB VRAM."

Game developers will just target what the average user has, like they always do.

Of the top 12 GPUs in use by Steam users, only 1 out of those 12 has more than 8GB VRAM.

The average VRAM available is 6.2 GB.

Steam's most popular user GPUs:

GTX 1650: 4 GB VRAM

GTX 1060: 3 GB - 6 GB VRAM

RTX 2060: 6 GB VRAM

GTX 1050 Ti: 4 GB VRAM

RTX 3060 (laptop): 6 GB VRAM

RTX 3060: 12 GB VRAM

RTX 3070: 8 GB VRAM

GTX 1660 SUPER: 6 GB VRAM

RTX 3060 Ti: 8 GB VRAM

GTX 1660 Ti: 6 GB VRAM

GTX 1050: 4 GB VRAM

RTX 3050: 8 GB VRAM

JarredWaltonGPU

thestryker said: The only game that leaps to mind which can use over 8GB (without mods, etc.) at 1080p is Doom Eternal. 8GB really needs to be the starting point on desktop though, and the bus width that Nvidia is playing with doesn't really leave them any options. Given that their charts still show the 30 series covering the lower end, I'm not sure Nvidia is even planning lower desktop parts any time soon.

From my test suite, I can assure you that Far Cry 6, Forza Horizon 5, Total War: Warhammer 3, and Watch Dogs Legion all have performance fall off at 4K with 8GB, sometimes badly. Those are just four games out of 15. At 1440p, I think most of them do okay, but I know a few other games in the past year have pushed beyond 10GB. I think Resident Evil Village and Godfall did, for example. And yes, you can turn down some settings to get around this, but the point is that we've got 12GB on a 3060 right now, and that feels like it should be the minimum for a mainstream GPU going forward. Anything using AD106 will presumably be limited to 8GB... the same 8GB that we had standard on R9 290/290X and GTX 1070 over six years ago.

As far as Steam goes, it tracks a very large group of users, many of whom only play on older laptops, stuff like DOTA and CSGO that will run on old hardware. The fact that the top 12 is dominated by lower cost GPUs isn't at all surprising. Fortnite hardware stats would probably look the same. But there are also roughly 5% of gamers that do have GPUs with more than 8GB, and more critically, most GPUs don't have more than 8GB. Like, the list of hardware with more than 8GB currently consists of:

RTX 4090

RTX 4080

RTX 4070 Ti

RTX 3090 Ti

RTX 3090

RTX 3080 Ti

RTX 3080 (both 12GB and 10GB)

RTX 3060

RTX 2080 Ti

GTX 1080 Ti

RX 7900 XTX

RX 7900 XT

RX 6950 XT

RX 6900 XT

RX 6800 XT

RX 6800

RX 6750 XT

RX 6700 XT

RX 6700

Radeon VII

Arc A770 16GB

Of those, only the 3060 and 6700 series are remotely mainstream, and Arc if we're going to count that. But the point is we should be moving forward. Going from 8GB on a 256-bit interface to 8GB on a 128-bit interface will at times be a step back, or at best a step sideways.

PlaneInTheSky I strongly agree with the comment about DLSS 3.0.

They're not real frames, and they introduce a delay. It adds half a frame of lag to delay the last frame and add in that interpolated frame in between.

A lot of players with lower-end GPUs play at relatively low, or very low, framerates.

For someone with a 4080 playing at 120 fps, half a frame delay is not a big deal. It's 1/240th of a second. But for someone with a low-end GPU playing at 30 fps or below, it is a big deal. It is extra lag you simply don't really want if your FPS is already low.

The lower your framerates, the more milliseconds of lag DLSS 3.0 introduces. When a game temporarily drops to 10 fps, and you delay that latest frame even more, that just tanks response time. It's like putting the chains on an already struggling GPU.

At 30 fps, DLSS 3.0 adds 1/60th of a second of input delay. At a struggling 10 fps, DLSS 3.0 adds 1/20th of a second of delay. That's an extra 50 milliseconds of lag, and you're definitely going to notice that; stutters in games will seem much worse.

I truly hope Nvidia is not going to pull out the "DLSS 3.0" fanfare if they make a desktop 4050 or 4060, because that extra bit of lag DLSS 3.0 introduces is negligible at 120 fps, but is a problem at 30 fps and below.

thestryker

JarredWaltonGPU said: From my test suite, I can assure you that Far Cry 6, Forza Horizon 5, Total War: Warhammer 3, and Watch Dogs Legion all have performance fall off at 4K with 8GB, sometimes badly. Those are just four games out of 15. At 1440p, I think most of them do okay, but I know a few other games in the past year have pushed beyond 10GB. I think Resident Evil Village and Godfall did, for example. And yes, you can turn down some settings to get around this, but the point is that we've got 12GB on a 3060 right now, and that feels like it should be the minimum for a mainstream GPU going forward. Anything using AD106 will presumably be limited to 8GB... the same 8GB that we had standard on R9 290/290X and GTX 1070 over six years ago.

Yeah, but you're unlikely to have the graphics power to run any of those games well at 4K with the lower-end 40 series, which is why I specified 1080p (though I'd bet most will be 1440p capable). We're probably still going to see 6GB desktop cards too, which just shouldn't be happening anymore. I certainly agree it's a problem and it is only going to get worse as we move along.

JarredWaltonGPU said: As far as Steam goes, it tracks a very large group of users, many of whom only play on older laptops, stuff like DOTA and CSGO that will run on old hardware. [...] Going from 8GB on a 256-bit interface to 8GB on a 128-bit interface will at times be a step back, or at best a step sideways.

Do you think this capacity issue with a narrow bus can be solved with the GDDR stacking technology Samsung announced some weeks back?