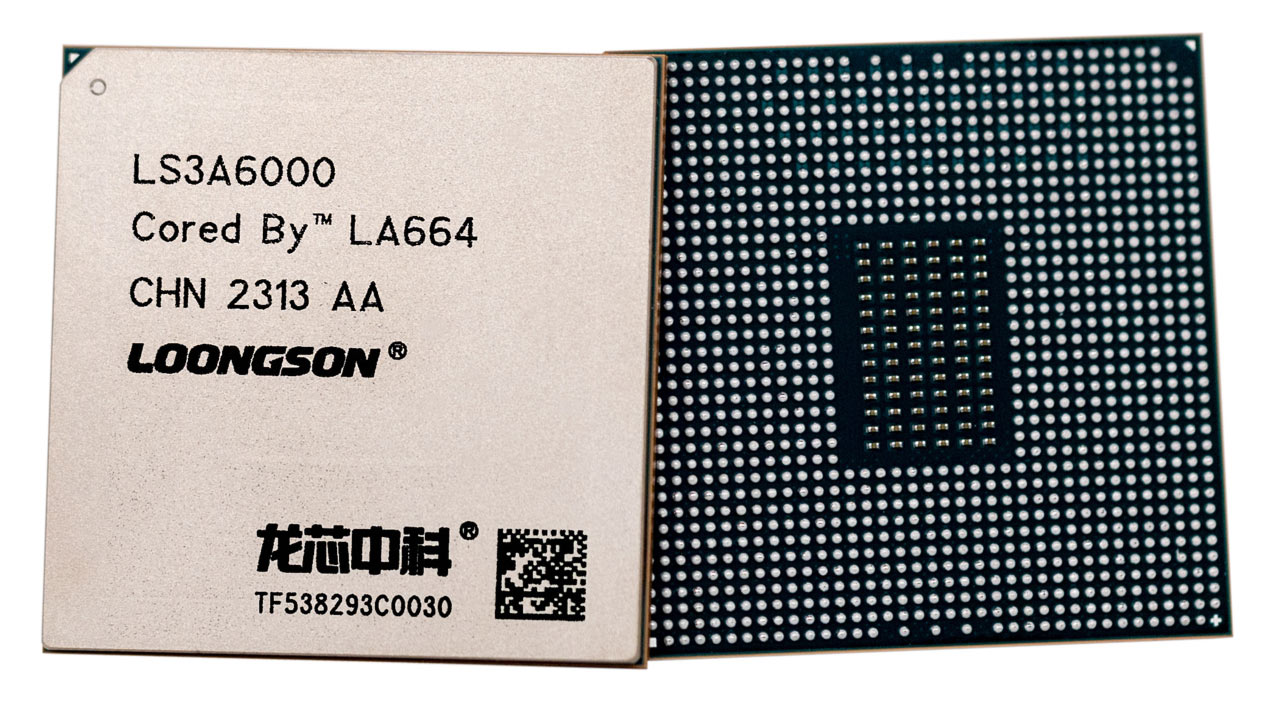

China's Loongson CPU seems to have IPC equivalent to Zen 4 and Raptor Lake -- but slow speeds and limited core count keep 3A6000 well behind modern competition

Gotta get that clock speed up.

Loongson's 3A6000 CPU, though lacking in raw performance, is nearly on par with AMD's and Intel's latest architectures with respect to instructions-per-clock (IPC), according to Geekwan's review of the Chinese processor. Though the China-designed 3A6000 is nowhere near the performance level of the latest x86 and Arm CPUs, its high IPC shows promise for future generations of Loongson's chips, as long as the company can manage higher frequencies.

The 3A6000 uses the LoongArch architecture, an in-house design that has garnered some scrutiny for potentially being a clone of MIPS, which Loongson had formerly used for many years. The chip is fabricated on a 12nm process (probably from China's SMIC) and has four cores and eight threads capable of boosting to 2.5GHz under a 50 watt TDP. It's paired with an L2 cache of 256KB and an L3 cache of 16MB, and is compatible with DDR4-3200 memory. The 3A6000 is made for consumer PCs, and in theory competes with the lowest-end CPUs from AMD and Intel.

Given that the 3A6000 has such a low clock speed by today's standards (even the Pentium Gold G7400 has a boost clock of 3.7GHz), it wasn't surprising that Geekwan's benchmarks showed that Loongson's CPU wasn't an impressive performer. It couldn't even catch up to Intel's 2020-era Core i3-10100, which enjoyed roughly a 20% to 40% performance advantage. Efficiency wasn't impressive either, as the 3A6000 consumed about 40 watts to the 10100's 30 or so watts. It's not a great result for the 3A6000 considering the 10100's underlying technology is actually from 2016.
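To put the efficiency gap in rough numbers, the sketch below turns the approximate figures above into a performance-per-watt ratio. It normalizes the 3A6000's performance to 1.0, which is an assumption made purely for illustration, and treats the quoted power draws as exact; the function and constant names are hypothetical.

```python
# Rough performance-per-watt comparison using the article's approximate figures.
# Assumption: the 3A6000's performance is normalized to 1.0 for illustration.
LOONGSON_PERF = 1.0
LOONGSON_WATTS = 40.0  # "about 40 watts"
I3_WATTS = 30.0        # "30 or so watts"


def perf_per_watt(perf: float, watts: float) -> float:
    """Performance delivered per watt consumed."""
    return perf / watts


def efficiency_advantage(i3_perf_lead: float) -> float:
    """Ratio of the i3-10100's perf/W to the 3A6000's.

    i3_perf_lead is the i3's performance advantage as a fraction,
    for example 0.20 for a 20% lead.
    """
    i3_perf = LOONGSON_PERF * (1.0 + i3_perf_lead)
    return perf_per_watt(i3_perf, I3_WATTS) / perf_per_watt(LOONGSON_PERF, LOONGSON_WATTS)


# The article quotes a 20% to 40% performance lead for the i3-10100.
for lead in (0.20, 0.40):
    print(f"{lead:.0%} faster -> {efficiency_advantage(lead):.2f}x perf/W")
```

Under those assumptions, the Core i3-10100 comes out at roughly 1.6x to 1.9x the 3A6000's performance per watt.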

On the other hand, the 3A6000 has truly great IPC, or instructions-per-clock. This metric measures performance per clock cycle, and it basically evaluates the performance of the architecture itself. IPC is notoriously difficult to improve, and many designers improve performance by focusing on other specifications, like core count, clock speed, or cache.

| CPU | Integer | Floating Point |
| --- | --- | --- |
| 3A6000 | 4.8 | 6.0 |
| 3A5000 | 3 | 3.7 |
| Ryzen 9 7950X | 5.0 | 7.1 |
| Ryzen 9 5950X | 4.6 | 6.6 |
| Core i9-14900K | 4.9 | 7.8 |

In SPEC 2017's integer and floating point performance tests with all CPUs locked to 2.5GHz (the maximum speed of the 3A6000), Loongson's chip looks far more potent. In the integer test, it's ahead of the Zen 3-based Ryzen 9 5950X (4.8 versus 4.6) and just barely behind the Zen 4-powered Ryzen 9 7950X (5.0) and Raptor Lake Core i9-14900K (4.9). The comparison isn't quite as favorable for the 3A6000 in floating point performance, where it trails all three x86 chips by roughly 9% to 23%, but it is nevertheless reasonably close. These results corroborate previous benchmarks that showed the 3A6000 packed some impressive IPC.
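Because every chip in the table ran at the same 2.5GHz clock, the ratio between two scores doubles as a per-clock comparison. The following is a minimal sketch of that arithmetic using the scores from the table; the dictionary and helper names are hypothetical.

```python
# SPEC 2017 scores from the table above, all CPUs locked to 2.5GHz.
# Each entry is (integer, floating point).
SCORES = {
    "3A6000": (4.8, 6.0),
    "3A5000": (3.0, 3.7),
    "Ryzen 9 7950X": (5.0, 7.1),
    "Ryzen 9 5950X": (4.6, 6.6),
    "Core i9-14900K": (4.9, 7.8),
}


def ratio_to(cpu: str, baseline: str) -> tuple[float, float]:
    """Return (integer, floating point) score ratios of cpu over baseline."""
    cpu_int, cpu_fp = SCORES[cpu]
    base_int, base_fp = SCORES[baseline]
    return cpu_int / base_int, cpu_fp / base_fp


# Compare the 3A6000 against every other chip in the table.
for name in SCORES:
    if name == "3A6000":
        continue
    int_ratio, fp_ratio = ratio_to("3A6000", name)
    print(f"3A6000 vs {name}: integer {int_ratio:.2f}x, floating point {fp_ratio:.2f}x")
```

By this measure the 3A6000 delivers about 1.6x the per-clock score of its predecessor in both tests, about 0.96x the Ryzen 9 7950X in integer, and about 0.77x the Core i9-14900K in floating point.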

Loongson is far from being able to compete with modern flagship CPUs from AMD, Intel, Apple, and others. The 3A6000 can't truly harness its great IPC since it has such a low clock speed, and it's also lacking quite a bit in core count and cache size. The company's next-generation 3A7000 may improve on these points, as it's rumored to use a 7nm process, which may help to boost clock speeds and make more room for more cores and more cache.


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


The Historical Fidelity

It seems like the Loongson CPU is using a short pipeline to yield similar IPC to AMD and Intel’s 14+ stage pipelines. The downside of a short pipeline is a low frequency limit, but it’s very easy to implement as a way to reach its competitors’ IPC. This just shows how much more advanced its competitors are, that they can reach a similar IPC with 14+ stage pipelines and clock to 6 GHz.

LuxZg

I believe this should be viewed against the 12nm production limitation. I wonder what would be possible if they had access to a cutting-edge process. Considering they are being compared to companies working on CPUs since "forever", they're doing pretty good. Now, if SMIC can do 7nm and possibly 5nm, and if the government can help with this design, similar to how Huawei had huge resources for their ARM CPU, they could be catching up. Modern processors barely advance gen on gen; most advances are due to manufacturing process and platform improvements (PCIe 5.0, DDR5, etc.). So AMD/Intel won't be running away, and if China can keep pushing, they'll be closer and closer. Just saying... Good news is - this is and will stay a product for the internal market. Well, good news for Intel/AMD, bad for consumers and competition. For me there's no bad competition; the old corporations need a kick in the tush to start moving from time to time.

The Historical Fidelity

LuxZg said: I believe this should be viewed against the 12nm production limitation. [...]

My 12nm AMD Ryzen 2700X can do 4.3 GHz all day, and my 32nm i7-3960X from 2012 can do 5 GHz all day. 12nm is not the reason why it’s clocked at 2.5 GHz. It’s directly related to the length of the pipeline, which is why I’m saying Loongson is not anywhere near on par with AMD and Intel. Short-pipeline Intel Pentium 3s at 1.13 GHz performed similarly to long-pipeline Pentium 4s at 1.7 GHz because long pipelines reduce IPC in exchange for high clock speed stability. This pipeline effect is why Loongson’s design is well behind AMD and Intel. For AMD and Intel to get the same IPC as Loongson while utilizing a long pipeline capable of 6 GHz stability demonstrates a micro-architecture years ahead of Loongson.
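The Pentium comparison in this comment rests on the first-order model that performance is roughly IPC multiplied by clock speed. As a hedged illustration, if the two chips really did perform the same at the clocks quoted, the implied per-clock advantage follows directly. The function name is hypothetical, and the model ignores memory and workload effects.

```python
def implied_ipc_ratio(clock_a_ghz: float, clock_b_ghz: float) -> float:
    """Per-clock advantage of chip A over chip B, assuming equal overall performance.

    With performance = IPC * clock, equal performance means
    IPC_A / IPC_B = clock_B / clock_A.
    """
    return clock_b_ghz / clock_a_ghz


# Clocks quoted in the comment: Pentium 3 at 1.13 GHz, Pentium 4 at 1.7 GHz.
ratio = implied_ipc_ratio(1.13, 1.7)
print(f"Implied Pentium 3 per-clock advantage: {ratio:.2f}x")
```

Taken at face value, the comment's figures imply the Pentium 3 did about 1.5x as much work per clock as the Pentium 4.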

bit_user

The IPC of leading x86 CPUs is partly being held back by the ISA. I'm eager to see how the upcoming ARM cores from Qualcomm, AMD, Nvidia and others will compare.

DavidC1

The Historical Fidelity said: It seems like the loongson cpu is using a short pipeline to yield similar IPC to AMD and Intel’s 14+ stage pipeline.

It could be, but we can't say for sure.

Clockspeeds aren't just a function of architecture, but the capability of the circuit designers and layout engineers in the team.

bit_user said: The IPC of leading x86 CPUs is partly being held back by the ISA. I'm eager to see how the upcoming ARM cores from Qualcomm, AMD, Nvidia and others will compare.

The 90's called, they want their claims back.

Modern CPUs with billions of transistors aren't limited by the decoders anymore. That era is long past, back when engineers had transistor budgets measured in the hundreds of thousands.

The simple reason ARM vendors are leading is that both AMD and Intel have fallen completely flat on their faces over the years. You can tell by the comparison between AMD and Nvidia. The latter had far, far fewer missteps.

DEC in the 90's led everyone else despite having the same RISC instruction set. Then the DEC team eventually went to work at Apple through the acquisition of PA Semi. Along with them they had other brilliant engineers like Gerard Williams III, who moved on to found Nuvia and break records again. As soon as Gerard left Apple, we had 4 generations of minimal gains from Apple chips.

Because we're outsiders it's very easy to fall into the trap the often clueless high level management with finance degrees fall into - the belief that it's the "tech" that makes the difference, when it's the ingenuity, perseverance, and creativity of the people that do.

The no-clues talked about the complexity of Sapphire Rapids as being the reason for its downfall, when people in the know said for years that the management team led by Krzanich fired the ENTIRE SPR validation team at some point. That couldn't have had anything to do with SPR being delayed for 3+ years, could it?

bit_user

DavidC1 said: The 90's called, they want their claims back.

Look, I've been through this debate so many times I can't even keep track of whether or how many times I've debated it with you. So, I'm going to keep this short & sweet.

1. AArch64 has an undeniable advantage in that its ISA is cheaper to decode and easier to scale up decoding. ARM's Cortex-X4 has a 10-way decoder, while Intel's Golden Cove is currently at just 6-way, and only a couple of those are fully general.
2. AArch64 has a more efficient memory consistency model.
3. AArch64 has twice as many general-purpose registers.
4. AArch64's SVE2 enables flexible scaling of vector width, without rewriting code. In a CPU with AVX10/512, the extra width potentially goes to waste, when executing code written with AVX2 or AVX10/256.
5. AArch64 has a 3-operand format, for most instructions, reducing the need for additional mov's.

Intel's announcement of APX is clear evidence that Intel is worried. Of the above advantages I listed, it enables them only to take #3 and #5 off the table. In exchange, it adds yet another prefix byte, which makes #1 even worse.

DavidC1 said: Modern CPUs with billions of transistors aren't limited by the decoders anymore.

Their width can be a bottleneck. So, even if you take the area impact off the table, decoders still matter. I believe the power they require can also be relevant, in certain contexts.

DavidC1 said: the management team led by Kraznich fired the ENTIRE SPR validation team at some point. That couldn't have had anything to do with SPR being delayed for 3+ years can't it?

Most of Sapphire Rapids' delay was due to manufacturing process, not validation. The bugs plaguing Sapphire Rapids only accounted for about the final year of delays in its launch date.

The Historical Fidelity

DavidC1 said: It could be, but we can't say for sure. Clockspeeds aren't just a function of architecture, but the capability of the circuit designers and layout engineers in the team. [...]

It could be circuit design and layout, but that seems too rudimentary to mess up for it to be the culprit. I’m leaning towards a short pipeline, as one of the hardest parts of micro-architecture design is a high-confidence branch predictor, which becomes more and more important the longer the pipeline becomes, since a misprediction causes the entire pipeline to be flushed and the instructions to be re-run. A short pipeline can achieve high IPC with a low-confidence branch predictor since the number of instructions flushed is at most equal to the number of stages. This is why Pentium 4 was regarded as hot garbage with its 20+ stage pipeline mated with a misprediction-prone branch predictor, even though Pentium 3’s branch predictor was objectively worse. I’m theorizing Loongson does not have the experience in branch prediction to create a branch predictor that can be mated to a long pipeline design. However, I could be wrong.

bit_user

The Historical Fidelity said: Im leaning towards a short pipeline, as one of the hardest parts of micro-architecture design is a high confidence branch predictor which becomes more and more important the longer the pipeline becomes as a mis-prediction will cause the entire pipeline to be flushed and the instructions to be re-run.

Branch prediction seems like the kind of thing which can be somewhat decoupled from the pipeline, itself. Also, as painful as it is to invalidate speculative executions, probably the bigger penalty associated with mispredictions is having to take the latency hit of fetching instructions down the path you failed to predict. Especially if it's nowhere in the cache hierarchy, since DRAM can easily be around 500 cycles away!

BTW, don't forget that memory prefetchers are an important form of prediction.

The Historical Fidelity said: A short pipeline can achieve high IPC with a low confidence branch predictor since the number of instructions flushed is maximum = to the number of stages. This is why pentium 4 was regarded as hot garbage with its 20+ stage pipeline mated with a mis-prediction prone branch predictor even though pentium 3’s branch predictor was objectively worse.

This model of IPC is too simplistic. Since the days of Core 2, pipelines have again started to get longer, yet IPC has continued to increase.
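The trade-off being debated here can be sketched with a common textbook approximation: cycles per instruction equal a base value plus the cycles lost to branch mispredictions. Every number below is hypothetical and chosen only for illustration; none comes from measurements of the 3A6000 or of any AMD or Intel core, and the model leaves out the instruction-fetch and DRAM latency raised in the comment above.

```python
def effective_ipc(base_ipc: float, branch_fraction: float,
                  mispredict_rate: float, penalty_cycles: float) -> float:
    """First-order IPC estimate that accounts for branch mispredictions.

    CPI = 1 / base_ipc + branch_fraction * mispredict_rate * penalty_cycles
    """
    base_cpi = 1.0 / base_ipc
    stall_cpi = branch_fraction * mispredict_rate * penalty_cycles
    return 1.0 / (base_cpi + stall_cpi)


# Hypothetical workload: 20% of instructions are branches, ideal IPC of 4.
# The flush penalty is assumed to grow with pipeline depth.
short_pipe = effective_ipc(4.0, 0.20, mispredict_rate=0.05, penalty_cycles=10)
long_pipe = effective_ipc(4.0, 0.20, mispredict_rate=0.05, penalty_cycles=20)
long_pipe_better_predictor = effective_ipc(4.0, 0.20, mispredict_rate=0.025, penalty_cycles=20)

print(f"short pipeline:                  {short_pipe:.2f} IPC")
print(f"long pipeline, same predictor:   {long_pipe:.2f} IPC")
print(f"long pipeline, better predictor: {long_pipe_better_predictor:.2f} IPC")
```

In this simplified model, doubling the flush penalty while halving the misprediction rate leaves IPC unchanged, which is one way a longer pipeline can keep pace with a shorter one.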

The Historical Fidelity

bit_user said: Branch prediction seems like the kind of thing which can be somewhat decoupled from the pipeline, itself. [...] This model of IPC is too simplistic. Since the days of Core 2, pipelines have again started to get longer, yet IPC has continued to increase.

Oh, I wasn’t saying that pipeline length is the only thing that affects IPC; the parts feeding the pipeline are also important to IPC. And today’s AMD/Intel designs actually have dynamic pipeline lengths. I believe Zen and Intel can be 14-19 stages depending on workload. That’s actually not that much of an increase from the original Core architecture’s 12 stages and Core 2’s 16 stages. Mind you, Core came right after NetBurst Prescott with its 31-stage pipeline. Sandy Bridge to Kaby Lake Intel architectures have all maintained a 14-19 stage dynamic pipeline, so not really that much longer than the original Core. I can’t seem to find any data on the number of stages in Alder Lake and newer architectures; however, it seems both Intel and AMD have decided 14-19 stages is the optimal length.

I’m simply saying that shortening the pipeline is an easy way to increase IPC to compete with AMD/Intel if the 3A6000’s architecture is unable to hit similar IPC with the same pipeline length. And if they did decide to shorten the pipeline for said reason, then it could explain why 3 GHz with liquid nitrogen cooling is the max stable clock, assuming SMIC’s 12nm process and Loongson’s circuit design and layout are competently done.

And then my addition of branch predictor design was just to reinforce that it is much simpler to increase IPC by shortening the pipeline, as longer pipelines require a more and more sophisticated front end (branch prediction, instruction fetch, buffers, schedulers, etc.) and backend (cache population and hit rates, etc.) to maintain the same IPC as a shorter pipeline.

But you are right that front end and backend optimization is key to increasing IPC in long pipeline designs, as the longer the pipeline, the more perfect the front/backend must be to keep the pipeline fully utilized. Like in my example with Pentium 3/4, going from a 10-stage pipeline to a 20-stage pipeline required an increase of 566 MHz in clock speed to match the Pentium 3’s performance despite Pentium 4’s improved front/back end. And when Prescott came out, its 31-stage pipeline was so long that AMD had the better processor even though it couldn’t clock nearly as high.

Yan Alter

The Historical Fidelity said: I believe Zen and Intel can be 14-19 stages depending on workload. [...] I can’t seem to find any data on the number of stages in alderlake and newer architectures however it seems both Intel and AMD have decided 14-19 stages is the optimal length. [...]

Zen only has a 19-stage pipeline. Intel’s current P-core CPUs have had a 15-20 stage pipeline since Alder Lake.