Startup claims it can boost any processor's performance by 100X — Flow Computing introduces its 'CPU 2.0' architecture

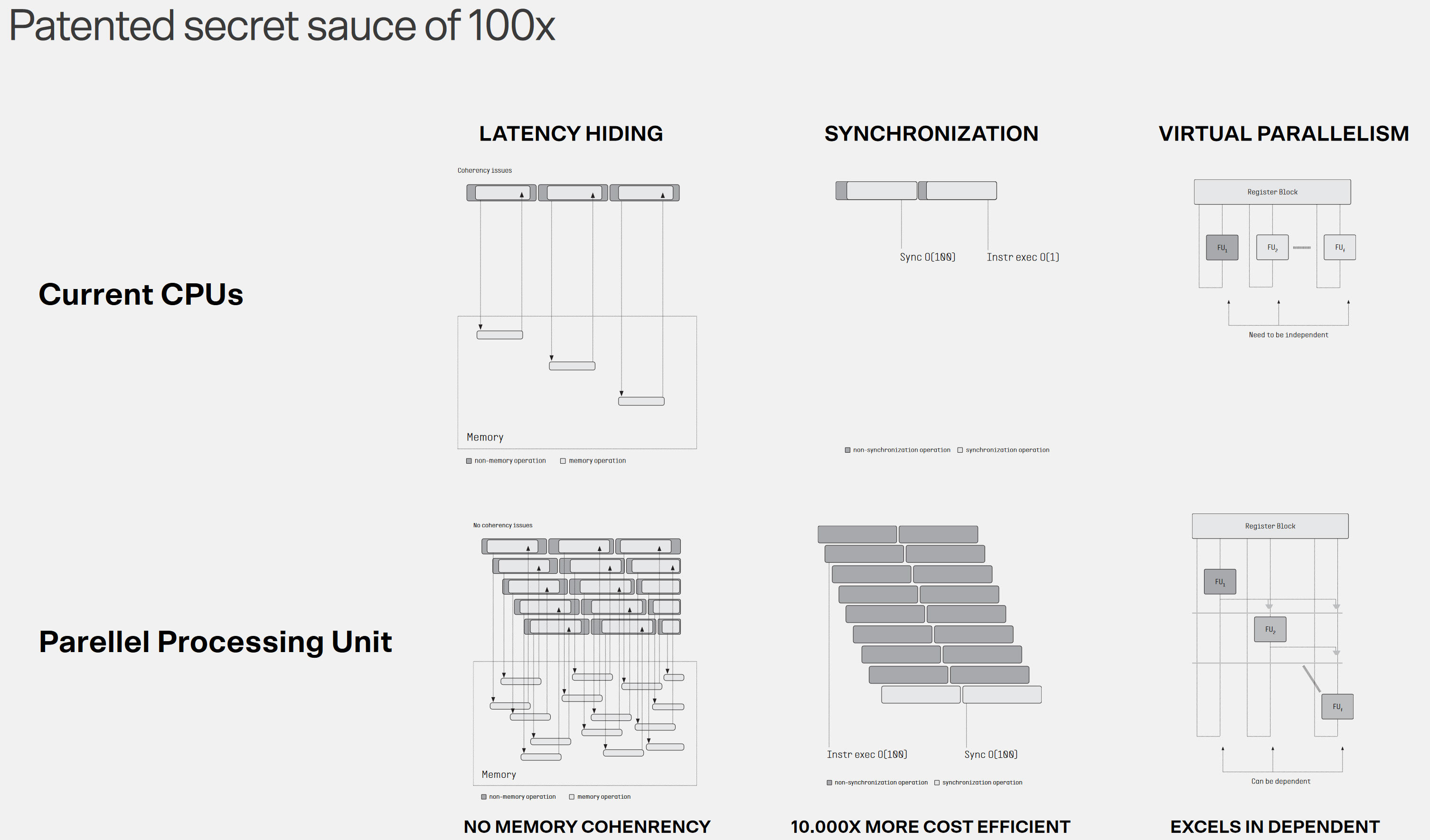

Firm's on-die Parallel Processing Unit (PPU) is the key innovation.

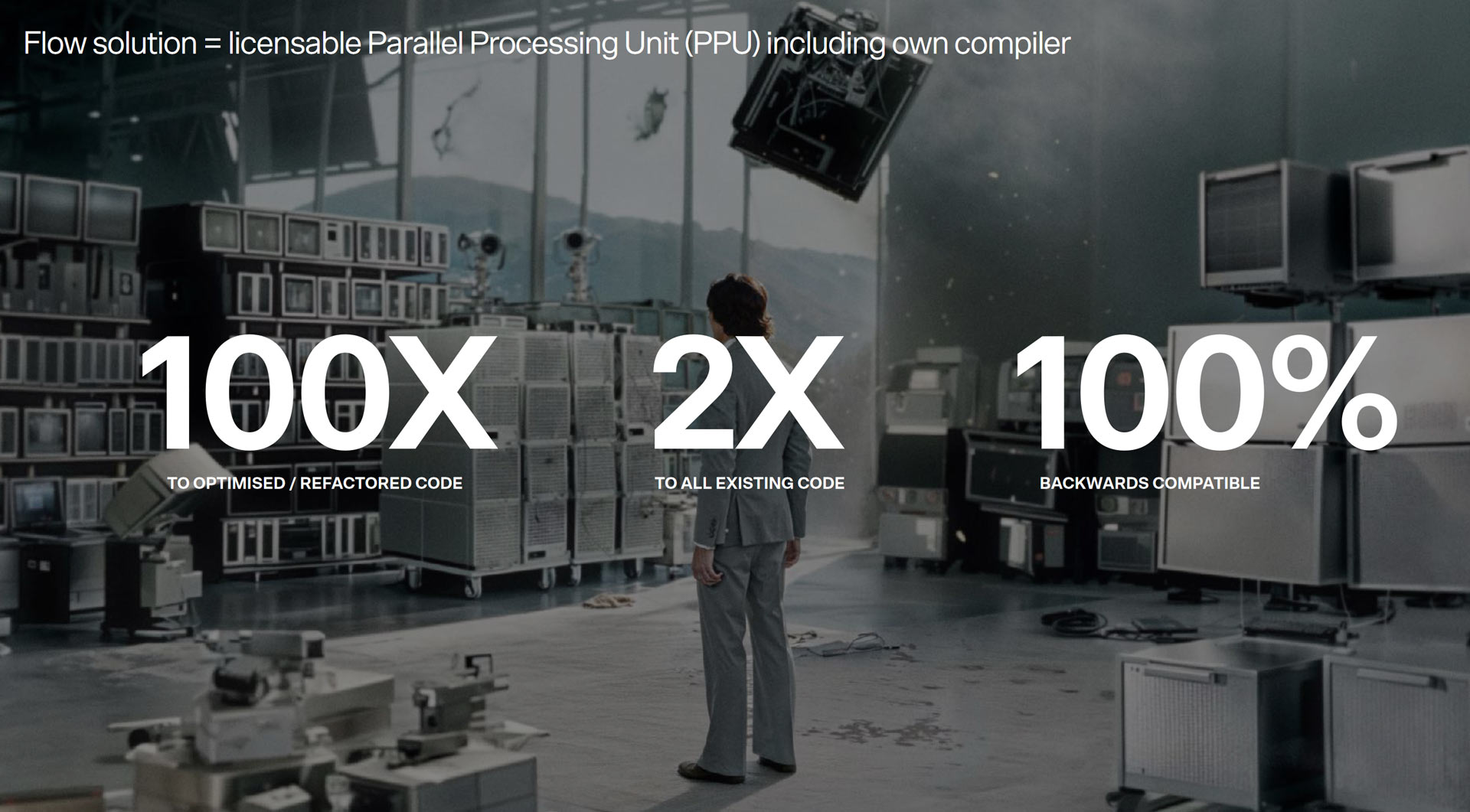

Flow Computing has exited stealth mode with some of the most explosive claims from an emerging technology company we have seen in a long time. A spinout from Finland’s acclaimed VTT Technical Research Center, Flow says its Parallel Processing Unit (PPU) can enable “100X Improved performance for any CPU architecture.” The startup has just secured €4M in pre-seed funding, and its founders and backers are asking the tech world to brace for what they call the era of "CPU 2.0."

According to Timo Valtonen, co-founder and CEO of Flow Computing, the new PPU was designed to break a decades-long stagnation in CPU performance. Valtonen says that this has made the CPU the weakest link in computing in recent years. “Flow intends to lead the SuperCPU revolution through its radical new Parallel Performance Unit (PPU) architecture, enabling up to 100X the performance of any CPU,” boldly claimed the Flow CEO.

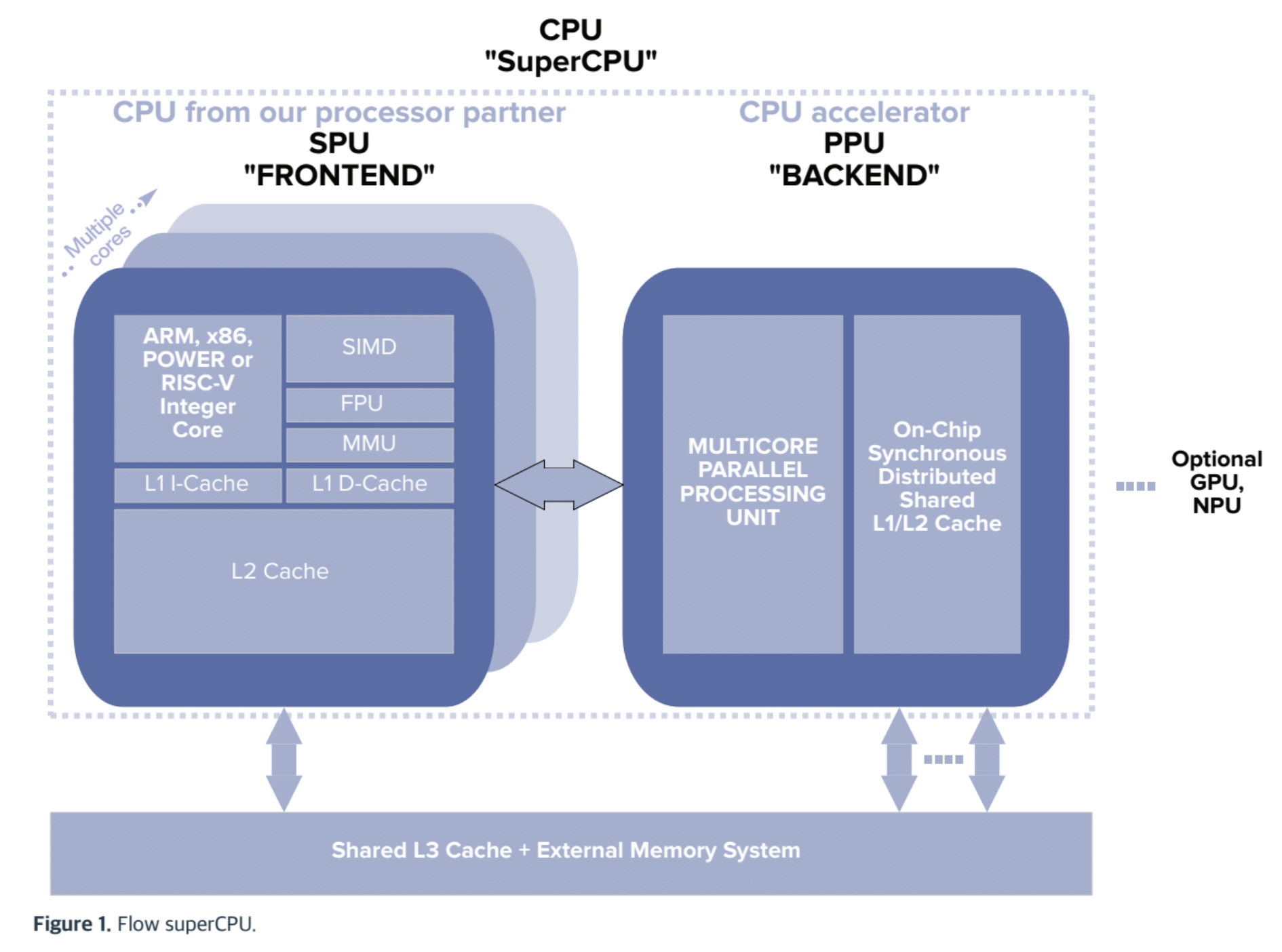

Flow claims its PPU is broadly compatible with existing CPU architectures: the company says any current von Neumann-architecture CPU can integrate a PPU. According to a press release we received, Flow has already prepared optimized PPU licenses targeting mobile, PC, and data center processors. Above, you can see a slide showing Flow PPUs configured with between 4 and 256 cores.

Another eyebrow-raising claim is that an integrated PPU can enable its astonishing 100x performance uplift “regardless of architecture and with full backward software compatibility.” Well-known CPU architectures and designs such as x86, Apple M-Series, Exynos, Arm, and RISC-V are name-checked. Despite the touted broad compatibility, and the claim that the PPU boosts the existing parallel functionality of all existing software, the company says there are still worthwhile gains to be had from recompiling software for the PPU. In fact, recompilation will be necessary to reach the headline 100X performance improvement; unmodified existing code will run up to 2x faster, the company says.
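As a rough sanity check on the 100X figure (our own back-of-the-envelope exercise, not something Flow presents), Amdahl's law gives the ideal speedup S = 1 / ((1 - p) + p/N) for a workload whose fraction p can be spread perfectly across N cores. The Python sketch below assumes the speedup comes purely from parallel execution on the PPU's cores; Flow also talks about hiding latency, which this simple model does not capture, so treat the numbers as illustrative only.

```python
# Rough sanity check using Amdahl's law. This is an assumption of ours,
# not Flow's model: speedup comes only from spreading a parallel fraction p
# of the work over n cores, with no other overheads or gains.


def amdahl_speedup(p: float, n: int) -> float:
    """Ideal speedup when a fraction p of the runtime is perfectly parallel over n cores."""
    return 1.0 / ((1.0 - p) + p / n)


def required_parallel_fraction(target: float, n: int) -> float:
    """Parallel fraction p needed for `target` speedup on n cores (n > 1).

    Solves target = 1 / ((1 - p) + p / n) for p. A result above 1.0 means
    the target is unreachable on n cores under Amdahl's law.
    """
    if n <= 1:
        raise ValueError("n must be greater than 1")
    return (1.0 - 1.0 / target) / (1.0 - 1.0 / n)


if __name__ == "__main__":
    # Flow's slide spans 4 to 256 cores; 16 and 64 are illustrative in-between values.
    for cores in (4, 16, 64, 256):
        p = required_parallel_fraction(100.0, cores)
        status = "unreachable" if p > 1.0 else f"needs p = {p:.4f}"
        print(f"{cores:>3} cores, 100X target: {status}")
```

Under this model, the ideal speedup can never exceed N, so any configuration with fewer than 100 cores cannot reach 100X at all, and even the 256-core configuration needs roughly 99.4% of the work to be perfectly parallel.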

PC DIYers have traditionally balanced systems to their preferences by choosing and budgeting between CPU and GPU. However, the startup claims Flow “eliminates the need for expensive GPU acceleration of CPU instructions in performant applications.” Meanwhile, any existing co-processors, such as matrix units, vector units, NPUs, or indeed GPUs, will benefit from a “far-more-capable CPU,” asserts the startup.

Flow explains the key differences between its PPU and a modern GPU in a FAQ document. “PPU is optimized for parallel processing, while the GPU is optimized for graphics processing,” contrasts the startup. “PPU is more closely integrated with the CPU, and you could think of it as a kind of a co-processor, whereas the GPU is an independent unit that is much more loosely connected to the CPU.” It also highlights that the PPU does not require a separate kernel and that its parallelism width is variable.

For now, we are taking the above statements with bucketloads of salt. The claims about 100x performance and ease/transparency of adding a PPU seem particularly bold. Flow says it will deliver more technical details about the PPU in H2 this year. Hopefully, that will be a deeper dive stuffed with benchmarks and relevant comparisons.


The Helsinki-based startup hasn’t shared a commercialization timetable as yet but seems open to partnerships. It mentions the possibility of working with companies like AMD, Apple, Arm, Intel, Nvidia, Qualcomm, and Tenstorrent. Flow’s PR highlights its preference for an IP licensing model, similar to Arm, where customers embed its PPU for a fee.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Notton: I hope you guys get one to review before they fold or get bought out and never see the light of day again.

Findecanor: I still have a few papers to read, but from my understanding so far, I think this approach is basically about taking SIMT technology that you'd typically find in a GPU and adapting it to run general-purpose code that you'd otherwise only run on a CPU.

An NPU, on the other hand, can only do one thing: matrix multiplication, and it often does it at low precision.

Tom's article seems to be short on details. I found some more insight:

https://techcrunch.com/2024/06/11/flow-claims-it-can-100x-any-cpus-power-with-its-companion-chip-and-some-elbow-grease/

CPUs have gotten very fast, but even with nanosecond-level responsiveness, there’s a tremendous amount of waste in how instructions are carried out simply because of the basic limitation that one task needs to finish before the next one starts.

What Flow claims to have done is remove this limitation, turning the CPU from a one-lane street into a multi-lane highway.

The CPU is still limited to doing one task at a time, but Flow’s PPU, as they call it, essentially performs nanosecond-scale traffic management on-die to move tasks into and out of the processor faster than has previously been possible.

Think of the CPU as a chef working in a kitchen.

The chef can only work so fast, but what if that person had a superhuman assistant swapping knives and tools in and out of the chef’s hands, clearing the prepared food and putting in new ingredients, removing all tasks that aren’t actual chef stuff?

The chef still only has two hands, but now the chef can work 10 times as fast. It’s not a perfect analogy, but it gives you an idea of what’s happening here, at least according to Flow’s internal tests and demos with the industry (and they are talking with everyone).

The PPU doesn’t increase the clock frequency or push the system in other ways that would lead to extra heat or power; in other words, the chef is not being asked to chop twice as fast. It just more efficiently uses the CPU cycles that are already taking place.

Flow’s big achievement, in other words, isn’t high-speed traffic management, but rather doing it without having to modify any code on any CPU or architecture that it has tested. It sounds kind of unhinged to say that arbitrary code can be executed twice as fast on any chip with no modification beyond integrating the PPU with the die.

Therein lies the primary challenge to Flow’s success as a business: Unlike a software product, Flow’s tech needs to be included at the chip-design level, meaning it doesn’t work retroactively, and the first chip with a PPU would necessarily be quite a ways down the road.

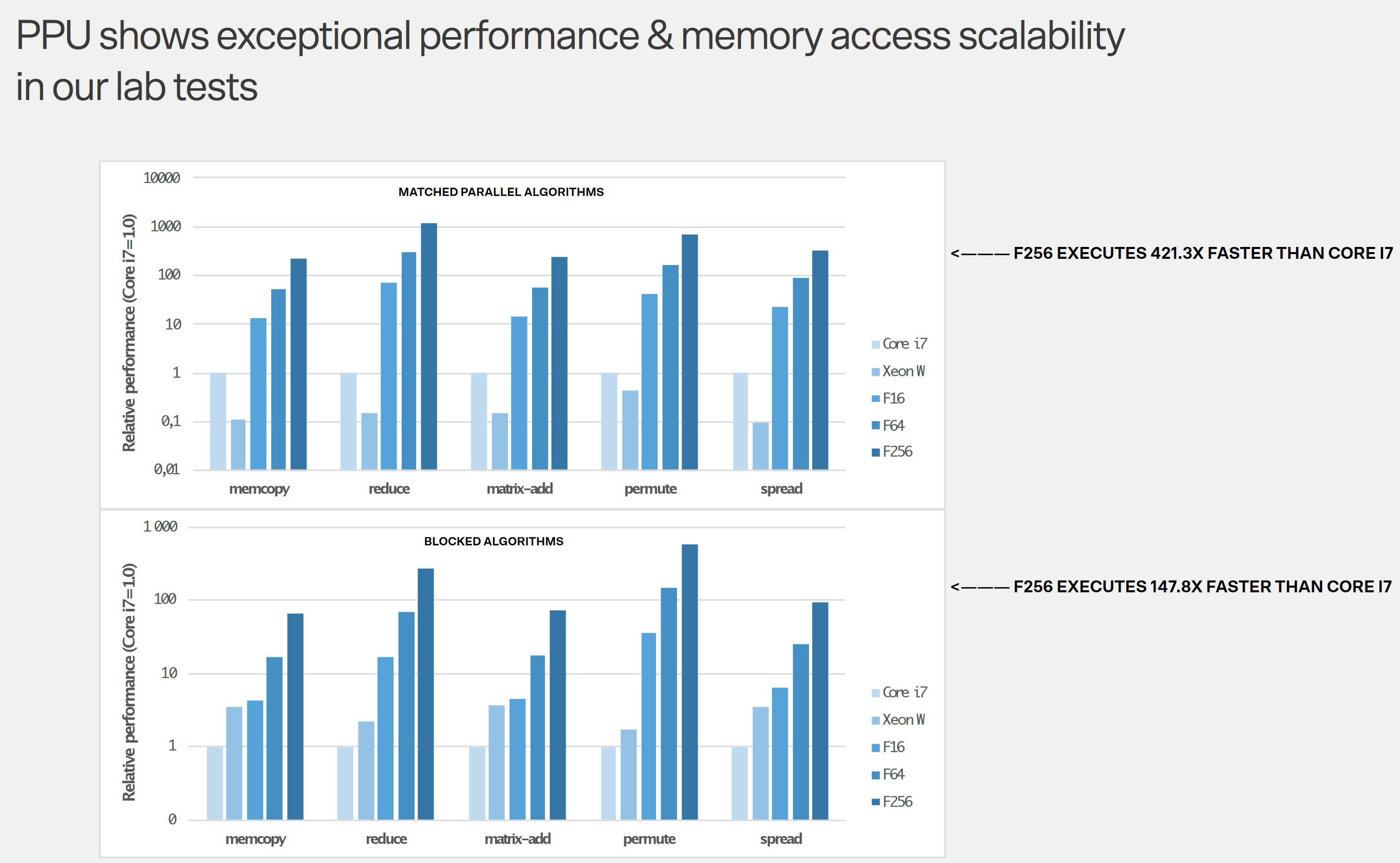

Chart showing improvements in an FPGA PPU-enhanced chip versus unmodified Intel chips. Increasing the number of PPU cores continually improves performance.

https://techcrunch.com/wp-content/uploads/2024/06/flow-chart.png

TerryLaze:

peachpuff said: Intel: buy'em out boys!

Intel already tried and failed to do this some 10 years ago; this is just Intel's Xeon Phi / Knights Landing, but without Intel's backing.

Choiman1559: There are already products on the market that are heavily specialized in OoOE (out-of-order execution) technology, such as the POWER architecture, which produces results similar to this chip's (although it's not x86-compatible and not for consumer use!).

Alvar "Miles" Udell: It sounds good in theory, but it does sound suspiciously like the NPUs that Qualcomm, AMD, and Intel have themselves, so its impact may be limited to other companies, like Samsung, if they don't license Qualcomm's.

Murissokah:

Metal Messiah. said: What Flow claims to have done is remove this limitation, turning the CPU from a one-lane street into a multi-lane highway.

Uberthreading? Seems like the kind of thing that is very powerful for some applications, but useless for others.

bit_user: My guess is that it's basically like a tightly integrated GPU. Like, if the compute cores of a GPU were integrated almost as tightly into a CPU as its FPU.

Metal Messiah. said: CPUs have gotten very fast, but even with nanosecond-level responsiveness, there’s a tremendous amount of waste in how instructions are carried out simply because of the basic limitation that one task needs to finish before the next one starts.

There are a couple of things this could mean. They could be talking about the way that CPU cores can operate on only 1 or 2 threads at a time (although I think POWER has done up to 8-way SMT), but the main thing I think they're probably talking about is how CPUs (especially x86) have tight memory synchronization requirements. GPUs famously have very weak memory models. This can limit concurrency in CPUs.

Metal Messiah. said: Chart showing improvements in an FPGA PPU-enhanced chip versus unmodified Intel chips. Increasing the number of PPU cores continually improves performance.

https://techcrunch.com/wp-content/uploads/2024/06/flow-chart.png

This is super sketchy if they don't even tell us which models of CPUs they're talking about or show us what code they ran, compiler + options, etc. I find it pretty funny they used a Core i7 that was 10x as fast as a Xeon W. Did they compare the newest Raptor Lake i7 against the slowest and worst Skylake Xeon W??

I'm guessing the code they used on the x86 CPUs was probably brain dead and got compiled to use all scalar operations.