Nvidia's Blackwell GPUs are sold out for the next 12 months — chipmaker to gain market share in 2025

Analysts expect Nvidia to gain AI hardware market share in 2025.

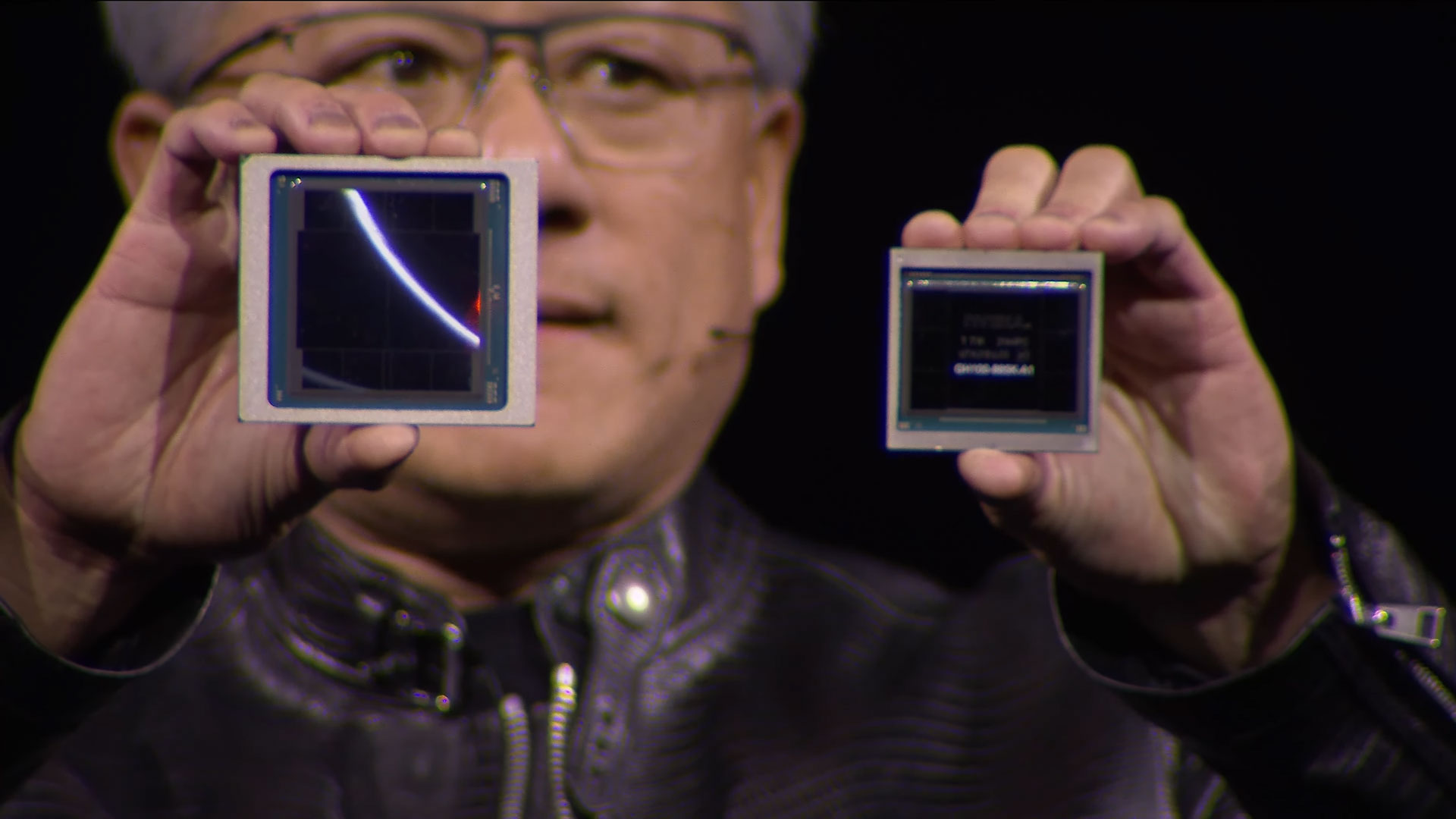

Nvidia's Blackwell GPUs for AI and HPC faced a slight delay due to a yield-killing packaging issue that required a redesign, but this does not appear to have hurt demand for the processors. According to the company's management, as relayed by Morgan Stanley analysts (via Barron's), the supply of Nvidia Blackwell GPUs for the next 12 months is sold out, which mirrors the situation with Hopper GPU supply several quarters ago. As a result, Nvidia is expected to gain market share next year (via Seeking Alpha).

Morgan Stanley analysts shared insights from recent meetings with Nvidia's leadership, including CEO Jensen Huang. During these meetings, it was revealed that Blackwell GPU supply for the next 12 months is already sold out. This means new customers placing orders today must wait until late next year to receive them.

Nvidia's traditional customers (AWS, CoreWeave, Google, Meta, Microsoft, and Oracle, to name a few) have bought every Blackwell GPU that Nvidia and its partner TSMC will be able to produce in the coming quarters.

Such overwhelming demand suggests that Nvidia could gain market share next year despite intensified competition from AMD, Intel, cloud service providers (with proprietary offerings), and various smaller companies.

"Our view continues to be that Nvidia is likely to actually gain share of AI processors in 2025, as the biggest users of custom silicon are seeing very steep ramps with Nvidia solutions next year," Joseph Moore, an analyst with Morgan Stanley, wrote in a note to clients. "Everything that we heard this week reinforced that."

Now that the packaging issues with Nvidia's B100 and B200 GPUs have been resolved, Nvidia's Blackwell output is limited only by how many GPUs TSMC can produce. Both B100 and B200 use TSMC's CoWoS-L packaging, and whether the world's largest contract chipmaker has enough CoWoS-L capacity remains to be seen.

Also, with demand for AI GPUs skyrocketing, it remains to be seen whether memory makers can supply enough HBM3E memory for leading-edge GPUs like Blackwell. In particular, Nvidia has yet to qualify Samsung's HBM3E memory for its Blackwell GPUs, which is another factor influencing supply.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.

ekio: Customer: what's the price to get one?

Jensen: It's 40 ... no wait! uhhhhh 80k, no wait wait!!!! it's 280k per GPU, and that's a deal just for you, my friend! Now how many thousand will you buy? We only sell them per 1000 at a time, it is too expensive to make a box for each of them.

Mattzun: Can you avoid using "GPU" in headlines when you are talking about dedicated AI products?

This does explain the limited availability and high prices for NVidia cards

NVidia is dedicating just enough silicon to the lower margin product intended for deplorable poor people to keep people interested in NVidia GPUs.

Just think of how much money Jensen is losing by building $2500 5090's instead of AI cards.

Heiro78, replying to Mattzun: What would you suggest these AI processing units be called when NVIDIA itself calls it a GPU? I hate the name AI for all of these narrow intelligence models companies have. But it's a marketing gimmick to catch headlines. To the point where true AI will come and they'll have to invent another name for it.

Eximo, replying to Heiro78: AGI is the commonly accepted term.

DavidLejdar: Sounds like Nvidia's AI revenue just hit a ceiling for the time being, doesn't it? Whereas AMD may have some spare Instinct MI325X accelerators.

Might not see AMD's stock price double in the upcoming months - in particular, as many of Nvidia's customers lock themselves in to CUDA with ongoing projects. But the timing seems nice, to perhaps cash in a bit in a few months, for one of the flagship GPUs (desktop thingy).