Sponsored by AMD ATI Radeon

ADVERTORIAL: ATI Radeon HD 4650/70: Top Value for Bottom Dollar

Generation Gap

If you’re due for a graphics card purchase, odds are good that you’re not starting from scratch. Perhaps you’re doing one of those every-three-years-or-so PC makeovers common in the mainstream desktop crowd. Well, three years ago, ATI (still independent from AMD at that point) had just released its Radeon X1950 parts, with the XTX variant ringing in right around $450. At that time, everyone was still obsessing over speeds, feeds, and gaming features. As such, the press liked to tout the fact that the XTX was making the jump to GDDR-4 memory, although the other X1950 models were all doing well with GDDR-3 on their 256-bit wide memory buses. Chips were built on a 90 nm fabrication process, cards generally used the PCI Express x16 slot interface, and the supported graphics libraries were the then-current DirectX 9.0c and OpenGL 2.0. The object of the game was to take traditional architectures and simply ratchet them up as fast as possible.
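Bus width matters because theoretical peak memory bandwidth scales directly with it. The sketch below uses the usual peak-bandwidth formula (bytes moved per transfer times effective transfers per second); it is a minimal illustration, and the 2.0 GT/s effective data rate in the example is an assumed value, not a figure from this article.

```python
def peak_memory_bandwidth_gbs(bus_width_bits: int, effective_rate_gtps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    bus_width_bits: width of the memory interface, e.g. 256 bits.
    effective_rate_gtps: effective data rate in gigatransfers per second
    (an assumed input; DDR-type memory transfers twice per clock).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_gtps


if __name__ == "__main__":
    # Hypothetical example: a 256-bit bus at an assumed 2.0 GT/s effective rate.
    print(peak_memory_bandwidth_gbs(256, 2.0))  # 64.0
    # At the same data rate, halving the bus width halves the peak bandwidth.
    print(peak_memory_bandwidth_gbs(128, 2.0))  # 32.0
```

Real-world throughput is lower than this ceiling, but the proportionality to bus width is why the 256-bit figure got so much attention.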

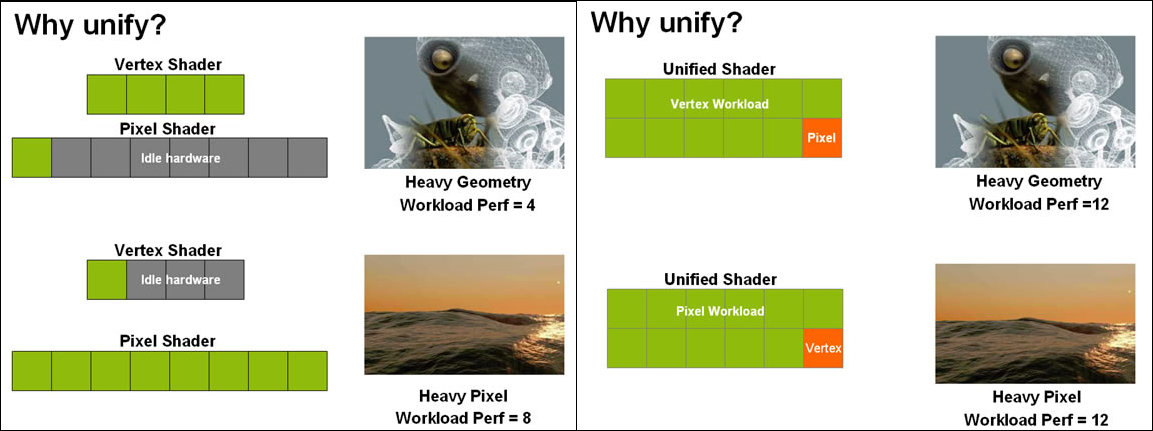

What a difference a few years makes. Just as we’ve seen happen with CPUs, graphics processors have made several architectural leaps. The new goal wasn’t necessarily to strive for lightning-fast frequencies. If cores could be made more efficient and make better use of parallel processing, total performance would naturally follow. Take shaders: the following year, with the advent of the HD 2000-series, ATI made the leap to unified shaders in its desktop GPUs. A shader is a small program designed primarily to perform a graphics rendering task. Vertex shaders, for instance, would alter the shape of an object, while pixel shaders could apply textures to individual pixels. Inside the GPU, designers dedicated blocks of circuitry to running those shader tasks. The X1950 had integrated hardware for eight vertex shaders and 48 pixel shaders. The downside of this architecture was that if an application needed a lot of vertex operations done but not much pixel processing, all eight vertex shaders would be cranking away while most of the pixel shader circuitry sat idle, filing its nails, waiting for something to do. The 2000-series introduced unified, programmable shaders, so any block of shader circuitry could perform any suitable shader task: vertex, pixel, or otherwise. A mid-range 2000-series card could suddenly blow through that vertex-intensive task in less time than the X1950 XTX, even at lower clock speeds and lower price points.
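The idle-circuitry argument can be made concrete with a deliberately simplified model. This is a toy sketch, not how any Radeon actually schedules work: it assumes every shader unit finishes one operation per cycle, borrows the X1950's eight-vertex/48-pixel split from above, and compares it with a hypothetical unified design that has the same 56 units in total, so only the allocation policy differs. The workload sizes are made up for illustration.

```python
import math


def cycles_fixed(vertex_ops: int, pixel_ops: int,
                 vertex_units: int = 8, pixel_units: int = 48) -> int:
    """Cycles to finish when each unit can only run its own shader type.

    Toy assumption: one operation per unit per cycle. The slower pool
    decides when the whole workload is done.
    """
    return max(math.ceil(vertex_ops / vertex_units),
               math.ceil(pixel_ops / pixel_units))


def cycles_unified(vertex_ops: int, pixel_ops: int, units: int = 56) -> int:
    """Cycles to finish when any unit can run any shader type (same unit count)."""
    return math.ceil((vertex_ops + pixel_ops) / units)


def utilization(total_ops: int, units: int, cycles: int) -> float:
    """Fraction of available unit-cycles that did useful work."""
    return total_ops / (units * cycles)


if __name__ == "__main__":
    # Hypothetical vertex-heavy workload.
    v, p = 800, 480
    fixed = cycles_fixed(v, p)      # max(100, 10) = 100 cycles
    unified = cycles_unified(v, p)  # ceil(1280 / 56) = 23 cycles
    print(fixed, unified)
    print(round(utilization(v + p, 56, fixed), 2))    # 0.23
    print(round(utilization(v + p, 56, unified), 2))  # 0.99
```

With the fixed split, the vertex pool is the bottleneck and the pixel units sit idle for 90 of the 100 cycles; the unified pool spreads the same 1,280 operations across every unit. Clock speed, scheduling overhead, and memory stalls are all left out of this model.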

Similar sorts of advances have appeared in other areas around the GPU. The ring bus architecture that debuted with the X1000 evolved, widened, and grew to encompass the PCI Express bus connection, allowing for more efficient data exchanges with the surrounding system. The PCI Express bus itself migrated from version 1.0 to 2.0, doubling the interface bandwidth. Graphics library support was updated all the way to DirectX 10.1 and OpenGL 3.0. By the time AMD/ATI reached the 4650/70 chips (code-named RV730), the fab process had shrunk to 55 nm and the number of transistors in each processor had grown to 514 million, up from 384 million in the R580 chip behind the X1950 XTX made only two years prior.
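The doubling from PCI Express 1.0 to 2.0 is easy to check with the standard per-direction calculation: PCIe 1.x lanes signal at 2.5 GT/s and PCIe 2.0 lanes at 5.0 GT/s, and both generations use 8b/10b line coding, so 8 of every 10 bits on the wire carry data. A minimal sketch; it yields theoretical peaks and ignores packet and protocol overhead, so real transfers come in lower.

```python
def pcie_bandwidth_gbs(transfer_rate_gtps: float, lanes: int = 16,
                       payload_bits: int = 8, line_bits: int = 10) -> float:
    """Theoretical per-direction PCI Express bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_gtps: raw signalling rate per lane in GT/s.
    payload_bits / line_bits: line-coding efficiency (8b/10b for PCIe 1.x and 2.0).
    """
    data_gbits = transfer_rate_gtps * lanes * payload_bits / line_bits
    return data_gbits / 8  # bits -> bytes


if __name__ == "__main__":
    gen1 = pcie_bandwidth_gbs(2.5)  # PCIe 1.x x16
    gen2 = pcie_bandwidth_gbs(5.0)  # PCIe 2.0 x16
    print(gen1, gen2, gen2 / gen1)  # 4.0 8.0 2.0
```

An x16 slot therefore goes from 4 GB/s to 8 GB/s in each direction.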

What was AMD doing with all of that extra circuitry? Keep reading.


Tom's Hardware is the leading destination for hardcore computer enthusiasts. We cover everything from processors to 3D printers, single-board computers, SSDs and high-end gaming rigs, empowering readers to make the most of the tech they love, keep up on the latest developments and buy the right gear. Our staff has more than 100 years of combined experience covering news, solving tech problems and reviewing components and systems.

-

mlcloud At least give us the links to some of the 4650/70 benchmarks... Other than that, great read, great recommendations. Looking to upgrade my Pentium 4 (1.4 GHz, 256 MB DDR). Was looking at using the HD4200 on the 785G series from AMD, but if I can make a true gaming computer out of it... hm... tempting.

-

I'm waiting for a HIS HD4670 1GB to arrive soon. It even has HDMI output.

Got it really cheap from newegg. It'll do fine with my Intel E5200. Nothing like a super gaming machine, but hope to play TF2 and L4D with good gfx. That's all i play atm.

-

tortnotes Advertorial? How much did AMD pay for this?

Not that it's not good content, but come on. Doesn't Tom's make enough from normal ads?

-

duckmanx88 Quoting mlcloud: "Was looking at using the HD4200 on the 785g series from AMD, but if I can make a true gaming computer out of it... hm... tempting."

On their gaming charts the 4670 is listed. Plays FEAR 2 pretty well. I assume it can then handle all Source games as well, but at lower resolutions, medium settings, no AA, the usual.

-

Assuming I'm assembling a new system and the HD 4650/4670 is the most cost-effective graphics card... what then is the most cost-effective processor to pair with it?

-

Good thing to see ATI marketing their 4650/4670.

I was hoping to see more of their mid-range cards.

-

WINTERLORD Great article, these are some nice cards for the price. I wonder, though, if you got 2 of them and put them in a CrossFire config: since they don't require a power source other than the PCI-E slot, would 2 of them cause any problems drawing all that current through the motherboard? Kinda wondering if there would be any impact there.

-

ATI is doing a great job. In my office we needed to make a PC with 6 independent monitors. Solution: an ASUS P5Q-E mainboard + 3 ATI 3650 video cards with VGA+DVI outputs. Works great and very cheap. Tried to do the same with NVIDIA 8400GT: no result, 4 independent monitors maximum. ATI rulezzz

-

lien +

Installed a Sapphire 4650 AGP on a backup system in August.

Overclocked it & almost pissed myself at how good the image quality was on that system.

Article confirms....

value based articles are refreshing