AMD Ryzen Threadripper 1920X Review

Power Consumption

We establish the package’s power consumption results by using a special sensor loop. This way, our values represent the exact amount of power that goes into the CPU and then reemerges in the form of waste heat dissipated by the cooling subsystem. We check our sensor readings using shunts and by measuring overall power consumption directly at the EPS connector (with a current probe and direct voltage measurement).
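The cross-check at the EPS connector comes down to multiplying voltage by current and averaging over the sampling window. Below is a minimal sketch of that arithmetic; the function name and the sample readings are hypothetical illustrations, not our measured data or the tooling used in the lab.

```python
from statistics import fmean


def average_power_watts(voltages, currents):
    """Return mean power in watts from paired voltage (V) and current (A) samples."""
    if len(voltages) != len(currents):
        raise ValueError("need exactly one current sample per voltage sample")
    if not voltages:
        raise ValueError("need at least one sample")
    # Instantaneous power is V * I; the reported figure is the mean over all samples.
    return fmean(v * i for v, i in zip(voltages, currents))


# Hypothetical readings on the 12V EPS rail (illustrative values only).
eps_volts = [12.0, 12.0, 12.0, 12.0]
eps_amps = [14.0, 15.0, 16.0, 15.0]
print(average_power_watts(eps_volts, eps_amps))  # 180.0
```

Averaging the per-sample products, rather than multiplying the average voltage by the average current, keeps the result correct when both values fluctuate together under load.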

AMD’s Threadripper CPUs use different partial voltages for the SoC and SMU rails at different clock rates, and these voltages influence the package’s power consumption. AMD recommended that we use the profile included with its DDR4-3200 kit. But if we instead use the standard SPD values for DDR4-2133, our power measurement is 15W lower!

Both of AMD’s CPUs are designed for a maximum power ceiling of 180W at their default settings. If the memory gets overclocked, the CPU has 15 fewer watts to work with. This could affect performance in workloads that utilize all cores and, consequently, get too close to the limit.
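To put the memory penalty in proportion, here is a small sketch of the budget arithmetic using the two figures quoted above (the 180W ceiling and the 15W difference between DDR4-3200 and DDR4-2133). The function and constant names are our own, and the result is headroom relative to the DDR4-2133 baseline, not a measured core power figure.

```python
PACKAGE_LIMIT_W = 180          # stock package power ceiling quoted in the text
MEMORY_PROFILE_DELTA_W = 15    # extra draw with the DDR4-3200 profile vs. DDR4-2133


def remaining_budget_watts(limit_w, memory_delta_w):
    """Watts left under the ceiling once the extra memory-related draw is subtracted."""
    if not 0 <= memory_delta_w <= limit_w:
        raise ValueError("memory delta must lie between 0 and the package limit")
    return limit_w - memory_delta_w


budget = remaining_budget_watts(PACKAGE_LIMIT_W, MEMORY_PROFILE_DELTA_W)
share = MEMORY_PROFILE_DELTA_W / PACKAGE_LIMIT_W
print(f"{budget} W left under the ceiling; memory profile costs {share:.1%}")
# 165 W left under the ceiling; memory profile costs 8.3%
```

Roughly 8% of the ceiling is a small slice, but it only matters in all-core workloads that already run against the limit.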

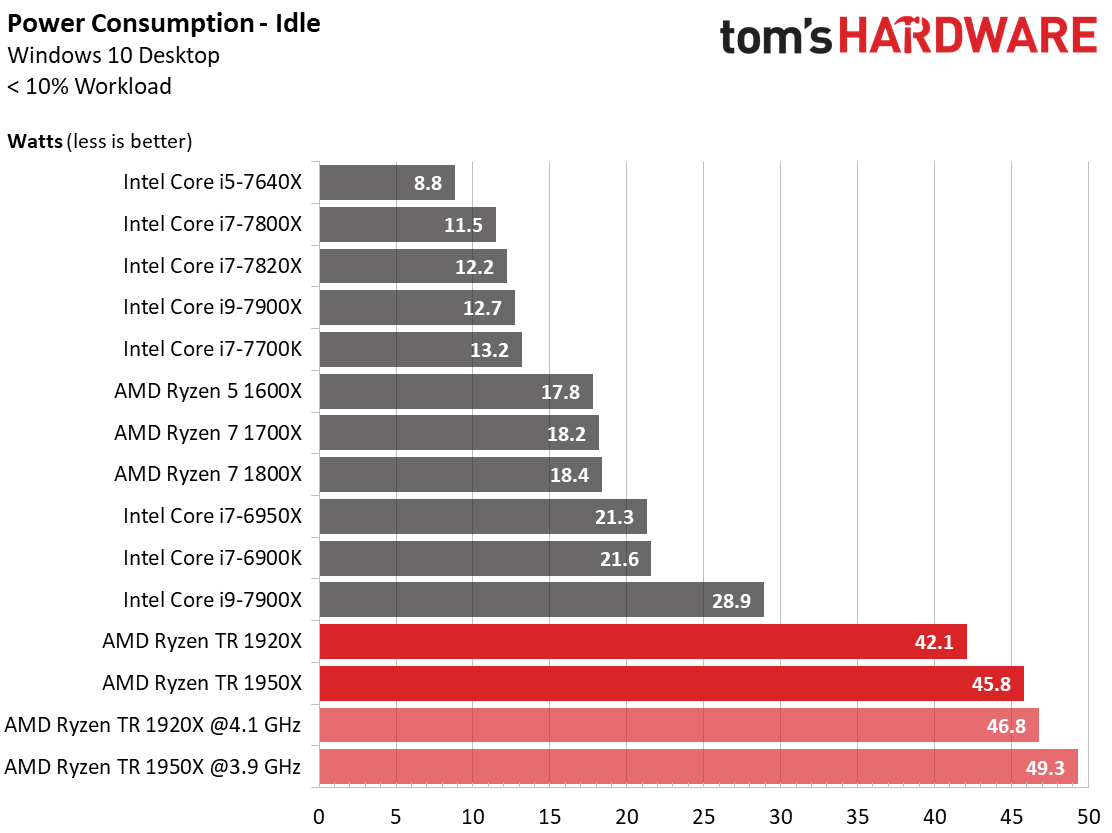

Idle Power Consumption

Threadripper’s idle power consumption is roughly twice that of the Ryzen 7 models. However, Threadripper hosts two dies instead of one, and it hits higher clock rates under sporadic loads. The overclocked version requires higher voltages as well, and memory also plays a role in power consumption. For instance, dropping to DDR4-2133 pulls the 1920X's idle power use down to 32W.

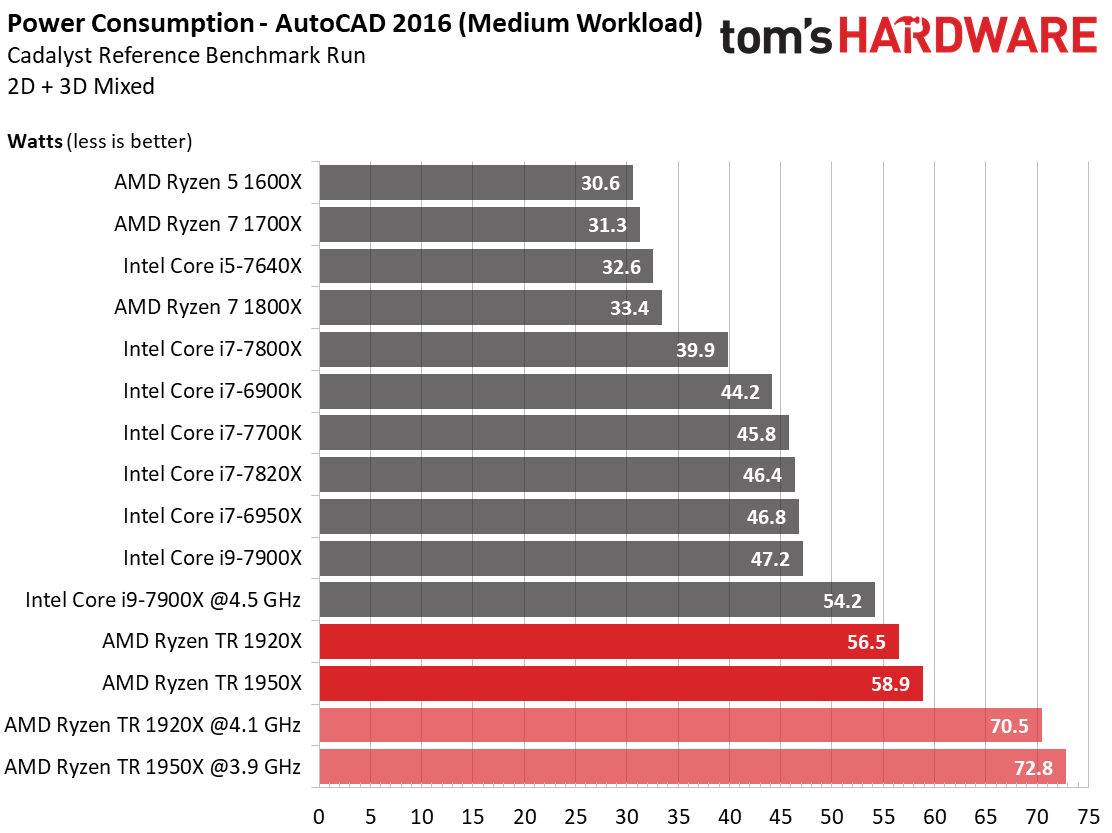

CAD Workload Power Consumption

AutoCAD 2016 rarely uses more than two or three cores. In fact, most of the time it's limited to a single core. Thus, it's not surprising that the CAD power consumption only adds a maximum of 15W to the idle power numbers. The two overclocked configurations add another 14W, which makes for an almost 30W difference compared to our idle power consumption results.

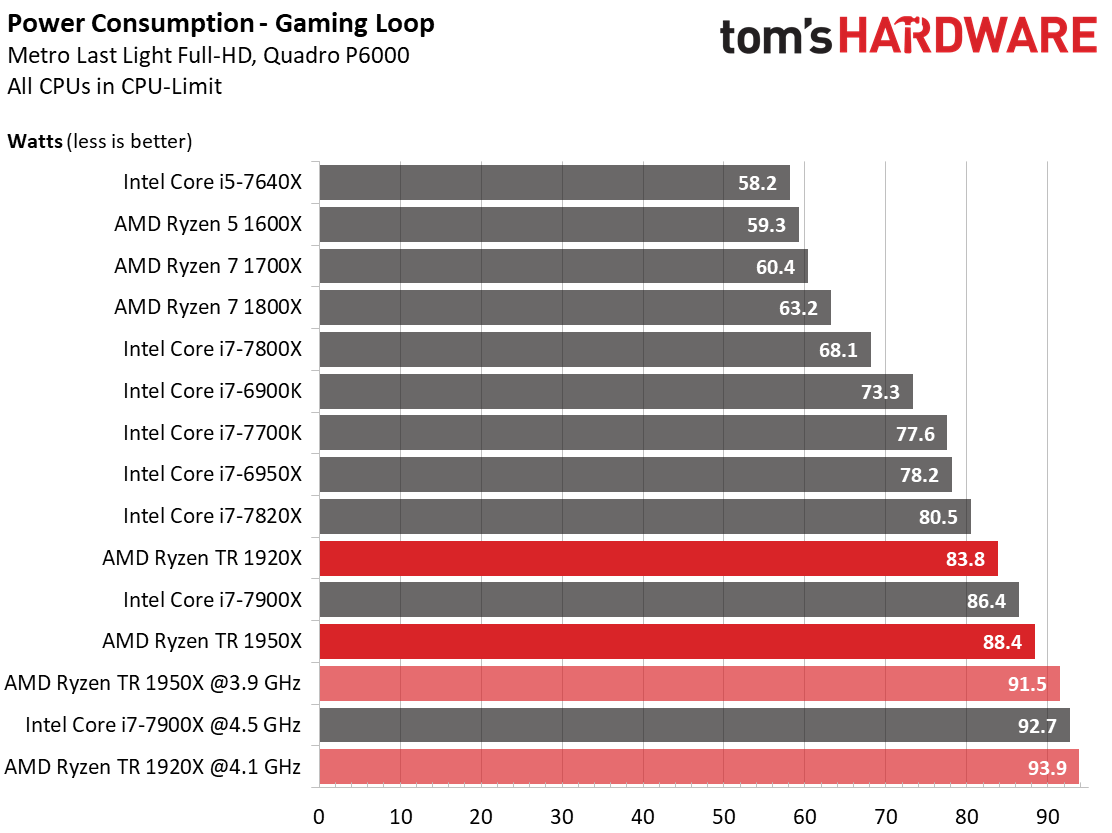

Gaming Power Consumption

When it comes to gaming, Threadripper’s MCM design causes its many cores to get in each other's way. Thus, the frame rates we report end up lower than those of competing processors. But power consumption ends up similar to Intel's Core i9-7900X, even though Skylake-X offers much more performance.

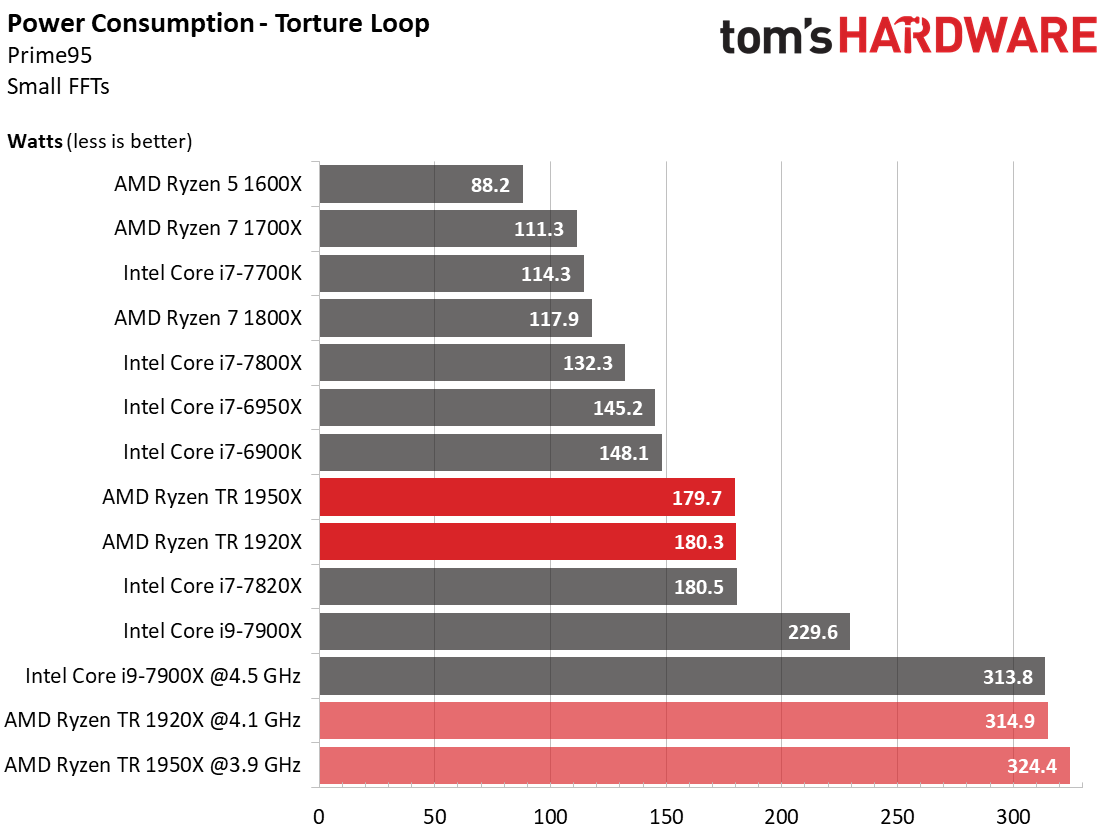

Stress Test & Maximum Power Consumption

Power consumption goes through the roof during our stress test, especially for the overclocked configurations.

The motherboard is partially to blame for the stock Intel Core i9-7900X's excessively high numbers. It doesn’t obey the standard Turbo Boost frequency thresholds, instead boosting aggressively and staying in those boost states longer than required. For more details, see our article about the power and thermal issues we encountered during our extended testing.

Threadripper doesn’t have those kinds of issues. The Asus X399 ROG Zenith Extreme limits power consumption to exactly 180W at stock settings, just as it should.

At a respectable 1.425V, the Ryzen Threadripper 1920X reaches 4.1 GHz. The higher-end 1950X needs 1.35V to achieve 3.9 GHz. Once overclocked, AMD’s new processors join Intel's Core i9-7900X overclocked to 4.5 GHz in the stratosphere beyond 300W.

In the end, Threadripper's two dies sometimes consume more power than other processors’ single dies, depending on the task. We succeeded in breaking the 4 GHz barrier by overclocking the 1920X to 4.1 GHz. At that speed, all 24 threads were fully functional and at our disposal. The high power consumption is acceptable if it's accompanied by comparably elevated application performance. For Threadripper, that requires highly parallelized workloads (and perhaps optimized software).

Unfortunately, Threadripper's efficiency during gaming turns out to be significantly worse than Intel’s. Threadripper draws an additional ~15W at idle due to the memory. Subtracting that 15W from AMD's gaming power consumption changes the picture, bringing power consumption in line with the lower gaming performance.
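The adjustment described above can be sketched as a frames-per-watt calculation with an optional memory overhead subtracted from the package power. The function name and the input numbers are hypothetical placeholders chosen for easy arithmetic; they are not results from our gaming benchmarks.

```python
def fps_per_watt(avg_fps, package_watts, memory_overhead_w=0.0):
    """Gaming efficiency in frames per second per watt.

    memory_overhead_w is subtracted from package power to model the
    ~15W memory-related draw mentioned in the text.
    """
    adjusted_watts = package_watts - memory_overhead_w
    if adjusted_watts <= 0:
        raise ValueError("adjusted power must be positive")
    return avg_fps / adjusted_watts


# Hypothetical example values (not measured data).
example_fps = 90.0
example_watts = 75.0

raw = fps_per_watt(example_fps, example_watts)
adjusted = fps_per_watt(example_fps, example_watts, memory_overhead_w=15.0)
print(f"raw: {raw:.2f} fps/W, memory-adjusted: {adjusted:.2f} fps/W")
```

With these placeholder inputs, removing the 15W overhead lifts the efficiency figure from 1.20 to 1.50 fps/W, which illustrates how much a fixed overhead can skew comparisons at low gaming power levels.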

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Aldain: Great review as always, but on the power consumption front, given that the 1950X has six more cores and the 1920X has two more, they are more power efficient than the 7900X in every regard, relative to the high stock clocks of the TR.

derekullo: "Ryzen Threadripper 1920X comes arms with 12 physical cores and SMT"

...

Judging from Threadripper 1950X versus the Threadripper 1900X we can infer that a difference of 400 megahertz is worth the tdp of 16 whole threads.

I never realized HT / SMT was that efficient or is AMD holding something back with the Threadripper 1900x?

jeremyj_83: Your sister site Anandtech did a retest of Threadripper a while back and found that their original form of game mode was more effective than the one supplied by AMD. What they had done is disable SMT and have a 16c/16t CPU instead of the 8c/16t that AMD's game mode does. http://www.anandtech.com/show/11726/retesting-amd-ryzen-threadrippers-game-mode-halving-cores-for-more-performance/16

Wisecracker: Hats-off to AMD and Intel. The quantity (and quality) of processing power is simply amazing these days. Long gone are the times of taking days off (literally) for "rasterizing and rendering" of workflows.

...or is AMD holding something back with the Threadripper 1900x?

I think the better question is, "Where is AMD going from here?"

The first revision Socket SP3r2/TR4 mobos are simply amazing, and AMD has traditionally maintained (and improved!) their high-end stuff. I can't wait to see how they use those 4094 landings and massive bandwidth over the next few years. The next iteration of the 'Ripper already has me salivating :ouch:

I'll take 4X Summit Ridge 'glued' together, please !!

RomeoReject: This was a great article. While there's no way in hell I'll ever be able to afford something this high-end, it's cool to see AMD trading punches once again.

ibjeepr: I'm confused.

"We maintained a 4.1 GHz overclock"

Per chart "Threadripper 1920X - Boost Frequency (GHz) 4.0 (4.2 XFR)"

So you couldn't get the XFR to 4.2?

If I understand correctly manually overclocking disables XFR.

So your chip was just a lotto loser at 4.1 or am I missing something?

EDIT: Oh, you mean 4.1 All core OC I bet.

sion126: Actually, the view should be that you cannot afford not to go this way. You save a lot of time with gear like this; my two 1950X rigs are killing my workload like no tomorrow... pretty impressive... for just gaming, maybe... but then again... it's a solid investment that will run a long time...

AgentLozen: redgarl said: "Now this at 7nm..."

A big die shrink like that would be helpful but I think that Ryzen suffers from other architectural limitations.

Ryzen has a clock speed ceiling of roughly 4.2 GHz. It's difficult to get it past there regardless of your cooling method.

Also, Ryzen experiences nasty latency when data is being shared over the Infinity Fabric. Highly threaded work loads are being artificially limited when passing between dies.

Lastly, the Ryzen's IPC lags behind Intel's a little bit. Coupled with the relatively low clock speed ceiling, Ryzen isn't the most ideal CPU for gaming (it holds up well in higher resolutions to be fair).

Threadripper and Ryzen only look as good as they do because Intel hasn't focused on improving their desktop chips in the last few years. Imagine if Ivy Bridge wasn't a minor upgrade. If Haswell, Broadwell, Skylake, and Kabylake weren't tiny 5% improvements. What if Skylake X wasn't a concentrated fiery inferno? Zen wouldn't be a big deal if all of Intel's latest chips were as impressive as the Core 2 Duo was back in 2006.

AMD has done an amazing job transitioning from crappy Bulldozer to Zen. They're in a position to really put the hurt on Intel but they can't lose the momentum they've built. If AMD were to address all of these problems in their next architecture update, they would really have a monster on their hands.

redgarl: Sure Billy Gates, at 1080p with an 800$ CPU and an 800$ GPU made by a competitor... sure...

At 1440p and 2160p the gaming performance is the same, however your multi-threading performance is still better than the overpriced Intel chips.