Deus Ex: Human Revolution Performance Analysis

The highly anticipated prequel to the game that started it all, Deus Ex: Human Revolution is now available. We take a close look at this intriguing title, the first to offer in-game morphological anti-aliasing and AMD HD3D support upon its release.

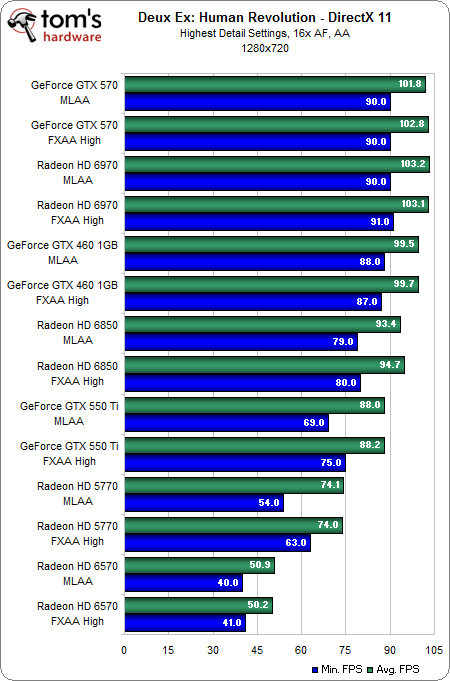

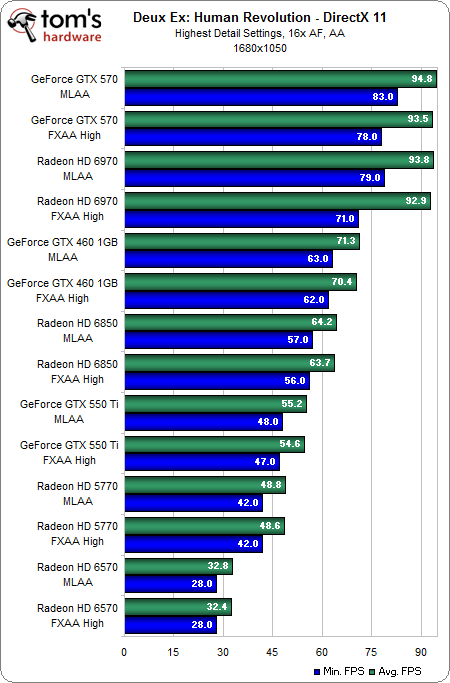

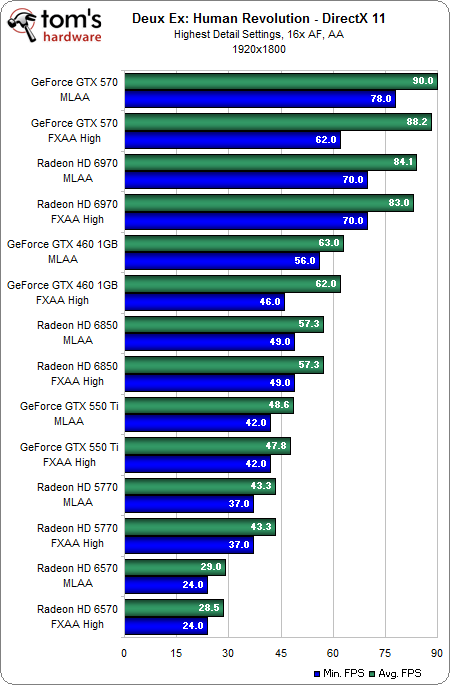

Benchmark Results: DirectX 11, Maximum Detail With Anti-Aliasing

In our next set of benchmarks, we enable soft shadows, raise anisotropic filtering to 16x, and turn on anti-aliasing. We’ll compare FXAA and MLAA to find out how much performance each method costs.

In general, MLAA incurs a slight performance hit at lower resolutions. By 1680x1050, however, there is no notable difference between MLAA and the FXAA High setting. In fact, at 1920x1080 MLAA appears to be easier for the GeForce cards to handle.
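To put a "slight performance hit" in numbers, the cost of one anti-aliasing mode relative to another can be expressed as a percentage drop in frame rate. The following is a minimal Python sketch, assuming average-FPS figures read off the charts; the function names, the 3% "notable" cutoff, and the sample values are hypothetical and are not Tom's Hardware's methodology or measured results.

```python
def percent_drop(baseline_fps: float, test_fps: float) -> float:
    """Return how much slower test_fps is than baseline_fps, as a percentage.

    Positive means test_fps is slower than the baseline; negative means faster.
    """
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (baseline_fps - test_fps) / baseline_fps * 100.0


def is_notable(baseline_fps: float, test_fps: float, threshold_pct: float = 3.0) -> bool:
    """Treat differences smaller than threshold_pct (an assumed cutoff) as noise."""
    return abs(percent_drop(baseline_fps, test_fps)) >= threshold_pct


# Hypothetical numbers for illustration only, not measured results.
fxaa_high_fps = 60.0
mlaa_fps = 57.0
print(f"MLAA vs. FXAA High: {percent_drop(fxaa_high_fps, mlaa_fps):.1f}% slower")
print("Notable difference:", is_notable(fxaa_high_fps, mlaa_fps))
```

Expressing the gap as a percentage rather than raw FPS keeps comparisons fair across resolutions, where absolute frame rates differ widely.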

What’s really impressive here is that, of all the cards tested, only the Radeon HD 6570 struggles to maintain a minimum of 30 FPS above 1680x1050. Every other card handles these maximum settings at 1920x1080 without a problem.
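The 30 FPS minimum used here works as a pass/fail floor applied to the lowest frame rate each card records during a run. Below is a minimal Python sketch of that check, assuming each run is logged as per-second FPS samples keyed by card and resolution; the data layout, function names, card names, and sample values are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: (card, resolution) -> per-second FPS samples from one run.
Results = Dict[Tuple[str, str], List[float]]


def minimum_fps(samples: List[float]) -> float:
    """Lowest per-second frame rate recorded during the run."""
    if not samples:
        raise ValueError("no samples recorded")
    return min(samples)


def below_floor(results: Results, floor: float = 30.0) -> List[Tuple[str, str, float]]:
    """Return (card, resolution, min_fps) for every run whose minimum drops below floor."""
    failures = []
    for (card, resolution), samples in sorted(results.items()):
        low = minimum_fps(samples)
        if low < floor:
            failures.append((card, resolution, low))
    return failures


# Hypothetical samples for illustration only, not measured results.
runs: Results = {
    ("Card A", "1920x1080"): [41.0, 38.5, 44.2],
    ("Card B", "1920x1080"): [33.0, 27.8, 31.4],
}
for card, resolution, low in below_floor(runs):
    print(f"{card} at {resolution}: minimum {low:.1f} FPS")
```

Judging playability by the minimum rather than the average catches cards that look fine on average but dip into visible stutter during demanding scenes.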



Don Woligroski was a senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.


Soma42 Man, as much as I prefer PC gaming, I can't wait for the PS4 and Xbox 720 to come out so games will look noticeably better. Might actually want to upgrade my computer by then...

Anywho, I didn't play the first two. Am I missing anything if I want to pick this up?

festerovic @soma - I personally thought they were average to good, for the time. Not sure they would stand the test of time...

Interesting to read that the dual-core HT chips outperformed real cores. Can we look forward to the 2600's HT being used in games before the next generation of CPUs comes out?

gerchokas Good article. I still remember when I first saw the original Deus Ex graphics in 2000 on my friend's then-brand-new PC. I thought, 'maaan... this looks *friggin* REAL!' I instantly knew my old Pentium CPU needed replacing ASAP...

Eleven years later, I'm praising the great graphics again, but this time they haven't caught me off guard!

aznshinobi Hmm... The Nvidia cards perform better than the AMD cards of equivalent rank. I'm not playing fanboy, but didn't AMD fund the studio? After all, the game makes use of Eyefinity.

th3loonatic Is there a typo? I see a GTX 560 Ti listed as one of the test cards, but it doesn't appear in the results.

fyasko @festerovic - HT isn't the reason dual-core SB CPUs beat six-core Thubans. SB is simply a better architecture. Hurry up, Bulldozer!

mayankleoboy1 @Don Woligroski

I want the CPU benchmarks at 1080p with the highest settings.

Benches at 1024x768 are irrelevant. Today's gamer runs at least 1680x1050, preferably 1080p.

So please add those to the benches. Also, this would show the real impact of the CPU on FPS.