G-Sync Technology Preview: Quite Literally A Game Changer

You've forever faced this dilemma: disable V-sync and live with image tearing, or turn V-sync on and tolerate the annoying stutter and lag? Nvidia promises to make that question obsolete with a variable refresh rate technology we're previewing today.

Is G-Sync The Game-Changer You Didn’t Know You Were Waiting For?

Before we even got into our hands-on testing of Asus' prototype G-Sync-capable monitor, we were glad to see Nvidia approaching a very real issue affecting PC gaming, for which no other solution had been proposed. Until now, your choices were V-sync on or V-sync off, each decision accompanied by compromises that detracted from the experience. When your line is "I run with V-sync disabled unless I can't take the tearing in a particular game, at which point I flip it on," then the decision sounds like picking the lesser of two evils.

G-Sync sets out to address that by giving the monitor the ability to refresh at a variable rate. Innovations like this are what move our industry forward and sustain the PC's technical preeminence over consoles and other gaming platforms. No doubt, Nvidia is going to take heat for not pursuing a standard that competing vendors might be able to adopt. However, it's leveraging DisplayPort 1.2 for a solution we can go hands-on with today. As a result, two months after announcing G-Sync, here it is.
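To make the trade-off concrete, here is a minimal sketch of when frames reach the screen under V-sync on a fixed-refresh display versus a variable-refresh display. It is a simplified model of our own, not Nvidia's implementation: the function names are hypothetical, the GPU is assumed to wait for each frame to be shown before starting the next (simple double buffering), and any minimum refresh rate the panel may enforce is ignored.

```python
import math


def vsync_display_times(render_times_ms, refresh_hz=60.0):
    """Times (ms) at which frames appear with V-sync on a fixed-refresh display.

    Assumption: double buffering, so the GPU starts the next frame only
    after the current one has been swapped in at a refresh boundary.
    """
    interval = 1000.0 / refresh_hz
    shown = []
    start = 0.0  # time at which the GPU begins rendering the current frame
    for render in render_times_ms:
        ready = start + render
        # A finished frame must wait for the next refresh boundary.
        scan = math.ceil(ready / interval) * interval
        shown.append(scan)
        start = scan
    return shown


def variable_refresh_display_times(render_times_ms, max_hz=144.0):
    """Times (ms) at which frames appear when the display refreshes on demand.

    Assumption: the panel can start a refresh as soon as a frame is ready,
    limited only by its maximum refresh rate.
    """
    min_interval = 1000.0 / max_hz
    shown = []
    start = 0.0
    last = float("-inf")  # time of the previous refresh
    for render in render_times_ms:
        ready = start + render
        # Show the frame immediately unless the panel is still busy.
        scan = max(ready, last + min_interval)
        shown.append(scan)
        last = scan
        start = scan
    return shown


if __name__ == "__main__":
    frames = [22.0] * 5  # a GPU that needs 22 ms per frame (about 45 FPS)
    print("V-sync, 60 Hz:   ", vsync_display_times(frames, 60.0))
    print("Variable refresh:", variable_refresh_display_times(frames, 144.0))
```

In this model, a GPU that needs 22 ms per frame just misses every 60 Hz refresh, so V-sync presents a new frame only on every second refresh, roughly 33.3 ms apart. The variable-refresh model presents the same frames 22 ms apart, as soon as each one is ready.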

The real question becomes: is G-Sync everything Nvidia promised it'd be?

It's always hard to break past hype when you have three talented developers extolling the merits of a technology you haven't seen in action yet. But if your first experience with G-Sync is Nvidia's pendulum demo, you're going to wonder if such a severe and extreme difference is really possible, or if the test is somehow a specific scenario which is too good to be true.

Of course, as you shift over into real-world gaming, the impact is typically less binary. There are shades of "Whoa!" and "That's crazy" on one end of the spectrum and "I think I see the difference" on the other. The biggest splash comes when you switch from a 60 Hz display to something with a 144 Hz refresh and G-Sync enabled. But we also tried to test at 60 Hz with G-Sync to preview what you'll see from (hopefully) less expensive displays in the future. In certain cases, just the shift from 60 to 144 Hz is what makes the biggest impression, particularly if you can push really high frame rates from a high-end graphics subsystem.

Today, we know that Asus plans to support G-Sync on its VG248QE, which the company told us will sell for $400 starting next year. That panel sports a native 1920x1080 resolution and refresh rates as high as 144 Hz. The non-G-Sync version won our Smart Buy award earlier this year for its exceptional performance. Personally, though, the 6-bit TN panel is an issue for me. I'm most looking forward to 2560x1440 and IPS. I'm even fine sticking with a 60 Hz refresh if it helps keep cost manageable.

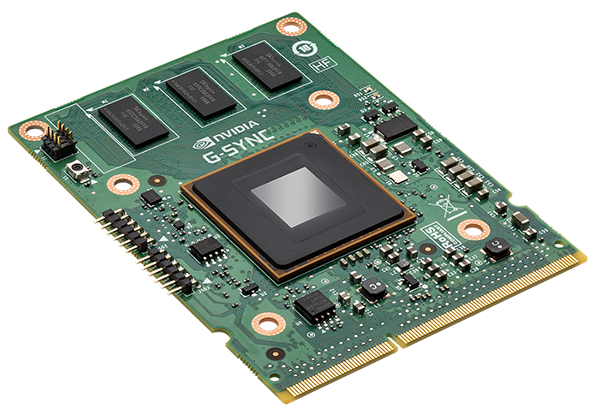

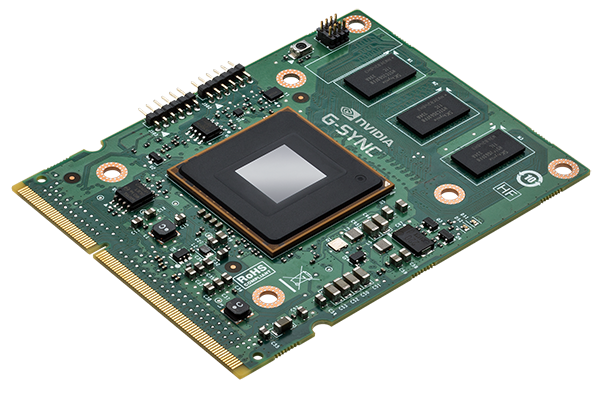

While we expect a rash of announcements at CES, we have no official word from Nvidia as to when other displays sporting G-Sync modules will start shipping or how much they'll cost. We also aren't sure what the company's plans are for the previously discussed upgrade module, which should let you take an existing Asus VG248QE and make it G-Sync ready "in about 20 minutes."


What we can say, though, is that the wait will be worth it. You'll see its influence unmistakably in some games, and you'll notice it less in others. But the technology does effectively solve that age-old question of whether to keep V-sync enabled or not.

Here's another interesting thought. Now that G-Sync is being tested, how long will AMD hold off on commenting? The company teased our readers in Tom's Hardware's AMA With AMD, In Its Entirety, mentioning it'd address the capability soon. Does it have something up its sleeve or not? Between the Mantle-enhanced version of Battlefield 4, Nvidia's upcoming Maxwell architecture, G-Sync, CrossFire powered by AMD's xDMA engine, and rumored upcoming dual-GPU boards, the end of 2013 and beginning of 2014 are bound to give us plenty of interesting news to talk about. Now, if we could just get more than 3 GB (Nvidia) and 4 GB (AMD) of GDDR5 on high-end cards that don't cost $1000...


gamerk316 I consider Gsync to be the most important gaming innovation since DX7. It's going to be one of those "How the HELL did we live without this before?" technologies. -

monsta Totally agree, G Sync is really impressive and the technology we have been waiting for.

What the hell is Mantle? -

wurkfur I personally have a setup that handles 60+ fps in most games and just leave V-Sync on. For me 60 fps is perfectly acceptable and even when I went to my friend's house where he had a 120hz monitor with SLI, I couldn't hardly see much difference.

I applaud the advancement, but I have a perfectly functional 26 inch monitor and don't want to have to buy another one AND a compatible GPU just to stop tearing.

At that point I'm looking at $400 to $600 for a relatively paltry gain. If it comes standard on every monitor, I'll reconsider. -

expl0itfinder Competition, competition. Anybody who is flaming over who is better: AMD or nVidia, is clearly missing the point. With nVidia's G-Sync, and AMD's Mantle, we have, for the first time in a while, real market competition in the GPU space. What does that mean for consumers? Lower prices, better products. -

This needs to be not so proprietary for it to become a game changer. As it is, requiring a specific GPU and specific monitor with an additional price premium just isn't compelling and won't reach a wide demographic.

Is it great for those who already happen to fall within the requirements? Sure, but unless Nvidia opens this up or competitors make similar solutions, I feel like this is doomed to be as niche as lightboost, Physx, and, I suspect, Mantle. -

ubercake I'm on page 4, and I can't even contain myself.

Tearing and input lag at 60Hz on a 2560x1440 or 2560x1600 has been the only reason I won't game on one. G-sync will get me there.

This is awesome, outside-of-the-box thinking tech.

I do think Nvidia is making a huge mistake by keeping this to themselves though. This should be a technology implemented with every panel sold and become part of an industry standard for HDTVs, monitors or other viewing solutions! Why not get a licensing payment for all monitors sold with this tech? Or all video cards implementing this tech? It just makes sense.

-

rickard Could the Skyrim stuttering at 60hz w/ Gsync be because the engine operates internally at 64hz? All those Bethesda tech games drop 4 frames every second when vsync'd to 60hz which cause that severe microstutter you see on nearby floors and walls when moving and strafing. Same thing happened in Oblivion, Fallout 3, and New Vegas on PC. You had to use stutter removal mods in conjunction with the script extenders to actually force the game to operate at 60hz and smooth it out with vsync on.

You mention it being smooth when set to 144hz with Gsync, is there any way you cap the display at 64hz and try it with Gsync alone (iPresentinterval=0) and see what happens then? Just wondering if the game is at fault here and if that specific issue is still there in their latest version of the engine.

Alternatively I suppose you could load up Fallout 3 or NV instead and see if the Gsync results match Skyrim. -

Old_Fogie_Late_Bloomer I would be excited for this if it weren't for Oculus Rift. I don't mean to be dismissive, this looks awesome...but it isn't Oculus Rift. -

hysteria357 Am I the only one who has never experienced screen tearing? Most of my games run past my refresh rate too....